Understanding the Compute DePIN Sector's Ecosystem Landscape Beyond io.net

Compute DePINs bring individual developers, scattered builders, and startups with minimal capital and resources back into the picture.

Author: PAUL TIMOFEEV

Compiled by: TechFlow

Key Takeaways

- With deep learning now driving machine learning and generative AI development, demand for computing resources has surged, since both involve highly compute-intensive workloads. However, because large corporations and governments are stockpiling these resources, startups and independent developers now face a shortage of GPUs in the market, leading to excessively high costs and/or unavailability.

- Compute DePINs can create decentralized markets for computing resources like GPUs by allowing anyone in the world to contribute their idle supply in exchange for monetary rewards. This aims to help underserved GPU consumers access new supply channels, enabling them to obtain the development resources needed for their workloads at reduced costs and overhead.

- Compute DePINs still face numerous economic and technical challenges when competing with traditional centralized service providers. Some of these will resolve over time, while others will require new solutions and optimizations.

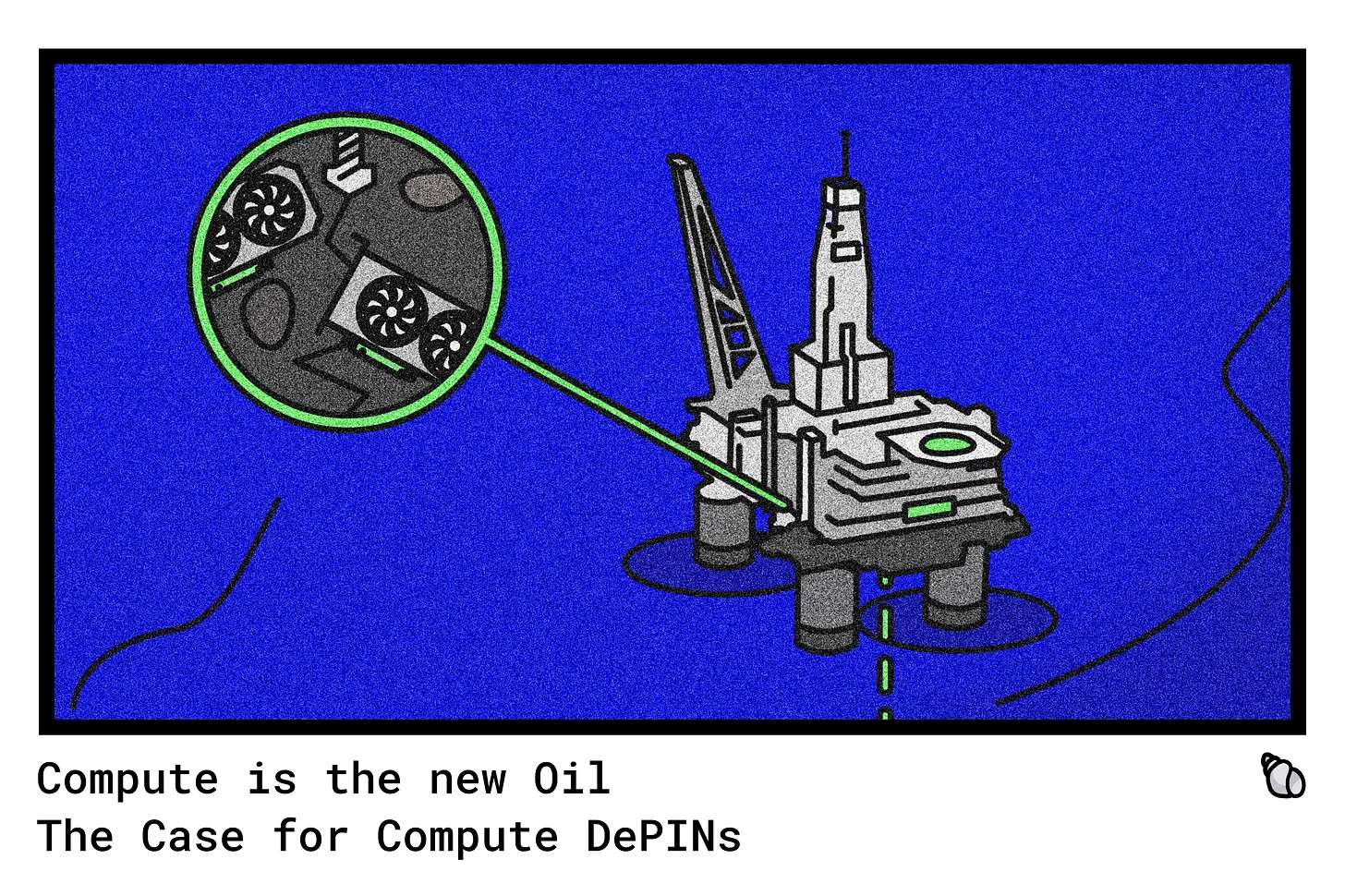

Computation Is the New Oil

Since the Industrial Revolution, technology has advanced humanity at an unprecedented pace, affecting or completely transforming nearly every aspect of daily life. Computers eventually emerged as the culmination of collective efforts by researchers, scholars, and computer engineers. Initially designed to solve large-scale arithmetic tasks for advanced military operations, computers have evolved into the backbone of modern life. As the impact of computing on humanity continues to grow at an unprecedented rate, so does the demand for these machines and the resources that power them—exceeding available supply. This, in turn, creates market dynamics where most developers and businesses cannot access critical resources, placing the development of machine learning and generative artificial intelligence—one of today’s most transformative technologies—in the hands of a few well-funded players. Meanwhile, vast amounts of idle computing resources present a lucrative opportunity to help alleviate the imbalance between supply and demand, amplifying the need for coordination mechanisms between both sides. Therefore, we believe decentralized systems powered by blockchain technology and digital assets are crucial for the broader, more democratic, and responsible development of generative AI products and services.

Computing Resources

Computation can be defined as the various activities, applications, or workloads through which computers produce explicit outputs from given inputs. Ultimately, it refers to the computational and processing power of computers, the core utility of these machines that drives many parts of the modern world, generating up to $1.1 trillion in revenue in the past year alone.

Computing resources refer to the various hardware and software components that make computation and processing possible. As the number of applications and functionalities they enable continues to grow, these components are becoming increasingly important and prevalent in everyday life. This has led to a race among nation-states and corporations to accumulate as many of these resources as possible as a means of survival. This is reflected in the market performance of companies providing these resources (e.g., Nvidia, whose market capitalization has grown over 3,000% in the past five years).

GPU

GPUs are one of the most important resources in modern high-performance computing. The core function of a GPU is to act as a specialized circuit that accelerates computer graphics workloads via parallel processing. Originally serving the gaming and personal computer industries, GPUs have evolved to support many emerging technologies shaping the future (such as consoles and PCs, mobile devices, cloud computing, and the Internet of Things). However, demand for these resources has intensified particularly due to the rise of machine learning and artificial intelligence—by executing computations in parallel, GPUs accelerate ML and AI operations, thereby enhancing the processing capabilities and performance of the resulting technologies.

The Rise of AI

At its core, AI enables computers and machines to simulate human intelligence and problem-solving abilities. AI models operate as neural networks composed of many different data blocks. These models require processing power to identify and learn relationships within this data, then reference those relationships when creating outputs based on given inputs.

Contrary to common perception, AI development and production are not new: in 1958, Frank Rosenblatt built the Mark I Perceptron, the first neural network-based computer that "learned" through trial and error. Furthermore, much of the academic research laying the foundation for today’s AI developments was published in the late 1990s and early 2000s, and the industry has been evolving ever since.

Beyond R&D, “narrow” AI models already play key roles in various powerful applications used today. Examples include social media algorithms, Apple’s Siri, Amazon’s Alexa, personalized product recommendations, and more. Notably, the rise of deep learning has transformed the development of generative AI. Deep learning algorithms use larger, or “deeper,” neural networks than traditional machine learning applications, serving as a more scalable and higher-performing alternative. Generative AI models “encode simplified representations of their training data and refer to them to generate new outputs that are similar but not identical.”

Deep learning enables developers to scale generative AI models to images, speech, and other complex data types, and milestone applications like ChatGPT have set records for fastest user growth in modern history—these are only early iterations of what generative AI and deep learning may achieve.

Given this, it should come as no surprise that generative AI development involves multiple compute-intensive workloads, requiring massive processing and computational power.

AI application development is constrained by three key compute-intensive workloads:

- Training: Models must process and analyze large datasets to learn how to respond to given inputs.

- Fine-tuning: Models undergo a series of iterative processes where various hyperparameters are adjusted and optimized to improve performance and quality.

- Simulation: Prior to deployment, certain models (e.g., reinforcement learning algorithms) go through a series of simulations for testing.

Compute Crunch: Demand Outstrips Supply

Over recent decades, many technological advances have driven an unprecedented surge in demand for computing and processing power. As a result, demand for computing resources such as GPUs now far exceeds available supply, creating bottlenecks in AI development that will only worsen without effective solutions.

Supply constraints are further exacerbated by numerous companies purchasing GPUs beyond their actual needs, both as a competitive advantage and as a means of survival in the modern global economy. Computing providers often employ contract structures requiring long-term capital commitments, granting customers access to supply beyond their required demand.

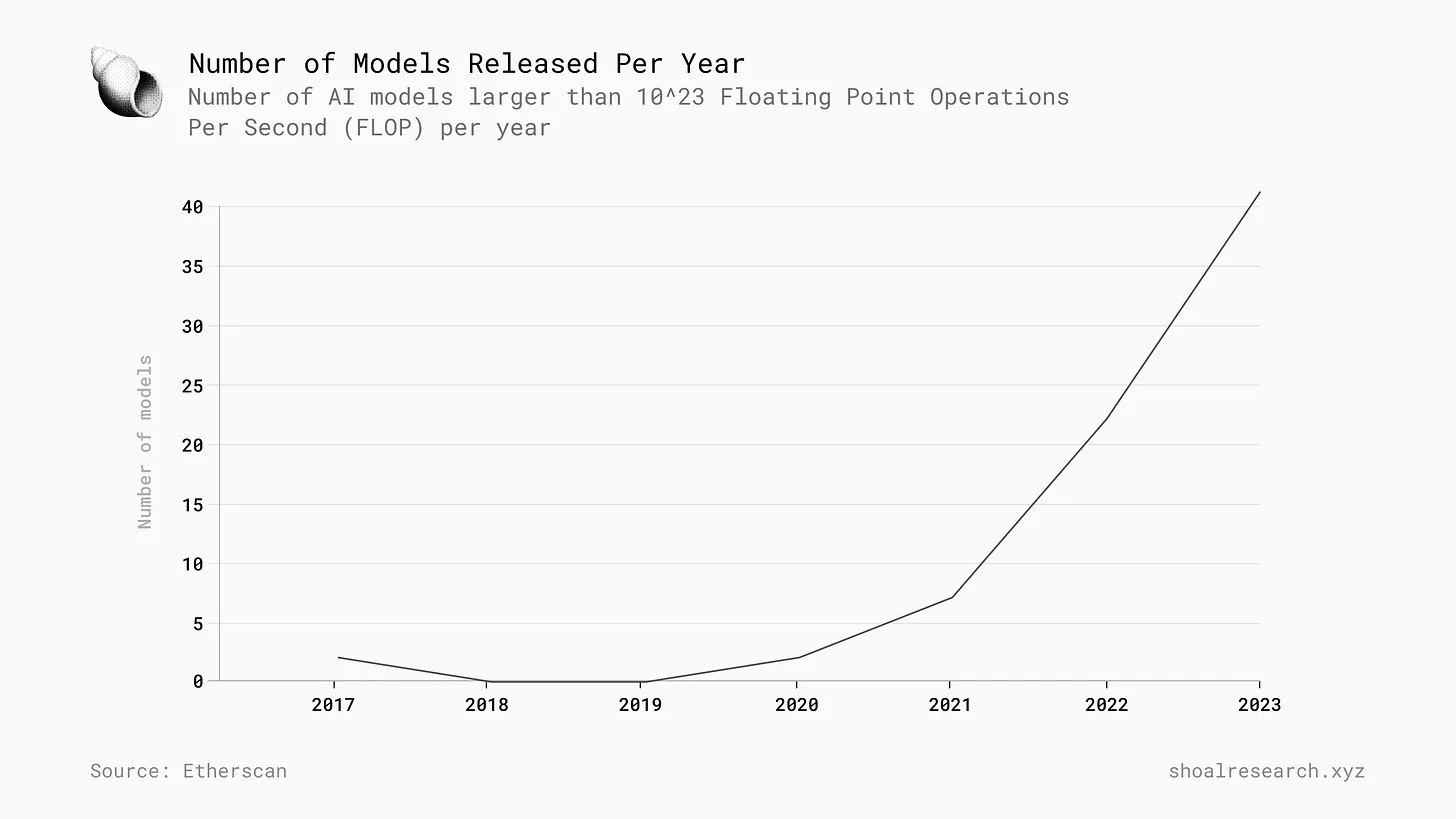

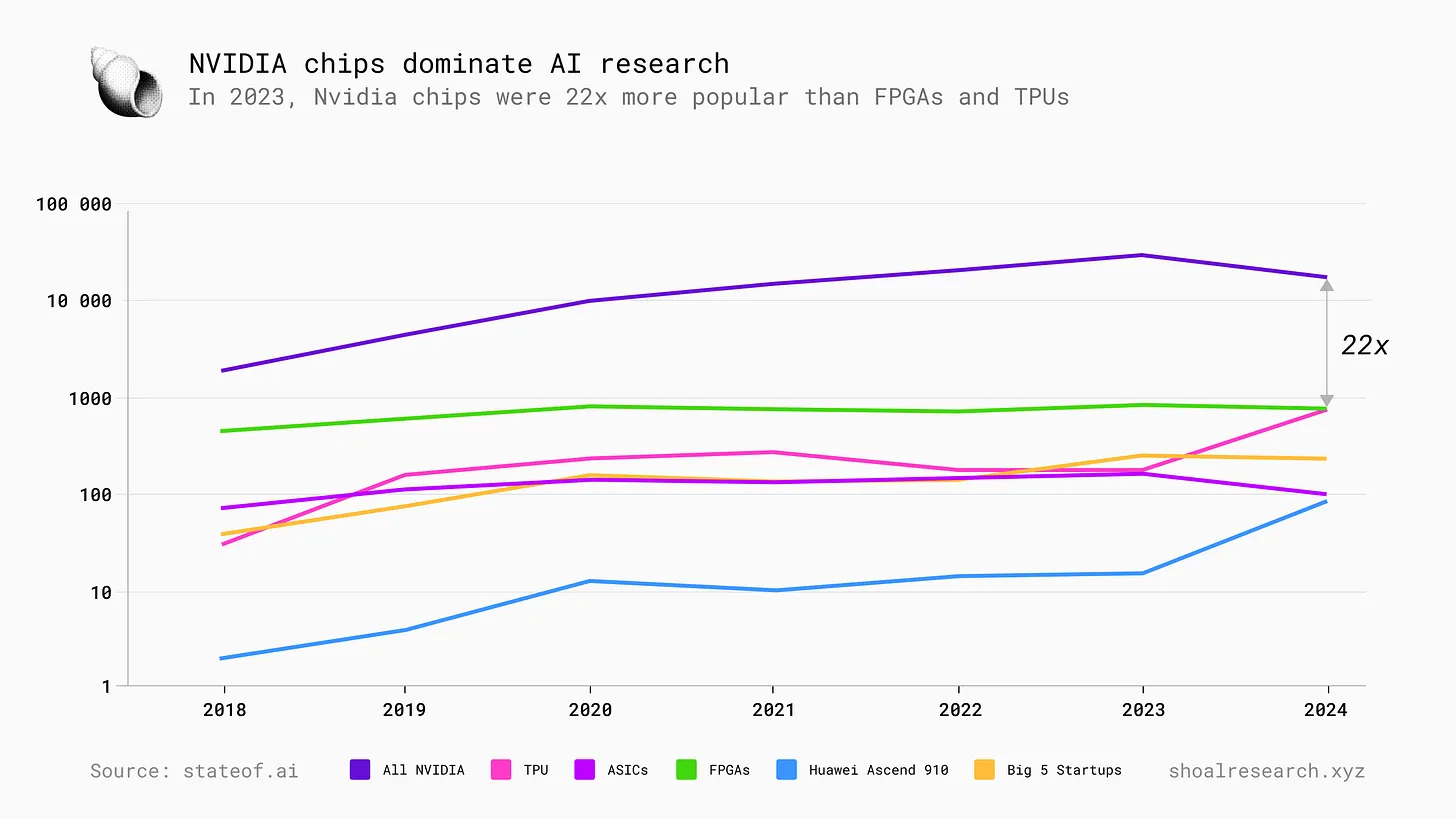

Research from Epoch shows that the overall number of compute-intensive AI models being released is growing rapidly, indicating that resource demand driving these technologies will continue to increase quickly.

As AI model complexity continues to grow, so do the computing and processing demands of application developers. In turn, GPU performance and their subsequent availability will play an increasingly critical role. This is already happening, as demand for high-end GPUs (e.g., those produced by Nvidia) grows, with Nvidia calling GPUs the “rare earth metals” or “gold” of the AI industry.

The rapid commercialization of AI risks concentrating control in the hands of a few tech giants, similar to today’s social media industry, raising concerns about the ethical foundations of these models. A notable example is the recent controversy surrounding Google Gemini. While its many strange responses to prompts were not immediately dangerous, the incident highlighted the inherent risks of having a few companies dominate and control AI development.

Today’s tech startups face increasing challenges in accessing computing resources to support their AI models. These applications perform many compute-intensive processes before model deployment. For smaller firms, accumulating large numbers of GPUs is essentially unsustainable, while traditional cloud computing services (like AWS or Google Cloud), although offering seamless and convenient developer experiences, ultimately lead to high costs due to limited capacity, making them unaffordable for many developers. Ultimately, not everyone can raise $7 trillion to cover their hardware expenses.

So what's the cause?

Nvidia has estimated that over 40K companies worldwide use GPUs for AI and accelerated computing, with a developer community exceeding 4 million. Looking ahead, the global AI market is projected to grow from $515 billion in 2023 to $2.74 trillion by 2032, at a CAGR of 20.4%. Meanwhile, the GPU market is expected to reach $400 billion by 2032, growing at a CAGR of 25%.
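As a quick sanity check on the AI market projection above, the implied compound annual growth rate can be recomputed from its endpoints. This is a minimal sketch: the function name `implied_cagr` is our own, and the only inputs are the figures quoted in the paragraph.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the constant annual growth rate that turns start_value into end_value."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures quoted above: $515B in 2023 growing to $2.74T ($2,740B) by 2032, i.e. 9 years.
ai_market_cagr = implied_cagr(515.0, 2740.0, 2032 - 2023)
print(f"Implied AI market CAGR: {ai_market_cagr:.1%}")
```

The result comes out at roughly 20.4%, consistent with the quoted rate. The GPU market figure cannot be checked the same way because the paragraph gives only its end value.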

However, following the AI revolution, the imbalance between supply and demand for computing resources is intensifying, potentially creating a dystopian future where transformative technologies are centrally dominated by a few well-funded mega-corporations. Therefore, we believe all roads lead toward decentralized alternative solutions to help bridge the gap between the needs of AI developers and available resources.

The Role of DePIN

What Are DePINs?

DePIN is a term coined by the Messari research team, standing for Decentralized Physical Infrastructure Networks. Specifically, decentralized means no single entity extracts rents or restricts access. Physical infrastructure refers to real-world physical resources being utilized. Network refers to a group of coordinated participants working toward a predefined goal or set of goals. Today, the total market cap of DePINs is approximately $28.3 billion.

At their core, DePINs consist of a global network of nodes connecting physical infrastructure resources to blockchains to create decentralized markets that connect buyers and suppliers of resources, where anyone can become a supplier and be rewarded for their service and value contribution to the network. In this context, centralized intermediaries that limit network access through legal and regulatory means and service fees are replaced by decentralized protocols composed of smart contracts and code, governed by their respective token holders.

The value of DePINs lies in their provision of decentralized, accessible, low-cost, and scalable alternatives to traditional resource networks and service providers. They enable decentralized markets to serve specific end goals; the cost of goods and services is determined by market dynamics, and anyone can participate at any time, naturally reducing unit costs due to increased supplier count and minimized profit margins.

Using blockchains allows DePINs to build cryptoeconomic incentive systems that ensure network participants are properly compensated for their services, turning key value contributors into stakeholders. However, it is important to note that network effects—achieving greater productivity by linking small individual networks into larger, more productive systems—are key to unlocking many benefits of DePINs. Additionally, while token rewards have proven effective as network bootstrapping tools, establishing sustainable incentives across the broader DePIN landscape to aid user retention and long-term adoption remains a critical challenge.

How Do DePINs Work?

To better understand the value of DePINs in enabling decentralized computing markets, it’s important to recognize the different structural components involved and how they work together to form decentralized resource networks. Let’s examine the structure and participants of a DePIN.

Protocol

Decentralized protocols—sets of smart contracts built atop underlying “base layer” blockchain networks—facilitate trustless interactions among network participants. Ideally, protocols should be governed by a diverse set of stakeholders actively contributing to the network’s long-term success. These stakeholders then vote using their share of protocol tokens on proposed changes and developments to the DePIN. Given that coordinating distributed networks itself is a significant challenge, core teams often retain initial authority to implement changes, later transitioning power to a Decentralized Autonomous Organization (DAO).
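Token-weighted voting of the kind described above can be illustrated with a small off-chain sketch. Everything here is an assumption made for illustration: the `Vote` type, the `tally` function, the one-token-one-vote weighting, and the quorum rule are hypothetical and do not describe any specific protocol's governance contract.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    voter: str
    tokens: float   # voting weight (assumption: one token, one vote)
    in_favor: bool


def tally(votes: list[Vote], quorum_tokens: float) -> str:
    """Return 'passed', 'rejected', or 'no quorum' for a token-weighted vote."""
    yes = sum(v.tokens for v in votes if v.in_favor)
    no = sum(v.tokens for v in votes if not v.in_favor)
    if yes + no < quorum_tokens:
        return "no quorum"
    return "passed" if yes > no else "rejected"


votes = [
    Vote("supplier_a", 400.0, True),
    Vote("supplier_b", 250.0, False),
    Vote("consumer_c", 100.0, True),
]
result = tally(votes, quorum_tokens=500.0)
print(result)
```

A quorum threshold is included because low turnout is a common concern when governance power is spread across many small holders.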

Network Participants

The end users of a resource network are its most valuable participants and can be classified by function.

- Suppliers: Individuals or entities providing resources to the network in exchange for monetary rewards paid in the DePIN’s native token. Suppliers “connect” to the network via blockchain-native protocols, which may enforce either whitelisted onboarding or permissionless processes. By receiving tokens, suppliers gain equity-like stakes in the network, enabling them to vote on various proposals and developments they believe will drive demand and network value, ultimately creating higher token prices over time. Of course, token-receiving suppliers may also treat DePINs as a form of passive income, selling their tokens upon receipt.

- Consumers: Individuals or entities actively seeking resources offered by the DePIN (such as AI startups looking for GPUs), representing the demand side of the economic equation. Consumers are drawn to DePINs if they offer tangible advantages over traditional alternatives (e.g., lower costs and overhead). DePINs typically require consumers to pay for resources using their native token, generating value and maintaining stable cash flow.

Resources

DePINs can serve different markets and adopt different business models for resource allocation. Blockworks offers a useful framework: Dedicated Hardware DePINs, which distribute proprietary, purpose-built hardware to suppliers; Commodity Hardware DePINs, which allow distribution of existing idle resources, including but not limited to computing, storage, and bandwidth.

Economic Model

In an ideally functioning DePIN, value comes from revenue paid by consumers for supplier-provided resources. Sustained demand implies sustained demand for the native token, aligning economic incentives for suppliers and token holders. Generating sustainable organic demand in early stages is challenging for most startups, which is why DePINs offer inflationary token incentives to attract early suppliers and bootstrap network supply as a means of generating demand and thus more organic supply. This mirrors how venture capital firms subsidized passenger fares during Uber’s early stages to bootstrap an initial customer base, further attracting drivers and strengthening its network effects.

DePINs need to strategically manage token incentives, as they play a crucial role in the network’s overall success. When demand and network revenue rise, token issuance should decrease. Conversely, when demand and revenue fall, token issuance should be used again to incentivize supply.
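The counter-cyclical rule described above (issue fewer tokens as revenue rises, more as it falls) can be sketched as a simple gap-filling function. This is an illustrative assumption rather than any protocol's actual emission schedule: `token_issuance`, `target_payout`, and `max_issuance` are hypothetical names, and all amounts are expressed in the same unit of value per epoch.

```python
def token_issuance(revenue: float, target_payout: float, max_issuance: float) -> float:
    """Subsidy needed so suppliers still receive target_payout in an epoch.

    Issuance shrinks as organic revenue rises and grows again when revenue
    falls, capped at max_issuance.
    """
    if min(revenue, target_payout, max_issuance) < 0:
        raise ValueError("inputs must be non-negative")
    gap = max(0.0, target_payout - revenue)
    return min(gap, max_issuance)


for revenue in (0.0, 40_000.0, 90_000.0, 120_000.0):
    issued = token_issuance(revenue, target_payout=100_000.0, max_issuance=80_000.0)
    print(f"revenue={revenue:>9,.0f}  issuance={issued:>9,.0f}")
```

Once revenue alone covers the target payout, issuance drops to zero, which is the point at which the network no longer relies on inflation to retain suppliers.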

To further illustrate what a successful DePIN network looks like, consider the “DePIN flywheel”—a positive feedback loop for bootstrapping DePINs. Summarized below:

- DePIN distributes inflationary token rewards to incentivize suppliers to contribute resources and establish a baseline level of consumable supply.

- Assuming supplier numbers begin to grow, competitive dynamics emerge within the network, improving the overall quality of goods and services provided until they surpass existing market solutions, gaining a competitive edge. This means the decentralized system outperforms traditional centralized service providers, a non-trivial achievement.

- Organic demand for the DePIN begins to form, providing legitimate cash flow to suppliers. This presents a compelling opportunity for investors and suppliers alike, further driving network demand and thus token price.

- Rising token prices increase supplier income, attracting more suppliers and restarting the flywheel.

This framework offers a compelling growth strategy, though it is largely theoretical and assumes the resources provided remain competitively attractive over time.

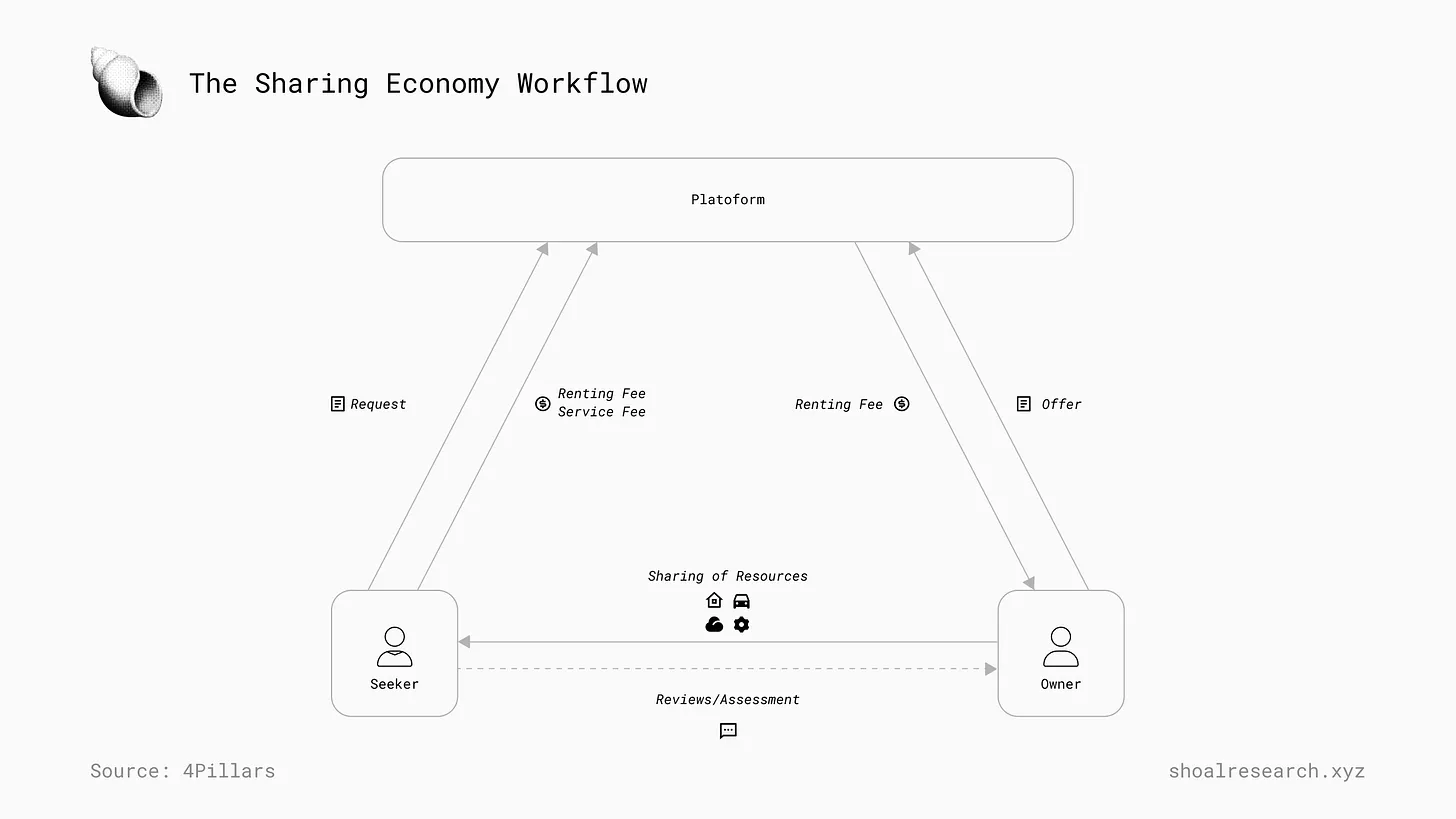

Compute DePINs

Decentralized computing markets belong to a broader movement—the “sharing economy”—a peer-to-peer economic system based on consumers directly sharing goods and services with each other via online platforms. Pioneered by companies like eBay and now dominated by Airbnb and Uber, this model is poised for disruption as the next generation of transformative technologies sweeps global markets. Valued at $150 billion in 2023, the sharing economy is projected to grow to nearly $800 billion by 2031, reflecting broader consumer behavior trends that we believe DePINs will benefit from and play a key role in.

Rationale

Compute DePINs are peer-to-peer networks that facilitate the allocation of computing resources through decentralized markets connecting suppliers and buyers. A key distinction of these networks is their focus on commodity hardware resources already in the hands of many people today. As discussed, the emergence of deep learning and generative AI has caused a surge in demand for processing power due to their resource-intensive workloads, creating bottlenecks in access to the critical resources AI development depends on. Simply put, decentralized computing markets aim to alleviate these bottlenecks by creating a new, global supply stream that anyone can join.

In a compute DePIN, any individual or entity can lend idle resources at any time and receive appropriate compensation. Simultaneously, any individual or entity can access necessary resources from a global, permissionless network at lower cost and greater flexibility than existing market offerings. Thus, we can describe participants in compute DePINs through a simple economic framework:

- Supply Side: Individuals or entities possessing computing resources and willing to lend or sell their computing power in exchange for compensation.

- Demand Side: Individuals or entities needing computing resources and willing to pay for them.

Key Advantages of Compute DePINs

Compute DePINs offer numerous advantages making them attractive alternatives to centralized service providers and markets. First, enabling permissionless cross-border market participation unlocks a new supply stream, increasing the quantity of critical resources needed for compute-intensive workloads. Compute DePINs focus on hardware resources most people already own—anyone with a gaming PC already has a GPU that can be rented out. This expands the scope of developers and teams capable of building next-generation goods and services, benefiting more people globally.

Furthermore, the blockchain infrastructure supporting DePINs provides an efficient and scalable settlement rail for facilitating micropayments required in peer-to-peer transactions. Crypto-native financial assets (tokens) provide a shared unit of value, with demand-side participants using it to pay suppliers, aligning economic incentives through a distribution mechanism consistent with today’s increasingly globalized economy. Referring back to our previously outlined DePIN flywheel, strategically managing economic incentives is highly beneficial for increasing network effects (on both supply and demand sides) in DePINs, which in turn increases competition among suppliers. This dynamic reduces unit costs while improving service quality, creating sustainable competitive advantages for DePINs from which suppliers—as token holders and key value contributors—can benefit.

DePINs resemble cloud computing service providers in their aim to deliver flexible user experiences, with resources accessible and payable on-demand. According to Grandview Research's projections, the global cloud computing market is expected to grow at a CAGR of 21.2%, reaching over $2.4 trillion by 2030, demonstrating the viability of such business models against a backdrop of rising future computing resource demand. Modern cloud platforms rely on central servers to handle all communication between client devices and servers, creating single points of failure in their operations. However, being built on blockchains allows DePINs to offer stronger censorship resistance and resilience compared to traditional service providers. Attacking a single organization or entity (e.g., a central cloud provider) jeopardizes the entire underlying resource network, whereas DePINs are designed via their distributed nature to withstand such events. First, blockchains themselves are globally distributed networks of dedicated nodes designed to resist centralized network authority. Additionally, compute DePINs allow permissionless network participation, bypassing legal and regulatory barriers. Depending on the nature of token distribution, DePINs can adopt fair voting processes to decide on proposed protocol changes and developments, eliminating the possibility of a single entity abruptly shutting down the entire network.

Current State of Compute DePINs

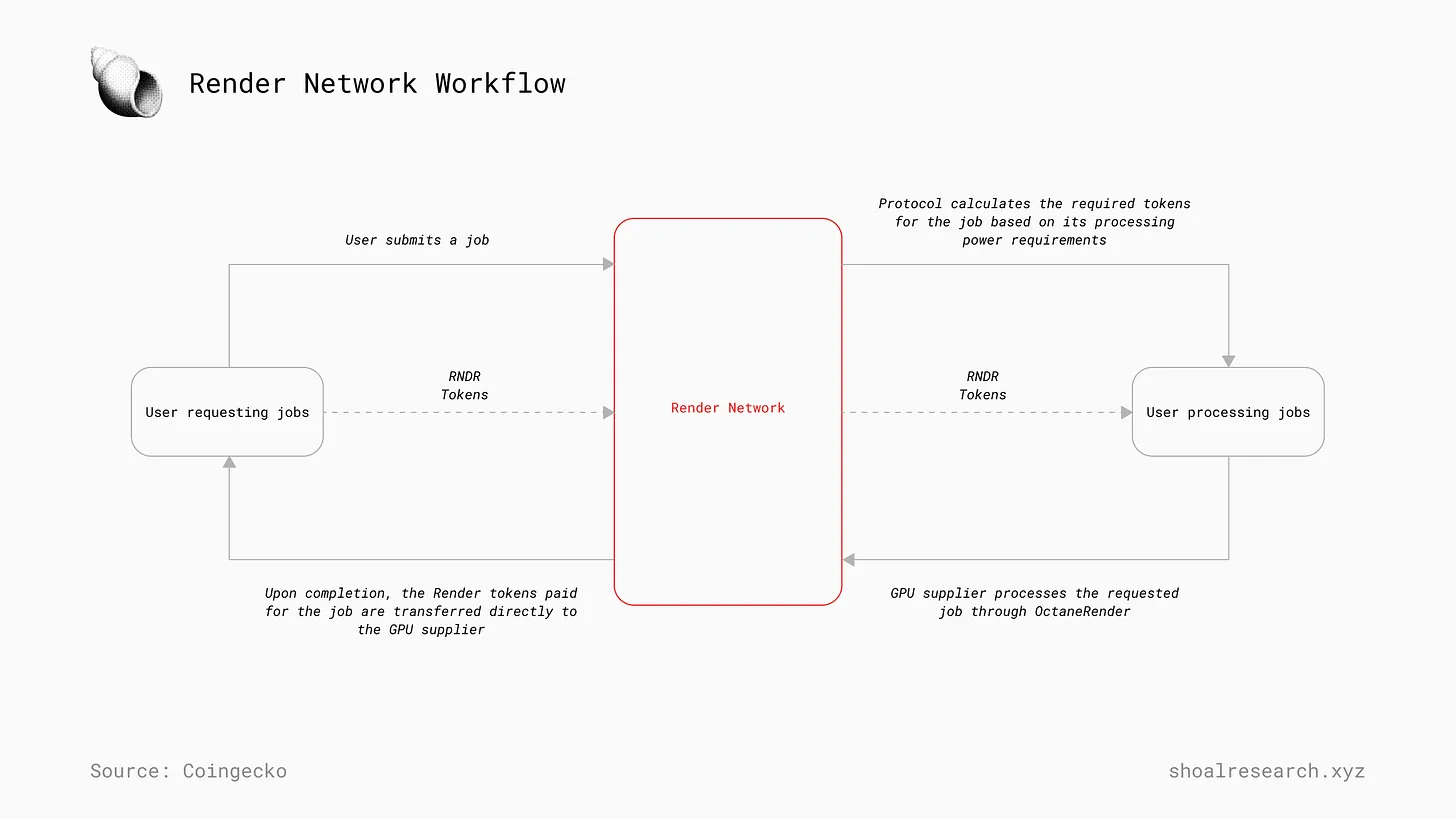

Render Network

Render Network is a compute DePIN that connects buyers and sellers of GPUs through a decentralized computing market, with transactions settled via its native token. Render’s GPU marketplace involves two key parties—creators seeking access to processing power and node operators renting out idle GPUs in exchange for compensation in native Render tokens. Node operators are ranked via a reputation system, and creators can select GPUs from a multi-tiered pricing system. The Proof-of-Render (POR) consensus algorithm coordinates operations, with node operators committing their computational resources (GPUs) to process tasks—specifically, graphic rendering jobs. Upon task completion, the POR algorithm updates the node operator’s status, including reputation score adjustments based on task quality. Render’s blockchain infrastructure facilitates work payments, providing a transparent and efficient settlement rail for suppliers and buyers to transact via the network token.

Render Network was initially conceived by Jules Urbach in 2009 and launched on Ethereum (RNDR) in September 2020, migrating to Solana (RENDER) about three years later to improve network performance and reduce operational costs.

As of this writing, Render Network has processed up to 33 million tasks (measured in rendered frames), with total nodes growing to 5,600 since inception. Approximately 60k RENDER has been burned, a process occurring during work credit distribution to node operators.

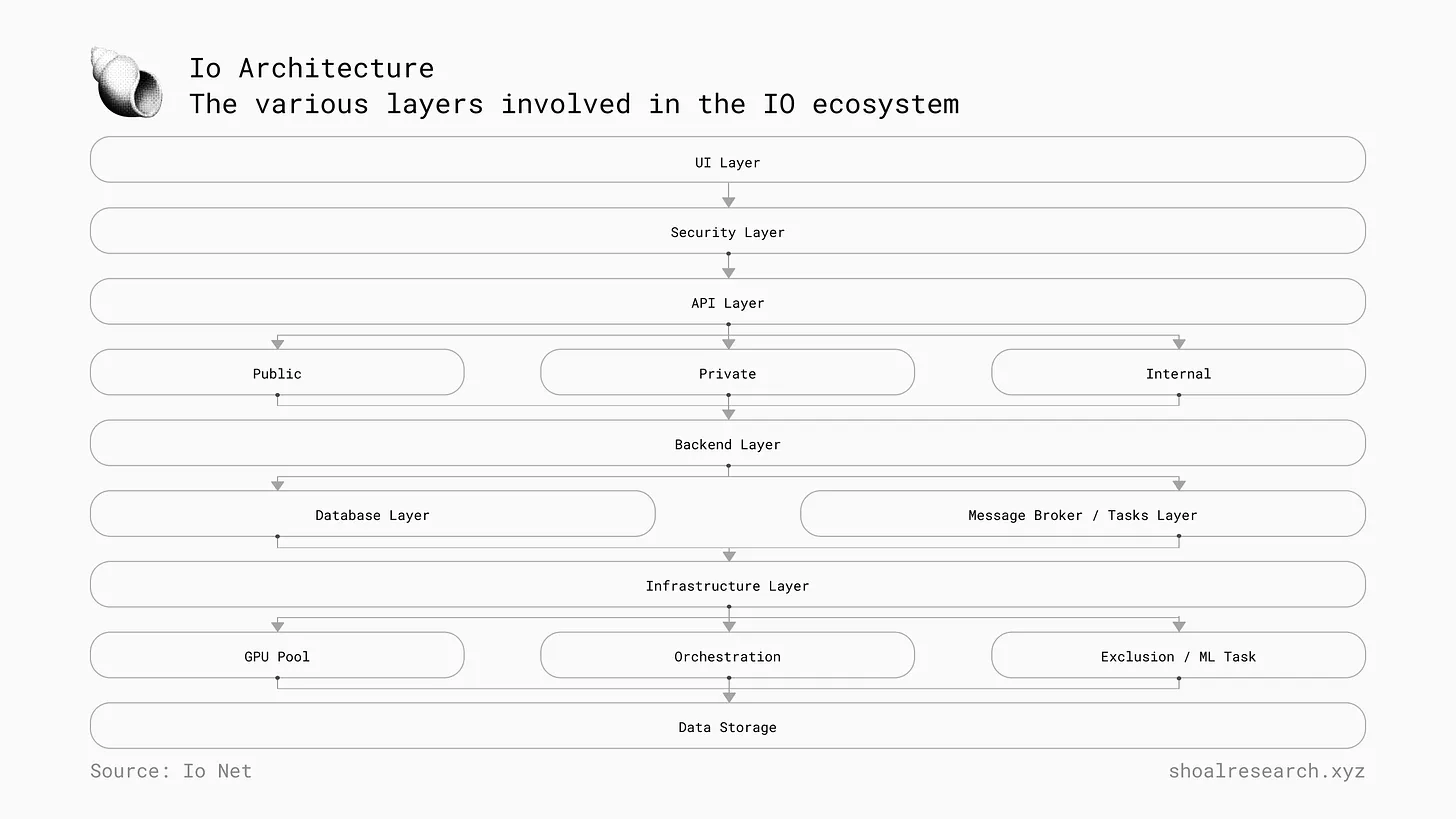

IO Net

Io Net is launching a decentralized GPU network on Solana, acting as a coordination layer between vast idle computing resources and individuals or entities needing the processing power they provide. Io Net’s unique value proposition is not direct competition with other DePINs in the market, but rather aggregating GPUs from various sources—including data centers, miners, and other DePINs such as Render Network and Filecoin—while leveraging its proprietary DePIN—the Internet-of-GPUs (IoG)—to coordinate operations and align market participant incentives. Io Net customers can customize their workload clusters on IO Cloud by selecting processor type, location, communication speed, compliance, and service duration. Conversely, anyone with a supported GPU model (12 GB RAM, 256 GB SSD) can participate as an IO Worker, lending idle computing resources to the network. While payments are currently settled in fiat and USDC, the network will soon support payments in its native $IO token. Resource pricing is determined by supply and demand, along with various GPU specifications and configuration algorithms. Io Net’s ultimate goal is to become the preferred GPU marketplace by offering lower costs and higher service quality than modern cloud service providers.
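The supply-side eligibility check and demand-side cluster selection described above can be sketched as a simple filter. This is a hypothetical illustration, not io.net's API: the `Worker` type, `is_eligible`, and `select_cluster` are invented names, the GPU model and region strings are placeholders, and the quoted 12 GB RAM / 256 GB SSD figures are treated here as minimum thresholds, which is an assumption.

```python
from dataclasses import dataclass

# Specs quoted in the text, treated as minimums (assumption).
MIN_RAM_GB = 12
MIN_SSD_GB = 256


@dataclass(frozen=True)
class Worker:
    worker_id: str
    gpu_model: str
    ram_gb: int
    ssd_gb: int
    location: str


def is_eligible(worker: Worker) -> bool:
    """Check the worker against the minimum RAM and SSD thresholds."""
    return worker.ram_gb >= MIN_RAM_GB and worker.ssd_gb >= MIN_SSD_GB


def select_cluster(workers: list[Worker], gpu_model: str, location: str, size: int) -> list[Worker]:
    """Pick up to `size` eligible workers matching the requested processor type and location."""
    matches = [
        w for w in workers
        if is_eligible(w) and w.gpu_model == gpu_model and w.location == location
    ]
    return matches[:size]


workers = [
    Worker("w1", "RTX 4090", 24, 512, "us-east"),
    Worker("w2", "RTX 4090", 8, 512, "us-east"),    # too little RAM
    Worker("w3", "RTX 4090", 24, 128, "us-east"),   # too little storage
    Worker("w4", "RTX 4090", 24, 1024, "eu-west"),  # wrong location
    Worker("w5", "RTX 4090", 16, 256, "us-east"),
]
cluster = select_cluster(workers, gpu_model="RTX 4090", location="us-east", size=2)
print([w.worker_id for w in cluster])
```

A real selection would also account for the other options the text mentions, such as communication speed, compliance, and service duration, which are omitted here for brevity.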

The multi-layer IO architecture can be mapped as follows:

- UI Layer – Composed of public website, customer portal, and Workers portal.

- Security Layer – This layer includes firewalls for network protection, authentication services for user verification, and logging services for tracking activity.

- API Layer – This layer serves as a communication layer, comprising public API (for website), private API (for Workers), and internal API (for cluster management, analytics, and monitoring reports).

- Backend Layer – Manages Workers, cluster/GPU operations, customer interactions, billing and usage monitoring, analytics, and auto-scaling.

- Database Layer – This layer acts as the system’s data repository, using primary storage (for structured data) and cache (for frequently accessed temporary data).

- Message Broker and Task Layer – Facilitates asynchronous communication and task management.

- Infrastructure Layer – Contains the GPU pool, orchestration tools, and manages task deployment.

Current Stats/Roadmap

As of this writing:

- Total Network Revenue – $1.08M

- Total Compute Hours – 837.6K hours

- Total Cluster-Ready GPUs – 20.4K

- Total Cluster-Ready CPUs – 5.6K

- Total On-Chain Transactions – 1.67M

- Total Inferences – 335.7K

- Total Clusters Created – 15.1K

(Data sourced from Io Net explorer)

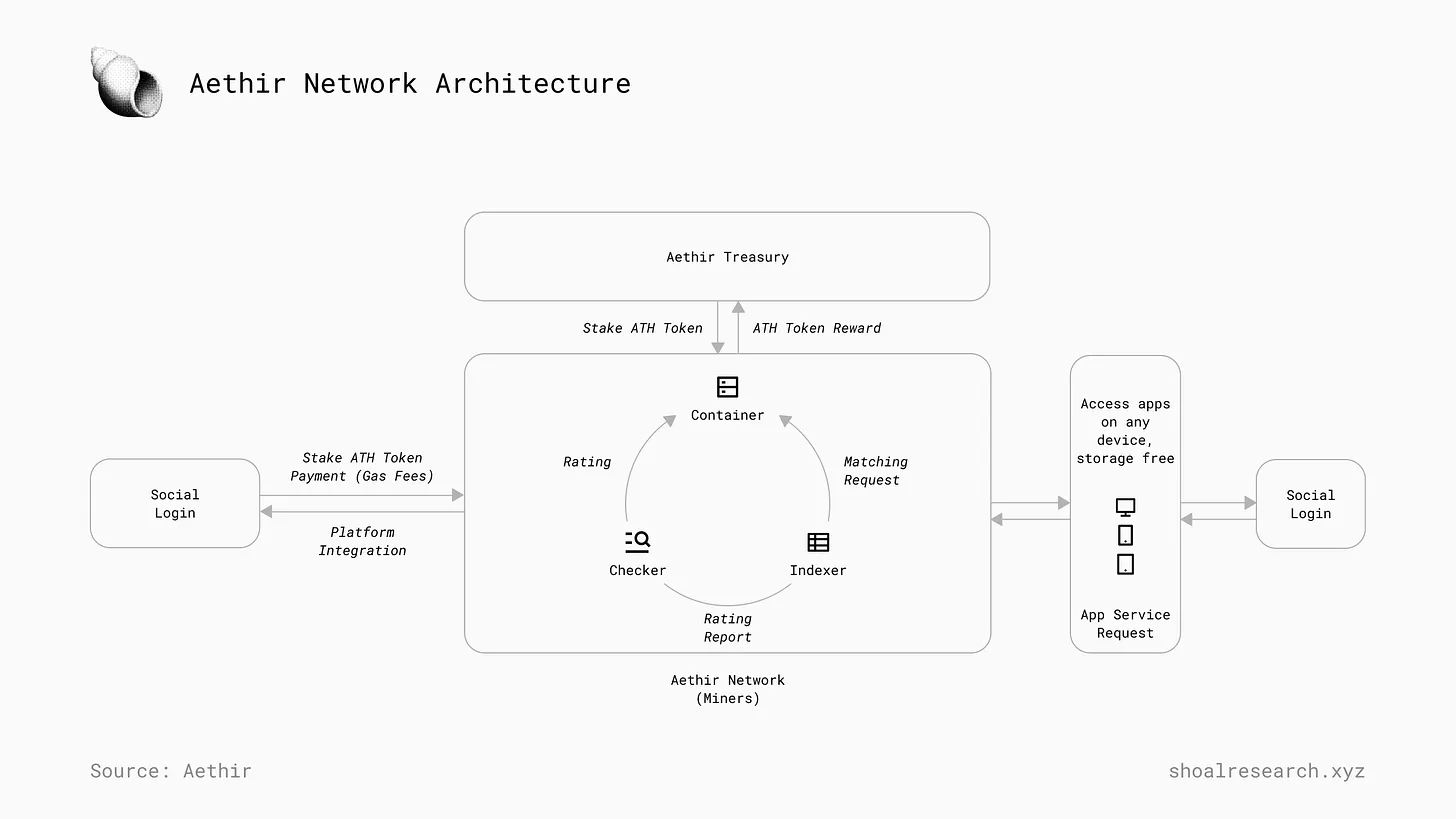

Aethir

Aethir is a cloud computing DePIN that facilitates the sharing of high-performance computing resources in compute-intensive domains and applications. It leverages resource pooling to achieve global GPU distribution at significantly reduced costs and enables decentralized ownership through distributed resource ownership. Designed specifically for high-performance workloads, Aethir serves industries such as gaming and AI model training and inference. By unifying GPU clusters into a single network, Aethir aims to increase cluster scale, thereby improving the overall performance and reliability of services offered on its network.

The Aethir Network is a decentralized economy composed of miners, developers, users, token holders, and the Aethir DAO. Three key roles ensure the network’s successful operation: Containers, Indexers, and Checkers. Containers are core nodes of the network performing vital operations to maintain network activity, including transaction validation and real-time rendering of digital content. Checkers act as quality assurance personnel, continuously monitoring container performance and service quality to ensure reliable and efficient operations for GPU consumers. Indexers match users with the best available containers. Underpinning this structure is the Arbitrum Layer 2 blockchain, which provides a decentralized settlement layer for paying for goods and services on the Aethir network using the native $ATH token.

Proof of Render

Nodes in the Aethir network have two key functions—Proof of Render Capacity, where a set of these worker nodes is randomly selected every 15 minutes to validate transactions; and Proof of Render Work, which closely monitors network performance to ensure users receive optimal service, adjusting resources based on demand and geography. Miner rewards are distributed to participants running nodes on the Aethir network, calculated based on the value of computing resources they contribute, with rewards paid in the native $ATH token.
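The periodic random selection described above can be illustrated with a seeded draw per 15-minute window. This is only a sketch under stated assumptions: `epoch_index` and `select_validators` are hypothetical names, and Python's `random` module stands in for whatever randomness source the network actually uses. A production system would need randomness that participants cannot predict or bias.

```python
import random

EPOCH_SECONDS = 15 * 60  # selection interval quoted in the text


def epoch_index(unix_time: int) -> int:
    """Map a timestamp to the index of its 15-minute window."""
    return unix_time // EPOCH_SECONDS


def select_validators(node_ids: list[str], unix_time: int, count: int) -> list[str]:
    """Pseudo-randomly pick `count` nodes for the window containing unix_time.

    Seeding with the window index makes the draw reproducible within a window.
    """
    rng = random.Random(epoch_index(unix_time))
    return rng.sample(sorted(node_ids), min(count, len(node_ids)))


nodes = [f"node-{i}" for i in range(10)]
first = select_validators(nodes, unix_time=1_700_000_000, count=3)
same_epoch = select_validators(nodes, unix_time=1_700_000_000 + 60, count=3)
print(first, first == same_epoch)
```

Sorting the node list before sampling keeps the result independent of the order in which nodes happen to be listed.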

Nosana

Nosana is a decentralized GPU network built on Solana. Nosana allows anyone to contribute idle computing resources and receive rewards in the form of $NOS tokens. The DePIN enables cost-effective GPU allocation usable for running complex AI workloads without the overhead of traditional cloud solutions. Anyone can run a Nosana node by lending idle GPUs, earning token rewards proportional to the GPU power they provide to the network.
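Rewards proportional to contributed GPU power amount to a pro-rata split of a reward pool. The sketch below shows that arithmetic only; `split_rewards`, the power units, and the pool size are hypothetical and are not taken from Nosana's documentation.

```python
def split_rewards(gpu_power: dict[str, float], reward_pool: float) -> dict[str, float]:
    """Split reward_pool across nodes in proportion to the GPU power each provides."""
    total = sum(gpu_power.values())
    if total <= 0:
        return {node: 0.0 for node in gpu_power}
    return {node: reward_pool * power / total for node, power in gpu_power.items()}


# Hypothetical power scores for two node operators and a 1,000-token pool.
rewards = split_rewards({"node_a": 30.0, "node_b": 10.0}, reward_pool=1_000.0)
print(rewards)
```

Here `node_a` supplies three quarters of the total power and therefore receives three quarters of the pool.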

The network connects two parties allocating computing resources: users seeking access to computing resources and node operators providing those resources. Key protocol decisions and upgrades are voted on by NOS token holders and managed by the Nosana DAO.

Nosana has laid out an extensive roadmap for its future—Galactica (v1.0 - H1/H2 2024) will launch the mainnet, release CLI and SDK, and focus on expanding the network via consumer GPU container nodes. Triangulum (v1.X - H2 2024) will integrate major machine learning protocols and connectors such as PyTorch, HuggingFace, and TensorFlow. Whirlpool (v1.X - H1 2025) will expand support for diversified GPUs including AMD, Intel, and Apple Silicon. Sombrero (v1.X - H2 2025) will add support for mid-to-large enterprises, fiat payments, invoicing, and team features.

Akash

The Akash Network is an open-source proof-of-stake network built on the Cosmos SDK, allowing anyone to join and contribute permissionlessly, creating a decentralized cloud computing market. The $AKT token secures the network, facilitates resource payments, and coordinates economic behavior among network participants. The Akash Network consists of several key components:

- Blockchain Layer, using Tendermint Core and Cosmos SDK for consensus.

- Application Layer, managing deployments and resource allocation.

- Provider Layer, managing resources, bids, and user application deployments.

- User Layer, enabling users to interact with the Akash network, manage resources, and monitor application status via CLI, console, and dashboard.

Initially focused on storage and CPU leasing services, the network has expanded its offerings to include GPU leasing and allocation in response to growing demand for AI training and inference workloads, via its AkashML platform. AkashML uses a “reverse auction” system, where customers (called tenants) submit their desired GPU prices, and computing suppliers (called providers) compete to supply the requested GPUs.
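The reverse auction described above can be sketched in a few lines: the tenant states the most it will pay, providers bid, and the cheapest qualifying bid wins. This is a simplified illustration and not Akash's actual matching logic; the `Bid` type, `run_reverse_auction`, and the per-GPU-hour pricing unit are assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    provider: str
    price: float  # hypothetical price per GPU-hour offered by the provider


def run_reverse_auction(max_price: float, bids: list[Bid]) -> Optional[Bid]:
    """Return the lowest bid at or below the tenant's maximum price, or None."""
    valid = [b for b in bids if b.price <= max_price]
    return min(valid, key=lambda b: b.price) if valid else None


bids = [Bid("provider_a", 1.40), Bid("provider_b", 1.10), Bid("provider_c", 1.75)]
winner = run_reverse_auction(max_price=1.50, bids=bids)
print(winner)
```

Because providers compete downward against the tenant's ceiling, the price paid tends toward the lowest cost at which any provider is willing to serve the request.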

As of this writing, the Akash blockchain has completed over 12.9 million transactions, with over $535,000 spent accessing computing resources and more than 189,000 unique deployments leased out.

Honorable Mentions

The compute DePIN space is still evolving, with many teams competing to bring innovative and efficient solutions to market. Other examples worth further exploration include Hyperbolic, building a collaborative open-access platform for resource pooling in AI development, and Exabits, building a distributed computing power network backed by compute miners.

Key Considerations and Future Outlook

Now that we’ve covered the fundamentals of compute DePINs and reviewed several current case studies, it’s important to consider the implications of these decentralized networks, including their strengths and weaknesses.

Challenges

Building distributed networks at scale often requires trade-offs in performance, security, and resilience. For example, training AI models on a globally distributed commodity hardware network may be far less cost-effective and time-efficient than doing so on centralized service providers. As previously mentioned, AI models and their workloads are becoming increasingly complex, requiring more high-performance GPUs rather than commodity GPUs.

This is why large enterprises stockpile high-performance GPUs and why compute DePINs aiming to address GPU shortages by establishing a permissionless market where anyone can rent out idle GPUs face inherent challenges (see this tweet for more on challenges faced by decentralized AI protocols). Protocols can address this in two key ways: establishing benchmark requirements for GPU providers wishing to contribute to the network, and pooling computing resources contributed to the network to achieve greater overall coherence. Nevertheless, building this model is inherently challenging compared to centralized service providers, who can allocate more funds to directly negotiate with hardware providers like Nvidia. This is something DePINs should consider moving forward. If a decentralized protocol holds sufficient capital, the DAO could vote to allocate part of it toward purchasing high-performance GPUs, which could be managed in a decentralized manner and rented out at premium rates above commodity GPUs.

Another challenge specific to compute DePINs is managing resource utilization. In their early stages, most compute DePINs will face structurally insufficient demand, much like many startups today. Generally, DePINs must build enough supply early on to reach minimum viable product quality. Without supply, the network cannot generate sustainable demand or serve its customers during peak periods. On the other hand, excess supply is also problematic. Beyond a certain threshold, additional supply only helps if network utilization is at or near full capacity. Otherwise, DePINs risk overpaying for supply that sits underutilized, and supplier revenues will decline unless the protocol increases token issuance to keep suppliers engaged.

A telecom network is useless without broad geographic coverage. A taxi network is useless if passengers must wait too long for rides. A DePIN is useless if it must pay people to provide resources long-term. Centralized service providers can forecast resource demand and efficiently manage supply, while compute DePINs lack a central authority to manage resource utilization. Therefore, strategically determining resource utilization is especially critical for DePINs.
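The utilization argument above can be made concrete with a small back-of-the-envelope model. This is a simplified sketch under stated assumptions, not a description of how any particular protocol prices its supply: it assumes a single flat price per GPU-hour, demand spread evenly across suppliers, and an optional flat token subsidy per supplied hour. The function name and all figures are hypothetical.

```python
def supplier_economics(demand_hours, supply_hours, price_per_hour,
                       subsidy_per_hour=0.0):
    """Return (utilization, average revenue per supplied GPU-hour).

    Assumes demand is spread evenly over all supplied hours and that
    unserved demand beyond available supply is simply lost.
    """
    if supply_hours <= 0:
        raise ValueError("supply_hours must be positive")
    served = min(demand_hours, supply_hours)
    utilization = served / supply_hours
    revenue = utilization * price_per_hour + subsidy_per_hour
    return utilization, revenue


# Same demand, growing supply: utilization and supplier revenue both fall.
for supply in (1_000, 2_000, 4_000):
    util, rev = supplier_economics(demand_hours=1_000, supply_hours=supply,
                                   price_per_hour=2.0)
    print(f"supply={supply} utilization={util:.0%} revenue/hour={rev:.2f}")
```

In this toy model, quadrupling supply against fixed demand cuts average supplier revenue to a quarter, and the only way to hold revenue constant without new demand is to raise `subsidy_per_hour`, which corresponds to the increased token issuance described above.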

A bigger question is whether the GPU shortage that decentralized GPU markets aim to address will persist at all. Mark Zuckerberg recently stated in an interview that he believes energy, not computing resources, will become the new bottleneck, as companies rush to build data centers at scale instead of hoarding computing resources as they do today. This implies potential reductions in GPU costs, but it also raises the question of how AI startups will compete with large companies on performance and quality of goods and services if proprietary data centers raise the overall standard for AI model performance.

The Case for Compute DePINs

To reiterate, the gap between the computational demands of increasingly complex AI models and the available supply of high-performance GPUs and other computing resources is widening.

Compute DePINs hold promise as innovative disruptors in computing markets that are currently dominated by major hardware manufacturers and cloud computing service providers, based on several key capabilities:

1) Offering lower costs for goods and services.

2) Providing stronger censorship resistance and network resilience guarantees.

3) Benefiting from potential regulatory guidelines that may require AI models to be as open as possible for fine-tuning and training, and easily accessible to all.

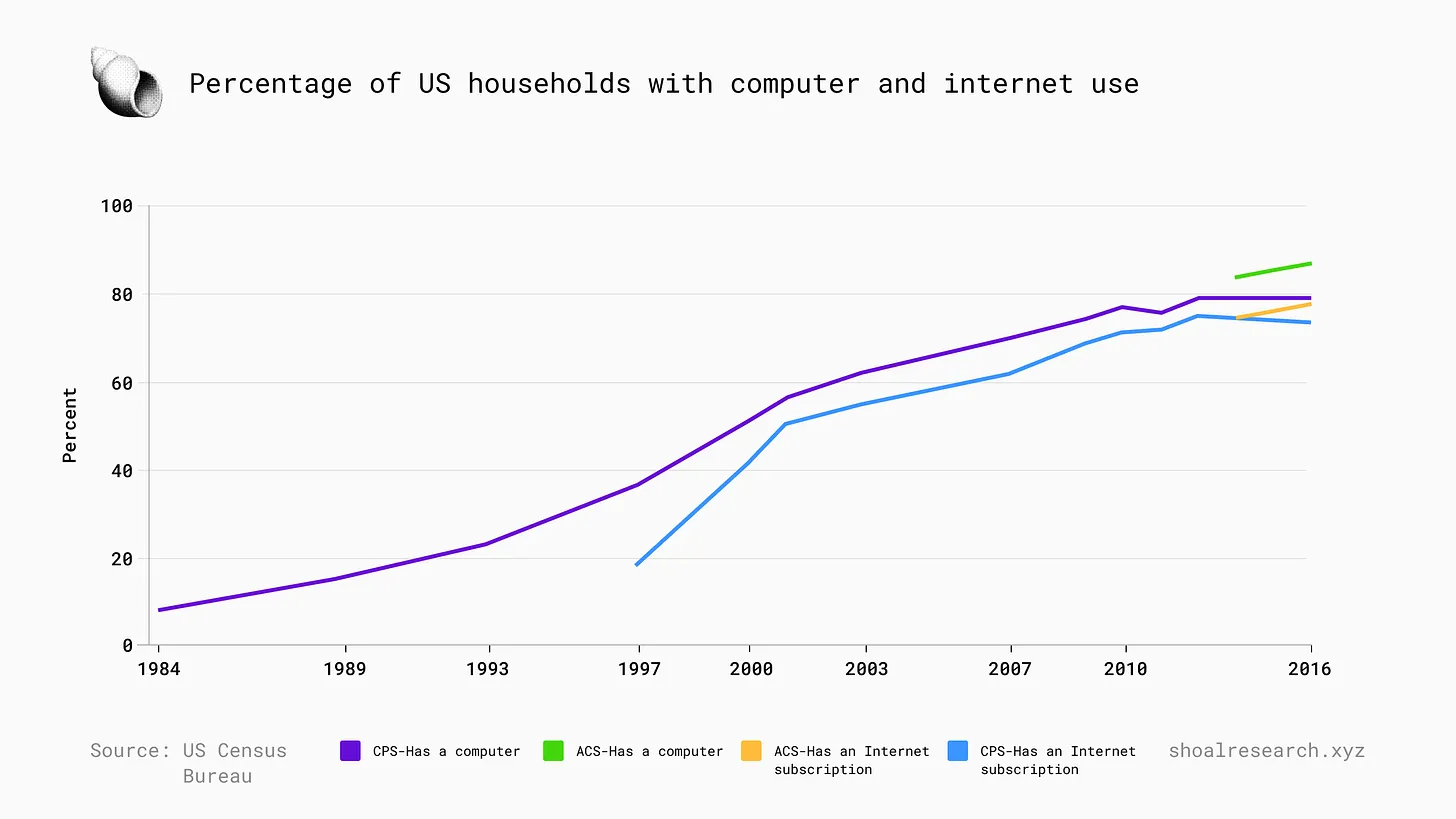

The proportion of U.S. households with computers and internet access has grown rapidly and is nearing 100%. Many regions globally have also seen significant growth. This points to an increasing number of potential computing resource providers (GPU owners) who, given sufficient monetary incentives and seamless transaction processes, would be willing to rent out idle supply. Of course, this is a very rough indicator, but it suggests that the foundation for a sustainable shared-economy model of computing resources may already exist.

Beyond AI, future computing demand will come from many other industries, such as quantum computing. The quantum computing market is projected to grow from $928.8 million in 2023 to $6,528.8 million by 2030, at a CAGR of 32.1%. Production in this sector will require different kinds of resources, and it will be interesting to see whether any quantum computing DePINs emerge and what they might look like.
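As a quick sanity check on the projection above, the compound annual growth rate can be recomputed from the two endpoint figures. The sketch below uses the standard CAGR formula and only the numbers quoted in the text; the function name is illustrative.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` periods."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Figures from the text, in millions of USD, 2023 to 2030.
rate = cagr(928.8, 6528.8, 2030 - 2023)
print(f"Implied CAGR: {rate:.1%}")
```

The result comes out at roughly 32.1%, which matches the quoted rate, so the projection is internally consistent.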

“An ecosystem of open models running on consumer hardware is an important hedge against a future where value is highly concentrated in AI and most human thought is read and mediated by central servers controlled by a few people. These models also carry far lower risks than those of corporate giants and militaries.” — Vitalik Buterin

Large enterprises may not be, and likely never will be, the target audience for DePINs. Compute DePINs bring individual developers, scattered builders, and startups with minimal funding and resources back into the game. They allow idle supply to be converted into innovative ideas and solutions, realized through richer computing capabilities. AI will undoubtedly transform the lives of billions. Instead of fearing that AI will replace everyone's jobs, we should encourage the idea that AI can empower individuals, independent entrepreneurs, startups, and the general public.
