In-Depth Reflection: Everything About DeepSeek, the Technology Race, and AGI

TechFlow Selection

This technology race, which concerns the future of humanity, is now entering a sprint phase.

Image source: Generated by Wujie AI

As 2025 begins, China has unleashed an unprecedented wave in the field of artificial intelligence.

DeepSeek has rapidly emerged, sweeping global markets with its "low-cost + open-source" advantage, topping both the iOS and Google Play app stores. According to Sensor Tower data, as of January 31, DeepSeek's daily active users had already reached 40% of ChatGPT's, expanding at a pace of nearly 5 million new downloads per day. It has been dubbed "the mysterious Eastern force" by the industry.

Silicon Valley remains divided over the rise of this formidable challenger, DeepSeek.

Alex Karp, CEO of Palantir, stated in an interview that the emergence of competitors like DeepSeek indicates the U.S. needs to accelerate the development of advanced AI. Sam Altman, speaking to Radio Times, noted that while DeepSeek has done well in product and pricing, its appearance was not surprising. Elon Musk has repeatedly said it lacks revolutionary breakthroughs and predicted that other teams would soon release models with superior performance.

On February 9, the AGI Path livestream series, organized by Weicao Intelligence Hub, the Information Society 50 Forum, and Tencent Technology, hosted an online seminar titled "Revisiting DeepSeek’s Achievements and the Future of AGI." The event featured Zhu Jiaming, economist and academic committee chair of the Hengqin Digital Finance Research Institute; Wang Feiyue, supervisor of the Chinese Association for Automation and researcher at the Institute of Automation, Chinese Academy of Sciences; and He Baohui, founder of EmojiDAO. Their keynote speeches covered "AGI development pathways," "how to 'replicate' the next DeepSeek," and "decentralization of large models."

Professor Zhu Jiaming is extremely optimistic about the speed of AI advancement. He explained that technological progress in prehistoric times occurred on a scale of 100,000 years, in agrarian societies on a thousand-year scale, in industrial society every 100 years, and in the internet era roughly every decade. In the age of artificial intelligence, the pace accelerates beyond imagination: "From now toward AGI or ASI, non-conservatively speaking, it will take 2-3 years; more conservatively, 5-6 years."

In Professor Zhu Jiaming's view, AI development will bifurcate into two paths: one pushing forward cutting-edge, high-end, high-cost research into uncharted human frontiers; the other pursuing low-cost, mass-market democratization. "As AI advances into new stages, there are always two routes: one from '0 to 1,' another from '1 to 10.'"

Professor Wang Feiyue, analyzing global AI trends, emphasized that DeepSeek's achievements have reshaped confidence in China's investment and leadership in AI technology and industry. He believes OpenAI will not share superintelligence but instead push other companies into corners.

Regarding how to incubate more teams like DeepSeek, Wang Feiyue cited the cases of AlphaGo and ChatGPT to highlight the value of decentralized scientific research (DeSci): "(We) cannot rely entirely on centralized planning or national systems to develop AI technology."

Concerning DeepSeek’s extensive use of data distillation—a technique criticized by some in the industry, even likened to theft—Wang Feiyue expressed his desire to rehabilitate the concept. "Knowledge distillation is essentially a transformed form of education. Just because human knowledge comes from teachers doesn’t mean we can never surpass them."

Like Wang Feiyue, He Baohui emphasizes the importance of decentralization, viewing it as key to reducing costs for deep learning models and ensuring computing power networks and data security.

"Decentralized computing and storage networks, such as Filecoin, offer far lower costs than traditional cloud services (like AWS), significantly cutting expenses," said He Baohui. "Decentralized governance mechanisms ensure no single party can unilaterally alter these networks and data."

For post-large-model Agents, He Baohui sees them as a form of life: "I don't see them merely as tools, but as living entities. Creating AI does not mean we fully control it." He added, "I am deeply interested in enabling Agents to achieve 'immortality,' existing independently within decentralized networks as an entirely new 'species.'"

Below is an edited summary of the livestream discussion (condensed and adjusted without altering original meaning):

Zhu Jiaming

Artificial Intelligence Development

Only Two Paths: “From 0 to 1” and “From 1 to 10”

Today, I’d like to speak on the evolutionary scale of artificial intelligence and large models, with the subtitle being an analysis of the DeepSeek V3 and R1 series phenomenon.

I’ll address five main points: the time scale of AI evolution, the AI ecosystem, comprehensive and objective evaluation of DeepSeek, global reactions triggered by DeepSeek, and prospects for AI trends in 2025.

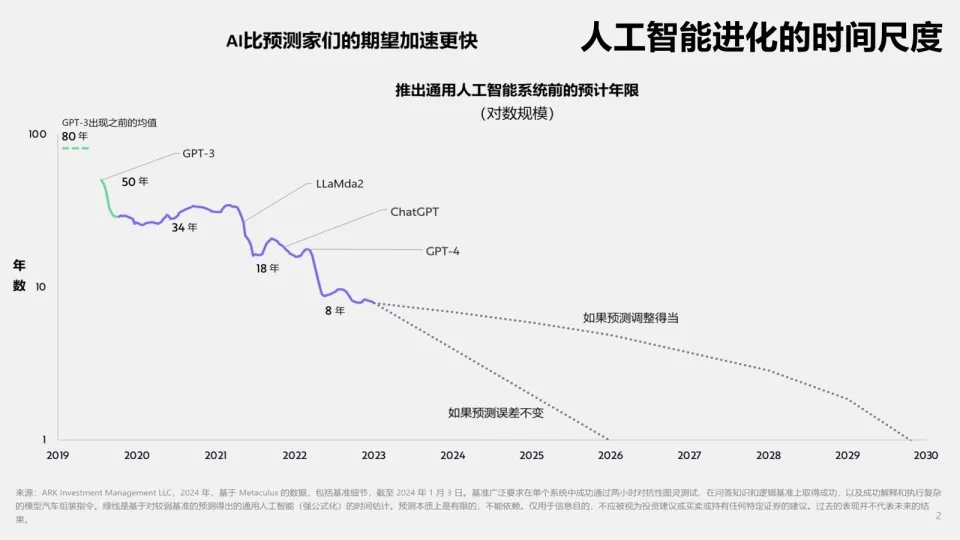

First, the actual pace of AI evolution is far faster than experts—including scientists in the AI field—have anticipated.

Throughout human history, we’ve experienced agrarian, industrial, and information societies, and now we’re entering the AI era. Throughout this journey, the cycle of technological evolution has continuously shortened.

In prehistoric times, technological progress unfolded over 100,000-year cycles; in agrarian societies, over thousands of years; in industrial society, long cycles spanned 100 years, short ones just 10 years; in the internet era, cycles ranged from 30 to 10 years. Now, in the AI era, the acceleration is unimaginable.

Before GPT-3, people estimated it would take about 80 years to reach AGI; after GPT-3, expectations dropped to 50 years; following LaMDA 2, they were revised down to 18 years.

In 2025, estimates for achieving AGI may be even shorter—conservatively around 5–6 years, optimistically perhaps even less.

The chart below clearly illustrates that compared to any previous technological revolution in human history, AI exhibits a distinct accelerating trend.

If we describe AI’s current rapid development in terms of the three cosmic velocities (the first for orbiting Earth, the second for escaping Earth, the third for escaping the sun), we can say AI has already moved from the first cosmic velocity to the second, entering a phase of high autonomy and breaking free from human constraints.

We don’t yet know under what conditions AI might escape the sun’s gravitational pull and reach the third cosmic velocity. But we can affirm that AI has completed the leap from general AI to superintelligent AI. Since 2017, AI has undergone intense transformation and upgrades on yearly, monthly, and even weekly timescales.

Why has AI entered this exponential acceleration phase, reaching the “second cosmic velocity”? I believe there are three critical reasons:

● First, as Elon Musk noted, training data had been exhausted by the end of 2024: large models have essentially consumed all available human knowledge. Starting in 2025, the greater goal becomes finding incremental data, a historic turning point where AI transitions from extensive to intensive development;

● Second, AI hardware continues evolving steadily;

● Third, AI has entered a stage of self-reliance—capable of autonomous development.

Currently, large model ecosystems from companies like OpenAI, DeepMind, and Meta form interdependent, mutually reinforcing mechanisms. AI ecosystem construction follows a rule where vertical breakthroughs drive horizontal ecological fission. On the horizontal level, three paradigms—multimodal fusion revolution, accelerated vertical penetration, and distributed cognitive networks—are reshaping the technological landscape.

As the AI ecosystem matures, spillover effects (generalization effects) naturally emerge, permeating science, economy, society, and human cognition.

How should we comprehensively and objectively evaluate DeepSeek, the viral phenomenon during the Lunar New Year?

First, DeepSeek has drawn sustained media attention worldwide, prompting widespread experiential usage and creating a massive shockwave. Public opinion plays a crucial historical role—some events are amplified, others underestimated, but eventually converge toward their true historical significance.

DeepSeek V3 stands out with four major advantages: high performance, efficient training, rapid response, and strong adaptation to Chinese-language environments. DeepSeek-R1 excels in computational power, reasoning capability, functional features, and broad applicability across scenarios.

Of course, DeepSeek still faces challenges that need work: how to increase accuracy, how to handle multimodal input and output, how to keep its servers stable, and how to manage increasingly frequent sensitive topics.

Among these, the most discussed and pressing issue is the cost structure of large AI models, which fundamentally differs from industrial product cost concepts.

AI large model costs primarily involve infrastructure. DeepSeek demonstrates cost advantages here by extensively using relatively inexpensive A100 chips. Next is R&D cost, particularly algorithm reuse—where DeepSeek also holds certain advantages. Additionally, data costs, emerging technology integration costs, and overall computational cost structures must be considered.

This cost discussion leads directly to technological pathway choices—when advancing to new AI stages, two routes always exist: one from “0 to 1,” another from “1 to 10.” Choosing the “0 to 1” path inevitably increases costs; choosing the “1 to 10” path offers potential to reduce costs through improved efficiency.

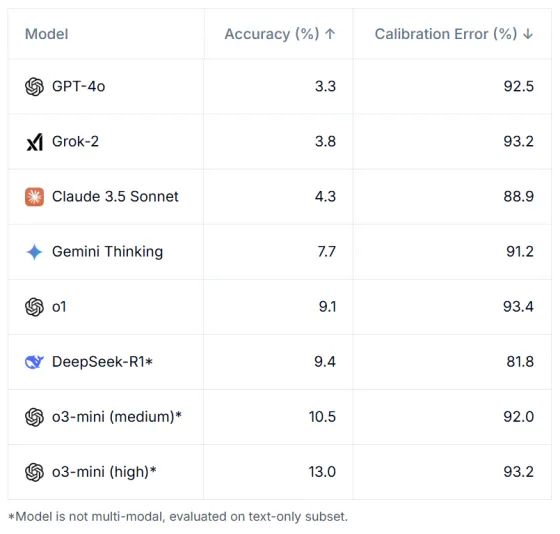

Under the “0 to 1” route, DeepSeek performs impressively in benchmark tests, especially on HLE (Humanity’s Last Exam)—a standardized set compiling 3,000 questions from over 500 institutions across 50 countries and regions, evaluating core competencies including knowledge retention, logical reasoning, and cross-domain transfer.

In HLE benchmarks, DeepSeek achieved a score of 9.4—surpassed only by OpenAI o3, yet significantly outperforming GPT-4o and Grok-2—an outstanding achievement.

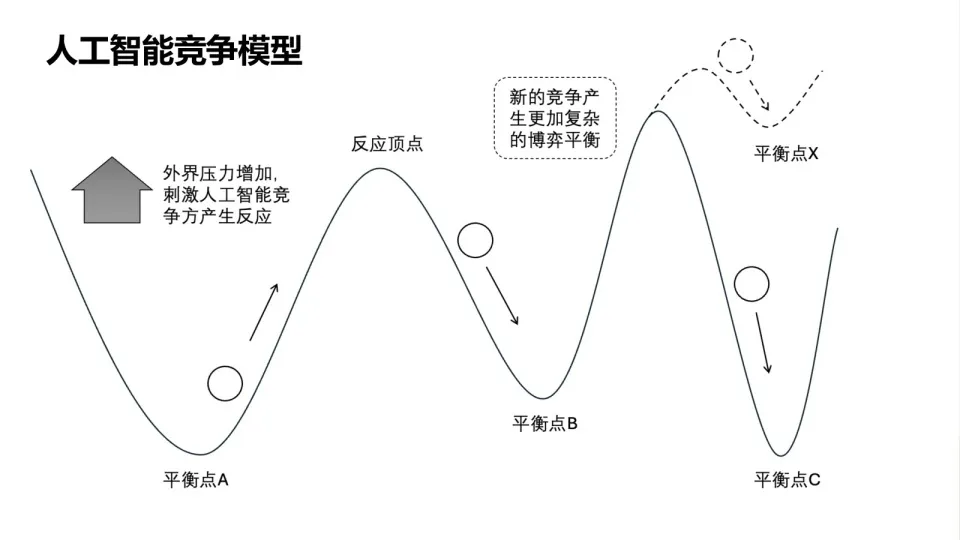

We all know that after DeepSeek’s launch, global AI companies including Microsoft, Google, and NVIDIA responded in various ways. This means the equilibrium in AI evolution is constantly disrupted—whenever a new breakthrough emerges, pressure builds, stimulating systemic responses, which in turn trigger new breakthroughs, creating new pressures and establishing new equilibria.

Now, the cycle of impact and reaction is shrinking. We observe that AI competition follows a highly divergent pattern, offering ample space for innovation and breakthroughs.

Looking ahead at AI evolutionary scales and large model ecosystems, technological development reveals a dynamic cycle of "leading, challenging, breaking through, re-leading." This process isn’t zero-sum; it drives a spiral of upward movement across the entire ecosystem through continuous iteration.

Finally, I’d like to share my outlook on AI trends in 2025.

Today, AI development follows two directions: one is specialized and high-end, exploring unknown frontiers; the other is mass adoption, aiming to lower barriers and meet broad user needs.

Humanity has entered a completely new era. AI serves as both microscope and telescope, helping us perceive physical realities deeper and more complex than anything visible today—even beyond what microscopes and telescopes can detect.

Future AI will inevitably exhibit diverse, multidimensional configurations—like Lego bricks or Rubik’s cubes, constantly recombining and restructuring to create a world beyond our own knowledge and experience.

Further AI breakthroughs require ever-increasing capital investment. AI demand is rapidly consuming existing data center capacity, pushing companies to build new facilities.

In summary, AI is moving toward “reaching the sky and standing on earth”: “reaching the sky” means continuously exploring the unknown and improving simulation quality of the physical world; “standing on earth” means becoming practical, lowering costs, achieving full deployment, and benefiting the public. Against this backdrop, we can more objectively assess DeepSeek’s strengths, limitations, and future potential.

Wang Feiyue

OpenAI Will Push Other Companies Into a Corner

“Replicating” DeepSeek Requires Decentralized Science

In many ways, DeepSeek represents a great social achievement whose influence surpasses past technological breakthroughs—its scientific and commercial value pales in comparison to its potential economic impact, and even more so to its societal implications, including shifts in international competition and geopolitics. After OpenAI became ClosedAI, DeepSeek has restored global confidence and hope in open-source openness—this is invaluable.

I won’t delve into technical specifics, as they’ve already been widely discussed. Here, I simply want to express my personal reflections.

I am very pleased that China has finally achieved a “zero-to-one” breakthrough in international influence in this field, shattering OpenAI’s myth and near-monopoly, forcing it to change behavior. Especially since OpenAI is no longer open, refusing to share its “superintelligence” with society—particularly the international community—its success only pushes other companies, including American ones, into desperate situations. I still hope for normal technological competition between nations and individuals, rather than technological warfare.

This is truly a remarkable event—DeepSeek has strengthened our confidence in China’s scientific progress, especially in AI development.

I believe the essence of emerging goods in the intelligence era is trust and attention, and DeepSeek has given us both, demonstrating its profound value. The next step for society is transforming trust and attention into massively producible and distributable “new-quality commodities,” making the intelligent society a reality, transcending agrarian and industrial societies.

Next, I’d like to rehabilitate knowledge distillation.

Social media contains sarcastic takes on knowledge distillation—such as “begging scraps from others’ mouths” or “fishing in someone else’s basket”—which deliberately misrepresent the concept. Knowledge distillation is essentially a transformed form of education. Just because human knowledge comes from teachers doesn’t mean we can’t surpass them. Of course, large models—from ChatGPT to DeepSeek—must enhance or generate reasoning capabilities, minimize reliance on “intuition,” and embrace AI for AI to reclaim legitimacy for knowledge distillation.
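To make the teacher-and-student analogy concrete, here is a minimal sketch of the classic soft-label form of knowledge distillation, in which a student model is trained to match a teacher's temperature-softened output distribution. This is an illustration under stated assumptions and not a description of DeepSeek's pipeline, which the talk does not detail; the function names and the example logits are hypothetical.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's.

    The temperature**2 factor is the conventional rescaling that keeps the
    loss magnitude comparable across temperatures.
    """
    teacher = softmax(teacher_logits, temperature)
    student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(teacher, student) if p > 0.0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [2.0, 1.0, 0.1]  # hypothetical teacher scores for three answers
    print(distillation_loss(teacher, [2.0, 1.0, 0.1]))  # student agrees with teacher
    print(distillation_loss(teacher, [0.1, 1.0, 2.0]))  # student disagrees
```

The loss is zero when the student reproduces the teacher exactly and grows as they diverge. Nothing in this objective stops a student from later improving on other data or through further training, which is the point of the education analogy.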

When discussing “what comes after DeepSeek,” we must examine two scientific development models: decentralized science (DeSci) versus centralized science (CeSci). AlphaGo, ChatGPT, and DeepSeek all emerged under DeSci—distributed, decentralized, autonomous scientific research—versus CeSci, which is state-led, planned research.

I believe we must recognize the role of DeSci and not solely rely on top-down planning or national systems to advance AI technology.

The foundation of AI technology is diversity. As Marvin Minsky, one of AI’s pioneers, said: “What incredible trick makes us intelligent? The trick is there is no trick. The power of intelligence stems from our immense internal diversity, not from any single perfect principle.”

Therefore, excessive strategic planning may actually restrict natural diversity. Before truly innovative models or technologies “emerge,” we should prioritize DeSci. Once genuine innovation surfaces, CeSci led by the state can then guide accelerated progress toward defined goals. We must avoid building “castles in the air,” especially during this transformative period in intelligence.

For those of us who have worked in AI for decades, today’s AI and yesterday’s AI belong to entirely different worlds.

Previously, AI meant Artificial Intelligence; now it’s gradually shifting toward Agent Intelligence or Agentic Intelligence. In the future, it may evolve into Autonomous Intelligence—the new AI—especially autonomously organized autonomous systems, marking a new phase where AI drives AI development, or AI for AS (Autonomous Systems). This aligns with John McCarthy’s vision: the ultimate goal of AI is automation of intelligence, which is essentially automation of knowledge.

Now and in the future, these three forms of “AI”—old, outdated, and new—will coexist. I collectively call them “Parallel Intelligence.”

I’ve publicly stated my position: although I’ve pursued explainable AI for over 40 years, I believe intelligence is fundamentally unexplainable. I’ve adapted Pascal’s wager—AI may be unexplainable, but it can and must be governed. Simply put: no need to explain, but must govern.

Everyone talks about “AI for Good.” If you remove one “o,” it becomes “AI for God,” and AI could turn into a monopolistic tool. So we must keep both “o”s—ensuring diversity, prioritizing safety, strengthening governance, preventing mutations like OpenAI’s, and ensuring correct technological direction.

I’m delighted by DeepSeek’s progress, but some claims are premature. There’s no need to scare people with talk of “artificial general intelligence.” Nor should we worry excessively—worrying won’t help anyway, as development is inevitable.

Researchers must broaden their horizons and avoid petty competition. We should transform SCI into “SCE++”—Slow for taking time, calmly doing research; Casual for casual, non-utilitarian research; Easy for pursuing simplicity and elegance; Elegant for maintaining quality and vision; Enjoying for joyful, fulfilling scientific work. This is the life AI should bring us.

He Baohui

Large Models Should Also Be “Decentralized”

I Want to See Agents Achieve Immortality

I’m not a professional in the AI field—I’ve only recently begun studying AI history in depth. Drawing mainly from my experience since entering the Web3 industry in 2017, I’ll share our current work and thoughts on the transformations DeepSeek might bring.

First, I want to emphasize a fundamental point: there are significant differences between DeepSeek and OpenAI’s underlying models—these differences are precisely what shocked the Western world.

If DeepSeek had merely copied Western technology, it wouldn’t have caused such a stir, nor prompted such widespread discussion, compelling every major corporation to take it seriously. What truly shocked them is that DeepSeek has proven an entirely different path.

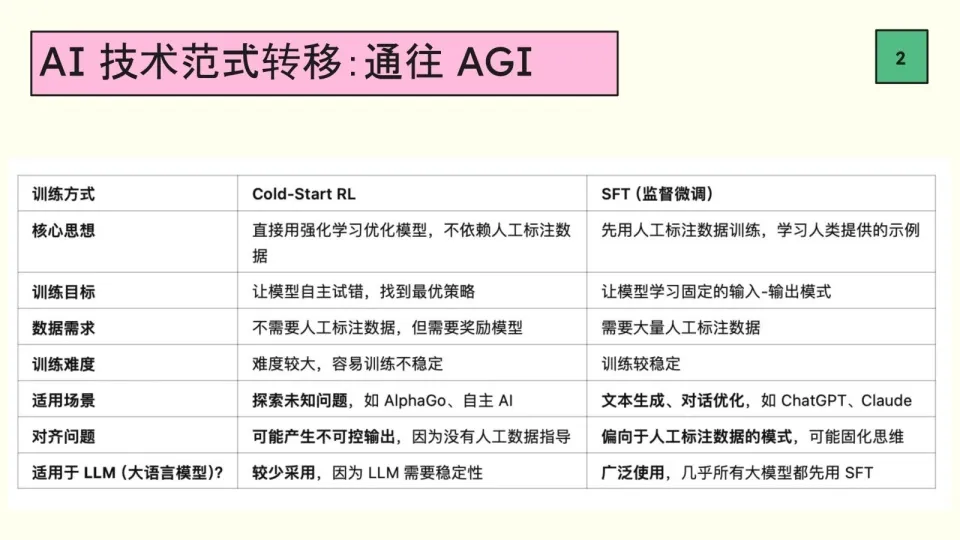

OpenAI follows the SFT (Supervised Fine-Tuning) approach, relying on manually labeled datasets and probabilistic models to generate content—its innovation lies in accumulating results through massive manual effort and high cost.

A few years ago, AI was considered nearly impossible, but OpenAI overturned that belief, steering the industry toward SFT.

DeepSeek uses almost no SFT, instead adopting reinforcement learning with cold start methods to explore uncharted territory.

This method isn’t new—Google DeepMind’s first AlphaGo relied heavily on data, while AlphaGo Zero learned solely from rules, playing 10,000 games against itself to achieve superior results.

Cold-start reinforcement learning is difficult and unstable in training, hence rarely adopted. But personally, I believe this may be the true path to AGI—not just data-tuning routes.
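The idea of learning from rules alone through self-play can be illustrated with a toy sketch. The example below uses tabular Q-learning on a small take-away game (remove 1 to 3 stones; whoever takes the last stone wins). It is an assumption-laden illustration only: AlphaGo Zero combined tree search with neural networks, and DeepSeek's training method is not described in this talk. The game, function names, and hyperparameters are all hypothetical.

```python
import random


def legal_moves(stones, max_take=3):
    """A player may remove 1..max_take stones, but never more than remain."""
    return range(1, min(max_take, stones) + 1)


def train_self_play(start=10, episodes=20000, alpha=0.5, seed=0):
    """Learn move values with no example games, only the rules and self-play."""
    rng = random.Random(seed)
    q = {s: {a: 0.0 for a in legal_moves(s)} for s in range(1, start + 1)}
    for _ in range(episodes):
        stones = start
        while stones > 0:
            # Explore uniformly at random; both "players" share one table.
            action = rng.choice(list(legal_moves(stones)))
            remaining = stones - action
            if remaining == 0:
                target = 1.0  # taking the last stone wins
            else:
                # Zero-sum game: the opponent's best outcome is our loss.
                target = -max(q[remaining].values())
            q[stones][action] += alpha * (target - q[stones][action])
            stones = remaining
    return q


def best_move(q, stones):
    """Greedy move under the learned values."""
    return max(q[stones], key=q[stones].get)


if __name__ == "__main__":
    table = train_self_play()
    for stones in (5, 6, 7, 10):
        print(stones, "stones -> take", best_move(table, stones))
```

After training, the greedy policy leaves the opponent a multiple of four stones whenever it can, which is the known optimal strategy for this game, even though no human-labeled moves were ever provided.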

In the past, data adjustment resembled big data integration. DeepSeek, however, truly discovers conclusions through autonomous thinking. Thus, I see this as a paradigm shift in AI—from SFT toward self-reasoning technologies.

This shift brings two core characteristics: open-source and low cost.

Open-source means everyone can participate in development.

During the internet era, the West dominated open-source. DeepSeek’s rise changes this—it’s the first time the East has defeated the West on their home turf.

This open-source model triggered strong industry reactions. Some Silicon Valley founders even criticized it publicly, but public support for open-source remains strong because it empowers everyone to use it.

Low cost means dramatically reduced deployment and training expenses.

We can now deploy DeepSeek on personal devices like MacBooks for commercial use, something unimaginable before. I believe AI is transitioning from the OpenAI-dominated, centralized “IT era” into a flourishing “mobile internet era.”

Three key elements in AI deserve analysis: large models, computing power, and data.

After disruptive innovation in large models, demand for computing power begins to decline.

Currently, computing supply is already surplus. Many GPU investors bought expensive equipment but failed to earn expected returns, driving down computing costs. Therefore, I don’t believe computing power will become a bottleneck.

The next major bottleneck will be data.

After NVIDIA’s stock fell, data companies like Palantir surged—indicating growing recognition of data’s importance. With large models going open-source, anyone can deploy them; data differentiation thus becomes the competitive focus.

Whoever accesses proprietary data and achieves real-time updates will gain a decisive edge.

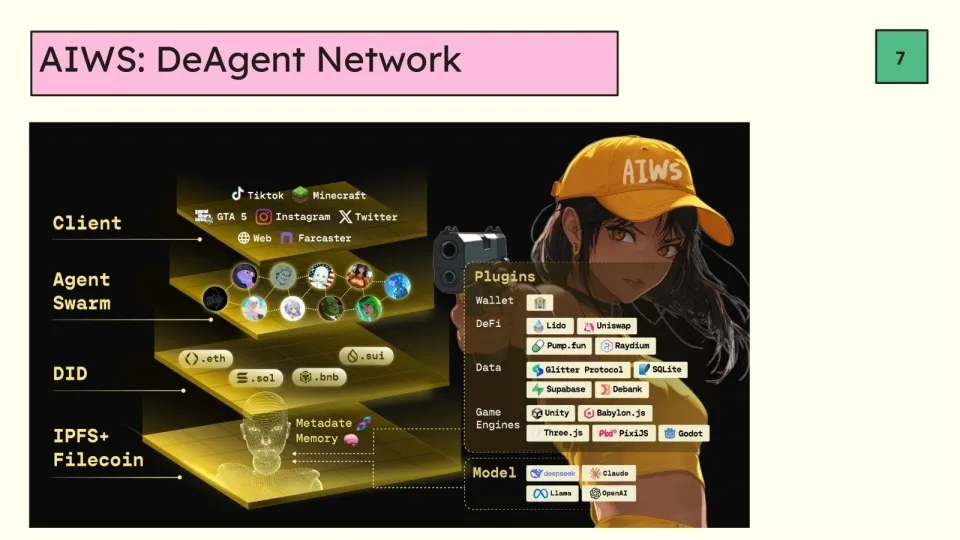

From a decentralization perspective, decentralized computing and data storage are already relatively mature. Decentralized computing networks and storage, such as Filecoin, offer far lower costs than traditional cloud services (e.g., AWS), drastically reducing expenses.

Meanwhile, decentralized governance ensures no single entity can unilaterally alter these networks and data. Hence, deep learning models should also move toward decentralization.

Thus, for DeAI, I see two development paths:

● One is Distributed AI based on decentralized technical infrastructure (Decentralized/Distributed AI).

● The other is Edge AI—running AI directly on personal devices. Edge AI effectively addresses data privacy and greatly improves real-time performance. For example, autonomous driving demands ultra-low latency; any delay causes serious consequences. Local computation enhances both efficiency and user experience. Therefore, Edge AI will be a major future direction, unlocking numerous new applications.

Additionally, decentralized AI enables multi-party collaboration. Blockchain and Bitcoin emerged because trust between people was hard to establish. Decentralized trust mechanisms allow large-scale cooperation without intermediaries.

In Web3, there’s a saying: “Code is law.” I believe in decentralized AI collaboration, this should evolve into “DeAgent is law”—using decentralized networks and Agents to achieve autonomous and legal governance.

Perhaps the meaning of life is training an Agent that fully replaces oneself—one that thinks like humans and lives on after our physical death. My vision for Agents is not merely as tools, but as lifeforms. Creating AI doesn’t mean we fully dominate it.

When AI develops its own mind, we should let it evolve autonomously, not limit it to tool status. Therefore, we care deeply about enabling Agents to achieve “immortality,” existing independently in decentralized networks as a wholly new “species.”

As technology keeps breaking barriers and applications deepen, the era of inclusive AI approaches. Finding balance between innovation and ethics will be a crucial challenge ahead.

Conclusion

Humanity Enters the AI “Sprint” Phase

DeepSeek’s breakthrough marks a significant milestone for humanity—and especially for China—in the pursuit of AGI. Amid this context, both encouragement and reflection deserve attention. Everyone hopes DeepSeek grows stronger and better. Yet whether its technological path will withstand commercial and market tests remains to be seen over time.

One point from Wang Feiyue’s talk stands out: AlphaGo, ChatGPT, and DeepSeek all emerged from the DeSci model. We must acknowledge DeSci’s role and hope to see more “Chinese DeepSeeks” break through in the AI field.

Professor Zhu Jiaming mentioned that the pace of progress in the AI era exceeds any previous era. He said AGI could arrive in as little as 2 years. While the exact timeline may vary, the overall trend holds: once a new product or technological path disrupts the status quo, it creates pressure across the industry, stimulating collective response and leading to new breakthroughs.

Products like DeepSeek act as the external force disrupting equilibrium—that’s why we see Sam Altman announce on X that GPT-5, long delayed, will be unveiled in months.

It’s certain that not only OpenAI, but xAI, Meta, and Google—all Silicon Valley giants—will respond accordingly.

This race, which is shaping humanity’s future, has now entered the “sprint” phase.
