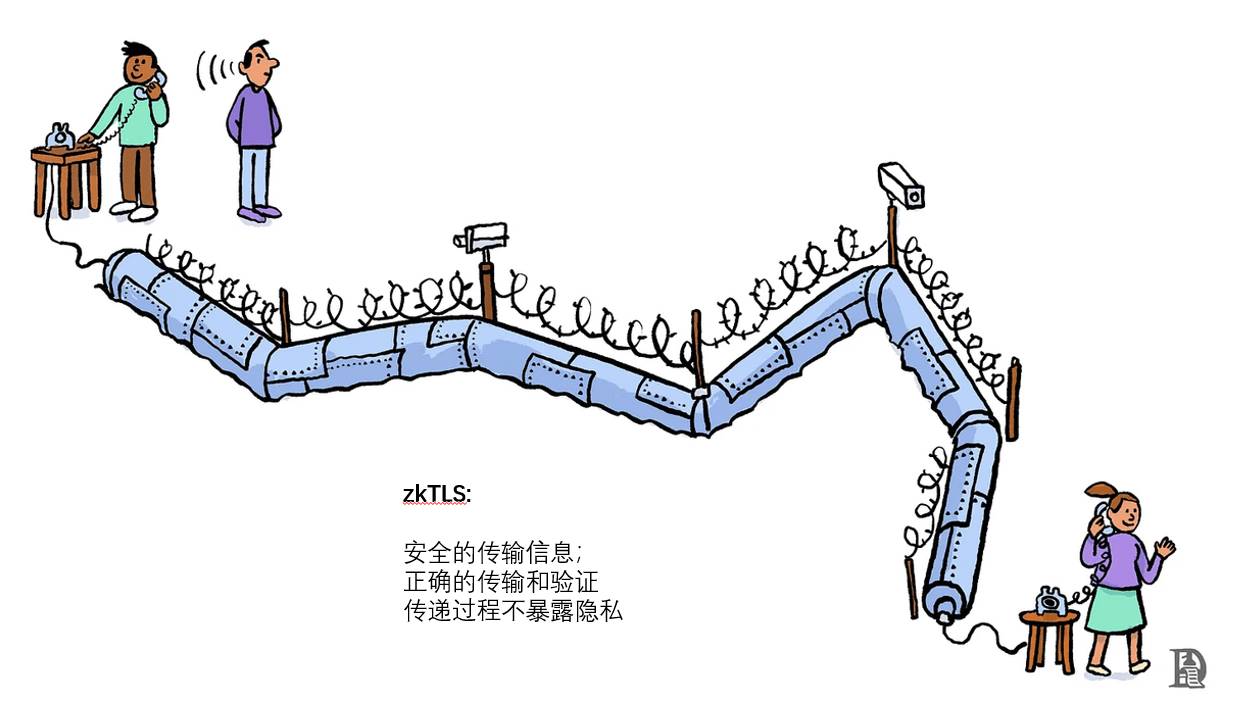

Technology Stack Expansion: Overview of zkTLS Principles and Potential Application Scenarios

TechFlow Selected


The biggest advantage of zkTLS is that it lowers the cost of making Web2 HTTPS resources usable on-chain.

Author: @Web3_Mario

Abstract: Recently, I've been exploring new project directions and encountered a previously unfamiliar tech stack during product design. After conducting some research, I’ve organized my findings to share with you.

In general, zkTLS is a relatively new technique that combines zero-knowledge proofs (ZKP) with TLS (Transport Layer Security). In the Web3 space, it is mainly used to let on-chain virtual machines verify the authenticity of off-chain HTTPS data without trusting a third party. Authenticity here covers three properties: the data really comes from a specific HTTPS resource, it has not been tampered with, and its freshness can be verified.

Through this cryptographic mechanism, on-chain smart contracts gain the ability to reliably access off-chain Web2 HTTPS resources, breaking down data silos.

What Is the TLS Protocol?

To understand the value of zkTLS, it helps to briefly review the TLS protocol. TLS (Transport Layer Security) provides encryption, authentication, and data integrity for network communication, ensuring secure data transmission between clients (e.g., browsers) and servers (e.g., websites). Even readers outside network development may have noticed that some website URLs start with https while others use http. When visiting the latter, mainstream browsers typically display a "Not secure" warning. The former can also trigger warnings such as "Your connection is not private" or "HTTPS certificate error" when the site's certificate fails validation. Both behaviors stem from TLS.
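To make the certificate warnings concrete: a TLS client refuses the connection when certificate validation fails. The sketch below uses Python's standard `ssl` module only to show that its default client context enforces the same kind of checks a browser does. The TLS 1.2 floor is an illustrative policy choice, and no network connection is made.

```python
import ssl

# A default client context trusts the system CA store and verifies the server.
ctx = ssl.create_default_context()

# Illustrative policy: refuse anything older than TLS 1.2.
ctx.minimum_version = ssl.TLSVersion.TLSv1_2

# With these settings, a handshake fails with ssl.SSLCertVerificationError
# when the certificate is untrusted, expired, or issued for another hostname.
# A browser surfaces the same failure as "Your connection is not private".
print(ctx.verify_mode == ssl.CERT_REQUIRED)  # True
print(ctx.check_hostname)                    # True
```

To actually connect, the context would wrap a TCP socket with `ctx.wrap_socket(sock, server_hostname=...)`; a bad certificate then surfaces as an exception instead of a browser warning page.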

Specifically, HTTPS is essentially HTTP layered over TLS, which ensures privacy, data integrity, and server authenticity verification. We know that HTTP transmits data in plaintext and cannot verify server authenticity, leading to several security issues:

1. Information exchanged between you and the server could be eavesdropped, causing privacy leaks;

2. You cannot verify the server’s authenticity—your request might be hijacked by a malicious node returning forged data;

3. You cannot verify data integrity: data might be altered in transit, whether by faulty network equipment or by a malicious intermediary.

TLS was designed precisely to solve these problems. Some readers may be more familiar with SSL. TLS is SSL's successor: TLS 1.0 was based on SSL 3.0 (its protocol version number on the wire is 3.1), and the name changed when the IETF took over standardization. SSL itself is now deprecated, but in casual usage the two terms are still often treated as interchangeable.

The main approaches TLS uses to address these issues are:

1. Encrypted communication: Uses symmetric encryption (AES, ChaCha20) to prevent eavesdropping.

2. Identity authentication: Uses digital certificates (e.g., X.509) issued by trusted third parties (CAs) to authenticate the server and prevent man-in-the-middle attacks (MITM).

3. Data integrity: Uses HMAC (Hash-based Message Authentication Code) or AEAD (Authenticated Encryption with Associated Data) to ensure data hasn’t been altered.
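The integrity mechanism in point 3 can be illustrated with Python's standard `hmac` module. This is a minimal sketch: the key is random rather than derived from a real handshake, and the JSON payload is made up. It shows that a receiver holding the key detects any modification of the message.

```python
import hashlib
import hmac
import secrets

# Hypothetical 32-byte MAC key; in TLS it would be derived during the handshake.
mac_key = secrets.token_bytes(32)

def tag(key: bytes, message: bytes) -> bytes:
    """Compute an HMAC-SHA256 tag over the message."""
    return hmac.new(key, message, hashlib.sha256).digest()

def verify(key: bytes, message: bytes, received_tag: bytes) -> bool:
    """Recompute the tag and compare it in constant time."""
    return hmac.compare_digest(tag(key, message), received_tag)

original = b'{"symbol": "BTC", "price": "97000.00"}'  # illustrative payload
t = tag(mac_key, original)

# An attacker changes the price but cannot recompute the tag without the key.
tampered = original.replace(b"97000", b"10000")

print(verify(mac_key, original, t))  # True
print(verify(mac_key, tampered, t))  # False
```

AEAD ciphers such as AES-GCM fold this authentication step into the encryption itself, which is why TLS 1.3 uses only AEAD suites.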

Let's briefly walk through how HTTPS uses TLS to exchange data (the description below roughly follows TLS 1.3). The process has two stages: first, the handshake, where client and server negotiate security parameters and establish an encrypted session; second, data transfer, where communication is encrypted with the session key. The full process involves four steps:

1. Client sends ClientHello:

The client (e.g., browser) sends a ClientHello message containing:

- Supported TLS versions (e.g., TLS 1.3)

- Supported cipher suites (e.g., AES-GCM, ChaCha20)

- A random number (Client Random) used in key generation

- Key exchange parameters (e.g., an ECDHE public key)

- SNI (Server Name Indication), optional: names the target domain so that one server can host HTTPS for multiple domains

This informs the server of the client’s cryptographic capabilities and prepares security parameters.
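As a rough illustration, the ClientHello fields listed above can be modeled as a plain data structure. This is a simplified, hypothetical model, not the binary wire format defined by the TLS specification; the hostname is a placeholder and the key share is random bytes standing in for a real X25519 public key.

```python
import secrets
from dataclasses import dataclass, field
from typing import List, Optional

@dataclass
class ClientHello:
    """Simplified, hypothetical model of ClientHello contents (not the wire format)."""
    supported_versions: List[str]
    cipher_suites: List[str]
    key_share: bytes                   # client's ephemeral ECDHE public key
    server_name: Optional[str] = None  # SNI extension, optional
    # 32 bytes of fresh randomness per connection.
    client_random: bytes = field(default_factory=lambda: secrets.token_bytes(32))

hello = ClientHello(
    supported_versions=["TLS 1.3", "TLS 1.2"],
    cipher_suites=["TLS_AES_128_GCM_SHA256", "TLS_CHACHA20_POLY1305_SHA256"],
    key_share=secrets.token_bytes(32),  # placeholder for an X25519 public key
    server_name="api.example.com",      # hypothetical target domain
)
print(len(hello.client_random))  # 32
```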

2. Server sends ServerHello:

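The server responds with a ServerHello message, followed in the same flight by further handshake messages (in TLS 1.3, everything after the ServerHello is already encrypted):

- Selected cipher suite

- Server Random number

- Server key exchange parameters (e.g., an ECDHE public key)

- Server certificate (X.509), plus a signature over the handshake (CertificateVerify) proving the server holds the certificate's private key

- Finished message (to confirm handshake integrity)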

This allows the client to verify the server’s identity and confirm negotiated parameters.
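How the server picks the "selected cipher suite" is a local policy decision. The sketch below assumes one common policy, server preference order; the preference list is hypothetical, and real servers can be configured to honor the client's order instead.

```python
from typing import Optional, Sequence

# Hypothetical server policy, most preferred first.
SERVER_PREFERENCE = [
    "TLS_AES_256_GCM_SHA384",
    "TLS_CHACHA20_POLY1305_SHA256",
    "TLS_AES_128_GCM_SHA256",
]

def select_cipher_suite(
    client_offer: Sequence[str],
    server_preference: Sequence[str] = SERVER_PREFERENCE,
) -> Optional[str]:
    """Return the first server-preferred suite the client also offers.

    None means there is no overlap; a real server would then abort the
    handshake with a fatal alert.
    """
    offered = set(client_offer)
    for suite in server_preference:
        if suite in offered:
            return suite
    return None

print(select_cipher_suite(["TLS_AES_128_GCM_SHA256", "TLS_CHACHA20_POLY1305_SHA256"]))
# TLS_CHACHA20_POLY1305_SHA256
```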

3. Client verifies server:

The client performs the following:

- Validates the server certificate: checks that its chain leads to a trusted CA, that it is not expired or revoked, and that it was issued for the requested domain;

- Computes the shared secret: combines its own ECDHE private key with the server's ECDHE public key, then derives the session keys used later for symmetric encryption (e.g., AES-GCM);

- Sends its Finished message: proves the handshake transcript was not tampered with, preventing MITM attacks.

This ensures server trustworthiness and establishes the session key.
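The shared-key step can be sketched with X25519, a widely used ECDHE curve. The code below is a pure-Python Montgomery ladder following RFC 7748 plus a minimal HKDF (RFC 5869) built on `hmac`. It is illustrative only: it is not constant-time, and the single HKDF call with a made-up label is a simplification, since TLS 1.3's real key schedule uses HKDF-Expand-Label over a hash of the handshake transcript (which covers both randoms).

```python
import hashlib
import hmac
import secrets

P = 2**255 - 19                       # field prime of Curve25519
A24 = 121665                          # (486662 - 2) / 4, per RFC 7748
BASE_U = (9).to_bytes(32, "little")   # standard base point

def _clamp(k: bytes) -> int:
    """Clamp a 32-byte private key as X25519 requires."""
    b = bytearray(k)
    b[0] &= 248
    b[31] &= 127
    b[31] |= 64
    return int.from_bytes(b, "little")

def x25519(scalar: bytes, u: bytes) -> bytes:
    """Scalar multiplication on Curve25519 (RFC 7748 ladder, NOT constant-time)."""
    k = _clamp(scalar)
    x1 = int.from_bytes(u, "little") & ((1 << 255) - 1)
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        bit = (k >> t) & 1
        swap ^= bit
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")

def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    return hmac.new(salt, ikm, hashlib.sha256).digest()

def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    okm, block, counter = b"", b"", 1
    while len(okm) < length:
        block = hmac.new(prk, block + info + bytes([counter]), hashlib.sha256).digest()
        okm += block
        counter += 1
    return okm[:length]

# Each side generates an ephemeral key pair; only public keys cross the wire.
client_priv, server_priv = secrets.token_bytes(32), secrets.token_bytes(32)
client_pub, server_pub = x25519(client_priv, BASE_U), x25519(server_priv, BASE_U)

# Own private key + peer's public key gives the same secret on both sides.
client_shared = x25519(client_priv, server_pub)
server_shared = x25519(server_priv, client_pub)
print(client_shared == server_shared)  # True

# Simplified derivation of a 16-byte (AES-128) session key.
session_key = hkdf_expand(hkdf_extract(b"", client_shared), b"illustrative session key", 16)
print(len(session_key))  # 16
```

An eavesdropper sees only `client_pub` and `server_pub`, which is why the session key stays secret; production code should use a vetted library rather than this sketch.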

4. Begin encrypted communication:

Both parties now communicate using the agreed session key.

- Symmetric encryption (e.g., AES-GCM, ChaCha20) encrypts the data efficiently.

- Data integrity protection: AEAD modes (e.g., AES-GCM) authenticate every record, so any tampering is detected and the record is rejected.
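One detail behind "efficiently and securely" is that an AEAD nonce must never repeat under the same key. TLS 1.3 (RFC 8446, section 5.3) derives each record's nonce by XORing the static write IV with the left-padded 64-bit record sequence number, and authenticates the 5-byte record header as additional data. The sketch below shows only those two constructions; the IV is random here instead of coming from the key schedule, and the actual encryption call to an AEAD library is omitted.

```python
import secrets

IV_LENGTH = 12  # AES-GCM and ChaCha20-Poly1305 both use 12-byte nonces in TLS 1.3

def record_nonce(write_iv: bytes, seq_num: int) -> bytes:
    """Per-record nonce: 64-bit sequence number, left-padded with zeros, XOR the IV."""
    if not 0 <= seq_num < 2**64:
        raise ValueError("sequence number must fit in 64 bits")
    padded_seq = seq_num.to_bytes(IV_LENGTH, "big")
    return bytes(a ^ b for a, b in zip(write_iv, padded_seq))

def record_aad(ciphertext_len: int) -> bytes:
    """Additional data: type 23 (application_data), legacy version 0x0303, length."""
    return bytes([23, 0x03, 0x03]) + ciphertext_len.to_bytes(2, "big")

write_iv = secrets.token_bytes(IV_LENGTH)  # stand-in for the key-schedule output
nonces = {record_nonce(write_iv, seq) for seq in range(1000)}
print(len(nonces))           # 1000: no nonce repeats under one key
print(record_aad(48).hex())  # 1703030030
```

Because XOR with a fixed IV is a bijection, distinct sequence numbers always yield distinct nonces, and binding the header as additional data means a record's type or length cannot be altered without failing authentication.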

These four steps effectively address HTTP's security shortcomings. However, this widely adopted Web2 foundation creates challenges for Web3 application development, especially when on-chain smart contracts need off-chain data. To keep execution deterministic, on-chain VMs do not allow external data calls: every input must be recorded on-chain so that any node can replay a transaction and reach the same result, which is what consensus security rests on.

Yet after several iterations, developers realized that DApps still need off-chain data, which led to Oracle projects such as Chainlink and Pyth. These act as relays between on-chain and off-chain data, bridging data silos. To make relayed data reliable, Oracles typically rely on PoS-style staking: misbehavior costs more than it can gain, which economically discourages false reporting. For example, for a smart contract to use BTC's weighted price across centralized exchanges such as Binance and Coinbase, the Oracle must fetch and aggregate the data and write it on-chain before the contract can read it.
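The "weighted price" an Oracle publishes can be computed in several ways. The sketch below assumes volume weighting, one common choice; actual Oracle networks define their own aggregation methods. The prices and volumes are made-up round numbers, not market data.

```python
from dataclasses import dataclass
from typing import Sequence

@dataclass(frozen=True)
class Quote:
    venue: str
    price: float   # last traded price in USD (illustrative)
    volume: float  # BTC traded over the same window (illustrative)

def volume_weighted_price(quotes: Sequence[Quote]) -> float:
    """Average price across venues, weighting each venue by its traded volume."""
    total_volume = sum(q.volume for q in quotes)
    if total_volume <= 0:
        raise ValueError("no volume to weight by")
    return sum(q.price * q.volume for q in quotes) / total_volume

quotes = [Quote("Binance", 100.0, 3.0), Quote("Coinbase", 104.0, 1.0)]
print(volume_weighted_price(quotes))  # 101.0
```

In the Oracle model, every node in the network performs this fetch-and-aggregate step and the results are reconciled through consensus before the value is written on-chain.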

What Problems Does zkTLS Solve?

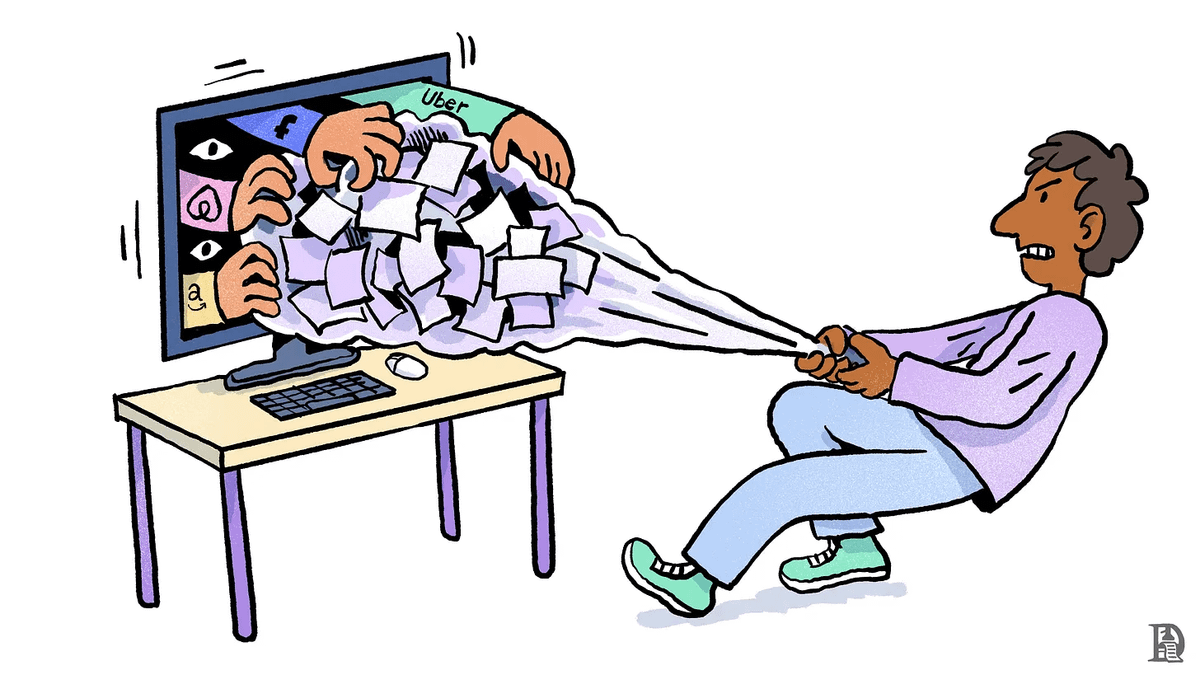

However, Oracle-based data retrieval has two major issues:

1. High cost: Ensuring that Oracle-provided on-chain data is authentic and unaltered requires PoS consensus, whose security depends on staked capital and therefore carries ongoing costs. PoS systems also involve substantial redundancy: data must be transmitted, computed, and aggregated repeatedly across the network before consensus is reached, further raising usage costs. Consequently, Oracle projects usually offer only popular data (e.g., BTC prices) for free to attract users, while custom or niche data requires payment. This hinders innovation, especially for long-tail or customized needs.

2. Low efficiency: PoS consensus takes time, causing delays in on-chain data updates. This latency is problematic for high-frequency applications where real-time accuracy is crucial.

To address these issues, zkTLS emerged. Its core idea is to leverage ZKP algorithms so that on-chain smart contracts, acting as verifiers, can directly confirm that data provided by a node genuinely came from a specific HTTPS resource and remains untampered—eliminating the high costs associated with traditional Oracle consensus mechanisms.

You might ask: why not simply give on-chain VMs the native ability to call Web2 APIs? Because the on-chain environment must stay closed to keep data traceable. During consensus, all nodes must apply the same objective logic to verify data or execution results, which lets the honest majority independently validate outcomes from redundant local data even in a fully trustless setting. With Web2 data, such uniform validation logic is hard to establish: network latency can cause different nodes to receive different responses from the same HTTPS resource, complicating consensus, especially for high-frequency data. TLS itself poses a second problem: the session keys are negotiated from the client's ephemeral key-exchange secret (together with public values such as the Client Random). On-chain environments are fully transparent, so if a smart contract acted as the TLS client, these secrets would be visible to everyone and the session's contents would no longer be private.
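The latency problem can be shown with a toy simulation. Everything here is hypothetical: a mock endpoint whose price changes every 100 ms, and three validators whose requests arrive at slightly different times. Each validator hashes its resulting state, and consensus requires the hashes to match.

```python
import hashlib

def mock_price_api(request_time_ms: int) -> bytes:
    """Hypothetical HTTPS endpoint whose quoted price changes every 100 ms."""
    price = 97_000 + (request_time_ms // 100) % 7
    return f'{{"BTC": {price}}}'.encode()

def state_root(response: bytes) -> str:
    """Each validator commits to the state produced by the response it saw."""
    return hashlib.sha256(response).hexdigest()

# Three validators execute the "same" call, but latency shifts their request times.
request_times_ms = [1_000, 1_050, 1_130]
roots = {state_root(mock_price_api(t)) for t in request_times_ms}
print(len(roots))  # 2: the validators disagree about the new state
```

If instead every validator receives the same response bytes together with a proof of their origin, all of them run an identical check and necessarily agree.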

zkTLS takes a different approach: it uses cryptography instead of consensus to make data reliable at lower cost, much as ZK-Rollups improve on OP-Rollups. Concretely, an off-chain relay node performs the HTTPS request and generates a ZKP covering CA certificate validation, timing evidence, and data integrity (via HMAC or AEAD). Because the TLS client knows the symmetric session key and could therefore forge authenticated records on its own, practical designs typically also involve a party that witnesses the session, for example a notary that runs multi-party computation (MPC) with the client, or a proxy that observes the encrypted traffic. On-chain, only the essential verification data and algorithm are stored, enabling smart contracts to check the data's authenticity, freshness, and source without exposing sensitive information. Detailed algorithms are beyond the scope of this article; interested readers are encouraged to explore further.
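The checks a verifier contract performs can be sketched independently of any particular proof system. In the sketch below, the `Attestation` fields, the public-input encoding, and `accept` are all hypothetical and do not reflect any project's API; the actual ZK verification is passed in as a function so that the source, freshness, and integrity checks described above stay visible. The always-true verifier in the usage example is a placeholder only.

```python
import hashlib
from dataclasses import dataclass
from typing import Callable

@dataclass(frozen=True)
class Attestation:
    """Hypothetical public statement a zkTLS prover might submit with its proof."""
    server_name: str      # domain bound to the certificate checked inside the proof
    timestamp: int        # Unix time of the TLS session
    response_hash: bytes  # SHA-256 of the HTTPS response the proof is about
    proof: bytes          # opaque proof bytes

# Stand-in for a real ZK verifier: (public_inputs, proof) -> valid or not.
ZkVerifier = Callable[[bytes, bytes], bool]

def public_inputs(att: Attestation) -> bytes:
    """Hypothetical encoding of the statement the proof must be valid for."""
    return att.server_name.encode() + att.timestamp.to_bytes(8, "big") + att.response_hash

def accept(att: Attestation, response: bytes, expected_server: str,
           now: int, max_age: int, verify_zk: ZkVerifier) -> bool:
    if att.server_name != expected_server:                      # source
        return False
    if not 0 <= now - att.timestamp <= max_age:                 # freshness
        return False
    if hashlib.sha256(response).digest() != att.response_hash:  # integrity of the data used
        return False
    return verify_zk(public_inputs(att), att.proof)             # cryptographic check

def placeholder_verifier(inputs: bytes, proof: bytes) -> bool:
    return True  # placeholder only: a real deployment calls an actual proof verifier

body = b'{"BTC": 97003}'
att = Attestation("api.example.com", 1_700_000_000, hashlib.sha256(body).digest(), b"")
print(accept(att, body, "api.example.com", 1_700_000_030, 60, placeholder_verifier))  # True
print(accept(att, body, "api.example.com", 1_700_000_300, 60, placeholder_verifier))  # False (stale)
```

Keeping the proof check behind a single function mirrors the cost argument above: the contract stores only a verifier and a few comparisons rather than re-running the HTTPS request or a consensus round.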

The biggest advantage of this approach is that it drastically reduces the cost of making Web2 HTTPS resources usable on-chain. This unlocks many new use cases, such as cheaper pricing for long-tail assets, on-chain KYC backed by authoritative Web2 websites to strengthen DID systems, and improved Web3 game architectures. Naturally, zkTLS also disrupts existing Web3 businesses, particularly the dominant Oracle projects. In response, industry leaders such as Chainlink and Pyth are actively researching this area to stay ahead of the technological shift, which may give rise to new business models, for example moving from time-based to usage-based pricing or offering compute-as-a-service. As with most ZK projects, the key challenge remains reducing computational costs enough to achieve commercial viability.

In conclusion, when designing products, it is worth tracking zkTLS developments and integrating this tech stack where appropriate; it may inspire innovations in both business models and technical architecture.
