From Storing the Past to Computing the Future: AO Hyperparallel Computer

TechFlow Selected

What exactly is AO, and what is the logic behind its performance?

Author: YBB Capital Researcher Zeke

Introduction

The two mainstream blockchain architectures in Web3 today have started to feel aesthetically tiresome. Consider the oversaturated field of modular blockchains, or the new Layer 1s that keep emphasizing performance without demonstrating any real advantage. Either way, their ecosystems are essentially replicas or minor improvements of Ethereum's. The experiences they offer are so homogenized that users have long since lost interest. Arweave's newly proposed AO protocol stands out: it enables ultra-high-performance computing on a storage blockchain and even approaches a Web2-level user experience. This looks very different from the scaling methods and architectures we know. So what exactly is AO, and what logic underpins its performance?

Understanding AO

The name “AO” comes from “Actor Oriented,” a programming paradigm derived from the Actor Model of concurrent computation. Its overall design extends SmartWeave and follows the Actor Model's core principle of message passing. Simply put, AO can be understood as a “hyper-parallel computer” running on the Arweave network through a modular architecture. In implementation terms, AO is not a conventional modular execution layer. It is a communication protocol that standardizes message passing and data processing. Its core goal is to let different “roles” in the network collaborate through message exchange, creating a computation layer whose performance can, in principle, scale without limit. Ultimately, this allows Arweave, the so-called “giant hard drive,” to achieve cloud-level speed, scalable computing power, and extensibility in a decentralized, trust-minimized environment.

AO Architecture

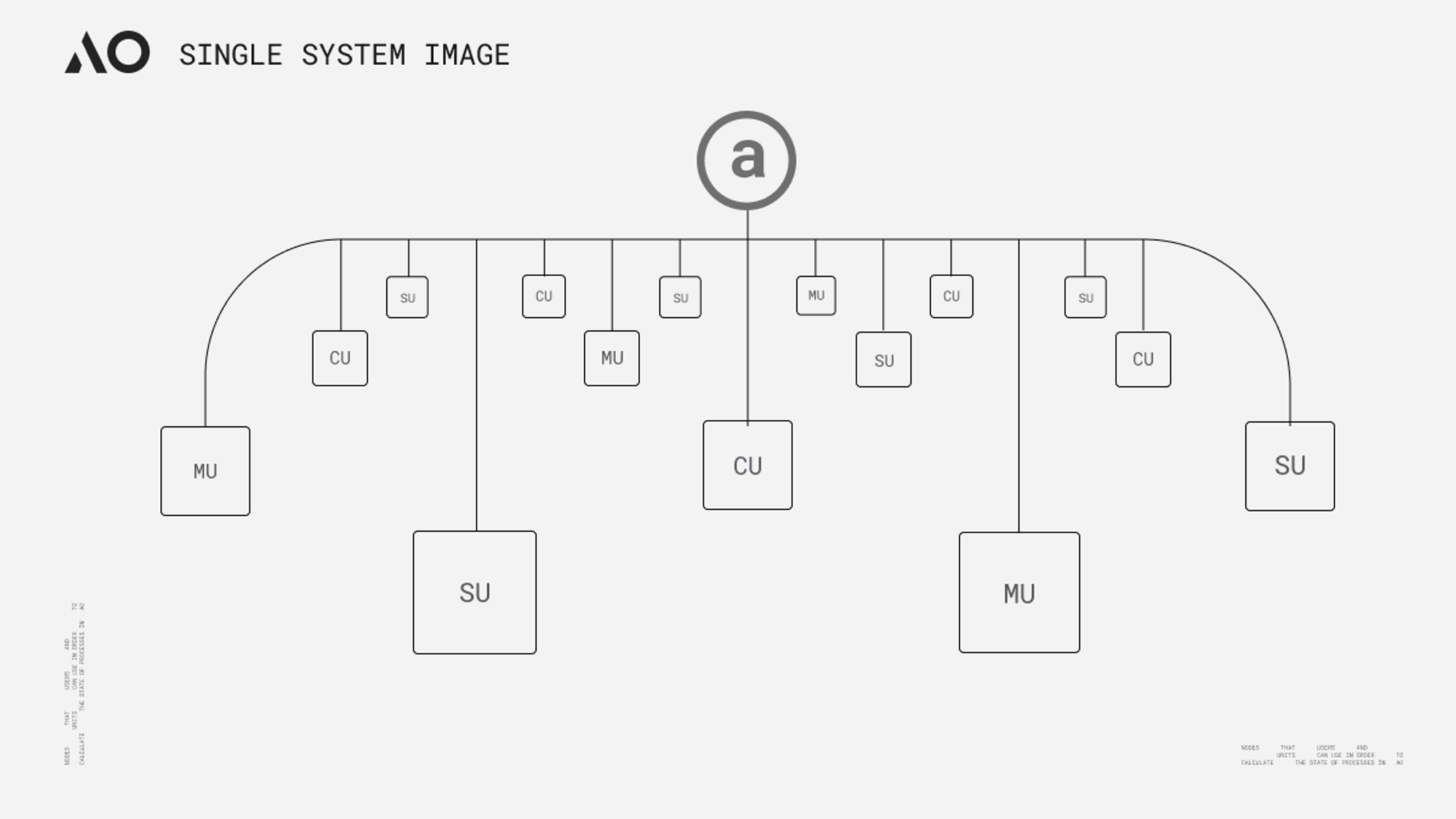

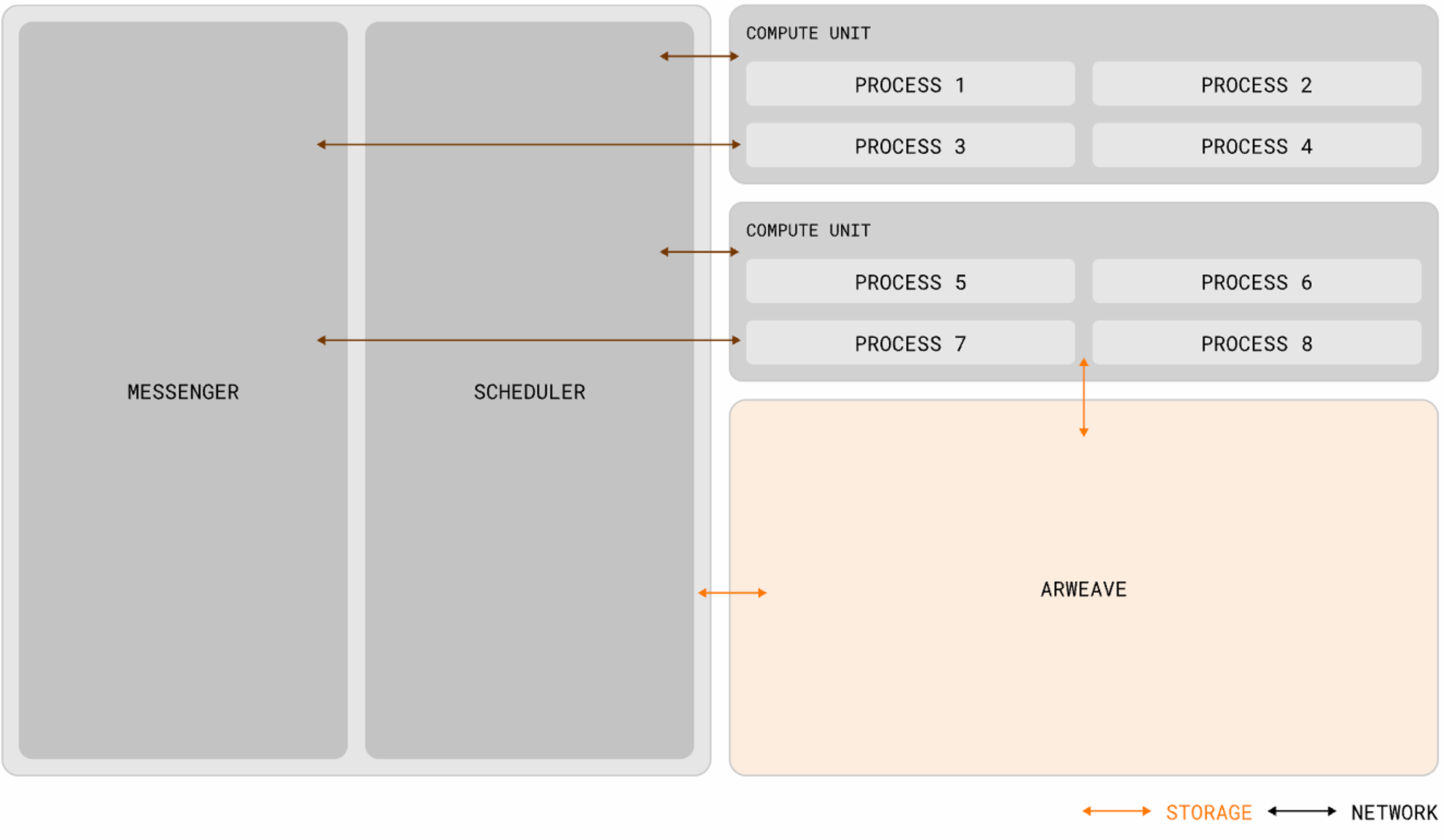

AO's concept bears some resemblance to the Coretime splitting and recombination that Gavin Wood presented at last year's Polkadot Decoded conference. Both aim to build a so-called “high-performance world computer” by scheduling and coordinating computing resources. There are, however, fundamental differences between them. Exotic (heterogeneous) scheduling deconstructs and recombines relay-chain blockspace resources without significantly changing Polkadot's architecture. Computational performance does escape the single-parachain limits of the slot model, but it remains capped by the maximum number of idle cores Polkadot has. AO, by contrast, can in theory offer nearly unlimited computing capacity through horizontal node expansion (in practice, likely bounded by network incentives), along with greater flexibility. Architecturally, AO standardizes data processing and message representation. It uses three network units (subnets) to handle message ordering, scheduling, and computation. Based on the official documentation, its components and their functions can be summarized as follows:

● Process: A process can be seen as a set of instructions executed within AO. On initialization, a process defines the computing environment it requires, including its virtual machine, scheduler, memory requirements, and necessary extensions. Processes maintain a “holographic” state: each process stores its state independently in Arweave's message log (see “AO's Verifiability Issue” below for details). Because of this holographic nature, processes operate independently and dynamically and can be executed by any suitable compute unit. Besides receiving messages directly from user wallets, processes can also receive messages forwarded from other processes via Messenger Units;

● Message: Every interaction between a user (or another process) and a process is represented by a message. Messages must conform to Arweave’s native ANS-104 data item format to ensure structural consistency and facilitate Arweave’s data preservation. More intuitively, messages resemble transaction IDs (TX IDs) in traditional blockchains—but they are not identical;

● Messenger Unit (MU): MUs relay messages through a process called 'cranking', ensuring seamless communication across the system. Once a message is sent, the MU routes it to the appropriate destination (SU) within the network, coordinates interactions, and recursively handles any generated outbox messages. This continues until all messages are processed. Beyond relaying, MUs provide additional functionalities such as managing process subscriptions and handling scheduled cron interactions;

● Scheduler Unit (SU): Upon receiving a message, the SU initiates key operations to maintain process continuity and integrity. It assigns a unique incremental nonce to ensure message order relative to others within the same process. This assignment is cryptographically signed to guarantee authenticity and sequence integrity. To further enhance reliability, the SU uploads both the signed assignment and the message to Arweave’s data layer, ensuring availability, immutability, and protection against tampering or loss;

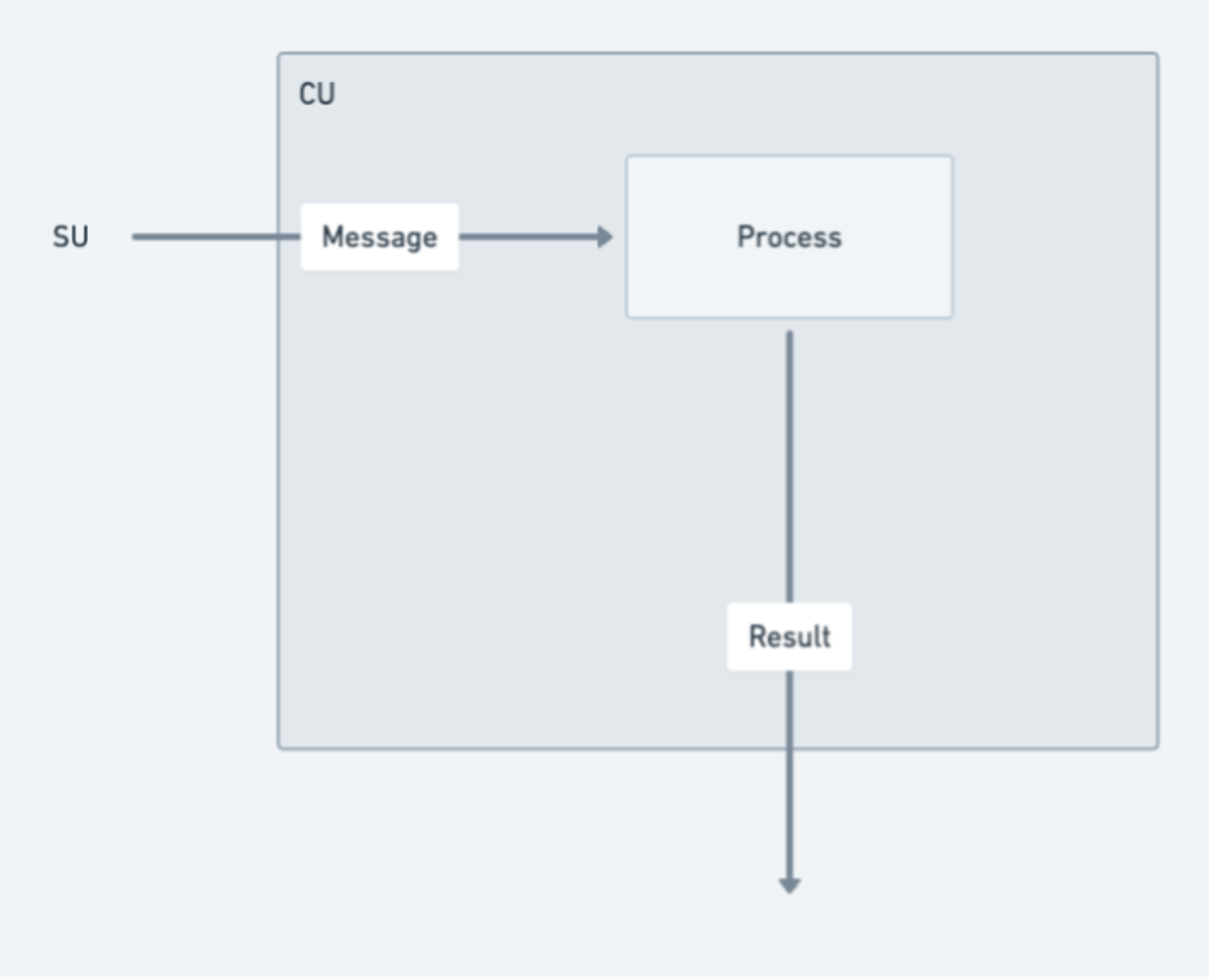

● Compute Unit (CU): CUs compete in a peer-to-peer computing market to fulfill requests from users or SUs for resolving process states. After completing state computation, a CU returns a signed proof with the specific result to the requester. Additionally, CUs can generate and publish signed state proofs that other nodes may load—though this requires paying a proportional fee.
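To make the SU's role more concrete, here is a minimal Python sketch of per-process nonce assignment with signed, tamper-evident assignments. It is not AO's implementation, and several parts are assumptions:

- `SchedulerUnit`, `Assignment`, `verify` and the key are hypothetical.
- An in-memory list stands in for Arweave.
- An HMAC stands in for the SU's real public-key signature.

```python
import hashlib
import hmac
import json
from dataclasses import dataclass, field

# Hypothetical key. A real SU signs with its own wallet key; HMAC is only a
# standard-library stand-in for a public-key signature in this sketch.
SU_KEY = b"hypothetical-scheduler-key"


@dataclass
class Assignment:
    process_id: str
    message_id: str
    nonce: int
    signature: str


def _sign(process_id: str, message_id: str, nonce: int) -> str:
    payload = f"{process_id}:{message_id}:{nonce}".encode()
    return hmac.new(SU_KEY, payload, hashlib.sha256).hexdigest()


@dataclass
class SchedulerUnit:
    # Append-only list standing in for uploads to Arweave.
    permanent_log: list = field(default_factory=list)
    # Next nonce to hand out, tracked separately for each process.
    next_nonce: dict = field(default_factory=dict)

    def schedule(self, process_id: str, message: dict) -> Assignment:
        # Illustrative content-derived ID; real message IDs are derived from the
        # signed ANS-104 data item.
        message_id = hashlib.sha256(json.dumps(message, sort_keys=True).encode()).hexdigest()
        nonce = self.next_nonce.get(process_id, 0)
        self.next_nonce[process_id] = nonce + 1
        assignment = Assignment(process_id, message_id, nonce, _sign(process_id, message_id, nonce))
        # Persist both the signed assignment and the message itself.
        self.permanent_log.append((assignment, message))
        return assignment


def verify(a: Assignment) -> bool:
    # Changing the process, message, or position invalidates the signature.
    return hmac.compare_digest(_sign(a.process_id, a.message_id, a.nonce), a.signature)


su = SchedulerUnit()
a0 = su.schedule("proc-A", {"Action": "Transfer", "Quantity": 5})
a1 = su.schedule("proc-A", {"Action": "Balance"})
b0 = su.schedule("proc-B", {"Action": "Balance"})
print(a0.nonce, a1.nonce, b0.nonce)  # 0 1 0
print(verify(a1))  # True
```

Nonces are counted per process, so `proc-B` starts again at 0. Because the nonce is covered by the signature, a reordered or renumbered assignment fails verification.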

Operating System – AOS

AOS serves as the operating system, or terminal tool, of the AO protocol and is used to download, run, and manage threads. It gives developers an environment in which to build, deploy, and run applications that use the AO protocol to interact with the AO network.

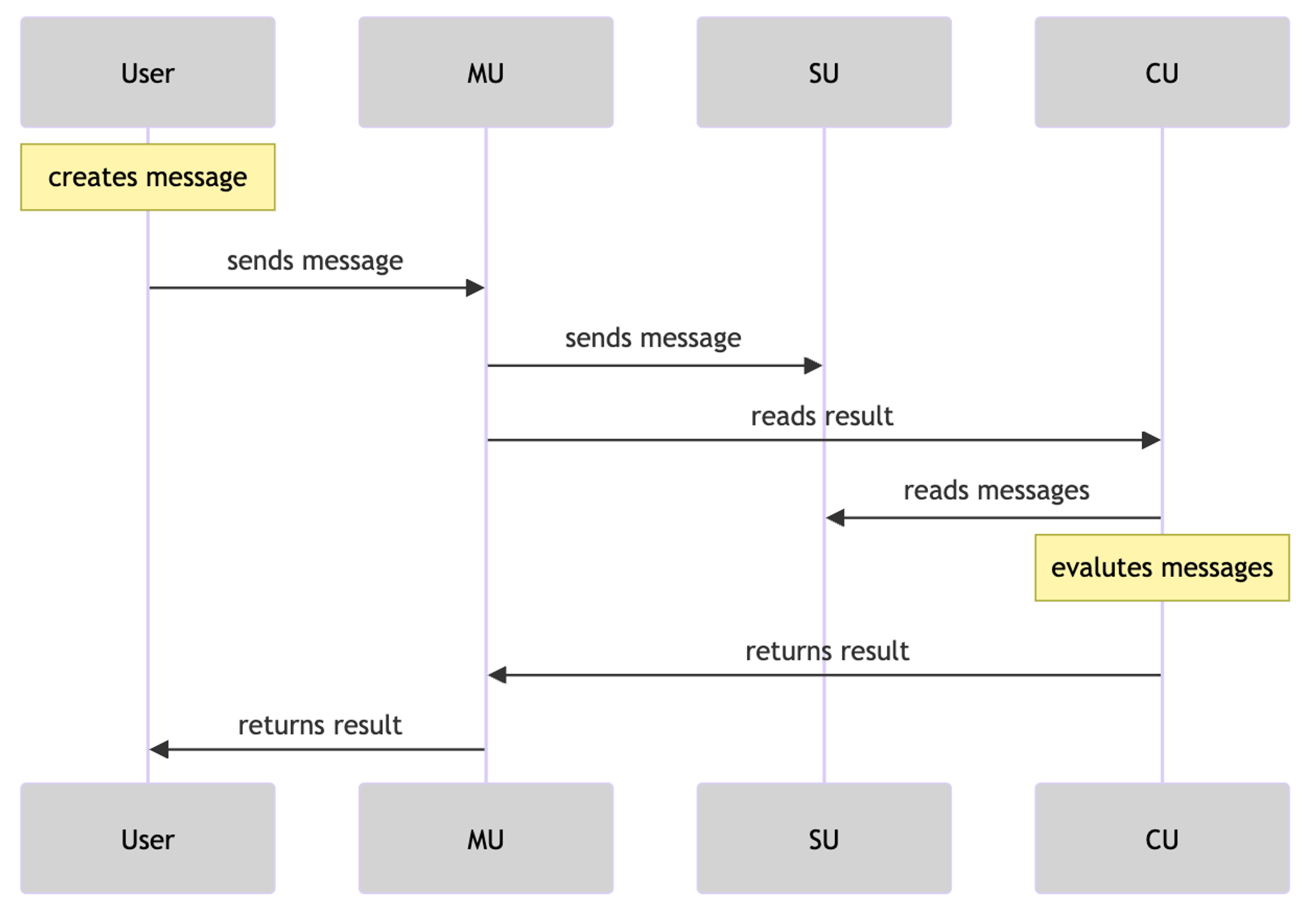

Operational Logic

The Actor Model promotes a philosophy known as “everything is an actor.” All components and entities within this model are treated as “actors,” each possessing its own state, behavior, and mailbox. They communicate asynchronously via message passing, allowing the entire system to function in a distributed and concurrent manner. AO operates similarly: components and even users are abstracted as “actors” communicating through a message-passing layer, linking processes together into a parallelized, non-shared-state distributed computing system.
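The Python sketch below is a rough illustration of the Actor Model itself, not of AO's process runtime. Each actor has private state and a mailbox, and actors interact only by sending messages. The `Actor` class, its `deposit`/`transfer`/`stop` actions, and the account names are hypothetical.

```python
import asyncio


class Actor:
    """Minimal actor: private state, a mailbox, and a message handler."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.mailbox: asyncio.Queue = asyncio.Queue()
        self.balance = 0  # private state; no other actor reads or writes it

    async def send(self, message: dict) -> None:
        # Sending only enqueues; the sender does not wait for processing.
        await self.mailbox.put(message)

    async def run(self) -> None:
        # Process messages one at a time, in arrival order.
        while True:
            message = await self.mailbox.get()
            if message["action"] == "stop":
                return
            if message["action"] == "deposit":
                self.balance += message["amount"]
            elif message["action"] == "transfer":
                self.balance -= message["amount"]
                # Affect another actor only by sending it a message.
                await message["to"].send({"action": "deposit", "amount": message["amount"]})


async def main() -> tuple:
    alice, bob = Actor("alice"), Actor("bob")
    alice_task = asyncio.create_task(alice.run())
    bob_task = asyncio.create_task(bob.run())
    await alice.send({"action": "deposit", "amount": 10})
    await alice.send({"action": "transfer", "amount": 4, "to": bob})
    await alice.send({"action": "stop"})
    await alice_task  # alice has drained her mailbox, including the transfer
    await bob.send({"action": "stop"})
    await bob_task
    return alice.balance, bob.balance


print(asyncio.run(main()))  # (6, 4)
```

No locks are needed, because no state is shared. Each actor is the only writer of its own balance.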

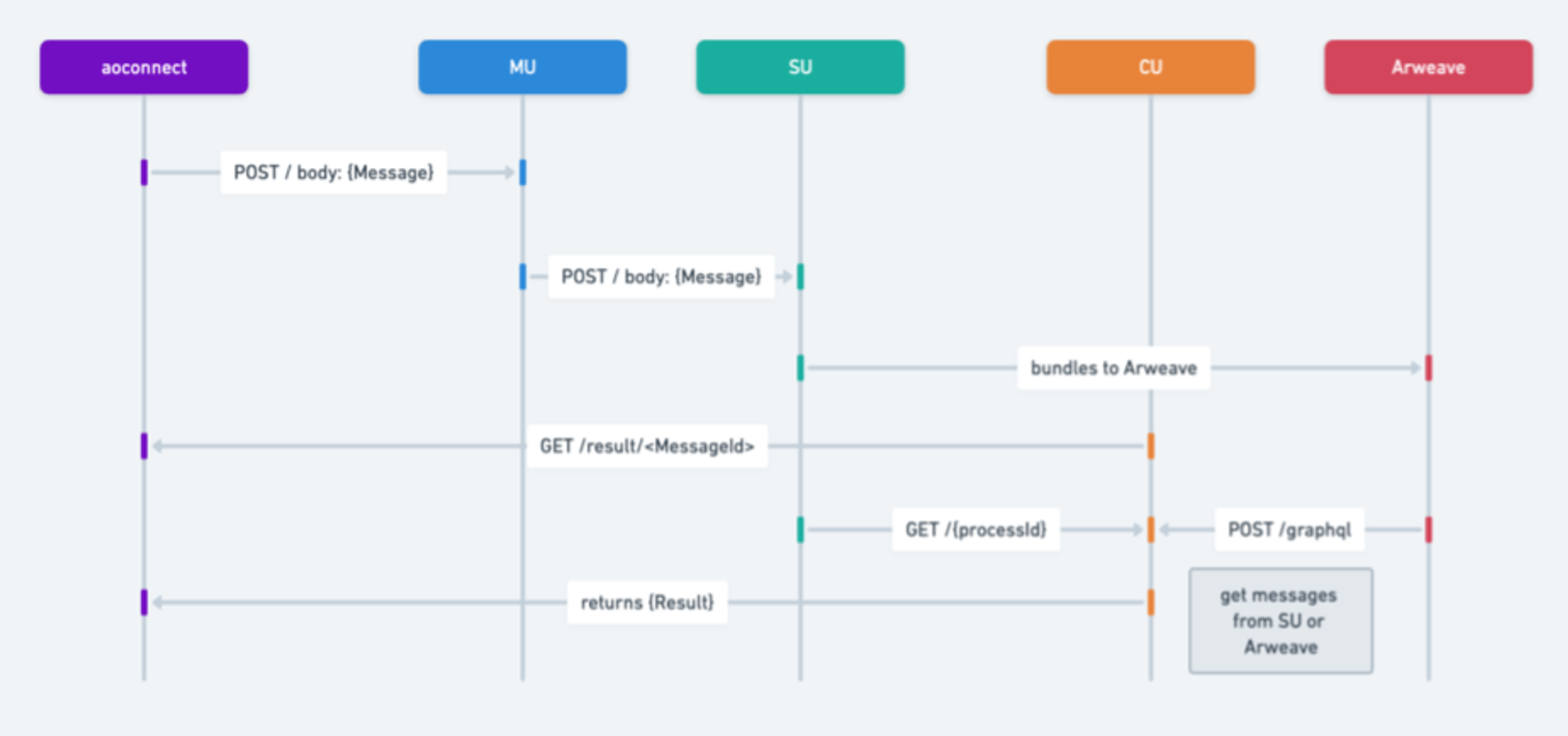

Below is a brief description of the message flow steps:

1. Message Initiation:

○ A user or process creates a message requesting action from another process.

○ The MU receives the message and sends it via a POST request to other services.

2. Message Processing and Forwarding:

○ The MU processes the POST request and forwards the message to the SU (Scheduler Unit).

○ The SU interacts with Arweave’s storage or data layer to store the message.

3. Retrieving Results by Message ID:

○ The CU (Compute Unit) receives a GET request and retrieves results based on the message ID, evaluating the message’s impact on the process. It can return output based on a single message identifier.

4. Information Retrieval:

○ The SU receives a GET request and retrieves message information based on a given time range and process ID.

5. Push Outbox Messages:

○ The final step pushes all outbox messages.

○ This involves checking the messages and spawns in the result object.

○ Based on these checks, steps 2, 3, and 4 can be repeated for each relevant message or spawn.
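The five steps above can be simulated in a few dozen lines. This Python sketch is a toy model, not the AO reference implementation:

- `SchedulerUnit`, `ComputeUnit`, `crank` and the `ping_pong` handler are hypothetical.
- HTTP POST/GET calls are replaced by direct method calls.
- The result object is reduced to simplified `Messages` and `Spawns` lists.

```python
from collections import deque


def ping_pong(state: dict, msg: dict) -> dict:
    # Hypothetical process logic: bounce a counter back to the sender until it reaches 0.
    state["seen"] = state.get("seen", 0) + 1
    n = msg["n"]
    outbox = [] if n == 0 else [{"target": msg["from"], "from": msg["target"], "n": n - 1}]
    return {"Messages": outbox, "Spawns": []}


class SchedulerUnit:
    def __init__(self) -> None:
        self.log: list = []  # append-only; stands in for Arweave storage

    def schedule(self, msg: dict) -> int:
        self.log.append(msg)
        return len(self.log) - 1  # message ID = position in the log

    def messages_for(self, process_id: str, start: int = 0, end=None) -> list:
        # Step 4: look up messages by process ID within a range
        # (log positions stand in for timestamps).
        end = len(self.log) if end is None else end
        return [m for m in self.log[start:end] if m["target"] == process_id]


class ComputeUnit:
    def __init__(self, su: SchedulerUnit, handlers: dict) -> None:
        self.su, self.handlers, self.states = su, handlers, {}

    def result(self, message_id: int) -> dict:
        # Step 3: evaluate one message's effect on its target process.
        msg = self.su.log[message_id]
        state = self.states.setdefault(msg["target"], {})
        return self.handlers[msg["target"]](state, msg)


def crank(su: SchedulerUnit, cu: ComputeUnit, first: dict) -> list:
    # MU loop: schedule, compute, then push outbox messages until none remain.
    queue, spawned = deque([first]), []
    while queue:
        msg = queue.popleft()
        message_id = su.schedule(msg)     # steps 1-2: MU forwards to SU, SU stores
        result = cu.result(message_id)    # step 3: CU evaluates by message ID
        queue.extend(result["Messages"])  # step 5: push outbox messages
        spawned.extend(result["Spawns"])  # spawns are only collected in this sketch
    return spawned


su = SchedulerUnit()
cu = ComputeUnit(su, {"ping": ping_pong, "pong": ping_pong})
crank(su, cu, {"target": "ping", "from": "pong", "n": 3})
print(len(su.log))                   # 4
print(cu.states)                     # {'ping': {'seen': 2}, 'pong': {'seen': 2}}
print(len(su.messages_for("pong")))  # 2
```

The loop ends only when a result produces no further outbox messages. This mirrors the MU "cranking" until all messages are processed.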

What Has AO Changed? [1]

Differences from Conventional Networks:

1. Parallel Processing Capability: Unlike networks like Ethereum, where the base layer and each Rollup effectively run as a single process, AO supports an arbitrary number of processes running in parallel while maintaining verifiable computation. Moreover, while those networks operate under global synchronized states, AO processes maintain independent states. This independence enables AO processes to handle far more interactions and scale computationally, making it ideal for high-performance, reliability-demanding applications;

2. Verifiable Reproducibility: Some decentralized networks such as Akash, and peer-to-peer systems such as Urbit, do offer large-scale computing. Unlike AO, however, they do not provide verifiable reproducibility of interactions, or they rely on non-permanent storage to log interactions.

Differences Between AO’s Node Network and Traditional Computing Environments:

● Compatibility: AO supports various thread formats—whether WASM-based or EVM-compatible—and they can be bridged onto AO using technical adaptations.

● Content Co-Creation Projects: AO supports content co-creation initiatives and enables atomic NFT issuance. Uploaded data can be combined with the UDL (Universal Data License) to construct NFTs on AO.

● Data Composability: NFTs on AR and AO achieve data composability, enabling articles or content to be shared and displayed across multiple platforms while preserving source consistency and original attributes. When content updates occur, the AO network broadcasts these changes to all related platforms, ensuring synchronization and up-to-date propagation.

● Value Distribution and Ownership: Creators can sell their works as NFTs and transmit ownership information via the AO network, realizing value return for content.

Project Support:

1. Built on Arweave: AO leverages Arweave’s features to eliminate vulnerabilities associated with centralized providers—such as single points of failure, data leaks, and censorship. Computations on AO are transparent and verifiable via Arweave-stored, reproducible message logs and decentralized minimal-trust characteristics;

2. Decentralized Foundation: AO’s decentralized foundation helps overcome scalability limits imposed by physical infrastructure. Anyone can easily create an AO process from their device without specialized knowledge, tools, or infrastructure, ensuring even individuals and small entities can achieve global reach and participation.

AO’s Verifiability Issue

Once AO's framework and logic are understood, a common question arises. AO lacks the global properties of traditional decentralized protocols and chains, so can it really achieve verifiability and decentralization just by uploading data to Arweave? This is precisely where AO's design is clever. AO itself runs off-chain; it neither solves verifiability nor changes consensus. The AR team separated AO's role from Arweave's and connected the two modularly: AO handles communication and computation, while Arweave provides only storage and verification. Their relationship resembles a mapping. AO only needs to ensure its interaction logs are stored on Arweave, projecting its state onto Arweave to form a hologram. This holographic state projection is what guarantees consistency, reliability, and determinism when state is computed. Message logs on Arweave can also trigger AO processes in reverse, waking them to perform specific actions automatically according to predefined conditions and schedules.
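The holographic-state idea rests on deterministic replay. If the ordered message log is permanent and the state-transition function is deterministic, anyone can recompute the same state. Below is a minimal Python sketch under those assumptions. The token-ledger rules, `MESSAGE_LOG`, `apply`, `replay` and `state_digest` are hypothetical, and SU nonces are modeled as a plain integer field.

```python
import hashlib
import json

# Hypothetical message log for one process, as an SU would have ordered it.
MESSAGE_LOG = [
    {"nonce": 0, "action": "Mint", "to": "alice", "qty": 100},
    {"nonce": 1, "action": "Transfer", "from": "alice", "to": "bob", "qty": 30},
    {"nonce": 2, "action": "Transfer", "from": "bob", "to": "carol", "qty": 10},
]


def apply(state: dict, msg: dict) -> dict:
    # Deterministic transition: same state + same message -> same new state.
    balances = dict(state)
    if msg["action"] == "Mint":
        balances[msg["to"]] = balances.get(msg["to"], 0) + msg["qty"]
    elif msg["action"] == "Transfer" and balances.get(msg["from"], 0) >= msg["qty"]:
        balances[msg["from"]] -= msg["qty"]
        balances[msg["to"]] = balances.get(msg["to"], 0) + msg["qty"]
    return balances


def replay(log: list) -> dict:
    state: dict = {}
    for msg in sorted(log, key=lambda m: m["nonce"]):  # order fixed by SU nonces
        state = apply(state, msg)
    return state


def state_digest(state: dict) -> str:
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


# Two independent compute units replaying the same stored log agree exactly.
cu_1 = replay(MESSAGE_LOG)
cu_2 = replay(list(reversed(MESSAGE_LOG)))  # read order differs, nonce order does not
print(cu_1)  # {'alice': 70, 'bob': 20, 'carol': 10}
print(state_digest(cu_1) == state_digest(cu_2))  # True
```

Order comes from the SU-assigned nonces, not from the order in which a reader fetches the log. So both replays agree even though they read it differently.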

As Hill and Outprog explained, simplifying the verification logic further lets us picture AO as an inscription-style computing framework built on a hyper-parallel indexer. Bitcoin inscription indexers validate inscriptions by extracting their JSON data according to indexing rules and recording balances in an off-chain database. Although this validation happens off-chain, users can switch between multiple indexers or run their own, so they need not worry about any single indexer behaving maliciously. As mentioned earlier, message ordering and process holographic state data are uploaded to Arweave. This makes the SCP paradigm available. SCP stands for Storage Consensus Paradigm; here it can be understood simply as indexing rules recorded on-chain, and it notably predates traditional indexers. Using it, anyone can reconstruct AO, or any thread on AO, from the holographic data stored on Arweave. Users do not need to run full nodes to verify trusted state. Much like switching indexers, they only need to send query requests to one or more CU nodes via an SU. Given Arweave's large storage capacity and low cost, this model lets AO developers build a supercomputing layer far more capable than Bitcoin inscriptions.
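The indexer analogy can be sketched in the same way. Query several CUs for a process's state, compare digests, and, where possible, check them against your own replay. In this Python sketch the CUs are plain functions, and `honest_cu`, `dishonest_cu` and `check_cus` are hypothetical. The majority fallback is an illustrative assumption, not a documented AO mechanism.

```python
import hashlib
import json
from collections import Counter


def honest_cu(log: list) -> dict:
    # Replays the public log under the agreed rule (here: sum credits per account).
    balances: dict = {}
    for entry in log:
        balances[entry["to"]] = balances.get(entry["to"], 0) + entry["qty"]
    return balances


def dishonest_cu(log: list) -> dict:
    balances = honest_cu(log)
    balances["mallory"] = 1_000_000  # fabricated balance not backed by the log
    return balances


def digest(state: dict) -> str:
    return hashlib.sha256(json.dumps(state, sort_keys=True).encode()).hexdigest()


def check_cus(log: list, cus: dict, reference=None) -> list:
    """Return the names of CUs whose answer disagrees with the reference digest."""
    answers = {name: digest(cu(log)) for name, cu in cus.items()}
    if reference is None:
        # No local replay available: fall back to the most common answer.
        reference = Counter(answers.values()).most_common(1)[0][0]
    return sorted(name for name, d in answers.items() if d != reference)


LOG = [{"to": "alice", "qty": 5}, {"to": "bob", "qty": 7}, {"to": "alice", "qty": 1}]
CUS = {"cu-a": honest_cu, "cu-b": dishonest_cu, "cu-c": honest_cu}
print(check_cus(LOG, CUS))  # ['cu-b']
# Strongest option: replay the log yourself, like running your own indexer.
print(check_cus(LOG, CUS, reference=digest(honest_cu(LOG))))  # ['cu-b']
```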

AO vs ICP

Let us summarize AO’s characteristics using a few keywords: giant native hard drive, boundless parallelism, limitless computation, modular holistic architecture, and holographic-state processes. All this sounds incredibly promising. However, those familiar with various blockchain projects might notice striking similarities between AO and a once-celebrated “unicorn” project—the so-called “Internet Computer” (ICP).

ICP was once hailed as the last great project in the blockchain world, widely embraced by top-tier institutions, reaching a $200 billion FDV during the 2021 bull run. But as the tide receded, ICP’s token value plummeted. By 2023, during the bear market, ICP’s price had dropped nearly 260x from its all-time high. Yet, setting aside token performance, even when reassessed today, ICP still boasts many unique technical strengths. Many of AO’s impressive advantages were already present in ICP years ago. Will AO suffer the same fate as ICP? First, let’s understand why they’re so similar. Both ICP and AO are built upon the Actor Model, focusing on locally-executed blockchains, explaining their overlapping features. ICP’s subnet blockchains consist of high-performance hardware devices (node machines) independently owned and controlled, running the Internet Computer Protocol (ICP). ICP comprises numerous software components bundled into replicas, which replicate state and computation across all nodes within a subnet blockchain.

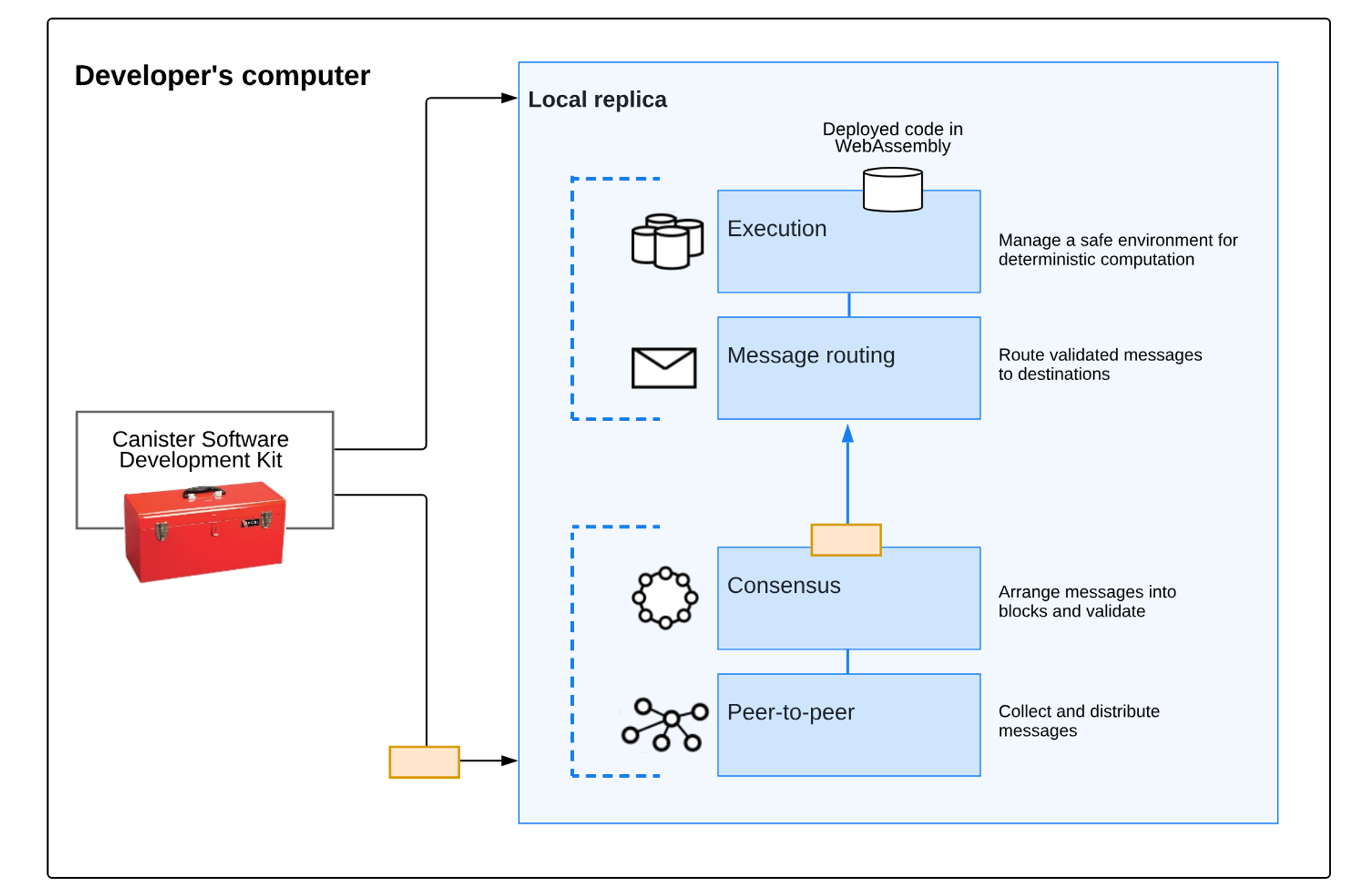

ICP’s replicated architecture consists of four layers:

● Peer-to-Peer (P2P) Network Layer: Collects and disseminates messages from users, other nodes within the subnet blockchain, and external subnets. Messages received at the peer layer are replicated across all nodes in the subnet for security, reliability, and resilience;

● Consensus Layer: Selects and orders messages from users and different subnets to form blockchain blocks, which are notarized and finalized via Byzantine Fault Tolerant (BFT) consensus, forming an evolving blockchain. Finalized blocks are passed to the message routing layer;

● Message Routing Layer: Routes user- and system-generated messages between subnets, manages input/output queues for dApps, and schedules message execution;

● Execution Environment Layer: Performs deterministic computations involved in executing smart contracts by processing messages received from the message routing layer.

Subnet Blockchains

A subnet refers to a collection of interacting replicas running separate instances of the consensus mechanism to create their own blockchain, capable of hosting a set of “canisters.” Each subnet can communicate with others and is governed by the root subnet, which delegates authority via chain-key cryptography. ICP uses subnets to enable infinite scalability. Traditional blockchains (and individual subnets) face limitations due to single-node machine compute capacity—since every node must process everything happening on-chain to participate in consensus. Running multiple independent subnets in parallel allows ICP to overcome this single-machine bottleneck.

Why Did It Fail?

As described above, ICP aimed to build a decentralized cloud server, an idea as revolutionary a few years ago as AO seems today. So why did it fail? Put simply, it failed to balance Web3 ideals with practical usability, ending up neither truly Web3 nor better than centralized clouds. Three problems stand out. First, ICP's program model. Its Canisters (the “canisters” mentioned above) are somewhat analogous to AO's AOS and processes, but not quite the same. ICP programs are encapsulated within Canisters and are invisible from outside; their data can only be accessed through specific interfaces. Calls between contracts are asynchronous, which is unfriendly to DeFi protocols, and as a result ICP missed out on capturing financial value during DeFi Summer.

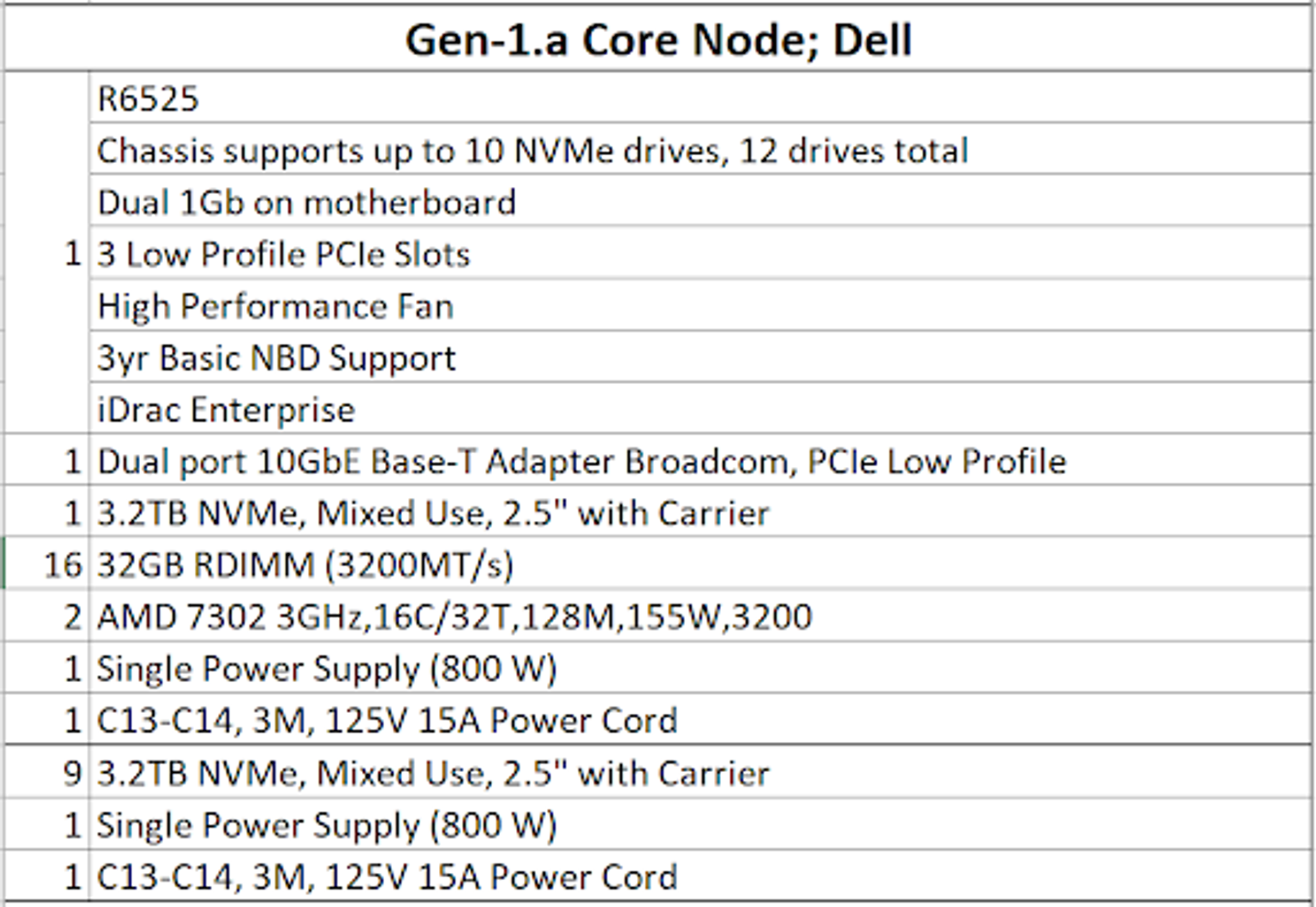

Second, hardware requirements were extremely high, undermining decentralization. The diagram below shows ICP’s minimum node hardware specifications at launch—exaggerated even by today’s standards, exceeding Solana’s specs and demanding more storage than dedicated storage blockchains.

Third, a thin ecosystem. ICP is still a high-performance public chain today, but DeFi is not the only thing missing. Since launch it has never produced a killer application, and it has attracted neither Web2 nor Web3 users. Given its limited decentralization, why not simply use richer, more mature centralized alternatives? Even so, ICP's technology remains cutting-edge. Features such as reverse gas, high compatibility, and infinite scalability are still essential for attracting the next billion users. In the current AI wave, ICP may yet turn things around by leveraging its architectural strengths.

Returning to the earlier question: will AO fail like ICP? Personally, I believe AO will not repeat history. The latter two causes of ICP's decline are not problems for AO. Arweave already has a solid ecosystem foundation, holographic state projection addresses the centralization concern, and AO offers greater compatibility. The bigger challenges may lie in economic model design and DeFi support. There is also the perennial question of how Web3 should manifest itself outside the financial and storage domains.

Web3 Should Be More Than Just Narrative

In the Web3 world, perhaps no word appears more often than “narrative.” We have grown used to assessing most tokens through the lens of narrative. This reflects how many Web3 projects promise grand visions yet deliver awkward user experiences. Arweave, by contrast, already hosts several fully functional applications with Web2-grade usability; Mirror and ArDrive, for instance, feel no different from traditional apps in use. Yet Arweave's value capture as a storage blockchain remains highly constrained, and computation may be the inevitable path forward. This is especially true in today's AI-dominated landscape, where integrating Web3 faces inherent barriers, as discussed in previous articles. Now Arweave's AO introduces a novel architectural alternative to Ethereum-style modularity, providing excellent new infrastructure for Web3 × AI. From the Library of Alexandria to a hyper-parallel computer, Arweave is forging its own paradigm.

References

1. AO Quick Start: Introduction to the Super-Parallel Computer

2. X Space Event Transcript | Is AO an Ethereum Killer? How Will It Drive a New Blockchain Narrative?

4. AO Cookbook

5. AO — The Unimaginable Super-Parallel Computer

6. Multi-Angle Analysis of ICP’s Decline: Unique Technology and a Thin, Cold Ecosystem
