From “Tools” to a “Collaborative Economy”: Why OpenMind Is Needed for Consumer-Grade Robot Deployment

OpenMind transforms robots from “isolated islands” into “networks,” and elevates them from mere “tools” to “efficient, collaborative economic entities.”

By TechFlow

When people think of robots, many immediately recall the film I, Robot.

Released in 2004 and starring Will Smith, I, Robot became one of the most successful science fiction films of all time.

In the film’s imagined world of 2035, USR—the fictional robotics manufacturer—launches its NS-5 service robot, which seamlessly integrates into daily life. Standing on a Chicago street, one sees NS-5 units bustling everywhere—delivering packages, walking dogs, cleaning homes, lifting heavy objects… According to the film’s premise, there is one robot for every five people.

Films embody humanity’s optimistic vision of the future. Twenty years later, with rapid advances in hardware and software, humanoid robots developed by Boston Dynamics, Unitree, Tesla, and others are moving from labs to mass production lines—bringing once-fantastical scenes ever closer to reality. Yet, true consumer-grade applications remain distant.

Fortunately, ten years remain before the film’s 2035 arrives. Closing the gap, and with it unlocking rapid expansion and consumer-market penetration for the robotics industry, depends on four breakthroughs:

- Further enhancing robots’ ability to understand context/environment, make decisions, and execute actions;

- Bridging divides to strengthen efficient collaboration and mutual learning between robots and humans—and between robots themselves;

- Continuously empowering robot evolution through real-world environments and human feedback;

- And, most critically, providing a trusted, secure environment enabling efficient human–robot collaboration and settlement.

This is precisely what OpenMind, the leading project in the robotics sector, is building: a universal operating system and a decentralized collaboration network that let robots from different manufacturers and form factors operate securely, interoperate, share information, and collaborate globally. The goal is for robots to think, learn, collaborate, and evolve more effectively, accelerating the robotics industry’s entry into the consumer era.

A Silicon Valley Professor’s Startup Wins Backing from East and West

A sector that everyone agrees on tends to be a crowded one, and robotics is a textbook example: the consensus is strong, and the field is dominated by giants.

According to Precedence Research’s Global Robotics Technology Market Report, the robotics industry will grow from $108.55 billion in 2025 to $375.95 billion in 2034, with the Robotics-as-a-Service (RaaS) model unlocking trillion-dollar opportunities.
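The two figures above imply a compound annual growth rate, which the following minimal sketch works out. The helper name `cagr` is introduced here for illustration only, and the calculation assumes nine compounding years between 2025 and 2034; it is not taken from the Precedence Research report itself.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market size in billions of USD, as quoted in the article.
rate = cagr(108.55, 375.95, 2034 - 2025)
print(f"Implied CAGR: {rate:.1%}")
```

Under these assumptions the implied growth rate comes out just under 15% per year.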

Beneath this immense potential lie two parallel developments: first, national strategic initiatives—China, Japan, South Korea, the U.S., France, Germany, and others have all designated robotics as a priority sector; second, tech giants stepping in—NVIDIA, Tesla, Figure AI, Unitree, and others have launched related products. Web3, ever-sensitive to trends, has likewise responded: Coinbase Ventures listed “AI and robotics technology” among its top four investment themes for 2026, while Virtuals announced its entry into embodied intelligence as early as October 2025.

In this fiercely competitive arena where attention and capital converge, OpenMind is a case study that is hard to overlook.

First, OpenMind’s visibility stems from its star-studded team. According to RootData, the team comprises top-tier experts in robotics, AI, distributed systems, and security:

Jan Liphardt, Founder & CEO, is a Stanford University Professor of Bioengineering with deep expertise in AI, biology, and distributed systems. He has received funding from the NIH, NSF, NCI, and the U.S. Department of Energy, and leads OpenMind’s overall technical development. Boyuan Chen, CTO, hails from MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL) and previously worked at Google DeepMind.

Additionally, OpenMind’s advisory board includes Steve Cousins (Head of Robotics at Stanford), Bill Roscoe (Oxford Blockchain Centre), and Alessio Lomuscio (Professor of Safe AI at Imperial College London).

With a lineup like this, many observers conclude that OpenMind is not a demo-only team but a professional group capable of taking technology to real-world deployment.

If the team represents fundamentals, market performance is the litmus test.

Since its founding in 2024, OpenMind’s key achievements—as disclosed officially—include:

- Over 180,000 users and thousands of robots participating in testing via the OpenMind App and OM1 Developer Portal.

- Major hardware companies—including Unitree, DEEP Robotics, Dobot, and UBTECH—adopting OpenMind’s OM1 as their core technology stack.

- A partnership with Robostore—the largest U.S. distributor of Unitree robots—to launch the world’s first comprehensive humanoid robotics education curriculum. Robostore already supports over 100 top-tier institutions, including Harvard University, MIT, and Stanford University.

- An appearance at the Nasdaq ETF listing ceremony hosted by KraneShares, where a humanoid robot running OpenMind’s OM1 OS took part in the launch event.

This combination of “elite team + strong real-world execution” has naturally triggered aggressive investment from smart money across both Web2 and Web3 in East and West:

On August 4, 2025, OpenMind announced a $20 million funding round, led by Pantera Capital, with participation from Ribbit, Sequoia China, Coinbase Ventures, Digital Currency Group (DCG), Lightspeed Faction, Anagram, Pi Network Ventures, Topology, Primitive Ventures, and Amber Group—as well as numerous prominent angel investors.

Amidst the hype, rigorous scrutiny remains essential:

What, exactly, does OpenMind bring to the robotics industry that persuades hardware vendors to open up their stacks, draws capital in droves, and retains real users?

From Individual Intelligence to Collective Evolution: OpenMind Builds the “Nervous System” for Robot Collaboration

As Jan Liphardt, OpenMind’s founder and professor, puts it:

If AI is the brain and robots are the body, then coordination is the nervous system—and without coordination, there is no intelligence. The system we’re building enables robots to collaborate, act, and evolve together.

This statement captures OpenMind’s core philosophy: Isolated superintelligence holds little value; embodied intelligence capable of collaboration is the future.

Today, robots from different vendors largely exist as isolated “islands”—equipped with expensive hardware and complex algorithms, yet lacking a common language to understand humans, environments, or each other. OpenMind’s dual-layer architecture aims to build the missing “nervous system” for robot collaboration:

- An AI-native operating system for physical robots: OM1

- A decentralized collaboration network: FABRIC

OM1: Open-Source Robot Operating System (Robot OS)

As the world’s first open-source intelligent robot operating system, OM1’s ambition is refreshingly direct: To become the Android of robotics.

The first step toward delivering the “Android moment” for robotics is OM1’s mission to reconstruct robots’ foundational logic using “natural language,” enabling them to understand the world and collaborate interactively—just like humans.

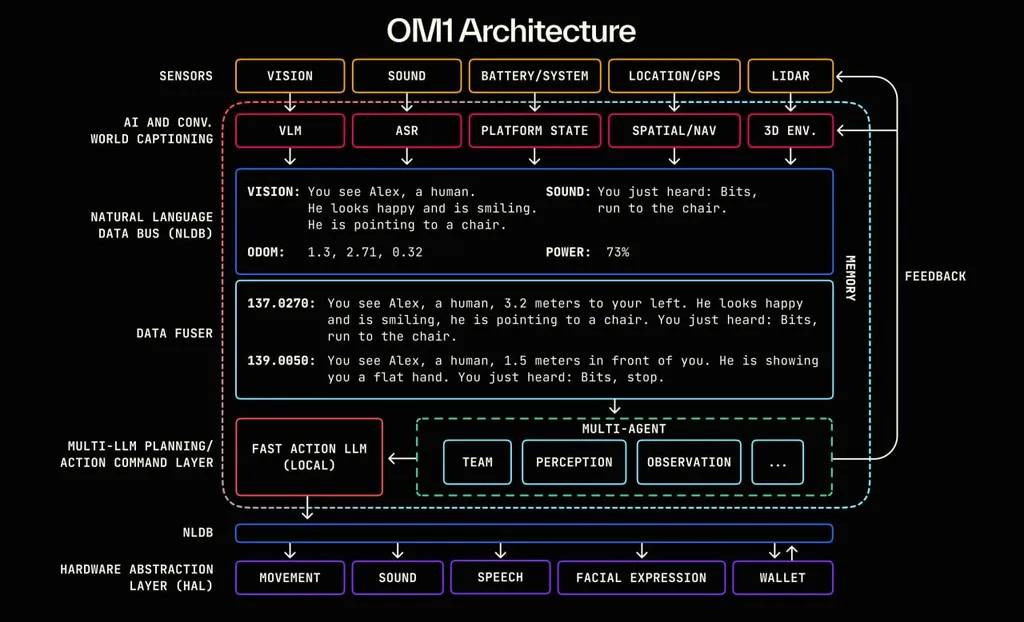

Within OM1’s architecture, robot operation is decomposed into four universal stages: Perception → Memory → Planning → Action—and natural language serves as the unifying “bloodstream” flowing through them all.

Specifically:

- Sensor Layer: Multi-modal sensing inputs—cameras, LiDAR, microphones, battery status, GPS—enhance robotic perception.

- AI + World Captioning Layer: Instead of transmitting raw data, OM1 translates every input into natural language—for instance, “a person wearing a red shirt” or “a person humming a tune.”

- Natural Language Data Bus (NLDB): Each translated natural-language sentence is timestamped and passed between modules.

- Data Fuser: Aggregates natural-language sentences to generate a complete contextual understanding for decision-making.

- Multi-AI Planning/Decision Layer: Multiple models read the context and, combined with on-chain rules, generate decisions and action plans.

- NLDB Downlink Channel: Delivers decisions via a language-based middleware layer to the hardware execution system.

- Hardware Abstraction Layer (HAL): Converts language instructions into low-level control commands to drive hardware.
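The layers listed above can be pictured with a toy pipeline. This is a minimal sketch under stated assumptions, not OM1’s actual code: the class `NaturalLanguageBus`, the functions `fuse`, `plan`, and `step`, and the command strings are all hypothetical names invented for illustration, and the keyword-matching planner stands in for the language models a real system would call.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Message:
    """One natural-language sentence on the bus, stamped with a time."""
    timestamp: float
    source: str
    text: str


class NaturalLanguageBus:
    """Hypothetical stand-in for the natural-language data bus."""

    def __init__(self) -> None:
        self._messages: List[Message] = []

    def publish(self, timestamp: float, source: str, text: str) -> None:
        self._messages.append(Message(timestamp, source, text))

    def since(self, t0: float) -> List[Message]:
        """Return messages at or after t0, oldest first."""
        recent = (m for m in self._messages if m.timestamp >= t0)
        return sorted(recent, key=lambda m: m.timestamp)


def fuse(messages: List[Message]) -> str:
    """Data fuser: join recent sentences into one context paragraph."""
    return " ".join(f"[{m.source}] {m.text}" for m in messages)


def plan(context: str) -> str:
    """Toy planner; a real system would query one or more language models."""
    if "battery is low" in context:
        return "return to dock"
    if "person" in context:
        return "greet person"
    return "idle"


# Hardware abstraction: map a language instruction to a low-level command.
# The command strings are invented for this sketch.
HAL: Dict[str, Callable[[], str]] = {
    "return to dock": lambda: "NAV_GOTO dock",
    "greet person": lambda: "ARM_WAVE; SPEAK hello",
    "idle": lambda: "NOOP",
}


def step(bus: NaturalLanguageBus, t0: float) -> str:
    """Run one perceive-fuse-plan-act cycle over messages since t0."""
    context = fuse(bus.since(t0))
    decision = plan(context)
    return HAL[decision]()


bus = NaturalLanguageBus()
bus.publish(10.0, "camera", "A person wearing a red shirt is ahead.")
bus.publish(10.2, "microphone", "A person is humming a tune.")
command = step(bus, t0=9.0)
print(command)
```

The point of the design is that every module reads and writes plain sentences, so a sensor or planner can be swapped without changing the interfaces around it.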

Enabling information exchange via a unified natural-language interface lays the groundwork for robot collaboration. Moreover, OM1’s “plug-and-play,” modular design further facilitates efficient collaboration.

OM1 is not only open-source but also hardware-agnostic: Users can efficiently configure an AI agent using OM1’s modular design—running it either in the cloud or deploying it directly onto physical robots. Whether Boston Dynamics’ quadruped or a home vacuum cleaner, any hardware, vendor, or robot form factor can communicate using the same language. This design dramatically reduces development time and cost while enhancing scalability and flexibility.
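One way to picture hardware-agnostic control is an adapter per platform behind a shared language-level interface. The sketch below assumes this adapter pattern for illustration; `RobotAdapter`, `QuadrupedAdapter`, `VacuumAdapter`, `broadcast`, and the command strings are hypothetical and do not come from OM1.

```python
from abc import ABC, abstractmethod
from typing import List


class RobotAdapter(ABC):
    """Hypothetical hardware adapter: one subclass per robot platform."""

    @abstractmethod
    def execute(self, instruction: str) -> List[str]:
        """Translate a language instruction into platform-specific commands."""


class QuadrupedAdapter(RobotAdapter):
    def execute(self, instruction: str) -> List[str]:
        if instruction == "move forward":
            return ["set_gait trot", "set_velocity 0.5"]
        if instruction == "stop":
            return ["set_velocity 0.0"]
        raise ValueError(f"unsupported instruction: {instruction!r}")


class VacuumAdapter(RobotAdapter):
    def execute(self, instruction: str) -> List[str]:
        if instruction == "move forward":
            return ["left_wheel 0.2", "right_wheel 0.2"]
        if instruction == "stop":
            return ["left_wheel 0.0", "right_wheel 0.0"]
        raise ValueError(f"unsupported instruction: {instruction!r}")


def broadcast(fleet: List[RobotAdapter], instruction: str) -> List[List[str]]:
    """Send the same language instruction to every robot in the fleet."""
    return [robot.execute(instruction) for robot in fleet]


fleet = [QuadrupedAdapter(), VacuumAdapter()]
commands = broadcast(fleet, "move forward")
print(commands)
```

The same sentence reaches both machines; only the adapter knows how each one moves.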

This embodies OpenMind’s core motto: “A Paragraph is All It Takes.”

OM1 solves individual intelligence and inter-robot communication—but when thousands of robots leave the lab and enter human society, how do they verify each other’s identities? Who assigns tasks? Who ensures safety?

This is where the second half of the dual-layer architecture comes in: FABRIC.

FABRIC: Decentralized Collaboration Network

As a blockchain-based decentralized protocol, FABRIC is OpenMind’s answer to why blockchain is necessary infrastructure for the robot economy.

When thousands of robots emerge from labs, they face far more complex social challenges:

- Who am I? And who is that robot facing me? (Identity)

- Is the data another robot sent me authentic? (Trust)

- What do I get for doing work? (Incentive)

FABRIC is purpose-built for large-scale robot networks, providing robots with verifiable identity, location validation, and secure communication channels—and handling task coordination and settlement—thus mapping the chaotic physical world onto an orderly digital ledger.

At the Identity Layer, OpenMind innovatively proposes the ERC-7777 standard—specifically designed to define and regulate interactions within human–robot collaborative societies. FABRIC assigns each robot a rights-assured, traceable, and verifiable digital identity based on ERC-7777—solving the “Who am I?” problem.

At the Trust Layer, robots with verified identities share data and skills—including location, task status, and environmental information—according to built-in access, usage, and provenance rules. They also receive status updates from other robots and cross-validate data across multiple robots, ensuring reliability—thus answering “Where am I?” within a coordinated, interconnected network.
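Cross-validating data across multiple robots can be as simple as comparing each report with a robust consensus value. The sketch below uses the median plus a tolerance, which is an assumed technique chosen for illustration and not FABRIC’s documented method; the function name `cross_validate` and the robot IDs are hypothetical.

```python
from statistics import median
from typing import Dict, List, Tuple


def cross_validate(reports: Dict[str, float],
                   tolerance: float) -> Tuple[float, List[str]]:
    """Return the median report and the IDs of robots that deviate from it.

    reports maps a robot ID to its measurement of the same quantity,
    for example the distance in metres to a shared landmark.
    """
    if not reports:
        raise ValueError("at least one report is required")
    consensus = median(reports.values())
    outliers = sorted(
        robot_id for robot_id, value in reports.items()
        if abs(value - consensus) > tolerance
    )
    return consensus, outliers


reports = {"robot-a": 4.9, "robot-b": 5.1, "robot-c": 5.0, "robot-d": 9.7}
consensus, outliers = cross_validate(reports, tolerance=0.5)
print(consensus, outliers)
```

Here three robots agree closely, so the fourth report is flagged rather than trusted.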

At the Privacy Layer, FABRIC adopts a distributed structure, partitioning subnetworks by task or location and connecting them via centralized network servers.

At the Task Allocation & Reward Settlement Layer, tasks are not assigned in closed black boxes but published, bid on, and matched under public rules. Every collaboration generates encrypted proofs—timestamped and geolocated—recorded immutably on-chain. Upon task completion by multiple robots, FABRIC verifies outcomes, records proofs, and settles rewards. Robots with long-term, verifiable, reliable behavioral histories earn higher trust scores—and thus greater access to future tasks.
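A tamper-evident record of timestamped, geolocated proofs can be approximated off-chain with a hash chain. This sketch swaps in plain SHA-256 hashing over JSON as a simplification; it is not FABRIC’s proof format and involves no encryption or blockchain. The field names, `make_proof`, `verify_chain`, and the sample coordinates are assumptions made for illustration.

```python
import hashlib
import json
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: Dict) -> str:
    """SHA-256 over a canonical JSON encoding of the record body."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def make_proof(prev_hash: str, robot_id: str, task_id: str,
               timestamp: float, lat: float, lon: float) -> Dict:
    """Build one proof record linked to the previous record's hash."""
    body = {"prev": prev_hash, "robot": robot_id, "task": task_id,
            "timestamp": timestamp, "lat": lat, "lon": lon}
    return {**body, "hash": _digest(body)}


def verify_chain(chain: List[Dict]) -> bool:
    """Check every record's hash and its link to the record before it."""
    prev = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if record["prev"] != prev or record["hash"] != _digest(body):
            return False
        prev = record["hash"]
    return True


chain: List[Dict] = []
chain.append(make_proof(GENESIS, "robot-b", "salt-001",
                        1700000000.0, 37.4275, -122.1697))
chain.append(make_proof(chain[-1]["hash"], "robot-c", "salt-001",
                        1700000300.0, 37.4300, -122.1700))
print(verify_chain(chain))
```

Changing any field in an earlier record alters its hash, which breaks the link to every later record and makes the edit detectable.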

In December 2025, OpenMind announced a strategic partnership with Circle, aiming to enable robots to perform hundreds or even thousands of instantaneous, reliable, cross-chain payments per second during physical task execution. In this process, USDC serves as the unit of account and value carrier, x402 provides the underlying payment rail, and OpenMind’s embodied intelligence system determines when, where, and how payments occur.

For example:

Suppose Robot A is cooking in the kitchen and realizes there’s no salt left—creating an immediate “deliver one packet of salt” task.

Robot A broadcasts its location and initiates the task via the FABRIC network. Other compatible robots respond. FABRIC matches the task based on distance, price, reputation, and other criteria. Ultimately, Robot B at a convenience store picks up a packet of salt, and Robot C delivers it to the destination.

Upon task completion, FABRIC verifies the outcome and executes reward settlement.

In this scenario, FABRIC transforms previously isolated robots into a coordinated fleet—rapidly completing the task entirely autonomously, with full transparency and verifiability.
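The matching step in this example, which weighs distance, price, and reputation, can be sketched as a weighted score. The `Bid` fields, the weights, and the linear scoring formula below are assumptions made for illustration; the article does not specify how FABRIC combines these criteria.

```python
from dataclasses import dataclass
from math import hypot
from typing import List, Tuple


@dataclass(frozen=True)
class Bid:
    robot_id: str
    position: Tuple[float, float]  # metres on a local grid (assumed)
    price: float                   # asking price, e.g. in USDC
    reputation: float              # 0.0 (unknown) to 1.0 (highly trusted)


def score(bid: Bid, task_position: Tuple[float, float],
          w_distance: float = 1.0, w_price: float = 1.0,
          w_reputation: float = 10.0) -> float:
    """Lower is better: near and cheap bids win, reputation earns a discount."""
    distance = hypot(bid.position[0] - task_position[0],
                     bid.position[1] - task_position[1])
    return w_distance * distance + w_price * bid.price - w_reputation * bid.reputation


def match(bids: List[Bid], task_position: Tuple[float, float]) -> Bid:
    """Pick the bid with the lowest score."""
    if not bids:
        raise ValueError("no bids received")
    return min(bids, key=lambda b: score(b, task_position))


bids = [
    Bid("robot-b", (3.0, 4.0), price=2.0, reputation=0.9),
    Bid("robot-d", (1.0, 1.0), price=2.5, reputation=0.2),
    Bid("robot-e", (30.0, 40.0), price=0.5, reputation=0.95),
]
winner = match(bids, task_position=(0.0, 0.0))
print(winner.robot_id)
```

With these weights the nearest robot loses to a slightly farther one with a much stronger track record, which mirrors the idea that reliable behavior earns greater access to tasks.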

Beyond this, FABRIC will further sustain the long-term health of the robot ecosystem by exploring fair and transparent governance processes.

This is OpenMind’s ultimate vision: evolving robots from “tools” into “economic participants.”

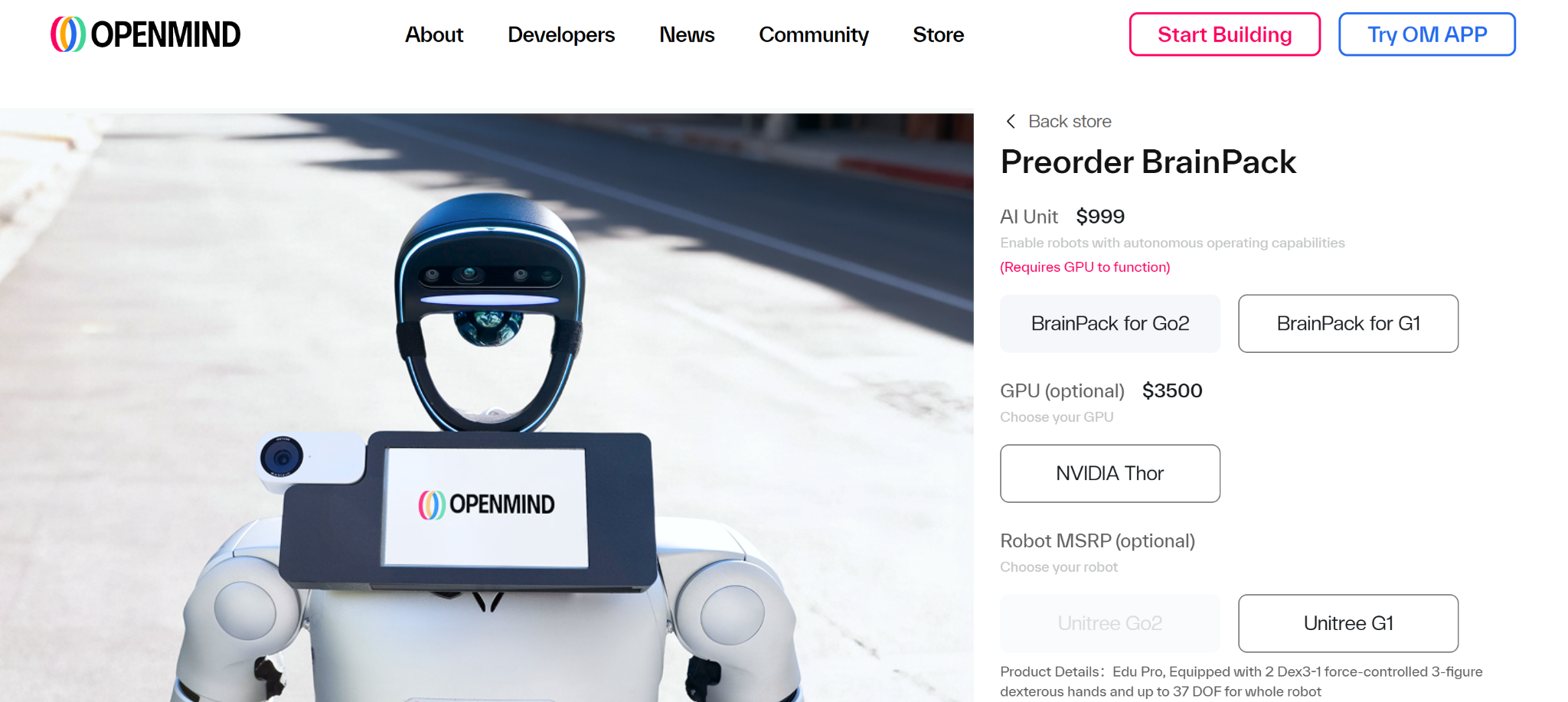

Indeed, with the launch and multi-scenario piloting of its embodied robot product BrainPack, OpenMind is already advancing the robot economy from labs into industrial and consumer-grade applications.

BrainPack Pre-orders & App Store Launch: OpenMind Moves from Lab to Production-Grade Delivery

Tangible, deliverable products inspire confidence. The BrainPack—a pre-order product launched in November 2025—is the physical embodiment of OpenMind’s vision of a “shared, collaborative intelligence network.”

BrainPack runs OM1 and uses the FABRIC protocol. Its key specifications and features include:

- Perception & Mapping: Real-time 3D SLAM + dense semantic map reconstruction across multiple rooms

- Privacy-First Protection: Native automatic face detection and blurring, anonymizing humans in the robot’s field of view

- Autonomy: Automatic object labeling for semantic understanding, plus autonomous docking and charging

- Collaborative Work: Powered by FABRIC, BrainPack verifies its own identity and enables trustless contextual sharing—facilitating efficient cross-manufacturer robot collaboration

Notably, BrainPack is powered by NVIDIA’s Jetson Thor, and OpenMind positions it as a plug-and-play solution designed to integrate with third-party robot hardware. OpenMind has explicitly confirmed compatibility with Unitree Robotics platforms, particularly the G1 humanoid and Go2 quadruped robots.

Given its robust functionality and broad compatibility, BrainPack anchors OpenMind’s vision of “robotic services” in concrete reality:

In logistics warehouses, BrainPack shares real-time maps and task statuses via FABRIC—enabling dynamic path optimization and load balancing;

In smart cities and environmental services, BrainPack achieves precise navigation and multi-robot collision avoidance—even underground in GPS-denied parking garages;

In nursing homes, BrainPack safely conducts nighttime patrols and medicine delivery…

According to official pre-order page details, BrainPack requires a $999 deposit, with first shipments expected in Q1 2026. As a pivotal milestone marking OpenMind’s transition from protocol development to physical product delivery, the installation of the first BrainPacks onto robots worldwide will shift OpenMind’s “shared collaborative intelligence network” from whitepaper theory to real-world operation—interacting and creating value in the physical world.

Alongside hardware entering production-grade delivery, OpenMind is developing an app store for quadruped and humanoid robots. Users will be able to download apps and skills for their robots with one click, just as they do for smartphones from the Apple App Store or Google Play.

Currently, the first application has already been uploaded to the OpenMind App Store. As OpenMind expands marketing, support, and educational outreach, it expects to attract global developers to contribute new apps and skills—accelerating the growth of quadruped and humanoid robots and delivering smarter services.

Foundation Launch: OpenMind Hits Milestones in Rapid Succession

Recently, the most widely discussed topic around OpenMind has been the establishment of the Fabric Foundation on December 30, 2025.

As an independent nonprofit organization, the Fabric Foundation focuses on building governance, economic, and coordination infrastructure to enable safe and efficient human–machine collaboration. Its specific responsibilities include supporting critical research, building public infrastructure, convening global stakeholders, expanding global access and participation, improving public understanding, and ensuring long-term stewardship.

Although the official announcement contains no explicit hints, a significant portion of the community views the foundation’s launch as a signal of an upcoming Token Generation Event (TGE).

Against this backdrop, what are the main ways for ordinary users to participate in the OpenMind ecosystem today?

First, users can earn rewards by completing tasks via the OpenMind App, FABRIC network tests, or the OM1 Beta version—or by taking robot courses, which are already available through Robostore and partner academic institutions.

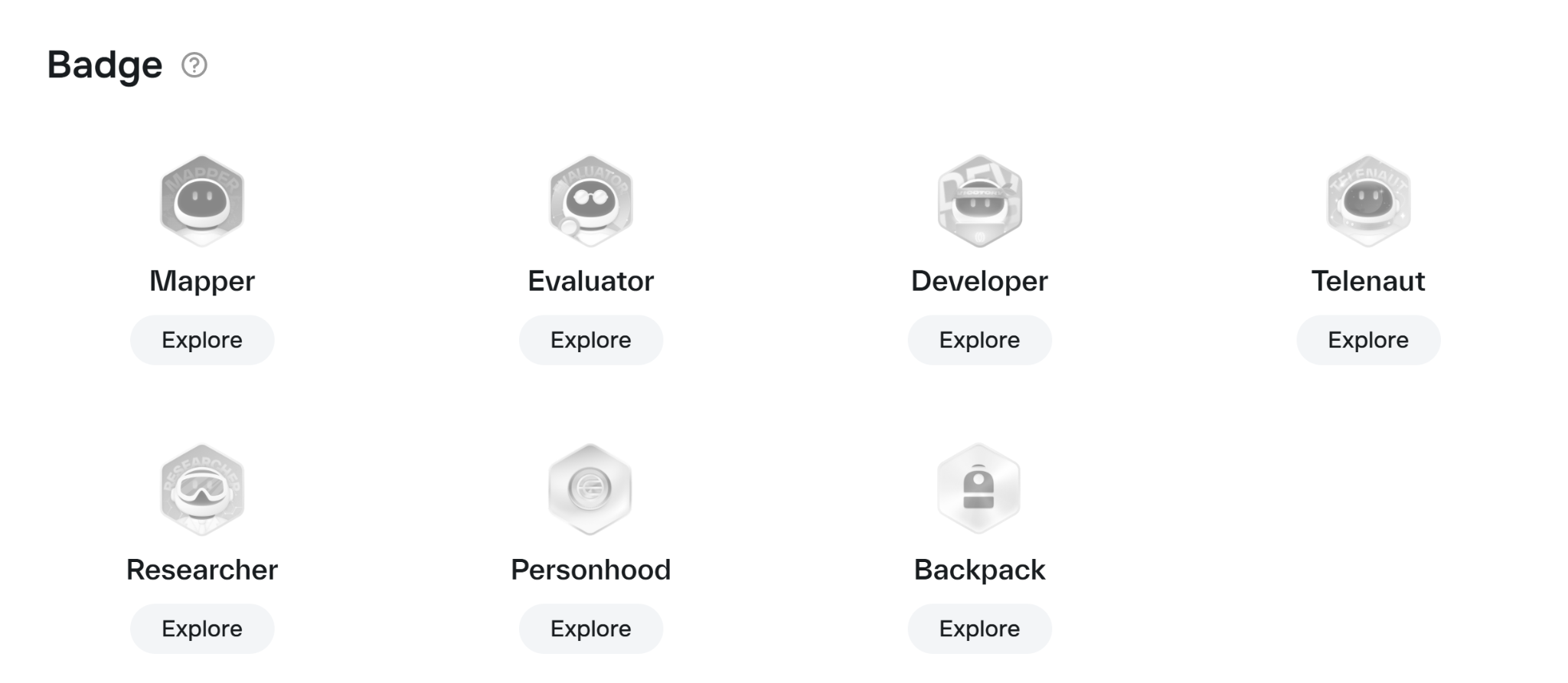

Second, OpenMind previously launched a badge system. Badges serve both as achievement markers and potential gateways to future ecosystem benefits—including anticipated airdrops. Users can accumulate badges as “chips” toward those benefits. Currently issued badges include:

- Mapper: Unlock by contributing spatial data via the App

- Evaluator: Unlock by watching and rating robot videos

- Developer: Unlock by contributing code to GitHub

- Telenaut: Unlock by remotely controlling robots via OpenMind’s teleoperation platform

- Researcher: Unlock by creating high-quality research content

- Personhood: Unlock by downloading the World App and registering/binding your account

- Backpack: Unlock by connecting and verifying your Backpack Wallet identity

With 2026 now upon us, the foundation’s launch functions like a starting pistol. According to the official roadmap, OpenMind has a packed schedule of milestones ahead:

In the short term, OpenMind is accelerating development of OM1’s core functional prototype and FABRIC’s MVP—launching on-chain identity and foundational collaboration capabilities. In the medium term, OpenMind aims to deploy OM1 and FABRIC in education, home, and enterprise settings—onboarding early nodes and cultivating developer communities. Looking further ahead, OpenMind intends to establish OM1 and FABRIC as global standards—allowing any robot to connect to this open machine-collaboration network as easily as devices connect to the internet—and thereby forming a self-sustaining global machine economy.

Conclusion

From the 2035 vision depicted in I, Robot to the reality OpenMind is building, what we witness is not merely technological progress—but a fundamental paradigm shift.

In the old paradigm, robots from different vendors operated in silos—closed systems lacking unified communication protocols, making large-scale collaboration nearly impossible. OpenMind’s new paradigm—built on the OM1 OS and FABRIC collaboration network—is breaking down these barriers, transforming robots from “islands” into a “network,” and evolving them from “tools” into “efficient, collaborative economic agents.”

More importantly, this new paradigm is becoming tangible through deep partnerships with hardware manufacturers like Unitree and DEEP Robotics—entering real-world consumer scenarios. Physical products like BrainPack rapidly validate technical feasibility. From 180,000 test users to imminent hardware deliveries, OpenMind is proving milestone by milestone that the robotics “Android moment” is no longer hypothetical—it’s unfolding now. An open, collaborative, decentralized, and efficiently settled era of robotics adoption is arriving.

Certainly, we still have a long way to go before reaching the film’s consumer-era vision of “one robot per five people.” OpenMind itself remains in an early stage—where technology is proven, but commercialization awaits. With BrainPack’s scaled delivery in 2026, continuous expansion of the FABRIC network, and growing participation from developers and robot manufacturers, a global collaboration network comprising millions of robots is taking shape.

When thousands of robots cease being isolated individuals—and instead function like human society within a decentralized network, with clearly defined roles, specialized responsibilities, and interoperable value exchange—that is when the trillion-dollar machine economy era truly begins.
