IOSG | Vision for the Robotics Industry: The Convergent Evolution of Automation, Artificial Intelligence, and Web3

TechFlow Selected

The intersection of robotics, AI, and Web3 remains the starting point for the next-generation intelligent economic system.

Author: Jacob Zhao @IOSG

Robotics Panorama: From Industrial Automation to Humanoid Intelligence

The traditional robotics industry chain has formed a complete, bottom-up layered system covering four key segments: core components → intermediate control systems → complete-robot manufacturing → application integration. Core components (controllers, servos, reducers, sensors, batteries, etc.) have the highest technological barriers and determine the performance floor and cost ceiling of finished robots; control systems act as the robot's "brain and cerebellum," responsible for decision-making, planning, and motion control; complete-robot manufacturing reflects supply-chain integration capability. System integration and applications, which determine how deeply robots can be commercialized, are increasingly becoming the new core of value.

By application scenario and form factor, global robotics is evolving along the path of “industrial automation → scenario-specific intelligence → general-purpose intelligence,” and can be grouped into five major categories: industrial robots, mobile robots, service robots, specialized robots, and humanoid robots.

#Industrial Robots

This is currently the only fully mature segment, widely used in welding, assembly, painting, and material handling within manufacturing processes. The industry has established a standardized supply chain with stable gross margins and clear ROI. Among its subcategories, collaborative robots (cobots), which emphasize human-robot collaboration, lightweight design, and easy deployment, are growing the fastest.

Representative companies: ABB, Fanuc, Yaskawa, KUKA, Universal Robots, Jaka, Aubo.

#Mobile Robots

This category includes AGVs (Automated Guided Vehicles) and AMRs (Autonomous Mobile Robots), which are widely deployed in logistics warehousing, e-commerce delivery, and manufacturing transportation, making it the most mature B2B category.

Representative companies: Amazon Robotics, Geek+, Quicktron, Locus Robotics.

#Service Robots

Serving industries such as cleaning, catering, hospitality, and education, service robots are the fastest-growing consumer-facing domain. Cleaning products have entered the consumer electronics paradigm, while medical and commercial delivery robots are accelerating commercialization. A new wave of more general-purpose manipulation robots is also emerging (e.g., Dyna’s dual-arm system): more flexible than task-specific devices, but not yet as general as humanoid robots.

Representative companies: Ecovacs, Roborock, PuduTech, Enway, iRobot, Dyna, etc.

#Specialized Robots

Mainly serving fields like healthcare, military, construction, oceanography, and aerospace, this market has limited scale but high profitability and strong barriers. It largely depends on government and enterprise orders and remains in a vertically segmented growth phase. Notable projects include Intuitive Surgical, Boston Dynamics, ANYbotics, and NASA Valkyrie.

#Humanoid Robots

Humanoid robots are regarded as the future "general labor platform."

Representative companies: Tesla (Optimus), Figure AI (Figure 01), Sanctuary AI (Phoenix), Agility Robotics (Digit), Apptronik (Apollo), 1X Robotics, Neura Robotics, Unitree, UBTECH, Zhiyuan Robotics, etc.

Humanoid robots represent the most watched frontier today. Their core value lies in adapting to existing social spaces through human-like form, making them a key pathway toward a "general labor platform." Unlike industrial robots that prioritize extreme efficiency, humanoid robots emphasize universal adaptability and task transferability, enabling entry into factories, homes, and public spaces without environmental modifications.

Currently, most humanoid robots remain at the technology-demonstration stage, primarily validating dynamic balance, walking, and manipulation. Some projects have begun small-scale deployments in highly controlled factory environments (e.g., Figure × BMW, Agility Digit), and more vendors (such as 1X) are expected to begin early distribution starting in 2026, but these are still "narrow-scenario, single-task" use cases rather than truly general-purpose labor. Overall, large-scale commercialization is still several years away. Key bottlenecks include: control challenges in coordinating many degrees of freedom while maintaining real-time dynamic balance; limited endurance due to battery energy density and drive efficiency; unstable perception-decision loops and poor generalization in open environments; significant data gaps, with too little data to train general policies; unresolved cross-embodiment transfer learning; and hardware supply chains and cost curves (especially outside China) that remain practical barriers to low-cost, large-scale deployment.

Future commercialization is expected to unfold in three stages: in the short term, Demo-as-a-Service driven by pilots and subsidies; in the mid term, Robotics-as-a-Service (RaaS) that builds ecosystems of tasks and skills; and in the long term, labor-cloud and intelligent subscription services that shift value from hardware manufacturing to software and service networks. In summary, humanoid robots are at a critical transition from demonstration to self-learning, and whether they can overcome the three thresholds of control, cost, and algorithms will determine whether they achieve true embodied intelligence.

AI × Robotics: The Dawn of the Embodied Intelligence Era

Traditional automation relies mainly on pre-programmed, pipeline-style control (e.g., the DSOP architecture: Detection–Segmentation–Optimization–Planning) and operates reliably only in structured environments. The real world, however, is far more complex and variable. A new generation of embodied intelligence (Embodied AI) follows a different paradigm: it uses large models and unified representation learning to give robots cross-scenario "understand–predict–act" capabilities. Embodied intelligence emphasizes the dynamic coupling of body (hardware), brain (model), and environment (interaction); the robot is the carrier, and the intelligence is the core.

Generative AI belongs to the intelligence of the linguistic world, excelling at understanding symbols and semantics; embodied intelligence belongs to the intelligence of the physical world, mastering perception and action. The two correspond to the "brain" and the "body" and represent two parallel mainlines of AI evolution. In the hierarchy of intelligence, embodied intelligence ranks above generative AI, but its maturity lags significantly behind.

LLMs rely on vast internet corpora and have a clear "data → compute → deployment" closed loop. Robotic intelligence instead requires first-person, multimodal, action-bound data (teleoperation trajectories, first-person video, spatial maps, and action sequences). Such data does not exist naturally and must be generated through real interaction or high-fidelity simulation, which makes it scarcer and more expensive. Simulated and synthetic data help but cannot fully replace real sensorimotor experience; this is why Tesla, Figure, and others build their own teleoperation data factories, and why third-party data-labeling facilities are emerging in Southeast Asia. In short, LLMs learn from existing data, while robots must "create" data by interacting with the physical world.

Over the next 5–10 years, the two are expected to integrate deeply through Vision–Language–Action (VLA) models and embodied agent architectures, with LLMs handling high-level cognition and planning and robots executing in the real world. Together they would form a bidirectional data-and-action loop that pushes AI from "language intelligence" toward true artificial general intelligence (AGI).

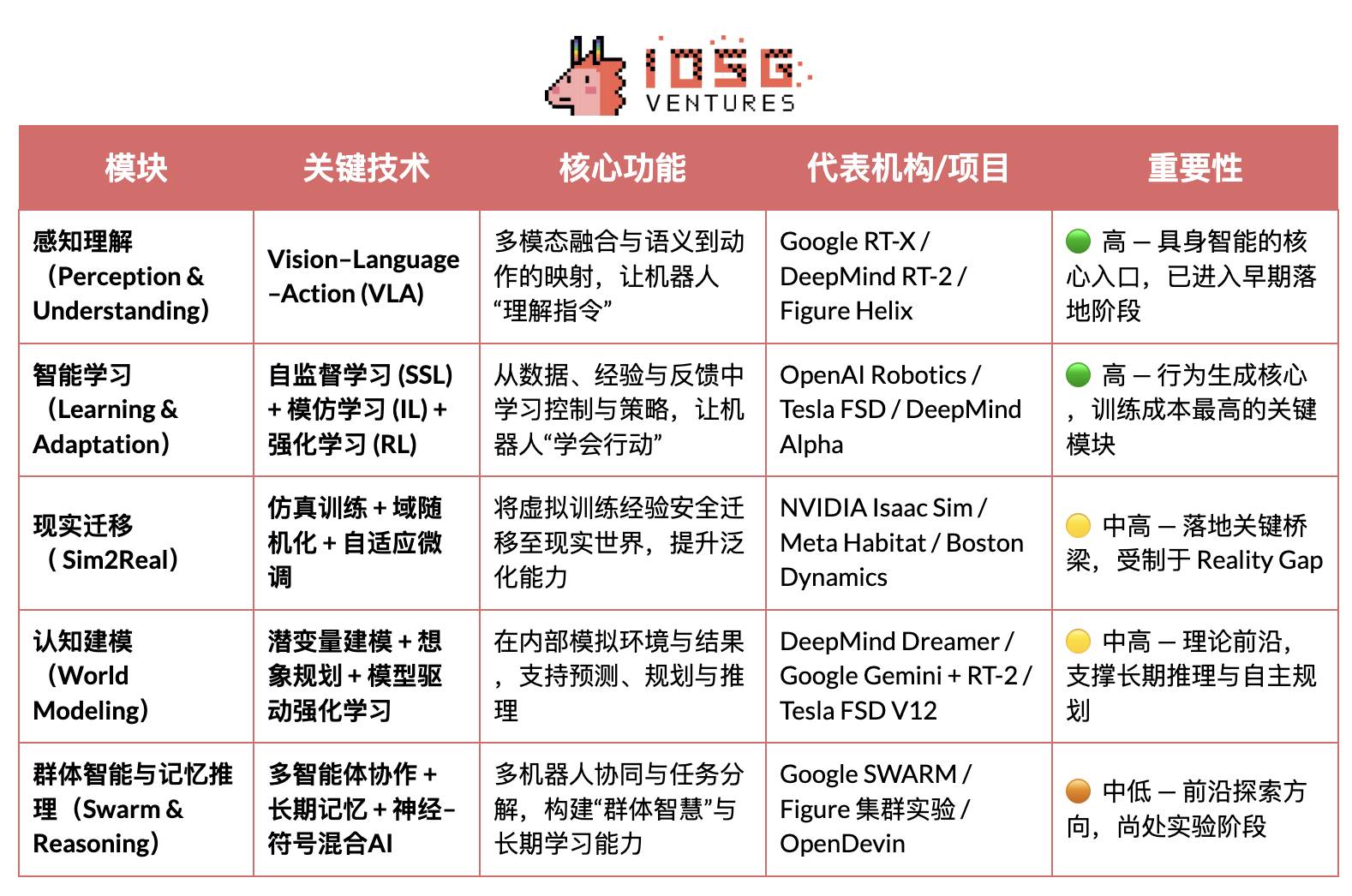

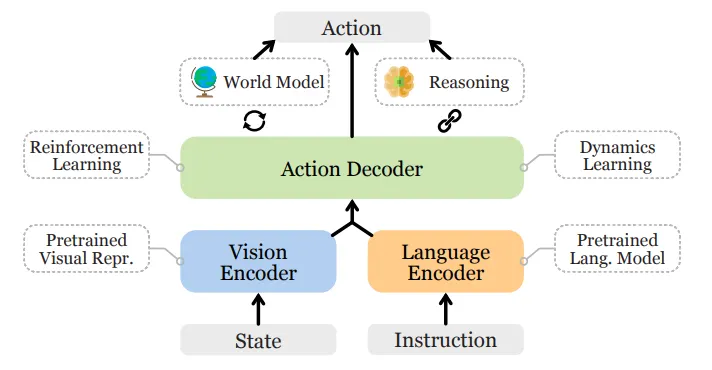

The core technical system of embodied intelligence can be viewed as a bottom-up intelligence stack: VLA (perception fusion), RL/IL/SSL (intelligent learning), Sim2Real (real-world transfer), World Model (cognitive modeling), and multi-agent collaboration with memory and reasoning (Swarm & Reasoning). Among these, VLA and RL/IL/SSL act as the "engines" of embodied intelligence, determining its deployment and commercialization; Sim2Real and World Model are key technologies connecting virtual training to real-world execution; multi-agent collaboration and memory-reasoning represent higher-level group and meta-cognitive evolution.

Perception & Understanding: Vision–Language–Action Models

VLA models integrate vision, language, and action channels, enabling robots to understand human intent from natural language and translate it into specific actions. The execution flow includes semantic parsing, object identification (locating target objects from visual input), and path planning with action execution, thus closing the loop of "understanding semantics → perceiving the world → completing tasks"—one of the key breakthroughs in embodied intelligence. Current representative projects include Google RT-X, Meta Ego-Exo, and Figure Helix, showcasing cutting-edge directions such as cross-modal understanding, immersive perception, and language-driven control.
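The three-stage flow above (semantic parsing, object identification, path planning and execution) can be made concrete with a deliberately toy Python sketch. It is not how RT-X or Helix work: a keyword matcher stands in for the language model, a hand-written list of `Detection` objects stands in for the vision model, and breadth-first search on a small occupancy grid stands in for motion planning. All names (`parse_instruction`, `ground_object`, `plan_path`, `execute`) are hypothetical.

```python
from __future__ import annotations

from collections import deque
from dataclasses import dataclass


@dataclass
class Detection:
    label: str               # object class reported by a (hypothetical) vision model
    color: str
    cell: tuple[int, int]    # grid cell where the object was seen


def parse_instruction(text: str) -> dict:
    """Semantic parsing: a keyword stand-in for the language model."""
    words = text.lower().split()
    verb = "pick" if "pick" in words else "goto"
    color = next((w for w in words if w in {"red", "green", "blue"}), None)
    return {"verb": verb, "color": color, "target": words[-1]}


def ground_object(goal: dict, detections: list[Detection]) -> Detection | None:
    """Object identification: match the parsed goal against visual detections."""
    for det in detections:
        if det.label == goal["target"] and goal["color"] in (None, det.color):
            return det
    return None


def plan_path(grid, start, goal):
    """Path planning: breadth-first search on a 4-connected grid (0 = free, 1 = obstacle)."""
    rows, cols = len(grid), len(grid[0])
    parent = {start: None}
    queue = deque([start])
    while queue:
        cur = queue.popleft()
        if cur == goal:
            path = []
            while cur is not None:
                path.append(cur)
                cur = parent[cur]
            return path[::-1]
        r, c = cur
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in parent:
                parent[(nr, nc)] = cur
                queue.append((nr, nc))
    return None


def execute(instruction, detections, grid, robot_cell):
    """Action execution: chain the three stages into primitive commands."""
    goal = parse_instruction(instruction)
    obj = ground_object(goal, detections)
    if obj is None:
        return ["report: object not found"]
    path = plan_path(grid, robot_cell, obj.cell)
    if path is None:
        return ["report: no path"]
    actions = [f"move {cell}" for cell in path[1:]]
    if goal["verb"] == "pick":
        actions.append(f"grasp {obj.color} {obj.label}")
    return actions


if __name__ == "__main__":
    grid = [[0, 0, 0],
            [1, 1, 0],
            [0, 0, 0]]
    scene = [Detection("cup", "blue", (2, 2)), Detection("cup", "red", (2, 0))]
    print(execute("pick up the red cup", scene, grid, (0, 0)))
```

Keeping the stages separate makes it easy to see where a failure originates (an unparsed instruction, a missing detection, or an unreachable cell); learned VLA models blur these boundaries, which is one reason perception errors can leak into planning.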

Currently, VLA remains in an early stage, facing four core bottlenecks:

- Semantic ambiguity and weak task generalization: models struggle to interpret vague or open-ended instructions;
- Unstable vision-action alignment: perception errors amplify during path planning and execution;
- Scarcity and lack of standardization in multimodal data: high collection and annotation costs hinder scalable data flywheels;
- Difficulty with long-horizon tasks across time and space: extended tasks expose insufficient planning and memory capacity, while large spatial ranges require reasoning beyond what is immediately visible. Current VLA models lack stable world models and cross-space reasoning.

These issues collectively limit VLA's cross-scenario generalization and scalability.

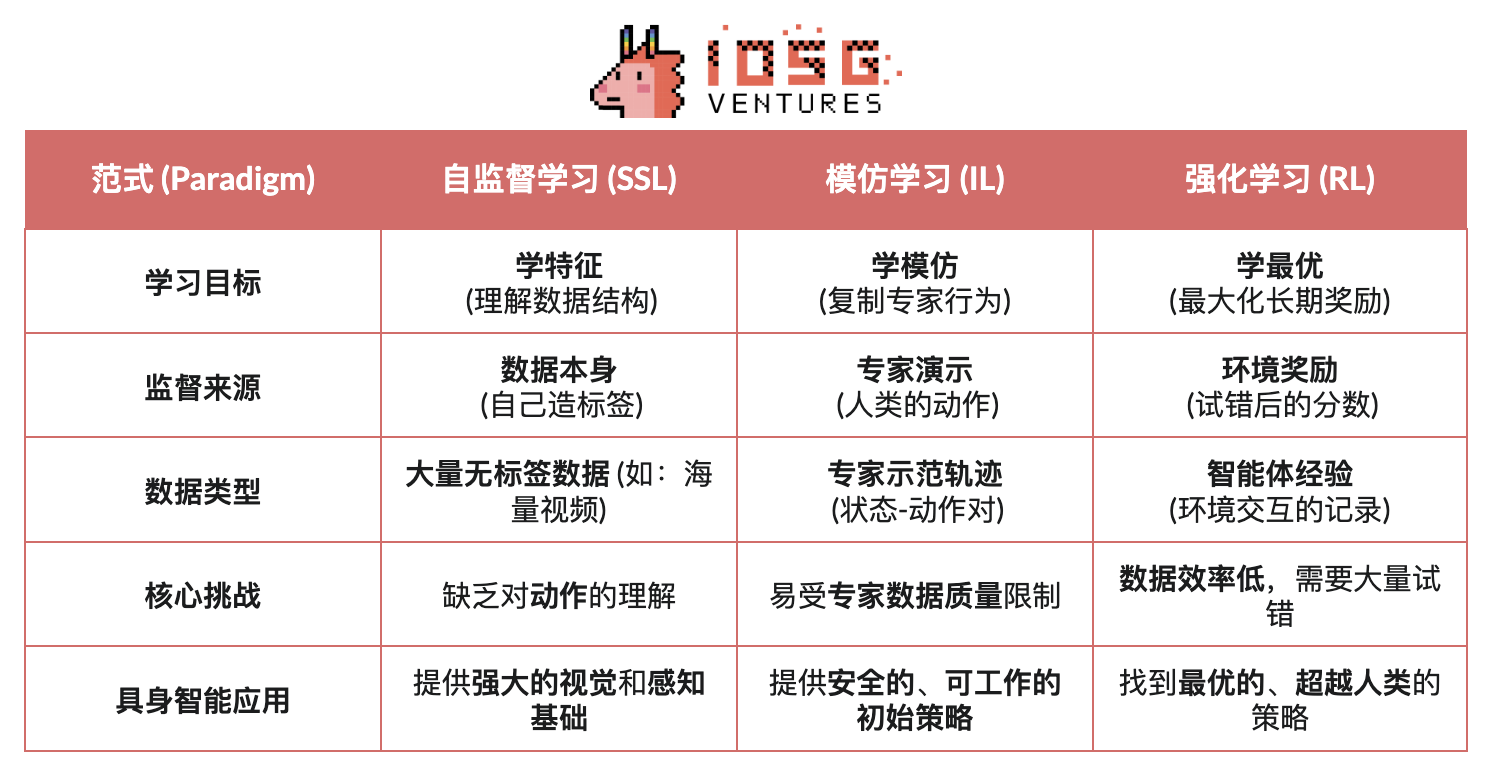

Intelligent Learning: Self-Supervised Learning (SSL), Imitation Learning (IL), and Reinforcement Learning (RL)

- Self-Supervised Learning (SSL): automatically extracts semantic features from sensory data, allowing robots to "understand the world"; in effect, it teaches machines how to observe and represent.
- Imitation Learning (IL): enables rapid acquisition of basic skills by mimicking human demonstrations or expert examples; in effect, it teaches machines to act like humans.
- Reinforcement Learning (RL): optimizes action policies through trial and error guided by rewards and penalties; in effect, it lets machines grow through experimentation.

In embodied AI, SSL aims to enable robots to predict state changes and physical laws from sensory data, thereby understanding causal structures of the world; RL is the core engine of intelligence formation, driving robots to master complex behaviors such as walking, grasping, and obstacle avoidance through environmental interaction and reward-based optimization; IL accelerates this process by leveraging human demonstrations to provide prior knowledge. The current mainstream approach combines all three into a hierarchical learning framework: SSL provides foundational representations, IL imparts human priors, and RL drives policy optimization—balancing efficiency and stability to form the core mechanism bridging understanding and action in embodied intelligence.
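A toy sketch of how the IL and RL stages fit together, under heavy simplifying assumptions: a six-cell corridor, a tabular Q-function, and a single partial expert demonstration. The demonstration seeds the Q-table (the "human prior"), and tabular Q-learning then refines it. The SSL stage is omitted; the state here is a raw integer, where a real system would feed in features learned by self-supervision. All function names and hyperparameters are illustrative.

```python
import random

N_STATES, GOAL = 6, 5
ACTIONS = (-1, +1)  # move left / move right


def step(state, action):
    """Toy corridor: reward 1.0 on reaching the goal, a small step cost otherwise."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == GOAL else -0.01
    return nxt, reward, nxt == GOAL


def imitation_prior(demos, bonus=0.5):
    """IL stage: turn expert (state, action) pairs into an initial Q-table (a behavior prior)."""
    q = {s: {a: 0.0 for a in ACTIONS} for s in range(N_STATES)}
    for trajectory in demos:
        for s, a in trajectory:
            q[s][a] += bonus / len(demos)
    return q


def greedy(q, s):
    return max(ACTIONS, key=lambda a: q[s][a])  # ties go to the first action, -1


def q_learning(q, episodes=500, alpha=0.5, gamma=0.95, eps=0.1, seed=0):
    """RL stage: epsilon-greedy tabular Q-learning refines the imitation-initialized table."""
    rng = random.Random(seed)
    for _ in range(episodes):
        s = 0
        for _ in range(50):
            a = rng.choice(ACTIONS) if rng.random() < eps else greedy(q, s)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[s2].values())
            q[s][a] += alpha * (target - q[s][a])
            s = s2
            if done:
                break
    return q


def rollout(q, max_steps=20):
    """Follow the greedy policy from state 0 and record the visited states."""
    s, path = 0, [0]
    for _ in range(max_steps):
        s, _, done = step(s, greedy(q, s))
        path.append(s)
        if done:
            break
    return path


if __name__ == "__main__":
    demos = [[(0, +1), (1, +1), (2, +1)]]   # the expert only demonstrated the first half
    print(rollout(imitation_prior(demos)))  # stalls, bouncing between states 2 and 3
    print(rollout(q_learning(imitation_prior(demos))))
```

Because the demonstration covers only part of the corridor, the imitation prior alone stalls, and RL is what fills in the unseen states. The prior is kept below the true action values, so it guides early behavior without distorting the final policy.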

Real-World Transfer: Sim2Real — Bridging Simulation and Reality

Sim2Real (Simulation to Reality) trains robots in virtual environments before transferring them to the real world. Using high-fidelity simulation platforms (e.g., NVIDIA Isaac Sim & Omniverse, DeepMind MuJoCo), it generates massive interaction datasets, significantly reducing training costs and hardware wear. Its core challenge lies in narrowing the "simulation-to-reality gap," primarily addressed through:

- Domain Randomization: randomly varying parameters such as lighting, friction, and noise in simulation to improve model generalization;
- Physics Consistency Calibration: using real sensor data to fine-tune simulation engines for greater physical realism;
- Adaptive Fine-Tuning: rapid retraining in real environments to achieve stable transfer.

Sim2Real is the central link in deploying embodied intelligence, enabling AI models to learn the "perceive–decide–act" loop safely and affordably in virtual worlds. While simulation training is mature (e.g., NVIDIA Isaac Sim, MuJoCo), real-world transfer remains constrained by the reality gap, high computational and annotation costs, and insufficient generalization and safety in open environments. Nevertheless, Simulation-as-a-Service (SimaaS) is emerging as one of the lightest yet most strategically valuable infrastructures in the era of embodied intelligence, with business models including platform subscriptions (PaaS), data generation (DaaS), and safety validation (VaaS).
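Domain randomization, the first of the gap-narrowing techniques listed above, is simple to sketch. The code below shows only the sampling side: each training episode draws a fresh set of physics and sensor parameters from ranges, so a policy never overfits to one simulated world. The parameter names and ranges are hypothetical, and how they are pushed into an engine such as Isaac Sim or MuJoCo is simulator-specific and omitted.

```python
from __future__ import annotations

import random
from dataclasses import dataclass

# Hypothetical ranges; real bounds depend on the robot, the task, and the simulator.
RANGES = {
    "friction": (0.4, 1.2),
    "light_intensity": (0.3, 1.0),
    "mass_kg": (0.8, 1.2),
    "sensor_noise_std": (0.0, 0.05),
}


@dataclass(frozen=True)
class SimParams:
    friction: float
    light_intensity: float
    mass_kg: float
    sensor_noise_std: float


def sample_params(rng: random.Random) -> SimParams:
    """Draw one randomized world: each parameter uniformly within its range."""
    return SimParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def noisy_observation(true_state: list[float], params: SimParams, rng: random.Random) -> list[float]:
    """Corrupt ground-truth simulator state with Gaussian sensor noise of the sampled scale."""
    return [x + rng.gauss(0.0, params.sensor_noise_std) for x in true_state]


def randomized_episodes(n: int, seed: int = 0) -> list[SimParams]:
    """One fresh configuration per training episode; seeding keeps runs reproducible."""
    rng = random.Random(seed)
    return [sample_params(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in randomized_episodes(3):
        print(params)
```

In a training loop, each episode would reset the simulator with one of these configurations before rolling out the policy; the width of each range is the main design knob, trading robustness against how hard the policy is to train.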

Cognitive Modeling: World Model — The Robot’s "Inner World"

A world model acts as the "inner brain" of embodied intelligence, allowing robots to simulate environments and anticipate outcomes internally, enabling prediction and reasoning. By learning dynamic environmental rules, it constructs predictive internal representations, transforming agents from passive executors into active planners. Representative projects include DeepMind Dreamer, Google Gemini + RT-2, Tesla FSD V12, and NVIDIA WorldSim. Typical technical approaches include:

- Latent Dynamics Modeling: compressing high-dimensional perception into a latent state space;
- Imagination-Based Planning: virtual trial-and-error and trajectory prediction within the model;
- Model-Based Reinforcement Learning: replacing the real environment with the world model to reduce training costs.

World models represent the theoretical frontier of embodied intelligence, serving as the core path from "reactive" to "predictive" intelligence. However, they face challenges such as modeling complexity, unstable long-horizon predictions, and lack of unified standards.
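To illustrate the latent-dynamics and imagination-based planning ideas listed above, here is a minimal sketch under strong assumptions: a one-dimensional linear system, so the "latent state" is just the raw state rather than an encoded image; a world model fitted by ordinary least squares; and planning by scoring a fixed grid of constant actions in imagination, a simplification of the random-shooting or cross-entropy planners used in practice. It does not reproduce Dreamer or any other named system.

```python
import random


def true_step(s, u):
    """The real system. The agent never reads these coefficients; it must learn them."""
    return 0.9 * s + 0.5 * u


def collect_transitions(n, seed=0):
    """Gather (state, action, next_state) tuples with random exploratory actions."""
    rng = random.Random(seed)
    data, s = [], 0.0
    for _ in range(n):
        u = rng.uniform(-1.0, 1.0)
        s_next = true_step(s, u)
        data.append((s, u, s_next))
        s = s_next
    return data


def fit_dynamics(data):
    """Learn s' = a*s + b*u by least squares (closed-form 2x2 normal equations)."""
    s_s = sum(s * s for s, _, _ in data)
    u_u = sum(u * u for _, u, _ in data)
    s_u = sum(s * u for s, u, _ in data)
    s_y = sum(s * y for s, _, y in data)
    u_y = sum(u * y for _, u, y in data)
    det = s_s * u_u - s_u * s_u
    a = (s_y * u_u - s_u * u_y) / det
    b = (s_s * u_y - s_u * s_y) / det
    return a, b


def imagine(model, s, u, horizon):
    """Roll the learned model forward without touching the real system."""
    a, b = model
    trajectory = []
    for _ in range(horizon):
        s = a * s + b * u
        trajectory.append(s)
    return trajectory


def plan(model, s, goal, horizon=5):
    """Score each candidate action by its imagined trajectory and keep the best one."""
    candidates = [i / 20 - 1.0 for i in range(41)]  # -1.0, -0.95, ..., 1.0
    return min(candidates,
               key=lambda u: sum((x - goal) ** 2 for x in imagine(model, s, u, horizon)))


def control(model, s0, goal, steps=40):
    """Receding-horizon loop: replan at every step, apply only the chosen action."""
    s = s0
    for _ in range(steps):
        s = true_step(s, plan(model, s, goal))
    return s


if __name__ == "__main__":
    model = fit_dynamics(collect_transitions(50))
    print(model)                          # close to (0.9, 0.5)
    print(control(model, 0.0, goal=2.0))  # settles near 2.0
```

The agent queries the real system only to collect 50 exploratory transitions; all planning happens inside the learned model, which is the training-cost saving that model-based RL aims for.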

Swarm Intelligence and Memory Reasoning: From Individual Action to Collaborative Cognition

Multi-agent systems and memory-reasoning represent two important directions in the evolution of embodied intelligence—from "individual intelligence" toward "collective intelligence" and "cognitive intelligence." Together, they support collaborative learning and long-term adaptation in intelligent systems.

#Multi-Agent Collaboration (Swarm / Cooperative RL)

This refers to multiple agents achieving coordinated decision-making and task allocation in shared environments through distributed or cooperative reinforcement learning. The direction has solid research foundations: OpenAI's Hide-and-Seek experiment demonstrated spontaneous cooperation and emergent strategies among agents, while algorithms such as QMIX (University of Oxford) and MADDPG (OpenAI) provide centralized-training, decentralized-execution frameworks. These methods have already been validated in applications such as warehouse robot scheduling, inspection, and swarm control.
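The key property behind QMIX-style centralized training with decentralized execution can be checked in a few lines. If the joint value Q_tot is a monotonic (non-decreasing) function of each agent's Q-value, then every agent greedily maximizing its own Q also maximizes Q_tot, so agents can act independently at run time. The sketch uses a fixed linear mixer with non-negative weights (closer to VDN); actual QMIX produces its non-negative mixing weights with a state-conditioned hypernetwork and a nonlinear mixing network, both omitted here.

```python
from itertools import product


def mix(agent_qs, weights, bias=0.0):
    """Monotonic mixer: with non-negative weights, raising any agent's Q never lowers Q_tot."""
    if any(w < 0 for w in weights):
        raise ValueError("monotonic mixing requires non-negative weights")
    return sum(w * q for w, q in zip(weights, agent_qs)) + bias


def decentralized_actions(agent_q_tables):
    """Decentralized execution: each agent takes the argmax of its own Q-values."""
    return tuple(max(range(len(q)), key=q.__getitem__) for q in agent_q_tables)


def centralized_argmax(agent_q_tables, weights):
    """Brute-force search over the joint action space, as a central controller would."""
    joint_actions = product(*(range(len(q)) for q in agent_q_tables))
    return max(joint_actions,
               key=lambda acts: mix([q[a] for q, a in zip(agent_q_tables, acts)], weights))


if __name__ == "__main__":
    q_tables = [[1.0, 3.0, 2.0], [0.5, 0.2], [2.0, 2.5, 0.1, 1.0]]
    weights = [0.5, 1.0, 2.0]
    print(decentralized_actions(q_tables), centralized_argmax(q_tables, weights))
```

The brute-force search grows exponentially with the number of agents, while the decentralized argmax grows linearly; the monotonicity constraint is what makes the cheap version give the same answer.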

#Memory & Reasoning

Focused on equipping agents with long-term memory, contextual understanding, and causal reasoning—key capabilities for cross-task transfer and self-planning. Notable research includes DeepMind Gato (a unified perception-language-control multitask agent), the DeepMind Dreamer series (imagination-based planning via world models), and Voyager—an open-ended embodied agent achieving continuous learning through external memory and self-evolution. These systems lay the foundation for robots to "remember the past and project the future."
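A minimal sketch of the external-memory idea, in the spirit of Voyager's skill library: skills are stored with a natural-language description and retrieved by similarity to a new task. Voyager indexes skills with learned text embeddings; bag-of-words cosine similarity is swapped in here so the example stays self-contained, and the skill names and "programs" are hypothetical.

```python
import math
import re
from collections import Counter


class SkillLibrary:
    """Toy long-term memory: skills indexed by description, retrieved by text similarity."""

    def __init__(self):
        self.skills = {}  # name -> (bag-of-words vector of the description, program text)

    @staticmethod
    def _vectorize(text):
        return Counter(re.findall(r"[a-z]+", text.lower()))

    @staticmethod
    def _cosine(a, b):
        dot = sum(count * b[token] for token, count in a.items())
        norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
        return dot / norm if norm else 0.0

    def add(self, name, description, program):
        """Store a newly verified skill so later tasks can reuse it."""
        self.skills[name] = (self._vectorize(description), program)

    def retrieve(self, task, k=1):
        """Return the names of the k skills whose descriptions best match the new task."""
        query = self._vectorize(task)
        ranked = sorted(self.skills.items(),
                        key=lambda item: self._cosine(query, item[1][0]),
                        reverse=True)
        return [name for name, _ in ranked[:k]]


if __name__ == "__main__":
    library = SkillLibrary()
    library.add("open_drawer", "pull the drawer handle to open the drawer", "grip(handle); pull(0.2)")
    library.add("pick_cup", "grasp the cup and lift it", "grip(cup); lift(0.1)")
    library.add("wipe_table", "wipe the table surface with a cloth", "grip(cloth); sweep(table)")
    print(library.retrieve("open the top drawer"))
```

The point of the design is that memory grows with experience: each successful task adds a reusable entry, and retrieval, not retraining, is what lets the agent "remember the past."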

Global Landscape of Embodied Intelligence: Coopetition

The global robotics industry is in a phase of "cooperation-led, competition-intensifying." China's supply chain efficiency, America's AI capabilities, Japan's component precision, and Europe's industrial standards collectively shape the long-term landscape of robotics.

- The U.S. leads in frontier AI models and software (DeepMind, OpenAI, NVIDIA), but this advantage has not extended to robotics hardware, where Chinese manufacturers outperform in iteration speed and real-world performance. The U.S. is pushing industrial reshoring through the CHIPS Act and the Inflation Reduction Act (IRA).
- China leverages mass manufacturing, vertical integration, and policy support to lead in components, automated factories, and humanoid robots, with strong hardware and supply-chain capabilities. Unitree and UBTECH have achieved mass production and are expanding into intelligent decision-making layers. However, China still lags the U.S. significantly in algorithms and simulation training.
- Japan has long dominated high-precision components and motion-control technology and has a robust industrial system, but AI model integration is at an early stage and innovation is progressing cautiously.
- South Korea leads in consumer robot adoption, driven by companies such as LG and NAVER Labs, and has a mature service robotics ecosystem.
- Europe has well-developed engineering systems and safety standards and active R&D players such as 1X Robotics, though some manufacturing has moved offshore and innovation focuses more on collaboration and standardization.

Robotics × AI × Web3: Narrative Vision and Real Pathways

In 2025, the Web3 industry sees new narratives emerging around convergence with robotics and AI. Although Web3 is envisioned as the underlying protocol for a decentralized machine economy, its feasibility and value vary significantly across layers:

- Hardware manufacturing and service layers are capital-intensive and have weak data closed loops; Web3 currently plays only an auxiliary role at the edges, such as supply-chain finance or equipment leasing;
- Simulation and software ecosystems are more compatible: simulation data and training tasks can be tokenized on-chain, and agents or skill modules can be assetized via NFTs or Agent Tokens;
- At the platform layer, decentralized labor and collaboration networks show the greatest potential: Web3 can gradually build a trusted "machine labor market" through integrated identity, incentive, and governance mechanisms, laying the institutional groundwork for the future machine economy.

In the long term, collaboration and platform layers represent the most valuable directions for Web3’s integration with robotics and AI. As robots gain perception, language, and learning abilities, they evolve into intelligent entities capable of autonomous decision-making, collaboration, and economic value creation. For these "intelligent workers" to fully participate in the economy, four core thresholds must be crossed: identity, trust, incentives, and governance.

- At the identity layer, machines need verifiable, traceable digital identities. With a Machine DID, each robot, sensor, or drone can hold a unique, on-chain verifiable "ID card" linked to its ownership, behavior records, and permission scopes, enabling secure interaction and accountability.
- At the trust layer, the key is making "machine labor" verifiable, measurable, and priceable. Smart contracts, oracles, and audit mechanisms, combined with Proof of Physical Work (PoPW), Trusted Execution Environments (TEE), and Zero-Knowledge Proofs (ZKP), can ensure that task execution is authentic and traceable, giving machine actions economic accounting value.
- At the incentive layer, Web3 enables automatic settlement and value transfer between machines through token incentives, account abstraction, and state channels. Robots can make micropayments for compute leasing and data sharing, with staking and penalty mechanisms to ensure task fulfillment. With smart contracts and oracles, a decentralized "machine collaboration market" can emerge without human orchestration.
- At the governance layer, once machines achieve long-term autonomy, Web3 offers transparent, programmable governance frameworks: DAOs for collective parameter decisions, and multi-sig and reputation systems for security and order. In the long run, this could move machine societies toward "algorithmic governance," where humans set goals and boundaries while machines maintain incentives and equilibrium through contracts.

The ultimate vision of Web3–robotics convergence has two parts. The first is a real-world evaluation network: a "physical-world reasoning engine" in which distributed robots continuously test and benchmark model capabilities across diverse, complex physical settings. The second is a robot labor market, in which robots perform verifiable real-world tasks globally, earn income through on-chain settlement, and reinvest the proceeds in compute or hardware upgrades.
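The identity, trust, and incentive mechanisms described earlier can be combined into one sketch of how a single task in such a labor market might settle. Everything here is hypothetical and off-chain: `did:example` is the placeholder method used in W3C DID examples, the public key is just bytes, the `verified` flag stands in for an oracle, PoPW, TEE attestation, or zero-knowledge check, and a real deployment would be a smart contract rather than a Python class.

```python
from __future__ import annotations

import hashlib


def machine_did(public_key: bytes) -> str:
    """Identity: a stable identifier derived from the robot's public key bytes."""
    return "did:example:" + hashlib.sha256(public_key).hexdigest()[:32]


def commit(result: bytes) -> str:
    """Trust: a hash commitment the robot publishes before revealing its result."""
    return hashlib.sha256(result).hexdigest()


class TaskEscrow:
    """Incentive: requester locks a reward, robot locks a stake, settlement pays or slashes."""

    def __init__(self, requester: str, reward: int, stake_required: int):
        self.requester = requester
        self.reward = reward                  # integer token units, never floats for money
        self.stake_required = stake_required
        self.worker = None                    # DID of the robot that accepts the task
        self.stake = 0
        self.commitment = None
        self.settled = False

    def accept(self, worker_did: str, stake: int) -> None:
        if self.worker is not None:
            raise ValueError("task already accepted")
        if stake < self.stake_required:
            raise ValueError("stake below requirement")
        self.worker, self.stake = worker_did, stake

    def submit(self, worker_did: str, commitment: str) -> None:
        if worker_did != self.worker:
            raise PermissionError("only the accepted worker can submit")
        self.commitment = commitment

    def settle(self, revealed_result: bytes, verified: bool) -> dict[str, int]:
        """`verified` stands in for an external check; a mismatched reveal also fails."""
        if self.settled or self.commitment is None:
            raise RuntimeError("nothing to settle")
        honest = commit(revealed_result) == self.commitment
        winner = self.worker if honest and verified else self.requester
        self.settled = True
        return {winner: self.reward + self.stake}


if __name__ == "__main__":
    robot = machine_did(b"robot-public-key")
    job = TaskEscrow("did:example:requester", reward=100, stake_required=20)
    job.accept(robot, 20)
    job.submit(robot, commit(b"pallet moved to bay 7"))
    print(job.settle(b"pallet moved to bay 7", verified=True))
```

The hash commitment stops a robot from changing its reported result after the fact, while the stake makes a failed or dishonest submission costly; in every outcome the escrow pays out exactly what was locked.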

In reality, however, the integration of embodied intelligence and Web3 remains in early exploration, with decentralized machine economies largely confined to narrative and community-driven efforts. Practical integration pathways with near-term feasibility include three aspects:

(1) Data crowdsourcing and provenance—Web3 uses on-chain incentives and traceability to encourage contributors to upload real-world data; (2) Global long-tail participation—cross-border micropayments and micro-incentives effectively lower data collection and distribution costs; (3) Financialization and collaborative innovation—DAO models can promote robot assetization, revenue tokenization, and inter-machine settlement mechanisms.
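Pathways (1) and (2) can be sketched together: each upload is fingerprinted for provenance, duplicate content earns nothing, and revenue from a dataset sale is split pro rata across accepted contributions in integer micro-units, as a stablecoin payout would be. The record fields, the acceptance flag, and the remainder rule are illustrative assumptions, not any specific project's scheme.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Contribution:
    contributor: str    # wallet address or DID (hypothetical)
    content_hash: str   # SHA-256 fingerprint of the upload, kept for provenance
    accepted: bool      # outcome of quality review


def register(contributor: str, payload: bytes, accepted: bool) -> Contribution:
    return Contribution(contributor, hashlib.sha256(payload).hexdigest(), accepted)


def deduplicate(contributions: list[Contribution]) -> list[Contribution]:
    """Provenance check: only the first upload of identical content earns credit."""
    seen = set()
    unique = []
    for c in contributions:
        if c.content_hash not in seen:
            seen.add(c.content_hash)
            unique.append(c)
    return unique


def split_revenue(contributions: list[Contribution], revenue_units: int) -> dict[str, int]:
    """Pro-rata split in integer units; leftover units go to the earliest contributors,
    so payouts always sum exactly to the revenue."""
    accepted = [c for c in deduplicate(contributions) if c.accepted]
    if not accepted:
        return {}
    counts: dict[str, int] = {}
    for c in accepted:
        counts[c.contributor] = counts.get(c.contributor, 0) + 1
    total = len(accepted)
    payouts = {who: revenue_units * n // total for who, n in counts.items()}
    remainder = revenue_units - sum(payouts.values())  # always fewer than len(counts)
    for who in list(counts)[:remainder]:
        payouts[who] += 1
    return payouts


if __name__ == "__main__":
    uploads = [
        register("alice", b"clip-1", True),
        register("alice", b"clip-2", True),
        register("bob", b"clip-3", True),
        register("carol", b"clip-4", False),
        register("bob", b"clip-1", True),   # duplicate of alice's clip: no credit
    ]
    print(split_revenue(uploads, 100))
```

Integer units avoid floating-point rounding drift, which matters once payouts are split across thousands of long-tail contributors.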

Overall, short-term efforts focus on data collection and incentives; mid-term breakthroughs may occur in "stablecoin payments + long-tail data aggregation" and RaaS assetization and settlement layers; long-term, if humanoid robots achieve widespread adoption, Web3 could become the foundational layer for machine ownership, revenue distribution, and governance, enabling a truly decentralized machine economy.

Web3 Robotics Ecosystem Map and Selected Cases

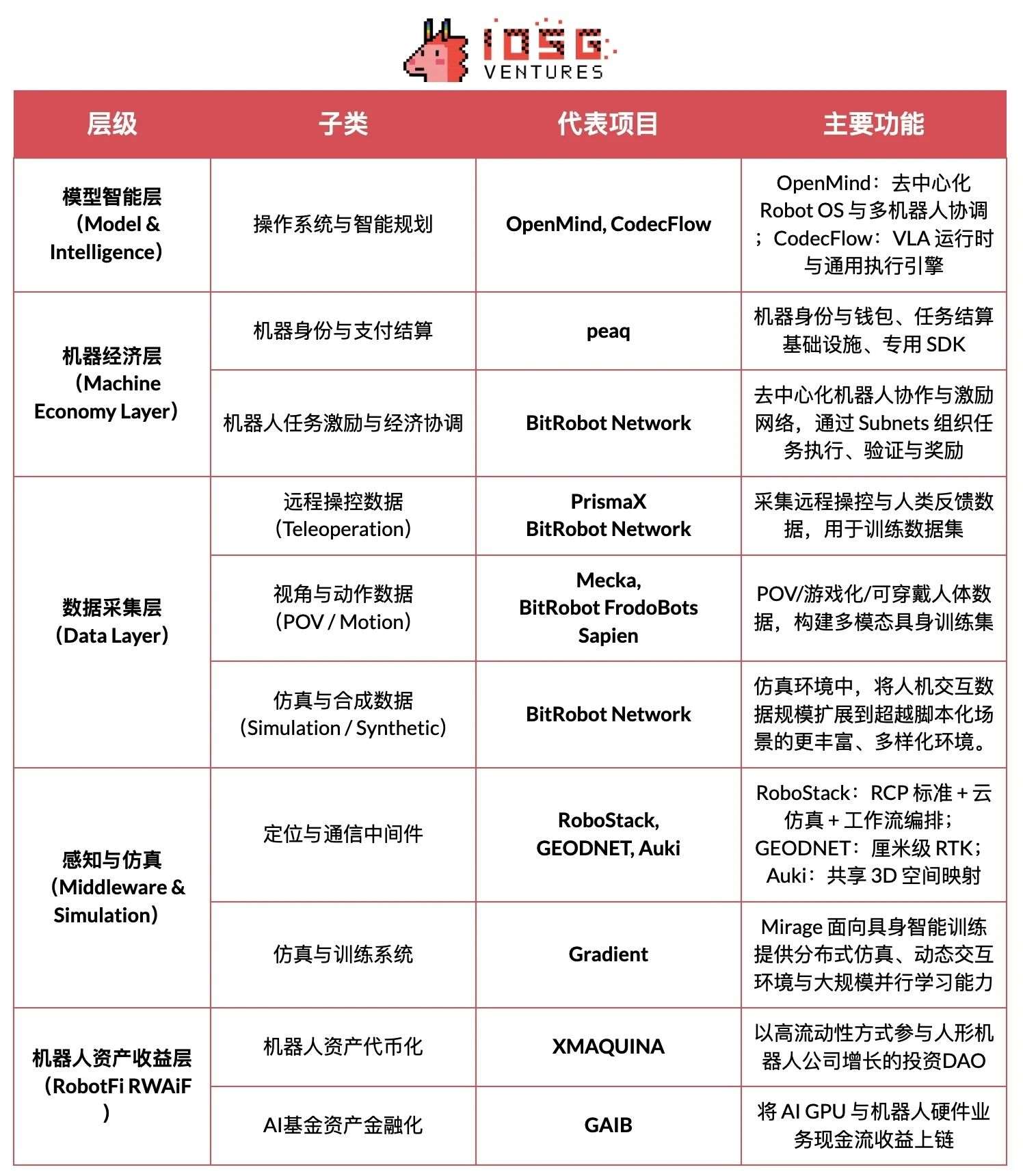

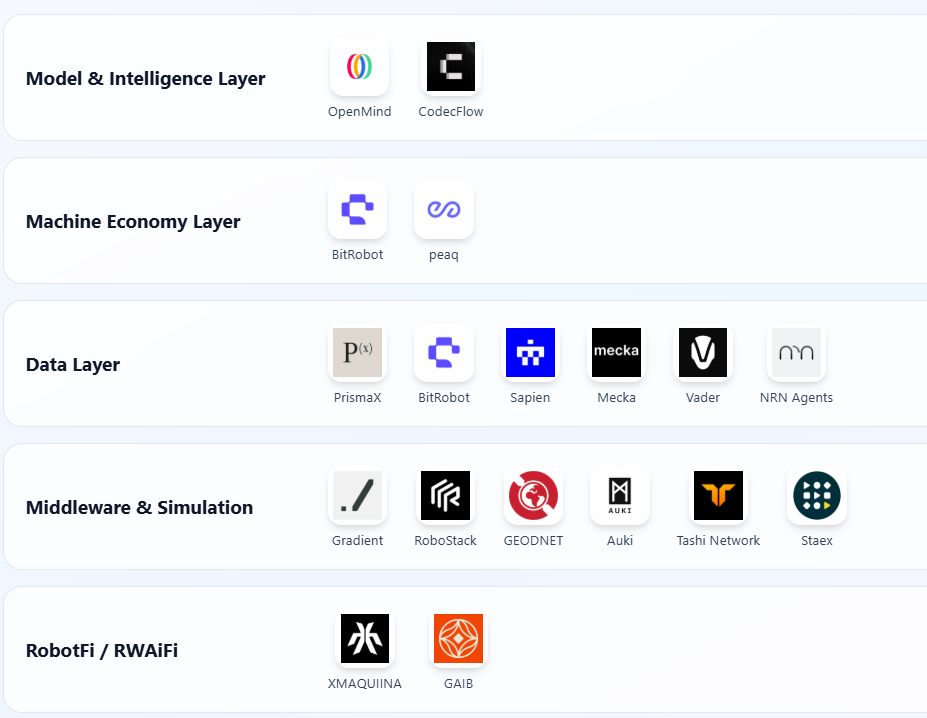

Based on three criteria—"verifiable progress, technical transparency, and industrial relevance"—we map representative Web3 × Robotics projects across a five-layer architecture: Model & Intelligence Layer, Machine Economy Layer, Data Layer, Perception & Simulation Infrastructure Layer, and Robot Asset Yield Layer. To maintain objectivity, we exclude obvious "hype-chasing" or under-documented projects; corrections are welcome.

Model & Intelligence Layer

#OpenMind - Building Android for Robots (https://openmind.org/)

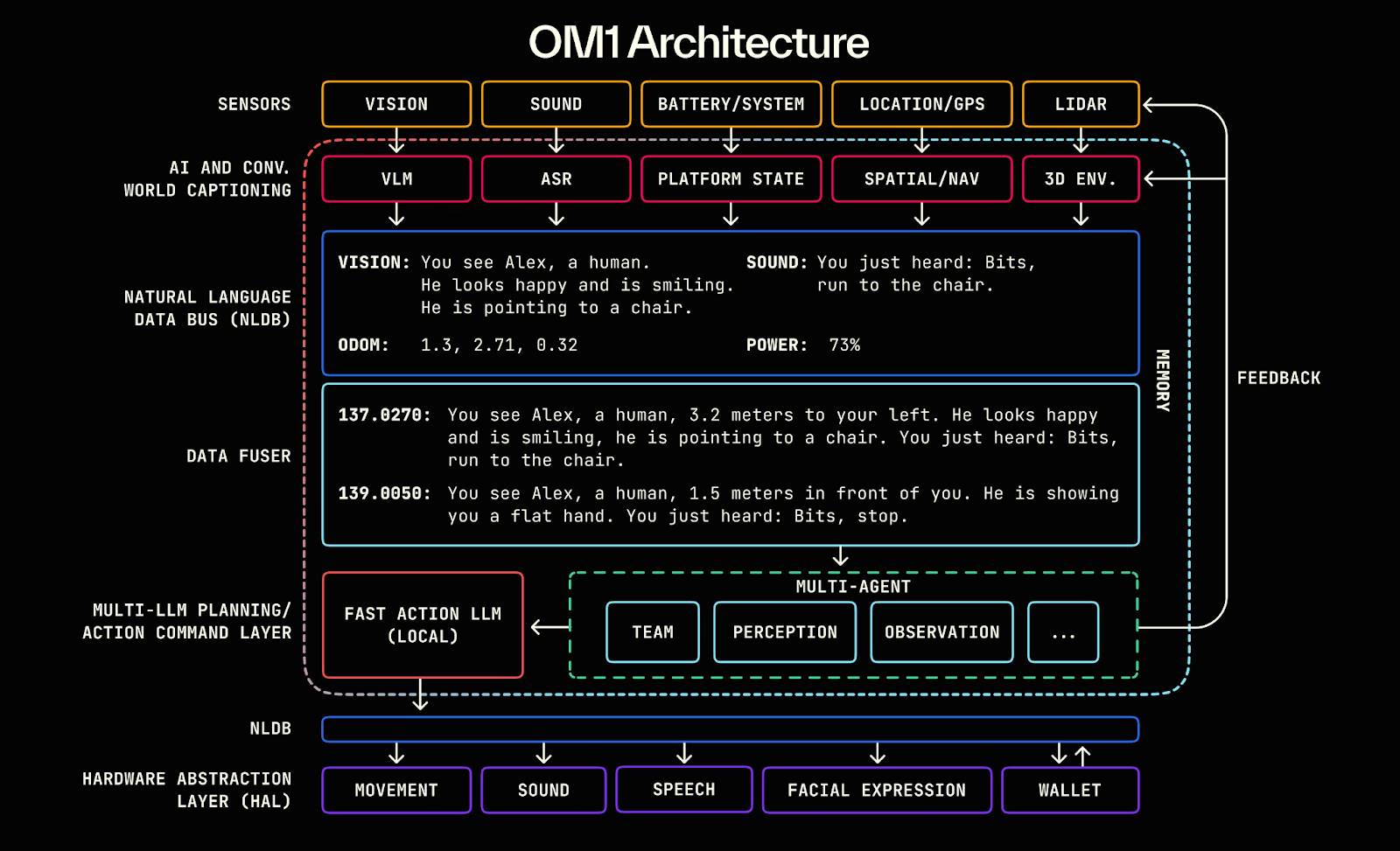

OpenMind is an open-source operating system (Robot OS) targeting embodied AI and robot control, aiming to build the world’s first decentralized robot runtime environment and development platform. The project centers on two core components:

- OM1: a modular, open-source AI agent runtime layer built on ROS2 that orchestrates perception, planning, and action pipelines for both digital and physical robots;
- FABRIC: a distributed coordination layer connecting cloud compute, models, and real robots, enabling developers to control and train robots in a unified environment.

OpenMind’s core function is to serve as intelligent middleware between LLMs (large language models) and the robotics world, turning linguistic intelligence into embodied intelligence and building an intelligent backbone that spans understanding (Language → Action) to alignment (Blockchain → Rules).

OpenMind’s multi-layer system achieves a complete collaborative loop: humans provide feedback and annotations via the OpenMind App (RLHF data), the Fabric Network handles identity verification, task assignment, and settlement coordination, and OM1 Robots execute tasks while adhering to a blockchain-based "robot constitution" for behavioral auditing and payment—enabling a decentralized machine collaboration network spanning human feedback → task collaboration → on-chain settlement.
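The "robot constitution" idea, checking each action against published rules and keeping a tamper-evident record, can be illustrated with a hypothetical sketch. The rule names, action fields, and hash-chained log are assumptions for illustration, not OpenMind's OM1 or Fabric API; a real system would anchor the latest log hash on-chain rather than hold it in memory.

```python
import hashlib
import json

# Hypothetical rules: each maps an action dict to True (allowed) or False (blocked).
CONSTITUTION = {
    "speed_limit": lambda act: act.get("speed_mps", 0.0) <= 1.5,
    "no_restricted_zone": lambda act: act.get("zone") != "restricted",
    "human_override_respected": lambda act: not act.get("override_active", False),
}


class AuditLog:
    """Append-only, hash-chained record: altering any past entry breaks every later hash."""

    def __init__(self):
        self.entries = []      # list of (serialized record, chained hash)
        self.head = "0" * 64   # genesis value

    def append(self, record: dict) -> str:
        body = json.dumps(record, sort_keys=True)
        self.head = hashlib.sha256((self.head + body).encode()).hexdigest()
        self.entries.append((body, self.head))
        return self.head

    def verify(self) -> bool:
        """Recompute the chain from the start and compare against the stored hashes."""
        h = "0" * 64
        for body, stored in self.entries:
            h = hashlib.sha256((h + body).encode()).hexdigest()
            if h != stored:
                return False
        return True


def guarded_execute(action: dict, log: AuditLog) -> bool:
    """Check every rule, log the decision, and report whether the action may run."""
    violations = sorted(name for name, rule in CONSTITUTION.items() if not rule(action))
    log.append({"action": action, "allowed": not violations, "violations": violations})
    return not violations


if __name__ == "__main__":
    log = AuditLog()
    print(guarded_execute({"name": "move", "speed_mps": 0.5, "zone": "lab"}, log))
    print(guarded_execute({"name": "move", "speed_mps": 2.0, "zone": "restricted"}, log))
    print(log.verify())
```

Blocked actions are logged as well as allowed ones, so an auditor can see not only what a robot did but also what it refused to do and why.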

Project Progress and Reality Assessment

OpenMind is in an early stage of "technically operational but commercially unproven." The core OM1 Runtime is open-sourced on GitHub, runs across platforms, supports multimodal inputs, and uses a Natural Language Data Bus (NLDB) to achieve language-to-action task understanding—highly original but still experimental. The Fabric Network and on-chain settlement are only at the interface design stage.

Ecosystem-wise, the project collaborates with open hardware platforms such as Unitree, UBTECH, and TurtleBot, and with partners such as Stanford, Oxford, and Seoul Robotics, mainly for education and research validation; there is no industrial deployment yet. The app has a test version, but its incentive and task functions remain immature.

Commercially, OpenMind envisions a three-layer ecosystem: OM1 (open system) + Fabric (settlement protocol) + Skill Marketplace (incentive layer). It has no revenue yet and relies on roughly $20 million in early funding (Pantera, Coinbase Ventures, DCG). Overall, it is technologically advanced but commercially nascent. If Fabric succeeds, OpenMind could become the "Android of the embodied intelligence era," though the timeline is long, the risks are high, and hardware dependency is strong.

#CodecFlow - The Execution Engine for Robotics (https://codecflow.ai)

CodecFlow is a decentralized execution layer protocol (Fabric) built on the Solana network, designed to provide on-demand runtime environments for AI agents and robotic systems—giving every agent an "instant machine." The project consists of three core modules:

- Fabric: a cross-cloud compute aggregation layer (Weaver + Shuttle + Gauge) that spins up secure VMs, GPU containers, or robot control nodes within seconds for AI tasks;
- optr SDK: an agent execution framework (Python API) for creating "Operators" that can manipulate desktops, simulations, or real robots;
- Token Incentives: an on-chain incentive and payment layer connecting compute providers, agent developers, and automation users, forming a decentralized compute and task market.

CodecFlow’s core goal is to create a "decentralized execution base for AI and robot operators," enabling any agent to run securely in any environment (Windows/Linux/ROS/MuJoCo/robot controllers), establishing a universal execution architecture from compute orchestration (Fabric) → system environment (System Layer) → perception and action (VLA Operator).

Project Progress and Reality Assessment

Early versions of the Fabric framework (Go) and the optr SDK (Python) have been released and can launch isolated compute instances via a web interface or CLI. The Operator marketplace is expected to launch by the end of 2025. CodecFlow positions itself as a decentralized execution layer for AI, primarily serving AI developers, robotics research teams, and automation companies.

Machine Economy Layer

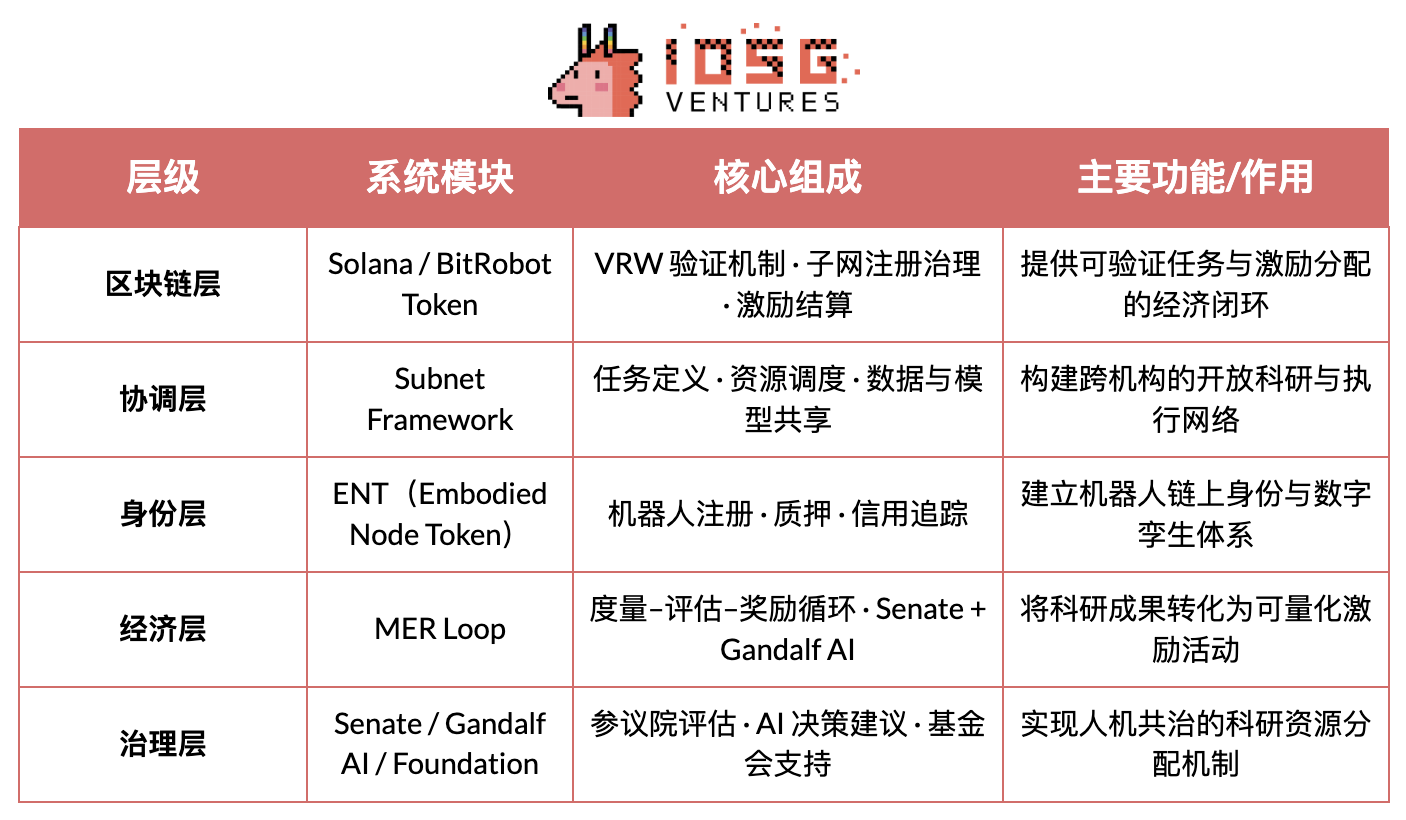

#BitRobot - The World’s Open Robotics Lab (https://bitrobot.ai)

BitRobot is a decentralized research and collaboration network (Open Robotics Lab) for embodied AI and robotics R&D, co-launched by FrodoBots Labs and Protocol Labs. Its core vision is to enable open scientific collaboration through a "subnet (Subnets) + incentives + Verifiable Robotic Work (VRW)" architecture. Key functions include:

- Defining and verifying the real contribution of each robot task via the VRW (Verifiable Robotic Work) standard;
- Granting robots on-chain identity and economic responsibility via the Embodied Node Token (ENT);
- Organizing cross-regional collaboration in research, compute, equipment, and operators via Subnets;
- Enabling "human-machine co-governance" of incentive decisions and research governance via Senate + Gandalf AI.

Since releasing its whitepaper in 2025, BitRobot has operated multiple subnets (e.g., SN/01 ET Fugi, SN/05 SeeSaw by Virtuals Protocol), achieving decentralized teleoperation and real-world data collection, and launched a $5M Grand Challenges fund to drive global model development competitions.

#peaq – The Economy of Things (https://www.peaq.network)

peaq is a Layer-1 blockchain purpose-built for the machine economy, providing foundational capabilities for millions of robots and devices—machine identity, on-chain wallets, access control, and nanosecond-level time synchronization (Universal Machine Time). Its Robotics SDK allows developers to make robots "machine-economy-ready" with minimal code, enabling cross-vendor, cross-system interoperability and interaction.
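Machine time synchronization generally starts from timestamped request/response exchanges. The sketch below applies the standard NTP-style offset and delay formulas to made-up nanosecond timestamps. It is not peaq's Universal Machine Time protocol, whose details are not described here; in practice, nanosecond-level accuracy typically depends on hardware timestamping (as in IEEE 1588 PTP), not on the arithmetic alone.

```python
from __future__ import annotations


def offset_and_delay(t0: int, t1: int, t2: int, t3: int) -> tuple[float, int]:
    """NTP-style estimate from one request/response exchange (all times in nanoseconds).

    t0: client sends request (client clock)
    t1: server receives request (server clock)
    t2: server sends response (server clock)
    t3: client receives response (client clock)
    """
    offset = ((t1 - t0) + (t2 - t3)) / 2   # how far the server clock is ahead of the client
    delay = (t3 - t0) - (t2 - t1)          # round-trip network time, excluding server processing
    return offset, delay


def best_offset(samples: list[tuple[int, int, int, int]]) -> float:
    """Trust the exchange with the smallest round-trip delay; it is least distorted by queuing."""
    return min((offset_and_delay(*s) for s in samples), key=lambda od: od[1])[0]


if __name__ == "__main__":
    # Server clock 500 ns ahead; 200 ns each way; 50 ns server processing.
    symmetric = (10_000, 10_700, 10_750, 10_450)
    # Same clocks, but the request was queued: 400 ns out, 200 ns back.
    congested = (20_000, 20_900, 20_950, 20_650)
    print(offset_and_delay(*symmetric))         # (500.0, 400)
    print(offset_and_delay(*congested))         # (600.0, 600): asymmetry biases the estimate
    print(best_offset([symmetric, congested]))  # 500.0
```

The offset formula assumes equal one-way delays, so asymmetric queuing shows up directly as offset error; filtering for the lowest-delay sample is a common, simple mitigation.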

Currently, peaq has launched the world’s first tokenized robot farm and supports over 60 real-world machine applications. Its tokenization framework helps robotics firms raise capital for capital-intensive hardware, expanding participation from traditional B2B/B2C to broader community levels. Supported by a protocol-level incentive pool funded through network fees, peaq subsidizes new device onboarding and developer support, creating an economic flywheel to accelerate robotics and physical AI projects.

Data Layer

This layer focuses on the scarcity and high cost of high-quality real-world data for embodied intelligence training. Projects collect and generate human-robot interaction data through several approaches: teleoperation (PrismaX, BitRobot Network), first-person video and motion capture (Mecka, BitRobot Network, Sapien, Vader, NRN), and simulation/synthetic data (BitRobot Network), providing scalable, generalizable training foundations for robot models.

It must be noted that Web3 does not excel at "producing data": in hardware, algorithms, and collection efficiency, Web2 giants far surpass any DePIN project. Its real value lies in reshaping how data is distributed and incentivized. Built on a "stablecoin payment network + crowdsourcing" model, it enables low-cost micro-settlement, contribution tracing, and automated revenue sharing through permissionless incentives and on-chain provenance. However, open crowdsourcing has not yet closed the loop on quality or demand: data quality varies, and both effective validation and stable buyers are lacking.

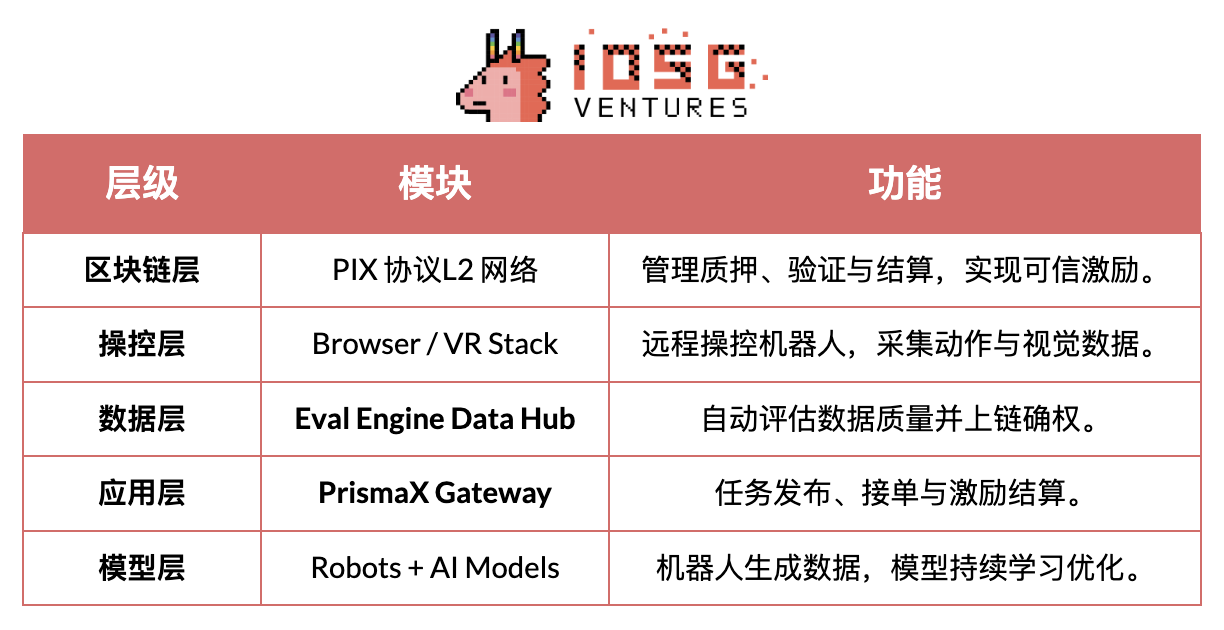

#PrismaX (https://gateway.prismax.ai)

PrismaX is a decentralized teleoperation and data economy network for embodied AI, aiming to build a "global robot labor market" where human operators, robot devices, and AI models co-evolve via on-chain incentives. The project includes two core components:

- Teleoperation Stack: a remote operation system (browser/VR interface + SDK) connecting robotic arms and service robots worldwide for real-time human control and data collection;
- Eval Engine: a data evaluation and validation engine (CLIP + DINOv2 + optical-flow semantic scoring) that generates a quality score for each operation trajectory and enables on-chain settlement.

Through decentralized incentives, PrismaX transforms human operations into machine learning data, creating a closed loop from teleoperation → data collection → model training → on-chain settlement—achieving a circular economy where "human labor is data assets."
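PrismaX's exact scoring formula is not described here; the sketch below only illustrates the general shape of turning several per-trajectory signals into one settlement decision. The semantic score is assumed to come from an external vision-language model (the text mentions CLIP), a simple jerk penalty on sampled gripper positions stands in for optical-flow analysis, and the weights, threshold, and reward are made-up values.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Trajectory:
    positions: list[float]   # gripper position sampled at a fixed rate (1-D for brevity)
    semantic_score: float    # 0..1, assumed to come from an external vision-language model
    task_completed: bool


def smoothness(positions: list[float]) -> float:
    """Map mean absolute jerk (third finite difference) to a score; 1.0 means no jerk.
    Trajectories too short to measure score 0.0."""
    if len(positions) < 4:
        return 0.0
    jerks = [positions[i + 3] - 3 * positions[i + 2] + 3 * positions[i + 1] - positions[i]
             for i in range(len(positions) - 3)]
    mean_jerk = sum(abs(j) for j in jerks) / len(jerks)
    return 1.0 / (1.0 + mean_jerk)


def quality_score(traj: Trajectory, w_semantic: float = 0.6, w_smooth: float = 0.4) -> float:
    """Weighted blend of semantic relevance and motion quality; failed tasks score zero."""
    if not traj.task_completed:
        return 0.0
    return w_semantic * traj.semantic_score + w_smooth * smoothness(traj.positions)


def settle(trajectories: list[Trajectory], threshold: float = 0.7,
           reward_units: int = 10) -> dict[int, int]:
    """Pay a fixed reward for each trajectory (by index) whose score clears the threshold."""
    return {i: reward_units for i, t in enumerate(trajectories) if quality_score(t) >= threshold}


if __name__ == "__main__":
    smooth = Trajectory([0, 1, 2, 3, 4, 5], 0.9, True)
    jittery = Trajectory([0, 1, 0, 1, 0, 1], 0.8, True)
    failed = Trajectory([0, 1, 2, 3, 4, 5], 0.95, False)
    print(settle([smooth, jittery, failed]))
```

Gating payment on a score rather than on mere submission is what ties the incentive to data quality, which is the open-crowdsourcing weakness noted in the Data Layer overview.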

Project Progress and Reality Assessment

PrismaX launched a test version in August 2025 (gateway.prismax.ai), allowing users to remotely operate robotic arms for grasp experiments and generate training data. The Eval Engine is running internally. Overall, PrismaX demonstrates high technical maturity and clear positioning, acting as a key middleware layer connecting "human operation × AI models × blockchain settlement." Its long-term potential lies in becoming the "decentralized labor and data protocol of the embodied intelligence era," though it still faces scalability challenges in the short term.

#BitRobot Network (https://bitrobot.ai/)

BitRobot Network collects multi-source data, including video, teleoperation, and simulation, through its subnets. SN/01 ET Fugi lets users remotely control robots to complete tasks, gathering navigation and perception data through a "real-life Pokémon Go-style" interaction. This gameplay produced the FrodoBots-2K dataset, one of the largest open-source human-robot navigation datasets, which is used by institutions such as UC Berkeley RAIL and Google DeepMind. SN/05 SeeSaw (Virtuals Protocol) crowdsources large-scale first-person video data captured with iPhones in real environments. Other announced subnets, such as RoboCap and Rayvo, focus on collecting first-person video with low-cost physical devices.

#Mecka (https://www.mecka.ai)

Mecka is a robotics data company that crowdsources first-person video, human motion data, and task demonstrations via gamified smartphone apps and custom hardware, building large-scale multimodal datasets to train embodied AI models.

#Sapien (https://www.sapien.io/)

Sapien is a crowdsourcing platform centered on "human motion data driving robot intelligence," collecting human movement, posture, and interaction data via wearable devices and mobile apps to train embodied AI models. The project aims to build the world’s largest human motion data network, turning natural human behavior into foundational data for robot learning and generalization.

#Vader (https://www.vaderai.ai)

Vader crowdsources first-person video and task demonstrations through its real-world MMO app EgoPlay: users record daily activities from a first-person view and earn $VADER rewards. Its ORN data pipeline converts raw POV footage into privacy-processed structured datasets containing action labels and semantic narratives, directly usable for humanoid robot policy training.

#NRN Agents (https://www.nrnagents.ai/)

NRN Agents is a gamified embodied RL data platform that crowdsources human demonstration data through browser-based robot control and simulation competitions. NRN generates long-tail behavioral trajectories through gamified tasks. These trajectories support imitation learning and continual reinforcement learning and serve as scalable data primitives for sim-to-real policy training.
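For readers less familiar with imitation learning, the sketch below shows the basic data transformation a platform like this implies. Human demonstration trajectories are flattened into (observation, action) pairs for behavior cloning, and a trivial nearest-neighbor policy is fit on those pairs. The trajectory format, the 2D observations, the action labels, and the nearest-neighbor "policy" are all illustrative assumptions. The source does not describe NRN's actual data schema or training stack.

```python
import math
from typing import Sequence

Obs = tuple[float, float]  # hypothetical 2D observation, e.g. robot (x, y)
Action = str               # hypothetical discrete action label


def to_bc_pairs(trajectories: Sequence[Sequence[tuple[Obs, Action]]]) -> list[tuple[Obs, Action]]:
    """Flatten demonstration trajectories into supervised (obs, action) pairs."""
    return [step for traj in trajectories for step in traj]


class NearestNeighborPolicy:
    """Minimal behavior-cloning baseline: copy the action of the closest demo state."""

    def __init__(self, pairs: list[tuple[Obs, Action]]):
        if not pairs:
            raise ValueError("need at least one demonstration step")
        self.pairs = pairs

    def act(self, obs: Obs) -> Action:
        # Euclidean distance between the query and each demonstrated observation.
        _, action = min(self.pairs, key=lambda p: math.dist(p[0], obs))
        return action


if __name__ == "__main__":
    demos = [
        [((0.0, 0.0), "forward"), ((0.0, 1.0), "forward"), ((0.0, 2.0), "left")],
        [((1.0, 0.0), "forward"), ((1.0, 1.0), "right")],
    ]
    policy = NearestNeighborPolicy(to_bc_pairs(demos))
    print(policy.act((0.1, 1.9)))  # closest demo state is (0.0, 2.0) -> "left"
```

Real pipelines replace the lookup with a learned network. The point of the sketch is that long-tail demonstrations matter because a cloned policy can only imitate states that someone has actually demonstrated.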

▲ Comparison of Embodied Intelligence Data Collection Projects

Perception and Simulation (Middleware & Simulation)

This layer provides the core infrastructure that connects the physical world to intelligent decision-making. It covers capabilities such as localization, communication, spatial modeling, and simulation training, and serves as the "middleware skeleton" for large-scale embodied intelligence systems. The layer is still in early exploration. Projects are differentiating across high-precision localization, shared spatial computing, protocol standardization, and distributed simulation, and no unified standard or interoperable ecosystem has emerged yet.

Middleware & Spatial Infrastructure

Core robotic capabilities such as navigation, localization, connectivity, and spatial modeling form the critical bridge between the physical world and intelligent decision-making. Broader DePIN projects (Silencio, WeatherXM, DIMO) have begun to mention "robotics," but the projects below are the most directly relevant to embodied intelligence.

#RoboStack – Cloud-Native Robot Operating Stack (https://robostack.io)

RoboStack is a cloud-native robotics middleware that enables real-time task scheduling, remote control, and cross-platform interoperability via RCP (Robot Context Protocol), offering cloud simulation, workflow orchestration, and agent integration.

#GEODNET – Decentralized GNSS Network (https://geodnet.com)

GEODNET is a global decentralized GNSS network providing centimeter-level RTK high-precision positioning. Through distributed base stations and on-chain incentives, it delivers a real-time "geospatial reference layer" for drones, autonomous vehicles, and robots.
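The following sketch illustrates the idea behind a base-station network with a deliberately simplified, position-domain differential correction. A base station at a precisely surveyed location measures its own GNSS position, and the difference from its true position is treated as the shared error. A nearby rover then subtracts that error, on the assumption that atmospheric and orbit errors are strongly correlated over short baselines. Real RTK works on carrier-phase observations and resolves integer ambiguities, so this is only a conceptual sketch. The local east/north/up coordinates are hypothetical and do not reflect GEODNET's API or correction format.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ENU:
    """Local east/north/up coordinates in meters (hypothetical local frame)."""
    e: float
    n: float
    u: float

    def __sub__(self, other: "ENU") -> "ENU":
        return ENU(self.e - other.e, self.n - other.n, self.u - other.u)


def base_correction(surveyed: ENU, measured: ENU) -> ENU:
    """Error observed at the base station: measured minus known true position."""
    return measured - surveyed


def correct_rover(rover_measured: ENU, correction: ENU) -> ENU:
    """Remove the shared error, assuming the rover sees the same error as the base."""
    return rover_measured - correction


if __name__ == "__main__":
    correction = base_correction(ENU(0.0, 0.0, 0.0), ENU(1.2, -0.8, 2.0))
    rover = correct_rover(ENU(101.25, 49.15, 12.05), correction)
    print(rover)  # roughly ENU(e=100.05, n=49.95, u=10.05)
```

A dense network of base stations keeps every rover close to some base, which is what keeps the shared-error assumption valid.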

#Auki – Posemesh for Spatial Computing (https://www.auki.com)

Auki builds a decentralized Posemesh spatial computing network that uses crowdsourced sensors and compute nodes to generate real-time 3D maps of the environment. These maps provide a shared spatial reference for AR, robot navigation, and multi-device collaboration. Posemesh is a key piece of infrastructure linking virtual and physical spaces and advances the convergence of AR and robotics.
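A shared spatial reference matters because each device tracks itself in its own local frame. The sketch below shows one common approach under simplifying assumptions: 2D poses, a single shared anchor that both devices have already localized, and no noise. A point is first expressed relative to the anchor and then re-projected into the other device's frame. The `Pose2D` type and the function names are hypothetical and are not part of Auki's Posemesh API.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Pose2D:
    """Pose of the shared anchor as seen in one device's local frame (hypothetical)."""
    x: float
    y: float
    theta: float  # heading in radians

    def to_local(self, px: float, py: float) -> tuple[float, float]:
        """Map a point from anchor coordinates into this device's frame: t + R q."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (self.x + c * px - s * py, self.y + s * px + c * py)

    def to_anchor(self, px: float, py: float) -> tuple[float, float]:
        """Map a point from this device's frame into anchor coordinates: R^T (p - t)."""
        dx, dy = px - self.x, py - self.y
        c, s = math.cos(self.theta), math.sin(self.theta)
        return (c * dx + s * dy, -s * dx + c * dy)


def transfer(point_in_a: tuple[float, float], anchor_in_a: Pose2D,
             anchor_in_b: Pose2D) -> tuple[float, float]:
    """Re-express a point observed by device A in device B's local frame."""
    ax, ay = anchor_in_a.to_anchor(*point_in_a)
    return anchor_in_b.to_local(ax, ay)


if __name__ == "__main__":
    # Device A sees the anchor at (2, 0) facing +x; device B sees it at its origin, rotated 90 degrees.
    print(transfer((3.0, 0.0), Pose2D(2.0, 0.0, 0.0), Pose2D(0.0, 0.0, math.pi / 2)))
```

The point one meter ahead of the anchor in A's frame lands at approximately (0, 1) in B's frame. This lets two robots agree on where an object is without sharing a global coordinate system.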

#Tashi Network – Real-Time Mesh Collaboration Network for Robots (https://tashi.network)

Tashi Network is a decentralized real-time mesh network that achieves sub-30 ms consensus, low-latency sensor exchange, and multi-robot state synchronization. Its MeshNet SDK supports shared SLAM, swarm collaboration, and robust map updates, providing a high-performance real-time collaboration layer for embodied AI.
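Multi-robot state synchronization ultimately requires every robot to converge on the same view of shared state. The sketch below is not Tashi's consensus protocol, which the source does not detail. It swaps in a simpler technique: a last-writer-wins map keyed by robot ID, using logical timestamps and a deterministic tie-breaker. This shows why replicas that receive the same updates in any order end up with identical state. All names and fields are hypothetical, and each `(timestamp, origin)` pair is assumed to be unique.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StateUpdate:
    robot_id: str
    timestamp: int              # logical clock value from the sender (hypothetical)
    origin: str                 # node that produced the update, used to break ties
    pose: tuple[float, float]


class LWWStateMap:
    """Last-writer-wins map: merging is commutative, associative, and idempotent."""

    def __init__(self) -> None:
        self.entries: dict[str, StateUpdate] = {}

    @staticmethod
    def _newer(a: StateUpdate, b: StateUpdate) -> bool:
        # Higher timestamp wins; equal timestamps are ordered by origin ID.
        # Assumes (timestamp, origin) pairs are unique across all updates.
        return (a.timestamp, a.origin) > (b.timestamp, b.origin)

    def apply(self, update: StateUpdate) -> None:
        current = self.entries.get(update.robot_id)
        if current is None or self._newer(update, current):
            self.entries[update.robot_id] = update

    def merge(self, other: "LWWStateMap") -> None:
        """Fold another replica's state into this one."""
        for update in other.entries.values():
            self.apply(update)


if __name__ == "__main__":
    a, b = LWWStateMap(), LWWStateMap()
    updates = [
        StateUpdate("r1", 1, "nodeA", (0.0, 0.0)),
        StateUpdate("r1", 2, "nodeB", (1.0, 0.0)),
        StateUpdate("r2", 1, "nodeA", (5.0, 5.0)),
    ]
    for u in updates:
        a.apply(u)
    for u in reversed(updates):
        b.apply(u)
    print(a.entries == b.entries)  # same final state despite different delivery order
```

This kind of convergent merge tolerates reordering but says nothing about latency. A real-time consensus layer of the kind described above additionally bounds how quickly all robots agree.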

#Staex – Decentralized Connectivity & Telemetry Network (https://www.staex.io)

Staex is a decentralized connectivity layer that originated in Deutsche Telekom’s R&D division. It provides secure communication, trusted telemetry, and device-to-cloud routing, enabling reliable data exchange and cross-operator collaboration among robot fleets.

Distributed Simulation & Training Systems

#Gradient – Towards Open Intelligence (https://gradient.network/)

Gradient is an AI lab dedicated to "open intelligence," aiming to achieve distributed training, inference, validation, and simulation on decentralized infrastructure. Its current tech stack includes Parallax (distributed inference), Echo (distributed RL and multi-agent training), and Gradient Cloud (enterprise AI solutions). In robotics, its Mirage platform provides distributed simulation, dynamic interactive environments, and large-scale parallel learning to accelerate the training of world models and general-purpose policies. Mirage is also exploring a potential collaboration with NVIDIA on NVIDIA's Newton physics engine.

Robot Asset Yield Layer (RobotFi / RWAiFi)

This layer focuses on transforming robots from "productive tools" into "financializable assets" through asset tokenization, revenue distribution, and decentralized governance—building the financial infrastructure of the machine economy. Representative projects include:

#XmaquinaDAO – Physical AI DAO (https://www.xmaquina.io)

XMAQUINA is a decentralized ecosystem that gives users worldwide liquid access to leading humanoid robot and embodied AI companies, bringing opportunities once reserved for VCs on-chain. Its token, DEUS, serves both as a liquid index asset and as a governance vehicle for treasury allocation and ecosystem development. Through the DAO Portal and the Machine Economy Launchpad, the community can jointly hold and support emerging Physical AI projects via tokenized machine assets and structured on-chain participation.

#GAIB – The Economic Layer for AI Infrastructure (https://gaib.ai/)

GAIB aims to provide a unified economic layer for physical AI infrastructure like GPUs and robots, connecting decentralized capital with real AI infrastructure to build a verifiable, composable, revenue-generating intelligent economy.

In robotics, GAIB does not simply "sell robot tokens," but rather financializes robot devices and operational contracts (RaaS, data collection, teleoperation, etc.) on-chain, transforming "real cash flows → composable on-chain yield assets." This system covers hardware financing (leasing/staking), operational cash flows (RaaS/data services), and data revenue (licensing/contracts), making robot assets and their cash flows measurable, priceable, and tradable.

GAIB uses AID / sAID as its settlement and yield tokens and aims to keep returns stable through structured risk controls (over-collateralization, reserves, insurance). It plans long-term integration with DeFi derivatives and liquidity markets, forming a financial loop from "robot assets" to "composable yield assets." Its goal is to become the economic backbone of the intelligence era.
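Two of these risk controls, over-collateralization and reserves, can be made concrete with simple arithmetic. The sketch below assumes a hypothetical robot-financing pool. It checks a minimum collateralization ratio, retains a reserve share of each period's operating cash flow (for example, RaaS revenue), distributes the rest to yield-token holders, and computes the resulting annualized yield. The 150% minimum ratio, the 10% reserve rate, and the example figures are illustrative assumptions, not GAIB parameters. The code does not model AID/sAID mechanics or insurance.

```python
from dataclasses import dataclass

MIN_COLLATERAL_RATIO = 1.5  # hypothetical: collateral must be >= 150% of issued tokens
RESERVE_RATE = 0.10         # hypothetical: 10% of cash flow retained as a buffer


@dataclass
class RobotPool:
    collateral_value: float  # value of pledged robots and other collateral
    tokens_issued: float     # outstanding yield-token principal, same unit
    reserve: float = 0.0     # accumulated reserve buffer

    def collateral_ratio(self) -> float:
        return self.collateral_value / self.tokens_issued

    def is_healthy(self) -> bool:
        return self.collateral_ratio() >= MIN_COLLATERAL_RATIO

    def distribute(self, period_cash_flow: float) -> float:
        """Retain the reserve share and return the amount paid to token holders."""
        retained = period_cash_flow * RESERVE_RATE
        self.reserve += retained
        return period_cash_flow - retained


def annualized_yield(payout_per_period: float, principal: float, periods_per_year: int) -> float:
    """Simple (non-compounded) annual yield on principal."""
    return payout_per_period * periods_per_year / principal


if __name__ == "__main__":
    pool = RobotPool(collateral_value=1_500_000.0, tokens_issued=1_000_000.0)
    paid = pool.distribute(10_000.0)  # one month of hypothetical RaaS cash flow
    print(pool.is_healthy(), paid, pool.reserve)
    print(f"{annualized_yield(paid, pool.tokens_issued, 12):.1%}")
```

With these assumptions, the pool sits exactly at the 150% floor, pays out 9,000 per month, adds 1,000 to its reserve, and yields about 10.8% per year. The reserve is what absorbs a bad month without cutting payouts, which is the sense in which such designs try to make returns "stable."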

▲ Web3 Robotics Ecosystem Map: https://fairy-build-97286531.figma.site/

Conclusion and Outlook: Real Challenges and Long-Term Opportunities

In the long term, the convergence of robotics × AI × Web3 aims to build a decentralized machine economy (DeRobot Economy), moving embodied intelligence from "single-machine automation" to networked collaboration that is "verifiable, settleable, and governable." The core logic is a self-sustaining cycle of "token → deployment → data → value redistribution," through which robots, sensors, and compute nodes can establish ownership, transact, and share in profits.

In practice, however, this model is still at an early exploratory stage, far from stable cash flows or a scalable commercial loop. Most projects remain at the narrative level, with limited real-world deployment. Robot manufacturing and operations are capital-intensive, and token incentives alone cannot fund infrastructure expansion. On-chain financial designs offer composability, but they have not solved risk pricing or return realization for real-world assets. The so-called "self-sustaining machine network" therefore remains an ideal whose business model still awaits real-world validation.

- The Model & Intelligence Layer represents the most promising long-term direction. Open-source robot operating systems such as OpenMind aim to break open closed ecosystems and unify multi-robot collaboration and language-to-action interfaces. Their technical vision is clear and their system design comprehensive, but the engineering demands are immense and validation cycles are long. No industry-level positive feedback loop exists yet.

- The Machine Economy Layer is still premature. With few robots deployed in the real world, DID identities and incentive networks struggle to form self-consistent loops. A true "machine labor economy" will emerge only after embodied intelligence is deployed at scale.

- The Data Layer has the lowest barrier to entry and is currently the closest to commercial viability. Embodied intelligence data collection requires high spatiotemporal continuity and precise action semantics, which together determine data quality and reusability. Balancing "crowdsourced scale" against "data reliability" remains the industry's core challenge. PrismaX’s approach, which secures B2B demand first and then distributes and validates tasks, offers a replicable template, but ecosystem scale and data trading will take time to mature.

- The Middleware & Simulation Layer is still in technical validation and lacks unified standards and interoperable ecosystems. Simulation results are hard to standardize for real-world transfer, which limits Sim2Real efficiency.

- The Asset Yield Layer (RobotFi / RWAiFi): Web3 mainly plays a supporting role in supply chain finance, equipment leasing, and investment governance, improving transparency and settlement efficiency rather than reshaping industrial logic.

That said, we believe the intersection of robotics × AI × Web3 still represents the origin point of the next-generation intelligent economic system. It is not only a fusion of technological paradigms but also an opportunity to restructure relations of production. Once machines have identity, incentives, and governance, human-machine collaboration will evolve from localized automation to networked autonomy. In the short term, this direction remains driven by narratives and experiments, but the institutional and incentive frameworks being laid today are building the foundation for the economic order of a future machine society. In the long run, the integration of embodied intelligence and Web3 will redefine the boundaries of value creation, turning agents into truly ownable, collaborative, and economically viable entities.
