Nvidia's growth engine has only one wheel

Nvidia has fallen into a vicious cycle where even slightly exceeding expectations is seen as falling short.

By: Li Yuan

Source: GeekPark

On August 28, China time, Nvidia released its second-quarter fiscal 2026 earnings report.

From a performance standpoint, Nvidia once again delivered an outstanding result:

-

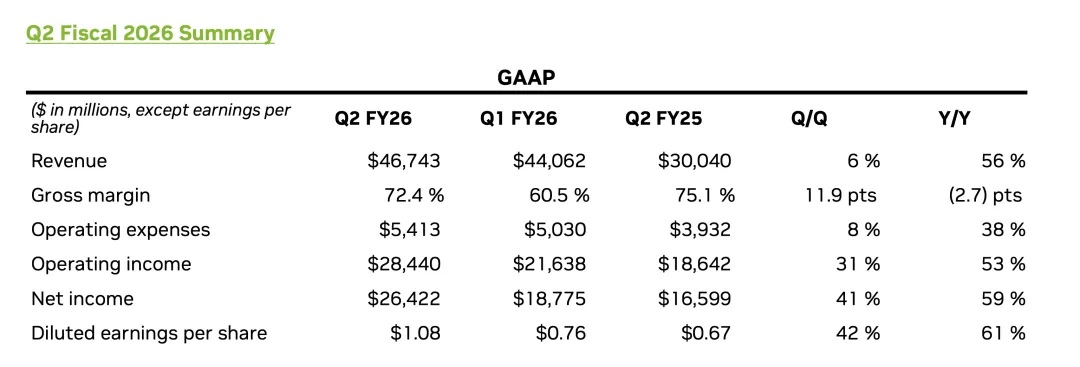

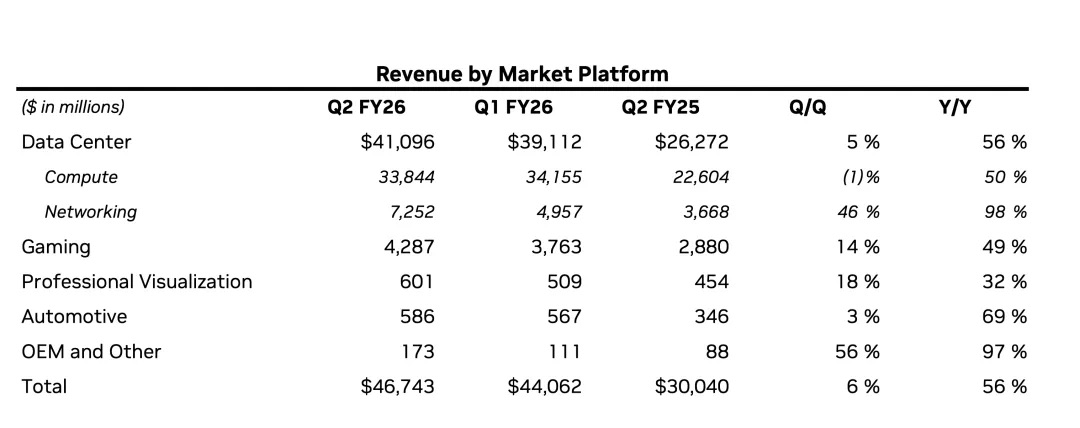

Fiscal Q2 revenue reached $46.743 billion, up 56% year-over-year, slightly exceeding the market's prior expectation of $46.23 billion;

-

Data center business, the core engine, hit a new record with $41.1 billion in revenue, also up 56% year-over-year;

-

Adjusted earnings per share were $1.05, up 54% year-over-year, likewise surpassing expectations.

Yet, despite appearing flawless on the surface, the results failed to fully reassure Wall Street.

The market reacted swiftly and sharply: Nvidia’s stock plunged more than 5% after hours; although the decline narrowed to 3% by the end of after-hours trading, the volatility itself revealed significant concerns.

In today’s market, Nvidia is a singular company: its revenue comes overwhelmingly from AI data centers, and that massive, rapidly growing data center revenue is highly concentrated among a few “whale” clients, namely large cloud service providers and top-tier AI model developers like OpenAI.

This revenue structure means that Nvidia’s growth is deeply “tied” to the capital expenditures and AI strategies of these leading players. Any shifts from them are directly reflected in Nvidia’s financials and market expectations. Nvidia’s stock price has long ceased to be a simple reflection of its own performance—it has become a barometer of confidence for the entire AI market.

Moreover, its extremely high valuation has already priced in the dream of AI taking off spectacularly. The market has fallen into a paradox where “slightly beating expectations” is seen as underwhelming—only overwhelming, massive outperformance can drive gains.

Beneath the surface, a deeper anxiety persists: the fundamental question about AI remains unanswered—is this compute-driven revolution still moving forward relentlessly, requiring heavy investment for early positioning, or is it approaching an era focused on cost reduction and efficiency optimization? No one knows the answer, but everyone fears the party could end at any moment.

Meanwhile, uncertainties around China operations have further amplified this instability. The earnings report shows that Nvidia did not sell H20 chips to China during Q2, nor does its Q3 outlook include related revenues. Although Jensen Huang expressed long-term optimism about the Chinese market during the earnings call, stating that “the possibility of bringing Blackwell to China is real,” and estimating the market opportunity at $50 billion this year, the short-term revenue gap remains tangible.

As the leader of a company standing atop the world, Jensen Huang remains resolute. He has laid out a grand vision for Nvidia—and indeed for the entire AI industry—predicting during the earnings call that by the end of this decade, annual global spending on AI infrastructure will reach $3 trillion to $4 trillion. His gaze is not fixed on quarterly orders, but on a decade-long, AI-driven industrial revolution.

This conviction is also evident in Nvidia’s shareholder returns: this quarter, the company returned $10 billion to shareholders and announced a new $60 billion share repurchase authorization.

The projected growth for next quarter is concrete: guidance for $54 billion in revenue, against $46.743 billion this quarter, implies the company will generate over $7 billion in additional revenue within just three months.

While this outlook slightly exceeds Wall Street’s consensus, it falls well short of some bullish analysts’ expectations of $60 billion. This mix of insatiable market greed for explosive growth and fear of slowing momentum and external risks represents the greatest challenge Nvidia now faces.
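The size of the step implied by the guidance follows directly from the figures quoted above. A minimal sketch of the arithmetic in Python; the variable and function names are illustrative and do not come from the earnings report:

```python
# All figures are in billions of US dollars and come from the article.
q2_revenue = 46.743       # reported fiscal Q2 revenue
q3_guidance = 54.0        # Q3 revenue guidance
bullish_estimate = 60.0   # expectation held by some bullish analysts


def sequential_change(current: float, previous: float) -> tuple[float, float]:
    """Return the absolute and percentage change between two quarters."""
    delta = current - previous
    return delta, delta / previous * 100


added_revenue, growth_pct = sequential_change(q3_guidance, q2_revenue)
gap_to_bulls = bullish_estimate - q3_guidance

print(f"Implied sequential increase: ${added_revenue:.2f}B ({growth_pct:.1f}%)")
print(f"Gap to the bullish estimate: ${gap_to_bulls:.1f}B")
```

On these inputs the guidance implies roughly $7.3 billion of added revenue, about 15.5% sequential growth, while still sitting $6 billion below the most bullish expectations.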

01 Data Center Business Outlook: Chip Transition + Agentic AI

As the absolute core of Nvidia’s empire, the data center business this quarter perfectly illustrates the subtle gap between “excellence” and “market expectations.”

From a numbers perspective, the growth story continues.

-

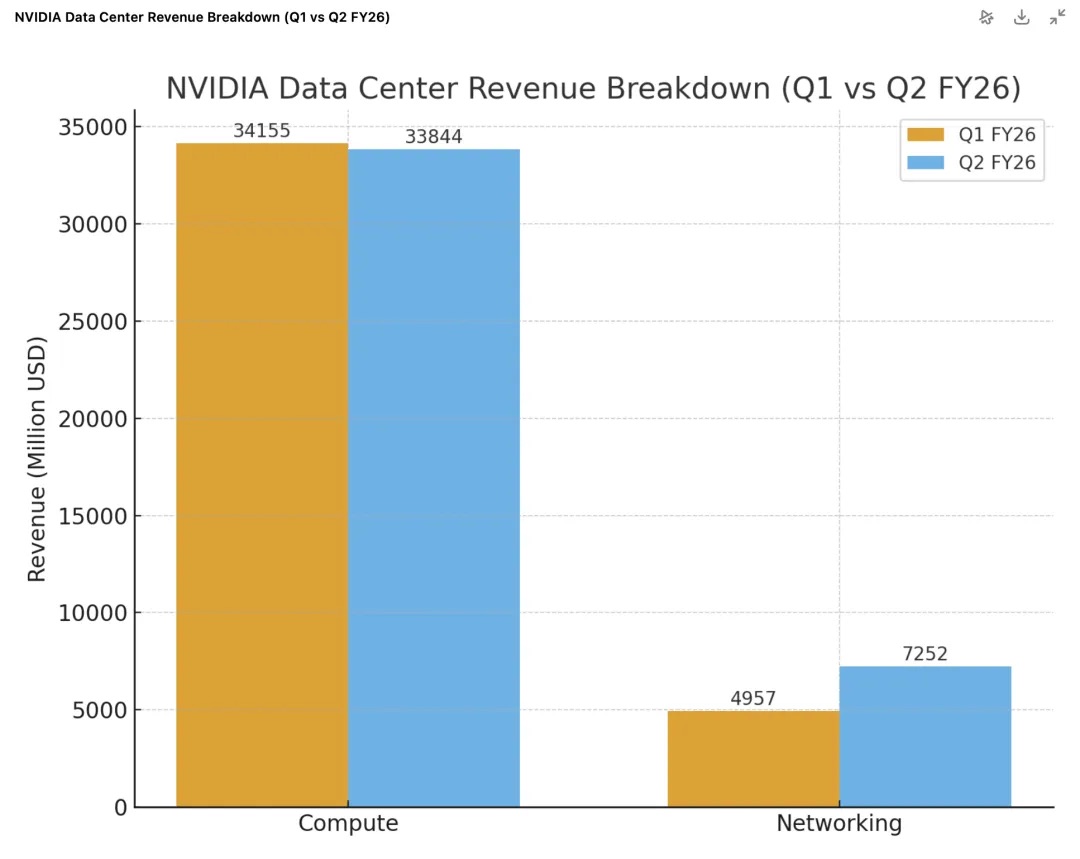

Revenue hits a new high: total data center revenue reached $41.1 billion, up 56% year-over-year and 5% sequentially.

-

Blackwell engine accelerating: next-generation Blackwell architecture products are ramping strongly, with related data center revenue surging 17% sequentially. The flagship GB300 has entered full-scale production, reaching approximately 1,000 racks per week. Meanwhile, the Blackwell Ultra platform has already become a “multi-billion-dollar” product line this quarter, reflecting strong market demand for the new architecture.

-

Networking becomes the “second engine”: networking revenue soared to $7.3 billion this quarter, up 98% year-over-year and 46% sequentially. Notably, Spectrum-X, the AI-optimized Ethernet business, has achieved an annualized revenue exceeding $10 billion.

-

Emerging markets rising fast: “sovereign AI” is becoming a notable growth driver, with Nvidia expecting over $2 billion in revenue this year—more than double last year’s figure.

However, under the market’s microscope, some “flaws” emerged. First, the $41.1 billion in data center revenue narrowly missed the expected $41.3 billion. The shortfall, however, stemmed primarily from a roughly $4 billion drop in H20 chip sales to China, a pattern also seen in Q1.

Luckily, the explosive growth in networking became a key offset to GPU-related pressures. Revenue from networking reached $7.3 billion this quarter, up 98% year-over-year and 46% sequentially, driven largely by strong sales of high-performance networking products like NVLink and InfiniBand tied to the Blackwell platform. These figures clearly show that Nvidia’s success is no longer just about selling standalone GPUs, but rather offering a complete, high-margin “AI factory solution” that includes high-speed interconnect networks.

The core question behind the data is what matters most to investors: can Nvidia sustain high growth at such a massive scale?

In the current landscape, this is hardly a question of “competition.” During the earnings call, Jensen Huang emphasized that because AI models iterate so quickly and the tech stack is so complex, Nvidia’s general-purpose, full-stack platform holds a significant advantage over specialized ASIC chips, so outside competitive pressure is not the decisive factor.

Huang also highlighted the current bottleneck in data center development—power. When power becomes the key constraint on data center revenue, “performance per watt” directly determines profitability. This explains why customers are both willing and compelled to upgrade to Nvidia’s latest, most expensive chips annually. Each new architecture—from Hopper to Blackwell to Rubin—delivers a major leap in performance per watt, so buying new chips is essentially an investment in maximizing revenue potential under limited power resources.
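The logic of a power-limited data center can be made concrete with a toy model. The sketch below assumes, purely for illustration, that a site's revenue scales with the tokens it can serve and that its entire power budget goes to compute; the function name, the efficiency values, and the token price are hypothetical and are not Nvidia figures.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600


def annual_revenue_usd(power_mw: float, tokens_per_joule: float,
                       usd_per_million_tokens: float) -> float:
    """Revenue of a site whose output is capped by its power budget.

    tokens_per_joule is the performance-per-watt figure: one watt is one
    joule per second, so tokens/joule equals tokens/second per watt.
    """
    watts = power_mw * 1_000_000
    tokens_per_year = watts * tokens_per_joule * SECONDS_PER_YEAR
    return tokens_per_year / 1_000_000 * usd_per_million_tokens


# Hypothetical inputs: same 100 MW site, same token price, two chip generations.
old_gen = annual_revenue_usd(100, tokens_per_joule=0.5, usd_per_million_tokens=2.0)
new_gen = annual_revenue_usd(100, tokens_per_joule=1.0, usd_per_million_tokens=2.0)

print(f"Revenue uplift at fixed power: {new_gen / old_gen:.1f}x")
```

With power fixed, revenue in this model moves one-for-one with performance per watt, which is the mechanism Huang describes.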

The real pressure stems from the natural trajectory of AI development—will AI progress continue at this pace?

To this, Huang offered his answer: Reasoning Agentic AI.

He stated during the earnings call:

“Previously, chatbots operated on a ‘single-trigger’ model—you give an instruction, it generates a response. Now, AI can autonomously conduct research, think, formulate plans, and even invoke tools. This process is called ‘deep thinking’… Compared to the ‘single-trigger’ model, reasoning agentic AI models may require 100x, even 1000x more computing power.”

The core logic here is clear: when AI evolves from a simple “Q&A tool” into an autonomous “agent” capable of completing complex tasks, the underlying computational demand will explode exponentially.

For investors, the narrative around Nvidia’s data center business is now clear and layered: current growth is securely carried by the Blackwell platform; the next wave is already underway—Huang explicitly announced that all six new chips of the next-generation Rubin platform have completed tape-out at TSMC and are now in wafer fabrication, preparing for mass production next year.

Whether this growth can be sustained hinges entirely on whether the market believes the agentic AI era will arrive as predicted and rapidly create vast new demand for computing power.

02 On China: Geopolitical Impact Continues

During the earnings call, Jensen Huang reiterated his long-term confidence in the Chinese market, projecting that “China could bring $50 billion in business opportunities this year, with an annual market growth rate of around 50%,” and clearly expressing his desire to “sell newer chips to China.”

The blueprint is optimistic, but the reality in the financials is harsh.

As the primary engine contributing about 88% of revenue, Nvidia’s data center business grew 56% year-over-year this quarter, yet its $41.1 billion revenue fell slightly short of analysts’ $41.29 billion expectation. This marks the second consecutive quarter that the business has missed Wall Street’s targets.

The issue lies in China operations. A deeper breakdown reveals that core GPU computing chips generated $33.8 billion in revenue, down 1% sequentially. This decline was directly caused by zero sales of the H20 “special edition” chip to China this quarter, resulting in a revenue gap of about $4 billion.

To understand this gap, we must revisit policy changes over the past two quarters:

Q1: Policy “Emergency Brake”

- In April this year, the U.S. government mandated that exports of H20 chips to China require prior licensing, effectively freezing Nvidia’s H20 sales in the Chinese market overnight.

- Faced with large inventories and contracts prepared for the Chinese market, the company had to take a $4.5 billion charge. Additionally, $2.5 billion in signed orders could not be delivered due to the new rules.

- Nonetheless, before restrictions fully took effect, Nvidia managed to ship $4.6 billion worth of H20 chips to China. This “last train” sale, though one-time in nature, significantly boosted Q1’s computing business revenue base.

Q2: Revenue “Vacuum Period”

- In Q2, H20 sales to China dropped to zero.

- However, Nvidia found new customers outside China and successfully sold $650 million worth of H20 inventory. As a result, the company reversed $180 million in previously set-aside risk provisions back into profits.

- Overall, H20-related revenue declined by approximately $4 billion compared to Q1. This explains the 1% sequential dip in computing business revenue: it is being compared against a Q1 that included a one-time spike.
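The reconciliation above can be reproduced from the article's own numbers. The sketch below is a rough check, not company-reported data: it assumes Q1 H20 revenue consisted only of the $4.6 billion shipped to China, and it backs out Q1 compute revenue from the rounded 1% decline, so the derived figures are approximate.

```python
# Figures in billions of US dollars, as quoted in the article.
q1_h20_china = 4.6         # H20 shipped to China before the restrictions
q2_h20_china = 0.0         # no H20 sales to China in Q2
q2_h20_other = 0.65        # H20 inventory sold to customers outside China
q2_compute = 33.8          # Q2 compute (GPU) revenue
sequential_change = -0.01  # stated 1% sequential decline (rounded)

# Drop in H20-related revenue from Q1 to Q2.
h20_decline = q1_h20_china - (q2_h20_china + q2_h20_other)

# Derived, not reported: Q1 compute revenue implied by the 1% decline.
q1_compute_implied = q2_compute / (1 + sequential_change)

# Compute revenue with H20 stripped out of both quarters.
q1_ex_h20 = q1_compute_implied - q1_h20_china
q2_ex_h20 = q2_compute - q2_h20_other
growth_ex_h20_pct = (q2_ex_h20 / q1_ex_h20 - 1) * 100

print(f"H20 revenue decline: ${h20_decline:.2f}B")
print(f"Compute growth excluding H20: {growth_ex_h20_pct:.1f}%")
```

Under these assumptions the H20 decline comes to about $3.95 billion, in line with the "approximately $4 billion" cited, and compute revenue excluding H20 grows by roughly 12% sequentially.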

Currently, U.S. export control policies on AI chips remain uncertain. The Trump administration has proposed requiring companies like Nvidia and AMD to remit 15% of their China chip sales revenue to the government, but this has not yet been formalized into regulation.

Given this uncertainty, Nvidia has adopted the most conservative stance in its official guidance—its $54 billion Q3 revenue forecast explicitly assumes zero H20 revenue from China. However, CFO Colette Kress offered a statement with potential upside: she revealed that the company “is awaiting formal regulations from the White House,” and added, “if geopolitical conditions allow, H20 shipments to China in Q3 could generate $2 billion to $5 billion in revenue.”
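The two statements can be combined into a simple scenario range. This sketch assumes that any H20 revenue would simply add to the $54 billion guidance, which is a simplification for illustration; the variable names are hypothetical.

```python
# Figures in billions of US dollars, as quoted in the article.
base_guidance = 54.0           # Q3 guidance, assumes zero H20 revenue from China
h20_upside_range = (2.0, 5.0)  # potential H20 revenue cited by the CFO
bullish_estimate = 60.0        # high-end analyst expectation mentioned earlier

# Assumption: H20 revenue is purely additive to the base guidance.
scenario_low = base_guidance + h20_upside_range[0]
scenario_high = base_guidance + h20_upside_range[1]

print(f"Q3 revenue with H20 upside: ${scenario_low:.0f}B to ${scenario_high:.0f}B")
print(f"Still below bullish estimate: {scenario_high < bullish_estimate}")
```

Even the top of that range, $59 billion, would sit just under the $60 billion that the most bullish analysts had hoped for.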

Whether, when, and what can be sold to the Chinese market is entirely beyond Nvidia’s control—it rests solely on the balance of geopolitical forces.

03 Supporting Roles: Growing Fast, But Not Enough to Sustain a Trillion-Dollar Valuation

When all attention focuses on the data center business, it’s easy to overlook the growth of Nvidia’s other segments. In fact, if viewed independently, each delivers solid performance.

Gaming was the standout supporting act this quarter.

- The segment posted $4.3 billion in revenue, up 49% year-over-year and 14% sequentially, showing strong recovery momentum.

- The growth was driven by new products: the Blackwell-architecture GeForce RTX 5060 became the fastest-selling x60-level GPU in Nvidia’s history upon launch, demonstrating its powerful appeal in the consumer market.

The professional visualization business and the automotive and robotics business are sowing seeds for the future.

- Professional visualization revenue was $601 million, up 32% year-over-year, with high-end RTX workstation GPUs increasingly used in AI-driven workflows such as design, simulation, and industrial digital twins.

- Automotive and robotics revenue reached $586 million, up 69% year-over-year. The key milestone is the start of shipments of DRIVE AGX Thor, the next-generation “supercomputer on wheels” system-on-chip, marking the beginning of commercial payoff in the automotive space.

- In addition, this quarter saw the official launch of its next-generation robotics computing platform, Thor, which achieves an order-of-magnitude improvement in AI performance and energy efficiency over its predecessor. Huang’s rationale is that robotic applications will drive exponential growth in compute demand both on-device and in infrastructure (for training and simulation in Omniverse digital twin platforms), becoming a key long-term driver for data center platforms.

Despite impressive growth rates, however, these businesses are dwarfed by the scale of the data center division.

Gaming revenue of $4.3 billion is only about one-tenth of data center revenue. Combined, professional visualization and automotive and robotics generate around $1.2 billion, negligible next to the $41.1 billion data center giant and almost small enough to qualify as “other income.”
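The imbalance is easy to quantify from the segment figures quoted in this article. A minimal sketch; the "other" line is simply the remainder against total revenue and is not a number given in the article.

```python
# Fiscal Q2 revenue in billions of US dollars, as quoted in the article.
total_revenue = 46.743
segments = {
    "Data center": 41.1,
    "Gaming": 4.3,
    "Professional visualization": 0.601,
    "Automotive and robotics": 0.586,
}

# Remainder not itemized in the article (derived, subject to rounding).
segments["Other (derived)"] = total_revenue - sum(segments.values())

# Each segment's share of total revenue, in percent.
shares = {name: revenue / total_revenue * 100 for name, revenue in segments.items()}
gaming_vs_data_center = segments["Gaming"] / segments["Data center"] * 100
side_businesses = (segments["Professional visualization"]
                   + segments["Automotive and robotics"])

for name, share in shares.items():
    print(f"{name:<28}{share:5.1f}% of revenue")
print(f"Gaming as a share of data center: {gaming_vs_data_center:.1f}%")
print(f"Visualization + automotive: ${side_businesses:.3f}B")
```

Data center comes out at roughly 88% of revenue and gaming at about 9%, with the two smaller segments each a little over 1%.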

This leads to a clear conclusion: in the foreseeable future, none of Nvidia’s “side businesses” can emerge as a second growth pillar rivaling the data center. They are healthy and important, enriching the company’s ecosystem and exploring AI applications in end devices and the physical world.

But for a behemoth whose multi-trillion-dollar valuation depends on generating hundreds of billions in revenue, these segments currently contribute far too little to alleviate the market’s “growth anxiety.”

Nvidia’s stock fate remains firmly tethered to the single “war chariot” of its data center business.
