The Legendary Weapon Journey: How Does MATR1X FIRE Bring a Vast Array of Unique In-Game Items into the Web3 World?

TechFlow Selected



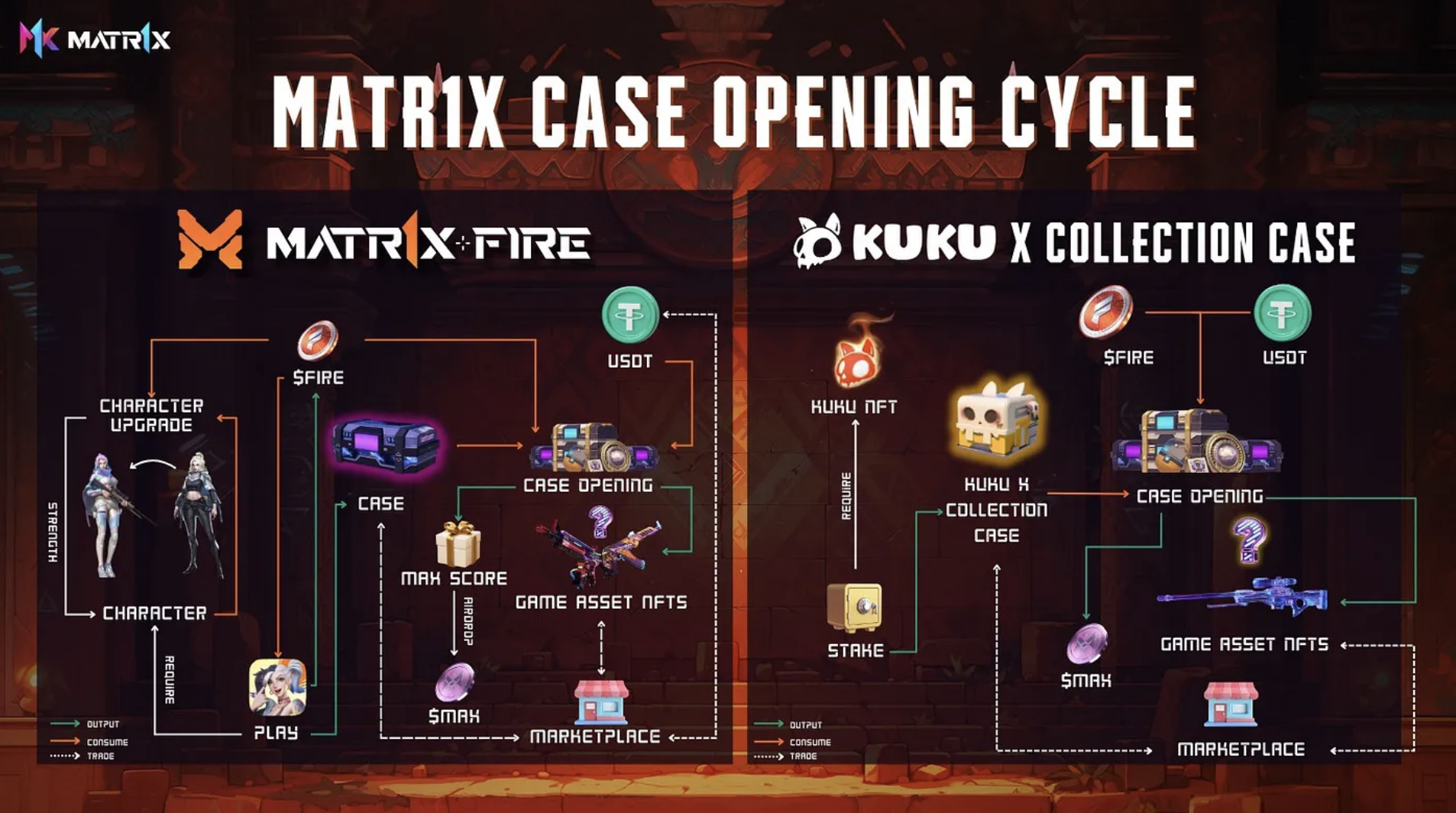

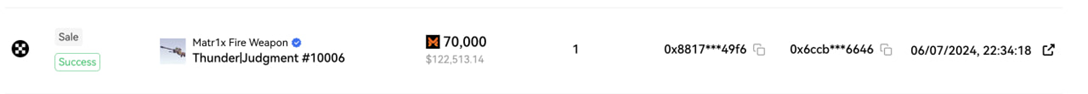

One week after the launch of MATR1X's Apollo Program, a select few lucky users have already obtained its rarest legendary weapon NFT—“Judgment.” The highest recorded sale price for “Judgment” has reached $120,000, sparking widespread discussion in the NFT market and further fueling interest and anticipation around Web3 gaming item NFTs. This article details the creation process behind MATR1X’s legendary weapon NFTs.

Origin of All Things—The Birth of a Legendary Weapon

When the server-side random generator runs, one lucky player draws a legendary weapon with an extremely low drop rate. Inside the generator, the weapon is defined by only a few core parameters: NFT ID, configuration ID, wear level, souvenir status, and sticker set. Once these raw attributes are generated, the weapon’s basic identity is fixed. This raw data alone, however, is not enough to present a legendary weapon to players, and much more work remains to turn it into a meaningful, one-of-a-kind item.
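The generator step above can be sketched as a weighted random draw that produces a small immutable record. This is a minimal illustration, not MATR1X's server code. The names `DROP_TABLE`, `RawWeapon`, and `roll_weapon`, the configuration IDs, the weights, the sticker encoding, and the souvenir chance are all hypothetical. Only the five parameter names come from the article.

```python
import random
from dataclasses import dataclass

# Hypothetical drop table mapping configuration ID -> relative weight.
# The real IDs and drop rates are not published; these are illustrative.
DROP_TABLE = {
    1001: 9_000,  # common weapon (hypothetical)
    2001: 990,    # rare weapon (hypothetical)
    7001: 10,     # legendary weapon with an extremely low weight (hypothetical)
}


@dataclass(frozen=True)
class RawWeapon:
    """The core parameters the generator produces for each drop."""
    nft_id: int
    config_id: int
    wear: float                 # wear level, assumed here to be a float in [0, 1)
    souvenir: bool              # souvenir status
    stickers: tuple[int, ...]   # sticker set as sticker IDs (assumed encoding)


def roll_weapon(rng: random.Random, nft_id: int) -> RawWeapon:
    """Draw one weapon using weighted random selection over DROP_TABLE."""
    config_id = rng.choices(list(DROP_TABLE), weights=list(DROP_TABLE.values()), k=1)[0]
    sticker_count = rng.randint(0, 4)  # hypothetical cap of 4 stickers
    return RawWeapon(
        nft_id=nft_id,
        config_id=config_id,
        wear=rng.random(),
        souvenir=rng.random() < 0.05,  # hypothetical 5% souvenir chance
        stickers=tuple(sorted(rng.sample(range(1, 500), k=sticker_count))),
    )
```

Making the record frozen reflects the article's point that once these attributes are generated, they do not change. Everything downstream (metadata, rendering, in-game assets) is derived from them.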

Creating Something from Nothing—Web3-Oriented NFT Metadata Generation

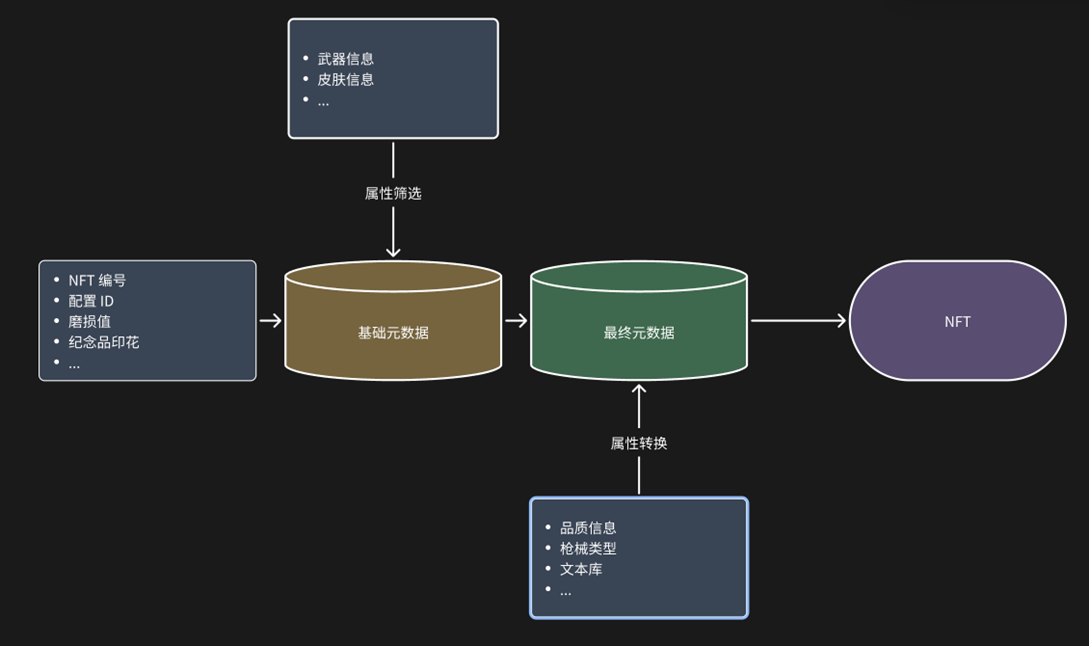

First, we must complete the metadata for this legendary weapon. Using the configuration ID, we look up foundational details, such as weapon type, name, and rarity, across multiple configuration tables. Not all internal data should be exposed in the NFT metadata, however, so an attribute filter selects only the properties intended for players to see, and only those are written into the metadata.
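The lookup-and-filter step above amounts to joining several tables on the configuration ID and then applying a whitelist. The sketch below shows this under stated assumptions. The table contents, field names such as `damage_curve` and `shader_variant`, and the helper `build_raw_metadata` are hypothetical. The real tables and filter rules are not described in the article.

```python
# Hypothetical configuration tables, all keyed by configuration ID.
WEAPON_TABLE = {7001: {"type": 3, "name_key": "weapon.judgment", "rarity": 7, "damage_curve": "dc_07"}}
SKIN_TABLE = {7001: {"skin_key": "skin.judgment", "shader_variant": "legendary_v2"}}
CONFIG_TABLES = (WEAPON_TABLE, SKIN_TABLE)

# Attribute filter: whitelist of properties players are allowed to see.
PUBLIC_ATTRIBUTES = frozenset({"type", "name_key", "rarity", "skin_key"})


def build_raw_metadata(config_id: int, wear: float, souvenir: bool, stickers: list[int]) -> dict:
    """Join the config tables for one weapon and keep only player-visible fields."""
    merged: dict = {}
    for table in CONFIG_TABLES:
        merged.update(table.get(config_id, {}))
    if not merged:
        raise KeyError(f"unknown configuration ID {config_id}")
    visible = {key: value for key, value in merged.items() if key in PUBLIC_ATTRIBUTES}
    # Assumption: per-instance attributes from the generator are always player-facing.
    visible.update(wear=wear, souvenir=souvenir, stickers=list(stickers))
    return visible
```

A whitelist, rather than a blacklist, is the safer design choice here: a new internal column added to a config table stays hidden until someone explicitly marks it as public.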

A second problem is that many weapon attributes are stored as plain numbers; for example, the number 7 represents "Legendary" quality. Raw codes like this mean nothing to players, so we introduced an attribute converter that turns internal values into human-readable text. Besides translating numeric codes into descriptive labels, the converter localizes all descriptions into English, currently the default language. The output of this step is the final, player-facing metadata.
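An attribute converter like the one described can be sketched as two lookups. The first maps a numeric code to a string key. The second maps that key to localized text, so that adding a language only means adding a string table. Only the mapping "7 means Legendary" comes from the article. All other codes, keys, strings, and the function name `convert` are hypothetical.

```python
# Code -> string-key tables. Only 7 -> "Legendary" is from the article;
# the remaining codes and keys are hypothetical.
RARITY_CODES = {5: "rarity.epic", 6: "rarity.mythic", 7: "rarity.legendary"}
TYPE_CODES = {1: "type.pistol", 3: "type.rifle"}

# Localized strings; English is the current default language.
STRINGS = {
    "en": {
        "rarity.epic": "Epic",
        "rarity.mythic": "Mythic",
        "rarity.legendary": "Legendary",
        "type.pistol": "Pistol",
        "type.rifle": "Rifle",
        "weapon.judgment": "Judgment",
    }
}


def convert(raw: dict, lang: str = "en") -> dict:
    """Turn numeric codes and string keys into readable, localized values."""
    text = STRINGS[lang]
    out = dict(raw)  # copy so the internal record is left untouched
    out["rarity"] = text[RARITY_CODES[raw["rarity"]]]
    out["type"] = text[TYPE_CODES[raw["type"]]]
    out["name"] = text[out.pop("name_key")]
    out["souvenir"] = "Yes" if raw["souvenir"] else "No"
    return out
```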

Artistry in Action—Cloud-Based Rendering with Blender

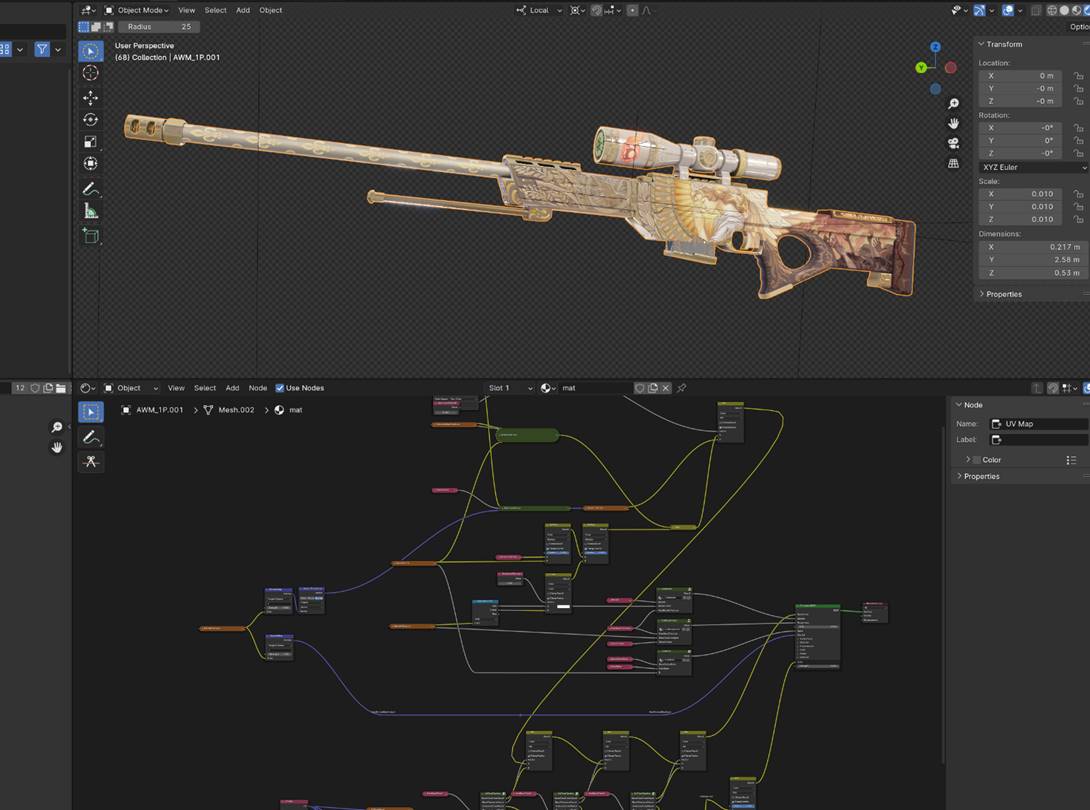

Next comes the most distinctive and important part of the metadata: the weapon’s image. Each weapon has its own wear level and sticker combination, and MATR1X FIRE allows an enormous number of such combinations, so designers cannot draw every variation by hand. To solve this, we developed a Blender-based rendering pipeline and deployed it on cloud servers.

Our technical art team first recreated the game’s Unity material system in Blender, so that rendered images match the in-game assets. On top of this shared material framework, we built conversion tools that move weapons already created in Unity into Blender without rework. Artists also set up several lighting rigs and camera angles in Blender scenes, each tuned for a different viewing perspective, and adjusted post-processing until the renders reached the target quality.
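To make the idea of a shared material framework concrete, here is a minimal sketch of one possible conversion rule. It assumes a Unity Standard-shader-style property set (`_Color`, `_Metallic`, `_Glossiness`, `_MainTex`, `_BumpMap`), not MATR1X's actual custom materials, which the article does not describe. The target is Blender's Principled BSDF inputs. The function name `unity_to_principled` and the `textures` key are hypothetical. The smoothness-to-roughness rule `roughness = 1 - smoothness` is the usual approximation.

```python
# Texture slots: Unity property name -> Principled BSDF input the image feeds.
# In Blender, a normal map would go through a Normal Map node into "Normal".
TEXTURE_SLOTS = {"_MainTex": "Base Color", "_BumpMap": "Normal"}


def unity_to_principled(props: dict) -> dict:
    """Map Unity Standard-style material properties to Principled BSDF values."""
    color = tuple(props.get("_Color", (1.0, 1.0, 1.0, 1.0)))  # RGBA
    smoothness = float(props.get("_Glossiness", 0.5))
    return {
        "inputs": {
            "Base Color": color,
            "Metallic": float(props.get("_Metallic", 0.0)),
            # Unity stores smoothness; Blender's input is roughness.
            "Roughness": 1.0 - smoothness,
        },
        # Keep only textures we know how to route; ignore the rest.
        "textures": {
            TEXTURE_SLOTS[key]: path
            for key, path in props.get("textures", {}).items()
            if key in TEXTURE_SLOTS
        },
    }
```

Inside Blender, a conversion script could then assign each value with `node.inputs[name].default_value = value` on the material's Principled BSDF node. The sketch itself does not import `bpy`, so it runs outside Blender.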

With this pipeline, we can generate resources tailored to each weapon’s metadata and render a unique NFT image that reflects its individual traits, giving every player a truly one-of-a-kind legendary weapon.
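One common way to drive such a pipeline from a server is to run Blender headless and pass per-weapon data to a setup script. The sketch below builds that command using real Blender CLI flags: `-b` (background mode with a .blend file), `-P` (run a Python script), `-o` (output path, where `#` characters are replaced by the frame number), and `-f` (render a frame). Arguments after `--` are ignored by Blender and left in `sys.argv` for the script. The scene file, script name, output path, and helper names are hypothetical. The article does not say how its pipeline invokes Blender.

```python
import json


def build_render_command(blend_file: str, script: str, out_path: str,
                         metadata: dict, blender: str = "blender") -> list[str]:
    """Build a headless Blender command that renders one weapon image.

    Blender processes arguments in order, so the setup script (-P) and the
    output path (-o) must come before the render request (-f).
    """
    return [
        blender,
        "-b", blend_file,   # background mode, load the weapon scene
        "-P", script,       # hypothetical script applying wear and stickers
        "-o", out_path,     # '#' characters are replaced by the frame number
        "-f", "1",          # render frame 1 only
        "--", json.dumps(metadata, sort_keys=True),  # passed through to the script
    ]


def parse_script_args(argv: list[str]) -> dict:
    """Script-side helper: recover the metadata passed after '--'."""
    return json.loads(argv[argv.index("--") + 1])
```

A render node could execute the command with `subprocess.run(cmd, check=True)`. Inside Blender, the setup script would call `parse_script_args(sys.argv)` to read the weapon's wear and stickers.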

Scaling Up—Building a High-Performance Rendering Cluster

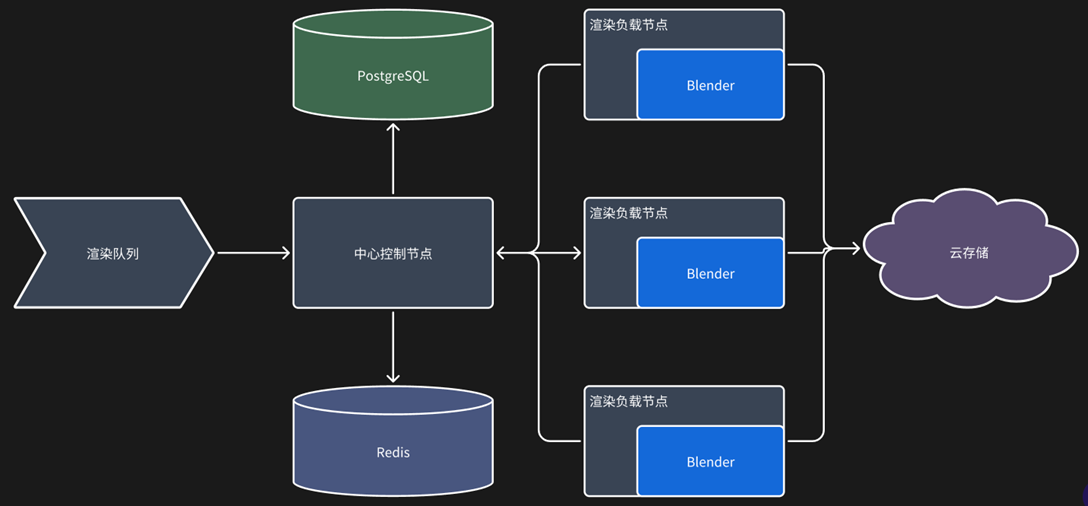

With rendering solved for a single weapon, the next challenge was scale and latency: rendering large numbers of weapons quickly. To this end, we built a dedicated rendering cluster consisting of a central control node and multiple distributed rendering nodes.

Whenever a new weapon is created in-game, its metadata is immediately sent to the cluster’s central control node and placed in a render queue. The control node picks an available rendering node and assigns it the task. The rendering node then invokes its Blender renderer, generates the weapon’s NFT image, and uploads the result to cloud storage for later access.
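The queue-and-assign behavior of the control node can be sketched as a FIFO queue plus a pass over idle nodes. This is a simplified, single-process model with hypothetical class and method names (`ControlNode`, `RenderNode`, `submit`, `complete`). A real cluster would add networking, timeouts, and retries for failed renders, none of which the article details.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class RenderNode:
    name: str
    current_job: Optional[int] = None  # NFT ID being rendered; None when idle


@dataclass
class ControlNode:
    nodes: list[RenderNode]
    queue: deque = field(default_factory=deque)

    def submit(self, nft_id: int) -> None:
        """Queue a newly created weapon for rendering, then try to dispatch."""
        self.queue.append(nft_id)
        self.dispatch()

    def dispatch(self) -> None:
        """Hand queued jobs to idle nodes in first-in, first-out order."""
        for node in self.nodes:
            if not self.queue:
                break
            if node.current_job is None:
                node.current_job = self.queue.popleft()

    def complete(self, node_name: str) -> Optional[int]:
        """Mark a node's job done (image uploaded) and give it the next job."""
        node = next(n for n in self.nodes if n.name == node_name)
        finished, node.current_job = node.current_job, None
        self.dispatch()
        return finished
```

Because dispatch runs on every submission and every completion, a weapon starts rendering as soon as any node is free, and work waits in the queue only while the cluster is saturated.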

Thanks to this cluster architecture, we can generate a weapon’s exclusive NFT image as soon as the player acquires it.

Back to Basics—Metadata and Asset Distribution for Mobile Devices

As an NFT from a GameFi project, this legendary weapon has one primary use: helping players dominate in-game combat. But how do we ensure that the weapon a player owns as an NFT looks and behaves identically inside the game? We have built several systems to make this possible.

For metadata, every weapon’s data is stored in a centralized metadata database upon generation. During NFT minting and display, this database serves as the authoritative source for on-chain metadata. When a player brings their weapon into gameplay, the game server retrieves the same data from this database to instantiate the weapon in-game.
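The single-source-of-truth idea above can be sketched as one record that feeds two views: the token metadata served for minting and display, and the data the game server loads. The token view follows the ERC-721 metadata JSON shape (`name`, `description`, `image`) plus the widely used marketplace `attributes` array of `trait_type`/`value` pairs. The in-memory `METADATA_DB`, the placeholder `example.com` image URL, and both function names are hypothetical stand-ins for the real database and services.

```python
import json

# Hypothetical stand-in for the centralized metadata database.
METADATA_DB = {
    1: {"name": "Judgment", "rarity": "Legendary", "wear": 0.0312, "souvenir": False,
        "stickers": [12, 48], "image": "https://example.com/nft/1.png"},
}


def token_metadata_json(nft_id: int) -> str:
    """NFT metadata view: ERC-721-style JSON with marketplace attributes."""
    rec = METADATA_DB[nft_id]
    doc = {
        "name": rec["name"],
        "description": f"{rec['rarity']} weapon #{nft_id}",
        "image": rec["image"],
        "attributes": [
            {"trait_type": "Rarity", "value": rec["rarity"]},
            {"trait_type": "Wear", "value": rec["wear"]},
            {"trait_type": "Souvenir", "value": "Yes" if rec["souvenir"] else "No"},
            {"trait_type": "Stickers", "value": len(rec["stickers"])},
        ],
    }
    return json.dumps(doc)


def game_instance(nft_id: int) -> dict:
    """Game-server view: the fields needed to spawn the same weapon in a match."""
    rec = METADATA_DB[nft_id]
    return {"nft_id": nft_id, "wear": rec["wear"], "souvenir": rec["souvenir"],
            "stickers": list(rec["stickers"])}
```

Since both views read the same record, the wear level a marketplace displays and the wear level the game renders cannot drift apart.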

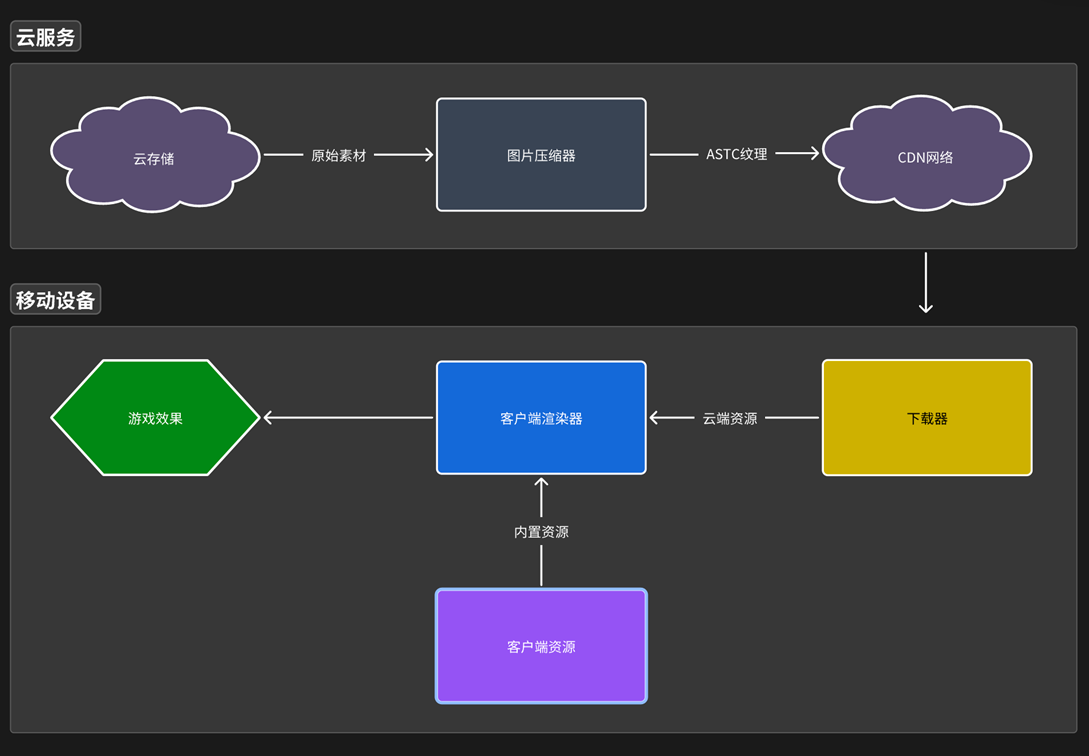

To recreate the exact weapon model during gameplay, the intermediate resources produced while rendering the NFT image are kept in cloud storage. Players’ game clients download these assets and combine them with the built-in game resources to reproduce each player’s unique weapon. For performance and stability, we also built a dedicated content delivery network (CDN): all in-game assets are automatically compressed into GPU-friendly texture formats and served at a resolution tier matched to the capabilities of the player’s mobile device. This balances high-fidelity visuals against the performance limits of mobile hardware.
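Device-aware delivery like this usually comes down to choosing a compressed texture format the GPU supports and a resolution the device can afford. The sketch below prefers ASTC and falls back to ETC2, which OpenGL ES 3.0 requires every conforming device to support, and then to uncompressed RGBA. The memory thresholds, the CDN path layout, and the names `Device`, `pick_texture_variant`, and `asset_url` are hypothetical. The article does not give MATR1X's actual tiers or URL scheme.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    supports_astc: bool
    supports_etc2: bool
    memory_mb: int


# Hypothetical resolution tiers: (minimum device memory in MB, texture size in px),
# ordered from highest to lowest so the first match wins.
RESOLUTION_TIERS = [(6144, 2048), (3072, 1024), (0, 512)]


def pick_texture_variant(device: Device) -> tuple[str, int]:
    """Choose a GPU texture format and resolution tier for one device."""
    if device.supports_astc:
        fmt = "astc"
    elif device.supports_etc2:
        fmt = "etc2"
    else:
        fmt = "rgba8"  # uncompressed fallback
    size = next(px for min_mem, px in RESOLUTION_TIERS if device.memory_mb >= min_mem)
    return fmt, size


def asset_url(cdn_base: str, nft_id: int, device: Device) -> str:
    """Build the CDN path for a weapon texture variant (hypothetical layout)."""
    fmt, size = pick_texture_variant(device)
    return f"{cdn_base}/weapons/{nft_id}/{fmt}_{size}/albedo"
```

Precomputing every variant on the server keeps client-side work to a single download, with no transcoding on the phone.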

Going Further—UGC and 3D Previews

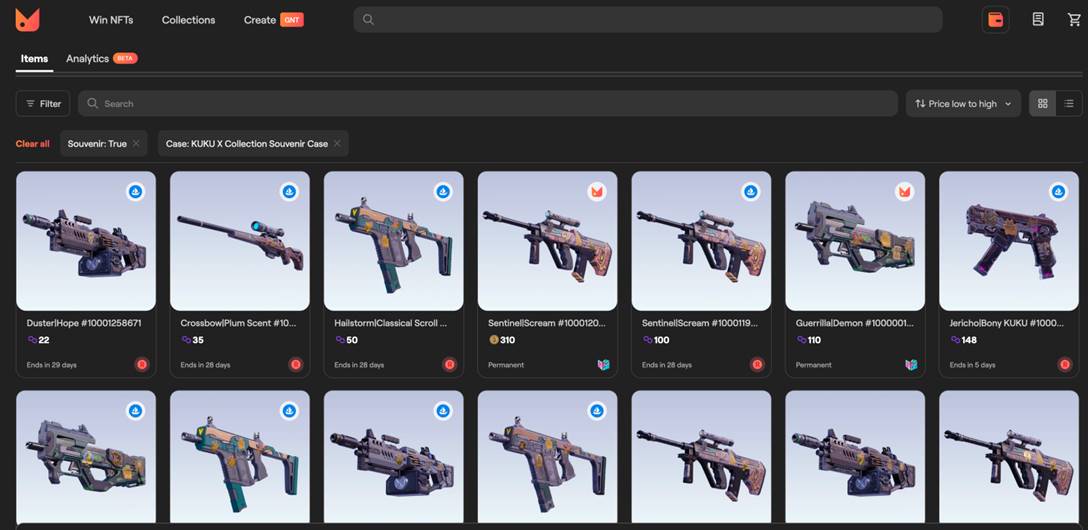

Technology must continuously evolve to deliver increasingly immersive NFT experiences. Looking ahead, we envision deeper integration of this pipeline into asset creation workflows. Offline rendering isn’t just for generating NFT thumbnails—it can also enable richer, more complex content creation. With this technology, we aim to support UGC (user-generated content) directly within games and NFTs. Imagine players imprinting their own NFTs—such as 2061, KUKU, or other partnered collections—onto their weapons as custom engravings.

Moreover, this pipeline is not limited to weapons: in the future, characters and other new asset types could use it for dynamic personalization. Beyond the game itself, we are exploring ways to let players show their digital assets in full 3D outside the game. Using cloud-based offline rendering, we can process complex assets and deliver them through web-based HTML5 (H5) game engines, so that even non-players can explore and appreciate the rich visual world of MATR1X FIRE.
