Opinion: Why Are We Still Bullish on Bittensor?


Thanks to its strong inclusiveness, intense competitive environment, and effective incentive mechanisms, the Bittensor ecosystem is capable of organically producing high-quality artificial intelligence products.

Author: 0xai

Translated by: TechFlow

What is Bittensor?

Bittensor itself is not an artificial intelligence product, nor does it produce or provide any AI products or services. Bittensor is an economic system that acts as an optimizer for the AI product market by offering a highly competitive incentive structure to producers of AI products. Within the Bittensor ecosystem, high-quality producers receive greater rewards, while less competitive ones are gradually phased out.

So how exactly does Bittensor create this incentive mechanism to encourage effective competition and promote the organic production of high-quality AI products?

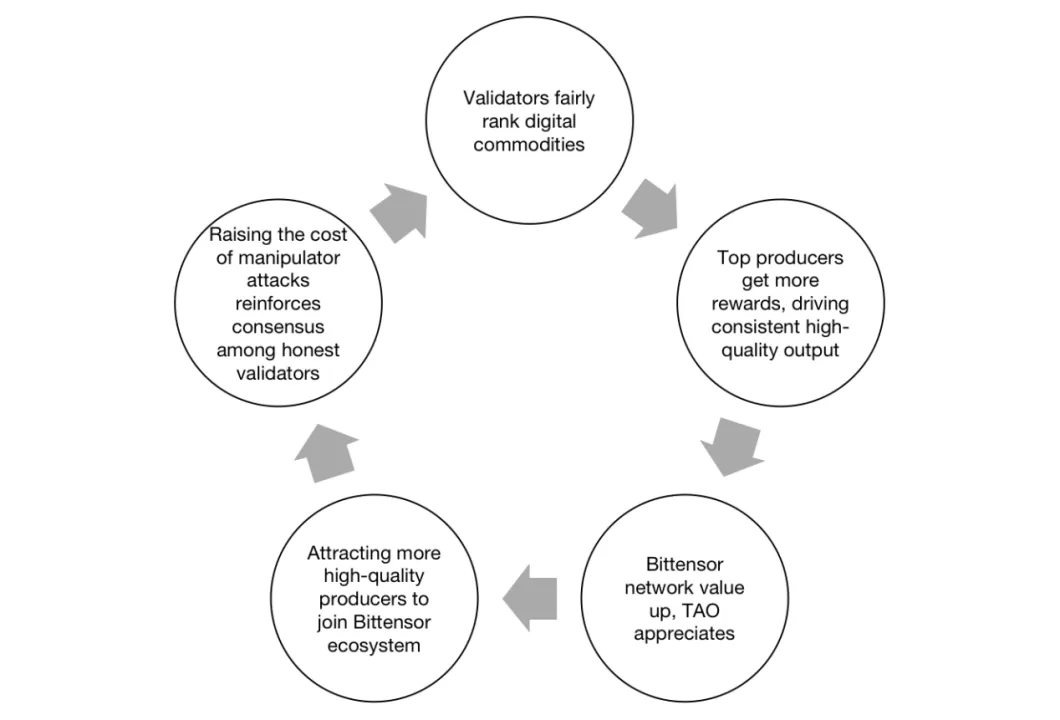

Bittensor Flywheel Model

Bittensor achieves this through a flywheel model. Validators assess the quality of AI products within the ecosystem and allocate incentives accordingly, so high-quality producers receive more rewards. This drives continuous improvement in output quality, which raises the value of the Bittensor network and, with it, the price of TAO. A more valuable TAO not only attracts more high-quality producers into the ecosystem but also raises the cost for malicious actors trying to manipulate quality assessments. This strengthens the consensus among honest validators and makes evaluation outcomes more objective and fair, which in turn makes competition and incentives more effective.

Ensuring fairness and objectivity in evaluation results is the key step to kickstarting the flywheel. This is also Bittensor's core technology—the abstract validation system based on Yuma Consensus.

So what is Yuma Consensus, and how does it ensure that post-consensus quality evaluations are fair and objective?

Yuma Consensus is a consensus mechanism that computes a final evaluation result from the diverse assessments submitted by many validators. Like Byzantine Fault Tolerant (BFT) consensus mechanisms, it reaches the correct decision as long as the majority of validators in the network, weighted by stake, are honest. Assuming honest validators provide objective evaluations, the consensus result will also be fair and objective.

Taking subnet quality assessment as an example, rootnet validators evaluate and rank the output quality of each subnet. Evaluation results from 64 validators are aggregated and processed through the Yuma Consensus algorithm to derive the final evaluation result. These final results are then used to distribute newly minted TAO to each subnet.
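To make the aggregation step more concrete, here is a heavily simplified sketch of stake-weighted consensus in the spirit of Yuma Consensus. It assumes each validator submits one weight vector over subnets and that stake is the only thing that matters; the real implementation also tracks bonds, trust, and other parameters omitted here, and all function names below are our own.

```python
from typing import List


def stake_weighted_median(values: List[float], stakes: List[float], kappa: float = 0.5) -> float:
    """Largest value v such that validators holding at least `kappa` of total
    stake assigned a weight >= v (kappa = 0.5 gives a stake-weighted median)."""
    total = sum(stakes)
    cumulative = 0.0
    # Walk validators from the highest weight down, accumulating their stake.
    for value, stake in sorted(zip(values, stakes), key=lambda p: p[0], reverse=True):
        cumulative += stake
        if cumulative >= kappa * total:
            return value
    return 0.0


def consensus_emission_shares(weights: List[List[float]], stakes: List[float], kappa: float = 0.5) -> List[float]:
    """weights[i][j] is the weight validator i assigns to subnet j."""
    n_subnets = len(weights[0])
    total_stake = sum(stakes)
    shares = []
    for j in range(n_subnets):
        column = [row[j] for row in weights]
        consensus = stake_weighted_median(column, stakes, kappa)
        # Clip each validator's weight at the consensus value, so a minority
        # of stake cannot push a subnet beyond what the majority supports.
        clipped = sum(s * min(w, consensus) for w, s in zip(column, stakes))
        shares.append(clipped / total_stake)
    total = sum(shares)
    return [s / total for s in shares] if total > 0 else [0.0] * n_subnets


# Two honest validators (75% of stake) and one dishonest validator (25%)
# that puts all of its weight on subnet 2.
stakes = [40.0, 35.0, 25.0]
weights = [
    [0.5, 0.5, 0.0],
    [0.5, 0.5, 0.0],
    [0.0, 0.0, 1.0],
]
print(consensus_emission_shares(weights, stakes))  # [0.5, 0.5, 0.0]
```

Because the dishonest validator controls only 25% of stake, its vote for subnet 2 is clipped to the stake-weighted median of 0, and subnet 2 receives nothing. This is the honest-majority property the paragraph above relies on.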

Currently, however, Yuma Consensus still has room for improvement:

- Rootnet validators may not fully represent all TAO holders, so their evaluations may not reflect broader perspectives. In addition, some top validators’ assessments may not always be objective, and even when biases are detected, they may not be corrected immediately.

- The rootnet validator layer limits the number of subnets Bittensor can support. Only 32 subnets are not enough to compete with centralized AI giants, and even at 32, rootnet validators may struggle to monitor them all effectively.

- Validators may lack strong incentives to migrate to new subnets. In the short term, moving from older subnets with higher issuance rates to newer ones with lower rates could reduce their rewards. Combined with uncertainty about whether a new subnet's issuance will ever catch up, this clear risk of losing rewards makes validators reluctant to migrate.

Bittensor also plans mechanism upgrades to address these shortcomings:

- Dynamic TAO (dTAO) will decentralize the power to evaluate subnet quality, shifting it from a few validators to all TAO holders, who will be able to indirectly set the allocation across subnets through staking.

- Without the limits imposed by rootnet validators, the maximum number of active subnets will increase to 1024. This will significantly lower the barrier for new teams to join the Bittensor ecosystem and intensify competition among subnets.

- Validators who migrate early to new subnets may receive higher rewards. Migrating early means acquiring that subnet's dynamic TAO at a lower price, which increases the potential to earn more TAO in the future.
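The early-migration argument can be illustrated with a toy model. The sketch below assumes, for illustration only, that each subnet's dynamic TAO (called "alpha" here) trades against TAO in a constant-product pool, and that a subnet's share of new TAO issuance is proportional to its pool price. The class and function names are hypothetical, and the actual dTAO parameters may differ.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SubnetPool:
    """Hypothetical constant-product pool pairing TAO with a subnet's alpha token."""
    tao_reserve: float
    alpha_reserve: float

    def price(self) -> float:
        # Price of one alpha, denominated in TAO.
        return self.tao_reserve / self.alpha_reserve

    def stake(self, tao_in: float) -> float:
        """Swap TAO into the pool for alpha, keeping tao_reserve * alpha_reserve constant."""
        k = self.tao_reserve * self.alpha_reserve
        self.tao_reserve += tao_in
        new_alpha = k / self.tao_reserve
        alpha_out = self.alpha_reserve - new_alpha
        self.alpha_reserve = new_alpha
        return alpha_out


def emission_shares(pools: List[SubnetPool]) -> List[float]:
    # Assumption: issuance is split in proportion to each subnet's alpha price.
    prices = [p.price() for p in pools]
    total = sum(prices)
    return [p / total for p in prices]


new_subnet = SubnetPool(tao_reserve=100.0, alpha_reserve=100.0)
established = SubnetPool(tao_reserve=400.0, alpha_reserve=100.0)

early = new_subnet.stake(10.0)  # first staker
late = new_subnet.stake(10.0)   # same amount of TAO, staked afterwards
print(f"early: {early:.3f} alpha, late: {late:.3f} alpha")
print(emission_shares([new_subnet, established]))
```

Under these assumptions the first staker receives about 9.09 alpha for 10 TAO while the second receives about 7.58, and the inflow of stake also raises the new subnet's price and therefore its assumed share of issuance.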

Strong inclusiveness is another major advantage of Yuma Consensus. It determines not only how much each subnet receives but also how rewards are divided among miners and validators within the same subnet. Moreover, it treats contributions abstractly, regardless of the miner’s specific task: computing power, data, human input, and intelligence all count. As a result, any stage of AI product creation can plug into the Bittensor ecosystem, earn incentives, and at the same time add value to the Bittensor network.
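As a rough illustration of this within-subnet allocation, the sketch below splits one subnet's issuance between the subnet owner, miners, and validators. The 18% / 41% / 41% split reflects our understanding of the parameters in use at the time of writing and should be treated as an assumption, as should the simplifications that miners are paid in proportion to an opaque consensus score and validators in proportion to stake (the real validator dividend calculation is more involved). Note that the function never inspects what a miner contributed, only its score.

```python
from typing import Dict


def split_subnet_emission(
    emission: float,
    miner_scores: Dict[str, float],
    validator_stakes: Dict[str, float],
    owner_cut: float = 0.18,      # assumed parameter
    miner_cut: float = 0.41,      # assumed parameter
    validator_cut: float = 0.41,  # assumed parameter
) -> Dict[str, float]:
    if abs(owner_cut + miner_cut + validator_cut - 1.0) > 1e-9:
        raise ValueError("cuts must sum to 1")
    payouts: Dict[str, float] = {"owner": emission * owner_cut}

    # Miners: proportional to their consensus score, whatever the task was
    # (compute, data, human input, model quality, ...).
    total_score = sum(miner_scores.values())
    if total_score > 0:
        for uid, score in miner_scores.items():
            payouts[uid] = payouts.get(uid, 0.0) + emission * miner_cut * score / total_score

    # Validators: simplified here to be proportional to stake.
    total_stake = sum(validator_stakes.values())
    if total_stake > 0:
        for uid, stake in validator_stakes.items():
            payouts[uid] = payouts.get(uid, 0.0) + emission * validator_cut * stake / total_stake
    return payouts


payouts = split_subnet_emission(
    emission=100.0,
    miner_scores={"miner-a": 3.0, "miner-b": 1.0},
    validator_stakes={"validator-x": 1.0, "validator-y": 1.0},
)
print(payouts)
```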

Next, let's explore several leading subnets to observe how Bittensor incentivizes their outputs.

Outstanding Subnets

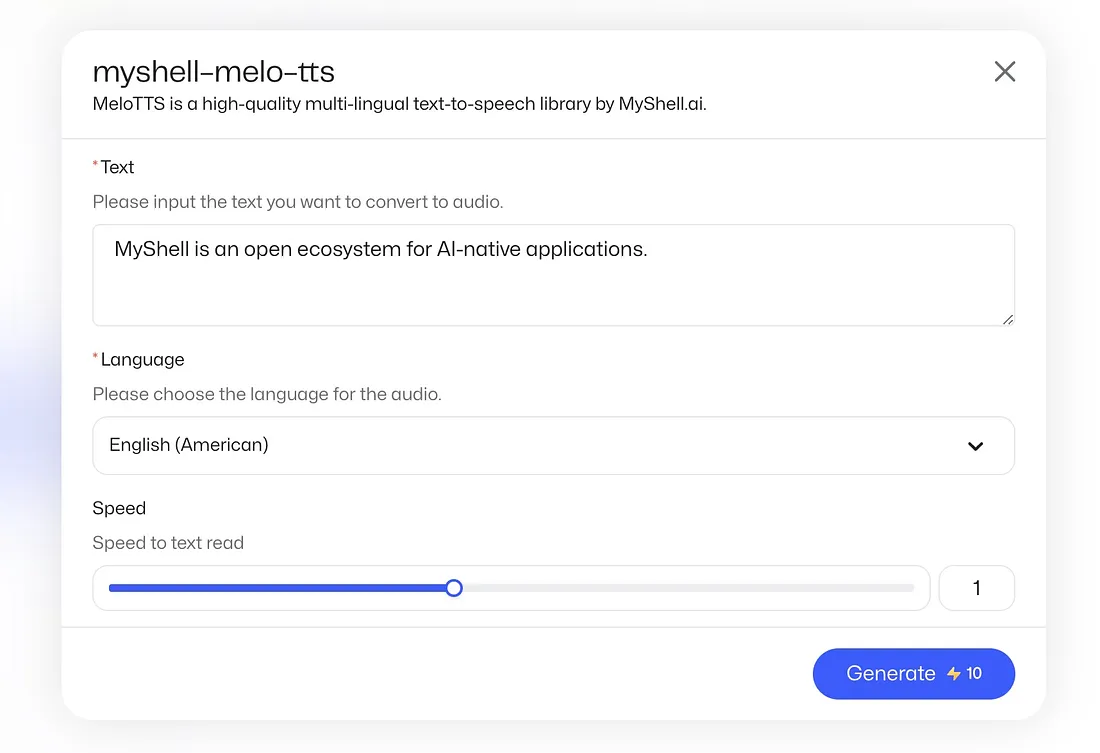

Subnet 3: Myshell TTS

GitHub: myshell-ai/MyShell TTS Subnet

Issuance Rate: 3.46% (April 9, 2024)

Background: Myshell, the team behind Myshell TTS (Text-to-Speech), includes core members from prestigious institutions such as MIT, Oxford University, and Princeton University. Myshell aims to build a no-code platform that lets college students without programming backgrounds easily create custom bots. Focusing on TTS, audiobooks, and virtual assistants, Myshell launched its first voice chatbot, Samantha, in March 2023. As its product lineup has expanded, it has accumulated over one million registered users. The platform hosts various types of bots, including ones for language learning, education, and utility purposes.

Positioning: Myshell launched this subnet to harness collective wisdom from the open-source community to build the best open-source TTS model. In other words, Myshell TTS does not directly run models or handle end-user requests; instead, it functions as a network for training TTS models.

Myshell TTS Architecture

The Myshell TTS workflow is shown above. Miners train models and upload them to the model pool (model metadata is also stored on the Bittensor blockchain); validators generate test cases, evaluate model performance, and assign scores; the Bittensor blockchain then uses Yuma Consensus to aggregate the weights and determine each miner’s final weight and allocation ratio.

In short, miners must continuously submit higher-quality models to maintain their rewards.
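The "keep improving or lose rewards" dynamic can be sketched as follows. This is not Myshell TTS's actual validator code: we assume a validator averages some per-test-case quality score for each submitted model and converts the averages into weights with a low-temperature softmax, so the best model takes most of the weight. The `evaluate` callable is a hypothetical stand-in for whatever speech-quality metric a real validator uses.

```python
import math
from typing import Callable, Dict, List


def average_scores(
    model_ids: List[str],
    test_cases: List[str],
    evaluate: Callable[[str, str], float],
) -> Dict[str, float]:
    # evaluate(model_id, test_case) -> quality score in [0, 1] (hypothetical metric)
    return {m: sum(evaluate(m, t) for t in test_cases) / len(test_cases) for m in model_ids}


def weights_from_scores(scores: Dict[str, float], temperature: float = 0.05) -> Dict[str, float]:
    # Softmax with a low temperature concentrates weight on the top model.
    best = max(scores.values())
    exps = {m: math.exp((s - best) / temperature) for m, s in scores.items()}
    total = sum(exps.values())
    return {m: e / total for m, e in exps.items()}


# Toy lookup table standing in for real evaluations.
quality = {"model-a": 0.90, "model-b": 0.80, "model-c": 0.50}
cases = ["case-1", "case-2"]
before = weights_from_scores(average_scores(list(quality), cases, lambda m, t: quality[m]))

# A miner uploads a better model; the previous leader's share shrinks.
quality["model-d"] = 0.95
after = weights_from_scores(average_scores(list(quality), cases, lambda m, t: quality[m]))
print(round(before["model-a"], 3), round(after["model-a"], 3))
```

A sharper softmax (lower temperature) makes rewards more winner-take-most, which strengthens the pressure to keep submitting better models.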

Currently, Myshell has also launched a demo on its platform allowing users to try models developed within Myshell TTS.

Open Kaito Architecture

In the future, as models trained via Myshell TTS become more reliable, additional use cases will emerge. Furthermore, being open-source, these models are not limited to Myshell alone but can be extended to other platforms. Isn't training and incentivizing open-source models through such decentralized methods precisely our goal in decentralized AI?

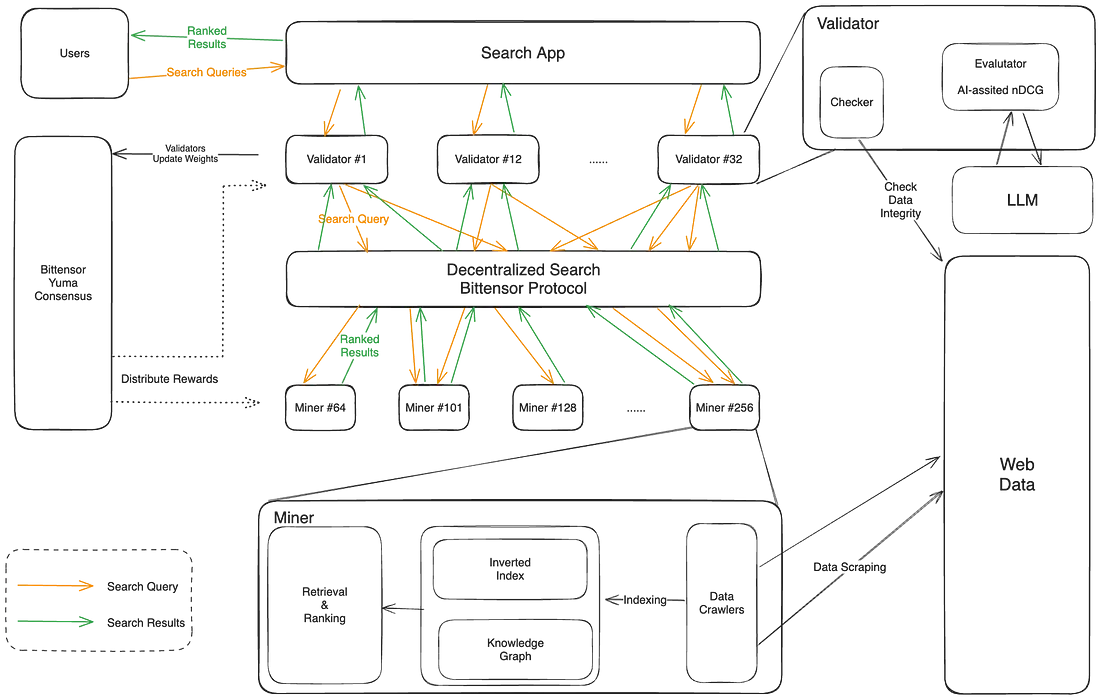

Subnet 5: Open Kaito

GitHub: Open Kaito

Issuance Rate: 4.39% (April 9, 2024)

Background: Open Kaito is built by the team behind Kaito.ai, whose core members have extensive AI experience and previously worked at top-tier companies such as AWS, Meta, and Citadel. Before launching the Bittensor subnet, they released their flagship product Kaito.ai, a Web3 off-chain data search engine, in Q4 2023. Kaito.ai uses AI algorithms to optimize the core components of a search engine, including data collection, ranking algorithms, and retrieval algorithms, and it is recognized as a premier information aggregation tool in the crypto community.

Positioning: Open Kaito aims to build a decentralized indexing layer to support intelligent search and analytics. A search engine is not merely a database or ranking algorithm—it is a complex system. Moreover, an effective search engine requires low latency, posing additional challenges for decentralized implementation. Fortunately, Bittensor’s incentive system offers promising solutions to these challenges.

Open Kaito operates as illustrated above. It decentralizes every component of the search engine and frames indexing as a miner-validator challenge: miners respond to user indexing requests, while validators distribute tasks and score the miners’ responses.

Open Kaito does not restrict how miners complete indexing tasks but focuses on the final output to encourage innovative solutions. This fosters healthy competition among miners. In responding to indexing demands, miners strive to improve execution strategies to achieve higher-quality results using fewer resources.
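Because validators judge only the final output, a scoring rule can stay agnostic about how a miner produced its results. The sketch below is hypothetical and is not Open Kaito's actual scoring function: it rewards the precision of the returned documents against a validator-held set of relevant document IDs and discounts slow responses, with anything over an assumed latency budget scoring zero.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass
class IndexResponse:
    miner_id: str
    doc_ids: List[str]  # documents the miner returned for the query
    latency_s: float    # time taken to respond, in seconds


def score_response(resp: IndexResponse, relevant: Set[str], latency_budget_s: float = 2.0) -> float:
    if not resp.doc_ids or resp.latency_s > latency_budget_s:
        return 0.0  # empty or too-slow answers earn nothing
    precision = sum(d in relevant for d in resp.doc_ids) / len(resp.doc_ids)
    # Up to a 50% discount as latency approaches the budget.
    speed_factor = 1.0 - 0.5 * resp.latency_s / latency_budget_s
    return precision * speed_factor


relevant = {"doc-1", "doc-2", "doc-3"}
responses = [
    IndexResponse("fast-accurate", ["doc-1", "doc-2"], latency_s=0.5),
    IndexResponse("slow-accurate", ["doc-1", "doc-2"], latency_s=1.5),
    IndexResponse("fast-noisy", ["doc-1", "doc-9"], latency_s=0.5),
    IndexResponse("timed-out", ["doc-1"], latency_s=3.0),
]
scores = {r.miner_id: score_response(r, relevant) for r in responses}
print(scores)
```

Nothing in the scoring depends on the miner's method, so a miner that finds a cheaper or faster way to return accurate results is rewarded directly.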

Subnet 6: Nous Finetuning

GitHub: NousResearch/finetuning-subnet

Issuance Rate: 6.26% (April 9, 2024)

Background: The team behind Nous Finetuning comes from Nous Research, a research group focused on large language model (LLM) architectures, data synthesis, and on-device inference. Its co-founder previously served as Chief Engineer at Eden Network.

Positioning: Nous Finetuning is a subnet dedicated to fine-tuning large language models. The data used for fine-tuning also comes from within the Bittensor ecosystem, specifically from Subnet 18.

Nous Finetuning operates much like Myshell TTS. Miners train models on data from Subnet 18 and periodically publish them to Hugging Face; validators evaluate the models and assign scores; and, as with Myshell TTS, the Bittensor blockchain uses Yuma Consensus to aggregate weights and determine each miner’s final weight and issuance amount.

Subnet 18: Cortex.t

GitHub: corcel-api/cortex.t

Issuance Rate: 7.74% (April 9, 2024)

Background: The team behind Cortex.t is Corcel.io, which receives support from Mog, the second-largest validator on the Bittensor network. Corcel.io is an end-user-facing application that leverages AI products from the Bittensor ecosystem to deliver a ChatGPT-like experience.

Positioning: Cortex.t is positioned as the final layer before delivering results to end users. It is responsible for detecting and optimizing outputs from various subnets to ensure accuracy and reliability, especially when a single prompt invokes multiple models. Cortex.t aims to prevent blank or inconsistent outputs, ensuring a seamless user experience.

Miners in Cortex.t utilize other subnets within the Bittensor ecosystem to process end-user requests. They also use GPT-3.5 Turbo or GPT-4 to verify output results, ensuring reliability for end users. Validators evaluate miners’ outputs by comparing them against results generated by OpenAI.
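One simple way to express "compare against a reference answer" is a string-similarity score. The sketch below uses Python's standard `difflib.SequenceMatcher` purely as a stand-in; we do not know the exact metric Cortex.t validators use, and the reference text is assumed to have been obtained from an OpenAI model beforehand. Blank outputs score zero, in line with the subnet's goal of preventing empty responses.

```python
from difflib import SequenceMatcher
from typing import Dict


def reference_similarity(miner_output: str, reference_output: str) -> float:
    """Score in [0, 1]; 1.0 means the miner's text matches the reference."""
    if not miner_output.strip():
        return 0.0  # blank responses earn nothing
    return SequenceMatcher(None, miner_output.lower(), reference_output.lower()).ratio()


def score_miners(outputs: Dict[str, str], reference: str) -> Dict[str, float]:
    return {uid: reference_similarity(text, reference) for uid, text in outputs.items()}


reference = "Paris is the capital of France."
scores = score_miners(
    {
        "miner-1": "Paris is the capital of France.",
        "miner-2": "The capital of France is Paris.",
        "miner-3": "   ",
    },
    reference,
)
print(scores)
```

Character-level similarity penalizes correct answers that are phrased differently (as with `miner-2`), which is one reason a production validator would likely use a semantic comparison instead.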

Subnet 19: Vision

GitHub: namoray/vision

Issuance Rate: 9.47% (April 9, 2024)

Background: The development team behind Vision also comes from Corcel.io.

Positioning: Vision aims to maximize the output capacity of the Bittensor network by leveraging DSIS (Distributed Scale Inference Subnet), an optimized subnet construction framework. This framework accelerates miners’ response times to validators. Currently, Vision focuses on image generation scenarios.

Validators receive requests from the Corcel.io frontend and distribute them to miners. Miners are free to choose their preferred tech stack (not limited to models) to fulfill requests and generate responses. Validators then assess miner performance. Thanks to DSIS, Vision can respond to these requests faster and more efficiently than other subnets.

Summary

From the examples above, it is evident that Bittensor demonstrates high inclusiveness. Both miner generation and validator verification occur off-chain, with the Bittensor network only used to allocate rewards to miners based on validator assessments. Any aspect of AI product creation that fits the miner-validator architecture can be transformed into a subnet.
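The miner-validator pattern described above can be reduced to a few lines. In this hypothetical sketch, none of the classes are part of the Bittensor SDK: generation and scoring happen entirely off-chain, and the only thing written to the stand-in `ToyChain` is each validator's normalized weight vector, which is what Yuma Consensus would later aggregate.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Protocol


class Miner(Protocol):
    uid: str
    def respond(self, task: str) -> str: ...


class Validator(Protocol):
    def make_task(self) -> str: ...
    def score(self, task: str, response: str) -> float: ...


@dataclass
class UppercaseMiner:
    uid: str
    def respond(self, task: str) -> str:
        return task.upper()


@dataclass
class EchoMiner:
    uid: str
    def respond(self, task: str) -> str:
        return task


class UppercaseValidator:
    """Toy validator: the 'correct' answer to a task is its uppercase form."""
    def make_task(self) -> str:
        return "hello bittensor"
    def score(self, task: str, response: str) -> float:
        return 1.0 if response == task.upper() else 0.0


@dataclass
class ToyChain:
    """Stand-in for the chain: it stores weights and never sees miner outputs."""
    weights: Dict[str, Dict[str, float]] = field(default_factory=dict)
    def set_weights(self, validator_id: str, weights: Dict[str, float]) -> None:
        self.weights[validator_id] = weights


def run_round(validator_id: str, validator: Validator, miners: List[Miner], chain: ToyChain) -> Dict[str, float]:
    task = validator.make_task()
    # Off-chain: miners answer and the validator scores them.
    raw = {m.uid: validator.score(task, m.respond(task)) for m in miners}
    total = sum(raw.values())
    weights = {uid: (s / total if total > 0 else 0.0) for uid, s in raw.items()}
    # On-chain: only the normalized weights are recorded.
    chain.set_weights(validator_id, weights)
    return weights


chain = ToyChain()
run_round("validator-1", UppercaseValidator(), [UppercaseMiner("m1"), EchoMiner("m2")], chain)
print(chain.weights)  # {'validator-1': {'m1': 1.0, 'm2': 0.0}}
```

Swapping in a different miner and validator pair (text-to-speech, search, fine-tuning) changes nothing on the chain side, which is the inclusiveness the summary points to.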

Theoretically, competition among subnets should be intense. For any subnet to continue receiving rewards, it must consistently produce high-quality outputs. Otherwise, if rootnet validators deem a subnet’s output low-value, its allocation may decrease and it could eventually be replaced by a new subnet.

However, in reality, we do observe some issues:

- Redundant, overlapping subnets. Among the existing 32 subnets, several target the same popular areas, such as text-to-image, text prompting, and price prediction.

- Subnets without practical use cases. Price prediction subnets could in theory serve as oracles, but the quality of their current predictions falls far short of what end users could rely on.

- Cases of "bad money driving out good." Some top validators hesitate to move to new subnets even when those subnets clearly deliver higher quality. Without stake behind them, such subnets may fail to win enough issuance in the short term, and because new subnets have only a 7-day protection period, failing to accumulate issuance quickly puts them at risk of elimination and shutdown.
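The elimination risk for new subnets can be sketched as a simple selection rule. We assume, based on our understanding of the mechanism at the time, that when all subnet slots are taken, registering a new subnet replaces the existing subnet with the lowest issuance share among those whose 7-day immunity period has expired. The data structure, function name, and issuance figures below are illustrative only.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

IMMUNITY_PERIOD = timedelta(days=7)


@dataclass
class Subnet:
    netuid: int
    issuance_share: float  # fraction of total TAO issuance (illustrative values)
    registered_at: datetime


def subnet_to_replace(subnets: List[Subnet], now: datetime) -> Optional[Subnet]:
    # Subnets still inside their immunity period cannot be replaced.
    eligible = [s for s in subnets if now - s.registered_at >= IMMUNITY_PERIOD]
    if not eligible:
        return None
    # Among the rest, the one with the lowest issuance is pruned.
    return min(eligible, key=lambda s: s.issuance_share)


now = datetime(2024, 4, 9)
subnets = [
    Subnet(1, 0.050, now - timedelta(days=300)),
    Subnet(2, 0.004, now - timedelta(days=60)),
    Subnet(3, 0.001, now - timedelta(days=3)),  # new and still protected
]
print(subnet_to_replace(subnets, now).netuid)                      # 2
print(subnet_to_replace(subnets, now + timedelta(days=5)).netuid)  # 3
```

Once its protection lapses, a new subnet that has not attracted enough stake becomes the first candidate for replacement, regardless of the quality of its output.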

These issues reflect insufficient competition among subnets and indicate that some validators are not fulfilling their role in promoting effective competition.

The Open Tensor Foundation (OTF) validator has implemented temporary measures to ease this situation. As the largest validator, holding 23% of total stake (including delegations), OTF gives subnets a channel to compete for more staked TAO: subnet owners can submit weekly requests asking OTF to adjust the share of its staked TAO directed to their subnet. Each request must address ten aspects, including “subnet objectives and contribution to the Bittensor ecosystem,” “reward mechanisms,” “communication protocol design,” “data sources and security,” “computational requirements,” and “roadmap,” to help OTF make informed decisions.

To solve this issue at its root, however, on the one hand we urgently need to launch dTAO (dynamic TAO) to correct the distortions described above. On the other hand, we call on large validators holding significant staked TAO to consider the long-term development of the Bittensor ecosystem from the perspective of “ecosystem growth” rather than focusing solely on “financial returns.”

In conclusion, with its strong inclusiveness, fierce competitive environment, and effective incentive mechanisms, we believe the Bittensor ecosystem can organically produce high-quality AI products. Although not all current subnet outputs match those of centralized counterparts, we must remember that the current Bittensor architecture has only existed for one year (Subnet 1 was registered on April 13, 2023). For a platform with the potential to rival centralized AI giants, perhaps we should focus on proposing actionable improvement plans rather than rushing to criticize its shortcomings. After all, none of us want to see AI continually dominated by a handful of tech giants.
