Interview with io.net COO: Aiming to Compete with AWS by Offering More Accessible Decentralized GPUs (with Airdrop Interaction Tutorial)

We aim to embody the spirit of Web3 while outcompeting AWS and GCP.

By AYLO

Translated by TechFlow

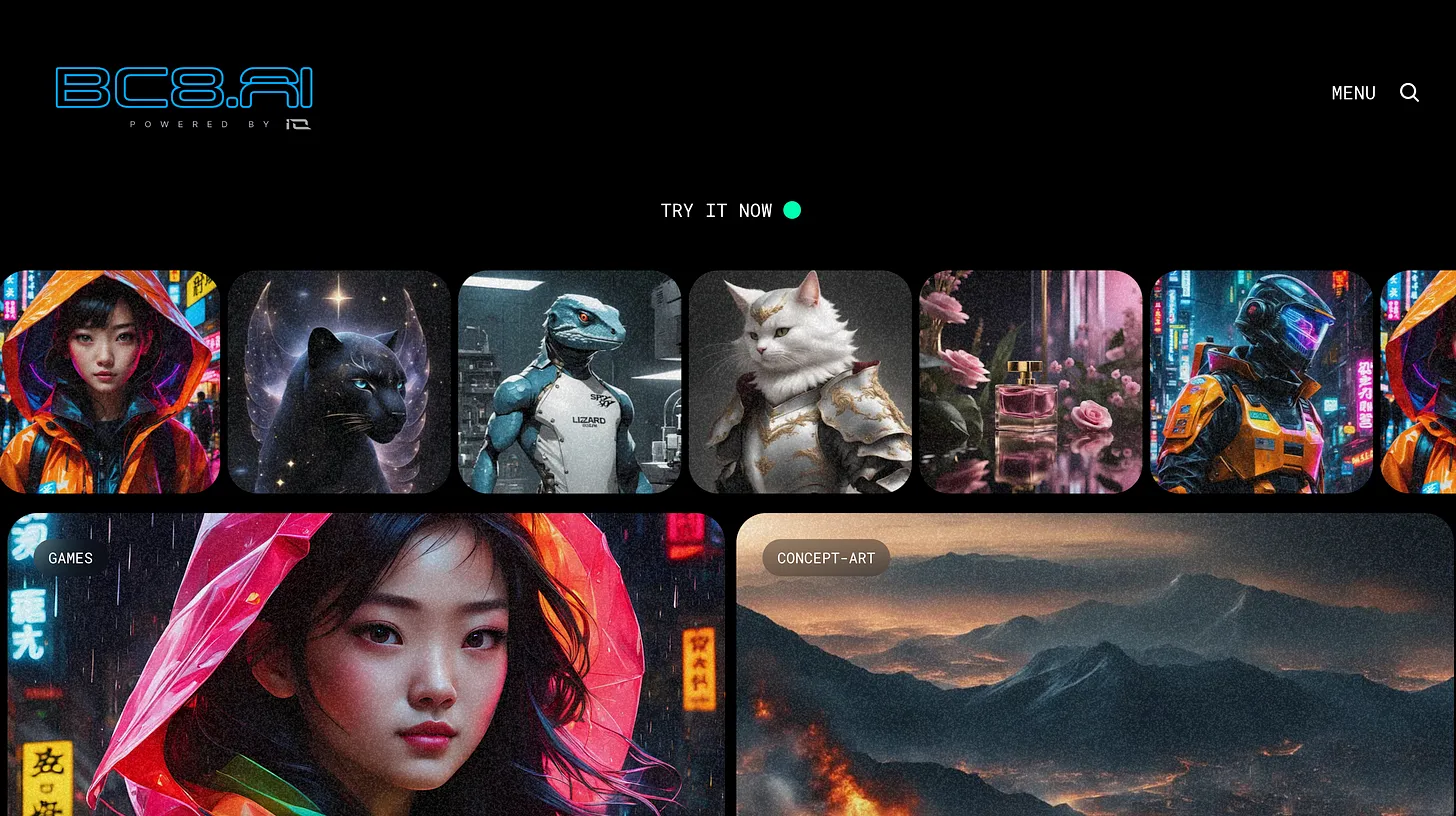

Generated using BC8.AI, a platform powered by io.net

Today I’m bringing you another interview with a project I’m very bullish on.

This project touches on several hot verticals right now: AI + DePIN + Solana.

io.net Cloud is an advanced decentralized computing network that allows machine learning engineers to access distributed cloud clusters at a fraction of the cost of centralized services.

I spoke with COO Tory Green to learn more.

The IO token will soon launch on Solana, and I strongly recommend you read this article. I’ll also include information on how to participate in the airdrop (at the end).

I’m a private investor in io.net and firmly believe in their platform because their GPU cluster solution is truly unique.

Introduction to io.net

-

Decentralized AWS for ML (machine learning) training on GPUs

-

Instant, permissionless access to a global network of GPUs and CPUs—already live

-

They have 25,000 nodes

-

Revolutionary technology to cluster GPU clouds together

-

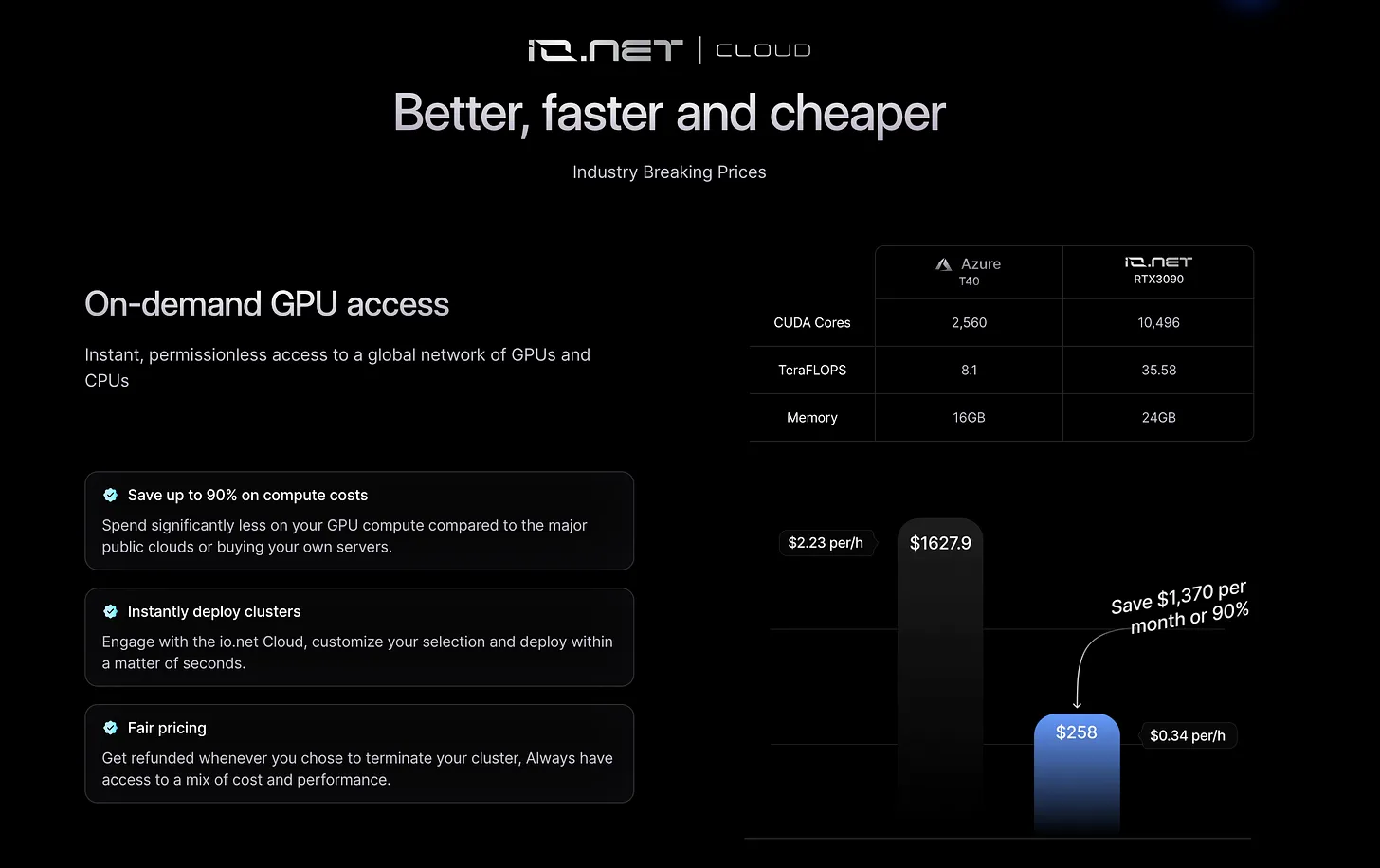

Can save large-scale AI startups up to 90% on compute costs

-

Integrated with Render and Filecoin

-

Built on Solana

They’ve just announced $30 million in funding, attracting some of the biggest backers in the space.

Why should people pay attention to io.net?

We’re not only competing against other crypto projects; we’re competing against cloud computing. One of the main advantages we offer customers is significantly lower pricing, up to 90% cheaper. What we really provide is consumer choice, which is where it gets interesting. On our platform you can access cheap, fully decentralized consumer-grade GPUs at up to a 90% discount, and I highly recommend trying it out. However, if you need high performance, you can rebuild an AWS-like experience using top-tier hardware like A100s, perhaps only 30% cheaper, but still less than AWS. In some cases, we even deliver better performance than AWS, which could be critical for specific industries like hedge funds.

For one of our major clients, we offered 70% better rates than AWS and 40% better than what they were getting elsewhere. Our platform is user-friendly and permissionless, unlike AWS, which might require detailed business plans and onboarding. Anyone can join and spin up a cluster instantly, while AWS may take days or weeks.

Compared to decentralized competitors, if you try to get a cluster on platforms like Akash, you'll find it’s not instantaneous. They’re more like travel agents, calling their data centers to check GPU availability, which can take weeks. With us, it's instant, cheaper, and permissionless. We aim to embody the spirit of Web3 while beating AWS and GCP.

What does the 2024 roadmap look like?

It breaks down into a business roadmap and a technical roadmap. On the business side, TGE is coming up. We plan to hold a summit this year where we’ll announce many product-related updates. Our focus remains heavily on continuing to build the network, because despite all the excitement around TGE, we see ourselves as a real company and a legitimate competitor to AWS.

We’ll continue expanding our sales team aggressively. We want to follow in the footsteps of companies like Chainlink and Polygon by hiring senior sales executives from firms like Amazon and Google to build a world-class sales organization. This will help us attract AI clients and establish partnerships with entities like Hugging Face and Prettybase.

Our initial customer base consists of large AI startups facing massive AI compute costs. I’m part of a CFO group for tech companies in the Bay Area, and one of the biggest pain points is the high cost of AI computation. One Series A SaaS startup spends $700,000 per month on AI compute—that’s unsustainable. Our goal is to dramatically reduce costs for companies like them.

Once we prove the concept with these early clients, we’ll explore adjacent markets. With SOC 2-compliant GPUs on our network, we can target large tech companies or enterprises like JPMorgan or Procter & Gamble, which certainly have internal AI divisions. Our technology supports clusters of up to 500,000 GPUs, potentially giving us greater capacity than AWS or GCP, which can’t physically deploy that many GPUs in one location. This could attract major AI projects like OpenAI for future versions of GPT. However, building a marketplace requires balancing supply and demand; we currently have 25,000 GPUs on the network but a waitlist of 200,000. Over time, our goal is to scale the network to meet growing demand. That’s the business roadmap.

On the technical side, there’s clearly a lot to do. Currently, we support Ray and are actively developing Kubernetes support. But as I mentioned, we’re thinking about expanding our product. If you consider how AI works—for example, when you use ChatGPT, that’s the application, built on a model, which is GPT-3, running its inference on GPUs—we can ultimately start from GPUs and build the entire stack.

We’re also working with Filecoin; many of these partner data centers already have substantial CPU and storage capacity, so we can begin storing models as well. This would allow us to offer compute, model storage, and SDKs for app development, creating a fully decentralized AI ecosystem—almost like a decentralized app store.

What role does the token play in the network?

At a high level, it’s a utility token used to pay for compute on the network. That’s the simplest explanation. I’d also suggest checking out the site bc8.ai.

This is a proof-of-concept we built, a Stable Diffusion clone, and I believe it’s currently the only fully on-chain AI dApp. Users can make microtransactions via Solana, paying in crypto to generate images. Each transaction compensates the four key stakeholders involved in creating the image: the app creator, the model creator, us, and the GPU provider. Right now, we let people use it for free because we act as both the app owner and model owner, but this is more of a proof-of-concept than a real business.
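To make the four-way payout concrete, here is a minimal sketch of how one image payment might be divided. The interview does not give the actual split, so the percentages, the function name `split_payment`, and the stakeholder keys are all hypothetical; amounts are in lamports, Solana's smallest unit.

```python
# Hypothetical revenue shares in basis points (1 bps = 0.01%).
# The interview names the four stakeholders but NOT their shares.
SHARES_BPS = {
    "app_creator": 2500,
    "model_creator": 2500,
    "network": 1000,       # io.net itself ("us" in the interview)
    "gpu_provider": 4000,
}


def split_payment(amount_lamports: int, shares_bps: dict[str, int]) -> dict[str, int]:
    """Split one payment among stakeholders using integer arithmetic only."""
    if sum(shares_bps.values()) != 10_000:
        raise ValueError("shares must sum to 10,000 basis points")
    payouts = {
        name: amount_lamports * bps // 10_000
        for name, bps in shares_bps.items()
    }
    # Floor division can leave a few lamports unassigned;
    # hand them to the stakeholder with the largest share.
    remainder = amount_lamports - sum(payouts.values())
    largest = max(shares_bps, key=shares_bps.get)
    payouts[largest] += remainder
    return payouts


payouts = split_payment(1_000_003, SHARES_BPS)
```

Working in integer lamports rather than floating-point SOL means the four payouts always add up to exactly what the user paid.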

We plan to expand the network so others can host models and build fully decentralized AI applications. The IO token will power not only our models but any models created. Tokenomics are still being finalized and likely won’t be announced until around April.

Why Solana?

I think there are two reasons. First, we really love the community, and second, frankly, it’s the only blockchain capable of supporting us. If you look at our cost analysis, every time someone runs inference, there are about five transactions. You have the inference, then payments to all stakeholders. So when we run cost analyses with 60,000, 70,000, or 100,000 transactions, each must cost 1/100th of a cent or 1/10th of a cent. Given our transaction volume, Solana is literally our only option. Plus, they’ve been extremely supportive partners, and the community is strong. It was almost a no-brainer.
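The fee arithmetic behind that answer can be sketched in a few lines. The transaction counts, the two fee levels, and the figure of about five transactions per inference come from the interview; the function name `fee_total` is illustrative only, and the fees are treated as flat per-transaction costs, which is a simplifying assumption.

```python
from decimal import Decimal

TX_PER_INFERENCE = 5  # "about five transactions" per inference, per the interview


def fee_total(transactions: int, fee_usd: Decimal) -> Decimal:
    """Total fees in USD for a number of transactions at a flat per-transaction fee."""
    return transactions * fee_usd


# 1/100th of a cent and 1/10th of a cent, expressed in dollars.
for fee in (Decimal("0.0001"), Decimal("0.001")):
    per_inference = TX_PER_INFERENCE * fee
    for n in (60_000, 70_000, 100_000):
        print(f"${fee}/tx, {n:,} tx: ${fee_total(n, fee)} total "
              f"(${per_inference} in fees per inference)")
```

At a tenth of a cent per transaction, 100,000 transactions cost $100 in fees; at a hundredth of a cent, $10. Fees much higher than that would quickly exceed the value of the microtransactions themselves.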

What do you think your total addressable market is?

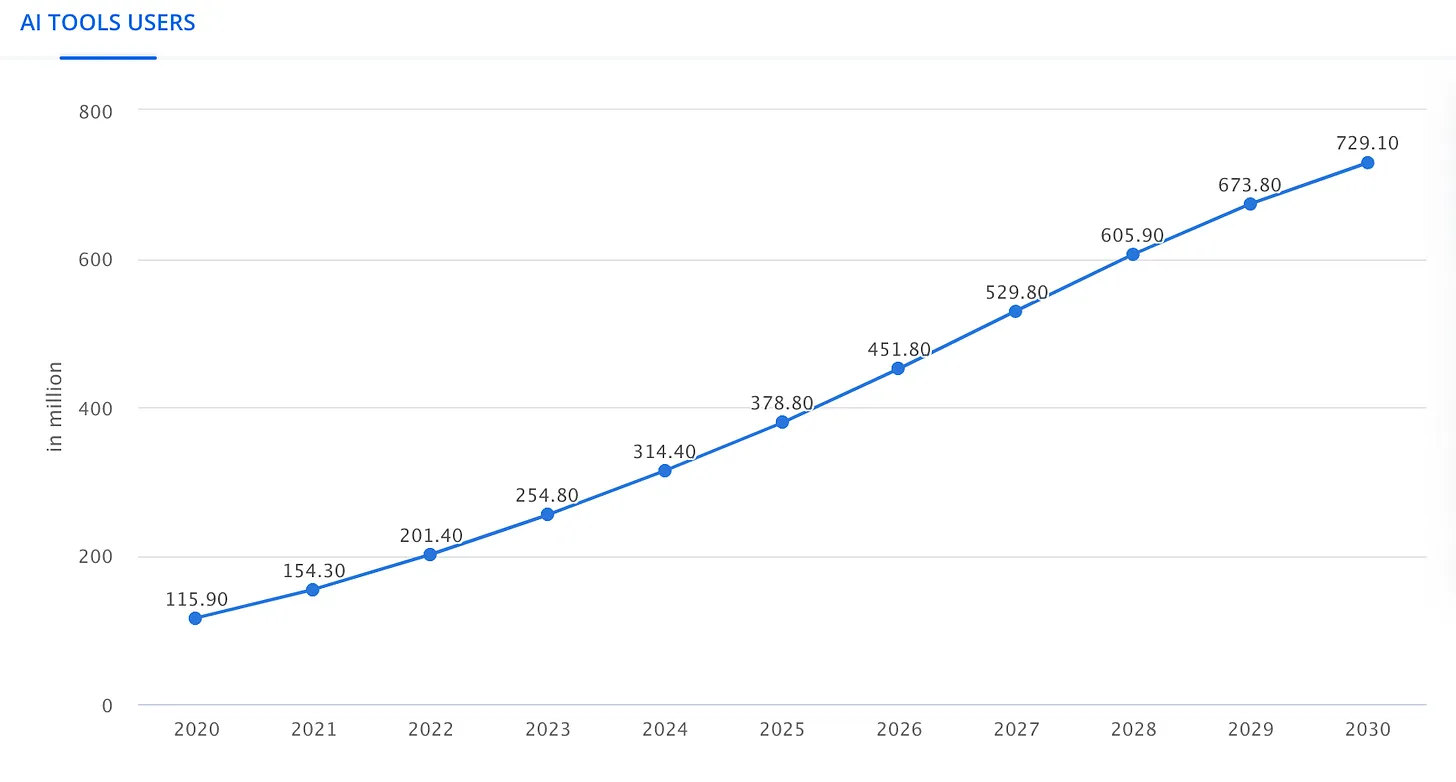

I think it’s unpredictable, you know. We throw around numbers like a trillion, but even that barely captures the full scope. For example, forecasts from firms like Gartner suggest that by 2030, model training alone could account for 1% of GDP, around $300 billion. That statistic is relatively easy to find. But when you consider Nvidia’s CEO stating that only 10% of the AI market is spent on training, your perspective shifts. If training alone is a $300 billion market and represents only 10% of spending, then the entire AI GPU market, compute services alone, could be a $3 trillion market. Then there’s Cathie Wood’s prediction that the overall AI market could reach $80 trillion. This shows the potential scale is almost beyond comprehension.
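The step from $300 billion to $3 trillion is a single division, taking the two quoted figures at face value: training is worth about $300 billion, and training is about 10% of AI compute spending. The variable names below are illustrative, and the inputs are the interviewee's estimates rather than verified data.

```python
from fractions import Fraction

training_market_usd = 300_000_000_000  # ~1% of GDP by 2030, as quoted in the interview
training_share = Fraction(1, 10)       # training as a share of AI compute spend, as quoted

# If training is 10% of the total, the total is training / 0.10.
total_compute_market_usd = training_market_usd / training_share

# Whatever is not training (mostly inference) makes up the rest.
non_training_usd = total_compute_market_usd - training_market_usd

print(f"Total AI compute market: ${int(total_compute_market_usd):,}")
print(f"Non-training share:      ${int(non_training_usd):,}")
```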

What do you see as the biggest obstacle to io.net’s growth?

Building a marketplace is hard—easier in crypto perhaps, but still challenging. For example, most of our clients ask for A100s, a top-tier enterprise-grade GPU costing around $30,000 each, and they’re in short supply. They’re extremely hard to source right now. Our sales team is working hard to secure these GPUs—it’s a major challenge due to scarcity and high cost.

We have plenty of 3090s, which are more consumer-grade, and demand isn’t as high. This means we have to adjust our strategy and find clients specifically looking for these types of GPUs. But this kind of imbalance exists in any market, and we’re addressing it by hiring the right people and implementing effective marketing strategies.

Strategically, as I mentioned, we’re currently the only platform capable of building decentralized clusters across different geographies. That’s our current moat. In the short term, we have a significant competitive advantage, and I believe this extends into the mid-term as well. For collaborators like Render, if they can leverage our network and retain 95% of the value, it makes no sense for them to try to replicate our model.

It took our team about two years to develop this capability. So it’s not something easily replicated. Still, eventually someone else might figure it out. By then, we hope to have built enough of a moat. We’re already the largest decentralized GPU network by an order of magnitude, with 25,000 GPUs, while Akash has only 300 and Render just a few thousand.

Our goal is to reach 100,000 GPUs and 500 customers, creating a network effect similar to Facebook. Then the question becomes, “Where else can you go?” Our aim is to become the go-to platform for anyone needing GPU compute, just as Uber dominates ride-sharing and Airbnb dominates accommodations. The key is moving fast to secure our position and become synonymous with decentralized GPU computing.

How to get the IO airdrop?

There are two main ways to qualify for the IO airdrop:

-

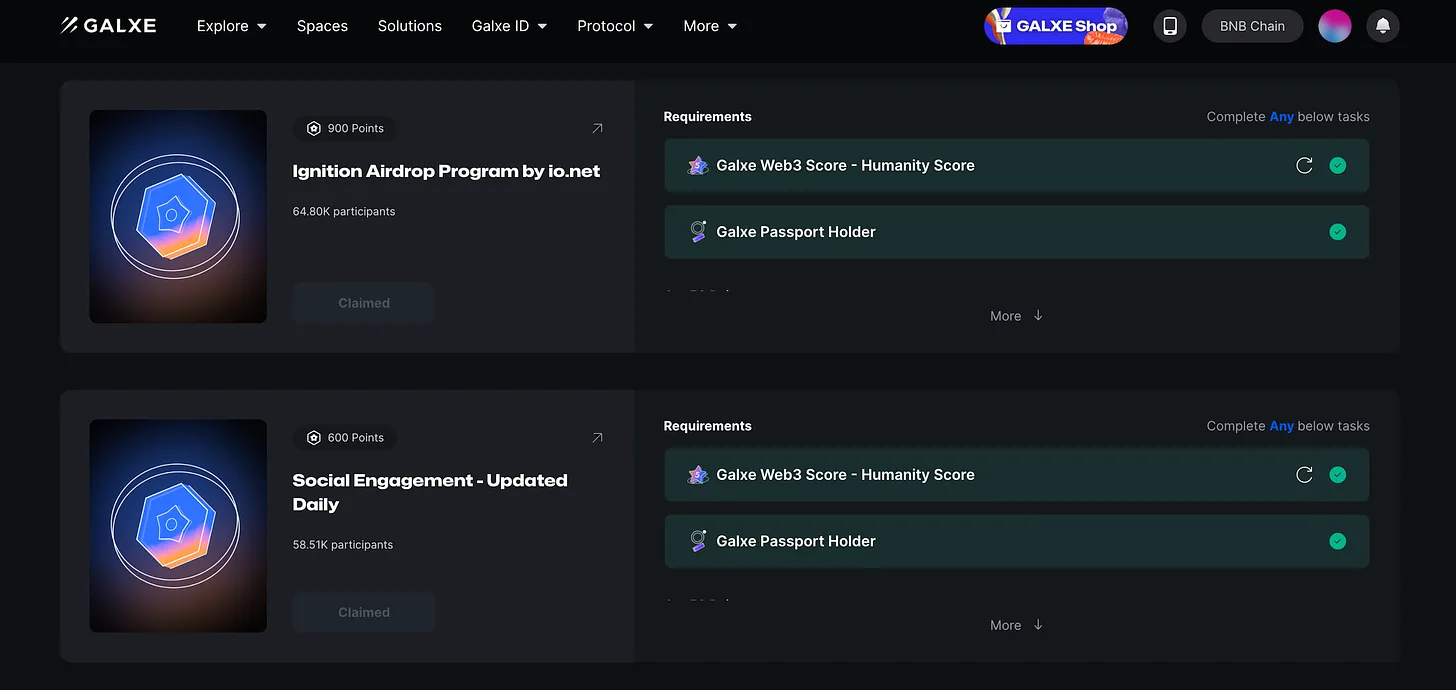

They’re running a Galxe campaign called “Ignition”. You simply complete tasks. It requires proving you’re human by minting a Galxe passport, which is good because it can’t be faked.

-

Provide your GPU/CPU to io.net. Just follow the instructions outlined in the documentation. Even if you’re non-technical, it takes about 10–15 minutes and is quite simple.
