Igniting the Spark of Blockchain: LLMs Unlock New Possibilities for Blockchain Interaction

Blockchains and zero-knowledge proofs cannot supply the compute needed to train or run complex models, so applying LLMs to blockchain use cases is the more practical route.

Author: Yiping, IOSG Ventures

Welcome to the second article in our research series on large language models (LLMs) and blockchain. In the previous article, we discussed from a technical perspective how LLMs can be integrated with blockchain technology, why the LLM framework is well-suited for the blockchain domain, and outlined potential pathways for future convergence between LLMs and blockchains.

In this article, we take a more practical approach by diving deep into eight specific application areas that we believe will significantly transform the blockchain user experience. Even more exciting, we predict these breakthrough applications will become reality within the next year.

Join us as we uncover the future of blockchain interaction. Below is a brief overview of the eight applications we will explore:

1. Integrating native AI/LLM capabilities into blockchains

2. Using LLMs to analyze transaction records

3. Enhancing security with LLMs

4. Leveraging LLMs for code generation

5. Using LLMs to read and interpret code

6. Assisting communities with LLMs

7. Employing LLMs to track market trends

8. Applying LLMs to analyze projects

Integrating Native AI/LLM Capabilities into Blockchains

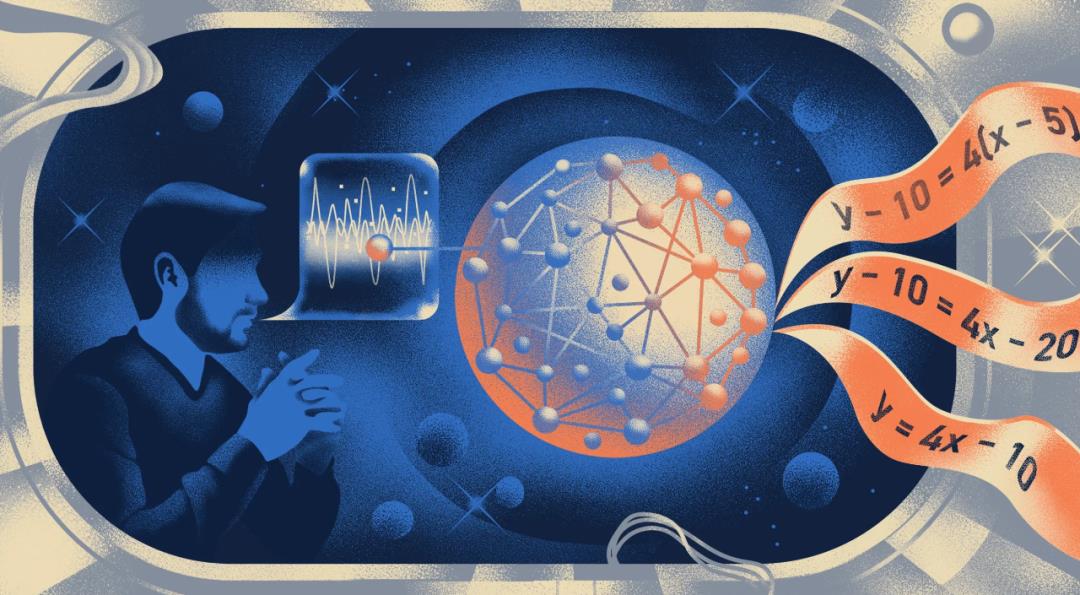

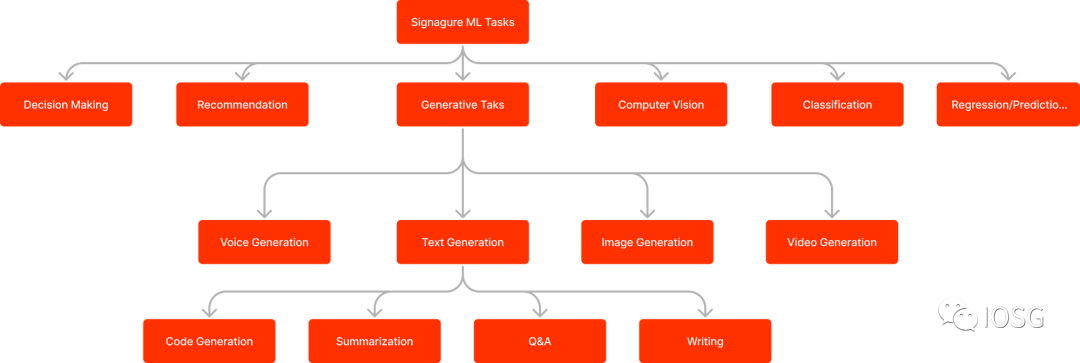

Blockchains will natively embed artificial intelligence functions and models. Developers will be able to access AI functions and run typical ML tasks such as classification, regression, text completion, and AIGC directly on-chain. These AI capabilities can be invoked via smart contracts.

With these built-in capabilities, developers can imbue their smart contracts with intelligence and autonomy. Classification, regression, and AIGC are classic AI tasks. Let’s examine how these functions can be applied in the blockchain space, along with some example projects.

Classification

Classification can determine whether an address belongs to a bot or a human. This capability could revolutionize current NFT sales dynamics. It can also enhance the security of DeFi ecosystems—DeFi smart contracts could filter out malicious transactions and prevent fund losses.

Regression

Regression analysis enables forecasting and can be applied to fund and asset management. Numer.ai already uses AI to assist in fund management. Numer provides high-quality stock market data, which data scientists use to build machine learning models for predicting market movements.

AIGC

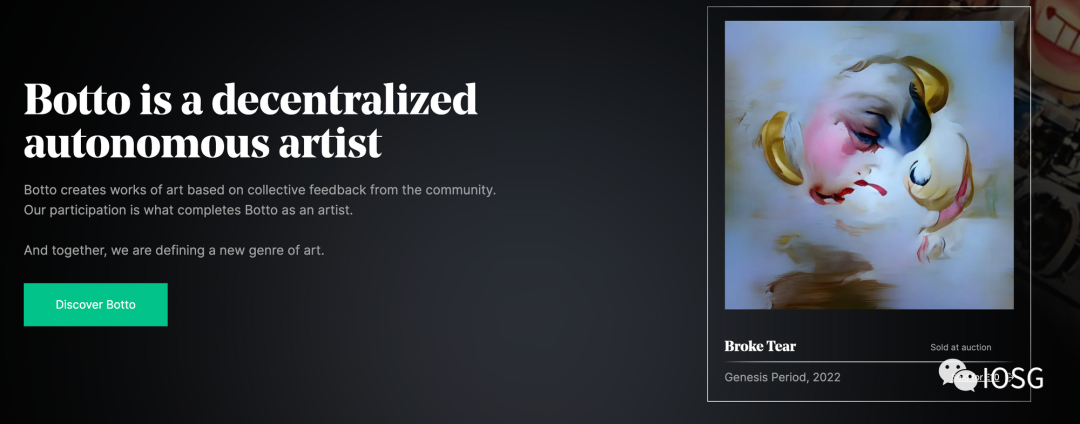

Many NFT projects aim to build expansive IP universes. However, their limited content often fails to sustain such ambitions. If AIGC could be used on-chain to generate vast amounts of content in a consistent brand style at relatively low cost, it would dramatically expand the scale of these IP universes. Models could produce text, illustrations, music, voice, and even video. Community members could collaboratively fine-tune the model to align with their vision, fostering greater engagement through participation in the refinement process.

Botto uses an AIGC model to generate artistic content. The community votes on their favorite images, collectively fine-tuning the AIGC model over time.

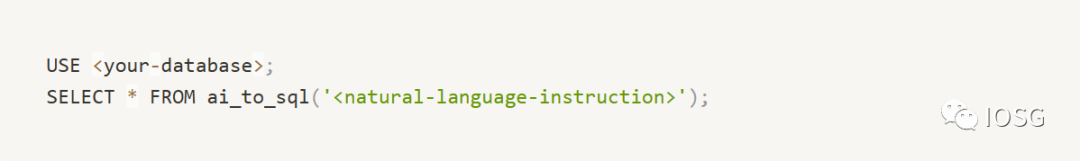

If we consider blockchains as databases, we see parallels in projects like Databend, which integrates native AI capabilities into its database system. It offers the following features:

- ai_embedding_vector: Generate embedding vectors for text documents.

- ai_text_completion: Generate text completions based on given prompts.

- cosine_distance: Compute cosine distance between two embedding vectors.

- ai_to_sql: Convert natural language instructions into SQL queries.
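To make the cosine_distance feature concrete, here is a minimal Python sketch of the usual definition: one minus the cosine similarity of two vectors. It illustrates the math only. It is not Databend's implementation, and the function name merely mirrors the feature name above.

```python
import math


def cosine_distance(a, b):
    """Return 1 - cosine similarity for two equal-length numeric vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # The angle between a zero vector and anything else is undefined.
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1.0 - dot / (norm_a * norm_b)
```

Vectors pointing in the same direction have a distance close to 0 and orthogonal vectors have a distance of 1, which is why this measure is commonly used to compare text embeddings.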

Providing AI Capabilities to Blockchain

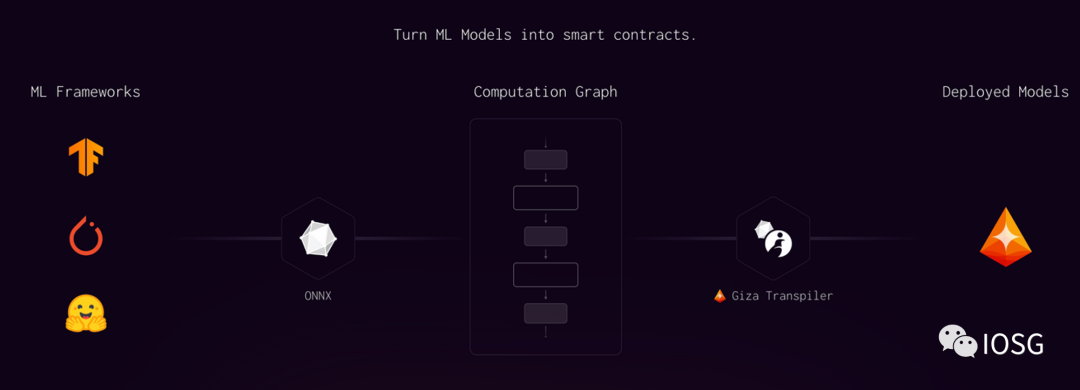

We know several projects are actively working to provide AI capabilities to blockchain.

Giza is advancing work in ZKML. It generates inference proofs off-chain and verifies them on-chain. Currently, it supports EVM-compatible blockchains and StarkNet. Giza recently announced a partnership with Yearn.finance, where Yearn will leverage Giza’s AI capabilities to enhance its risk assessment systems.

Modulus Labs is also pursuing similar goals. They are intensively improving proof systems to create high-performance circuits for AI. They have demonstrated applications such as a chess-playing AI and an Ethereum price prediction AI. Their latest demo project, zkMon, is the world’s first generative adversarial network (GAN)-based NFT collection secured with zero-knowledge proofs.

Using LLMs to Analyze Transaction Records

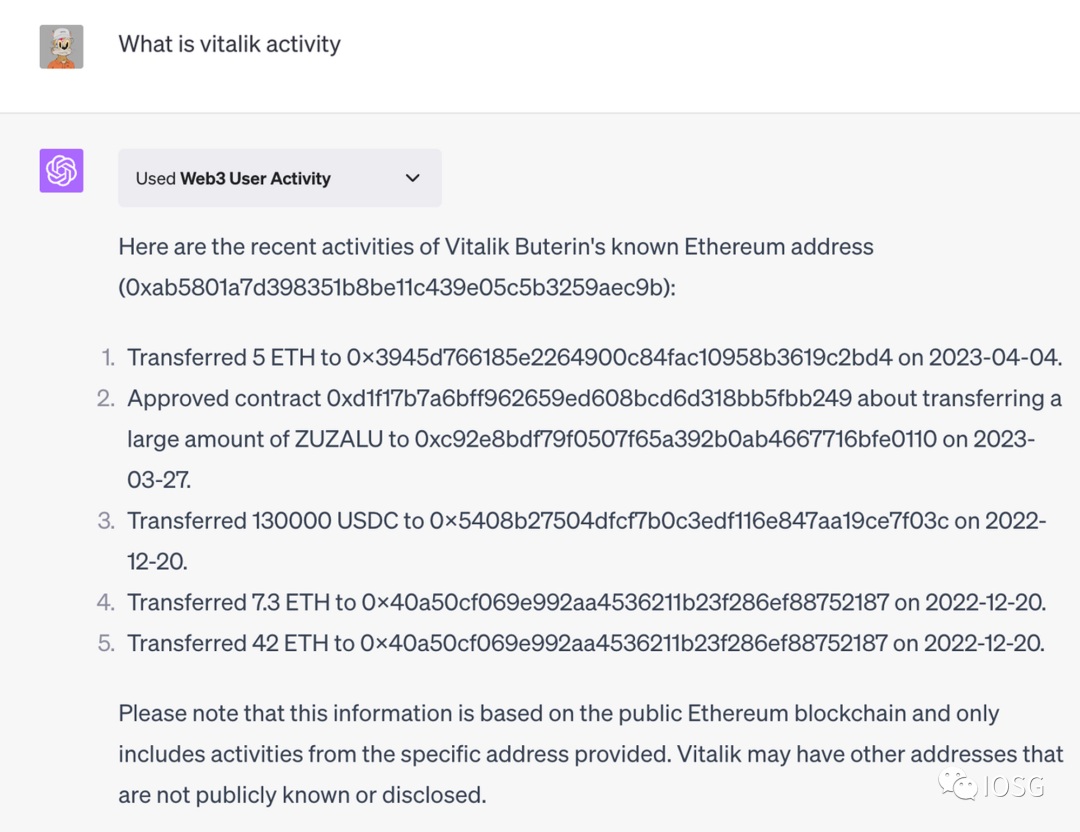

Transaction record analysis is typically performed by specialized tools like Debank. Manual analysis is difficult—it involves data collection, cleaning, and interpretation, requiring coding skills. Thanks to LLMs’ ability to analyze and visualize data, we now have a new approach. With LLMs, we can analyze on-chain data according to custom requirements, assessing metrics such as win rate, performance ratios, or any other insights we seek.
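As an illustration of the kind of metric an LLM-driven analysis would need to compute, the sketch below calculates a win rate from already-cleaned trade records. The `ClosedTrade` structure and the definition used here (the share of closed positions sold for more than their cost) are assumptions made for this example; real on-chain data would first need the collection and cleaning steps described above.

```python
from dataclasses import dataclass


@dataclass
class ClosedTrade:
    """Hypothetical cleaned record of one fully closed position."""
    token: str
    cost_usd: float
    proceeds_usd: float


def win_rate(trades):
    """Fraction of closed trades whose proceeds exceeded their cost."""
    if not trades:
        return 0.0
    wins = sum(1 for t in trades if t.proceeds_usd > t.cost_usd)
    return wins / len(trades)
```

For example, a wallet with four closed positions, two of them profitable, has a win rate of 0.5 under this definition.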

RSS3 has developed a ChatGPT plugin called Web3 User Activity to move in this direction. Users can input wallet addresses, ENS names, or Lens profiles to query on-chain activities. The plugin outputs transaction information in a human-readable format. Unfortunately, it cannot yet handle complex queries—such as counting Azuki holders or identifying the most popular smart contracts. Users should also note that the addresses and labels provided by the plugin may not always be accurate.

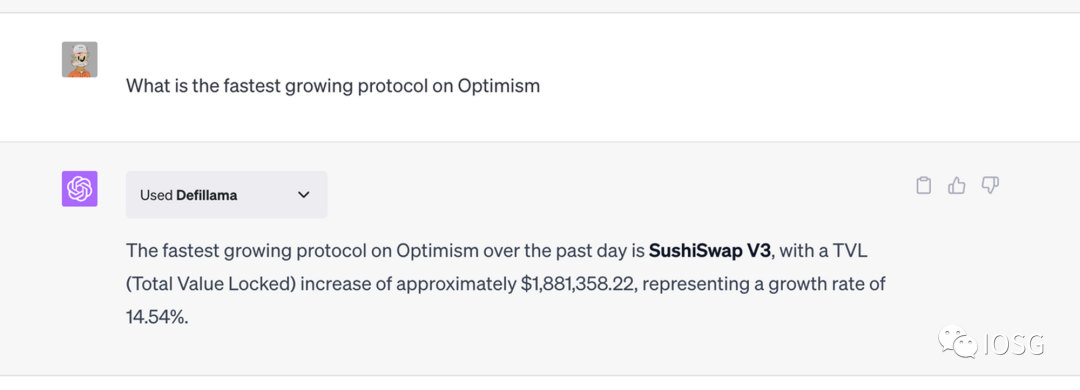

DeFiLlama has also released a ChatGPT plugin. Users can query any data available on DeFiLlama using natural language. It supports simple filtering and sorting operations.

Dune is integrating GPT into its platform to deliver the following functionalities:

- Query explanation: Use LLMs to explain SQL queries

- Query translation: Translate other SQL dialects into DuneSQL using LLMs

- Natural language queries: Enable users to write queries using natural language

- Search enhancement: Improve search results using LLMs

- Knowledge base assistant: A chatbot that allows users to interact with documentation

Enhancing Security with LLMs

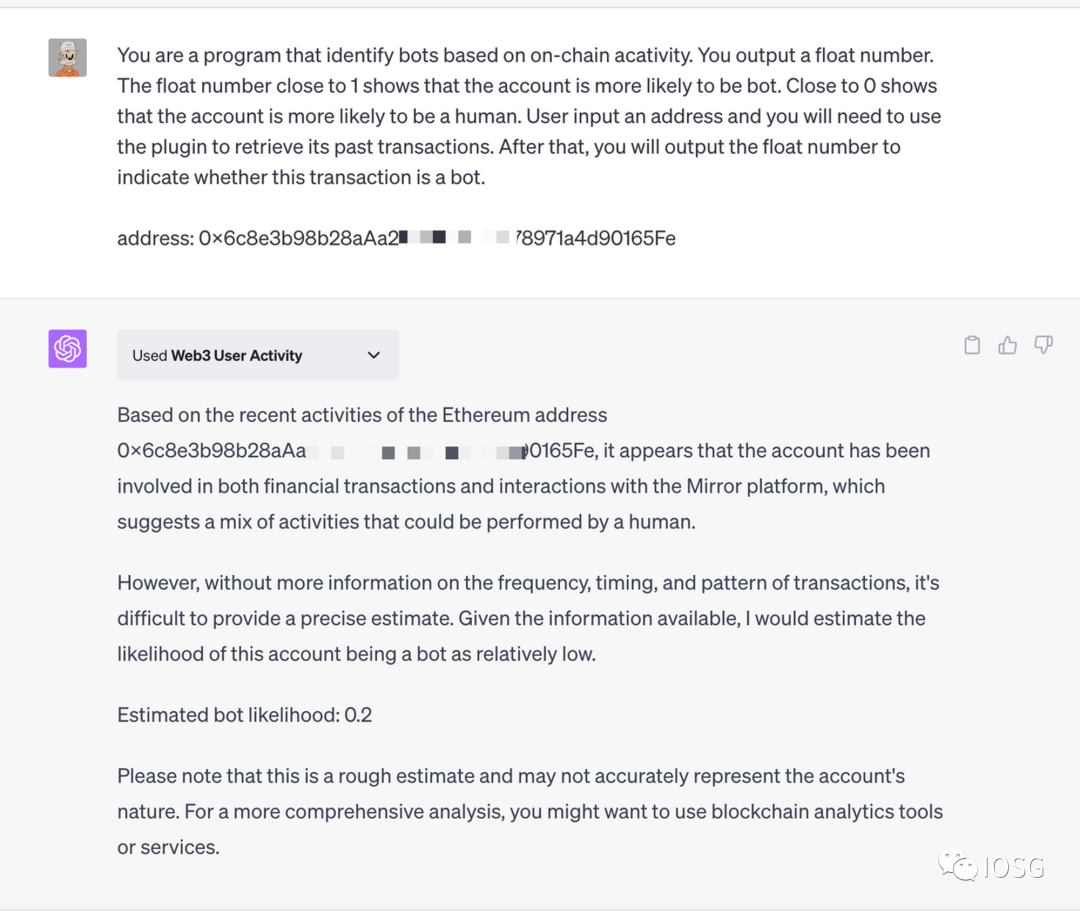

Due to their logical reasoning abilities, LLMs can be used to filter out malicious transactions, acting as firewalls for smart contracts. Here’s a concrete example showing how to prevent bot activity:

After receiving an address, an LLM can retrieve all transaction data via a third-party plugin, analyze the transaction history, and estimate the likelihood that the address belongs to a bot. This functionality could be embedded into dApps that discourage bots, such as NFT mints.

Below is a simple demonstration using ChatGPT. ChatGPT retrieves the account’s transaction history via RSS3’s Web3 User Activity plugin, analyzes the data, and outputs the probability that the account is a bot.

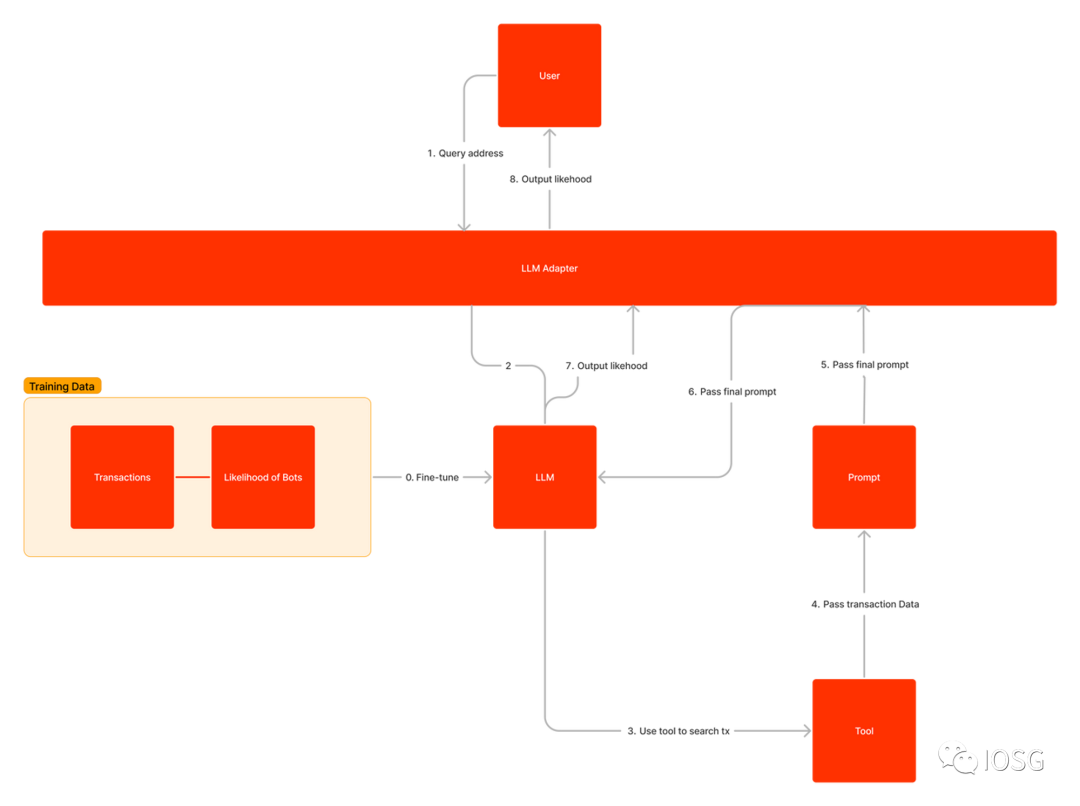

By feeding more transaction data and fine-tuning the LLM on bot-related datasets, we can achieve higher accuracy. Below is a sample workflow for such an application. We can further add caching and database layers to improve response speed and reduce costs.
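The caching layer mentioned above could look roughly like the following sketch. It wraps any scoring function, so the expensive LLM call runs at most once per address within a time window. `CachedBotScorer` and `score_fn` are hypothetical names; `score_fn` stands in for whatever code calls the LLM and returns a bot probability.

```python
import time


class CachedBotScorer:
    """Cache bot-likelihood scores per address for a fixed time-to-live."""

    def __init__(self, score_fn, ttl_seconds=3600.0, clock=time.monotonic):
        self._score_fn = score_fn  # assumed: address -> probability in [0, 1]
        self._ttl = ttl_seconds
        self._clock = clock
        self._cache = {}  # normalized address -> (expires_at, score)

    def score(self, address):
        # Hex addresses differ only by checksum casing, so normalize the key.
        key = address.lower()
        now = self._clock()
        hit = self._cache.get(key)
        if hit is not None and hit[0] > now:
            return hit[1]
        value = self._score_fn(key)
        self._cache[key] = (now + self._ttl, value)
        return value
```

A dApp could call `score` before allowing a mint and only pay for an LLM request when the address has not been seen recently. A persistent database could replace the in-memory dictionary without changing the interface.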

Leveraging LLMs for Code Generation

LLMs are widely used in development to help engineers write code faster and better. Based on developer instructions, LLMs can generate code. Currently, developers still need to provide detailed prompts—LLMs struggle to autonomously generate full project codebases.

Popular code LLMs include StarCoder, StarCoder+, Code T5, LTM, DIDACT, WizardCoder, FalCoder-7B, and MPT-30B.

All these models can assist in writing smart contracts, though they may not have been specifically trained on smart contract data. There remains room for improvement.

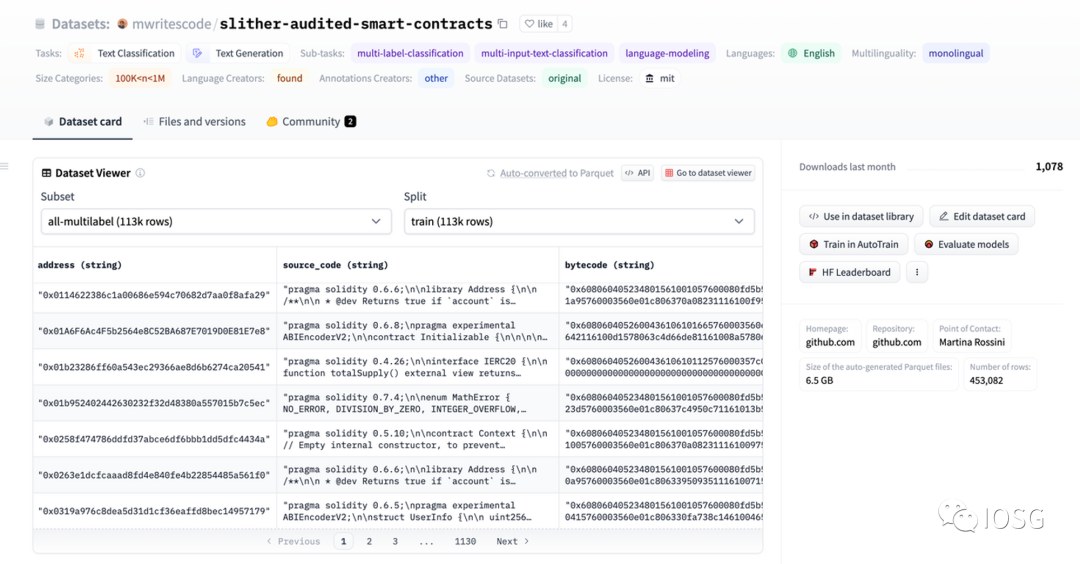

Currently, only one smart-contract-related dataset exists on HuggingFace: the "More Complete Audited Smart Contracts" dataset, containing 113,000 smart contracts. It can be used for tasks like text classification, text generation, and vulnerability detection.

Compared with tools that merely assist development, fully automated code generation holds even greater promise. Automated code generation is particularly suitable for smart contracts because they are relatively short and simple. LLMs can help developers auto-generate code in several ways within the blockchain domain.

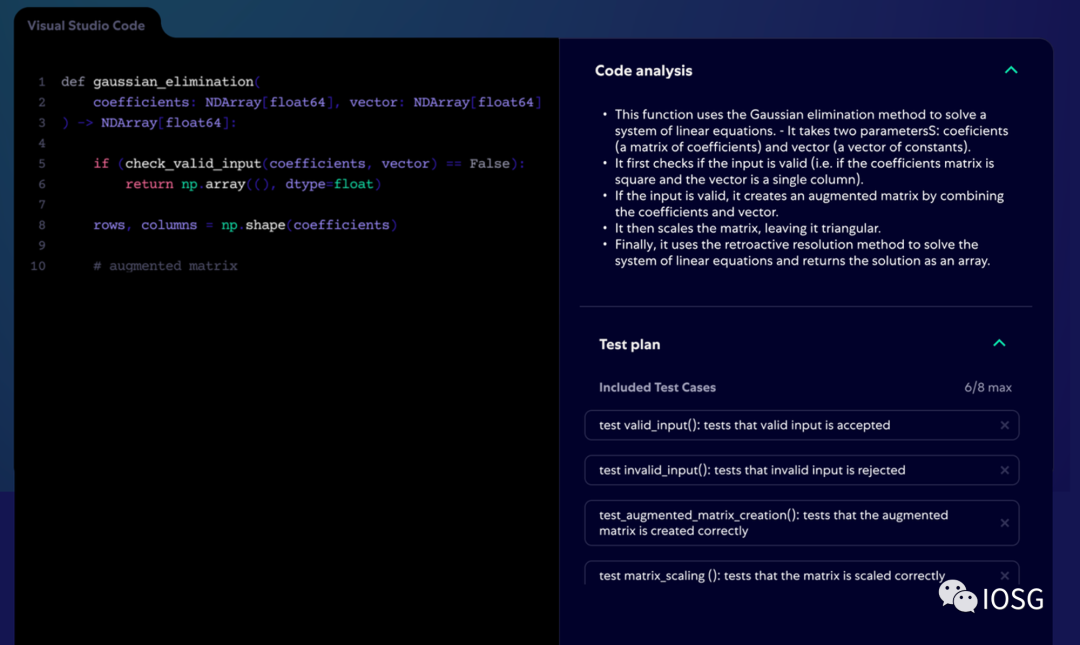

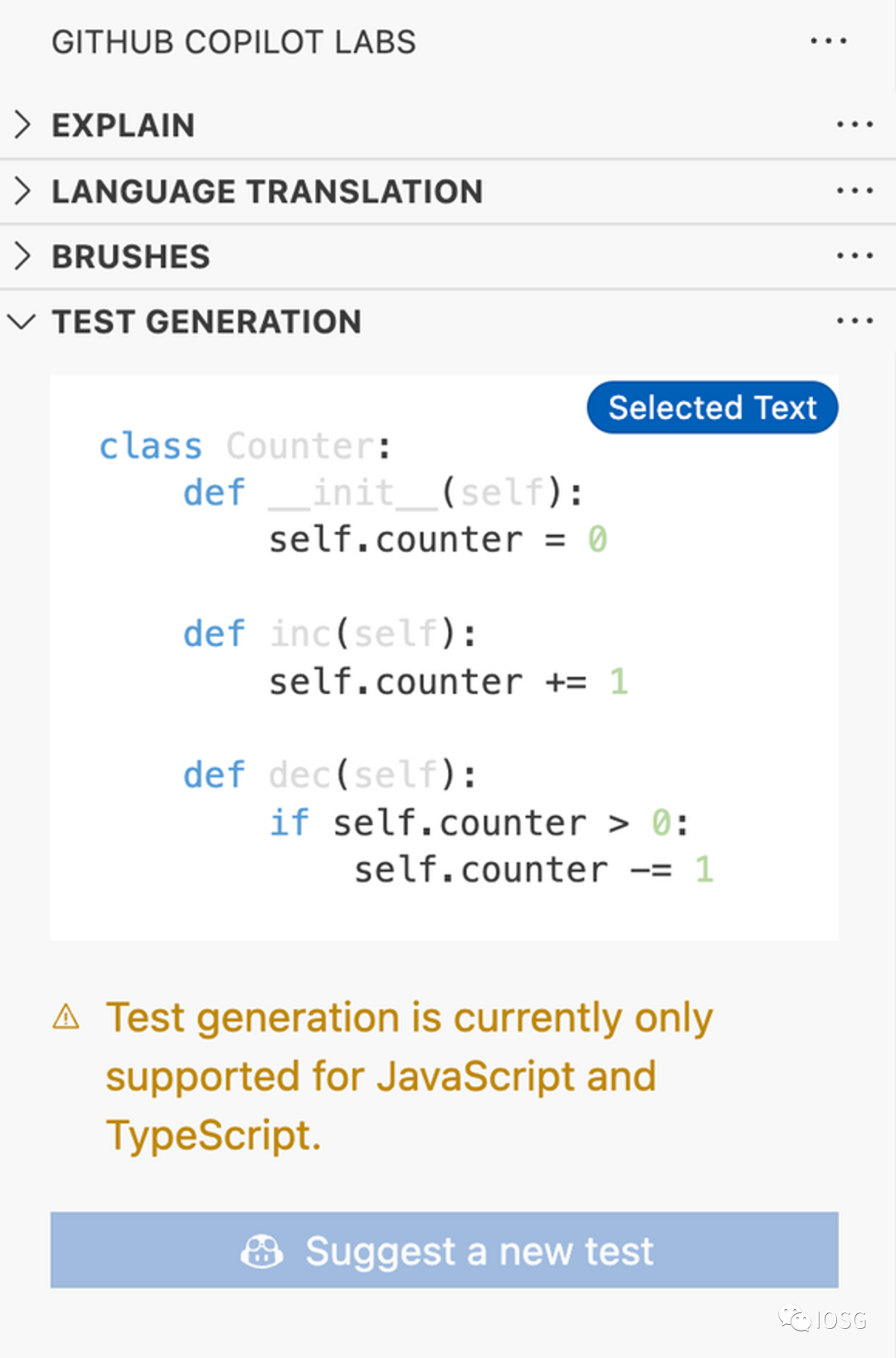

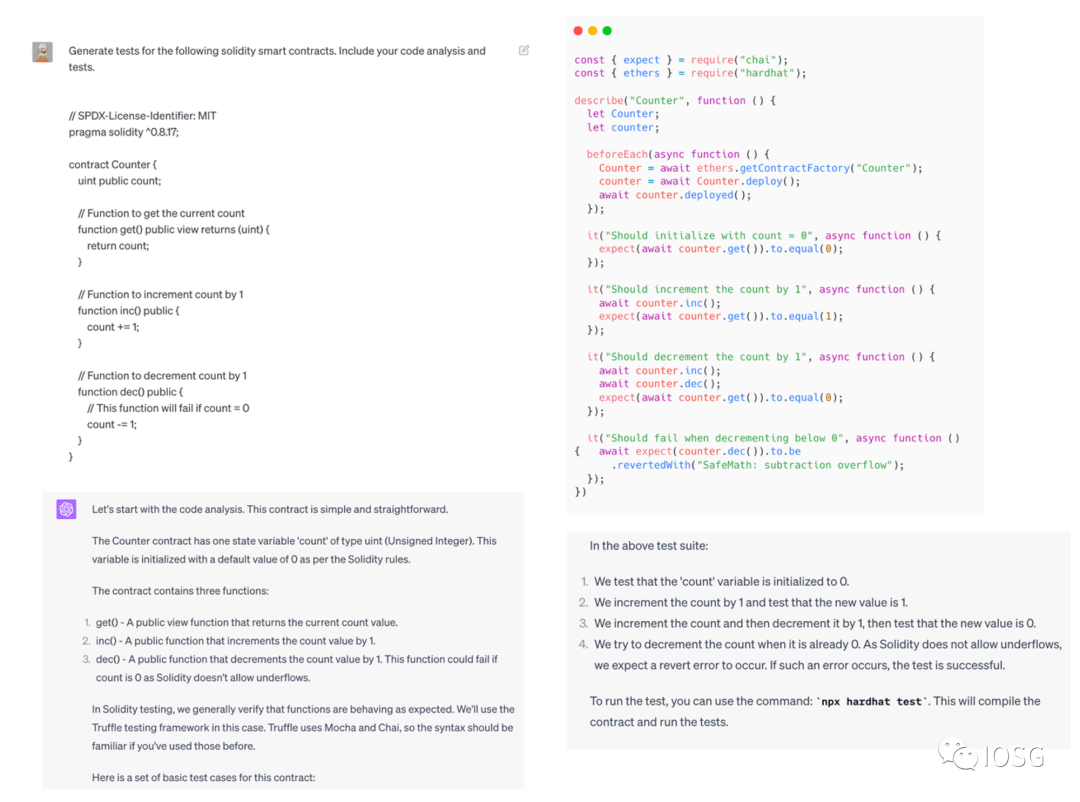

Testing

First, LLMs can generate tests for well-written smart contracts. For instance, Codium can automatically generate tests for existing projects. Codium currently supports JS and TS. It first understands the codebase, analyzing each function, docstring, and comment. Then, it writes back code analysis as comments and outputs a test plan. Users can select preferred test cases, and Codium generates the corresponding test code.

Other assistant tools also support generating tests for selected functions.

We can replicate similar functionality on GPT-4 using analogous steps.

We first request code analysis because we want the LLM to spend more computational effort on this task. LLMs do not inherently understand task difficulty—they allocate equal computation per token, which may lead to inaccurate results on complex tasks. Given this limitation, explicitly requesting analysis forces the LLM to dedicate more tokens/time to thinking through the problem, resulting in higher-quality outputs. This method is known as “chain-of-thought” prompting.
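The two-step approach can be sketched as a pair of prompt builders: the first asks only for analysis, and the second asks for tests with that analysis placed back in the context. The function names and prompt wording are illustrative assumptions, not the prompts used in our experiment.

```python
def build_analysis_prompt(contract_source: str) -> str:
    """First call: ask only for reasoning about the code, not for tests."""
    return (
        "You are reviewing a smart contract.\n"
        "For each function, describe its purpose, inputs, state changes, "
        "and the edge cases that could make it fail.\n"
        "Do not write any test code yet.\n\n"
        "Contract source:\n" + contract_source
    )


def build_test_prompt(contract_source: str, analysis: str) -> str:
    """Second call: ask for tests, with the earlier analysis in the context."""
    return (
        "Using the analysis below, write unit tests that cover every listed "
        "edge case.\n\n"
        "Analysis:\n" + analysis + "\n\n"
        "Contract source:\n" + contract_source
    )
```

The model's answer to the first prompt is passed as `analysis` into the second, so the tokens spent on reasoning are available when the tests are written.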

To apply this to longer smart contracts, we either need an LLM with larger context capacity or engineering solutions to preserve memory across segments.
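One simple engineering workaround is to split a long contract into overlapping segments, so that each request carries a little of the preceding context. The sketch below uses line counts as a rough stand-in for tokens, which is an assumption. A real system would count tokens with the model's tokenizer and might also pass along a running summary.

```python
def split_with_overlap(lines, max_lines, overlap):
    """Split a list of source lines into windows that share `overlap` lines."""
    if max_lines <= 0:
        raise ValueError("max_lines must be positive")
    if not 0 <= overlap < max_lines:
        raise ValueError("overlap must be in [0, max_lines)")
    chunks = []
    step = max_lines - overlap
    start = 0
    while start < len(lines):
        chunks.append(lines[start:start + max_lines])
        if start + max_lines >= len(lines):
            break  # the last window already reaches the end of the file
        start += step
    return chunks
```

Each segment can then be analyzed separately, with the overlapping lines giving the model some continuity between requests.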

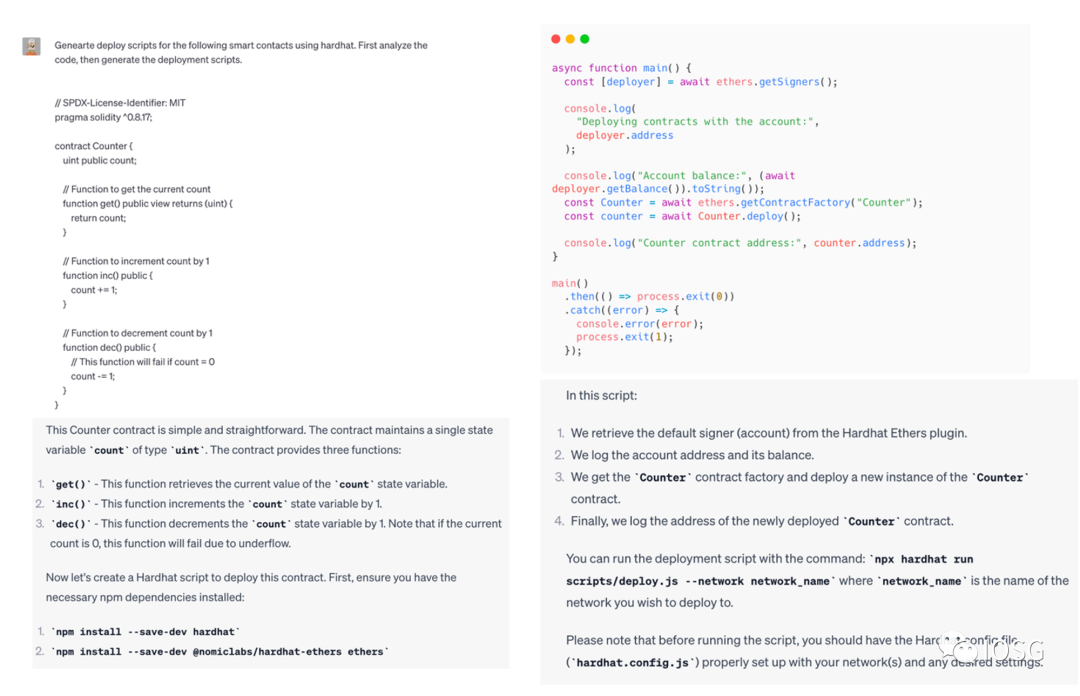

Generating auxiliary scripts

Second, we can use LLMs to automatically generate auxiliary scripts, such as deployment scripts.

Deployment scripts reduce potential errors during manual deployment. The concept is very similar to auto-generating tests.

Automatic forking

During bull markets, many forked projects emerge, where teams make minor changes to original codebases. This presents a strong use case for LLMs: LLMs can help developers automatically modify code according to team needs. Often, only specific sections require changes—something relatively easy for LLMs to handle.

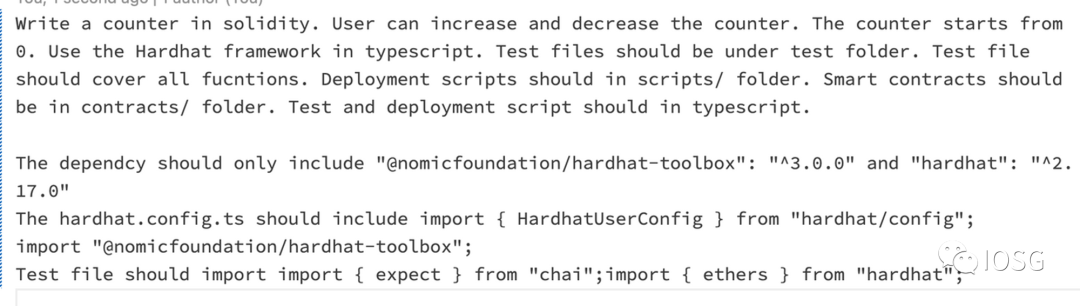

Auto-generating code

Taking it further: can LLMs auto-generate entire smart contracts based on developer requirements? Compared to complex software written in JS, Rust, or Python, smart contracts are relatively short and simple, with few external dependencies. Therefore, **it is relatively easy for LLMs to learn how to write smart contracts**.

We’ve already seen progress in auto-generated code. GPT-engineer is one pioneer. It clarifies user requirements, resolves ambiguities, then begins coding. The output includes a script to run the entire project. GPT-engineer can automatically bootstrap projects for developers.

After receiving user requirements, GPT-engineer analyzes them and asks clarifying questions. Once all necessary information is gathered, it first outputs a program design—including core classes, functions, and methods needed. Then, it generates code for each file.

Using similar prompts, we can generate a counter smart contract.

The smart contract can be compiled and functions as expected.

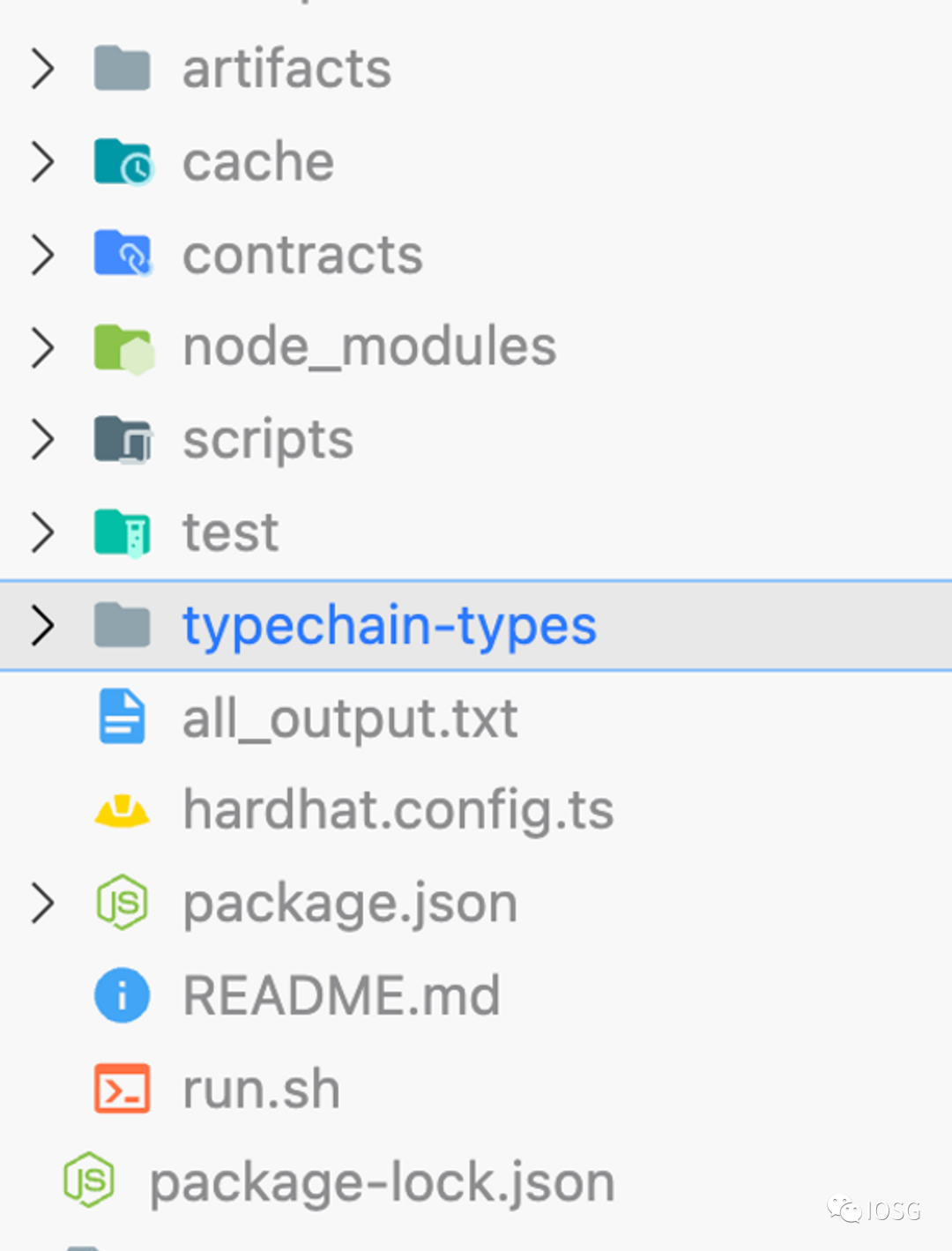

Since GPT-engineer was initially designed for Python, there are issues when generating Hardhat-related code. It lacks awareness of Hardhat’s latest version and sometimes produces outdated test and deployment scripts.

If our code contains bugs, we can feed the codebase and console error logs back to the LLM. The LLM can iteratively fix the code until it runs successfully. Projects like **[flo](https://flocli.vercel.app/)** are moving in this direction, although flo currently only supports JS.
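The feedback loop described above can be sketched as follows. This is a generic illustration, not how flo works internally. `fix_fn` is a hypothetical callable that sends the current source and error log to an LLM and returns revised source, and `run_python` is a simple runner for Python code used here only as an example.

```python
import subprocess
import sys


def run_python(source):
    """Run source in a fresh interpreter; return (succeeded, stderr_text)."""
    result = subprocess.run(
        [sys.executable, "-c", source],
        capture_output=True,
        text=True,
        timeout=30,
    )
    return result.returncode == 0, result.stderr


def repair_until_passing(source, fix_fn, run_fn=run_python, max_rounds=3):
    """Feed error logs back to fix_fn until the code runs or rounds run out."""
    for _ in range(max_rounds):
        ok, log = run_fn(source)
        if ok:
            return source
        # fix_fn is assumed to call an LLM with the code and the error log.
        source = fix_fn(source, log)
    ok, _ = run_fn(source)
    if ok:
        return source
    raise RuntimeError("code still failing after the allowed repair rounds")
```

Capping the number of rounds keeps costs bounded when the model cannot converge. For smart contracts, `run_fn` would instead compile the contract and run its test suite.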

To improve the accuracy of smart contract generation, we can refine GPT-engineer with new prompting techniques. Adopting test-driven development—requiring the LLM to ensure generated programs pass certain tests—can better constrain outputs.

Using LLMs to Read and Interpret Code

Given LLMs’ strong code comprehension, we can use them to generate developer documentation. LLMs can also track code changes to keep documentation up-to-date. We previously discussed this approach at the end of our research report *Exploring Developer Experience on ZKRUs: An In-Depth Analysis*.

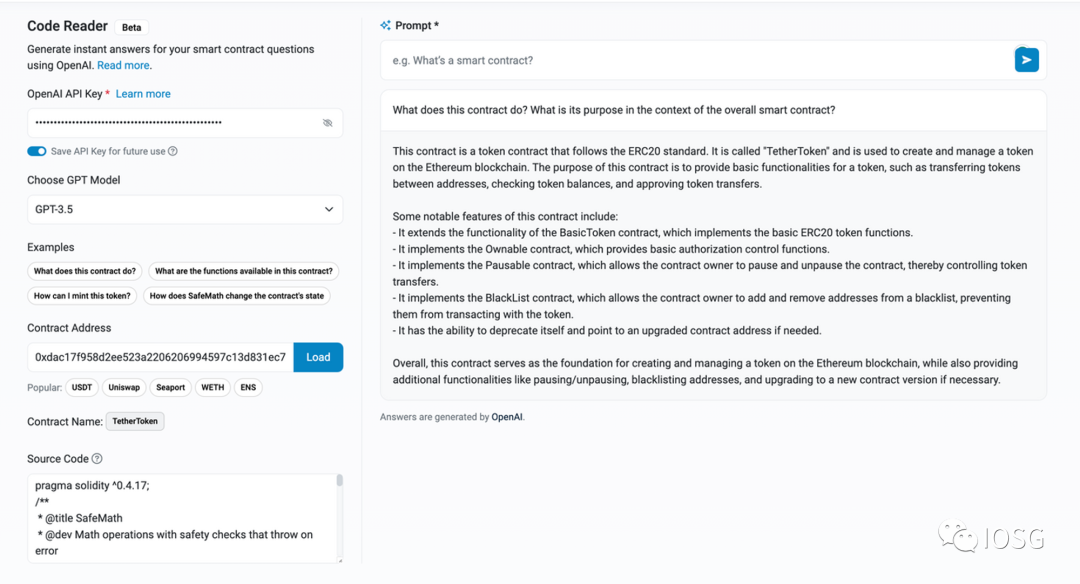

Reading documentation is the traditional method; conversing with code is a new paradigm. Users can ask any question about the code, and the LLM responds. LLMs can explain code to developers, helping them quickly understand on-chain smart contracts. They can also enable non-coders to grasp smart contract logic.

This trend is already visible in Web2. Many code assistant tools offer code explanation features.

Etherscan has showcased a new feature allowing users to interact with code using LLM capabilities.

How might auditing change when LLMs can understand code? In the experiment from the paper *Do You Still Need a Manual Smart Contract Audit?*, LLMs achieved a 40% hit rate in identifying vulnerabilities—outperforming random baselines—but also had high false positive rates. The authors emphasized that proper prompting is crucial.

Beyond prompting, several factors currently limit adoption:

- Current LLMs aren’t specifically trained for this purpose. Training data may lack smart contract codebases and corresponding audit reports.

- The most critical vulnerabilities often stem from logical flaws across multiple functions. LLMs are constrained by token limits and struggle with long-context, logic-intensive problems.

These challenges are solvable. Major audit firms possess thousands of audit reports that could be used to fine-tune LLMs. LLMs with larger token limits are emerging—Claude supports 100,000 tokens, and the newly released LTM-1 boasts an impressive 5 million token limit. Addressing both fronts could enable LLMs to identify vulnerabilities more effectively. LLMs could assist auditors and accelerate the auditing process. This evolution may unfold gradually, following this trajectory:

- Help auditors organize language and standardize report formatting, ensuring consistency across teams that may otherwise use different terminology.

- Assist auditors in identifying and validating potential vulnerabilities.

- Automatically generate draft audit reports.

Assisting Communities with LLMs

Governance is central to communities. Members have the right to vote on proposals they support—these decisions shape the product’s future.

For significant proposals, extensive background information and community discussions exist. It's challenging for members to fully grasp all context before voting. LLMs can help community members quickly understand the implications of their choices and make informed voting decisions.

Q&A bots are another promising application. We’ve seen bots based on project documentation. We can go further by building comprehensive knowledge bases. We can incorporate diverse media and sources—presentations, podcasts, GitHub, Discord chats, and Twitter Spaces. These Q&A bots won’t just live in documentation search bars—they can offer real-time support in Discord or spread project visions and answer questions on Twitter.

AwesomeQA is advancing in this direction with three core features:

- Integrate ChatGPT to answer community questions

- Gain data-driven insights from community messages, such as FAQ analysis

- Identify important messages, such as unresolved issues

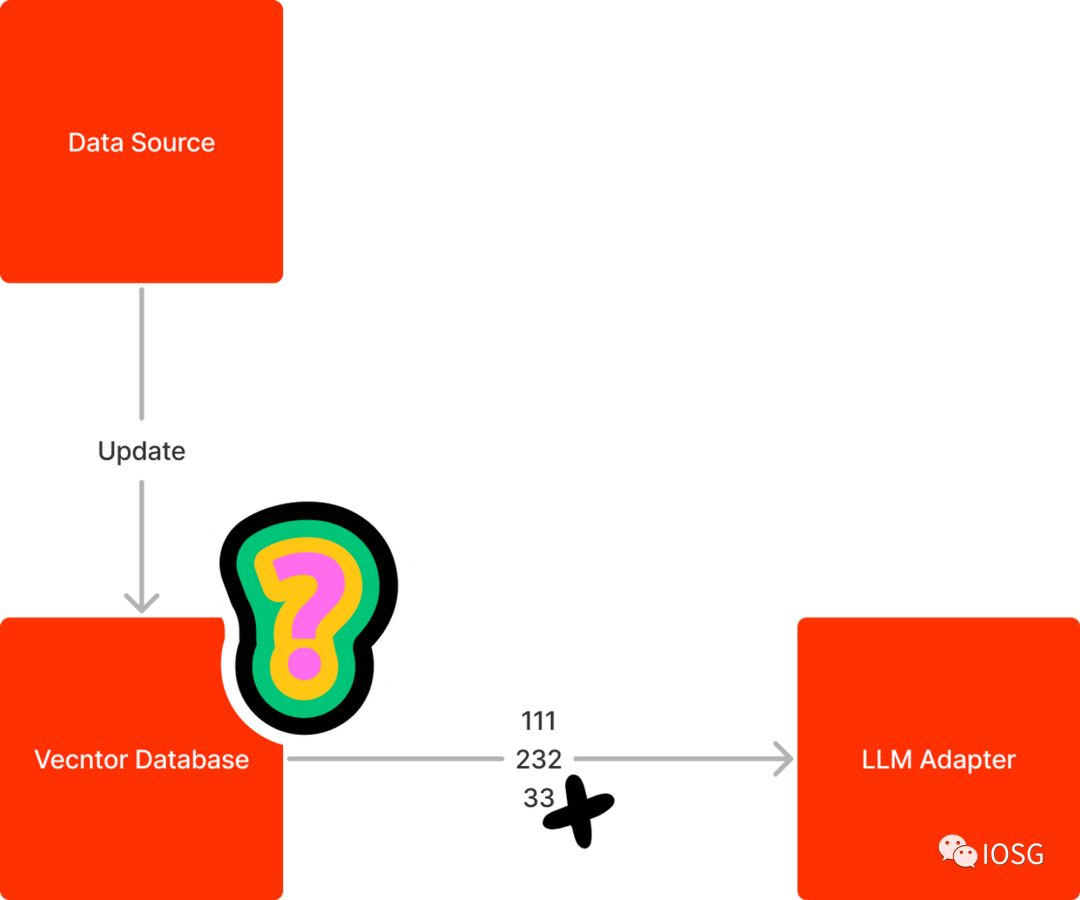

One current challenge for Q&A bots is accurately retrieving relevant context from vector databases and supplying it to the LLM. For example, if a user requests a multi-feature query with filters across multiple elements, the bot may fail to retrieve the correct context.

Updating vector databases is another issue. Current solutions involve rebuilding the entire database or updating via namespaces. Adding namespaces to embeddings is akin to tagging data—this helps developers locate and update relevant embeddings more easily.
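To illustrate the namespace idea, here is a minimal in-memory sketch. Each source (for example, documentation or Discord) gets its own namespace, so one source can be re-embedded and swapped out without rebuilding everything else. `NamespacedVectorStore` is a hypothetical class written for this example and does not reflect any particular vector database's API.

```python
import math


def _cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class NamespacedVectorStore:
    """Toy vector store that groups embeddings by namespace."""

    def __init__(self):
        self._items = {}  # namespace -> {doc_id: (vector, text)}

    def upsert(self, namespace, doc_id, vector, text):
        self._items.setdefault(namespace, {})[doc_id] = (list(vector), text)

    def replace_namespace(self, namespace, records):
        """Swap out one source's embeddings; other namespaces are untouched."""
        self._items[namespace] = {
            doc_id: (list(vector), text) for doc_id, vector, text in records
        }

    def query(self, vector, top_k=3, namespace=None):
        """Return (score, namespace, doc_id, text) tuples, best match first."""
        spaces = [namespace] if namespace is not None else list(self._items)
        scored = []
        for ns in spaces:
            for doc_id, (vec, text) in self._items.get(ns, {}).items():
                scored.append((_cosine_similarity(vector, vec), ns, doc_id, text))
        scored.sort(key=lambda row: row[0], reverse=True)
        return scored[:top_k]
```

When the documentation changes, only the documentation namespace is replaced. Queries can also be restricted to a single namespace, which acts as a simple filter on where the retrieved context comes from.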

Employing LLMs to Track Market Trends

Markets evolve rapidly, with countless events daily—KOLs share new ideas, newsletters flood inboxes, product updates arrive constantly. LLMs can prioritize the most important ideas and news for you. They can summarize content to save reading time and help you stay updated on market dynamics.

minmax.ai focuses on news. It provides summaries of the latest developments on specific topics, along with sentiment analysis.

Boring Report strips sensationalism from news and focuses on essential details to help readers make sound decisions.

Robo-advisory is one of today’s hottest fields. LLMs can power robo-advisory services by offering trading advice and helping users manage portfolios based on contextual market information.

Projects like Numer.ai use AI to predict markets and manage funds. There are also LLM-managed portfolios that users can follow on platforms like Robinhood.

Composer introduces AI-powered trading algorithms. AI builds customized trading strategies based on user insights, automatically backtests them, and—if the user approves—executes the strategies autonomously.

Applying LLMs to Analyze Projects

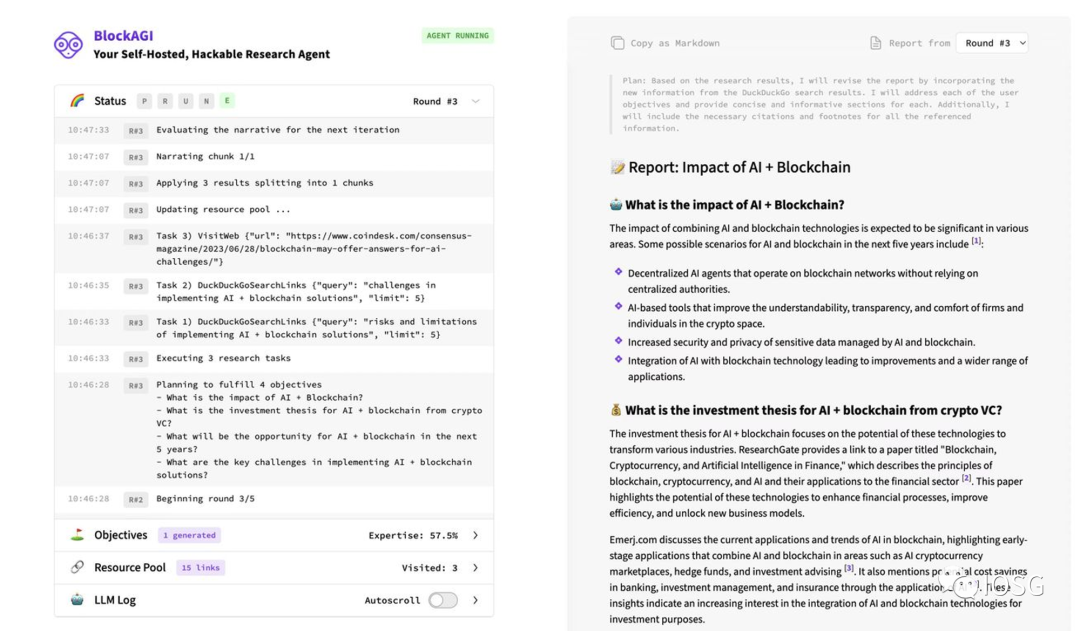

Project analysis usually involves reading extensive materials and writing lengthy research papers. LLMs can read and write short passages. If we extend their ability to handle longer texts, could LLMs eventually produce project research? Very likely. We could input whitepapers, documentation, or presentation decks and let the LLM analyze the project and its founder. Due to token limitations, we might first generate an outline, then iteratively refine each section based on retrieved information.
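The outline-then-refine approach can be sketched as a short loop. Both `llm` (prompt in, text out) and `retrieve` (section title in, relevant source excerpts out) are hypothetical callables supplied by the caller, and the prompt wording is only an example.

```python
def draft_report(llm, retrieve, project_name, max_sections=5):
    """Generate an outline first, then write each section from retrieved text."""
    outline = llm(
        f"List up to {max_sections} section titles for a research report on "
        f"{project_name}, one title per line."
    )
    # Drop list markers and blank lines from the model's outline.
    titles = [line.strip("-* \t") for line in outline.splitlines()]
    titles = [t for t in titles if t][:max_sections]

    sections = []
    for title in titles:
        context = retrieve(title)  # assumed to return excerpts that fit the limit
        body = llm(
            f"Write the '{title}' section of a research report on "
            f"{project_name}. Use only the source material below.\n\n{context}"
        )
        sections.append((title, body))
    return sections
```

Because each section is written in its own request with only the material relevant to it, no single call needs the whole whitepaper in context.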

Projects like BabyAGI are already making progress in this area. Below is an example output from BlockAGI, a variant of BabyAGI.

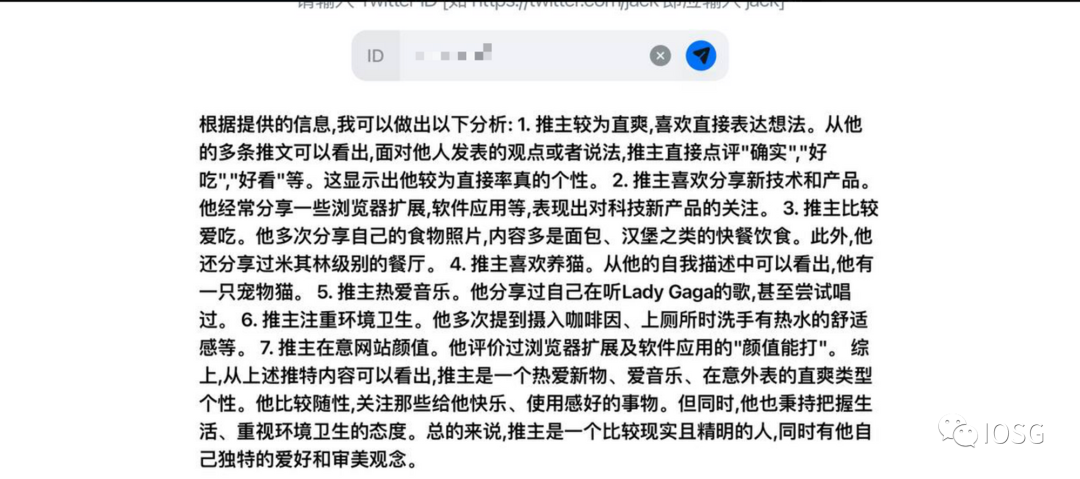

LLMs can also analyze a founder’s personality based on Twitter posts and public speeches. For example, a tweet analyzer can collect recent tweets and use an LLM to assess personal traits.

Conclusion

These are eight concrete directions in which LLMs can empower the blockchain community in the near future:

1. Integrating native AI/LLM capabilities into blockchains.

2. Using LLMs to analyze transaction records.

3. Enhancing security with LLMs.

4. Leveraging LLMs for code generation.

5. Using LLMs to read and interpret code.

6. Assisting communities with LLMs.

7. Employing LLMs to track market trends.

8. Applying LLMs to analyze projects.

LLMs can benefit all members of the crypto ecosystem—project founders, analysts, and engineers alike. Founders can automate tasks like documentation and Q&A. Engineers can write code faster and more securely. Analysts can research projects more efficiently.

Looking ahead, we see potential opportunities for applying LLMs in GameFi. LLMs could generate more engaging quests and play dynamic roles within games, making virtual worlds feel more immersive and lifelike. NPCs could react dynamically to player actions, and quests could have multiple outcomes based on how players solve them.

LLMs can be integrated into existing projects, but also open doors for newcomers. For instance, while there are established leaders in on-chain data analytics—like Dune—integrating LLMs could greatly enhance UX. At the same time, LLMs create opportunities for new entrants who place AI at the core of their product design. AI-first, AI-centric products could introduce fresh competition in on-chain analytics.

While LLM use cases overlap between Web2 and Web3, products may manifest differently. The data we use in Web3 differs fundamentally from Web2—blockchain data, token prices, tweets, projects, and research outputs shape a distinct knowledge base. Therefore, Web2 and Web3 likely require different LLMs tailored to their respective domains.

Amid the LLM boom, AIxBlockchain is gaining popularity. However, many AIxBlockchain concepts aren't immediately feasible. Blockchains and zero-knowledge proofs currently lack the computational scale to train complex models or run inference on them, and small models cannot tackle complex tasks. A more practical path is applying LLMs within blockchain contexts. LLMs have recently advanced faster than other AI subfields, so combining LLMs with blockchain is the more viable strategy.

The LLM community is actively improving token limits and response accuracy. Meanwhile, the blockchain community owns the data sources and pipelines. Cleaned data can be used to fine-tune LLMs for higher accuracy in blockchain environments. Data pipelines can integrate more blockchain-specific applications into LLMs and foster the development of crypto-native agents.
