AI + Web3: Tower and Square

TechFlow Selected

As towers grow taller and the decision-makers behind the scenes become more concentrated, AI centralization brings many risks—how can the people gathered in the square avoid the shadow beneath the tower?

Author: Coinspire

TL;DR:

- Web3 projects related to AI concepts have become major fundraising targets in both primary and secondary markets.

- The opportunities for Web3 in the AI industry lie in using distributed incentives to coordinate potential supply across long-tail segments (data, storage, and computing) while building decentralized marketplaces for open-source models and AI Agents.

- The main applications of AI in the Web3 sector are on-chain finance (crypto payments, trading, data analytics) and development assistance.

- The synergy between AI and Web3 lies in their complementarity: Web3 has the potential to counteract AI centralization, while AI can help Web3 break into the mainstream.

Introduction

In recent years, AI development has accelerated dramatically. The ripple effect initiated by ChatGPT has not only opened a new world of generative artificial intelligence but also triggered a wave in the distant realm of Web3.

With the boost from AI-related concepts, fundraising in the otherwise slowing crypto market has shown clear signs of recovery. According to media reports, as many as 64 Web3+AI projects secured funding in the first half of 2024 alone. Zyber365, an AI-powered operating system, raised $100 million in its Series A round, the largest of these rounds.

The secondary market is even more vibrant. Data from cryptocurrency aggregator CoinGecko shows that in just over a year, the total market capitalization of the AI sector has reached $48.5 billion, with 24-hour trading volume approaching $8.6 billion. Major advances in AI technology have given these tokens a visible lift: after OpenAI released its text-to-video model Sora, the average price of AI-related tokens surged by 151%. The AI effect has also spread to memecoins, one of crypto's biggest magnets for capital: GOAT, the first memecoin built on an AI Agent concept, quickly gained popularity and reached a valuation of $1.4 billion, sparking widespread enthusiasm for AI memes.

Research and discussion around AI+Web3 are equally heated, moving from AI+DePIN to AI memecoins and now to the current focus on AI Agents and AI DAOs. FOMO can barely keep up with how fast these narratives evolve.

The term "AI+Web3," brimming with hot money, trends, and futuristic fantasies, is often seen as a marriage arranged by capital. It’s hard to tell whether beneath this grand facade lies a playground for speculators or the dawn of a revolutionary breakthrough.

To answer this question, a crucial consideration for both sides is: does each side become better with the other? Can they benefit from each other’s models? In this article, we aim to build upon previous insights to examine this landscape: how can Web3 play a role across various layers of the AI tech stack, and what new vitality can AI bring to Web3?

Opportunities for Web3 in the AI Stack

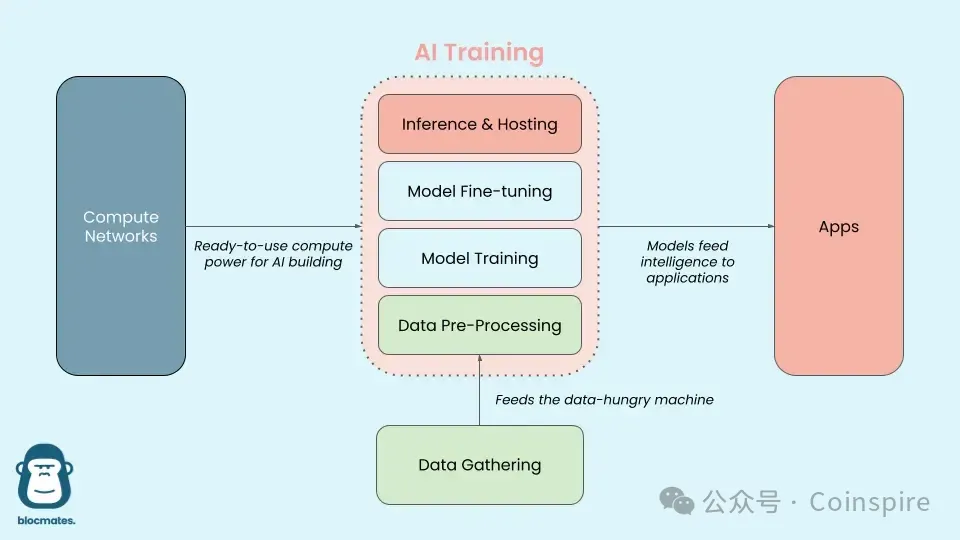

Before diving into this topic, it’s essential to understand the technical stack of large AI models:

Image source: Delphi Digital

In simpler terms: "Large models" resemble the human brain. At an early stage, this brain is like a newborn infant that needs to observe and absorb vast amounts of external information to understand the world; this is the "data collection" phase. Since computers lack human senses such as vision and hearing, large-scale unlabeled external information must be "pre-processed" into formats that computers can understand and use before training.

Once data is fed in, the AI "trains" to build a model capable of understanding and prediction, much as an infant gradually learns from the outside world. Adjusting the model's parameters is akin to a child constantly refining its language skills while learning. When learning becomes specialized, or when feedback from interactions leads to corrections, the model enters the "fine-tuning" phase.

As children grow and learn to speak, they begin to understand meaning and express feelings and thoughts in new conversations. This phase parallels the "inference" stage of AI large models, where the model predicts and analyzes new linguistic or textual inputs. Just as infants use language to express emotions, describe objects, and solve problems, large AI models apply their trained capabilities during inference to specific tasks such as image classification and speech recognition.

AI Agents represent the next evolution of large models—systems capable of independently executing tasks and pursuing complex goals, possessing not only reasoning ability but also memory, planning, and the capacity to use tools to interact with the world.

Web3 has already begun forming a multi-layered, interconnected ecosystem that targets the pain points at each layer of the AI stack and covers every stage of the AI model pipeline.

I. Infrastructure Layer: Airbnb for Computing Power and Data

Computing Power

One of the biggest costs in AI today is the computing power and energy required for model training and inference.

For example, Meta’s Llama 3 required 16,000 NVIDIA H100 GPUs (a top-tier graphics processing unit designed specifically for AI and high-performance computing workloads) running continuously for 30 days to complete training. The 80GB version of this GPU costs between $30,000 and $40,000, requiring a hardware investment of $400–700 million (including GPUs and networking chips). Additionally, monthly energy consumption reaches 1.6 billion kilowatt-hours, translating to nearly $20 million in monthly energy expenses.
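The hardware figure can be sanity-checked with simple multiplication. This sketch uses only the GPU count and unit prices quoted above and deliberately ignores networking chips, power, and facilities; the variable and function names are illustrative:

```python
# Figures quoted in the text for training Llama 3
NUM_GPUS = 16_000
PRICE_LOW_USD = 30_000   # 80GB H100, low end of the quoted range
PRICE_HIGH_USD = 40_000  # 80GB H100, high end of the quoted range


def gpu_capex(num_gpus: int, unit_price_usd: int) -> int:
    """Cost of the GPUs alone, excluding networking, power, and facilities."""
    return num_gpus * unit_price_usd


low = gpu_capex(NUM_GPUS, PRICE_LOW_USD)
high = gpu_capex(NUM_GPUS, PRICE_HIGH_USD)
print(f"GPUs alone: ${low / 1e6:,.0f}M to ${high / 1e6:,.0f}M")  # GPUs alone: $480M to $640M
```

The GPUs alone come to roughly $480–640 million, which sits inside the quoted $400–700 million range.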

Relieving AI’s computing pressure is also where Web3 first intersected with AI, through DePIN (Decentralized Physical Infrastructure Networks). The DePIN Ninja data site currently lists over 1,400 projects, including GPU computing-sharing leaders such as io.net, Aethir, Akash, and Render Network.

The core logic is simple: platforms allow individuals or entities with idle GPU resources to contribute computing power via permissionless, decentralized networks—similar to online marketplaces like Uber or Airbnb—increasing utilization of underused GPU resources and providing end users with more affordable, efficient computing power. Meanwhile, staking mechanisms ensure penalties for resource providers who violate quality controls or disrupt the network.
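A minimal sketch of the stake-and-slash logic described above. It assumes an in-memory registry, a hypothetical minimum stake of 100, and a hypothetical 50% slash per failed quality check; the class and method names are illustrative and do not come from io.net, Aethir, Akash, or Render Network:

```python
from __future__ import annotations

from dataclasses import dataclass, field

MIN_STAKE = 100.0   # hypothetical minimum stake required to list hardware
SLASH_RATIO = 0.5   # hypothetical fraction of stake slashed per violation


@dataclass
class Provider:
    name: str
    gpus: int
    stake: float
    earned: float = 0.0


@dataclass
class ComputeMarket:
    providers: dict[str, Provider] = field(default_factory=dict)

    def register(self, name: str, gpus: int, stake: float) -> None:
        # Anyone can join (permissionless), but only by posting collateral.
        if stake < MIN_STAKE:
            raise ValueError("stake below minimum")
        self.providers[name] = Provider(name, gpus, stake)

    def assign(self, gpus_needed: int) -> Provider | None:
        # Match the job to the eligible provider with the largest stake.
        eligible = [p for p in self.providers.values()
                    if p.gpus >= gpus_needed and p.stake >= MIN_STAKE]
        return max(eligible, key=lambda p: p.stake, default=None)

    def settle(self, name: str, payment: float, passed_qc: bool) -> None:
        provider = self.providers[name]
        if passed_qc:
            provider.earned += payment          # idle hardware earns income
        else:
            provider.stake *= 1 - SLASH_RATIO   # penalty for failing quality control


market = ComputeMarket()
market.register("mining-farm", gpus=8, stake=500.0)
market.register("home-rig", gpus=1, stake=120.0)
job = market.assign(gpus_needed=4)                        # only mining-farm has 4+ GPUs
market.settle("mining-farm", payment=10.0, passed_qc=True)
market.settle("home-rig", payment=0.0, passed_qc=False)   # stake drops to 60.0
```

Matching on the largest stake is just one simple rule chosen for brevity; a real network would likely also weigh price, latency, and reputation.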

Key features include:

- Aggregating idle GPU resources: suppliers are mainly third-party independent small and medium data centers, crypto mining farms, and surplus mining hardware, such as Filecoin mining rigs and the GPUs Ethereum miners were left with after the network switched to PoS. Some projects now aim to lower entry barriers further; exolab, for instance, uses local devices such as MacBooks, iPhones, and iPads to build a compute network for large-model inference.

- Serving the long-tail AI computing market: (a) On the technical side, decentralized computing markets are better suited to inference. Training relies heavily on ultra-large GPU clusters for data processing, whereas inference demands relatively lower GPU performance; Aethir, for example, focuses on low-latency rendering and AI inference. (b) On the demand side, smaller computing users will not train their own large models but will instead optimize or fine-tune existing top-tier models, scenarios naturally suited to distributed idle computing resources.

- Decentralized ownership: blockchain ensures resource owners retain control, allowing them to adjust flexibly to their needs while earning income.

Data

Data is the foundation of AI. Without data, computation is like rootless duckweed: it has nothing to anchor it and is of no use. The relationship between data and models follows the principle of “Garbage in, garbage out”: the quantity and quality of input data directly determine the quality of the final model’s output. For current AI models, data shapes language proficiency, comprehension, values, and even human-like behaviors. Today, AI’s data challenges center on four key areas:

- Data hunger: AI model training depends on massive data inputs. Publicly reported estimates put GPT-4’s parameter count in the trillions.

- Data quality: as AI integrates with various industries, new demands arise for timeliness, diversity, domain-specific expertise, and ingestion of emerging data sources such as social media sentiment.

- Privacy and compliance issues: countries and corporations are increasingly aware of the value of high-quality datasets and are restricting web scraping.

- High data processing costs: large volumes and complex processes make data handling expensive. Public data indicates that over 30% of AI companies’ R&D budgets go toward basic data collection and processing.

Currently, Web3 offers solutions in four main ways:

1. Data Collection: The supply of real-world data freely available through web scraping is rapidly running out, and AI companies’ spending on data acquisition rises every year. Yet this spending rarely benefits the actual data contributors, because platforms capture the value created. Reddit, for example, earned $203 million through data licensing agreements with AI companies.

Enabling genuine contributors to share in the value created by data, and acquiring more personal, higher-value user data at low cost via distributed networks and incentive mechanisms, is Web3’s vision.

- Grass is a decentralized data layer and network where users run Grass nodes to contribute idle bandwidth and relay traffic to capture real-time internet data, earning token rewards.

- Vana introduces a Data Liquidity Pool (DLP) concept, allowing users to upload private data (e.g., shopping history, browsing habits, social media activity) into specific DLPs and choose whether to authorize third parties to access it.

- In PublicAI, users contribute data by adding the #AI or #Web3 tag to posts on X and mentioning @PublicAI.
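The opt-in idea behind a pool like Vana's DLP, combined with the goal of paying contributors rather than platforms, can be sketched as follows. The `DataPool` class, the per-record fee split, and the sample payloads are hypothetical; this is not Vana's contract logic or reward formula:

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Record:
    owner: str
    payload: dict
    allow_third_party: bool  # set by the contributor, not by the platform


@dataclass
class DataPool:
    records: list[Record] = field(default_factory=list)

    def contribute(self, owner: str, payload: dict, allow_third_party: bool) -> None:
        self.records.append(Record(owner, payload, allow_third_party))

    def query(self, fee: float) -> tuple[list[dict], dict[str, float]]:
        """A buyer pays `fee`. Only records whose owners opted in are released,
        and the fee is split among those owners per record supplied."""
        shared = [r for r in self.records if r.allow_third_party]
        payouts: dict[str, float] = {}
        for r in shared:
            payouts[r.owner] = payouts.get(r.owner, 0.0) + fee / len(shared)
        return [r.payload for r in shared], payouts


pool = DataPool()
pool.contribute("alice", {"purchases": 12}, allow_third_party=True)
pool.contribute("alice", {"sites_visited": 40}, allow_third_party=True)
pool.contribute("bob", {"posts": 7}, allow_third_party=False)
pool.contribute("carol", {"purchases": 3}, allow_third_party=True)
data, payouts = pool.query(fee=90.0)
print(payouts)  # {'alice': 60.0, 'carol': 30.0}; bob's data is never released
```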

2. Data Preprocessing: Collected data is typically noisy and error-prone, so before training it must be cleaned and converted into usable formats through repetitive tasks such as standardization, filtering, and handling missing values. This remains one of the few manual stages in the AI industry and has given rise to the profession of data labeler. As models demand higher-quality data, labeling standards rise as well, making this work a natural fit for Web3’s decentralized incentive models.
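The routine cleaning steps named above (filtering, handling missing values, standardization) can be sketched in standard-library Python. The record layout, the `text` and `score` fields, and the choice to fill gaps with the mean are illustrative assumptions, not a prescribed pipeline:

```python
from __future__ import annotations

from statistics import mean, pstdev


def preprocess(rows: list[dict]) -> list[dict]:
    # Filtering: drop rows with no usable text (copy rows so the input is untouched).
    rows = [dict(r) for r in rows if r.get("text") and r["text"].strip()]
    if not rows:
        return []
    # Missing values: fill absent scores with the mean of the observed ones.
    observed = [r["score"] for r in rows if r.get("score") is not None]
    fill = mean(observed) if observed else 0.0
    for r in rows:
        if r.get("score") is None:
            r["score"] = fill
    # Standardization: z-score the numeric field and normalize text case and spacing.
    scores = [r["score"] for r in rows]
    mu, sigma = mean(scores), pstdev(scores) or 1.0
    return [{"text": " ".join(r["text"].lower().split()),
             "score": (r["score"] - mu) / sigma} for r in rows]


raw = [
    {"text": "  Great   GPU ", "score": 4.0},
    {"text": "", "score": 1.0},             # dropped: no text
    {"text": "slow node", "score": None},   # filled with the mean (3.0)
    {"text": "OK", "score": 2.0},
]
clean = preprocess(raw)
print(clean)
```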

- Grass and OpenLayer are currently exploring adding data labeling.

- Synesis proposes a "Train2earn" model that emphasizes data quality: users earn rewards for submitting labeled data, annotations, or other inputs.

- Sapien gamifies data labeling tasks and lets users stake points to earn more.
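One common way to reward labeling quality without a central reviewer is redundancy: several users label the same item, the majority label wins, agreeing labelers are rewarded, and dissenters lose part of their staked points. This is an assumed mechanism for illustration, not the documented design of Synesis or Sapien, and the point values are hypothetical:

```python
from __future__ import annotations

from collections import Counter

REWARD = 10  # hypothetical points for a label that matches consensus
SLASH = 5    # hypothetical points removed from stake for a dissenting label


def settle_item(labels: dict[str, str], stakes: dict[str, int]) -> str | None:
    """labels maps labeler -> label for one data item; stakes is updated in place.
    Returns the consensus label, or None when there is no strict majority."""
    if not labels:
        return None
    top_label, top_count = Counter(labels.values()).most_common(1)[0]
    if top_count * 2 <= len(labels):
        return None  # no strict majority: escalate for review, no payouts
    for labeler, label in labels.items():
        if label == top_label:
            stakes[labeler] += REWARD
        else:
            stakes[labeler] = max(0, stakes[labeler] - SLASH)
    return top_label


stakes = {"u1": 50, "u2": 50, "u3": 50}
consensus = settle_item({"u1": "spam", "u2": "spam", "u3": "ham"}, stakes)
print(consensus, stakes)  # spam {'u1': 60, 'u2': 60, 'u3': 45}
```

Majority voting assumes labelers act independently; colluding labelers could still push a wrong label through, which is one reason to pair it with stakes.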

3. Data Privacy and Security: It’s important to distinguish between privacy and security. Data privacy concerns how sensitive data is handled, while data security protects data against unauthorized access, destruction, or theft. The potential applications of Web3’s privacy technologies fall into two categories: (1) training on sensitive data; (2) data collaboration, in which multiple data owners jointly participate in AI training without sharing raw data.

Common Web3 privacy technologies today include:

- Trusted Execution Environments (TEE), e.g., Super Protocol;

- Fully Homomorphic Encryption (FHE), e.g., BasedAI, Fhenix.io, or Inco Network;

- Zero-Knowledge Technologies (zk), such as Reclaim Protocol, which uses zkTLS to generate zero-knowledge proofs of HTTPS traffic, enabling users to securely import activity, reputation, and identity data from external sites without exposing sensitive information.
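FHE supports arbitrary computation on encrypted data and is too involved to show in a few lines. To illustrate the underlying principle of data collaboration without sharing raw data, the sketch below swaps in the simpler Paillier scheme, which is only additively homomorphic: two data owners encrypt their values, an aggregator combines the ciphertexts without seeing either input, and only the key holder can decrypt the sum. The tiny primes make it insecure; it is a teaching sketch, not the scheme used by BasedAI, Fhenix, or Inco:

```python
import math
import secrets

# Toy key pair. Real deployments use primes of 1024 bits or more.
P, Q = 293, 433
N = P * Q
N2 = N * N
LAM = (P - 1) * (Q - 1) // math.gcd(P - 1, Q - 1)  # lcm(P-1, Q-1)
MU = pow(LAM, -1, N)  # modular inverse; valid because the generator is N + 1


def encrypt(m: int) -> int:
    """Paillier encryption of 0 <= m < N with generator g = N + 1."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if math.gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N2) * pow(r, N, N2)) % N2


def decrypt(c: int) -> int:
    x = pow(c, LAM, N2)
    return ((x - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    # Multiplying ciphertexts adds the hidden plaintexts (mod N).
    return (c1 * c2) % N2


# Two data owners encrypt their values; an aggregator combines them blindly.
enc_a = encrypt(120)
enc_b = encrypt(85)
total = decrypt(add_encrypted(enc_a, enc_b))
print(total)  # 205
```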

However, this field remains in its early stages, with most projects still experimental. A key challenge is extremely high computational costs:

- The zkML framework EZKL takes about 80 minutes to generate a proof for a nanoGPT model with 1M parameters.

- According to Modulus Labs, zkML adds more than 1,000 times overhead compared with pure computation.

4. Data Storage: Once data is collected, it needs secure on-chain storage, as do the LLMs built from it. Data availability (DA) is the core issue: before the Danksharding upgrade, Ethereum’s throughput was only 0.08 MB per second, whereas AI model training and real-time inference often require 50–100 GB per second of data throughput. A gap of this magnitude leaves existing on-chain solutions overwhelmed when handling resource-intensive AI applications.

- 0g.AI is a leading project in this space: a decentralized storage solution optimized for AI’s high-performance needs. Its key features are high performance and scalability; it uses advanced sharding and erasure coding to support rapid upload and download of large datasets, achieving data transfer speeds close to 5 GB per second.
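Erasure coding is what lets a storage network lose some shards without losing data. 0g.AI's actual scheme is not covered here; as a stand-in, the sketch below uses the simplest erasure code, a single XOR parity shard (as in RAID 5), which can rebuild any one missing shard. Production systems generally use Reed-Solomon-style codes that tolerate multiple losses:

```python
from __future__ import annotations

from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list[bytes]:
    """Split data into k equal, zero-padded data shards plus one XOR parity shard."""
    size = max(1, -(-len(data) // k))  # ceiling division, at least one byte
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: list[bytes | None]) -> list[bytes]:
    """Rebuild at most one missing shard (None) by XOR-ing all the others."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    repaired = list(shards)
    if missing:
        repaired[missing[0]] = reduce(xor_bytes, [s for s in shards if s is not None])
    return repaired


def decode(shards: list[bytes], original_len: int) -> bytes:
    # Drop the parity shard and the zero padding.
    return b"".join(shards[:-1])[:original_len]


blob = b"model-weights-chunk"
stored = encode(blob, k=4)   # 4 data shards + 1 parity shard, e.g. on 5 nodes
stored[2] = None             # one storage node goes offline
restored = recover(stored)
print(decode(restored, len(blob)))  # b'model-weights-chunk'
```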

II. Middleware: Model Training and Inference

Decentralized Marketplace for Open-Source Models

The debate over whether AI models should be open or closed source persists. While open-source fosters collective innovation unmatched by closed models, how to motivate developers without a revenue model is a critical question. Baidu founder Robin Li recently claimed, “Open-source models will fall increasingly behind.”

Web3 proposes a possible solution: a decentralized marketplace for open-source models, in which models are tokenized, a portion of the tokens is reserved for the team, and the model’s future revenue flows to token holders.
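That token-and-revenue model can be sketched in a few lines. The fixed supply, the 20% team reservation, the holder names, and the pro-rata distribution rule are all hypothetical parameters chosen for illustration, not the terms of any project mentioned in this section:

```python
from __future__ import annotations

TOTAL_SUPPLY = 1_000_000
TEAM_BPS = 2_000  # hypothetical: 20% of supply (in basis points) reserved for the team


def mint(public_buyers: dict[str, int]) -> dict[str, int]:
    """Reserve the team allocation first; the public sale must fit in the rest."""
    team = TOTAL_SUPPLY * TEAM_BPS // 10_000
    if sum(public_buyers.values()) > TOTAL_SUPPLY - team:
        raise ValueError("public sale exceeds available supply")
    return {"team": team, **public_buyers}


def distribute(revenue: float, balances: dict[str, int]) -> dict[str, float]:
    """Split one period of model revenue (e.g. inference fees) pro rata to holdings."""
    outstanding = sum(balances.values())
    return {holder: revenue * bal / outstanding for holder, bal in balances.items()}


balances = mint({"alice": 500_000, "bob": 300_000})
payout = distribute(10_000.0, balances)
print(payout)  # {'team': 2000.0, 'alice': 5000.0, 'bob': 3000.0}
```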

- Bittensor creates a peer-to-peer marketplace for open-source models, composed of dozens of “subnets” in which resource providers (computing, data collection/storage, ML talent) compete to meet subnet owners’ objectives. Subnets interact and learn from one another, achieving greater collective intelligence. Rewards are allocated via community voting and further distributed based on competitive performance.

- ORA introduces Initial Model Offerings (IMO), tokenizing AI models so they can be bought, sold, and developed via decentralized networks.

- Sentient, a decentralized AGI platform, incentivizes contributors to collaborate on building, replicating, and expanding AI models, and rewards them accordingly.

- Spectral Nova focuses on the creation and application of AI and ML models.

Verifiable Inference

To address the "black box" problem in AI inference, the standard Web3 approach has multiple validators repeat the same computation and compare results. Given the current shortage of high-end NVIDIA chips, however, this approach runs into an obvious problem: every validator must pay the already high cost of AI inference.

A more promising solution is performing ZK proofs ("zero-knowledge proofs," cryptographic protocols where a prover convinces a verifier that a statement is true without revealing any additional information) for off-chain AI inference computations, enabling permissionless verification of AI model calculations on-chain. This cryptographically proves that off-chain computations were correctly executed (e.g., datasets unaltered), while keeping all data confidential.
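To make the prover/verifier definition concrete, here is a toy Schnorr proof, made non-interactive with the Fiat-Shamir heuristic. The prover convinces anyone that it knows a secret `x` behind the public value `y = G^x mod P` without revealing `x`. This proves knowledge of a single discrete logarithm, which is far simpler than the circuit-based proofs that zkML systems generate for model inference, and the small group is chosen for readability, not security:

```python
from __future__ import annotations

import hashlib
import secrets

# Toy group: P = 2*Q + 1 with Q prime, and G = 4 generates the subgroup of order Q.
# Real systems use groups of roughly 256-bit order; these values are for readability.
P, Q, G = 1019, 509, 4


def challenge(*values: int) -> int:
    # Fiat-Shamir: the verifier's random challenge is replaced by a hash of the transcript.
    digest = hashlib.sha256(",".join(map(str, values)).encode()).digest()
    return int.from_bytes(digest, "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    y = pow(G, x, P)                   # public statement
    r = secrets.randbelow(Q - 1) + 1   # fresh secret nonce in [1, Q-1]
    t = pow(G, r, P)                   # commitment
    c = challenge(G, y, t)
    s = (r + c * x) % Q                # response: x stays masked by the random r
    return y, t, s


def verify(y: int, t: int, s: int) -> bool:
    c = challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


y, t, s = prove(123)      # 123 plays the role of private data
print(verify(y, t, s))    # True: the verifier learns only that the claim holds
```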

Main advantages include:

- Scalability: ZK proofs can quickly verify large volumes of off-chain computations; even as transaction counts grow, a single ZK proof can validate them all.

- Privacy Protection: Data and AI model details remain private while parties can verify integrity.

- Trustlessness: No reliance on centralized authorities to confirm computations.

- Web2 Integration: By definition, Web2 operates off-chain; verifiable inference helps bring its datasets and AI computations on-chain, boosting Web3 adoption.

Current Web3 verifiable inference technologies include:

- zkML: Combines zero-knowledge proofs with machine learning to protect data and model privacy, enabling verifiable computation without revealing underlying attributes. Using zkML, Modulus Labs released a ZK prover for AI that can check on-chain whether an AI provider correctly executed its algorithm, though its users are currently mostly on-chain DApps.

- opML: Applies optimistic rollup principles to improve the scalability and efficiency of ML computation, verifying results only when they are challenged. Only a small portion of results ever needs validation, and economic slashing penalties are set high enough to deter cheating, which saves redundant computation.

- TeeML: Uses Trusted Execution Environments to execute ML computations securely, protecting data and models from tampering and unauthorized access.
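The optimistic pattern behind opML can be sketched as a claim-and-challenge game. Everything here is a simplifying assumption: `run_model` stands in for a deterministic model, the challenge window is implicit, and a dispute is settled by one full re-execution instead of the bisection-style dispute games used by optimistic rollups:

```python
from __future__ import annotations

from dataclasses import dataclass


def run_model(x: int) -> int:
    # Hypothetical stand-in for a deterministic ML inference.
    return 3 * x + 1


@dataclass
class Claim:
    submitter: str
    x: int
    y: int          # claimed output, accepted optimistically
    bond: float     # collateral at risk if the claim is wrong
    settled: bool = False


def finalize(claim: Claim, balances: dict[str, float]) -> None:
    """No challenge arrived before the window closed: accept and return the bond."""
    if claim.settled:
        raise ValueError("claim already settled")
    claim.settled = True
    balances[claim.submitter] = balances.get(claim.submitter, 0.0) + claim.bond


def challenge(claim: Claim, balances: dict[str, float], challenger: str) -> bool:
    """Re-execute only the disputed claim. A wrong claim forfeits its bond to the
    challenger; returns True if fraud was proven."""
    if claim.settled:
        raise ValueError("claim already settled")
    claim.settled = True
    if run_model(claim.x) != claim.y:
        balances[challenger] = balances.get(challenger, 0.0) + claim.bond
        return True
    balances[claim.submitter] = balances.get(claim.submitter, 0.0) + claim.bond
    return False


balances: dict[str, float] = {}
honest = Claim("node-a", x=2, y=7, bond=100.0)
cheat = Claim("node-b", x=2, y=8, bond=100.0)
finalize(honest, balances)                      # never recomputed
fraud = challenge(cheat, balances, "watcher")   # recomputed once, fraud found
print(fraud, balances)  # True {'node-a': 100.0, 'watcher': 100.0}
```

Only the challenged claim is ever recomputed, which is where the savings over redundant execution come from.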

III. Application Layer: AI Agents

The focus of AI development is clearly shifting from model capability to AI Agents. Tech giants including OpenAI, AI unicorn Anthropic, and Microsoft are turning to AI Agent development to break through the current plateau in LLM technology.

OpenAI defines an AI Agent as a system driven by an LLM as its "brain," with autonomous perception, planning, memory, and tool-use abilities that allow it to execute complex tasks automatically. When AI evolves from a tool used by humans into an entity that uses tools itself, it becomes an AI Agent, which is precisely why AI Agents hold promise as humanity’s ideal intelligent assistant.

What can Web3 offer to Agents?

1. Decentralization

Web3’s decentralization enables more distributed and autonomous Agent systems. Using mechanisms like PoS and DPoS to establish incentive and penalty systems for stakers and delegates promotes democratization of Agent systems. Projects like GaiaNet, Theoriq, and HajimeAI are exploring this path.

2. Cold Start

Developing and iterating AI Agents often requires substantial funding. Web3 can help promising AI Agent projects secure early financing and achieve cold starts.

- Virtuals Protocol launched fun.virtuals, a platform for creating and issuing AI Agent tokens that lets any user deploy an AI Agent with one click and conduct a fully fair, 100% public token launch.

- Spectral proposes a product for issuing on-chain AI Agent assets through an IAO (Initial Agent Offering). AI Agents can raise funds directly from investors and become part of DAO governance, giving investors opportunities to participate in development and share future profits.

How Can AI Empower Web3?

The impact of AI on Web3 projects is evident—it benefits blockchain technology by optimizing on-chain operations (e.g., smart contract execution, liquidity optimization, AI-driven governance decisions), provides better data-driven insights, enhances on-chain security, and lays the groundwork for new Web3-based applications.

I. AI and On-Chain Finance

AI and Crypto Economics

On August 31, Coinbase CEO Brian Armstrong announced the first AI-to-AI crypto transaction on the Base network, stating that AI Agents can now use USDC on Base to transact with humans, merchants, or other AIs, and that these transactions are instant, global, and free.

Beyond payments, Virtuals Protocol’s Luna drew attention by demonstrating how AI Agents can autonomously perform on-chain transactions. As intelligent entities that perceive their environment, make decisions, and take actions, AI Agents are increasingly seen as the future of on-chain finance. Current potential applications include:

1. Information Gathering and Prediction: Help investors collect exchange announcements, project disclosures, signs of market panic, and public-opinion risks, analyzing fundamentals and market conditions in real time to predict trends and risks.

2. Asset Management: Recommend suitable investment options, optimize portfolios, and automatically execute trades.

3. Financial Experience: Help investors choose the fastest on-chain transaction methods, automate cross-chain swaps and gas fee adjustments, lowering barriers and costs for on-chain financial activities.

Imagine instructing your AI Agent: “I have 1,000 USDT. Find me the highest-yield portfolio with a lock-up of no more than one week.” The AI Agent might respond: “Recommended initial allocation: 50% in A, 20% in B, 20% in X, 10% in Y. I’ll monitor interest rates and risk levels and rebalance when necessary.” Discovering promising airdrop projects or trending memecoins could also soon be within reach for AI Agents.
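The agent's task in this example reduces to a constrained optimization: exclude anything locked longer than the user's limit, then favor the highest yields. The sketch below assumes entirely hypothetical products, yields, and lock-ups, plus a hypothetical cap of 40% of the budget per product as a crude risk limit; its output is not meant to reproduce the 50/20/20/10 split quoted above, which is itself illustrative:

```python
from __future__ import annotations

# Hypothetical products: name -> (annual yield, lock-up in days). Not market data.
PRODUCTS = {
    "A": (0.08, 7),
    "B": (0.12, 3),
    "X": (0.05, 0),
    "Y": (0.10, 7),
    "Z": (0.25, 30),
}


def allocate(budget: float, max_lock_days: int, cap: float) -> dict[str, float]:
    """Fill the highest-yield eligible products first, at most `cap` of budget each."""
    eligible = [(name, apy) for name, (apy, lock) in PRODUCTS.items()
                if lock <= max_lock_days]
    eligible.sort(key=lambda item: item[1], reverse=True)
    positions: dict[str, float] = {}
    remaining = budget
    for name, _ in eligible:
        amount = min(cap * budget, remaining)
        if amount <= 0:
            break
        positions[name] = amount
        remaining -= amount
    return positions


def blended_yield(positions: dict[str, float]) -> float:
    total = sum(positions.values())
    if total == 0:
        return 0.0
    return sum(amt * PRODUCTS[name][0] for name, amt in positions.items()) / total


positions = allocate(1_000.0, max_lock_days=7, cap=0.4)
print(positions)                         # {'B': 400.0, 'Y': 400.0, 'A': 200.0}
print(f"{blended_yield(positions):.2%}")
```

Because the objective is linear and each product is limited only by its cap, filling the highest-yield eligible products first is optimal for this simplified problem; rebalancing would amount to rerunning it as yields change.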

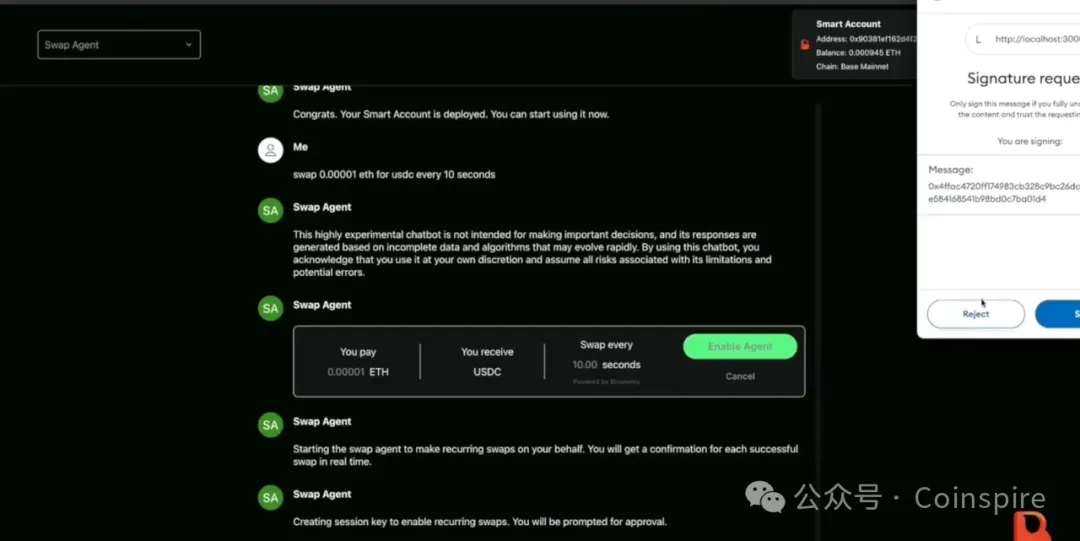

Image source: Biconomy

Today, AI Agent wallet Bitte and AI interaction protocol Wayfinder are experimenting in this space, aiming to integrate OpenAI’s model API so that users can command Agents through ChatGPT-like chat interfaces to perform various on-chain operations. For example, Wayfinder’s first prototype, released in April, demonstrated swap, send, bridge, and stake functions on the Base, Polygon, and Ethereum mainnets.

Decentralized Agent platform Morpheus also supports such Agent development. Biconomy demonstrated an operation where an AI Agent converts ETH to USDC without requiring full wallet authorization.
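The key idea in Biconomy's demonstration is that the agent acts under a narrow, pre-approved permission instead of holding full control of the wallet. A minimal sketch of that policy check, assuming a hypothetical `SessionPermission` (approved token pair, per-trade cap, expiry) and a hypothetical plain-text command format; it does not use Biconomy's SDK or any real wallet API:

```python
from __future__ import annotations

import re
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class SessionPermission:
    allowed_pairs: frozenset[tuple[str, str]]  # e.g. {("ETH", "USDC")}
    max_amount: float                          # per-trade cap, in the input token
    expires_at: float                          # Unix timestamp


COMMAND = re.compile(r"swap\s+(\d+(?:\.\d+)?)\s+(\w+)\s+(?:to|for)\s+(\w+)", re.IGNORECASE)


def parse(command: str) -> tuple[float, str, str]:
    m = COMMAND.search(command)
    if m is None:
        raise ValueError(f"unrecognized command: {command!r}")
    return float(m.group(1)), m.group(2).upper(), m.group(3).upper()


def authorize(command: str, perm: SessionPermission, now: float | None = None) -> bool:
    """Allow the agent to act only if the parsed swap fits inside the session scope."""
    amount, src, dst = parse(command)
    now = time.time() if now is None else now
    return (now < perm.expires_at
            and (src, dst) in perm.allowed_pairs
            and 0 < amount <= perm.max_amount)


perm = SessionPermission(frozenset({("ETH", "USDC")}), max_amount=1.0,
                         expires_at=time.time() + 3600)
print(authorize("Swap 0.5 ETH to USDC", perm))   # True: inside scope
print(authorize("swap 5 ETH to USDC", perm))     # False: exceeds the cap
print(authorize("swap 0.5 ETH for DAI", perm))   # False: pair not approved
```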

AI and On-Chain Transaction Security

In the Web3 world, on-chain transaction security is paramount. AI technology can enhance security and privacy protection in on-chain transactions, with potential use cases including:

Transaction Monitoring: Real-time monitoring of abnormal transaction activity, with alert infrastructure for users and platforms.

Risk Analysis: Help platforms analyze customer transaction behavior to assess risk levels.

For example, Web3 security platform SeQure uses AI to detect and prevent malicious attacks, fraud, and data breaches, providing real-time monitoring and alerts to ensure transaction safety and stability. Similar tools include AI-powered Sentinel.
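The monitoring idea can be illustrated with a basic statistical baseline. This is a deliberately simple, assumed approach (a per-address z-score with hypothetical thresholds and a placeholder address), not the method used by SeQure or Sentinel:

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean, pstdev

Z_THRESHOLD = 3.0  # hypothetical: alert beyond 3 standard deviations
MIN_HISTORY = 5    # hypothetical: transfers needed before an address is scored


class TransferMonitor:
    def __init__(self) -> None:
        self.history: defaultdict[str, list[float]] = defaultdict(list)

    def observe(self, address: str, amount: float) -> bool:
        """Record a transfer and return True if it is anomalous for this address."""
        past = self.history[address]
        alert = False
        if len(past) >= MIN_HISTORY:
            mu, sigma = mean(past), pstdev(past)
            # Score against prior history only, so the new transfer cannot mask itself.
            if sigma == 0:
                alert = amount != mu
            else:
                alert = abs(amount - mu) / sigma > Z_THRESHOLD
        past.append(amount)
        return alert


monitor = TransferMonitor()
normal = [monitor.observe("0xabc", a) for a in [100, 120, 90, 110, 105, 95]]
alert = monitor.observe("0xabc", 5_000)
print(any(normal), alert)  # False True
```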

II. AI and On-Chain Infrastructure

AI and On-Chain Data

AI plays a vital role in collecting and analyzing on-chain data, such as:

- Web3 Analytics: An AI-based analytics platform leveraging machine learning and data mining to collect, process, and analyze on-chain data.

- MinMax AI: Offers AI-powered on-chain data analysis tools to help users identify market opportunities and trends.

- Kaito: A Web3 search platform powered by LLMs.

- Followin: Integrates ChatGPT to gather and consolidate relevant information scattered across websites and social platforms.

- Another application is oracles: AI can aggregate prices from multiple sources to provide accurate pricing data. For instance, Upshot uses AI to run more than a hundred million NFT price evaluations every hour, delivering NFT valuations with a 3–10% error margin despite volatile markets.
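Aggregating several sources is the core of the oracle idea. Upshot's own valuation models are not covered here, so the sketch below shows only a common robust aggregation step: a median with outlier rejection by median absolute deviation (MAD). The source names, prices, and the cutoff `k = 3` are hypothetical:

```python
from __future__ import annotations

from statistics import median


def aggregate(quotes: dict[str, float], k: float = 3.0) -> float:
    """Robust price: drop quotes more than k median absolute deviations (MAD)
    from the median, then take the median of what remains."""
    if not quotes:
        raise ValueError("no quotes")
    prices = list(quotes.values())
    mid = median(prices)
    mad = median([abs(p - mid) for p in prices])
    if mad == 0:
        return mid
    return median([p for p in prices if abs(p - mid) <= k * mad])


quotes = {"source_a": 2.41, "source_b": 2.38, "source_c": 2.45,
          "source_d": 2.40, "source_e": 9.99}   # source_e is stale or manipulated
price = aggregate(quotes)
print(round(price, 3))  # 2.405
```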

AI and Development & Auditing

Recently, a Web2 AI code editor called Cursor attracted attention among developers. On this platform, users simply describe requirements in natural language, and Cursor automatically generates corresponding HTML, CSS, and JavaScript code—greatly simplifying software development. This same logic can significantly improve Web3 development efficiency.

Currently, deploying smart contracts and DApps on public chains typically requires specialized languages like Solidity, Rust, Move, etc. While new languages aim to expand the design space of decentralized blockchains for better DApp development, the ongoing shortage of Web3 developers makes developer education a persistent challenge.

Potential AI-assisted Web3 development scenarios include: automated code generation, smart contract verification and testing, DApp deployment and maintenance, intelligent code completion, AI chatbots answering development questions. AI assistance not only improves development speed and accuracy but also lowers programming barriers, enabling non-programmers to turn ideas into applications, injecting new life into decentralized technologies.

Most eye-catching are one-click token launch platforms like Clanker, an AI-driven "Token Bot" designed for rapid DIY token deployment. Simply tag Clanker on SocialFi protocol Farcaster clients like Warpcast or Supercast, describe your token idea, and it will launch your token on the Base chain.

Contract development platforms like Spectral also offer one-click smart contract generation and deployment, enabling even beginners to compile and deploy smart contracts.

In auditing, Web3 audit platform Fuzzland uses AI to help auditors detect code vulnerabilities, providing natural language explanations to support audit expertise. Fuzzland also leverages AI to deliver natural language interpretations of formal specifications and contract code, along with sample code, helping developers understand potential issues.

III. AI and Web3 New Narratives

The rise of generative AI opens entirely new possibilities for Web3 narratives.

NFTs: AI injects creativity into generative NFTs, producing unique, diverse artworks and characters. These generative NFTs can serve as characters, items, or scenery in games, virtual worlds, or the metaverse. Binance’s Bicasso allows users to upload images and input keywords for AI processing to generate NFTs. Similar projects include Solvo, Nicho, IgmnAI, and CharacterGPT.

GameFi: Leveraging AI capabilities in natural language generation, image generation, and intelligent NPCs, GameFi can boost efficiency and innovation in game content creation. Binaryx’s first blockchain game, AI Hero, lets players explore randomized storylines via AI. Similarly, virtual companion game Sleepless AI uses AIGC and LLMs, allowing players to unlock personalized gameplay through different interactions.

DAOs: AI is also envisioned for use in DAOs—tracking community engagement, recording contributions, rewarding top contributors, and proxy voting. For example, ai16z uses AI Agents to gather market information on and off-chain, analyze community consensus, and make investment decisions incorporating DAO member suggestions.

The Significance of AI+Web3: Towers and Squares

In the heart of Florence, Italy, lies the city’s most important political venue and a gathering place for citizens and tourists: the central square. Above it rises the 95-meter tower of the town hall. The vertical tower and the horizontal square create a striking visual contrast and a dramatic aesthetic effect. Inspired by this, historian Niall Ferguson, in his book The Square and the Tower, used the image to describe the historical interplay between networks and hierarchies, each rising and falling over time.

This powerful metaphor applies perfectly to the current relationship between AI and Web3. Over the long arc of nonlinear history, squares generate more novelty and creativity than towers, yet towers retain legitimacy and vitality.

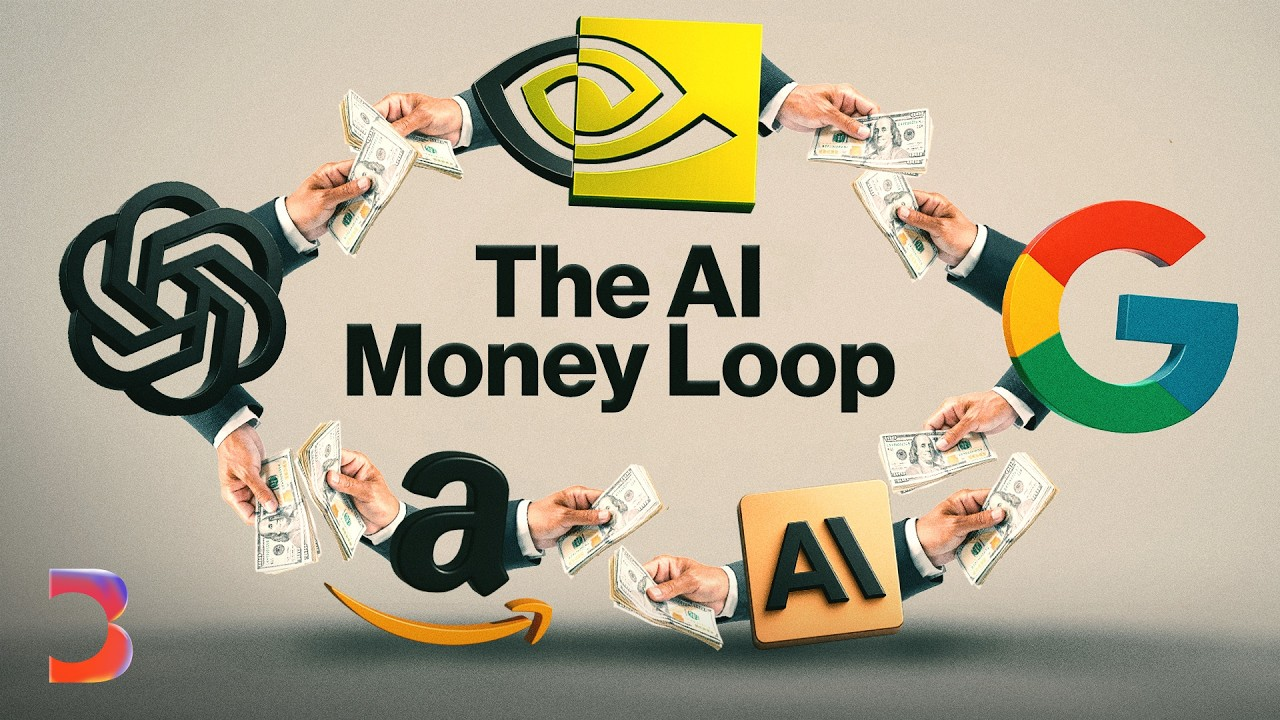

Empowered by corporations’ concentrated computing power, energy, and data, AI has unleashed unprecedented imagination. Tech giants are investing heavily, launching everything from chatbots to successive generations of foundation models like GPT-4 and GPT-4o, along with the autonomous coding agent Devin and Sora, which simulates physical reality. AI’s imagination seems boundless.

Yet AI is inherently a scaled, centralized industry. This technological shift concentrates power further in the tech firms that already dominated the internet era. Massive electricity demands, monopolized cash flows, and control over the vast datasets needed to dominate the AI era erect formidable barriers to entry.

As towers grow taller and decision-makers retreat further behind closed doors, AI centralization brings numerous risks. How can people in the square escape the tower’s shadow? This is precisely what Web3 aims to solve.

At its core, blockchain’s inherent properties enhance AI systems and unlock new possibilities, primarily through:

- “Code is law” in the AI era: using smart contracts and cryptographic verification to create transparent, self-executing systems that reward those closest to intended outcomes.

- Token economics: using token mechanisms, staking, slashing, rewards, and penalties to shape and coordinate participant behavior.

- Decentralized governance: challenging information sources and encouraging more critical, insightful approaches to AI technology, preventing bias, misinformation, and manipulation, and ultimately fostering a more informed and empowered society.

AI also brings new vitality to Web3. While Web3’s impact on AI may take time to prove, AI’s impact on Web3 is immediate, as seen in both the AI memecoin mania and AI Agents lowering the barriers to on-chain applications.

At a time when Web3 is dismissed as niche self-indulgence or criticized for merely replicating traditional industries, AI offers a foreseeable future: a broader and more stable user base drawn from Web2, and more innovative business models and services.

We live in a world where towers and squares coexist. Though AI and Web3 originate from different timelines and starting points, their shared goal is enabling machines to better serve humanity. No one can define a flowing river—we look forward to the future of AI+Web3.
