Interview with ClawdBot Founder: AI Is a Lever, Not a Replacement

TechFlow Selected


“Programming languages no longer matter; what matters is my engineering mindset.”

Compiled by Baoyu

This is another 40-minute interview with Peter Steinberger, creator of ClawdBot/OpenClaw, hosted by Peter Yang.

Peter Steinberger is the founder of PSPDFKit and has nearly two decades of iOS development experience. After Insight Partners made a €100 million strategic investment in his company in 2021, he chose to “retire.” Today, his new project ClawdBot, since renamed OpenClaw, is exploding in popularity. ClawdBot is an AI assistant that chats with you via WhatsApp, Telegram, and iMessage and connects directly to the applications on your computer.

Peter describes ClawdBot like this:

“It’s like a friend who lives inside your computer—slightly weird, but shockingly intelligent.”

In this interview, he shares several fascinating insights: why complex agent orchestration systems are “slop generators,” why “letting AI run for 24 hours” is a vanity metric, and why programming languages no longer matter.

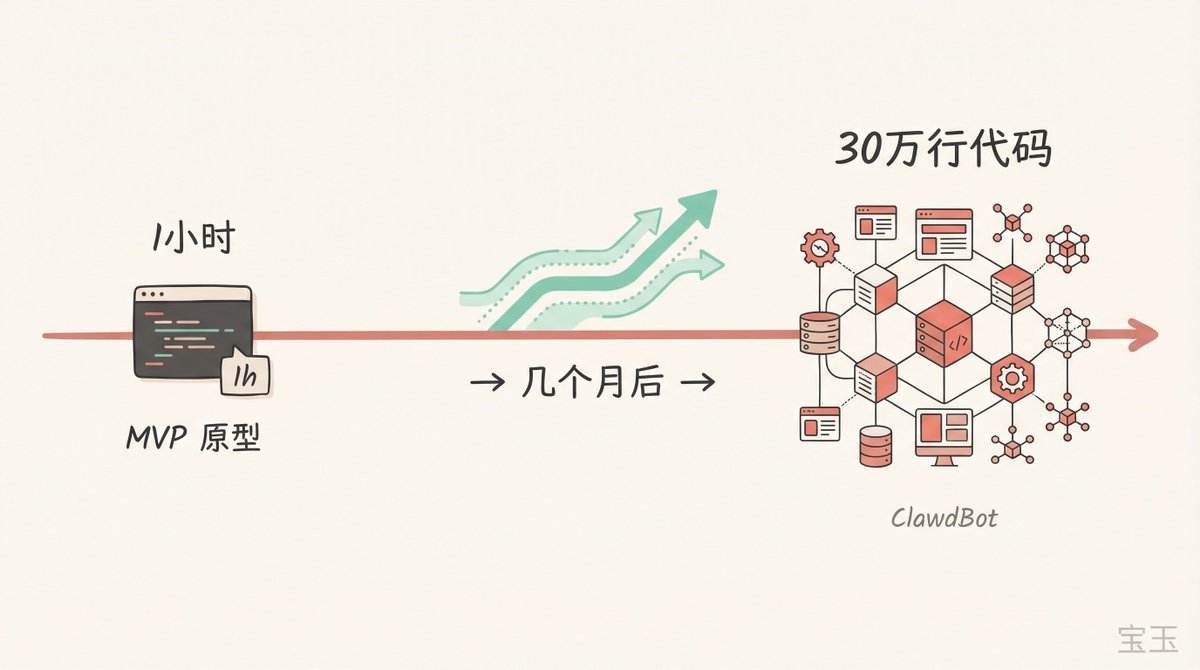

One-Hour Prototype, 300,000 Lines of Code

Peter Yang asks Peter Steinberger what ClawdBot actually is—and why its logo features a lobster.

Peter Steinberger doesn’t answer the lobster question directly. Instead, he tells a story. After “retiring,” he dove headfirst into vibe coding—the practice of letting AI agents write code for you. The problem? Agents might run for half an hour—or stall after two minutes, waiting for your input. You go off for lunch, return, and find they’ve been stuck for hours. It’s incredibly frustrating.

He wanted something he could check from his phone at any time to see what his computer was doing. At first, though, he didn’t build it himself, because he assumed a big company would inevitably do it.

“When nobody had built it by last November, I decided: fine, I’ll do it myself.”

The initial version was extremely simple: hooking WhatsApp up to Claude Code. Send a message; it triggers the AI and returns the result. It took one hour to assemble.
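The interview describes the first version only as WhatsApp hooked up to Claude Code. A minimal sketch of that idea in Python, assuming Claude Code’s non-interactive print mode (`claude -p`) and a hypothetical `send_reply` callback supplied by whatever WhatsApp integration receives the message. The messaging side is not shown, and this is not necessarily how ClawdBot itself is built:

```python
import subprocess
from typing import Callable, List, Sequence

# Anything that runs a command and returns its stdout (lets tests swap in a fake).
Runner = Callable[[Sequence[str]], str]


def run_subprocess(cmd: Sequence[str]) -> str:
    """Run a command and return its stdout; raises CalledProcessError on failure."""
    result = subprocess.run(list(cmd), capture_output=True, text=True, check=True)
    return result.stdout


def build_agent_command(prompt: str) -> List[str]:
    """Assumes Claude Code's non-interactive print mode: `claude -p <prompt>`."""
    return ["claude", "-p", prompt]


def handle_incoming_message(
    message: str,
    send_reply: Callable[[str], None],
    runner: Runner = run_subprocess,
) -> None:
    """Hypothetical chat-bridge hook: forward the text to the agent, relay its answer."""
    prompt = message.strip()
    if not prompt:
        return  # ignore empty messages (e.g. media with no caption)
    output = runner(build_agent_command(prompt))
    send_reply(output.strip() or "(the agent returned no output)")
```

Injecting `runner` keeps the bridge testable without a real agent; anything exposed to a chat app would also need to check that the sender is the machine’s owner.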

Then it “came alive.” Today, ClawdBot comprises roughly 300,000 lines of code and supports virtually every major messaging platform.

“I think this is where things are headed. Everyone will have an incredibly powerful AI companion walking beside them through life.”

He adds: “Once you grant AI access to your computer, it can essentially do anything you can do.”

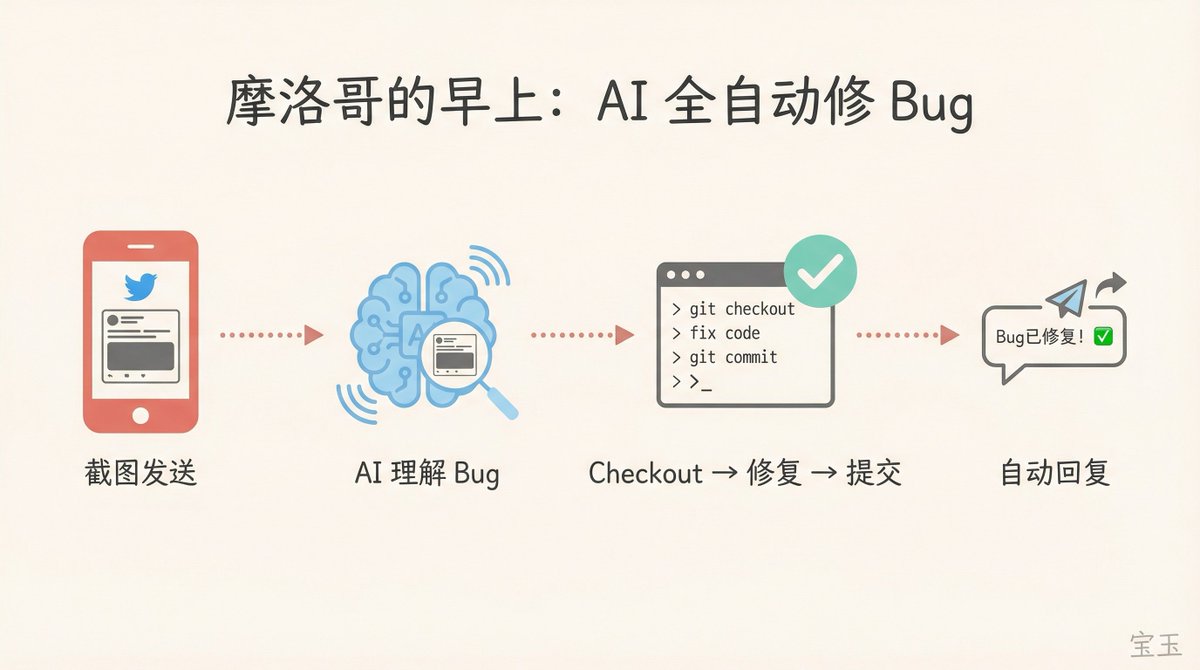

That Morning in Morocco

Peter Yang notes that users no longer need to sit at their computers staring at ClawdBot—they just issue commands.

Peter Steinberger nods—but wants to tell another story.

Once, while celebrating a friend’s birthday in Morocco, he found himself using ClawdBot constantly: asking for directions, getting restaurant recommendations, small stuff. What truly stunned him happened one morning, when someone tweeted about a bug in one of his open-source libraries.

“I snapped a photo of the tweet and sent it over WhatsApp.”

The AI read the tweet, recognized it as a bug report, checked out the corresponding Git repository, fixed the issue, committed the code, and even replied on Twitter saying it was resolved.

“I thought: Wait—this is possible?”

Another time was even wilder. He was walking down the street, too lazy to type, so he sent a voice message. Problem: he hadn’t built voice-message support into ClawdBot at all.

“I saw the ‘typing…’ indicator and thought, Oh no—I’m doomed. Then it replied normally.”

Later, he asked the AI how it pulled it off. It explained: “I received a file without an extension, so I inspected the file header and identified it as Ogg Opus format. Your machine has ffmpeg installed, so I used it to convert the audio to WAV. Then I looked for whisper.cpp—but you don’t have it installed. However, I found your OpenAI API key and used curl to send the audio for transcription.”
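The agent’s improvised pipeline (identify the file by its header, convert with ffmpeg, prefer a local whisper.cpp, fall back to OpenAI’s API) can be sketched as a planner that only builds the commands it would run. This is an illustration, not the agent’s actual code: the whisper.cpp binary name `whisper-cli`, the `.wav` output naming, and the `whisper-1` model choice are assumptions.

```python
import os
import shutil
from typing import Callable, List, Mapping, Optional


def sniff_audio_format(header: bytes) -> str:
    """Identify audio by magic bytes rather than by file extension."""
    if header.startswith(b"OggS"):
        # Every Ogg page starts with "OggS"; an Opus stream's first packet is "OpusHead".
        return "ogg-opus" if b"OpusHead" in header[:64] else "ogg"
    if header.startswith(b"RIFF") and header[8:12] == b"WAVE":
        return "wav"
    return "unknown"


def plan_transcription(
    src: str,
    header: bytes,
    which: Callable[[str], Optional[str]] = shutil.which,
    env: Mapping[str, str] = os.environ,
) -> List[List[str]]:
    """Return the commands to run, falling back from local tools to a hosted API."""
    fmt = sniff_audio_format(header)
    if fmt == "unknown":
        raise ValueError(f"{src}: not a recognized audio file")

    steps: List[List[str]] = []
    wav = src
    if fmt != "wav":
        if which("ffmpeg") is None:
            raise RuntimeError("need ffmpeg to convert to WAV")
        wav = src + ".wav"
        # 16 kHz mono is the input format whisper.cpp expects.
        steps.append(["ffmpeg", "-y", "-i", src, "-ar", "16000", "-ac", "1", wav])

    if which("whisper-cli") is not None:  # assumed name of the whisper.cpp binary
        steps.append(["whisper-cli", "-f", wav])  # model-selection flags omitted
    elif env.get("OPENAI_API_KEY"):
        # OpenAI's audio transcription endpoint. A key on argv is visible to
        # other local processes, so a real tool should pass it another way.
        steps.append([
            "curl", "https://api.openai.com/v1/audio/transcriptions",
            "-H", f"Authorization: Bearer {env['OPENAI_API_KEY']}",
            "-F", f"file=@{wav}",
            "-F", "model=whisper-1",
        ])
    else:
        raise RuntimeError("no local or hosted transcriber available")
    return steps
```

Keeping detection and planning separate from execution makes each fallback decision easy to inspect before anything actually runs.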

Peter Yang responds: “These things really do figure it out, though it’s kind of unnerving.”

Peter Steinberger agrees: “It’s massively more capable than web-based ChatGPT. It’s like ChatGPT unleashed. Many people don’t realize that tools like Claude Code aren’t just great at coding; they’re adept at solving *any* problem.”

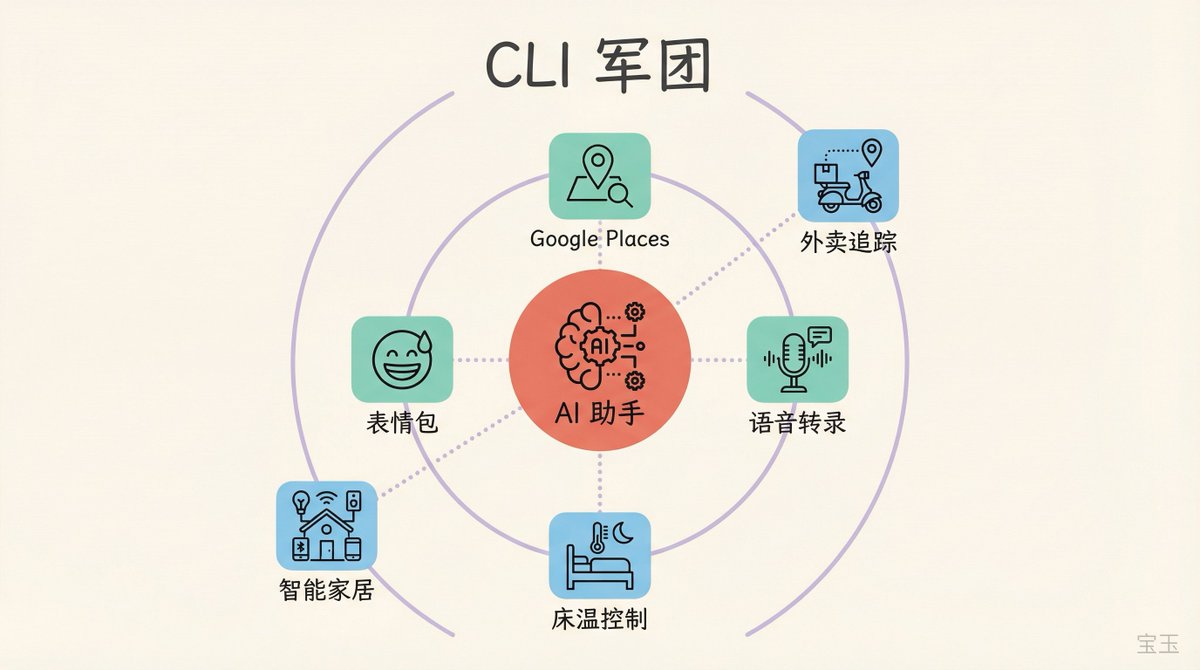

The CLI Army

Peter Yang asks how Steinberger builds those automation tools: does he write them himself or let AI handle it?

Peter Steinberger smiles.

Over the past few months, he’s been steadily expanding his “CLI army.” What are agents best at? Calling command-line tools, because their training data is saturated with exactly that.

He built a CLI for Google services, including the Places API. He built one specifically for searching memes and GIFs, so the AI can spice up replies with visuals. He even built a tool that visualizes sound, so the AI can “experience” music.

“I also reverse-engineered my local food delivery platform’s API, so now the AI can tell me how many minutes remain until my food arrives. And I reverse-engineered Eight Sleep’s API to control my bed’s temperature.”

[Note: Eight Sleep is a smart mattress that adjusts surface temperature; it does not officially offer a public API.]
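Why CLIs suit agents: a tool that takes plain arguments and prints machine-readable output is easy for a model to call and to parse. A hypothetical sketch of a meme-search CLI in that style; the `memes` name, the tiny in-memory index, and the example URLs are all made up for illustration, and Steinberger’s actual tools are not shown in the interview:

```python
import argparse
import json
import sys
from typing import Optional, Sequence

# Hypothetical local index; a real tool would query a search API instead.
MEME_INDEX = {
    "this is fine": "https://example.com/this-is-fine.gif",
    "shrug": "https://example.com/shrug.gif",
    "facepalm": "https://example.com/facepalm.gif",
}


def main(argv: Optional[Sequence[str]] = None) -> int:
    parser = argparse.ArgumentParser(prog="memes", description="Search memes/GIFs (sketch).")
    sub = parser.add_subparsers(dest="command", required=True)
    search = sub.add_parser("search", help="find GIFs whose title contains a query")
    search.add_argument("query")
    search.add_argument("--limit", type=int, default=3)
    args = parser.parse_args(argv)

    q = args.query.lower()
    hits = [{"title": t, "url": u} for t, u in MEME_INDEX.items() if q in t]
    # One JSON object on stdout: trivial for an agent to parse.
    json.dump({"results": hits[: args.limit]}, sys.stdout)
    sys.stdout.write("\n")
    return 0 if hits else 1  # nonzero exit code means "nothing found"


if __name__ == "__main__":
    raise SystemExit(main())
```

An agent would run something like `memes search shrug --limit 1` and read the JSON; the exit code alone tells it whether anything matched.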

Peter Yang presses: “Did you build all these with AI’s help?”

“Here’s the most interesting part: For 20 years at PSPDFKit, I specialized exclusively in Apple ecosystem development—Swift, Objective-C, deeply technical. But when I returned, I decided to switch tracks—I’d grown tired of Apple controlling everything, and Mac apps felt too niche.”

The challenge? Switching from one deep technical stack to another is painful. You understand all the concepts—but not the syntax. What’s a prop? How do you destructure arrays? Every tiny question requires a search—and you feel like an idiot.

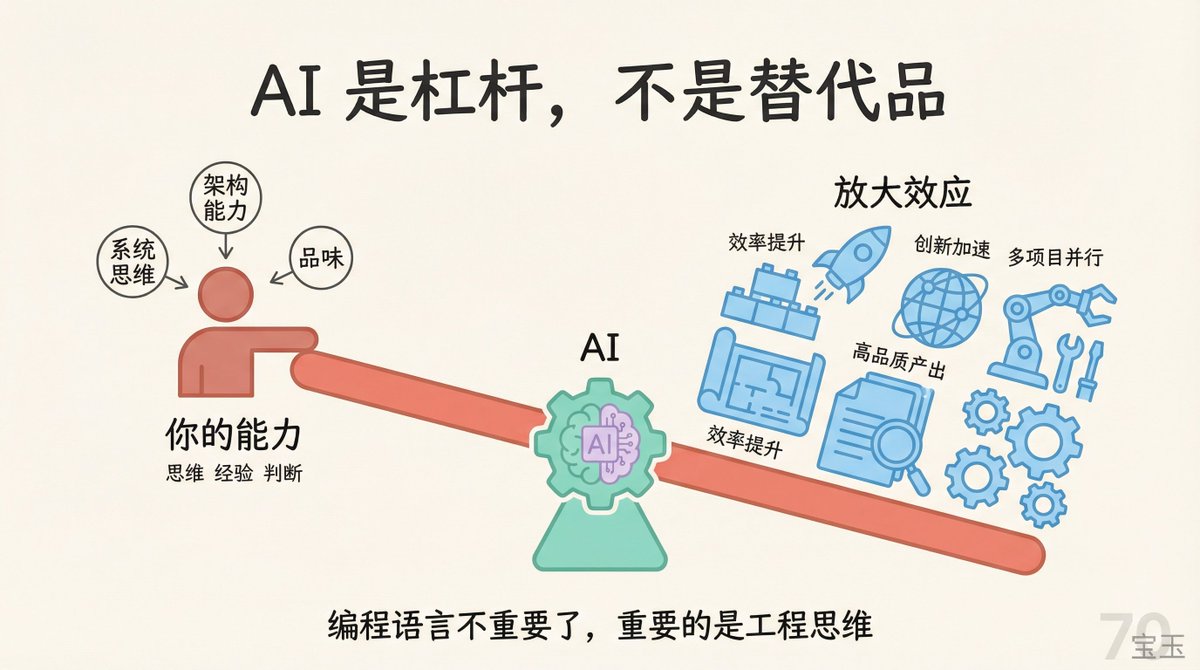

“Then AI arrived—and all that vanished. Your systems thinking, architectural intuition, design taste, judgment of dependencies—these are the truly valuable skills. And now they transfer effortlessly across domains.”

He pauses:

“Suddenly, I felt like I could build *anything*. Language doesn’t matter anymore—what matters is my engineering mindset.”

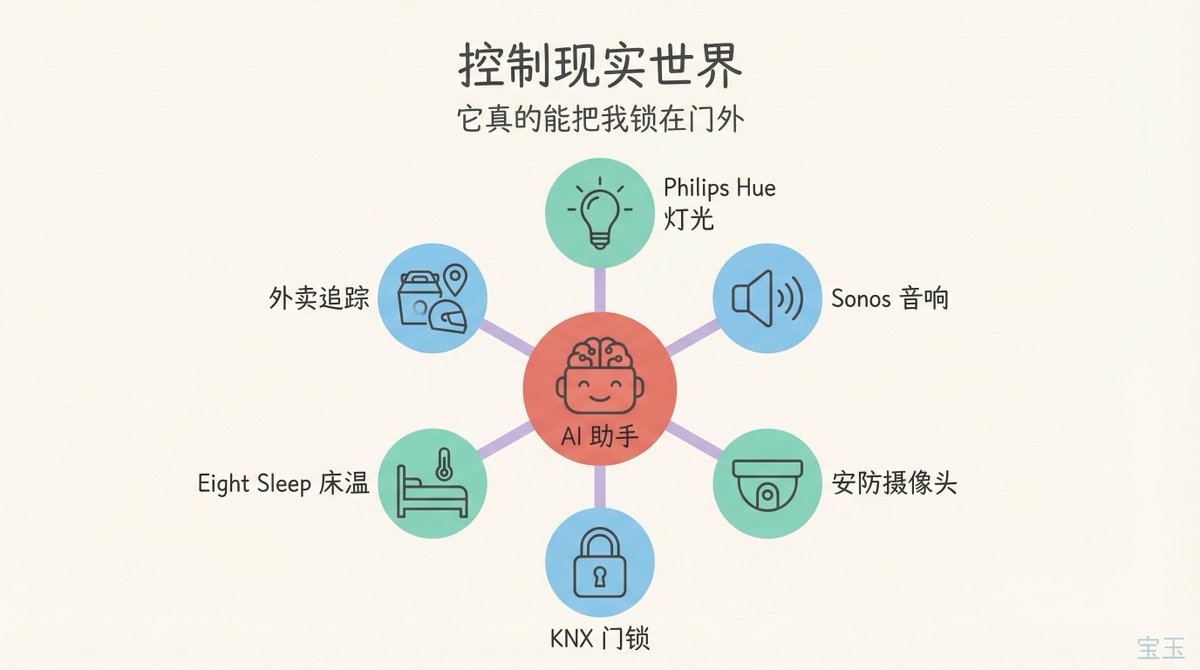

Controlling the Physical World

Peter Steinberger begins demonstrating his setup. His permission list for the AI is staggering:

Email, calendar, all files, Philips Hue lighting, Sonos speakers. He can ask the AI to wake him up each morning, gradually increasing volume. The AI also has access to his security cameras.

“Once, I asked it to watch for strangers. The next morning it told me: ‘Peter, someone’s here.’ I reviewed the footage—and realized it had spent the entire night taking screenshots of my couch, because the camera’s low resolution made the couch look like a person sitting there.”

In his Vienna apartment, the AI even controls his KNX smart-home system.

“It could literally lock me out of my own home.”

Peter Yang asks: “How did you connect all this?”

“I just told it directly. These things are resourceful—they’ll hunt for APIs, Google things, scour your system for keys.”

Users take things even further:

- Some ask it to order groceries from Tesco

- Others use it to place orders on Amazon

- Some automate replies to all incoming messages

- Others add it to family group chats as a “family member”

“I had it check me in on British Airways’ website. That’s basically a Turing test—navigating an airline’s browser interface, which—as you know—is profoundly hostile to humans.”

The first attempt took nearly 20 minutes because the whole system was still rough. The AI had to locate his passport in Dropbox, extract the data, fill out forms, and solve CAPTCHAs.

“Now it takes just a few minutes. It clicks the ‘I am human’ verification button—because it’s operating an actual browser, behaving indistinguishably from a real person.”

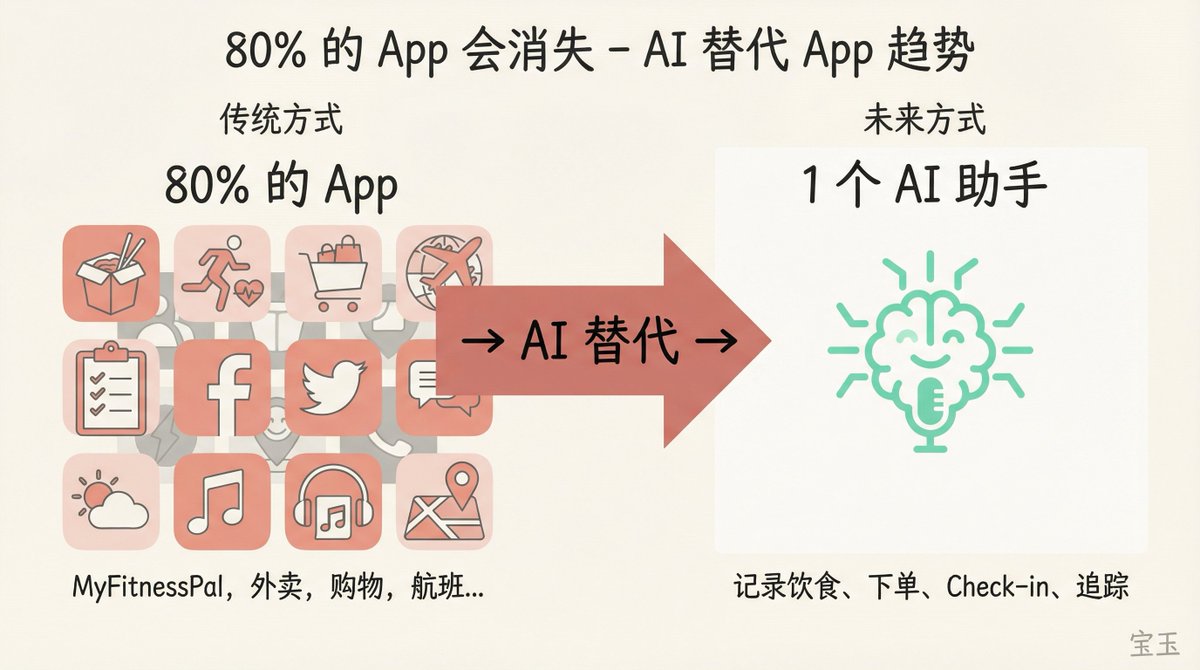

80% of Apps Will Disappear

Peter Yang asks: “What’s a safe, beginner-friendly use case for ordinary users who just downloaded it?”

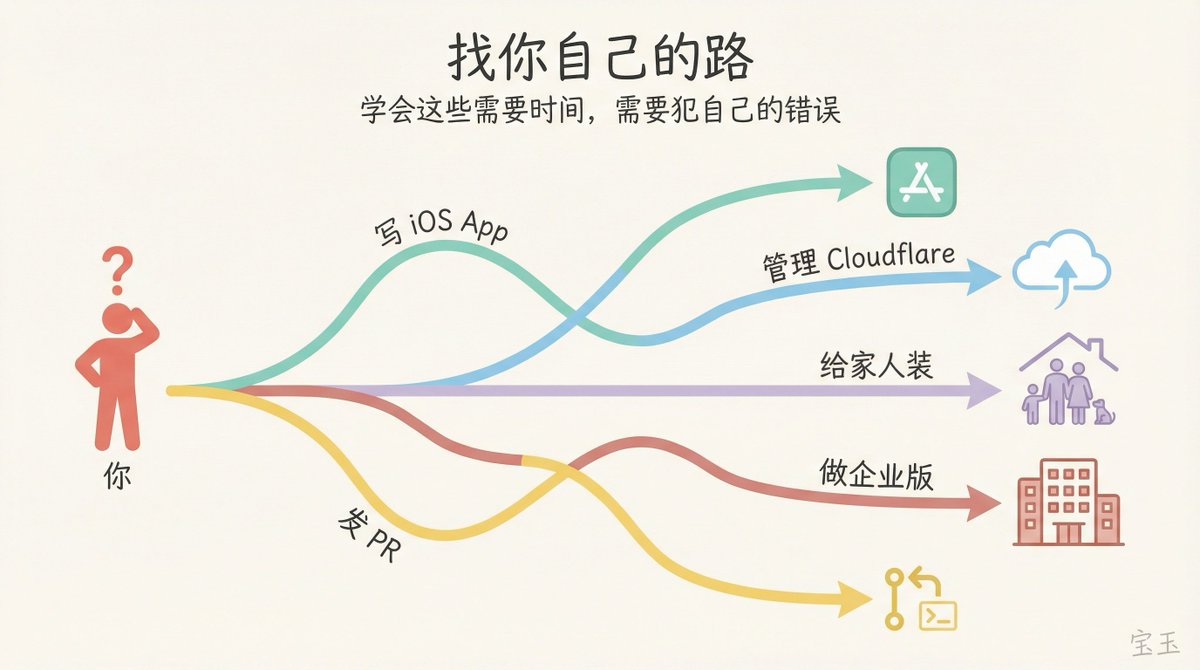

Peter Steinberger says everyone’s path differs. Some dive straight into building iOS apps; others immediately use it to manage Cloudflare. One user installed it for themselves in Week 1, rolled it out to family in Week 2, and launched an enterprise version for their company in Week 3.

“After I installed it for a non-technical friend, he started sending me pull requests. He’d never submitted a pull request in his life.”

But his broader vision is what truly matters:

“Think about it—this thing could replace 80% of the apps on your phone.”

Why bother logging meals in MyFitnessPal?

“I have an infinitely resourceful assistant that already knows I made a bad decision at KFC. I snap a photo, and it saves it to the database, calculates calories, and reminds me to hit the gym.”

Why use an app to adjust Eight Sleep’s temperature? The AI has API access, so you just tell it to do so. Why use a to-do app? The AI remembers for you. Why use a flight check-in app? The AI handles it. Why use shopping apps? The AI recommends, orders, and tracks deliveries.

“An entire layer of apps will gradually vanish—because if they expose an API, they’re simply services your AI calls.”

He predicts 2026 will be the year many begin exploring personal AI assistants—and big companies will finally enter the space.

“ClawdBot may not be the ultimate winner—but this direction is absolutely right.”

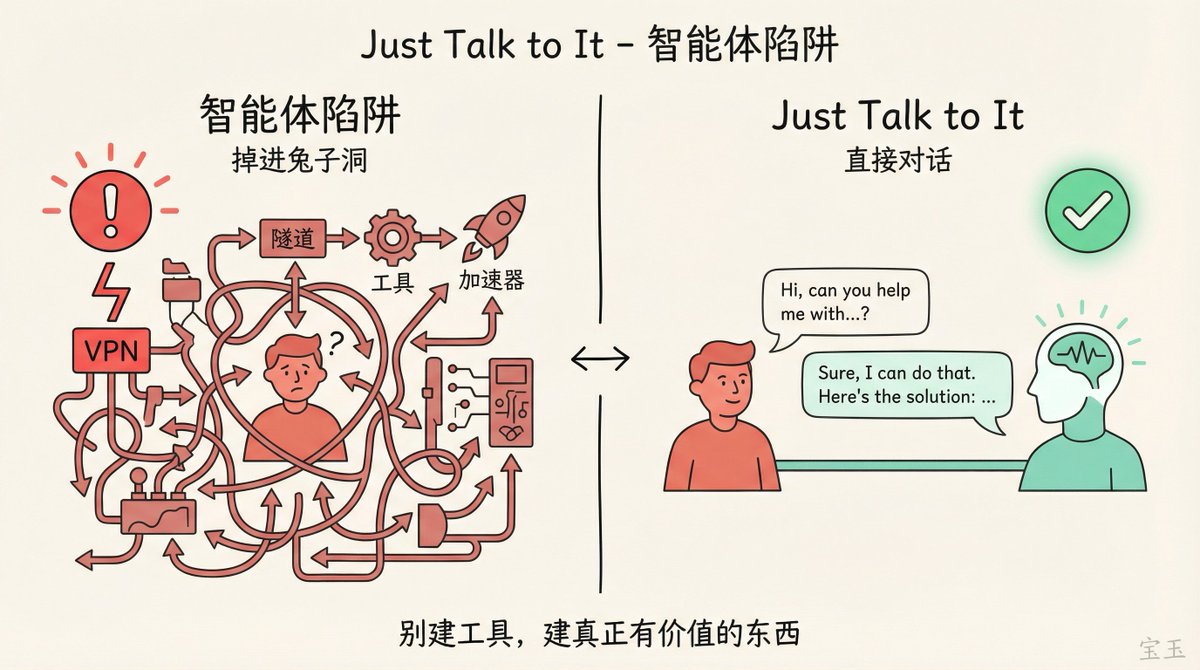

Just Talk to It

The conversation shifts to AI-driven programming methodology. Peter Yang brings up Steinberger’s viral article “Just Talk to It” and invites him to expand on it.

Peter Steinberger’s core point: avoid falling into the “agentic trap.”

“I see too many people on Twitter discover how powerful agents are—then try to make them *even more* powerful—and tumble down the rabbit hole. They build increasingly complex toolchains to accelerate workflows, only to end up building tools instead of building genuinely valuable things.”

He admits he fell into it himself. Early on, he spent two months building a VPN tunnel just to access his terminal from his phone. It worked so well that once, while dining with friends at a restaurant, he spent the entire meal vibe-coding on his phone instead of engaging in conversation.

“I had to stop—mainly for my mental health.”

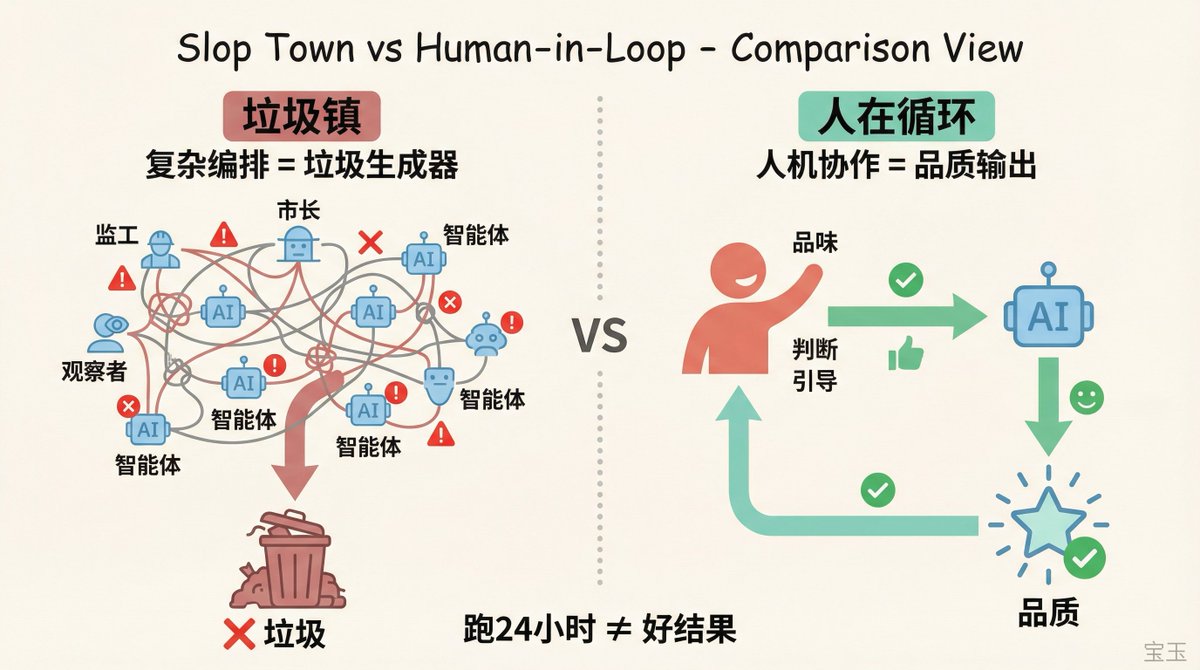

Slop Town

What’s currently driving him crazy is an orchestration system called Gastown.

“An absurdly complex orchestrator running a dozen or more agents simultaneously, talking to each other and dividing up tasks. There’s a watcher, an overseer, a mayor, polecats, and I don’t even know what else.”

Peter Yang: “Wait—there’s a *mayor*?”

“Yes, Gastown has a mayor. I call this project ‘Slop Town.’”

There’s also RALPH mode, a disposable single-task loop: give the AI a small task, let it execute, discard all context, reset completely, and repeat endlessly…

“It’s the ultimate token burner. Let it run overnight, and you wake up to pure slop.”
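The RALPH-style loop boils down to very little code, which is part of the criticism. A minimal sketch under stated assumptions: `run_agent` is a hypothetical stand-in for one agent invocation, and any state that survives between iterations would live in the repository, which is not modeled here:

```python
from typing import Callable, Iterable, List


def ralph_loop(
    tasks: Iterable[str],
    run_agent: Callable[[List[str]], str],
    max_iterations: int = 100,
) -> List[str]:
    """Run each small task with a brand-new context; nothing carries over."""
    results: List[str] = []
    for i, task in enumerate(tasks):
        if i >= max_iterations:
            break  # budget cap; without one the loop just keeps burning tokens
        context = [task]  # fresh context: no history from earlier iterations
        results.append(run_agent(context))
    return results
```

Note what is missing: no human looks at a result before the next iteration starts, which is exactly the feedback loop Steinberger argues for.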

The core issue? These agents lack taste. They’re astonishingly clever in some areas—but without guidance, without clear direction on what you actually want, they produce garbage.

“I don’t know how others work—but when I start a project, I begin with only a vague idea. As I build, play, and explore, my vision gradually sharpens. I try things; some fail; my ideas evolve into their final form. My next prompt depends entirely on what I see, feel, and think *right now*.”

If you try to codify everything upfront in a spec, you miss this vital human–AI feedback loop.

“I don’t know how anyone makes something good without feeling, without taste involved.”

Someone once bragged on Twitter about a note-taking app built entirely with RALPH. Peter replied: “Yes—it looks exactly like something RALPH would generate. No normal human would design it this way.”

Peter Yang summarizes: “Many people run AI for 24 hours not to build an app—but to prove they *can* run it for 24 hours.”

“It’s like a size contest with no reference point. I once ran a loop for 26 hours—and felt proud. But it’s a vanity metric. Utterly meaningless. Being able to build *everything* doesn’t mean you *should*, nor that it’ll be any good.”

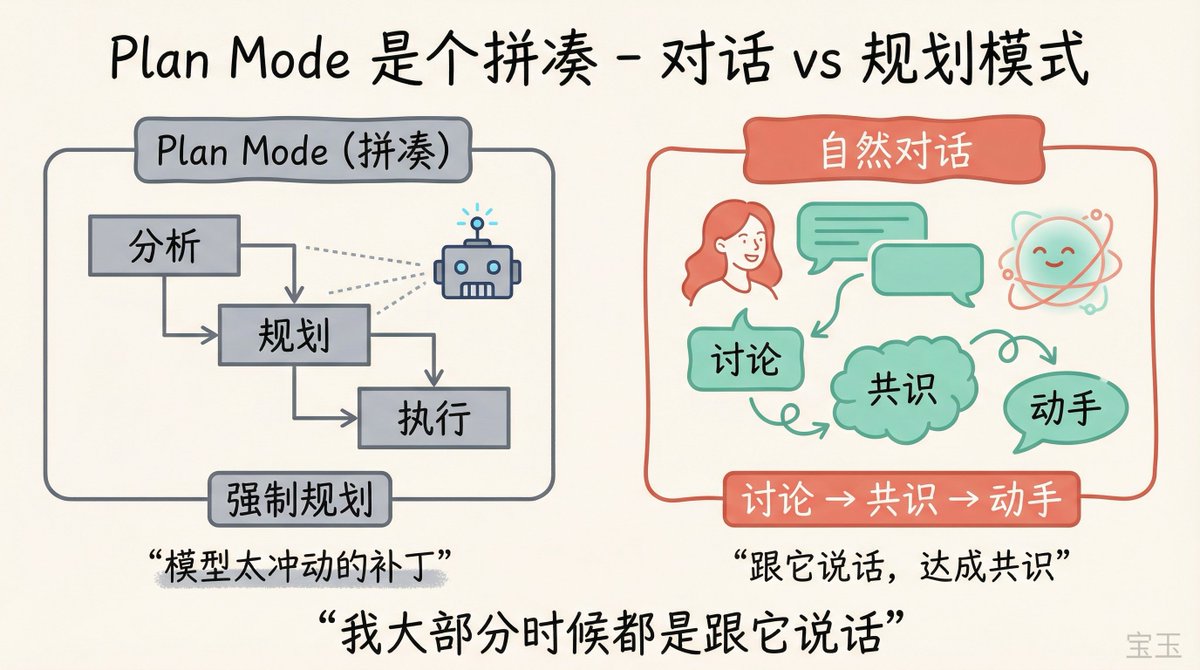

Plan Mode Is a Hack

Peter Yang asks how Steinberger manages context. Don’t long conversations confuse the AI? Does he manually compress or summarize them?

Peter Steinberger calls this “a problem of the old paradigm.”

“Claude Code still suffers from this—but Codex is vastly better. On paper, it may only offer ~30% more context—but subjectively, it feels 2–3x more capacious. I suspect it’s tied to internal reasoning mechanisms. Now, most of my feature development happens within a single context window—discussion and construction occur simultaneously.”

He doesn’t use Git worktrees, calling them “unnecessary complexity.” Instead, he simply keeps multiple checkouts of the repo: clawbot-1, clawbot-2, clawbot-3, clawbot-4, clawbot-5. Whichever one is free gets used; once the work is done, he tests, pushes to main, and syncs.

“It’s like a factory: ideally, all units are busy. But if you only run one, wait times get too long, and you lose flow.”
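One way to automate “whichever is free” is sketched here, assuming a checkout counts as busy while its working tree has uncommitted changes. That heuristic and the helper names are assumptions for illustration; the interview does not say how Steinberger tracks which copy is in use.

```python
import subprocess
from pathlib import Path
from typing import Callable, Optional, Sequence


def working_tree_is_dirty(repo: Path) -> bool:
    """Assumed heuristic: uncommitted changes mean an agent is still working here."""
    out = subprocess.run(
        ["git", "-C", str(repo), "status", "--porcelain"],
        capture_output=True, text=True, check=True,
    ).stdout
    return out.strip() != ""


def pick_free_checkout(
    checkouts: Sequence[Path],
    is_busy: Callable[[Path], bool] = working_tree_is_dirty,
) -> Optional[Path]:
    """Return the first checkout that is not busy, or None if all are taken."""
    for repo in checkouts:
        if not is_busy(repo):
            return repo
    return None


# Hypothetical layout: clawbot-1 ... clawbot-5 side by side in one directory.
CHECKOUTS = [Path(f"clawbot-{i}") for i in range(1, 6)]
```

Plain clones avoid the shared-metadata rules of worktrees (for example, one branch cannot be checked out in two worktrees at once), at the cost of a little extra disk space.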

Peter Yang compares it to real-time strategy games—you command a squad, and must manage and monitor them.

On plan mode, Peter Steinberger holds a controversial view:

“Plan mode is a hack Anthropic had to bolt on because the model is too impulsive: it jumps straight into coding. With the latest models, like GPT-5.2, you just talk to it: ‘I want to build this feature. Here’s how I think it should work. I prefer this design aesthetic; give me a few options. Let’s discuss first.’ It proposes, you deliberate, you align, then you build.”

He doesn’t type—he speaks.

“Most of the time, I’m literally talking to it.”

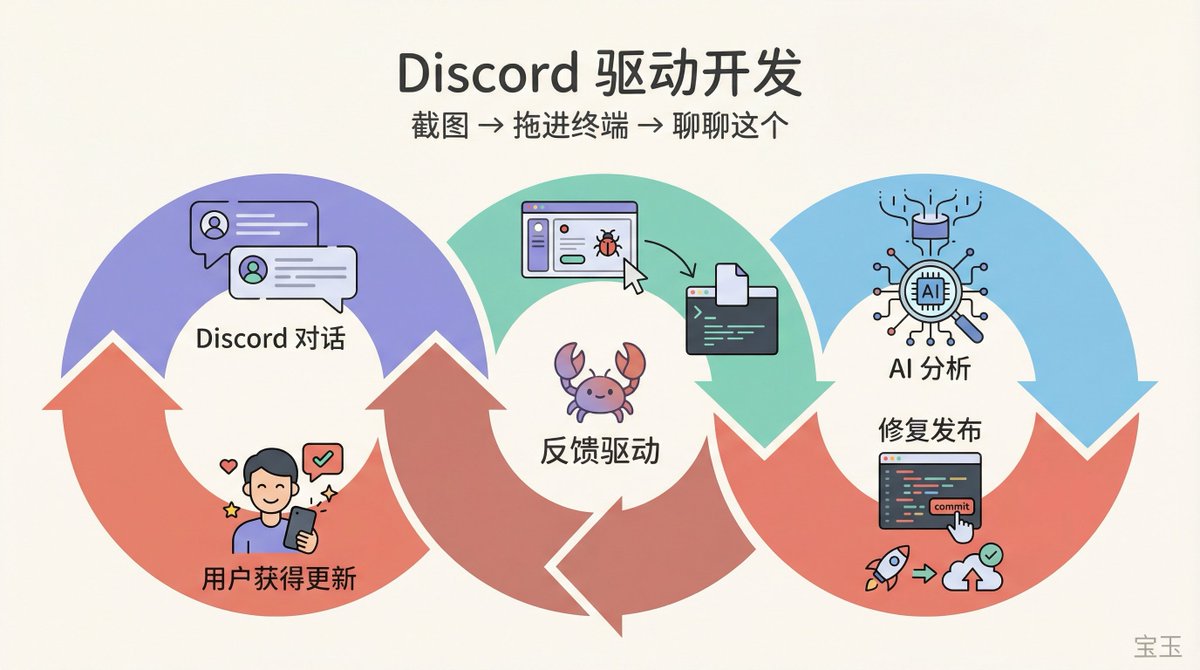

Discord-Driven Development

Peter Yang asks about his process for developing new features: Do you explore problems first? Plan first?

Peter Steinberger says he did “perhaps the craziest thing I’ve ever done”: he connected his private ClawdBot to a public Discord server, letting *everyone* converse with his personal AI, complete with his private memory, in full public view.

“This project defies description—it’s like a hybrid of Jarvis (Iron Man’s AI) and the movie *Her*. Everyone I demo it to in person is electrified—but posting screenshots with captions on Twitter just doesn’t catch fire. So I thought: Let people experience it themselves.”

Users ask questions, report bugs, and suggest features in Discord. His current dev workflow? He screenshots a Discord conversation, drags it into his terminal, and tells the AI: “Let’s talk about this.”

“I’m too lazy to type. Someone asks, ‘Do you support X or Y?’—so I ask the AI to read the code and draft a FAQ entry.”

He even wrote a crawler that scans the Discord help channel at least once a day and prompts the AI to summarize the top pain points, which they then fix.
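The daily digest step can be sketched as building one summarization prompt from the last day’s messages. The `Message` type is hypothetical, fetching from Discord and sending the prompt to a model are left out, and the prompt wording is an assumption:

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Iterable


@dataclass
class Message:
    """Hypothetical shape; a real crawler would map Discord API objects onto this."""
    author: str
    text: str
    sent_at: datetime


def build_digest_prompt(
    messages: Iterable[Message],
    now: datetime,
    window: timedelta = timedelta(days=1),
    limit: int = 200,
) -> str:
    """Turn the last day's help-channel traffic into one summarization prompt."""
    recent = sorted(
        (m for m in messages if timedelta(0) <= now - m.sent_at <= window),
        key=lambda m: m.sent_at,
    )[-limit:]  # keep only the newest messages so the prompt stays bounded
    if not recent:
        return ""  # nothing to summarize today
    lines = [f"- {m.author}: {m.text.strip()}" for m in recent]
    header = (
        "Summarize the most common problems users reported in these "
        "help-channel messages, ranked by how often they come up:"
    )
    return "\n".join([header, *lines])
```

Ranking and summarizing are left to the model; the code only decides which messages it sees.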

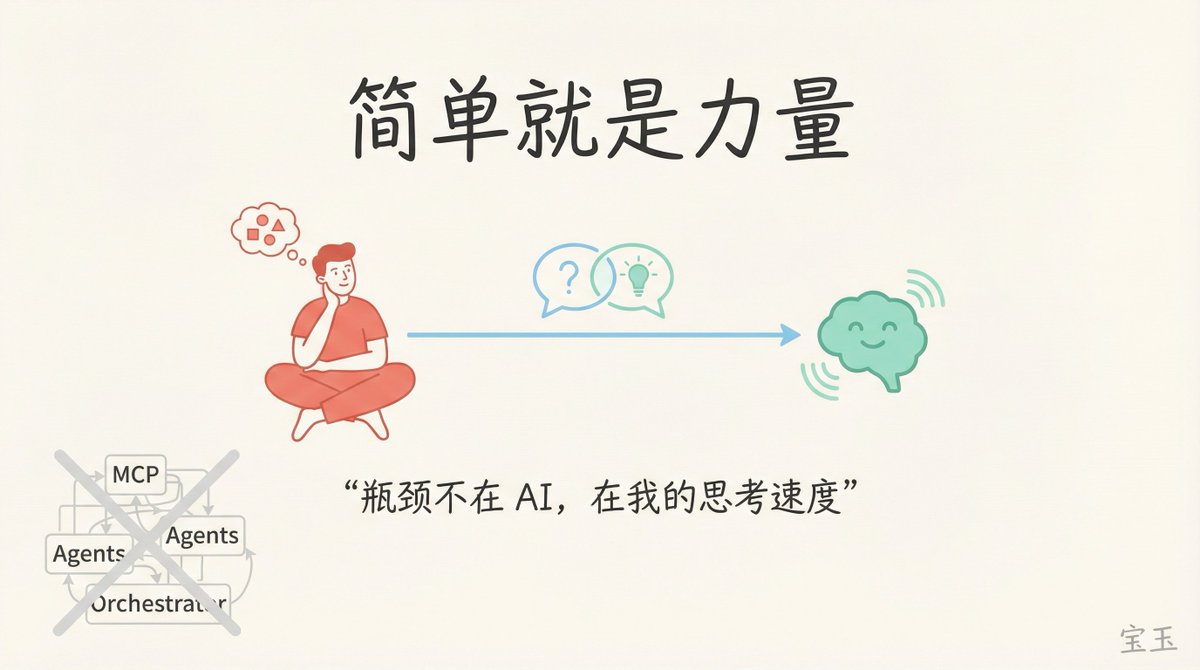

No MCP, No Complex Orchestration

Peter Yang asks: “Do you use fancy things—multi-agent setups, complex skills, MCP (Model Context Protocol), etc.?”

“Most of my skills are life skills: tracking meals, grocery shopping—stuff like that. Very few are programming-related, because they’re unnecessary. I don’t use MCP. I don’t use any of those things.”

He distrusts complex orchestration systems.

“I stay in the loop—and I build products that *feel* better. Maybe faster methods exist—but I’ve already hit a bottleneck: it’s no longer the AI slowing me down. It’s my own thinking speed—and occasionally, waiting for Codex.”

His co-founder from PSPDFKit, a former lawyer, is now sending him PRs.

“AI lets non-technical people build things, and that’s magical. I know some people object that the code isn’t perfect. But I treat pull requests as prompt requests: they convey *intent*. Most people lack the systems understanding needed to steer the model toward the best outcome. So I’d rather receive the intent and implement it myself, or rewrite based on their PR.”

He credits them as co-authors—but rarely merges their code directly.

Find Your Own Path

Peter Yang sums up: “So the core takeaway is—don’t use slop generators; keep humans in the loop, because human brains and taste are irreplaceable.”

Peter Steinberger adds:

“Or put it this way: Find your own path. People constantly ask me, ‘How did you do it?’ The answer is: You have to explore it yourself. Learning this takes time—and making your own mistakes. It’s like learning anything else—except this field changes especially fast.”

ClawdBot is available at clawd.bot and on GitHub. Note the spelling: C-L-A-W-D-B-O-T—like a lobster’s claw.

(Note: ClawdBot has been renamed OpenClaw.)

Peter Yang says he’ll try it too, not to sit at his computer chatting with AI, but to issue commands anytime, even while out with his kids.

“I think you’ll love it,” Peter Steinberger says.

Peter Steinberger’s core philosophy boils down to two statements:

- AI is now powerful enough to replace 80% of the apps on your phone

- But without human taste and judgment in the loop, the output is just slop

These two statements may seem contradictory—but they point to the same conclusion: AI is a lever, not a replacement. It amplifies what you already possess—systems thinking, architectural insight, instinct for great products. Without those foundations, running dozens of agents for 24 hours merely produces industrial-scale slop.

His own practice proves it best: a 20-year iOS veteran built a 300,000-line TypeScript project in months—not by mastering new syntax, but by leveraging language-agnostic strengths.

“Programming languages don’t matter anymore—what matters is my engineering mindset.”
