What exactly is the second open-source ace that DeepSeek has thrown?

DeepSeek's newly open-sourced full-stack communication library, DeepEP, greatly alleviates practitioners' computing power concerns by optimizing the efficiency of data transmission between GPUs.

Author: Liang Siqi

Image source: Generated by Wujie AI

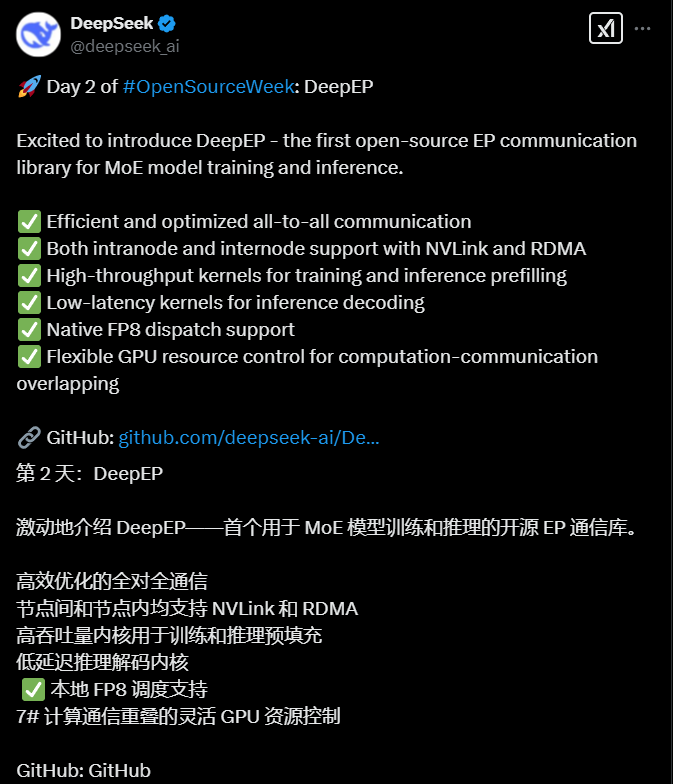

On February 25, DeepSeek, known for its open-source generosity, played another trump card: it released DeepEP, billed as the world's first full-stack communication library for MoE (Mixture-of-Experts) models. Aimed squarely at AI computing power bottlenecks, it quickly collected 1,500 stars on GitHub (a star is roughly a bookmark), a sign of how much attention it drew across the industry.

Many wonder what DeepEP really means. Imagine a delivery hub during Singles' Day: 2,048 couriers (GPUs) frantically moving packages (AI data) across 200 warehouses (servers). The traditional transport system is like having couriers pedal tricycles, while DeepEP equips every one with a "maglev + quantum teleportation" kit, enabling stable and efficient information transfer.

Feature One: Rewriting the Transport Rules

During NVIDIA's conference call on August 29, 2024, Jensen Huang singled out NVLink, NVIDIA's technology for direct GPU-to-GPU interconnection with bidirectional bandwidth of up to 1.8 TB/s. He stressed its importance for low latency, high throughput, and large language models, and called it one of the key technologies driving large model development.

Yet this much-hyped NVLink technology has now been pushed further by a Chinese team. The brilliance of DeepEP lies in its optimization of NVLink: couriers within the same warehouse (GPUs in the same server) now ride maglev tracks, moving 158 containers per second (158 GB/s). It is like cutting the trip from Beijing to Shanghai down to the time it takes to sip water.

The second breakthrough is its RDMA-based low-latency kernel. Imagine cargo being "quantum teleported" between warehouses in different cities, with each plane (network card) carrying up to 47 containers per second (47 GB/s). The planes are loaded while they fly: computation and communication overlap, so neither side sits idle waiting for the other.
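To see why "loading while flying" saves time, here is a toy timing model. It is not DeepEP code: the function names, the chunk count, and the per-chunk times are illustrative assumptions, and the model simply treats computation and transfer as a two-stage pipeline.

```python
def serial_time(chunks, compute, transfer):
    """Each chunk is computed, then sent, before the next chunk starts."""
    return chunks * (compute + transfer)


def overlapped_time(chunks, compute, transfer):
    """Chunk i is sent while chunk i+1 is being computed.

    The first compute and the last transfer cannot be hidden;
    in between, the slower of the two stages sets the pace.
    """
    return compute + (chunks - 1) * max(compute, transfer) + transfer


# Hypothetical workload: 8 chunks, 3 time units to compute, 2 to transfer.
serial = serial_time(8, 3, 2)          # 8 * (3 + 2) = 40
overlapped = overlapped_time(8, 3, 2)  # 3 + 7 * 3 + 2 = 26
print(serial, overlapped)
```

In this made-up case the transfer time is almost entirely hidden behind computation, which is the effect the "loading while flying" image describes.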

Feature Two: Smart Sorting Black Tech: An AI-Powered "Super Brain"

When goods must be distributed to different specialists (the expert sub-networks in MoE models), traditional sorters have to unpack and inspect each box individually. In contrast, DeepEP's "dispatch-combine" system acts as if it had foresight. In training and inference prefill mode, 4,096 packets ride intelligent conveyor belts that automatically identify local or cross-city deliveries. In inference decoding mode, 128 urgent packages take VIP lanes and arrive in 163 microseconds, far faster than a human blink. Meanwhile, dynamic track-switching technology instantly shifts transmission modes during traffic surges, adapting to varying scenarios.
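The sorting step can be pictured with a small sketch. This is a simplified illustration in plain Python, not DeepEP's API: `dispatch`, `combine`, `run_experts`, and the toy experts are hypothetical names, and the combine step uses an unweighted sum where a real MoE layer would apply gating weights.

```python
from collections import defaultdict


def dispatch(tokens, routes):
    """Group (token_index, value) pairs by the expert each token is sent to."""
    buckets = defaultdict(list)
    for idx, (value, experts) in enumerate(zip(tokens, routes)):
        for expert_id in experts:
            buckets[expert_id].append((idx, value))
    return dict(buckets)


def run_experts(buckets):
    """Hypothetical toy experts: expert e multiplies its input by (e + 1)."""
    return {e: [(idx, v * (e + 1)) for idx, v in items]
            for e, items in buckets.items()}


def combine(expert_outputs, num_tokens):
    """Sum each token's expert outputs back into the original token order."""
    merged = [0.0] * num_tokens
    for results in expert_outputs.values():
        for idx, value in results:
            merged[idx] += value
    return merged


tokens = [1.0, 2.0, 3.0]
routes = [[0, 1], [1], [0, 2]]  # expert ids chosen for each token
buckets = dispatch(tokens, routes)
outputs = combine(run_experts(buckets), len(tokens))
print(outputs)  # [3.0, 4.0, 12.0]
```

In a real cluster the buckets live on different GPUs, so dispatch and combine each become an all-to-all exchange; that exchange is the traffic a library like DeepEP is built to speed up.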

Feature Three: FP8 "Bone-Compression Technique"

Standard cargo uses regular-sized boxes (FP32/FP16 formats), but DeepEP compresses cargo into micro-capsules (FP8 format). Because an FP8 value takes one byte instead of the two bytes of FP16 or the four of FP32, each truck can carry two to four times as much. Even more remarkably, these capsules automatically expand back upon arrival, saving both shipping costs and time.
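A rough sketch of the size savings, under loud assumptions: Python has no native FP8 type, so the round trip below uses a scaled 8-bit integer code as a stand-in rather than a real FP8 encoding, and all function names are hypothetical. The byte sizes themselves (4, 2, and 1 byte per value) are the standard widths of FP32, FP16, and FP8.

```python
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}


def payload_bytes(num_values, fmt):
    """Bytes needed to ship num_values numbers in the given format."""
    return num_values * BYTES_PER_VALUE[fmt]


def quantize_8bit(values):
    """Stand-in for FP8: scale values into [-127, 127] and round to integers."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # avoid a zero scale
    return [round(v / scale) for v in values], scale


def dequantize_8bit(codes, scale):
    """'Expand the capsule': recover approximate values on arrival."""
    return [c * scale for c in codes]


values = [0.5, -1.27, 0.01]
codes, scale = quantize_8bit(values)
restored = dequantize_8bit(codes, scale)

print(payload_bytes(1000, "FP16") // payload_bytes(1000, "FP8"))  # 2
print(payload_bytes(1000, "FP32") // payload_bytes(1000, "FP8"))  # 4
print(codes)  # [50, -127, 1]
```

The restored values are close to the originals but not identical, which is the usual trade-off of 8-bit transport: a smaller payload in exchange for a small rounding error.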

This system has already been tested in DeepSeek’s own warehouse (H800 GPU cluster): local freight speed increased threefold, cross-city latency reduced to imperceptible levels, and most disruptively, it achieves true "seamless transmission"—like a courier smoothly stuffing packages into lockers while riding a bike, all movements fluid and uninterrupted.

Now, by open-sourcing this ace, DeepSeek is essentially publishing SF Express's blueprint for unmanned sorting systems. Tasks that once required 2,000 GPUs can now be handled easily with just a few hundred.

Earlier, DeepSeek released FlashMLA (MLA stands for Multi-head Latent Attention) as the first outcome of its "Open-Source Week". It is another key technology for reducing large model costs. To alleviate cost pressures across the industrial chain, DeepSeek is sharing everything it has.

Prior to this, You Yang, founder of Luchen Technology, posted on social media stating, "In the short term, China's MaaS model may be the worst business model." He estimated roughly that delivering 100 billion tokens daily would incur monthly machine costs of 450 million RMB using DeepSeek's service, resulting in a 400 million RMB loss; using AMD chips would generate 45 million RMB in monthly revenue but require 270 million RMB in machine costs, still leading to over 200 million RMB in losses.
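The arithmetic behind these figures can be checked in a few lines. This is only a sketch: it assumes that loss is simply machine cost minus revenue, which is an assumption about how the estimate was made, and the function name is hypothetical. All amounts are in millions of RMB per month.

```python
def monthly_loss(revenue, machine_cost):
    """Loss in millions of RMB, assuming machine cost is the only expense."""
    return machine_cost - revenue


# DeepSeek-based service: 450M cost and a 400M loss imply about 50M revenue.
implied_revenue = 450 - 400
print(implied_revenue)        # 50

# AMD-based service: 45M revenue against 270M machine cost.
amd_loss = monthly_loss(45, 270)
print(amd_loss)               # 225
```

The 225 million RMB result matches the "over 200 million RMB in losses" quoted for the AMD case.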
