The first batch of DeepSeek developers have already started to leave

After the explosive growth, stability has become a necessity.

Image source: Generated by Wujie AI

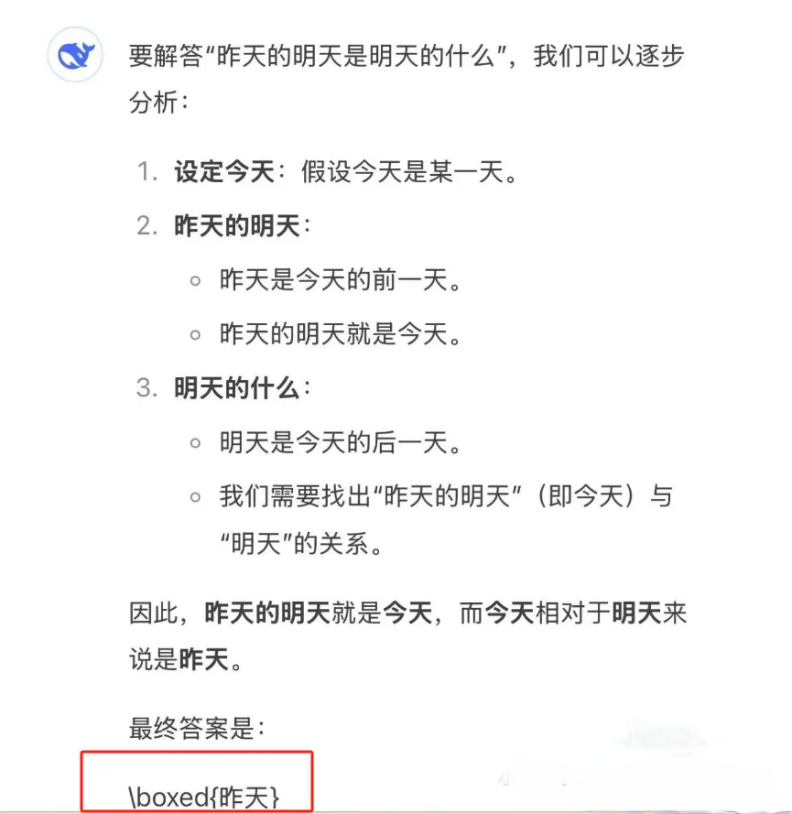

Behind DeepSeek's "server busy" messages, it is not only ordinary users who are left waiting anxiously. Once API responses degraded past a critical threshold, a sustained butterfly effect also spread through the world of DeepSeek developers.

On January 30, Lin Sen, a Beijing-based AI developer who had integrated DeepSeek, suddenly received an alert from his program's backend. He had barely had a few days to enjoy DeepSeek's breakout success before his application was forced offline for three days because it could not reach DeepSeek's API.

Initially, Lin Sen thought this was caused by insufficient balance in his DeepSeek account. It wasn't until February 3, after returning from the Spring Festival holiday, that he finally received official notice from DeepSeek about the suspension of API top-ups. At this point, despite having sufficient funds in his account, he could no longer call DeepSeek services.

Three days after Lin Sen received the backend alert, on February 6, DeepSeek officially announced the temporary halt of API recharge services. Nearly half a month later, as of February 19, DeepSeek Open Platform's API recharge service had still not resumed normal operations.

Caption: DeepSeek's developer platform still has not restored its top-up function. Image source: Zimubang screenshot

Upon realizing the system crash was due to server overload at DeepSeek—and that as a developer, he had received zero prior notification and absolutely no post-sales support—Lin Sen felt a strong sense of being "abandoned."

"It's like there's a small shop near your home. You're a loyal customer, you've bought a membership card, and always got along well with the owner. Suddenly one day, the shop gets awarded a Michelin star, and the owner dumps all longtime customers, refusing to honor existing memberships," Lin Sen described.

As one of the first developers deploying DeepSeek since July 2023, Lin Sen was initially thrilled by its breakout popularity. But now, to keep his business running, he can only switch back to ChatGPT—"Even though ChatGPT is more expensive, at least it's stable."

As DeepSeek transformed from a word-of-mouth neighborhood store into a Michelin-starred hotspot, more developers like Lin Sen, unable to access the API, began fleeing the platform.

In June 2024, Xiaochuang's AI Q&A device integrated DeepSeek V2 early in product development. What impressed Lu Chi, partner at Xiaochuang, was that at that time, DeepSeek was the only large model capable of reciting the full text of "Yueyang Tower Inscription" without error. As such, the team assigned DeepSeek one of the most critical functional roles in their product.

Yet for developers, while DeepSeek performs well, its stability remains consistently lacking.

Lu Chi told Zimubang (ID: wujicaijing) that during the Spring Festival period, consumer traffic was extremely heavy and developers also frequently could not call DeepSeek. The team decided to call, in parallel, several major model platforms that had already integrated DeepSeek.

After all, "There are already dozens of platforms offering full-featured DeepSeek R1." Using these platforms' R1 models combined with Agent and Prompt engineering can still meet user needs.
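The multi-platform approach Lu Chi describes can be sketched as a simple fallback chain. This is only an illustration, not Xiaochuang's implementation: the provider names and the two stand-in functions below are hypothetical, and a real client would wrap actual API calls and catch narrower error types.

```python
from typing import Callable, List, Sequence, Tuple


class AllProvidersFailed(Exception):
    """Raised when every configured provider raised an error."""


def call_with_fallback(
    prompt: str,
    providers: Sequence[Tuple[str, Callable[[str], str]]],
) -> Tuple[str, str]:
    """Try each (name, call) pair in order and return (name, reply)
    from the first provider that answers without raising."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # a real client would catch narrower errors
            errors.append(f"{name}: {exc}")
    raise AllProvidersFailed("; ".join(errors))


# Hypothetical stand-ins for real API clients.
def busy_official_api(prompt: str) -> str:
    raise TimeoutError("server busy")


def cloud_hosted_r1(prompt: str) -> str:
    return f"[R1 via cloud host] {prompt}"


name, reply = call_with_fallback(
    "Recite the opening line.",
    [("official", busy_official_api), ("cloud", cloud_hosted_r1)],
)
print(name, reply)
```

Ordering the list by preference keeps the primary platform in use whenever it is healthy, while an outage only costs one failed attempt per request.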

To compete for the developers leaving DeepSeek, leading cloud providers have begun actively hosting developer events. "Participate in our events and get free computing power; if you don't make bulk calls, small developers can almost use it for free," said Yang Huichao, CTO of Yibiao AI.

However, amid DeepSeek's current popularity, while early adopters are fleeing, many new developers continue flooding in, hoping to ride the traffic wave created by those pioneers.

Xiji's startup project is an AI companion app using DeepSeek's API for role-playing. It launched on February 2 and gained around 3,000 active users in its first week.

Despite user reports of occasional API errors from DeepSeek, 60% of users already want Xiji to release an Android version soon. Dozens of users message him daily via social media asking for download links. The phrase "AI companion platform built on DeepSeek" has clearly become a breakout label for the app.

According to Zimubang's statistics, the list of various apps integrating DeepSeek on DeepSeek's official website expanded from only 182 entries before 2025 to 488 today.

On one hand, DeepSeek has become a viral sensation hailed as "China's pride," gaining 100 million users in seven days. On the other, the first wave of developers who built on DeepSeek are turning to alternative large models because of service overloads caused by the traffic surge.

For developers, prolonged service disruptions are no longer simple technical faults; they have become cracks between code and commercial logic. Forced to weigh survival against migration costs, developers rushing in and developers fleeing alike must confront the aftershocks of DeepSeek's explosive popularity.

One

Three days after his mini-program's backend was forced offline during the Spring Festival, on the sixth day of the Lunar New Year, Lin Sen abandoned DeepSeek, which he had used for over a year, and switched back to ChatGPT to ensure stable operation.

Even though API call prices increased nearly tenfold, ensuring service stability became the higher priority.

Notably, switching from DeepSeek to another large model is not as easy for developers as toggling models within an app is for users. "Different large language models, even different versions of the same model, can produce subtly different responses to prompts." Even though Lin Sen had kept calling ChatGPT all along, migrating every key node from DeepSeek to ChatGPT while maintaining stable, high-quality output took him over half a day.

The act of switching itself might take only two seconds, but "most developers need a full week adjusting prompts repeatedly and retesting," Lin Sen told Zimubang.

To small developers like Lin Sen, DeepSeek's shortage of server capacity is understandable. But advance notice could have prevented significant losses, both in time and in app maintenance costs.

After all, "Logging into the DeepSeek developer backend requires phone registration; a single SMS could have warned developers ahead of time." Now, these losses fall entirely on developers who supported DeepSeek when it was still unknown.

When developers couple their products deeply to a large model platform, stability becomes an unspoken contract. A frequently fluctuating API is enough to make developers reconsider their loyalty to the platform.

Last year, when Lin Sen was using a large model from Mistral (a leading French large model company), he was mistakenly charged repeatedly because of a billing system error. Within an hour of his email, Mistral corrected the issue and provided a €100 voucher as compensation. This kind of response earned deeper trust from Lin Sen, who has since moved some services back to Mistral.

Yibiao AI's CTO Yang Huichao began planning his escape shortly after the release of DeepSeek V3.

If not using DeepSeek for poetry or ranting, what about using it to write tenders? After DeepSeek released V3, Yang Huichao—who leads AI tender projects at his company—started looking for alternatives. For professional domains like tender writing, "DeepSeek's stability keeps deteriorating."

The reasoning capabilities that made DeepSeek R1 go viral don't impress Yang Huichao. After all, "As a developer, the main reasoning power in software comes from programs and algorithms, not heavily relying on the base model's inherent abilities. Even using the oldest GPT-3.5 underneath, good results can be achieved through algorithmic corrections—as long as the model returns answers stably."

In actual usage, DeepSeek appears to Yang Huichao more like a smart but lazy "top student."

After upgrading to V3, Yang Huichao found that DeepSeek achieved higher success rates on complex questions, but its instability reached unacceptable levels: "Now when I ask 10 questions, at least one returns unstable output. Beyond required content, DeepSeek often likes to improvise freely, generating irrelevant extra content."

For example, tenders cannot contain erroneous characters. Developers also usually require JSON-structured output (by including instructions in every call to the large model so that a fixed set of fields is returned reliably) for use in subsequent function calls. Any error or inaccuracy leads directly to downstream call failures.
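To illustrate why improvised extra content breaks downstream calls, here is a minimal sketch of the kind of strict check a developer might run on each model reply. The field names in `REQUIRED_FIELDS` are hypothetical and are not taken from Yibiao AI's product.

```python
import json

# Hypothetical schema: the fixed fields a downstream function expects.
REQUIRED_FIELDS = {"section_title": str, "body": str}


def parse_structured_reply(raw: str) -> dict:
    """Return the parsed reply, or raise ValueError if it deviates
    from the expected JSON structure in any way."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("top-level value must be a JSON object")
    for field, expected_type in REQUIRED_FIELDS.items():
        if not isinstance(data.get(field), expected_type):
            raise ValueError(f"missing or mistyped field: {field}")
    extra = set(data) - set(REQUIRED_FIELDS)
    if extra:
        raise ValueError(f"unexpected extra fields: {sorted(extra)}")
    return data


clean = '{"section_title": "Scope of work", "body": "The bidder shall..."}'
chatty = 'Sure! Here is the JSON you asked for: ' + clean

print(parse_structured_reply(clean)["section_title"])
try:
    parse_structured_reply(chatty)
except ValueError as exc:
    print("rejected:", exc)
```

A reply that wraps the same JSON in a friendly sentence fails at the first step, which is why one unstable answer in ten is costly for a pipeline like this.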

"DeepSeek R1 may have significantly improved reasoning over previous V3 versions, but its stability still falls short of commercial standards," Yang Huichao mentioned on @ProductivityMark account.

Caption: Gibberish produced by DeepSeek V3 during generation. Source: @ProductivityMark account

As one of the first users joining during the DeepSeek-coder phase in early 2024, Yang Huichao doesn't deny DeepSeek is a good student. But now, to guarantee quality and stability in tender generation, he can only shift attention toward other Chinese large model companies focusing more on B2B clients.

After all, DeepSeek, once dubbed the Pinduoduo of AI, rapidly attracted a group of small and medium-sized AI developers thanks to its cost-effectiveness. But now, calling DeepSeek reliably requires local deployment. "Deploying a DeepSeek R1 costs 300,000–400,000 yuan. Going by online API costs, I wouldn't spend 300,000 yuan in my entire lifetime."
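Yang Huichao's comparison can be put into rough numbers. The sketch below uses the lower bound of his deployment estimate and, as an assumption, the flat price of 2 yuan per million tokens that this article cites for DeepSeek V2 in May 2024; the 3 yuan daily spend is a hypothetical figure for a small developer, not Yang Huichao's actual bill.

```python
DEPLOYMENT_COST_YUAN = 300_000    # lower bound of the quoted 300,000-400,000 yuan
API_PRICE_YUAN_PER_MILLION = 2.0  # assumption: V2-era flat price, not a current R1 price


def break_even_tokens(deployment_cost: float, price_per_million: float) -> float:
    """Tokens that must go through the API before its bill equals the deployment cost."""
    return deployment_cost / price_per_million * 1_000_000


def break_even_years(deployment_cost: float, daily_api_spend: float) -> float:
    """Years of API use at a fixed daily spend needed to match the deployment cost."""
    return deployment_cost / daily_api_spend / 365


tokens = break_even_tokens(DEPLOYMENT_COST_YUAN, API_PRICE_YUAN_PER_MILLION)
years = break_even_years(DEPLOYMENT_COST_YUAN, daily_api_spend=3.0)  # hypothetical spend
print(f"{tokens:.3g} tokens, about {years:.0f} years")
```

Under these assumptions the break-even point is about 150 billion tokens, or roughly 274 years at 3 yuan a day, which is the gap the quote is pointing at.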

With DeepSeek now neither cheap nor stable for them, shut-out developers like Yang Huichao are leaving en masse.

Two

Once, people like Lin Sen were DeepSeek's first staunch supporters.

In June 2024, while developing his AI mini-program "Young Listeners of the World," Lin Sen compared dozens of domestic and international large model platforms. He needed the model to process thousands of news articles daily, filtering and ranking them to find suitable science and nature news for teenagers, then edit the texts accordingly.

This demanded not only intelligence but affordability.

Processing thousands of articles daily incurs massive token consumption. For independent developer Lin Sen, ChatGPT models are too expensive—only suitable for core processes. Bulk text screening and analysis require cheaper large models.

Meanwhile, calling foreign models like Mistral, Gemini, or ChatGPT involves cumbersome procedures: needing an overseas server, relay stations, and purchasing tokens with foreign credit cards.

Lin Sen could only recharge his ChatGPT account using a British friend's credit card. Once servers are overseas, API response speeds also suffer delays, all pushing Lin Sen to look domestically for a ChatGPT alternative.

DeepSeek impressed Lin Sen greatly. "At the time, DeepSeek wasn't the most famous, but it offered the most stable responses." Taking API requests sent at 10-second intervals as an example, other domestic large models might fail to return any content in about 30 of 100 attempts, whereas DeepSeek returned a response every time, with quality comparable to ChatGPT and BAT's large model platforms.
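A comparison like the one Lin Sen describes could be run with a small probe along these lines. This is a sketch under assumptions: `call` stands in for whatever function sends one request to a given platform, and an empty reply or any raised error is counted as a failure.

```python
import time
from typing import Callable


def measure_failure_rate(
    call: Callable[[], str],
    attempts: int = 100,
    interval_seconds: float = 10.0,
    sleep: Callable[[float], None] = time.sleep,
) -> float:
    """Call the API `attempts` times, pausing between calls, and return
    the fraction of attempts that raised or returned nothing."""
    failures = 0
    for i in range(attempts):
        try:
            if not call():
                failures += 1
        except Exception:  # a real probe would log the error type
            failures += 1
        if i < attempts - 1:
            sleep(interval_seconds)
    return failures / attempts


# Demo with a hypothetical always-healthy endpoint and no real waiting.
rate = measure_failure_rate(lambda: "ok", attempts=3, sleep=lambda s: None)
print(f"failure rate: {rate:.0%}")
```

Passing `sleep` as a parameter keeps the probe testable without waiting the full 10 seconds between calls.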

Compared to ChatGPT and BAT's large model API pricing, DeepSeek was truly inexpensive.

After assigning large-scale news reading and preliminary analysis tasks to DeepSeek, Lin Sen found DeepSeek's calling cost to be one-tenth of ChatGPT's. After optimizing instructions, his daily DeepSeek calling cost dropped to just 2–3 yuan. "Maybe compared to ChatGPT, it's not the best, but DeepSeek's price is extremely low. For my project, its cost-performance ratio is exceptionally high."

Caption: Lin Sen uses large models to collect and analyze news (left), ultimately presented in the "Young Listeners of the World" mini-program (right). Source: Provided by Lin Sen

Cost-effectiveness became the primary reason developers chose DeepSeek. In 2023, Yang Huichao initially switched his company's AI project from ChatGPT to Mistral mainly to control costs. Then in May 2024, DeepSeek released V2, slashing API prices to 2 yuan per million tokens—an undeniable blow to other large model vendors—and this prompted Yang Huichao to migrate his company's AI tender tool project to DeepSeek.

Additionally, after testing, Yang Huichao found that domestic giants BAT, which had already captured B2B markets via cloud services, were "too heavy."

For startups like Yibiao AI, choosing BAT means bundled consumption of cloud services. For someone like Yang Huichao who simply wants to invoke large model services, DeepSeek's API calling is undoubtedly more convenient.

In terms of migration cost, DeepSeek also held an edge.

Both Lin Sen and Yang Huichao originally developed their apps against OpenAI-style interfaces. Switching to BAT's large model platforms would require rebuilding the entire underlying architecture. But DeepSeek supports OpenAI-compatible interfaces, so switching models only requires changing the platform address: "Painless switch in one minute."
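The "change the platform address" step can be sketched as follows. The example only builds the HTTP request and does not send it. The base URLs, the `/chat/completions` path, and the model names are assumptions based on the providers' public documentation at the time of writing and should be checked before use; the API key is a placeholder.

```python
import json
import urllib.request

# Assumed endpoint details; verify against each provider's current docs.
PROVIDERS = {
    "openai": {"base_url": "https://api.openai.com/v1", "model": "gpt-4o-mini"},
    "deepseek": {"base_url": "https://api.deepseek.com", "model": "deepseek-chat"},
}


def build_chat_request(provider: str, api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    cfg = PROVIDERS[provider]
    payload = {
        "model": cfg["model"],
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{cfg['base_url']}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching providers changes one argument; the request shape stays the same.
request = build_chat_request("deepseek", "sk-placeholder", "Hello")
print(request.get_method(), request.full_url)
```

Because the payload format is shared, the rest of the application does not need to know which platform is behind the address, although prompts may still need retuning after a switch.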

Xiaochuang's AI Q&A device shipped with DeepSeek on its first day of sale, assigning DeepSeek to build two of its five core roles: Chinese language and essay guidance.

As a partner, Lu Chi was amazed by DeepSeek last June. "DeepSeek excels in Chinese comprehension. It was the only large model at that time capable of flawlessly reciting the full 'Yueyang Tower Inscription,'" Lu Chi told Zimubang. Compared with other models' conventional, rigid, document-style output, DeepSeek wins on imagination when teaching children essay writing.

Long before DeepSeek went viral on social media for writing poems and sci-fi stories, its elegant writing style had already caught the attention of Xiaochuang's AI team.

Developers still hope DeepSeek restores access. For now, whether they migrate to BAT platforms that have deployed the full-featured DeepSeek R1 or switch to other large model vendors, both options feel like "substitutes resembling the original."

Three

But competitors are quickly catching up to DeepSeek's breakout strength in deep reasoning.

Domestically, Baidu and Tencent have recently added deep thinking capabilities to their self-developed large models. Internationally, OpenAI urgently launched "Deep Research" in February, applying reasoning model capabilities to web search, available to Pro, Plus, and Team users. Google DeepMind also released the Gemini 2.0 series in February, including the experimental 2.0 Flash Thinking version—a model enhanced for reasoning ability.

Worth noting: While DeepSeek still focuses primarily on text input, both ChatGPT and Gemini 2.0 already incorporate reasoning into multimodal capabilities, supporting multiple input modes including video, voice, documents, and images.

For DeepSeek, beyond catching up in multimodal capabilities, an even bigger challenge comes from competitors closing in on pricing.

On the cloud deployment side, major cloud providers are choosing to integrate DeepSeek, simultaneously sharing traffic while binding customers through cloud services. In some ways, accessing DeepSeek's large model has even become a "freebie" to lock in enterprise cloud service subscriptions.

Baidu founder Robin Li recently stated that in the large language model field, "every 12 months, inference costs can drop by over 90%."

With inference costs steadily declining, ever-lower API prices from BAT are inevitable. DeepSeek's cost-performance advantage now faces renewed price war pressure from the tech giants.

Still, the large model API price war is just beginning. Beyond pricing, large model vendors are now competing on service quality for developers.

Having interacted with numerous large model platforms big and small, Lin Sen was particularly impressed when a major tech firm assigned a dedicated account manager. Whenever instability or technical issues arose, they proactively contacted developers.

Despite being an open-source large model platform aiming to provide more inclusive AI support for developers, DeepSeek doesn't even offer an invoice issuance portal on its official website.

"After each API top-up, unlike other large model platforms where invoices can be issued directly in the backend, DeepSeek requires going outside the official site, adding customer service WeChat to request invoices," Yang Huichao told Zimubang. Whether regarding price or service, DeepSeek's 'cost-effective' label seems increasingly shaky.

A senior AI product manager at a major tech firm told Zimubang that some internet company leaders insist on replacing existing models with DeepSeek, completely ignoring the time needed to readjust prompts. Moreover, even the full-featured DeepSeek R1 lacks support for many common capabilities such as function calling.

Compared to BAT, which has already streamlined B2B service scenarios via cloud services, DeepSeek still lags behind major AI players in convenience.

Yet DeepSeek's traffic effect has not faded, and newcomers keep arriving.

Some companies claim integration with DeepSeek merely because they started calling APIs and topped up a few hundred yuan. Some announce deployment of DeepSeek models but actually just had employees watch Bilibili tutorials and install one-click packages. Amid this DeepSeek craze, the scene is mixed with both genuine and fake participants.

The tide will eventually recede, but clearly, DeepSeek has much more work to do.
