Rejecting ByteDance's $30 million acquisition offer, is Manus an innovation on par with DeepSeek?

TechFlow Selected

Single-handedly secured China a ticket to the AI competition.

By Brother Jing, Whale-selected AI

Manus has broken the record previously set by ChatGPT and DeepSeek in terms of breakout speed—going viral across the internet within just one day of its closed beta launch!

For this Wuhan-based company called "Butterfly Effect," it's actually their second hit product. Their first was Monica, an AI product with 10 million overseas users and annual recurring revenue (ARR) reaching tens of millions of dollars—one of the most profitable AI products of 2024.

Manus is the team’s first AI Agent product. During its closed beta on March 6th, it earned widespread industry praise for its ability to autonomously execute tasks, and many hailed it as the product that “ushers in the Agent era.” Only four days have passed since its sudden fame, and there has not even been a public release yet. Based solely on a few demo cases, the product has already been elevated to near-mythical status, with invite codes reportedly resold for up to 50,000 RMB.

In fact, ByteDance offered $30 million to acquire the company in 2024. The team ultimately rejected the offer, feeling the valuation wasn't fair. According to exclusive information gathered by Brother Jing, the real reason for the low bid was that ByteDance viewed Monica as something of a “wrapper” product, with high user acquisition costs, suboptimal retention metrics, and a risk of being replaced by large models. ByteDance was also planning to launch its own Doubao plugins, so its bid came in low.

For a company like Monica, generating tens of millions of dollars in ARR, a 3x P/S (price-to-sales ratio, to borrow the public-market concept) would still represent only a modest increase over its previous-round valuation, clearly falling short of investor expectations.
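The 3x multiple can be checked with simple arithmetic. The sketch below assumes an ARR of $10 million, the low end of "tens of millions"; that figure is an illustrative assumption, not a number disclosed in this article, and the function name is hypothetical.

```python
# Price-to-sales (P/S) multiple implied by an acquisition offer.
# ASSUMPTION: an ARR of $10M is the low end of "tens of millions";
# the exact figure is not given in the article.


def price_to_sales(offer_usd: float, annual_revenue_usd: float) -> float:
    """Return the P/S multiple: offer price divided by annual revenue."""
    if annual_revenue_usd <= 0:
        raise ValueError("annual revenue must be positive")
    return offer_usd / annual_revenue_usd


OFFER_USD = 30_000_000        # ByteDance's reported offer
ASSUMED_ARR_USD = 10_000_000  # hypothetical ARR, for illustration only

multiple = price_to_sales(OFFER_USD, ASSUMED_ARR_USD)
print(f"Implied P/S: {multiple:.1f}x")
```

Any ARR above the assumed $10 million would push the implied multiple below 3x, which makes the offer look even thinner from an investor's point of view.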

Back in the mobile internet era, the founders had already sold a company to a unicorn. Now, at the dawn of the AI age, from Monica to Manus, the new team has demonstrated exceptional product execution, creating two breakout hits with minimal funding. This time, Sequoia China, Tencent, and others have decided to back their continued entrepreneurship. In an AI-native landscape where even major tech firms struggle to create hits, this small team has managed exactly that.

The breakout of Manus has come amid extraordinary acclaim—many regard it as an innovation on par with DeepSeek, the key that unlocks the AI Agent era, or even a representative step toward AGI. At the same time, it has also drawn massive criticism: some claim it lacks core technology and is merely a shell; others suspect it’s a coordinated KOL marketing stunt that hasn’t made waves abroad; still others argue the service cost will be too high to scale practically.

Amid all the debate, Brother Jing has conducted an in-depth analysis of the product, aiming to share a more objective and long-term perspective on its breakthroughs and significance.

DeepSeek breaks compute centrality; Manus ushers in the Agent era

At the small-scale Manus product launch event on March 6th, the company boldly declared “The next ChatGPT moment,” signaling the opening of the Agent era.

Whether Manus truly qualifies as the next “GPT moment” remains to be seen. But DeepSeek, which went viral a month ago, has already proven its capabilities.

DeepSeek exploded into popularity during the Spring Festival, offering users their first real taste of reasoning models. It felt genuinely intelligent—the depth, breadth, and flexibility of its responses far surpassing familiar tools like Doubao and Yuanbao. This is precisely the advantage of reasoning models over instruction-following ones, and a testament to DeepSeek’s strategic insight.

In 2025, large models face three frontiers: larger parameters, multimodality, and reasoning. The first path involves brute-forcing scale—Grok-3 leveraged 200,000 H100 GPUs to push the scaling laws, while others use MoE architectures to grow bigger. Multimodality is where most players focus—OpenAI abroad, and ByteDance’s Doubao, Tencent’s Hunyuan, and StepFun domestically—all integrating DiT architectures to master seeing, hearing, writing, and speaking. The third frontier lies in reasoning, using reinforcement learning (RL) to enhance model intelligence. DeepSeek leads here, with Tongyi quickly following suit.

DeepSeek’s greater strength, however, lies in its exceptional cost control—especially evident when it open-sourced five projects consecutively. This revealed deep infrastructure optimization capabilities, challenging NVIDIA’s “compute-centric” technological-financial order.

What is “compute centrality”? U.S. financial hegemony has undergone three foundational shifts: from gold, to oil, to computing power—each time redefining the global credit system around monopolized critical resources to sustain dollar dominance.

End of the Gold Standard (1944–1971)

The Bretton Woods system tied the dollar to gold, but insufficient U.S. gold reserves led to collapse. In 1971, Nixon severed the dollar-gold link, forcing the search for a new anchor.

Petrodollar Hegemony (1974–present)

A secret U.S.-Saudi agreement ensured oil was traded in dollars, creating an “oil-dollar-Treasury” cycle: oil exporters reinvested dollar earnings into U.S. Treasuries, cementing the dollar as the world’s reserve currency. At its peak, 86% of global oil trade was dollar-denominated, enabling the Fed to extract wealth globally through monetary cycles.

Rise of Compute Centrality (2020s–)

In the digital age, computing power has become the new means of production. NVIDIA’s H100 chip functions as “computing currency.” In 2023, the global computing market reached $2.6 trillion, with U.S. firms capturing 60%. Computing power is now replacing oil as the foundation of dollar credibility.

The massive computational demands of large models have fueled NVIDIA’s stock surge—up over 435% in two years, with market cap growing from $300 billion to nearly $3 trillion in the past decade.

Manus, however, hasn’t yet shaken the global tech community or impacted NVIDIA’s stock like DeepSeek did. Yet according to partner Zhang Tao, the company has only about 50 people and built this sensation in just two to three months.

WeChat search index comparison: Manus hasn't surpassed DeepSeek at its peak

Domestically, though, Manus is the hottest AI product. Merely releasing a few website demos sparked intense online discussion. With invite codes in extremely short supply, people are paying premium prices just to get access. An app that generates fake Manus invite codes even topped the iOS paid app chart in mainland China on March 8th—despite being useless, it rode the wave of traffic.

Manus leads in product insight; big tech lags in innovation

Most people have not used OpenAI’s Deep Research (priced at $200/month), so trying the domestic alternative Manus is a genuinely impressive experience for them. Brother Jing tested it with the following task:

When tasked with creating a report on embodied intelligence, it stalled during data analysis, failing completely when asked to turn it into a PPT. On a second try, asking for a written report, it stopped again before generating charts.

Currently, Manus often fails to understand or manage its own capabilities—trying to do too much at once.

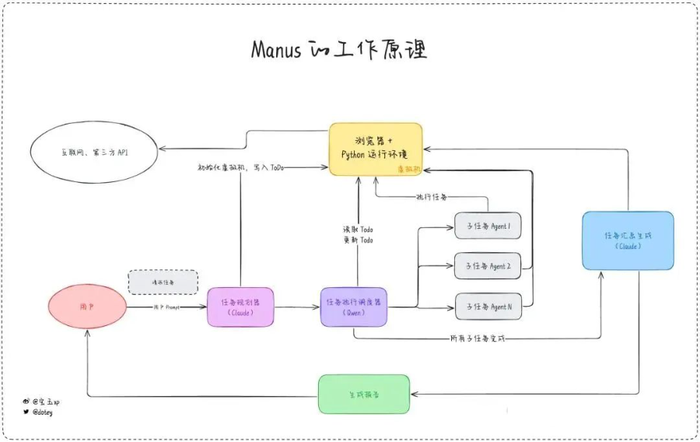

Technically, Manus isn’t particularly complex. It integrates computer use, virtual machines, and multi-agent collaboration into a single AI product.

Source: Baoyu AI

Manus’ key breakthrough, however, lies in achieving product-market fit for general-purpose Agents—something automation tools like Devin and bolt.new have failed to do.

A former employee shared on social media that the company has deep engineering experience and agent workflow expertise:

September–October 2023: First launched agent capabilities in China, with todolist.md reflecting best practices after studying various agent frameworks.

March 2024: Built a GPTs platform.

Early 2024: Began technical accumulation on browser automation, gaining deep understanding of browser context utilization.

November 2023: Started work on search, building agent web-retrieval skills (outside my involvement).

July 2024: Learned social growth tactics via Roast.

November 2024: Gained insights into coding abilities across different models through their coding product.

"Each piece is relatively thin, but the combinatorial innovation at this moment is undeniably strong," the employee commented.

Brother Jing believes Manus’ greatest success lies in surpassing big tech in product vision.

Xiao Hong, founder of Butterfly Effect, is a serial entrepreneur. He previously launched Yiban Plugin—a WeChat tool generating millions in revenue (our Whale-selected account’s social media team still pays to use it). He then capitalized on the enterprise WeChat SCRM trend with Weiban Assistant, caught the AI wave with the large model aggregator Monica, and now rides the AI Agent wave with Manus.

Successfully launching two AI-native products in succession is no easy feat. Even OpenAI, aside from ChatGPT, has struggled—most of its other offerings like GPTs, SearchGPT, DALL·E, and Whisper remain half-baked.

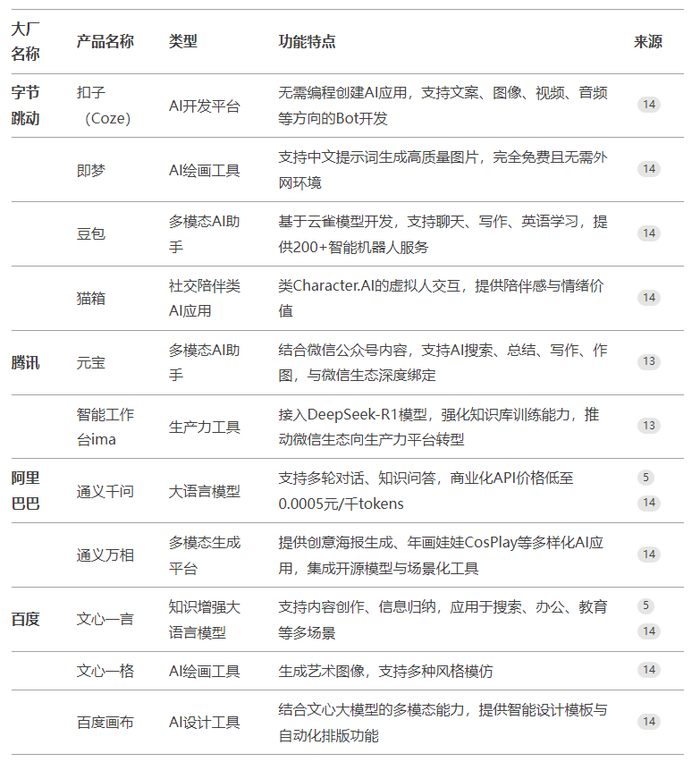

Domestic tech giants, meanwhile, generally lack originality in their AI products. Whether AI social, AI search, or AI coding tools, they mostly follow rather than lead.

Image generated by Tencent Yuanbao AI

Butterfly Effect, having captured early gains with Monica via AI plugins, is now first to market with a mature Agent product. According to Sam Altman’s five-stage AI framework—L1 (chatbots), L2 (reasoners), L3 (agents)—Manus successfully broke out as an early L3-stage product.

Their philosophy of “Less Structure, More Intelligence” helped them avoid competing directly with big tech on AI browsers, instead discovering a new path forward.

As for whether this breakout is purely due to marketing leverage, Brother Jing doesn’t think so.

When Monica launched its Chinese version, it did collaborate with KOLs by distributing free membership codes. For Manus, attendees at small KOL briefings were told it was the “world’s first general-purpose Agent product,” but as far as Brother Jing understands, no paid promotion was involved.

If Manus hadn’t gained traction, the team might have followed Monica’s playbook with KOL giveaways. That is now clearly unnecessary, although judging from WeChat search trends, Manus still hasn’t reached DeepSeek-level popularity.

Wu Bingjian, partner at Soul Capital, once noted: After DeepSeek’s breakout, society was effectively educated—people started competing on technical depth: how to improve Attention mechanisms, optimize MoE, implement FP8/FP16 mixed training. Next comes originality—who can invent the next model architecture, discover a new training paradigm?

In this sense, Manus refocuses competition back on cutting-edge innovation. Instead of AI assistant ad wars, attention returns to what groundbreaking applications and Agent products we can build in 2025 and beyond.

Being a wrapper doesn’t matter; success is still far off

Current AI products fundamentally lack true moats. Product ideas can’t be patented, and engineering execution is where big tech excels—so Brother Jing previously joked on Xiaohongshu: Guess which giant will copy this next?

That said, compared to earlier general Agent attempts like OpenAI’s Operator, Anthropic’s computer use capability for Claude, or Tencent’s AppAgent, Manus delivers a more polished, fully engineered Agent experience.

Yet polish alone isn’t a sustainable barrier. Within three hours of Manus going viral, MetaGPT released OpenManus—an open-source clone.

Once Manus identifies real user needs, refines its engineering path, and iterates on features, outsiders can copy the design almost as if taking an open-book exam. If replication takes only half a day, does Manus have any defensibility at all?

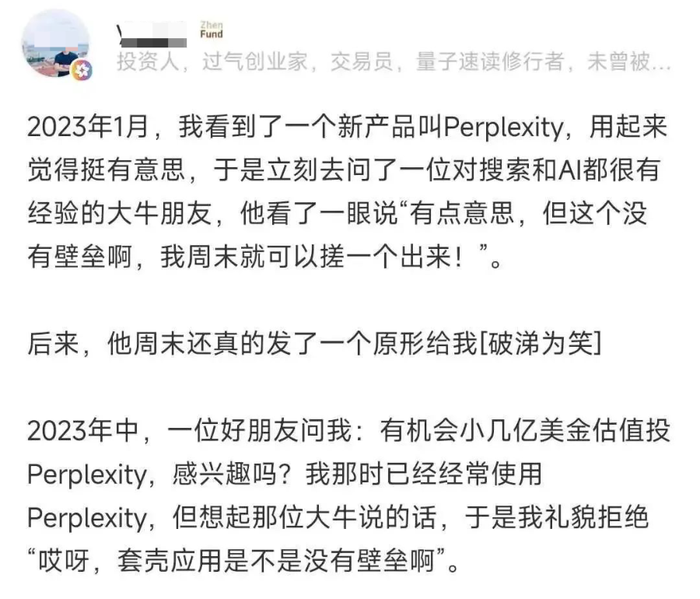

An investor missed Perplexity due to the 'wrapper' argument

Today’s countless AI search tools haven’t hindered Perplexity’s growth. Native product understanding allows Perplexity to continuously innovate, while competitors can only follow and imitate.

The same applies to Manus. Right now, its most urgent need may be accepting investment from a major tech firm. The closed beta exists partly because server capacity can’t handle broader traffic.

According to media reports from briefings, the team disclosed a cost of approximately two dollars per task. While this is already one-tenth the cost of Deep Research, it still comes to nearly 15 RMB per task. This explains why invite codes are tightly controlled: even limited distribution caused internal system crashes.

Accepting funding from a big tech player would provide not just capital, but crucially, low-cost access to large model APIs. Media reports indicate Manus primarily calls on Claude models, supplemented by fine-tuned open-source Tongyi models. Will they accept investment from Alibaba, Tencent, or ByteDance—similar to Kimi’s model of capital plus resource support?

Only then could Manus offer subscription plans under 1,000 RMB annually. Otherwise, pricing above that threshold risks turning it into a toy for only a niche group of professionals.
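The pricing constraint can be made concrete with a rough calculation. The sketch below assumes an exchange rate of 7.25 RMB per dollar (an assumption chosen to be consistent with the "nearly 15 RMB" figure above) and ignores every cost other than the reported two dollars per task; the function names are hypothetical.

```python
import math

# Rough break-even estimate for a flat annual subscription.
# ASSUMPTIONS: the exchange rate is illustrative, and the only cost
# counted is the reported ~$2 per task (no staff, servers, or margin).
USD_TO_RMB = 7.25          # assumed exchange rate
COST_PER_TASK_USD = 2.0    # per-task cost reported at the briefings
ANNUAL_PRICE_RMB = 1000.0  # subscription threshold discussed above


def cost_per_task_rmb(cost_usd: float, rate: float) -> float:
    """Convert the per-task cost from USD to RMB."""
    return cost_usd * rate


def break_even_tasks(annual_price_rmb: float, task_cost_rmb: float) -> int:
    """Whole number of tasks a subscriber can run before costs exceed the fee."""
    return math.floor(annual_price_rmb / task_cost_rmb)


task_cost = cost_per_task_rmb(COST_PER_TASK_USD, USD_TO_RMB)
tasks_per_year = break_even_tasks(ANNUAL_PRICE_RMB, task_cost)

print(f"Cost per task: {task_cost:.2f} RMB")
print(f"Tasks covered by a {ANNUAL_PRICE_RMB:.0f} RMB plan: {tasks_per_year}")
```

Under these assumptions, a 1,000 RMB annual plan covers roughly 68 tasks at cost, a little over one per week, which illustrates why cheaper model access matters so much for a mass-market price.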

Equally important is refining product details and service capabilities—rapid iteration is key to survival.

Right now, generating a response with Manus takes far too long, and many tasks still fail. More product thinking is needed beyond what product lead Zhang Tao claims: “It’s really simple—no secrets, just trust the power of the model.”

The model is the foundation, but product details define service quality. For example, although Claude 3.7 Sonnet shattered ceilings in coding ability, Cursor still attracts paying users thanks to its superior code auto-completion features.

More importantly, aggregation patterns built on MCP (Model Context Protocol) are showing strong growth potential. This should be Manus’ future development path.

Given the pace of large model evolution, built-in agents may become standard. If GPT-5 integrates reasoning and instruction models, multimodal capabilities, and native Agent functions, it could be unexpectedly powerful. Domestic giants are likely pursuing similar paths. Until then, Manus must race to build user scale and revenue.

Conclusion

DeepSeek shattered the myth that only foreign companies can succeed with large models, demonstrating ultra-low-cost deployment and proving that “Eastern magic” can challenge the global tech-financial order. Put simply, DeepSeek single-handedly secured China’s seat at the AI competition table, ending the era when global investors systematically undervalued Chinese tech assets.

As for Manus, it showcases the strongest form of AI-native applications—not cookie-cutter chatbots or rigid AutoAgents, but practical, scalable solutions for diverse scenarios.

At best, it might become the WeChat of the next AI era, though it is unlikely to surpass Facebook in global reach. At worst, it has raised public awareness of Agents and inspired countless startups to keep dreaming.
