IO.NET: On the Brink of Token Launch with Triple Hype of AI, DePIN, and Solana Ecosystem

In a market context where AI computing demand exceeds supply, the most critical factor for a distributed AI computing network is the scale of GPU supply.

Author: Alex Xu, Mint Ventures

Introduction

In my previous article, I mentioned that compared to the previous two cycles, this crypto bull market lacks sufficiently impactful new business and asset narratives. AI is one of the few emerging narratives in Web3 this cycle. In this article, I will combine insights from this year’s hot AI project IO.NET to explore two key questions:

- The commercial necessity of combining AI with Web3

- The necessity and challenges of decentralized compute services

Additionally, I will analyze the representative decentralized AI compute project IO.NET—covering its product logic, competitive landscape, team background, and valuation implications.

Some of my thinking on the integration of AI and Web3 was inspired by Delphi Digital researcher Michael Rinko’s report titled “The Real Merge”. Parts of this article reflect my interpretation and references to that work, which I highly recommend readers explore.

The views expressed here represent my current understanding as of publication and may evolve over time. They are inherently subjective and may contain factual inaccuracies, data errors, or flawed reasoning. This content should not be taken as investment advice. Feedback and discussion from industry peers are welcome.

Below is the main body of the article.

1. Business Logic: The Intersection of AI and Web3

1.1 2023: A New "Miracle Year" Driven by AI

Looking back at human history, whenever technology achieves breakthrough progress, it triggers transformative changes—from individual daily life and industrial structures to the course of human civilization itself.

Two years stand out in human history—1666 and 1905—now widely known as the “Miracle Years” in scientific history.

1666 earned its status due to Isaac Newton's concentrated burst of scientific achievements during that year. He laid the foundation for optics, invented calculus, and derived the law of universal gravitation—one of the cornerstones of modern science. Each of these contributions alone would have profoundly shaped future centuries of scientific development, collectively accelerating scientific advancement significantly.

The second miracle year was 1905, when a 26-year-old Albert Einstein published four groundbreaking papers in *Annalen der Physik*, covering the photoelectric effect (laying groundwork for quantum mechanics), Brownian motion (important for stochastic processes), special relativity, and the mass-energy equivalence equation (E=mc²). Later assessments concluded that each paper exceeded the typical standard for a Nobel Prize in Physics (Einstein later won the prize specifically for the photoelectric effect). Once again, the trajectory of human civilization leapt forward dramatically.

Likewise, 2023 is very likely to be remembered as another “Miracle Year”—thanks largely to ChatGPT.

We consider 2023 a milestone in technological history not only because of GPT’s massive leap in natural language understanding and generation but also because humanity has now identified a predictable pattern behind large language model (LLM) capability growth: scaling up model parameters and training data leads to exponential improvements in performance—and there appears to be no immediate ceiling in sight (as long as sufficient compute power is available).

This capability extends far beyond language processing. LLMs are increasingly being applied across diverse scientific fields. For example, in biotechnology:

- In 2018, Nobel laureate Frances Arnold stated at her award ceremony: “Today we can read, write, and edit any DNA sequence in practical applications, but we cannot yet compose with it.” Just five years later, in 2023, researchers from Stanford University and Salesforce Research in Silicon Valley published a paper in *Nature Biotechnology*. Using a GPT-3 fine-tuned large language model, they generated one million entirely novel proteins from scratch and discovered two structurally distinct proteins with antibacterial properties, potential alternatives to traditional antibiotics. In other words, AI has broken through the bottleneck of protein “creation.”

- Previously, the AI algorithm AlphaFold predicted the structures of nearly all 214 million known proteins on Earth within just 18 months, a volume hundreds of times greater than all prior human structural biology efforts combined.

With various AI-driven models now available, transformative shifts are inevitable—not just in hard sciences like biotech, materials science, and drug discovery, but also in domains such as law and art. 2023 marks the beginning of this transformation.

It’s well known that humanity’s wealth creation capacity has grown exponentially over the past century. The rapid maturation of AI technologies will further accelerate this trend.

Global GDP trend chart, data source: World Bank

1.2 The Convergence of AI and Crypto

To fundamentally understand the necessity of merging AI and crypto, we should begin with their complementary characteristics.

Complementarity Between AI and Crypto

AI possesses three core attributes:

- Stochasticity: AI exhibits randomness. Its content generation mechanism operates as a black box that is difficult to reproduce or inspect, resulting in unpredictable outputs

- Resource-intensive: AI is an energy- and hardware-hungry industry, requiring vast amounts of electricity, chips, and computational power

- Human-like intelligence: AI will (soon) pass the Turing test, making machines indistinguishable from humans*

※ On October 30, 2023, a research group at UC San Diego released the results of a Turing test conducted on GPT-3.5 and GPT-4 (test report). GPT-4 scored 41%, 9 percentage points short of the 50% passing threshold; human participants scored 63%. The score is the percentage of participants who believed they were chatting with a human. A score above 50% means that more than half of participants took the interlocutor for a human rather than a machine, which counts as a pass.

While AI unlocks unprecedented productivity, these three traits pose significant societal challenges:

- How to verify and control AI’s randomness so it becomes an advantage rather than a flaw

- How to meet the enormous demand for energy and computing resources

- How to distinguish between humans and machines

Crypto and blockchain economies, however, possess features that may serve as ideal solutions to these AI-related challenges. Key characteristics of crypto economics include:

- Determinism: Operations run on blockchains, code, and smart contracts with clear rules and boundaries. Inputs yield predictable outputs, so behavior is highly deterministic

- Efficient resource allocation: Crypto creates a vast global free market where pricing, fundraising, and resource flows occur rapidly. Token incentives help accelerate supply-demand matching and drive networks toward critical mass

- Trustlessness: Public ledgers, open-source code, and easy verifiability enable trustless systems. Zero-knowledge (ZK) technologies allow verification without exposing private information

Let’s illustrate this complementarity through three examples.

Example A: Taming Randomness — AI Agents Built on Crypto Economics

An AI agent is an artificial intelligence program designed to perform tasks autonomously based on human intent (notable projects include Fetch.AI). Suppose your AI agent needs to execute a financial transaction, such as “buy $1,000 worth of BTC.” It might face two scenarios:

Scenario One: Interfacing with traditional financial institutions (e.g., BlackRock) to purchase BTC ETFs. This involves numerous compatibility issues between AI agents and centralized entities—KYC, document review, login, identity verification—which remain cumbersome today.

Scenario Two: Operating natively within the crypto economy. The agent could directly sign transactions from your wallet via Uniswap or another aggregator platform to complete the trade and receive WBTC (or another wrapped BTC variant). The process is fast and simple. In fact, trading bots already function as basic AI agents focused solely on executing trades. As AI evolves, these bots will handle increasingly complex intents—for instance: monitor 100 “smart money” addresses on-chain, analyze their strategies and success rates, allocate 10% of my funds to replicate similar trades over one week, stop if performance deteriorates, and summarize possible reasons for failure.

AI performs better within blockchain systems primarily because of the clarity of rules and permissionless access. Within well-defined constraints, the risks posed by AI’s randomness are minimized. For example, AI dominates humans in games like chess and video games—environments with clearly defined rules. Conversely, progress in autonomous driving remains slower because real-world environments are less predictable, and society tolerates far less randomness in safety-critical decisions.
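The idea that clear rules bound an agent's randomness can be sketched in a few lines. This is a minimal illustration under stated assumptions, not any project's actual design: `TradeIntent`, `Guardrails`, and the limits used here are hypothetical names and values chosen for the example. Whatever the model proposes, only trades that pass the deterministic checks would be signed.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TradeIntent:
    """A trade proposed by an AI agent (hypothetical structure)."""
    token: str
    usd_amount: float


@dataclass(frozen=True)
class Guardrails:
    """Deterministic limits set by the wallet owner (hypothetical values)."""
    allowed_tokens: frozenset
    portfolio_usd: float
    max_allocation_pct: int  # e.g. 10 means at most 10% of funds

    def approve(self, intent: TradeIntent, already_spent_usd: float) -> bool:
        # The budget is fixed by rule, independent of what the model outputs.
        budget = self.portfolio_usd * self.max_allocation_pct / 100
        return (
            intent.token in self.allowed_tokens
            and intent.usd_amount > 0
            and already_spent_usd + intent.usd_amount <= budget
        )


rules = Guardrails(frozenset({"WBTC", "ETH"}), portfolio_usd=10_000, max_allocation_pct=10)

print(rules.approve(TradeIntent("WBTC", 1_000), already_spent_usd=0))    # True
print(rules.approve(TradeIntent("WBTC", 1_000), already_spent_usd=500))  # False: over budget
print(rules.approve(TradeIntent("DOGE", 100), already_spent_usd=0))      # False: token not allowed
```

On-chain, the same kind of check could be enforced by a smart contract rather than by the agent's own code, which is what makes the constraint verifiable by third parties.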

Example B: Resource Mobilization — Using Token Incentives to Aggregate Supply

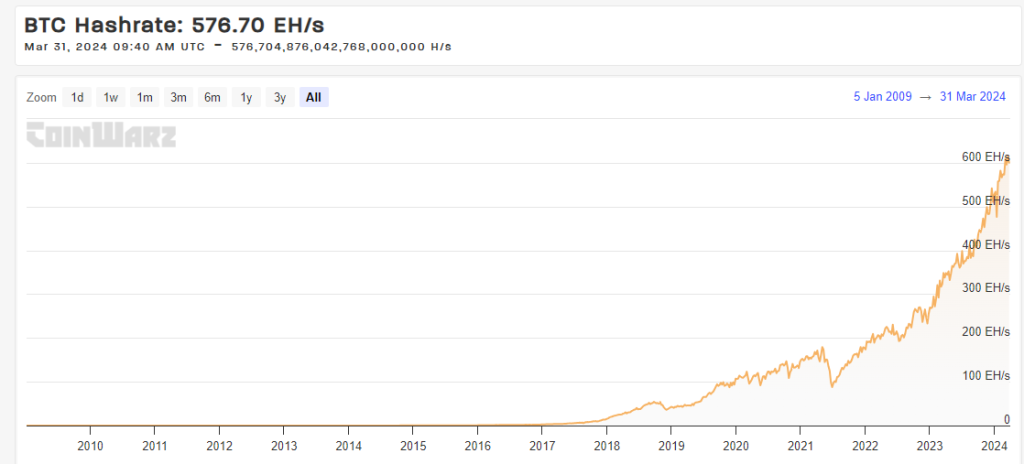

Bitcoin’s global hashpower network currently exceeds the combined computational capacity of any single nation’s supercomputers (current hashrate: 576.70 EH/s). Its growth is fueled by a simple, fair incentive mechanism.

BTC network hashrate trend, source: https://www.coinwarz.com/

Beyond Bitcoin, DePIN projects—including Mobile—are leveraging token incentives to build two-sided markets and achieve network effects. IO.NET, the focus of this article, aims to aggregate AI compute power by designing a token model that unlocks latent GPU supply.

Example C: Open Source + ZK — Distinguishing Humans from Machines While Protecting Privacy

Worldcoin, a Web3 project co-founded by OpenAI CEO Sam Altman, uses a hardware device called Orb to capture iris biometrics. Leveraging zero-knowledge proofs, it generates unique, anonymous hashes to verify human identity while distinguishing humans from machines. In early March, the Web3 art project Drip began using Worldcoin IDs to authenticate real users and distribute rewards.

Recently, Worldcoin open-sourced the software code for its Orb iris-scanning hardware, enhancing transparency and ensuring user biometric data privacy.

Overall, crypto economics—with its deterministic nature rooted in code and cryptography, permissionless access, efficient resource mobilization via tokens, and trustless verification through public ledgers and open-source code—has emerged as a promising framework for addressing the societal challenges posed by AI.

Among these challenges, the most urgent and commercially pressing one is AI’s insatiable hunger for computational resources—especially chips and GPU power.

This explains why decentralized compute projects have outperformed other AI-focused sectors during this bull market cycle.

Commercial Necessity of Decentralized Compute

AI requires massive computational resources, both for training models and performing inference.

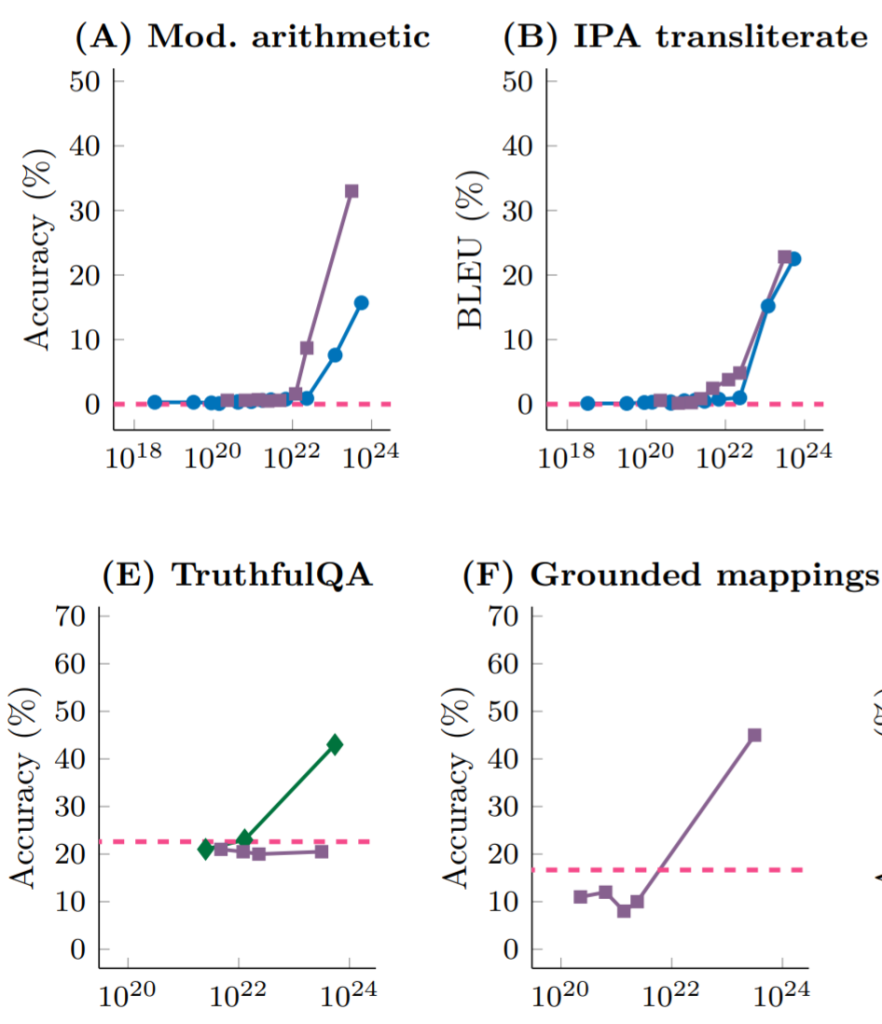

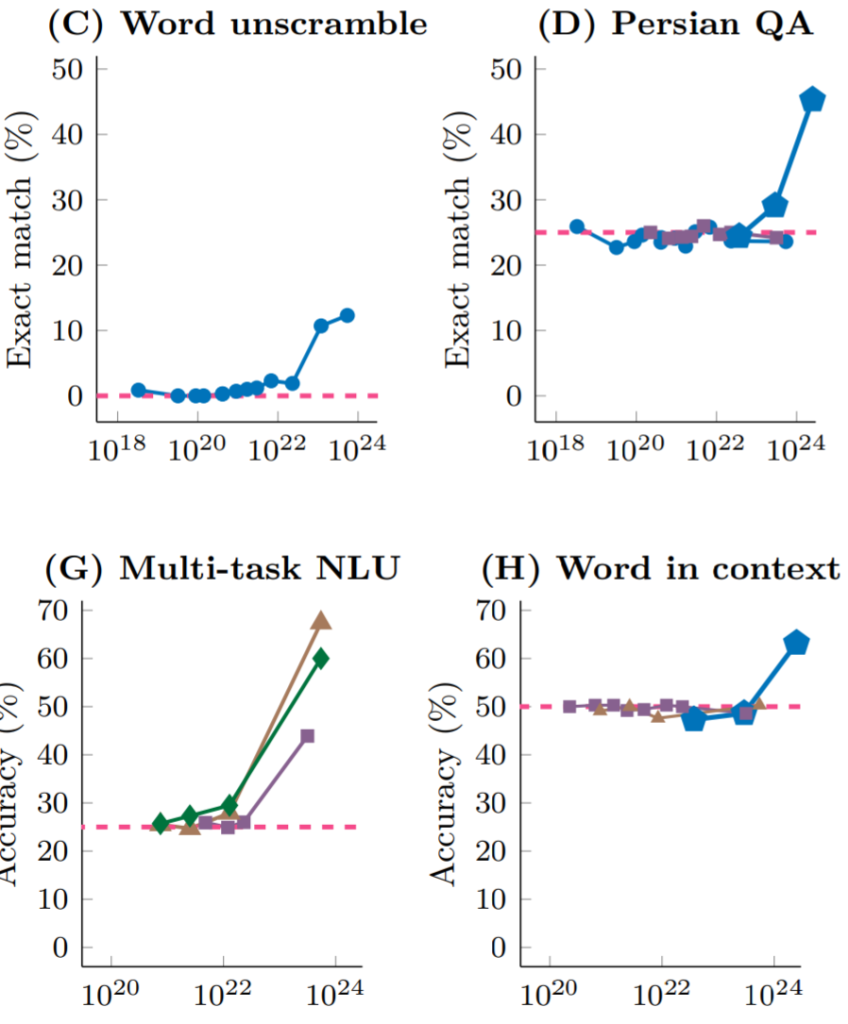

In practice, a key insight about large language model (LLM) training has been confirmed: when data and parameter scales become sufficiently large, LLMs exhibit emergent capabilities unseen in smaller models. Each generational leap in GPT performance corresponds directly to exponential increases in training compute.

Research from DeepMind and Stanford University shows that different LLMs, when tackling various tasks (math computation, Persian Q&A, natural language understanding), perform no better than random guessing until the training scale surpasses ~10^22 FLOPs (total floating-point operations, a measure of the compute spent on training). Beyond this threshold, performance improves sharply regardless of the specific model used.

Source: Emergent Abilities of Large Language Models

Source: Emergent Abilities of Large Language Models
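To give a sense of scale for the ~10^22 FLOPs threshold, the sketch below converts a total compute budget into GPU-hours. It rests on assumptions that are not from this article: the common rule of thumb that training compute is roughly 6 × parameters × tokens, an A100 peak of 312 TFLOPS for dense BF16, and a 40% hardware utilization picked purely for illustration. The 10B-parameter model is hypothetical.

```python
def training_flops(params: float, tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def gpu_hours(total_flops: float,
              peak_flops_per_s: float = 312e12,  # assumed A100 dense BF16 peak
              utilization: float = 0.40) -> float:  # assumed, varies widely in practice
    """GPU-hours needed to deliver `total_flops` at the assumed sustained throughput."""
    seconds = total_flops / (peak_flops_per_s * utilization)
    return seconds / 3600.0


threshold = 1e22
print(f"10^22 FLOPs is about {gpu_hours(threshold):,.0f} A100-hours")

# Hypothetical model: 10B parameters trained on 200B tokens.
example = training_flops(params=10e9, tokens=200e9)
print(f"Example run: {example:.2e} FLOPs, about {gpu_hours(example):,.0f} A100-hours")
```

Under these assumptions the threshold corresponds to tens of thousands of A100-hours, which is why access to large GPU pools matters even for models at the lower edge of emergent behavior.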

It is precisely this “brute force yields miracles” principle—validated through empirical practice—that led OpenAI CEO Sam Altman to propose raising $7 trillion to build an advanced chip foundry ten times larger than TSMC’s current capacity (estimated cost: $1.5 trillion), with the remainder allocated to chip production and model training.

Beyond model training, AI inference itself demands substantial compute (though less than training), making chip and compute scarcity a persistent issue across the AI ecosystem.

Compared to centralized AI compute providers like Amazon Web Services (AWS), Google Cloud Platform (GCP), and Microsoft Azure, decentralized compute platforms offer several value propositions:

- Accessibility: Accessing GPUs via cloud services often takes weeks, and popular GPU models are frequently out of stock. Consumers usually must sign long-term, inflexible contracts with major providers. Decentralized platforms offer flexible hardware choices and faster deployment.

- Lower pricing: By utilizing idle GPUs and supplementing with protocol-level token subsidies, decentralized networks may offer significantly cheaper compute.

- Censorship resistance: Cutting-edge GPU supply is monopolized by big tech firms, and governments, particularly the U.S., are increasing scrutiny over AI compute services. The ability to access compute in a decentralized, elastic, and permissionless manner is becoming an explicit need, forming a core value proposition for Web3-based compute platforms.

If fossil fuels were the lifeblood of the industrial age, then compute power may be the lifeblood of the new digital era ushered in by AI. Compute infrastructure will underpin the AI age. Just as stablecoins became a thriving offshoot of fiat currency in the Web3 world, could decentralized compute markets become a parallel branch of the rapidly growing AI compute market?

Given this is still an early-stage market, much remains uncertain. However, several factors could catalyze adoption of decentralized compute:

- Persistent GPU supply-demand imbalance: Ongoing GPU shortages may push developers to experiment with decentralized compute platforms.

- Regulatory expansion: Obtaining AI compute from major cloud platforms requires KYC and multiple layers of review. This friction may drive adoption of decentralized alternatives, especially in sanctioned or restricted regions.

- Token price momentum: During bull markets, rising token prices enhance the subsidy value for GPU suppliers, attracting more participants, expanding market scale, and lowering end-user costs.

However, decentralized compute platforms face equally apparent challenges:

- Technical and engineering hurdles

  - Verification of work: Deep learning computations involve hierarchical structures where each layer’s output feeds into the next. Verifying correctness typically requires re-executing the entire computation, making efficient validation difficult. Platforms must develop novel algorithms or use probabilistic verification techniques that provide confidence rather than absolute certainty.

  - Parallelization challenges: Decentralized platforms aggregate fragmented GPU supply, meaning individual devices offer limited compute. No single provider can independently train or infer large models quickly, necessitating task decomposition and parallel execution to reduce total completion time. This introduces complexities around task splitting (especially for deep learning), data dependencies, and inter-device communication overhead.

  - Privacy protection: How to ensure clients’ data and models aren’t exposed to compute providers?

- Regulatory compliance challenges

  - Due to the permissionless nature of both supply and demand sides, decentralized compute platforms may attract certain users seeking freedom from oversight, but this same feature may draw regulatory scrutiny as AI governance frameworks mature. Additionally, some GPU suppliers may worry about whether their rented compute is being used by sanctioned entities.
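The probabilistic verification mentioned above can be made concrete with a simple spot-check model. This is a sketch under assumptions that are not from this article: the verifier re-executes randomly chosen tasks, the checks are independent, and a dishonest provider falsifies a fixed fraction of its results. It is not a description of any specific platform's mechanism, and the function names are illustrative.

```python
import math


def detection_probability(cheat_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` random re-executions hits a falsified result."""
    return 1.0 - (1.0 - cheat_fraction) ** samples


def samples_needed(cheat_fraction: float, confidence: float) -> int:
    """Smallest number of spot checks that reaches the target detection probability."""
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - cheat_fraction))


# A provider faking 10% of its results, checked with 10 random re-executions.
print(f"{detection_probability(0.10, 10):.1%}")

# Spot checks required to catch that provider with 99% confidence.
print(samples_needed(0.10, 0.99))  # 44
```

The trade-off is visible in the numbers: a few dozen re-executions give high confidence against heavy cheating, but a provider who falsifies only a tiny fraction of results requires far more checks, which is why such schemes offer confidence rather than certainty.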

Overall, decentralized compute consumers are typically professional developers or small-to-medium organizations—distinct from crypto investors buying tokens or NFTs. These users prioritize service stability and continuity over low prices. Currently, decentralized compute platforms still have a long way to go before earning widespread trust from this user base.

Next, we’ll examine IO.NET—a new decentralized compute project from this cycle—analyze its key details, and estimate its potential post-launch valuation relative to comparable AI and decentralized compute projects.

2. Decentralized AI Compute Platform: IO.NET

2.1 Project Positioning

IO.NET is a decentralized compute network that builds a two-sided marketplace centered around chips. On the supply side are globally distributed GPUs (primarily), along with CPUs and Apple iGPUs (M1/M2). On the demand side are AI engineers needing to train or run inference on machine learning models.

On IO.NET’s official website, it states:

Our Mission

Putting together one million GPUs in a DePIN – decentralized physical infrastructure network.

That is, the project aims to aggregate one million GPUs into its DePIN network.

Compared to existing cloud-based AI compute providers, IO.NET emphasizes three key selling points:

- Flexible configuration: AI engineers can freely select and combine desired chips to form custom “clusters” tailored to their computational needs

- Fast deployment: Eliminates weeks of approval delays (common with AWS and other centralized providers); tasks can be deployed and started within seconds

- Low cost: Service pricing is up to 90% lower than mainstream providers

Additionally, IO.NET plans to launch an AI model marketplace and related services in the future.

2.2 Product Mechanics and Operational Data

Product Mechanics and Deployment Experience

Like Amazon Web Services, Google Cloud, and Alibaba Cloud, IO.NET offers a compute service called IO Cloud. IO Cloud is a distributed, decentralized chip network capable of executing Python-based machine learning code and running AI/ML programs.

The fundamental unit of IO Cloud’s service is the cluster—a self-coordinating group of GPUs that completes computational tasks. AI engineers can customize clusters according to their requirements.

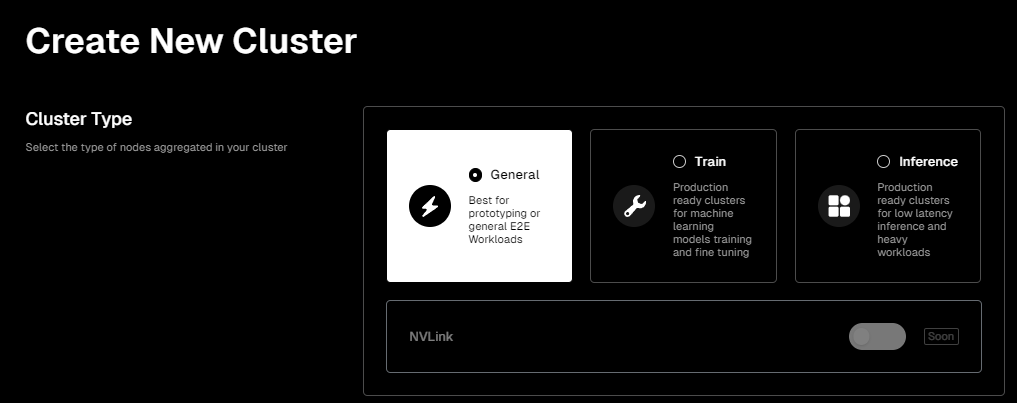

IO.NET’s interface is highly user-friendly. To deploy your own chip cluster for AI computation, simply navigate to the Clusters page and configure your desired setup.

Page info: https://cloud.io.net/cloud/clusters/create-cluster, same below

First, you choose your use case. Three options are currently available:

- General: Provides a general-purpose environment suitable for early-stage projects with unclear resource needs.

- Train: Designed specifically for training and fine-tuning ML models. Offers enhanced GPU resources, higher memory capacity, and/or faster networking for intensive workloads.

- Inference: Optimized for low-latency inference and heavy workloads. In ML contexts, inference refers to using trained models to predict or analyze new data and return feedback. This option prioritizes latency and throughput optimization for real-time or near-real-time processing.

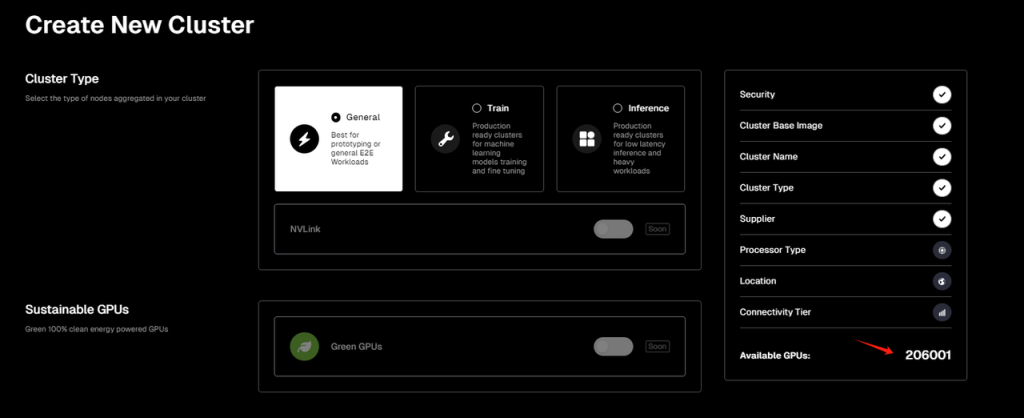

Next, you select the cluster’s supplier. IO.NET has partnered with Render Network and Filecoin’s miner network, allowing users to choose GPU supply from IO.NET or either of the two partner networks—effectively positioning IO.NET as an aggregator (though Filecoin services are temporarily offline as of writing). Notably, IO.NET currently reports over 200,000 online GPUs, while Render Network lists 3,700+ available GPUs.

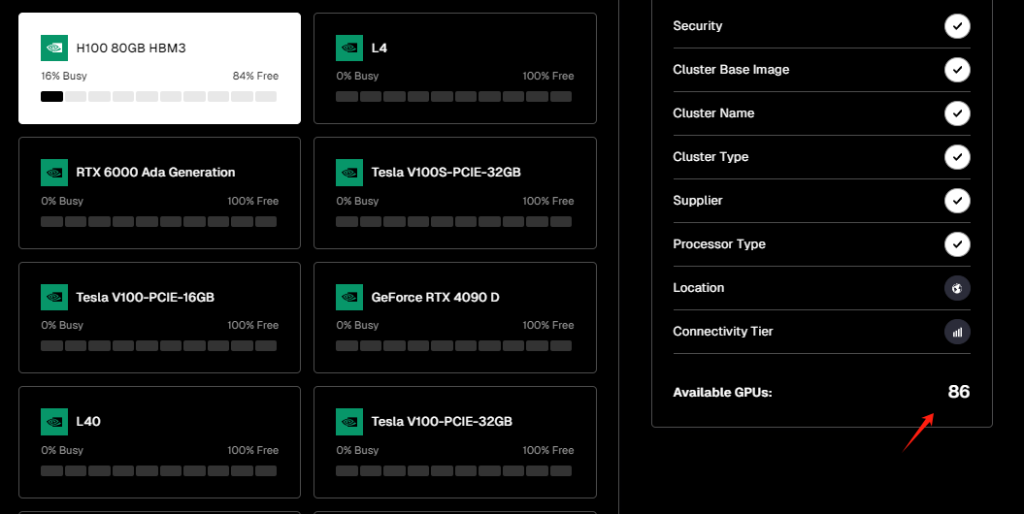

Following this, you enter the hardware selection phase. Currently, only GPUs are listed (excluding CPUs and Apple iGPUs like M1/M2), with NVIDIA products dominating the offerings.

Based on test data from the day of writing, IO.NET’s network had 206,001 GPUs online. The most abundant model was the GeForce RTX 4090 (45,250 units), followed by the GeForce RTX 3090 Ti (30,779 units).

Moreover, the A100-SXM4-80GB chip—highly efficient for AI workloads like ML, deep learning, and scientific computing (market price >$15,000)—had 7,965 units online.

The H100 80GB HBM3 GPU—designed from the ground up for AI (market price >$40,000)—offers 3.3x training and 4.5x inference performance compared to the A100. Only 86 units were actively online.

After selecting hardware, users specify additional parameters: region, connection speed, number of GPUs, and rental duration.

Finally, IO.NET generates a quote based on the full configuration. For example, my test configuration included:

- General-purpose use case

- 16 x A100-SXM4-80GB GPUs

- Ultra-high-speed connectivity

- U.S.-based location

- One-week rental period

Total cost: $3,311.60 ($1.232 per GPU-hour)

By comparison, the per-GPU hourly rates for A100-SXM4-80GB on AWS, GCP, and Azure are $5.12, $5.07, and $3.67 respectively (source: https://cloud-gpus.com/; actual prices vary by contract terms).

Thus, for this configuration, IO.NET’s quoted price is roughly 66% to 76% below the listed rates of the major cloud providers, on top of offering greater flexibility and ease of use.
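The per-GPU-hour figure and the gap to the cloud providers can be reproduced from the numbers quoted above. The sketch below is only a worked calculation: `ClusterQuote` is a hypothetical helper for this example, not part of any IO.NET API, and the cloud rates are the list prices cited in the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ClusterQuote:
    """A quoted cluster rental (hypothetical helper for this calculation)."""
    gpu_model: str
    gpu_count: int
    rental_hours: int
    total_usd: float

    @property
    def usd_per_gpu_hour(self) -> float:
        return self.total_usd / (self.gpu_count * self.rental_hours)


# Test configuration from the text: 16 GPUs for one week.
quote = ClusterQuote("A100-SXM4-80GB", gpu_count=16, rental_hours=7 * 24, total_usd=3311.60)
print(f"IO.NET: ${quote.usd_per_gpu_hour:.3f} per GPU-hour")

# Per-GPU hourly list prices cited in the text.
cloud_rates = {"AWS": 5.12, "GCP": 5.07, "Azure": 3.67}
for provider, rate in cloud_rates.items():
    saving = 1.0 - quote.usd_per_gpu_hour / rate
    print(f"{provider}: ${rate:.2f} per GPU-hour, quote is {saving:.0%} lower")
```

For this particular configuration the discount falls in the 66% to 76% range, below the “up to 90%” figure, which is stated as an upper bound.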

Operational Overview

Supply-Side Status

As of April 4, official data shows IO.NET’s total supply includes 371,027 GPUs and 42,321 CPUs. Additionally, its partner Render Network contributes 9,997 GPUs and 776 CPUs to the network.

Data source: https://cloud.io.net/explorer/home, same below

At the time of writing, 214,387 GPUs from IO.NET were online—an uptime rate of 57.8%. Render Network’s GPUs showed a 45.1% uptime rate.

What do these supply figures mean?

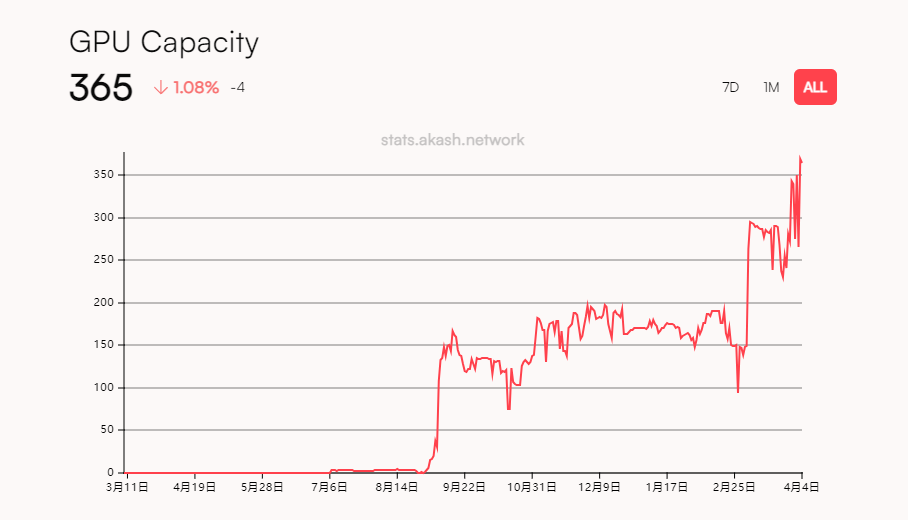

For context, let’s compare IO.NET with Akash Network—a longer-established decentralized compute project.

Akash Network launched its mainnet in 2020, initially focusing on decentralized CPU and storage services. It introduced GPU testnet services in June 2023 and launched its GPU mainnet in September 2023.

Data source: https://stats.akash.network/provider-graph/graphics-gpu

According to Akash’s official data, despite steady growth since launch, the total number of GPUs connected to its network remains at just 365.

In terms of GPU supply volume, IO.NET exceeds Akash Network by roughly three orders of magnitude (371,027 GPUs versus 365), establishing itself as the largest decentralized GPU compute network to date.
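The supply figures cited in this section can be checked with a few lines of arithmetic. The sketch uses only numbers quoted in the text; the variable names are illustrative.

```python
import math

io_total_gpus = 371_027   # IO.NET total GPU supply (April 4)
io_online_gpus = 214_387  # IO.NET GPUs online at the time of writing
akash_gpus = 365          # GPUs connected to Akash Network

uptime = io_online_gpus / io_total_gpus
ratio = io_total_gpus / akash_gpus

print(f"IO.NET GPU uptime rate: {uptime:.1%}")
print(f"IO.NET vs. Akash GPU supply: {ratio:,.0f}x (10^{math.log10(ratio):.1f})")
```

Note that the comparison uses IO.NET's total listed supply; counting only online GPUs still leaves a gap of several hundred times.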

Demand-Side Status

From the demand side, however, IO.NET remains in the early market development phase. Actual usage for compute tasks is currently limited. Most online GPUs show 0% utilization, with only four GPU types—A100 PCIe 80GB K8S, RTX A6000 K8S, RTX A4000 K8S, and H100 80GB HBM3—handling active tasks. Except for the A100 PCIe 80GB K8S, the others operate below 20% load.

The network’s reported utilization pressure was 0%, indicating most supplied GPUs remain idle.

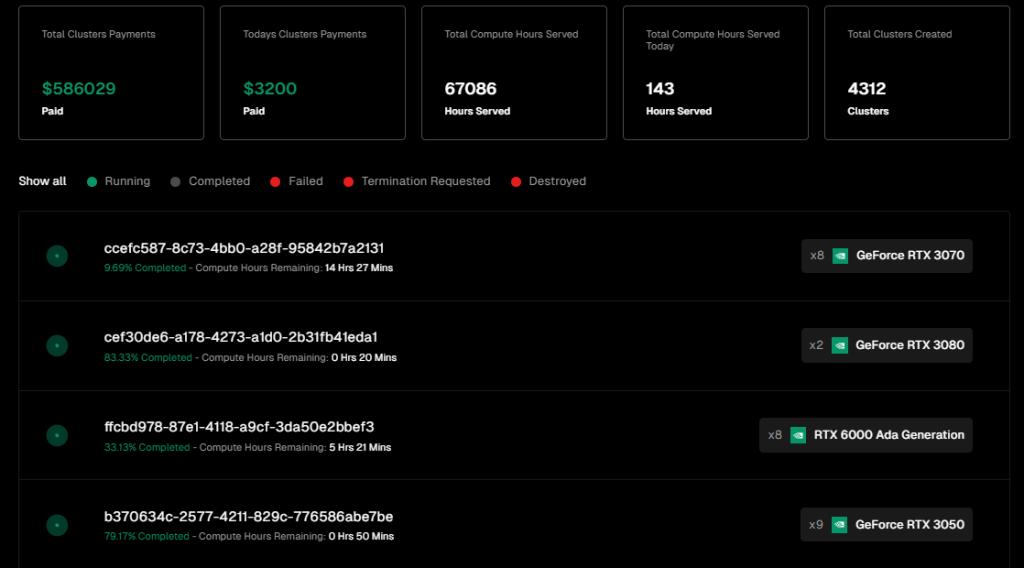

In terms of revenue, IO.NET has generated $586,029 in service fees, with $3,200 earned in the last 24 hours.

Data source: https://cloud.io.net/explorer/clusters

These revenue figures—both total and daily—are on par with Akash Network, though Akash derives most of its income from CPU services, with over 20,000 CPUs supplied.

Data source: https://stats.akash.network/

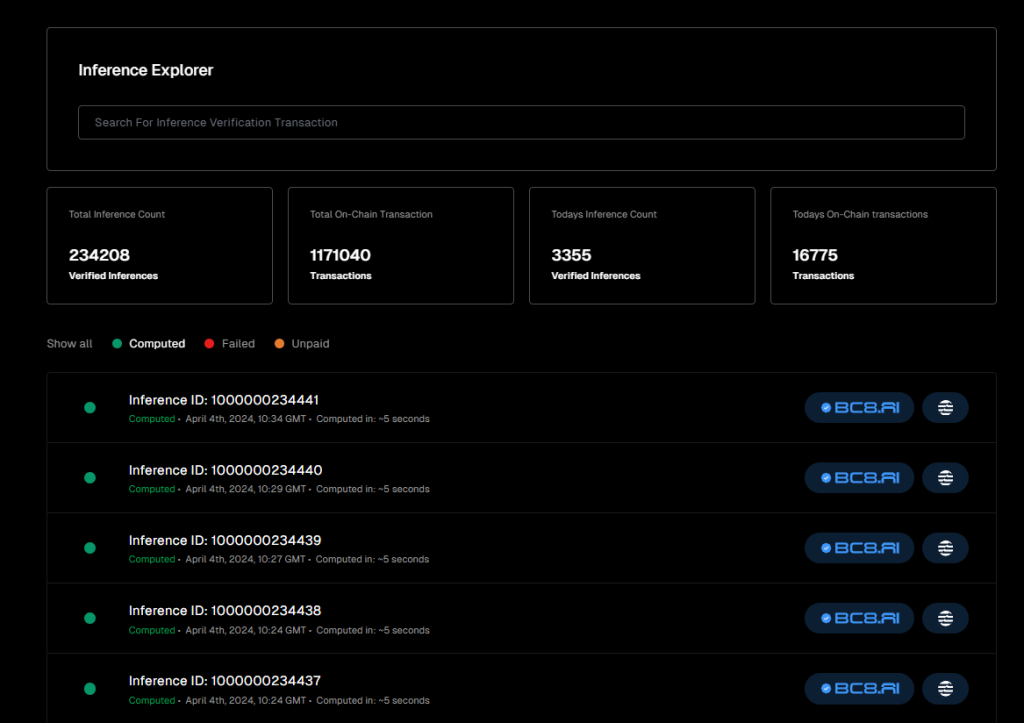

IO.NET also disclosed data on AI inference tasks processed: over 230,000 verified inference tasks to date. However, the majority stem from BC8.AI, a project sponsored by IO.NET.

Data source: https://cloud.io.net/explorer/inferences

Current operational data shows IO.NET has successfully expanded its supply side, rapidly aggregating vast AI compute resources driven by airdrop expectations and its “Ignition” community campaign. Demand-side growth, however, remains nascent, with insufficient organic demand. Whether this stems from limited outreach or a suboptimal user experience remains to be evaluated.

That said, given the persistent gap in AI compute availability, many AI engineers and startups are actively seeking alternatives and may be drawn to decentralized providers. With IO.NET yet to launch targeted economic incentives or marketing campaigns on the demand side—and with ongoing improvements in product experience—gradual alignment between supply and demand remains a plausible outcome.

2.3 Team Background and Funding

Team Overview

IO.NET’s core team originally worked in quantitative trading. Until June 2022, they focused on developing institutional-grade quant trading systems for equities and crypto assets. Driven by backend compute demands, they began exploring decentralized computing and ultimately focused on reducing GPU compute costs.

Founder & CEO: Ahmad Shadid

Ahmad Shadid previously worked in quantitative finance and financial engineering and served as a volunteer for the Ethereum Foundation.

CMO & Chief Strategy Officer: Garrison Yang

Garrison Yang officially joined IO.NET in March this year. Previously, he was VP of Strategy and Growth at Avalanche and holds a degree from UC Santa Barbara.

COO: Tory Green

Tory Green is COO of io.net. Green was formerly COO at Hum Capital and Director of Corporate Development and Strategy at Fox Mobile Group, and is a Stanford graduate.

According to IO.NET’s LinkedIn profile, the team is headquartered in New York City with an office in San Francisco and currently employs over 50 people.

Funding History

To date, IO.NET has publicly disclosed only one funding round: a Series A raise completed in March, valuing the company at $1 billion and raising $30 million. The round was led by Hack VC, with participation from Multicoin Capital, Delphi Digital, Foresight Ventures, Animoca Brands, Continue Capital, Solana Ventures, Aptos, LongHash Ventures, OKX Ventures, Amber Group, SevenX Ventures, and ArkStream Capital.
