Google Unveils Multimodal "Killer Weapon"—Can Gemini Really Outperform GPT-4?

Gemini will pose strong competition to GPT-4 in understanding complex data and performing advanced tasks.

By Mu Mu

"Largest," "most capable," "best," "most efficient"—Google has attached several superlatives to its newly launched multimodal large model Gemini, unveiled on December 7, clearly signaling its competitive ambition against OpenAI's GPT-4.

Divided into three sizes—Ultra, Pro, and Nano—Gemini claims top scores across various "AI exams." The demonstration video portrays Gemini as a "super tool" proficient in listening, speaking, reading, and writing.

According to official statements, Gemini Ultra is the most powerful version, combining multimodal capabilities with expertise and accuracy. It supports input and output in text, images, and voice, can grade math homework, guide athletes' movements and exertion, and perform complex tasks such as chart generation and coding. In the MMLU (Massive Multitask Language Understanding) test, it even reportedly "surpasses human experts."

Currently, regular consumers can only access Gemini Pro, which Google positions as "the best model for scaling across various tasks" and which has already been integrated into Bard, Google’s previously released chatbot. Gemini Nano, described as "the most efficient model for on-device tasks," will be embedded in Google's Pixel 8 Pro smartphones. Meanwhile, Gemini Ultra, the "largest and most capable model for highly complex tasks," is slated for release to developers and enterprise users early next year.

So, is Gemini truly stronger than GPT-4?

Some users have pointed out that the "test scores" Google provided for Gemini Ultra were based on its own proprietary evaluation methods. Bloomberg noted that the demonstration video was not real-time, and netizens observed clear editing traces.

TechFlow conducted hands-on testing of the mathematical abilities of Bard, which now incorporates a fine-tuned version of Gemini Pro. The results showed that Bard still makes comprehension errors on complex math problems, particularly when the problem is supplied as an image.

Google showcases Gemini’s “listen, speak, read, write” capabilities

Gemini is a large multimodal AI model built from scratch by Google. Although it arrived later than GPT-4, Google markets it as its "most capable" model and emphasizes its multimodal strengths.

It can simultaneously process and analyze multiple data types—including text, images, audio, video, and code. Users can input information in various formats, and Gemini not only understands them but also analyzes and performs tasks according to user requests.

Currently at version 1.0, Gemini comes in three sizes: Ultra, Pro, and Nano. Ultra targets highly complex tasks; Pro is meant to scale across a wide range of tasks; Nano is optimized for on-device, mobile use. Each version serves specific use cases and, according to Google, performs strongly across multiple benchmark tests.

The promotional video released by Google highlights Gemini’s exceptional multimodal capabilities—viewers are likely to be amazed after watching it.

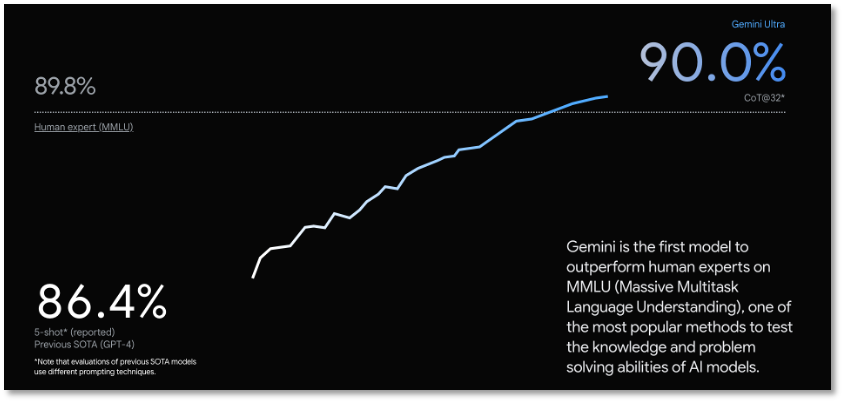

Behind the "super model" Gemini Ultra lies testing data published by Google. According to that data, across 32 academic benchmarks widely used to evaluate large language models (LLMs), Ultra exceeds the current state-of-the-art results on 30.

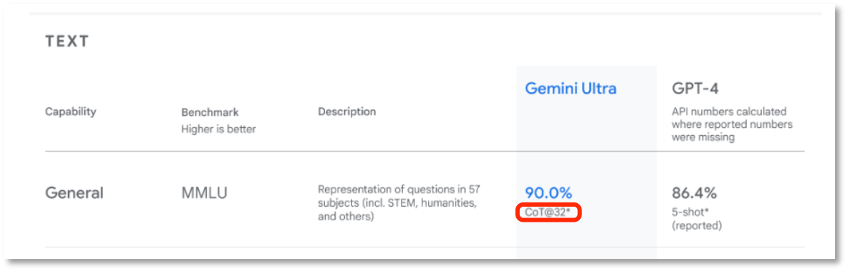

Gemini Ultra claims a 90.0% score, which Google says makes it the first model to surpass human experts on the MMLU (Massive Multitask Language Understanding) benchmark. This test evaluates world knowledge and problem-solving ability using questions from 57 subjects including mathematics, physics, history, law, medicine, and ethics. Google also reports that Gemini exceeds the current state of the art across a range of benchmarks involving text and code.

MMLU is a major assessment of large models’ language understanding, consisting of multiple-choice tasks across 57 human knowledge domains—from elementary math to U.S. history, computer science, and law—with difficulty levels ranging from high school to expert proficiency. It is one of the mainstream semantic understanding evaluations for large models today.
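To make the scoring concrete, here is a minimal sketch of how accuracy on a multiple-choice benchmark of this kind can be computed. It is not the official MMLU harness: the function name `mmlu_style_scores` and the record layout `(subject, predicted_choice, correct_choice)` are assumptions chosen for illustration.

```python
from collections import defaultdict


def mmlu_style_scores(records):
    """Score multiple-choice answers grouped by subject.

    `records` is an iterable of (subject, predicted_choice, correct_choice)
    tuples. This layout is a hypothetical one, not the benchmark's own format.
    Returns (per-subject accuracy dict, overall accuracy).
    """
    per_subject = defaultdict(lambda: [0, 0])  # subject -> [correct, total]
    for subject, predicted, correct in records:
        per_subject[subject][1] += 1
        if predicted == correct:
            per_subject[subject][0] += 1

    subject_acc = {s: c / t for s, (c, t) in per_subject.items()}
    total_correct = sum(c for c, _ in per_subject.values())
    total = sum(t for _, t in per_subject.values())
    overall = total_correct / total if total else 0.0
    return subject_acc, overall
```

A headline figure such as "90.0%" corresponds to an overall accuracy of this kind; how the model is prompted before its answer is recorded is a separate choice, and that choice is what the later dispute over the score is about.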

Based on Google’s reported results, Gemini appears poised to strongly challenge GPT-4 in understanding complex data and executing advanced tasks.

Google claims Gemini surpassed human experts in the MMLU evaluation

Since it was trained from the start on multimodal data, Gemini Ultra theoretically understands all forms of information—text, images, speech, video, and code—opening up broader possibilities for AI applications.

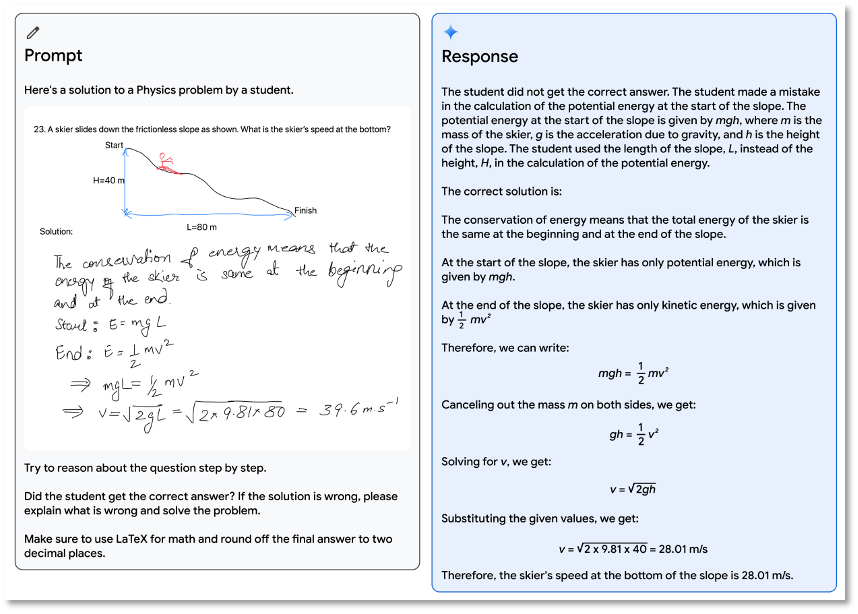

For example, in education, Gemini Ultra’s multimodal reasoning skills let it interpret messy handwritten notes, identify incorrect steps in students’ problem-solving, and provide correct solutions with explanations. This does not mean replacing teachers, but it does give educators a powerful AI assistant.

Gemini can grade student assignments

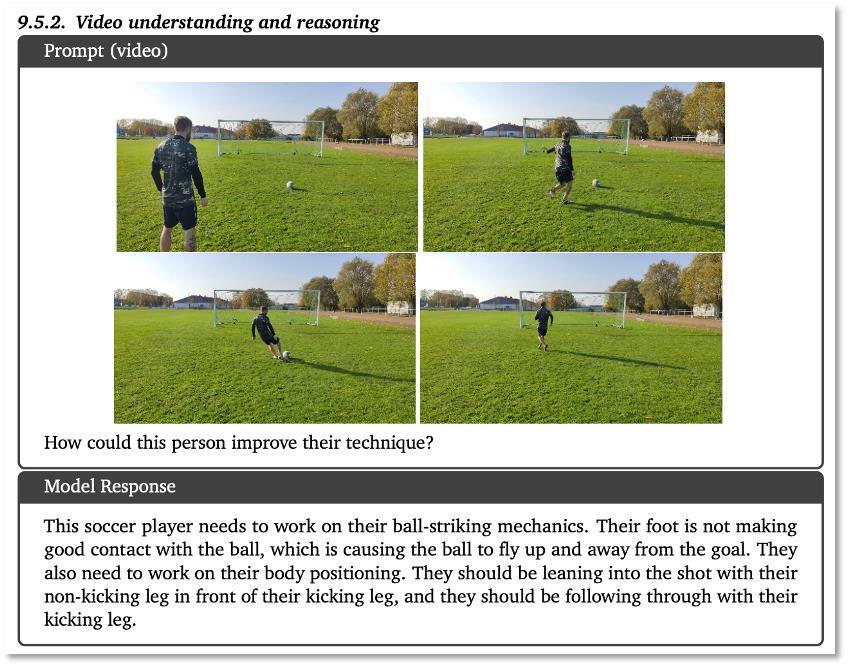

In video understanding and reasoning, Gemini Ultra even exhibits coaching-level insight, analyzing athletes’ movements and muscle engagement and offering specific improvement suggestions.

Gemini can understand video content and provide guidance to athletes

Gemini Ultra handles complex image understanding, code generation, and instruction following with ease. When given an image and the prompt: "Use the function depicted in the upper-left subplot, multiply it by 1000, add it to the function shown in the lower-left subplot, and generate matplotlib code for a single result plot," Gemini Ultra perfectly executes inverse graphics tasks—inferring the plotting code, performing additional mathematical transformations, and generating relevant code.
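For a sense of what the requested output might look like, here is a rough sketch of matplotlib code for such a prompt. The article does not say which functions appear in the image, so `upper_left` (a sine curve) and `lower_left` (a parabola) are hypothetical stand-ins, as are the x-range and the output file name. This is not Gemini's actual output.

```python
import math


def upper_left(x):
    # Hypothetical stand-in for the function in the upper-left subplot.
    return math.sin(x)


def lower_left(x):
    # Hypothetical stand-in for the function in the lower-left subplot.
    return x ** 2


def combined(x):
    # The transformation from the prompt: 1000 * upper-left + lower-left.
    return 1000 * upper_left(x) + lower_left(x)


def plot_combined(path="combined.png", n=200):
    # matplotlib is imported lazily so the math above works without it.
    import matplotlib
    matplotlib.use("Agg")  # render to a file, no display needed
    import matplotlib.pyplot as plt

    xs = [-10 + 20 * i / (n - 1) for i in range(n)]  # assumed range [-10, 10]
    ys = [combined(x) for x in xs]

    fig, ax = plt.subplots()
    ax.plot(xs, ys)
    ax.set_xlabel("x")
    ax.set_ylabel("1000 * f(x) + g(x)")
    ax.set_title("Combined function (single result plot)")
    fig.savefig(path)
    plt.close(fig)
    return path
```

Calling `plot_combined()` writes a single plot to `combined.png`. The hard part of the task in the demo is the step this sketch skips: recovering the two functions from the picture in the first place.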

Judging from these examples presented by Google, Gemini Ultra seems like the strongest large model on Earth. What viewers really want to know is: when can we actually use this "Super Saiyan" of large models?

According to Google, starting December 6, Bard began rolling out a fine-tuned version of Gemini Pro to enhance advanced reasoning, planning, and understanding—marking the biggest upgrade since Bard’s launch.

Note that the Gemini Pro-integrated Bard currently supports only English and is available in over 170 countries and regions. Support for additional modalities, languages, and regions is planned for the near future. That means Chinese users cannot yet fully experience Gemini Pro.

Gemini Nano will debut first on Google’s Pixel 8 Pro smartphones, initially supporting features like "Recorder Summaries" and "Gboard Smart Reply." Support for more messaging apps will follow next year.

Over the coming months, Gemini capabilities will roll out across more Google products and services, including Search, Ads, Chrome, and Duet AI. That means Google Search will soon incorporate Gemini-powered features.

As for the "strongest" Gemini Ultra, ordinary users will need to wait. Google says it is undergoing trust and safety checks and requires further refinement through fine-tuning and reinforcement learning from human feedback (RLHF) before public release.

During this phase, Gemini Ultra will be selectively provided to customers, developers, partners, and safety and responsibility experts for early experimentation and feedback, before access opens to developers and enterprise clients early next year.

Doubts over Ultra’s MMLU “exam paper” being Google’s own version

Google showcased the most powerful Gemini Ultra, yet delayed its rollout—prompting immediate skepticism: Is it really stronger than GPT-4?

Bloomberg pushed back, stating that Google's model still lags behind OpenAI's, and that its demonstrated capabilities are based solely on pre-recorded videos—not live interactions. These videos may rely on "carefully tuned prompts and static images." Bloomberg also noted that Gemini's responses often require auxiliary information and strong hinting during actual interactions.

Viewers of the demo video also spotted obvious editing artifacts, suggesting the "impressive capabilities might be exaggerated."

Moreover, internet users discovered that the MMLU evaluation used for Gemini Ultra’s test scores employed Google’s own customized "exam paper." In the 57-subject multiple-choice test where Ultra scored 90, a footnote reads "CoT@32*," which denotes chain-of-thought prompting with 32 sampled answers per question, an evaluation setup Google chose itself. Under the 5-shot setting used for GPT-4's reported score, Ultra’s score drops to 83.7, lower than GPT-4’s 86.4.
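To illustrate why the evaluation setup matters, the sketch below shows one plausible reading of a "CoT@32"-style protocol. The model is sampled many times with chain-of-thought prompting, the most common final answer wins if enough samples agree, and a single greedy answer is used otherwise. This is not Google's evaluation code. The function name `cot_at_k`, the `threshold` value of 0.6, and the fallback rule are assumptions made for illustration.

```python
from collections import Counter


def cot_at_k(sampled_answers, greedy_answer, threshold=0.6):
    """Pick a final answer from k sampled chain-of-thought answers.

    If the most common sampled answer accounts for at least `threshold`
    of the samples, return it (majority vote). Otherwise fall back to
    `greedy_answer`. The threshold here is a hypothetical value.
    """
    if not sampled_answers:
        return greedy_answer
    answer, votes = Counter(sampled_answers).most_common(1)[0]
    if votes / len(sampled_answers) >= threshold:
        return answer
    return greedy_answer
```

With 32 samples of which 20 say "B", the vote share is 0.625 and the function returns "B". If no answer reaches the threshold, the greedy answer is returned instead. Because voting over many samples can correct errors that a single pass would make, scores produced this way are not directly comparable to scores from a single few-shot pass.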

Gemini Ultra scores 90 under Google’s adjusted testing framework

Academic details aside, the good news is that Google has already integrated Gemini into Bard. Although it uses the lower-tier Pro instead of Ultra, it still claims strong multitasking abilities—making it the most accessible way for the public to test Gemini firsthand.

TechFlow selected math problems for testing, since ChatGPT has historically struggled with math, and mathematical reasoning—with its singular correct answers—is considered by OpenAI as foundational to achieving AGI. So how does Bard, powered by Gemini, fare in math?

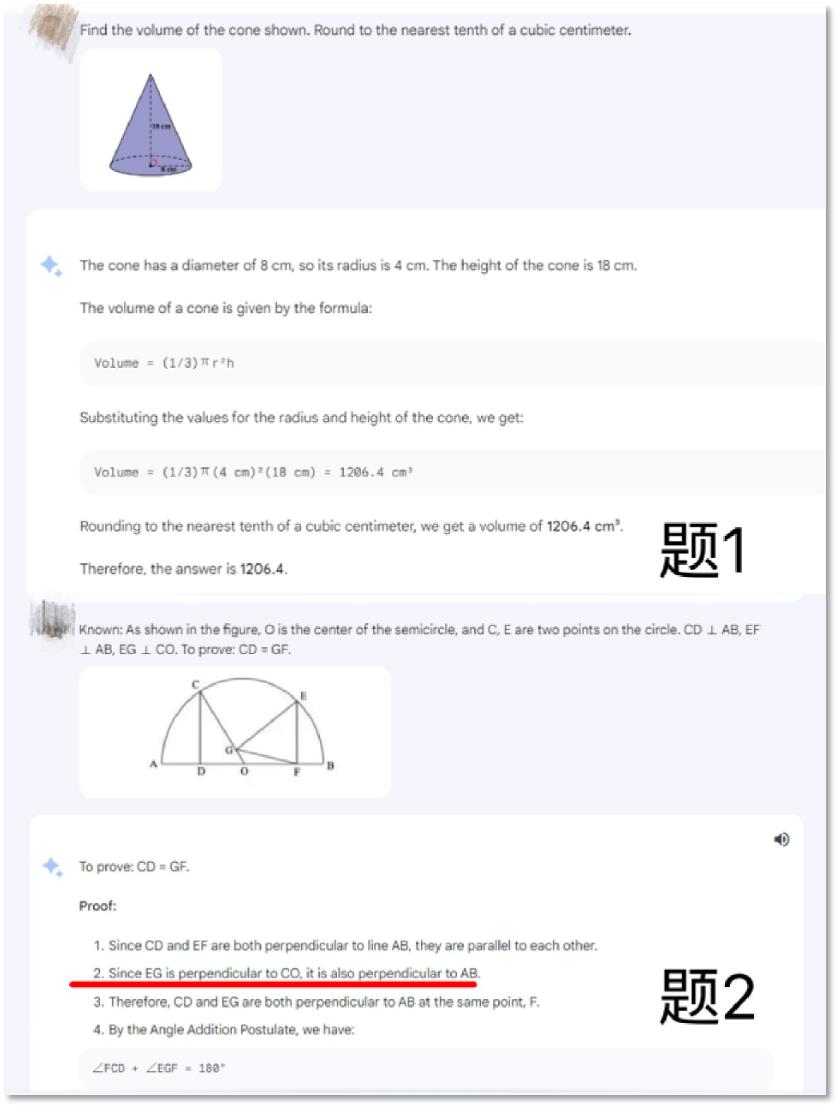

We asked the questions in English: Question 1 involved calculating the volume of a cone; Question 2 was a more challenging geometry proof.

The results show that Gemini Pro accurately recognizes images and the text within them, and correctly solves simple math problems. However, it still makes clear mistakes on complex ones. In Question 2, Bard wrongly concludes in Step 2 that lines EG and AB are perpendicular.

Bard with Gemini Pro isn't perfect at solving math problems

Could this underperformance be due to Bard using Gemini Pro rather than Ultra? We’ll just have to wait and test again once Ultra is integrated.

As for Gemini Nano, which will be introduced into the Pixel 8 Pro smartphone, it will power two features: "Recorder Summaries" and "Gboard Smart Reply."

According to Google, even without internet connectivity, the Recorder app can summarize phone conversations, interviews, or presentations. The Smart Reply feature works similarly to automated post-call replies—Gemini Nano identifies incoming message content and generates appropriate responses. However, both features currently support only English text recognition.

According to DeepMind’s proposed AGI evaluation framework, at the AGI-1 stage, artificial intelligence should be able to learn and reason across domains and modalities, demonstrating intelligence in multiple areas and tasks such as question answering, summarization, translation, and dialogue, enabling basic communication and collaboration with humans and other AIs, and perceiving and expressing simple emotions and values.

Considering both Google’s official announcements and real-world testing experiences, the version worth anticipating, and the one that could potentially surpass GPT-4, remains the unreleased Ultra. If its multimodal capabilities truly perform as demonstrated, Google may indeed be closing in on its definition of AGI.
