If OpenAI Were a DAO, Could It Have Avoided This Farce?


Rules and constraints can produce many kinds of consensus, but truly great consensus is rarely forged by rules.

Author: Wang Chao

Ever since the weekend drama began unfolding, people have been suggesting that OpenAI should become a DAO, and the idea has gained supporters as events progressed. If OpenAI had truly adopted a DAO governance model, could this crisis have been avoided? I believe so. That is not necessarily because DAO governance is clearly superior, but because OpenAI's current governance has serious flaws. Had it learned even a little from the DAO ecosystem, this situation likely would not have occurred.

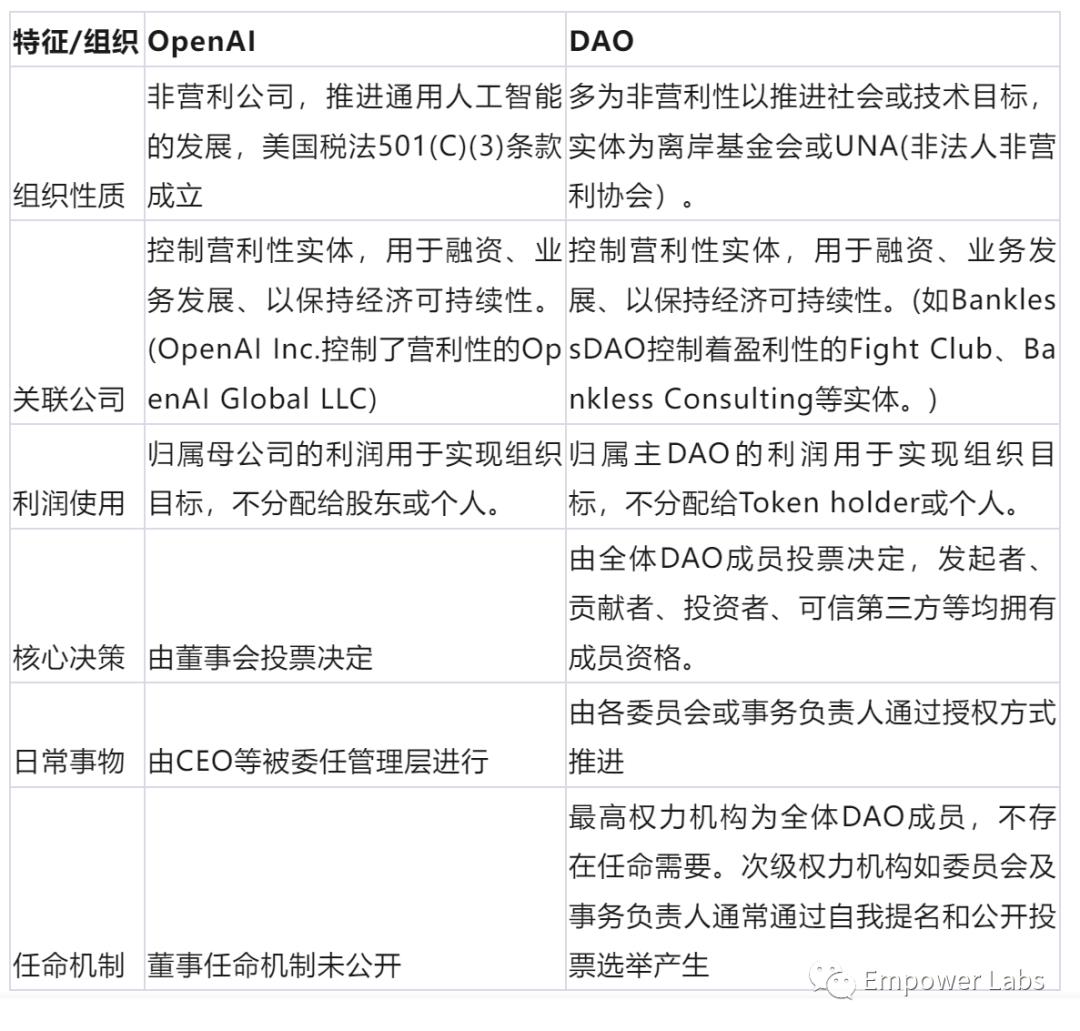

OpenAI is a nonprofit organization dedicated to creating safe artificial general intelligence (AGI) and ensuring its benefits are equitably distributed across humanity. In this sense, OpenAI is an organization that produces public goods. Many DAOs also aim to create public goods, so OpenAI and DAOs are similar in many respects.

(DAOs, as a new organizational form, come in many shapes. The comparison here refers only to typical non-profit-oriented DAOs and does not represent all DAO models.)

The recent internal turmoil at OpenAI did not stem from its structure per se, but from unclear and unreasonable governance rules that left room for manipulation. For example, the board originally had nine members, but after several departures only six remain. As the highest authority, the board failed to fill those vacancies promptly. If membership kept shrinking to just three, two directors (a simple majority of three) would be enough to decide OpenAI's fate. Major decisions have also been made arbitrarily: replacing CEO Sam Altman, a critical move, was evidently not discussed or reviewed by the full board. Instead, a subset of directors decided it behind closed doors, without adequately considering broader stakeholder input or allowing proper opportunity for communication and negotiation.
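
The board arithmetic above can be made concrete with a minimal sketch. It assumes decisions pass by a simple majority of sitting directors, which the article implies but which is not quoted from OpenAI's bylaws. The second function, `majority_of_charter_seats`, is a hypothetical alternative rule introduced only for contrast; it is not OpenAI's rule or that of any particular DAO.

```python
from typing import Optional


def simple_majority(sitting_members: int) -> int:
    """Smallest vote count that exceeds half of the sitting members."""
    return sitting_members // 2 + 1


def majority_of_charter_seats(charter_seats: int, sitting_members: int) -> Optional[int]:
    """Hypothetical rule: the threshold is fixed against the original seat count.

    Returns the votes needed, or None when too few directors remain to reach
    the threshold (vacancies would have to be filled before any vote).
    """
    needed = charter_seats // 2 + 1
    return needed if needed <= sitting_members else None


if __name__ == "__main__":
    # Board sizes taken from the article: nine originally, six now, three hypothetically.
    for sitting in (9, 6, 3):
        print(
            f"{sitting} sitting directors: "
            f"simple majority = {simple_majority(sitting)}, "
            f"against 9 charter seats = {majority_of_charter_seats(9, sitting)}"
        )
    # 9 sitting directors: simple majority = 5, against 9 charter seats = 5
    # 6 sitting directors: simple majority = 4, against 9 charter seats = 5
    # 3 sitting directors: simple majority = 2, against 9 charter seats = None
```

Under the simple-majority assumption, every unfilled vacancy lowers the number of people needed to control the organization. A threshold anchored to the original seat count would instead force vacancies to be filled before a small group could act.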

Even for-profit public companies appoint independent directors to make governance more transparent and to better represent minority shareholders and the public interest. OpenAI affects fundamental technological development, societal safety, and even the future of humanity. It does have external directors, but they have clearly failed to fulfill their intended role. OpenAI's board needs not only more balancing forces, such as employee representatives, but also a more effective governance framework. Drawing on DAO governance models to design a stronger, more transparent, and more inclusive structure for OpenAI seems to me a worthwhile path forward.

It is worth remembering that DAOs were initially conceived by techno-libertarians who hoped to rely entirely on code to create self-sustaining systems that operate with minimal human interference. That is what "Autonomous" means. Once political coordination among humans enters the picture, a DAO ceases to be a true DAO and becomes merely a DO, having lost its autonomy. At this stage, however, such idealized DAOs are simply unattainable. As a compromise, we now broadly treat any organization that uses blockchain networks for collective governance as a DAO. In other words, we have accepted the reality of human-led governance, with code serving only as a supplementary constraint. The defining feature of DAOs has thus shifted from Autonomous to Community Driven, with an emphasis on broader participation and the representation of diverse interests.

Interestingly, AGI also aims at Autonomy. OpenAI explicitly defines AGI as a highly autonomous system capable of outperforming humans in most economically valuable work.

"by AGI we mean a highly autonomous system that outperforms humans at most economically valuable work." (OpenAI)

Although autonomy in AGI refers primarily to behavioral capability, at the level of underlying principle AGI and DAOs share the same aspiration: to build genuinely autonomous systems that can operate independently of external control. In that respect there is no essential difference between them. So how should we govern such autonomous systems? Should we rely more on human values instilled internally through alignment and training, or impose more external constraints? From LLMs to AGI, this remains an urgent question.

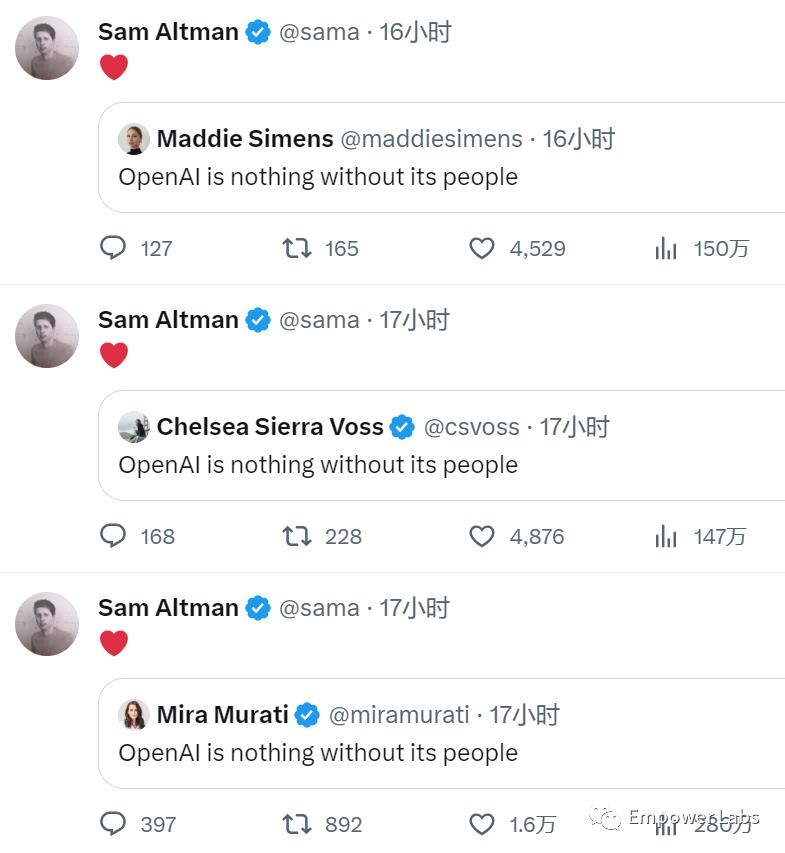

The latest twist in OpenAI’s saga—up to 90% of employees signing a letter indicating they would quit and follow Sam Altman—echoes a classic debate in the DAO world over the past few years: Are rule-based code constraints more important, or is community consensus paramount?

While rules and constraints can generate many forms of agreement, truly great consensus is rarely forged by rules alone. Only shared mission and cultural values can produce deep resonance and genuine alignment.

We know how to create such resonance among humans. But what about AI?
