Space Recap | AINFT Fully Launches AI Service Platform, Building Next-Generation AI Infrastructure with “Elastic Aggregated Payment”

TechFlow Selected

Space Recap | AINFT Fully Launches AI Service Platform, Building Next-Generation AI Infrastructure with “Elastic Aggregated Payment”

The AINFT AI Service Platform aims to deliver a more accessible and efficient AI experience through a "single entry point, multiple AI models, flexible payment" model.

As artificial intelligence evolves from a stunning technological breakthrough into an everyday "efficiency tool" embedded in daily life, the cost of using it has been quietly rising. This reflects not only maturing business models but also AI's rapid advance toward becoming "digital infrastructure." And once a tool becomes essential, its cost structure, how to choose among the options, and its long-term sustainability become practical concerns that no ordinary user can ignore.

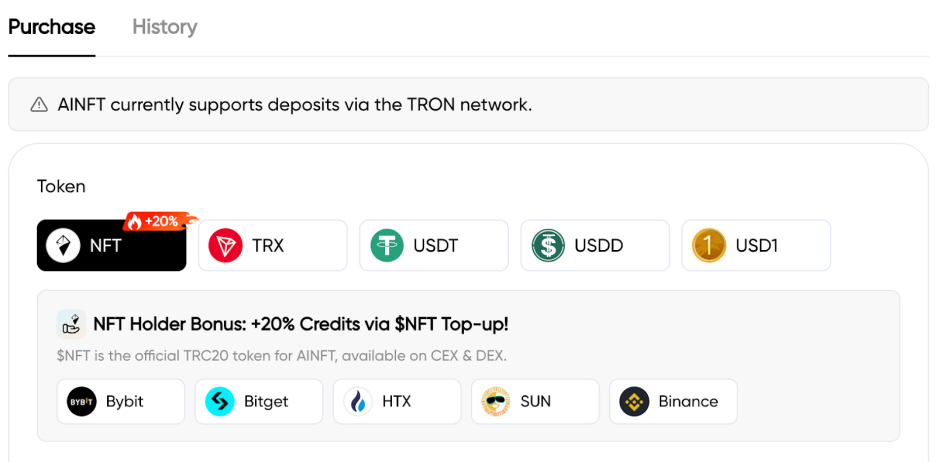

It is against this backdrop of rising AI usage costs that AINFT, an AI service platform in the TRON ecosystem, recently completed its full launch. The platform integrates leading large language models, including ChatGPT, Claude, and Gemini, behind unified chat and API interfaces, and integrates deeply with the TronLink wallet to support one-click login and on-chain payments. Its key advantages include free model access for new users and the option to top up small amounts of credit for paid services; users who top up with NFT tokens receive a 20% discount.

This is not merely a direct challenge to existing paywall models—it also raises a crucial question: With multi-model coexistence becoming the norm, must users still bear high costs for a single model? The emergence of AINFT may point toward a more flexible and sustainable future for AI usage. This edition of the SunFlash Roundtable brings together multiple industry observers and practitioners—not to debate model capabilities, but to delve into the underlying logic driving rising costs and examine how ordinary users can establish long-term, effective usage strategies now that AI has become a high-frequency tool.

As AI demand diversifies, traditional subscription models have become users’ “efficiency shackles”

As AI rapidly evolves from awe-inspiring tech demos into an indispensable everyday "productivity tool" across industries, a pronounced contradiction emerges: user needs are becoming more diverse and scenario-specific than ever, while mainstream services remain stuck in costly subscription frameworks. This widening mismatch between supply and demand leaves traditional subscriptions ill-suited to flexible, evolving real-world needs and turns their steep fixed fees into "efficiency shackles" that hold users back. In this SunFlash Roundtable, guests analyze the deeper drivers of rising AI usage costs from three angles: technical evolution, market supply and demand, and user behavior.

YOMIRGO points out that rising AI usage costs reflect two core trends. First, AI tasks have evolved from early single-turn Q&A into complex "chain-of-thought" problem solving involving multiple rounds of tool invocation and reflection, causing compute consumption to grow exponentially. As requirements for output logic and quality rise, intensive inference has become the core cost component of AI services, driven by massive parameter counts, high computational overhead, and a global imbalance between supply and demand for high-performance chips. Second, the persistent rise in costs reflects AI's growing "infrastructuralization": like electricity or water, it is becoming seamlessly integrated into daily workflows as an indispensable pillar of productivity.

AISIM agrees, adding that the cost increases fundamentally reflect AI's shift from a "novelty tool" for early adopters to today's "high-frequency productivity infrastructure." The more users rely on AI, the higher their expectations in every dimension, and naturally the greater the compute and operating costs. Grace explains from a UX perspective that while users pay for better outputs, they also bear the invisible cost of complex backend processes.

As rising usage costs become an established trend, a more urgent question arises for end users: do current payment models still fit today's demand? On this, the participants reached a clear consensus: traditional long-term subscriptions tied to a single model are increasingly out of step with users' real, dynamic, and ever more granular usage scenarios.

Drawing on real-world usage, several guests emphasize that user needs are inherently diverse and scenario-specific. ONEONE and Grace both note that tasks such as writing, coding, and image generation often map best to the strengths of different models; expecting any single model to excel in every domain is neither realistic nor economical. web3Monkey observes that much of what users pay for a top-tier model goes toward the roughly 20% of cutting-edge capabilities they rarely use, while their frequent needs are met perfectly well by baseline capabilities, an obvious imbalance in value.

Moreover, this model may constrain users' choice and adaptability in a fast-moving market. YOMIRGO emphasizes that AI technology advances at breakneck speed, so long-term lock-in to a single model is self-limiting: users miss out on rapid advances in other models and the benefits that come with them. HiSeven adds that users' core demand is shifting from "passively accepting fixed services" to "actively seeking optimal solutions"; they prefer to invoke whichever tool best fits the need at hand rather than be bound to a single platform.

The discussion ultimately converges on a clear conclusion: for the vast majority of ordinary users, whose needs are fragmented and whose usage varies widely, paying high fees in advance for rarely used specialized capabilities makes little economic or practical sense. AISIM summarizes that a more flexible, cost-effective service model has become a critical unmet market need.

AINFT’s breakthrough approach: Elastic pricing restructures costs; unified gateway reshapes experience

Faced with the structural tension between monolithic subscription models and diversified demand, participants unanimously agreed that the solution likely lies in a unified gateway aggregating multi-model capabilities. They foresee this as more than simple technical aggregation—it will trigger profound shifts in usage logic and even industry ecosystems.

Several guests noted that a unified gateway changes the relationship between users and AI. AISIM pointed to the current "model faith wars" among users and argued that platform aggregation dissolves this unnecessary tribalism, shifting users from "paying for models" to "paying for problem resolution." On efficiency, HiSeven and ONEONE add that a gateway drastically reduces the time and cognitive overhead of switching, registering, and comparing prices across platforms, making moving between AI services as easy as switching browser tabs.

The deeper transformation, however, lies in how tasks are executed. As Grace put it, in this new paradigm the user plays the role of "CEO": rather than passively receiving output from a single tool, the user holds full orchestration authority and directs the most suitable "AI employees" to collaborate according to the task. For instance, one model drafts a proposal while another specializes in reviewing and refining it; this team-based approach significantly improves reliability and output quality.
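The "draft, then review" collaboration Grace describes can be sketched as a small pipeline. This is a minimal illustration under stated assumptions, not AINFT's API: the `Model` type, the `DraftAndReview` class, the prompt wording, and the stub models are all hypothetical, and a real setup would wrap calls to whichever models a gateway actually exposes.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical: each "AI employee" is modeled as a plain function from a
# prompt to a text reply. No real AINFT endpoint or SDK is assumed here.
Model = Callable[[str], str]


@dataclass
class DraftAndReview:
    drafter: Model   # model chosen to generate the proposal
    reviewer: Model  # model chosen to critique and refine it
    rounds: int = 1  # number of review passes over the draft

    def run(self, task: str) -> str:
        draft = self.drafter(f"Write a proposal for: {task}")
        for _ in range(self.rounds):
            # Each pass hands the current draft to the reviewing model.
            draft = self.reviewer(f"Review and improve this proposal:\n{draft}")
        return draft


# Hypothetical stub models so the sketch runs offline.
def fake_drafter(prompt: str) -> str:
    return "DRAFT(" + prompt.split(": ", 1)[1] + ")"


def fake_reviewer(prompt: str) -> str:
    return "REVIEWED(" + prompt.split("\n", 1)[1] + ")"


pipeline = DraftAndReview(fake_drafter, fake_reviewer, rounds=2)
print(pipeline.run("launch plan"))  # REVIEWED(REVIEWED(DRAFT(launch plan)))
```

Swapping `drafter` or `reviewer` for a different model changes which "AI employee" handles each step without touching the pipeline itself, which is the practical benefit of reaching several models through one entry point.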

This implies AI sheds its identity as a specific tool, transforming instead into infrastructure like electricity or water: highly standardized, consumed on-demand, and billed with precise granularity. This is far more than a UI improvement—it marks a fundamental evolution of the entire AI service paradigm, shifting from closed, rigid “tool provision” to flexible, inclusive “capability access.”

As a concrete implementation of this shift, AINFT, a Web3-native AI platform in the TRON ecosystem, puts this paradigm into practice. Drawing on firsthand experience, the panelists examined how AINFT pursues its dual goals of "low cost" and "superior experience."

1. Cost-structure revolution: From fixed subscriptions to elastic payment

Guests see AINFT's core innovation in its economic model. web3Monkey detailed its points system and small on-chain top-up mechanism, which does away with traditional fixed monthly fees entirely. New users currently receive 1 million free points upon wallet login, enough to cover routine, low-frequency usage. For heavier users, the platform offers flexible top-ups in multiple cryptocurrencies, including NFT, TRX, USDD, USDT, and USD1, with a 20% discount for top-ups made in NFT tokens. Because users top up only as needed, estimated monthly costs fall to roughly $5–$15, replacing the burden of a fixed monthly fee with the flexibility of on-demand, elastic payment.
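The arithmetic behind elastic payment is simple: a month's cost is the sum of the top-ups actually made, with the 20% discount applied to those paid in NFT tokens. This is a minimal sketch that assumes the discount is a flat reduction on each top-up's price; the function names and sample amounts are hypothetical, and the platform's actual conversion rates between points and each token are not modeled.

```python
from decimal import Decimal

# Assumption: the 20% NFT-token discount is a flat reduction on the price
# of each top-up. Points-per-token conversion rates are not modeled.
NFT_DISCOUNT = Decimal("0.20")


def topup_cost(list_price: Decimal, pay_with_nft: bool) -> Decimal:
    """Return what the user actually pays for one top-up (hypothetical helper)."""
    if pay_with_nft:
        return list_price * (1 - NFT_DISCOUNT)
    return list_price


def monthly_cost(topups: list[Decimal], pay_with_nft: bool) -> Decimal:
    """Elastic payment: the month's cost is just the sum of top-ups made."""
    return sum((topup_cost(p, pay_with_nft) for p in topups), Decimal("0"))


# Hypothetical month: two $5 top-ups, both paid in NFT tokens.
print(monthly_cost([Decimal("5"), Decimal("5")], pay_with_nft=True))  # 8.00
```

`Decimal` is used instead of floats so that discounted amounts stay exact; a month with no top-ups simply costs zero, which is the contrast with a fixed subscription.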

2. User-experience reimagining: Seamless integration and user sovereignty

At the experience layer, AINFT enables one-click login via the TronLink wallet, giving instant access to multi-model services. This removes tedious repeated registration and verification and folds AI services into the Web3 experience. Its unified API also lets users embed this aggregated capability into their own applications and workflows, greatly broadening its practical uses.

3. Ecosystem-value integration: Sovereignty, incentives, and sustainability

More fundamentally, AINFT's design reflects attention to user sovereignty and long-term value. Its points and top-up mechanisms are not mere payment channels but positive incentive loops: users who top up with NFT tokens earn extra rewards, steadily lowering costs for long-term, heavy users. This returns both choice and value to users, aligns the platform's evolution with community interests, and builds a more resilient, attractive, and sustainable ecosystem.

Facing the twin challenges of rising costs and limited choice in centralized AI services, Web3-native solutions like AINFT offer a potential breakthrough. By restructuring economics through points and elastic payment, and reshaping UX through multi-model aggregation and one-click access, they use blockchain composability and incentive design to turn AI from a "closed subscription service" into "open digital infrastructure." As a core piece of AI infrastructure in the TRON ecosystem, AINFT aims at more than technical aggregation: it seeks to build a new paradigm in which AI agents collaborate and incentives circulate within the ecosystem. It points toward a future where users no longer pay for a single model but instead participate as sovereign actors in a more inclusive, efficient, and community-driven digital ecosystem.
