The infrastructure crisis in the crypto industry

The question is not whether the next infrastructure failure will occur, but when it will happen and what will trigger it.

Author: YQ

Translation: AididiaoJP, Foresight News

Amazon Web Services has suffered another major outage, severely impacting crypto infrastructure. Issues in AWS's US-East-1 region (Northern Virginia data centers) caused outages at Coinbase and dozens of other major crypto platforms, including Robinhood, Infura, Base, and Solana.

AWS has acknowledged "increased error rates" affecting Amazon DynamoDB and EC2—core database and computing services relied upon by thousands of companies. This disruption provides an immediate, vivid validation of the central thesis of this article: the dependence of crypto infrastructure on centralized cloud providers creates systemic vulnerabilities that repeatedly surface under stress.

The timing is starkly instructive. Just ten days after a $19.3 billion liquidation cascade exposed infrastructure failures at the exchange level, today’s AWS outage shows the problem extends beyond individual platforms to foundational cloud infrastructure layers. When AWS fails, cascading effects simultaneously impact centralized exchanges, “decentralized” platforms with centralized dependencies, and countless other services.

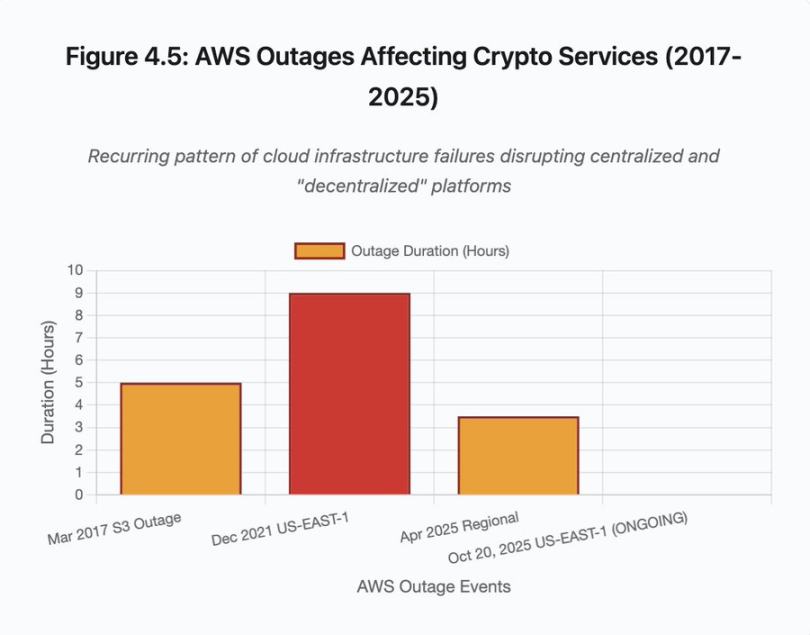

This is not an isolated incident but a pattern. The analysis below documents similar AWS outages in April 2025, December 2021, and March 2017, each causing major crypto services to go offline. The question is not whether the next infrastructure failure will occur, but when and what will trigger it.

$19.3 Billion Liquidation Cascade, October 10–11, 2025: A Case Study

The October 10–11, 2025 liquidation cascade serves as an illuminating case study of infrastructure failure patterns. At 20:00 UTC, a major geopolitical announcement triggered a market-wide sell-off. Within one hour, $6 billion in positions were liquidated. By the time Asian markets opened, leveraged positions worth $19.3 billion had vanished from 1.6 million trader accounts.

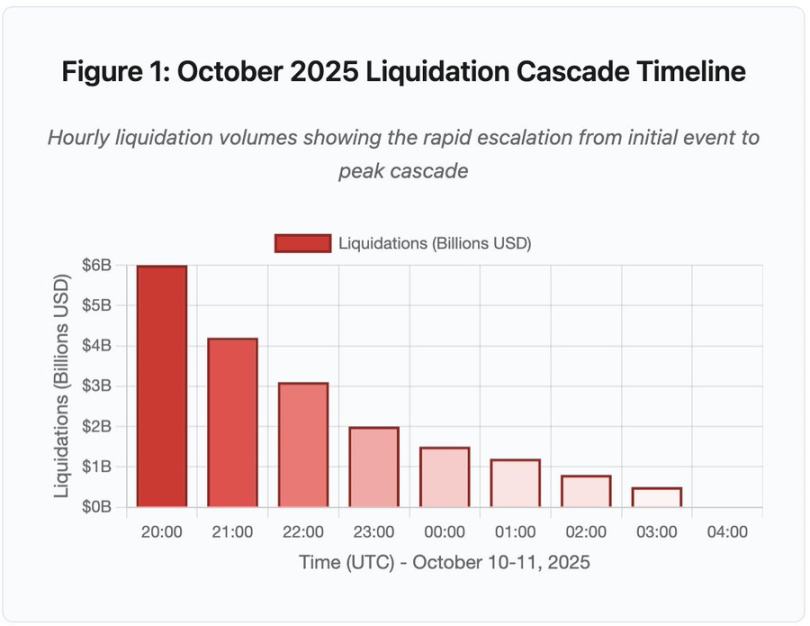

Figure 1: Timeline of the October 2025 Liquidation Cascade

This timeline chart shows the progression of hourly liquidations. $6 billion evaporated in the first hour alone, followed by a further $4.2 billion in the second hour as API rate limiting set in. The visualization reveals:

- 20:00–21:00: Initial shock – $6 billion liquidated (red area)
- 21:00–22:00: Peak cascade – $4.2 billion, when API rate limiting began
- 22:00–04:00: Prolonged deterioration – $9.1 billion liquidated in thin liquidity markets
- Critical turning points: API rate limiting, market maker withdrawal, order book thinning

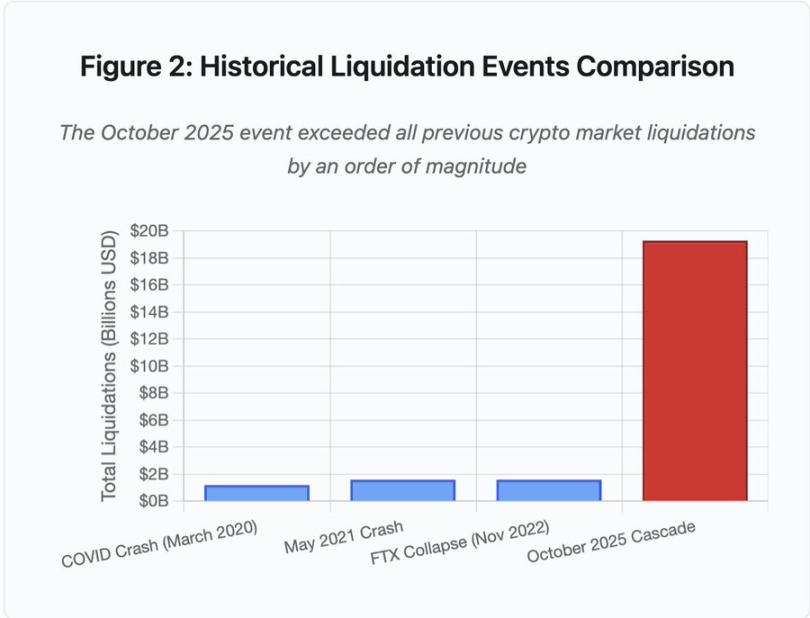

The scale was at least an order of magnitude larger than any previous crypto market event. Historical comparisons highlight the step-function nature of this incident:

Figure 2: Comparison of Historical Liquidation Events

The bar chart dramatically illustrates the outlier status of the October 2025 event:

- March 2020 (COVID): $1.2 billion
- May 2021 (Crash): $1.6 billion
- November 2022 (FTX): $1.6 billion
- October 2025: $19.3 billion, about 12 times the prior record of $1.6 billion (and about 16 times the March 2020 figure)
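The size of the multiple depends on which earlier event is used as the baseline. A quick check using only the figures listed above (the function name is illustrative):

```python
# Liquidation totals in billions of USD, as listed for Figure 2.
events = {
    "March 2020 (COVID)": 1.2,
    "May 2021 (Crash)": 1.6,
    "November 2022 (FTX)": 1.6,
}
october_2025 = 19.3


def multiples(current, history):
    """Return how many times larger `current` is than each historical event."""
    return {name: round(current / size, 1) for name, size in history.items()}


ratios = multiples(october_2025, events)
for name, ratio in ratios.items():
    print(f"{name}: {ratio}x")
```

Against the largest prior events ($1.6 billion) the multiple is about 12; against March 2020 it is about 16. Either way, the event is an order of magnitude beyond anything earlier.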

But liquidation figures only tell part of the story. The more interesting question concerns mechanism: how did an external market event trigger this specific failure pattern? The answer reveals systemic weaknesses in centralized exchange infrastructure and blockchain protocol design.

Off-Chain Failures: Centralized Exchange Architecture

Infrastructure Overload and Rate Limiting

Exchange APIs implement rate limits to prevent abuse and manage server load. Under normal operations, these limits allow legitimate trading while blocking potential attacks. During extreme volatility, when thousands of traders attempt to adjust positions simultaneously, these same rate limits become bottlenecks.
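To illustrate how a limit tuned for normal traffic turns into a bottleneck, here is a minimal token-bucket sketch. The rate, capacity, and request volumes are hypothetical and do not describe any particular exchange's limiter.

```python
class TokenBucket:
    """Token-bucket limiter: `rate` tokens refill per second, up to `capacity`."""

    def __init__(self, rate, capacity):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity
        self.last = 0.0

    def allow(self, now):
        # Refill in proportion to elapsed time, capped at capacity.
        elapsed = now - self.last
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def accepted(bucket, requests_per_second, seconds):
    """Count accepted requests when arrivals are evenly spaced in time."""
    ok = 0
    total = requests_per_second * seconds
    for i in range(total):
        if bucket.allow(i / requests_per_second):
            ok += 1
    return ok, total


# Hypothetical numbers: a 10 req/s limit under calm and stressed traffic.
calm = accepted(TokenBucket(rate=10, capacity=10), requests_per_second=5, seconds=10)
stress = accepted(TokenBucket(rate=10, capacity=10), requests_per_second=500, seconds=10)
print("calm:", calm, "stress:", stress)
```

In the calm case every request passes. In the stressed case only a few percent do, which is exactly the window in which traders are trying to cut risk.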

Centralized exchanges (CEXs) limit liquidation notifications to one order per second, even when they are processing thousands of orders per second. During the October cascade, this created opacity: users could not determine the real-time severity of the cascade. Third-party monitoring tools showed hundreds of liquidations per minute, while official data sources reported far fewer.

API rate limiting prevented traders from modifying positions during the critical first hour, with connection requests timing out and order submissions failing. Stop-loss orders failed to execute, and position queries returned stale data. This infrastructure bottleneck turned a market event into an operational crisis.

Traditional exchanges configure infrastructure for normal loads plus safety margins. But normal load differs drastically from stress load; daily average volume poorly predicts peak stress demand. During cascades, trading volume surges 100x or more, and queries for position data increase 1000x as every user checks their account simultaneously.

Figure 4.5: AWS Outages Impacting Crypto Services

Auto-scaling cloud infrastructure helps but cannot respond instantly—spinning up additional database read replicas takes minutes. Creating new API gateway instances takes minutes. In those minutes, margin systems continue marking positions based on corrupted price data from overloaded order books.
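A back-of-the-envelope sketch of why minutes matter: during a step-function surge, the excess load queues up until new capacity arrives. All numbers below are hypothetical; only the 100x surge factor comes from the text.

```python
def backlog_during_scale_up(base_capacity, surge_load, scale_up_seconds, scaled_capacity):
    """Return (peak_backlog, seconds_to_drain) for a step-function load surge.

    All rates are in requests per second. New capacity only becomes available
    after `scale_up_seconds`; until then the excess load accumulates.
    """
    excess = max(0, surge_load - base_capacity)
    peak_backlog = excess * scale_up_seconds
    spare = scaled_capacity - surge_load
    if spare <= 0:
        return peak_backlog, float("inf")  # the backlog never drains
    return peak_backlog, peak_backlog / spare


# Hypothetical: 1,000 req/s baseline, a 100x surge, 3 minutes to scale up,
# and 20% headroom once the new capacity is online.
peak, drain = backlog_during_scale_up(1_000, 100_000, 180, 120_000)
print(f"peak backlog: {peak:,} requests, drain time: {drain:.0f} s")
```

Under these assumptions nearly 18 million requests pile up before the first new instance serves traffic, and clearing them takes roughly another 15 minutes.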

Oracle Manipulation and Pricing Vulnerabilities

During the October cascade, a critical design choice in margin systems became apparent: some exchanges calculate collateral value based on internal spot market prices rather than external oracle data feeds. Under normal market conditions, arbitrageurs maintain price consistency across venues. But when infrastructure is stressed, this coupling breaks down.

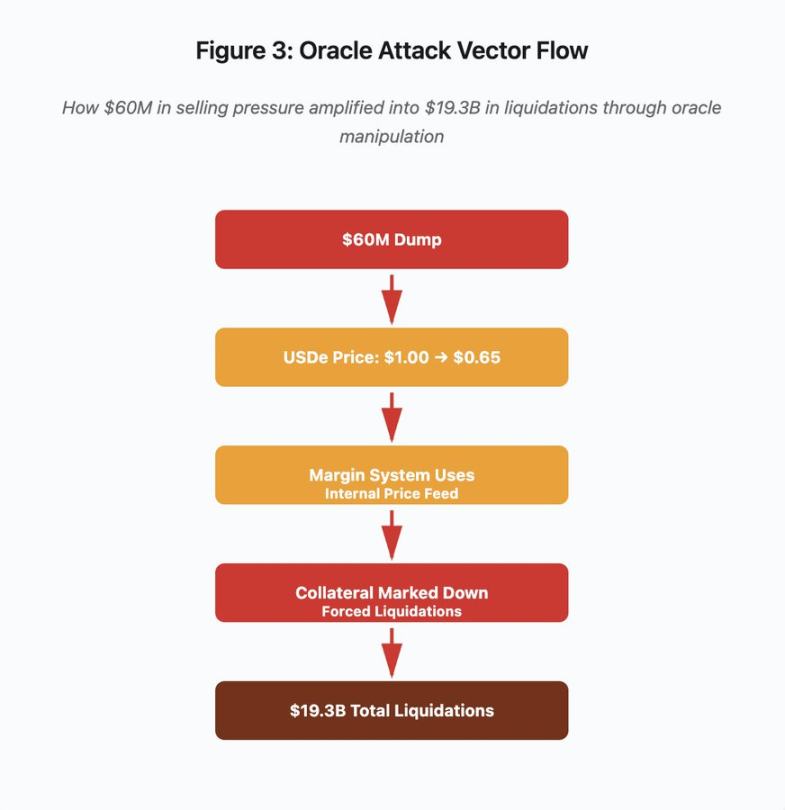

Figure 3: Oracle Manipulation Flowchart

This interactive flowchart visualizes a five-stage attack vector:

- Initial sell-off: $60 million of selling pressure applied to USDe
- Price manipulation: USDe drops from $1.00 to $0.65 on a single exchange
- Oracle failure: Margin system uses corrupted internal price feed
- Cascade trigger: Collateral marked down, forced liquidations begin
- Amplification: Total $19.3 billion in liquidations (322x amplification)

The attack exploited Binance's practice of marking wrapped and synthetic collateral at spot market prices. When attackers dumped $60 million of USDe into a relatively thin order book, the spot price plummeted from $1.00 to $0.65. The margin system, configured to mark collateral at the spot price, marked down all USDe-collateralized positions by 35%. This triggered margin calls and forced liquidations across thousands of accounts.

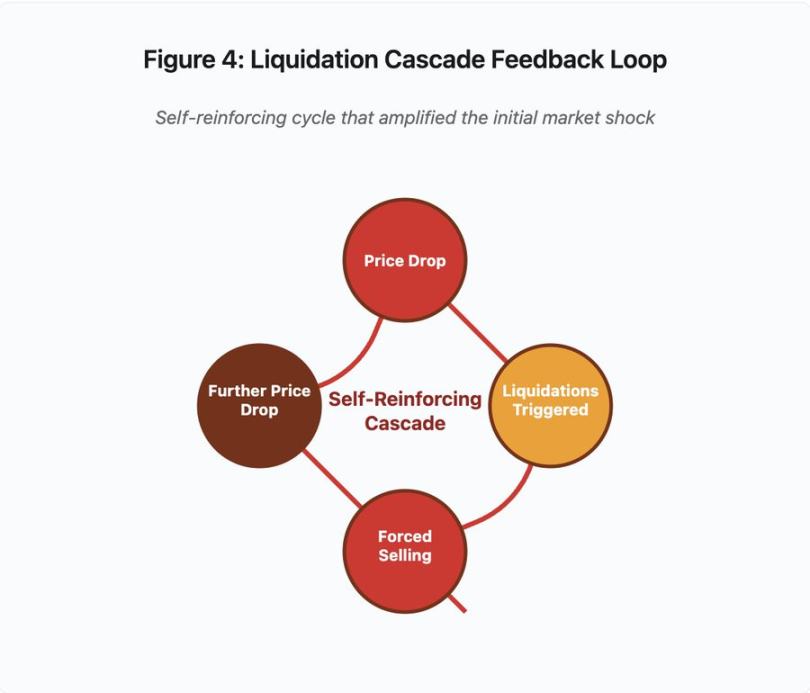

These liquidations forced more sell orders into the same illiquid market, further depressing prices. The margin system observed these lower prices and marked more positions accordingly, creating a feedback loop that amplified $60 million in selling pressure into $19.3 billion in forced liquidations.

Figure 4: Liquidation Cascade Feedback Loop

This circular feedback diagram illustrates the self-reinforcing nature of the cascade:

Price drop → Triggers liquidation → Forced selling → Further price drop → [Loop repeats]
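The loop can be reproduced with a toy model. This is a sketch under strong assumptions: a hypothetical linear price-impact rule and made-up positions, not a reconstruction of the actual October order flow.

```python
def simulate_cascade(initial_sell, positions, depth, price=1.0):
    """Toy liquidation spiral.

    positions: list of (liquidation_price, size) pairs; a position is force-sold
    once the price falls to or below its liquidation price.
    depth: units that must be sold to move the price by 1.0 (hypothetical
    linear impact model).
    Returns (final_price, total_liquidated).
    """
    pending = initial_sell
    open_positions = sorted(positions, reverse=True)  # highest trigger first
    liquidated = 0.0
    while pending > 0:
        price = max(0.0, price - pending / depth)  # selling pushes the price down
        pending = 0.0
        still_open = []
        for liq_price, size in open_positions:
            if price <= liq_price:
                pending += size       # each liquidation adds new sell pressure
                liquidated += size
            else:
                still_open.append((liq_price, size))
        open_positions = still_open
    return price, liquidated


# Hypothetical book: 50 units of initial selling against four leveraged positions.
book = [(0.96, 100), (0.90, 200), (0.70, 300), (0.30, 100)]
final_price, total = simulate_cascade(initial_sell=50, positions=book, depth=1000)
print(f"final price {final_price:.2f}, liquidated {total:.0f} ({total / 50:.0f}x the initial sell)")
```

In this example 50 units of selling end up forcing 600 units of liquidations, a 12x amplification, because each round of forced selling drags the price through the next liquidation threshold.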

This mechanism would not work if properly designed oracle systems were used. If Binance had used a time-weighted average price (TWAP) across multiple exchanges, instantaneous price manipulation would not affect collateral valuation. If they used aggregated price feeds from Chainlink or other multi-source oracles, the attack would have failed.
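A minimal sketch of the idea, assuming a mark price computed as the median of per-venue TWAPs. The venue names, prices, and five-minute window are hypothetical, and this is not the method used by Binance, Chainlink, or any specific oracle.

```python
from statistics import median


def twap(samples):
    """Time-weighted average of (price, seconds_held) samples."""
    total_time = sum(seconds for _, seconds in samples)
    return sum(price * seconds for price, seconds in samples) / total_time


def robust_mark_price(venue_samples):
    """Median across venues of each venue's TWAP."""
    return median(twap(samples) for samples in venue_samples.values())


# Hypothetical 5-minute window: one venue prints 0.65 for the last 30 seconds.
window = {
    "venue_a": [(1.00, 270), (0.65, 30)],
    "venue_b": [(1.00, 300)],
    "venue_c": [(0.999, 300)],
}
print("venue_a TWAP:", round(twap(window["venue_a"]), 3))
print("mark price:", round(robust_mark_price(window), 3))
```

The time weighting dampens the brief 0.65 print on the affected venue, and the cross-venue median ignores that venue entirely, so collateral would be marked near $1.00 rather than $0.65.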

The wBETH incident four days earlier revealed a similar vulnerability. wBETH should maintain a 1:1 exchange ratio with ETH. During the cascade, liquidity dried up, and the wBETH/ETH spot market showed a 20% discount. Margin systems marked down wBETH collateral accordingly, triggering liquidations of positions fully backed by underlying ETH.

Auto-Deleveraging (ADL) Mechanism

When liquidations cannot be executed at current market prices, exchanges implement Auto-Deleveraging (ADL), distributing losses among profitable traders. ADL forcibly closes profitable positions at current prices to cover deficits from liquidated ones.

During the October cascade, Binance executed ADL across multiple trading pairs. Traders holding profitable long positions found their trades forcibly closed—not due to their own risk management failures, but because others' positions became insolvent.

ADL reflects a fundamental architectural choice in centralized derivatives trading. Exchanges guarantee they will not lose money. This means losses must be borne by one or more of the following:

- Insurance Fund (exchange-reserved funds to cover liquidation shortfalls)
- ADL (forced closure of profitable traders)
- Socialized losses (losses distributed across all users)

The size of the insurance fund relative to open interest determines ADL frequency. Binance’s insurance fund totaled about $2 billion in October 2025. Relative to $4 billion in open interest for BTC, ETH, and BNB perpetual contracts, this provided 50% coverage. But during the October cascade, total open interest across all pairs exceeded $200 billion. The insurance fund could not cover the shortfall.
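The coverage arithmetic and the order in which losses are absorbed can be sketched as follows. The waterfall order (fund, then ADL, then socialized loss) and the shortfall figures are assumptions for illustration; only the $2 billion, $4 billion, and $200 billion figures come from the text.

```python
def coverage_ratio(insurance_fund, open_interest):
    """Insurance fund as a fraction of open interest."""
    return insurance_fund / open_interest


def allocate_shortfall(shortfall, insurance_fund, adl_capacity):
    """Split a liquidation shortfall across the three loss-bearing layers.

    Assumed order: insurance fund first, then ADL against profitable
    positions, then socialized loss for whatever remains.
    """
    from_fund = min(shortfall, insurance_fund)
    remaining = shortfall - from_fund
    from_adl = min(remaining, adl_capacity)
    socialized = remaining - from_adl
    return {"insurance_fund": from_fund, "adl": from_adl, "socialized": socialized}


# Figures from the text, in billions of USD.
print(coverage_ratio(2, 4))     # coverage against BTC, ETH, and BNB perpetuals
print(coverage_ratio(2, 200))   # coverage against total open interest
# Hypothetical 3.0 shortfall with 0.5 of ADL capacity.
print(allocate_shortfall(3.0, 2.0, 0.5))
```

Measured against total open interest rather than three headline contracts, coverage falls from 50% to 1%, which is why ADL was triggered once the shortfall outgrew the fund.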

After the October cascade, Binance announced they would guarantee no ADL for BTC, ETH, and BNB USDⓈ-M contracts when total open interest remains below $4 billion. This creates an incentive structure: exchanges can maintain larger insurance funds to avoid ADL, but this ties up capital that could otherwise be profitably deployed.

On-Chain Failures: Limitations of Blockchain Protocols

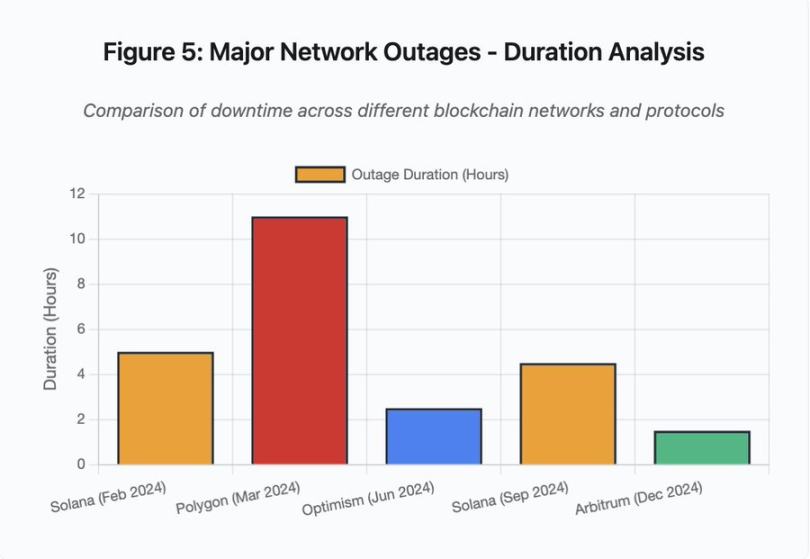

The bar chart compares downtime across different events:

- Solana (February 2024): 5 hours – Voting throughput bottleneck
- Polygon (March 2024): 11 hours – Validator version mismatch
- Optimism (June 2024): 2.5 hours – Sequencer overload (airdrop)
- Solana (September 2024): 4.5 hours – Transaction spam attack
- Arbitrum (December 2024): 1.5 hours – RPC provider failure

Figure 5: Major Network Outages – Duration Analysis

Solana: Consensus Bottleneck

Solana experienced multiple outages during 2024–2025. The February 2024 outage lasted about 5 hours, and the September 2024 outage lasted about 4.5 hours. Both stemmed from similar root causes: the network was unable to handle transaction volume during spam attacks or extreme activity.

Figure 5 detail: Solana’s outages (5 hours in February, 4.5 hours in September) highlight recurring issues with network resilience under stress.

Solana’s architecture is optimized for throughput. Under ideal conditions, the network processes 3,000–5,000 transactions per second with sub-second finality, roughly two orders of magnitude more throughput than Ethereum's base layer. But during stress events, this optimization creates vulnerabilities.

The September 2024 outage originated from a wave of spam transactions overwhelming validators’ voting mechanisms. Solana validators must vote on blocks to achieve consensus. Under normal operations, validators prioritize vote transactions to ensure consensus progress. But the protocol previously treated vote transactions like regular transactions in its fee market.

When the transaction mempool filled with millions of spam transactions, validators struggled to propagate vote transactions. Without sufficient votes, blocks could not be finalized. Without finalized blocks, the chain halted. Users with pending transactions saw them stuck in the mempool. New transactions could not be submitted.
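The difference between treating votes as ordinary fee-paying traffic and giving them priority can be shown with a toy block builder. This is an illustrative sketch with hypothetical fees and block size, not Solana's actual scheduler.

```python
def build_block(pending, block_size, prioritize_votes):
    """Pick up to `block_size` transactions from `pending`.

    pending: list of dicts with keys "kind" ("vote" or "user") and "fee".
    With prioritize_votes=False, everything competes purely on fee.
    With prioritize_votes=True, votes are selected ahead of other traffic.
    """
    if prioritize_votes:
        key = lambda tx: (tx["kind"] == "vote", tx["fee"])
    else:
        key = lambda tx: tx["fee"]
    return sorted(pending, key=key, reverse=True)[:block_size]


def votes_included(block):
    """Count the vote transactions that made it into the block."""
    return sum(1 for tx in block if tx["kind"] == "vote")


# Hypothetical load: 10 low-fee votes buried under 1,000 higher-fee spam transactions.
pending = [{"kind": "vote", "fee": 1} for _ in range(10)]
pending += [{"kind": "user", "fee": 5} for _ in range(1000)]

print(votes_included(build_block(pending, 100, prioritize_votes=False)))
print(votes_included(build_block(pending, 100, prioritize_votes=True)))
```

With fee-only ordering, no votes land in the block and consensus stalls. With a priority lane, all ten do, regardless of how much spam is queued behind them.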

StatusGator recorded multiple Solana service interruptions during 2024–2025, though Solana never officially acknowledged them. This created information asymmetry. Users couldn’t distinguish local connectivity issues from network-wide problems. Third-party monitoring services provided accountability, but platforms should maintain comprehensive status pages.

Ethereum: Gas Fee Explosion

Ethereum experienced extreme gas fee spikes during the 2021 DeFi boom, with simple transfers costing over $100 in transaction fees. Complex smart contract interactions cost $500–$1,000. These fees rendered the network unusable for smaller transactions and enabled a different attack vector: MEV extraction.

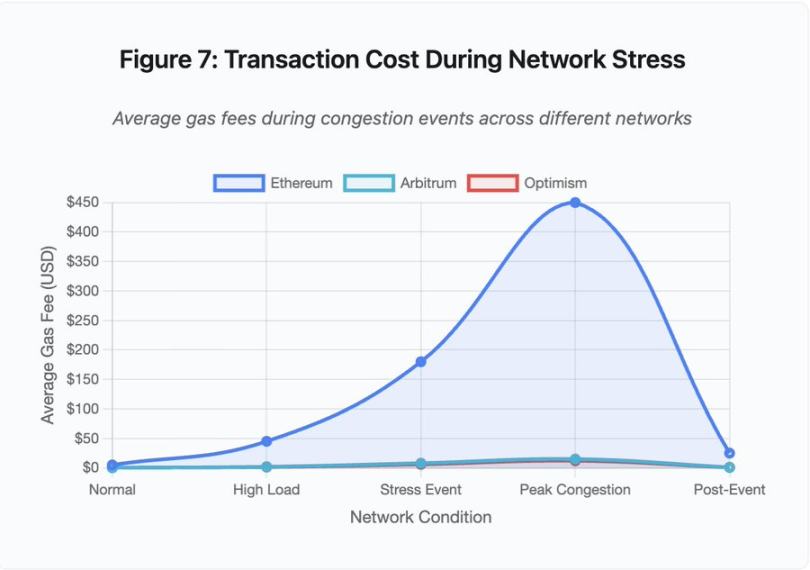

Figure 7: Transaction Costs During Network Stress

This line chart dramatically shows gas fee escalation across networks during stress events:

- Ethereum: $5 (normal) → $450 (peak congestion) – 90x increase
- Arbitrum: $0.50 → $15 – 30x increase
- Optimism: $0.30 → $12 – 40x increase

The visualization shows that even Layer 2 solutions experienced significant gas fee escalations, despite much lower baselines.

Maximal Extractable Value (MEV) describes profits validators can extract by reordering, including, or excluding transactions. In high gas fee environments, MEV becomes especially profitable. Arbitrageurs race to front-run large DEX trades, and liquidation bots compete to be first in clearing undercollateralized positions. This competition manifests as gas fee bidding wars.

Users wanting their transactions included during congestion must bid above MEV bots. This leads to scenarios where transaction fees exceed transaction value. Want to claim your $100 airdrop? Pay $150 in gas. Need to add collateral to avoid liquidation? Compete with bots paying $500 priority fees.

Ethereum’s gas limit caps the total computational load per block. During congestion, users bid for scarce block space. The fee market works as designed: higher bidders get priority. But this design makes the network increasingly expensive during high usage—precisely when users need access most.
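A simplified sketch of this auction: the block is filled greedily by price per unit of gas until the gas limit is reached. It ignores the EIP-1559 base fee and other protocol details, and the senders, gas amounts, and prices are hypothetical.

```python
def fill_block(bids, gas_limit):
    """Greedy fee-market sketch: highest price per gas first, until the block is full.

    bids: list of (sender, gas_used, price_per_gas) tuples.
    Returns the list of included senders in inclusion order.
    """
    included, used = [], 0
    for sender, gas, price in sorted(bids, key=lambda b: b[2], reverse=True):
        if used + gas <= gas_limit:
            included.append(sender)
            used += gas
    return included


# Hypothetical congestion: bots outbid a user who needs to add collateral.
bids = [
    ("user_adding_collateral", 30, 50),
    ("mev_bot", 60, 500),
    ("liquidation_bot", 40, 300),
]
print(fill_block(bids, gas_limit=100))
```

The two bots fill the block, and the user's transaction waits for a later one, by which time the position may already have been liquidated.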

Layer 2 solutions attempt to solve this by moving computation off-chain while inheriting Ethereum’s security through periodic settlements. Optimism, Arbitrum, and other rollups process thousands of transactions off-chain, then submit compressed proofs to Ethereum. This architecture successfully reduces per-transaction costs under normal operations.

Layer 2: Sequencer Bottleneck

But Layer 2 solutions introduce new bottlenecks. Optimism experienced an outage in June 2024 when 250,000 addresses simultaneously claimed an airdrop. The sequencer—the component that orders transactions before submitting them to Ethereum—was overwhelmed, leaving users unable to submit transactions for hours.

This outage demonstrated that moving computation off-chain does not eliminate infrastructure demands. The sequencer must process incoming transactions, order them, execute them, and generate fraud proofs or ZK proofs for Ethereum settlement. Under extreme traffic, sequencers face the same scalability challenges as standalone blockchains.

Maintaining multiple RPC providers is essential. If the primary provider fails, users should seamlessly fail over to alternatives. During the Optimism outage, some RPC providers remained functional while others failed. Wallet users default-connected to failed providers could not interact with the chain, even though the chain itself remained online.
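Failover of this kind can be sketched in a few lines. The provider names and the `down` and `up` stand-ins below are hypothetical; a real wallet would wrap actual RPC clients and add timeouts and health checks.

```python
def call_with_failover(providers, request):
    """Try each provider in order; return (name, response) from the first that works.

    providers: list of (name, callable) pairs; a callable raises on failure.
    """
    errors = []
    for name, send in providers:
        try:
            return name, send(request)
        except Exception as exc:  # sketch only: real code would catch narrower errors
            errors.append((name, exc))
    raise RuntimeError(f"all providers failed: {errors}")


def down(_request):
    """Stand-in for a provider that is currently unreachable."""
    raise ConnectionError("provider unavailable")


def up(request):
    """Stand-in for a healthy provider; echoes the request back."""
    return {"echo": request}


print(call_with_failover([("primary", down), ("backup", up)], "eth_blockNumber"))
```

The caller never sees the primary's failure. The request is simply served by the backup, which is the behavior wallet users lacked when their default provider went down.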

AWS outages have repeatedly proven the existence of centralized infrastructure risks within the crypto ecosystem:

- October 20, 2025 (today): US-East-1 outage affected Coinbase, Venmo, Robinhood, and Chime. AWS acknowledged increased error rates in DynamoDB and EC2 services.
- April 2025: Regional outage impacted Binance, KuCoin, and MEXC. Multiple major exchanges became unavailable when their AWS-hosted components failed.
- December 2021: US-East-1 outage disabled Coinbase, Binance.US, and “decentralized” exchange dYdX for 8–9 hours, also affecting Amazon’s own warehouses and major streaming services.
- March 2017: S3 outage prevented users from logging into Coinbase and GDAX for five hours, accompanied by widespread internet disruptions.

The pattern is clear: these exchanges host critical components on AWS infrastructure. When AWS experiences regional outages, multiple major exchanges and services go down simultaneously. Users cannot access funds, execute trades, or modify positions during outages—precisely when market volatility may require immediate action.

Polygon: Consensus Version Mismatch

Polygon (formerly Matic) experienced an 11-hour outage in March 2024. The root cause involved validator version mismatches—some validators running older software versions while others ran upgraded versions. These versions calculated state transitions differently.

Figure 5 detail: Polygon’s 11-hour outage was the longest among major events analyzed, highlighting the severity of consensus failures.

When validators reach different conclusions about the correct state, consensus fails, and the chain cannot produce new blocks because validators cannot agree on block validity. This creates a deadlock: validators running old software reject blocks produced by those running new software, and vice versa.

Resolution requires coordinated validator upgrades, which takes time during an outage. Each validator operator must be contacted, deploy the correct software version, and restart their validator. In a decentralized network with hundreds of independent validators, such coordination can take hours or days.

Hard forks typically use block-height triggers. All validators upgrade before a specific block height, ensuring simultaneous activation—but this requires prior coordination. Incremental upgrades, where validators gradually adopt new versions, carry the risk of exactly the kind of version mismatch that caused the Polygon outage.
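A small sketch of why a block-height trigger only works when every validator has upgraded beforehand. The activation height and rule-set labels are hypothetical, and this is a generic model rather than Polygon's upgrade mechanism.

```python
ACTIVATION_HEIGHT = 1_000_000  # hypothetical fork height


def rules_for(height, activation_height=ACTIVATION_HEIGHT):
    """An upgraded node switches rule sets at the agreed block height."""
    return "v2" if height >= activation_height else "v1"


def agree(node_upgraded, height):
    """True if all nodes apply the same rules at `height`.

    node_upgraded: list of booleans, True when the node runs the new software.
    Upgraded nodes follow the height trigger; old nodes only know v1.
    """
    applied = {rules_for(height) if upgraded else "v1" for upgraded in node_upgraded}
    return len(applied) == 1


print(agree([True, False], 999_999))    # before activation: mixed versions still agree
print(agree([True, False], 1_000_000))  # at activation: a straggler splits consensus
print(agree([True, True], 1_000_000))   # everyone upgraded in time: agreement holds
```

Mixed versions are harmless until the activation height. From that block onward, a single non-upgraded validator evaluates blocks under different rules, which is the mismatch described above.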

Architectural Trade-offs

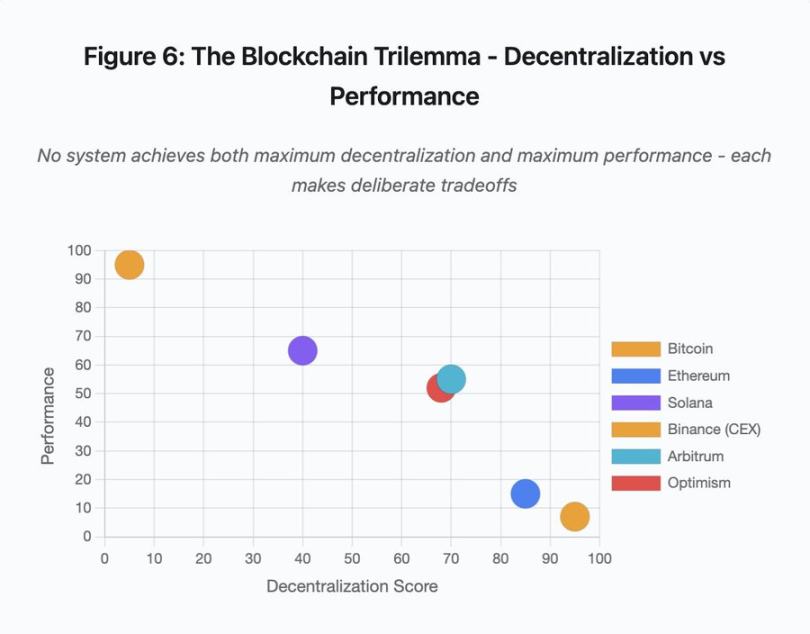

Figure 6: Blockchain Trilemma – Decentralization vs Performance

This scatter plot maps different systems along two key dimensions:

- Bitcoin: High decentralization, low performance
- Ethereum: High decentralization, medium performance
- Solana: Medium decentralization, high performance
- Binance (CEX): Minimal decentralization, maximum performance
- Arbitrum/Optimism: Medium-high decentralization, medium performance

Key insight: No system achieves both maximum decentralization and maximum performance. Each design makes deliberate trade-offs for different use cases.

Centralized exchanges achieve low latency through architectural simplicity—the matching engine processes orders in microseconds, and state resides in centralized databases. No consensus protocol introduces overhead, but this simplicity creates single points of failure. When infrastructure is stressed, cascading failures propagate through tightly coupled systems.

Decentralized protocols distribute state across validators, eliminating single points of failure. High-throughput chains preserve this property during outages (no loss of funds, only temporary liveness issues). But reaching consensus among distributed validators introduces computational overhead—validators must agree before state transitions are finalized. When validators run incompatible versions or face overwhelming traffic, the consensus process may temporarily halt.

Adding replicas improves fault tolerance but increases coordination costs. In Byzantine fault-tolerant systems, each additional validator increases communication overhead. High-throughput architectures minimize this via optimized validator communication, enabling superior performance but making them vulnerable to certain attack patterns. Security-focused architectures prioritize validator diversity and consensus robustness, limiting base-layer throughput while maximizing resilience.
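The coordination cost can be made concrete under one common assumption: a classic BFT round in which every validator sends a message to every other validator, tolerating f faulty nodes out of n ≥ 3f + 1. Many production protocols reduce this cost with aggregation or leader-based communication, so treat this as an upper-bound sketch.

```python
def max_faulty(n):
    """Largest f satisfying n >= 3f + 1 (classic BFT bound)."""
    return (n - 1) // 3


def all_to_all_messages(n):
    """Messages in one round where every validator sends to every other."""
    return n * (n - 1)


for n in (4, 100, 1000):
    print(f"{n} validators: tolerates {max_faulty(n)} faulty, "
          f"{all_to_all_messages(n):,} messages per round")
```

Going from 100 to 1,000 validators raises fault tolerance about tenfold but raises message volume about a hundredfold, which is the trade-off between resilience and throughput described above.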

Layer 2 solutions attempt to deliver both properties through layered design. They inherit Ethereum’s security via L1 settlement while providing high throughput through off-chain computation. However, they introduce new bottlenecks at the sequencer and RPC layers, showing that architectural complexity solves some problems while creating new failure modes.

Scaling Remains the Fundamental Problem

These events reveal a consistent pattern: systems provision resources for normal load, then catastrophically fail under stress. Solana handled regular traffic effectively but collapsed when transaction volume increased 10,000%. Ethereum gas fees remained reasonable until DeFi adoption caused congestion. Optimism’s infrastructure worked well until 250,000 addresses claimed an airdrop simultaneously. Binance’s API functioned normally during regular trading but became rate-limited during the liquidation cascade.

The October 2025 event illustrated this dynamic at the exchange level. Under normal operations, Binance’s API rate limits and database connections were sufficient. But during the liquidation cascade, when every trader simultaneously tried to adjust positions, these limits became bottlenecks. Margin systems designed to protect exchanges via forced liquidations instead amplified the crisis by creating forced sellers at the worst possible moment.

Auto-scaling offers insufficient protection against step-function load increases. Spinning up additional servers takes minutes. In those minutes, margin systems mark positions based on corrupted price data from thin order books. By the time new capacity comes online, the cascade has already spread.

Over-provisioning resources for rare stress events wastes money during normal operations. Exchange operators optimize for typical loads, accepting occasional outages as economically rational. The cost of downtime is externalized to users, who experience liquidations, stuck trades, or inability to access funds during critical market movements.

Infrastructure Improvements

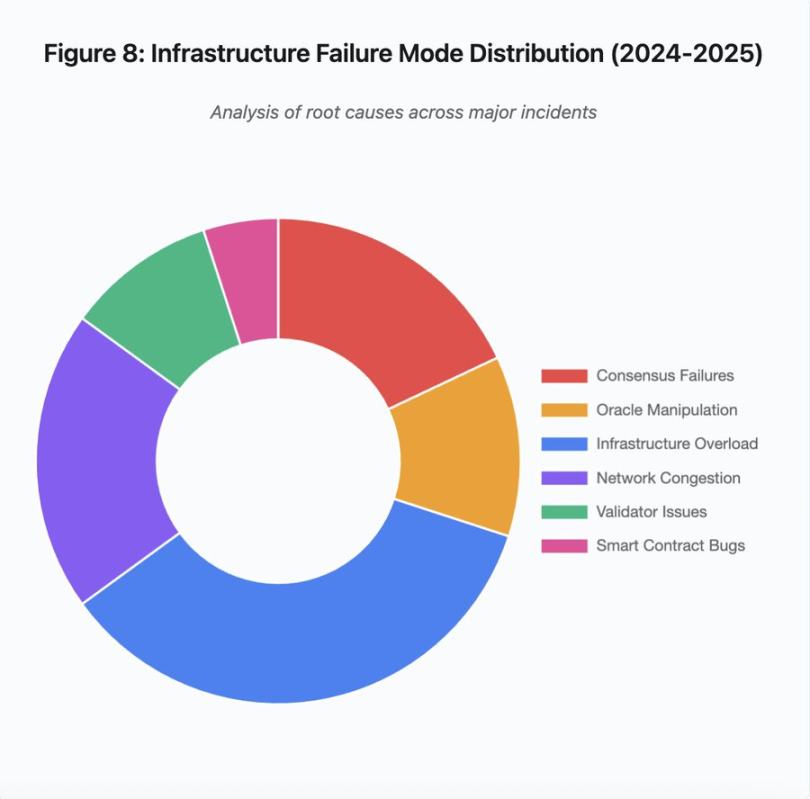

Figure 8: Distribution of Infrastructure Failure Modes (2024–2025)

The pie chart breakdown of root causes shows:

- Infrastructure overload: 35% (most common)
- Network congestion: 20%
- Consensus failure: 18%
- Oracle manipulation: 12%
- Validator issues: 10%
- Smart contract vulnerabilities: 5%

Several architectural changes could reduce the frequency and severity of failures, though each involves trade-offs:

Separation of Pricing and Liquidation Systems

The October issue was partly due to coupling margin calculations with spot market prices. Using redemption ratios instead of spot prices for wrapped assets could have avoided mispricing of wBETH. More broadly, critical risk management systems should not rely on market data that can be manipulated. Independent oracle systems with multi-source aggregation and TWAP calculations provide more robust price feeds.
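A sketch of the difference between the two valuation methods for a wrapped asset. The ETH price and position size are hypothetical; the 1:1 ratio and 20% spot discount are the figures cited earlier for wBETH.

```python
def redemption_value(amount, redemption_ratio, underlying_price):
    """Value a wrapped asset by what it redeems for, not by its own spot market."""
    return amount * redemption_ratio * underlying_price


def spot_value(amount, wrapped_spot_price):
    """Value a wrapped asset at its own, possibly illiquid, spot price."""
    return amount * wrapped_spot_price


eth_price = 4_000.0               # hypothetical ETH price in USD
wbeth_spot = eth_price * 0.80     # wrapped token trading at a 20% discount

print(redemption_value(10, 1.0, eth_price))  # collateral value by redemption ratio
print(spot_value(10, wbeth_spot))            # collateral value by stressed spot price
```

The same ten tokens are worth $40,000 by redemption and $32,000 by stressed spot price. Only the second valuation would push a fully backed position toward liquidation.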

Over-Provisioning and Redundant Infrastructure

The April 2025 AWS outage affecting Binance, KuCoin, and MEXC demonstrated the risks of centralized infrastructure dependency. Running critical components across multiple cloud providers increases operational complexity and cost but greatly reduces exposure to correlated failures. Layer 2 networks can maintain multiple RPC providers with automatic failover. The added expense seems wasteful during normal operations but prevents multi-hour outages during peak demand.

Enhanced Stress Testing and Capacity Planning

The pattern of systems working fine until they fail indicates insufficient stress testing. Simulating 100x normal load should be standard practice—identifying bottlenecks during development is cheaper than discovering them during actual outages. Yet realistic load testing remains challenging. Production traffic exhibits patterns synthetic tests cannot fully capture, and user behavior during real crashes differs from test scenarios.

The Way Forward

Over-provisioning offers the most reliable solution but conflicts with economic incentives. Maintaining 10x excess capacity for rare events costs money every day to prevent a once-a-year issue. Systems will continue to fail under stress until catastrophic failures impose enough cost to justify over-provisioning.

Regulatory pressure may force change. If regulations mandate 99.9% uptime or limit acceptable downtime, exchanges will need to over-provision. But regulations typically follow disasters rather than prevent them. Mt. Gox’s 2014 collapse led Japan to establish formal cryptocurrency exchange regulations. The October 2025 cascade will likely prompt a similar regulatory response. Whether these responses specify outcomes (maximum allowable downtime, maximum slippage during liquidations) or methods (specific oracle providers, circuit breaker thresholds) remains uncertain.
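To put a 99.9% uptime mandate in perspective, the implied downtime budget is simple arithmetic (the function name is illustrative):

```python
def allowed_downtime_hours(uptime_percent, period_hours=365 * 24):
    """Downtime budget implied by an uptime target over a period (default: one year)."""
    return period_hours * (1 - uptime_percent / 100)


for target in (99.0, 99.9, 99.99):
    print(f"{target}% uptime allows {allowed_downtime_hours(target):.2f} hours of downtime per year")
```

A 99.9% target leaves under nine hours per year, so a single outage on the scale of Polygon's 11-hour halt would exceed the annual budget by itself.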

The fundamental challenge is that these systems operate continuously in global markets but rely on infrastructure designed for traditional business hours. When stress hits at 02:00, teams scramble to deploy fixes while users face mounting losses. Traditional markets halt trading during stress; crypto markets simply crash. Whether this is a feature or a flaw depends on perspective and position.

Blockchain systems have achieved remarkable technical sophistication in a short time. Maintaining distributed consensus across thousands of nodes represents a genuine engineering achievement. But achieving reliability under stress requires moving beyond prototype architectures toward production-grade infrastructure. This shift requires funding and prioritizing robustness over the speed of feature development.

The challenge lies in prioritizing robustness over growth during bull markets, when everyone is profiting and outages seem like someone else’s problem. When the next cycle stress-tests the system, new weaknesses will emerge. Whether the industry learns from October 2025 or repeats similar patterns remains an open question. History suggests we will discover the next critical vulnerability through another multi-billion-dollar failure under stress.
