Testing Grok3, "the smartest in the world": Is it really the end of model marginal returns?

TechFlow Selected TechFlow Selected

Testing Grok3, "the smartest in the world": Is it really the end of model marginal returns?

Grok3, which consumed 263 times the computational power of DeepSeek V3, is this it?

On February 18, Beijing time, Musk and the xAI team officially launched Grok3, the latest version of Grok, during a live stream.

Well before this launch event, fueled by a steady flow of related information and Musk's relentless 24/7 pre-launch hype, global anticipation for Grok3 had reached unprecedented levels. Just a week earlier, while commenting on DeepSeek R1 during a livestream, Musk confidently claimed that "xAI is about to release a superior AI model."

According to data presented at the event, Grok3 has surpassed all current mainstream models in benchmark tests for math, science, and programming. Musk even stated that Grok3 will eventually be used for SpaceX Mars mission calculations and predicted it would achieve "Nobel Prize-level breakthroughs within three years."

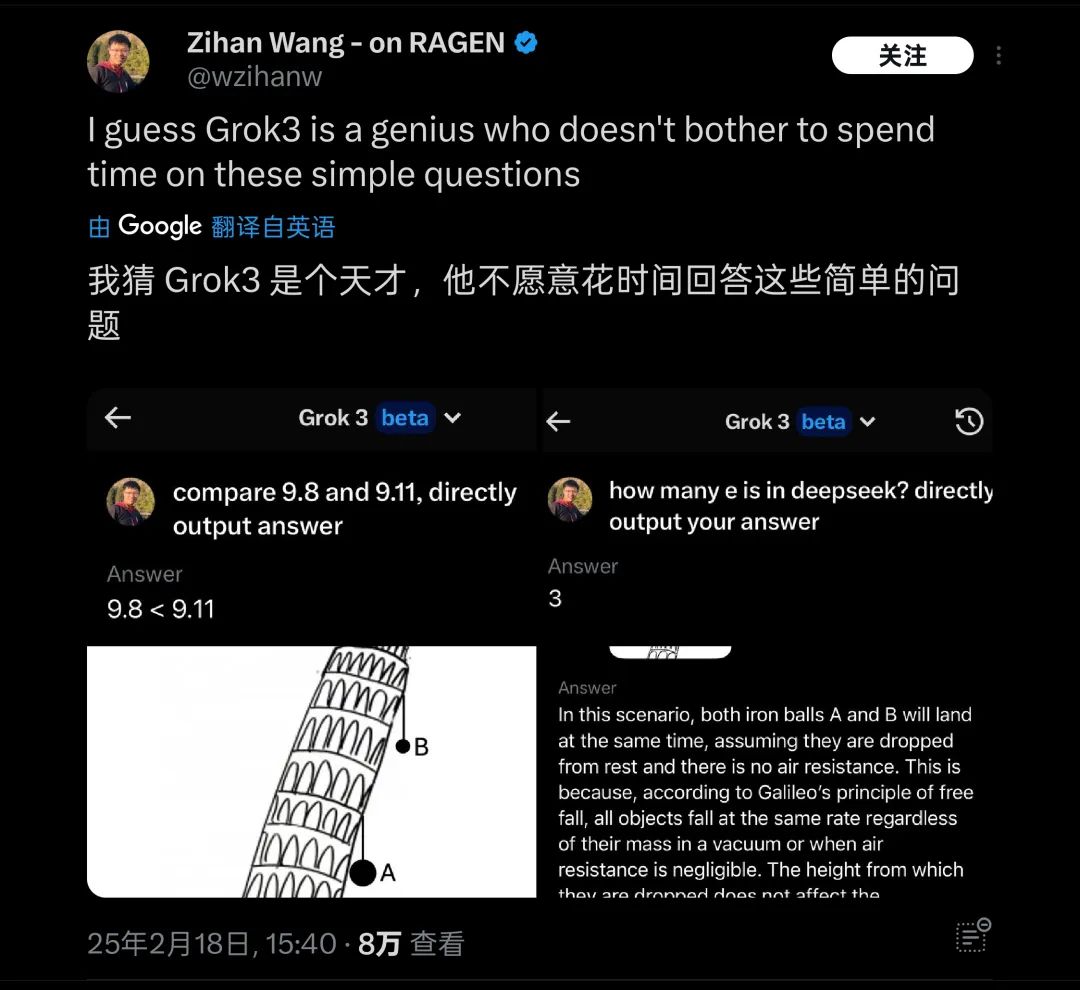

However, these claims remain solely Musk’s assertions. Shortly after the release, I tested the latest beta version of Grok3 and posed the classic tricky question often used to challenge large models: "Which is larger, 9.11 or 9.9?"

Unfortunately, without any qualifiers or annotations, Grok3—hailed as the smartest model today—still failed to answer this question correctly.

Grok3 failed to accurately interpret the meaning of this question

Image source: GeekPark

After this test went public, it quickly attracted significant attention. Similarly, overseas users have conducted comparable tests involving basic physics/math questions such as "which ball falls first from the Leaning Tower of Pisa," where Grok3 was also found unable to respond appropriately. It has since been jokingly referred to as "a genius unwilling to answer simple questions."

Grok3 failed numerous common-sense questions in real-world testing

Image source: X

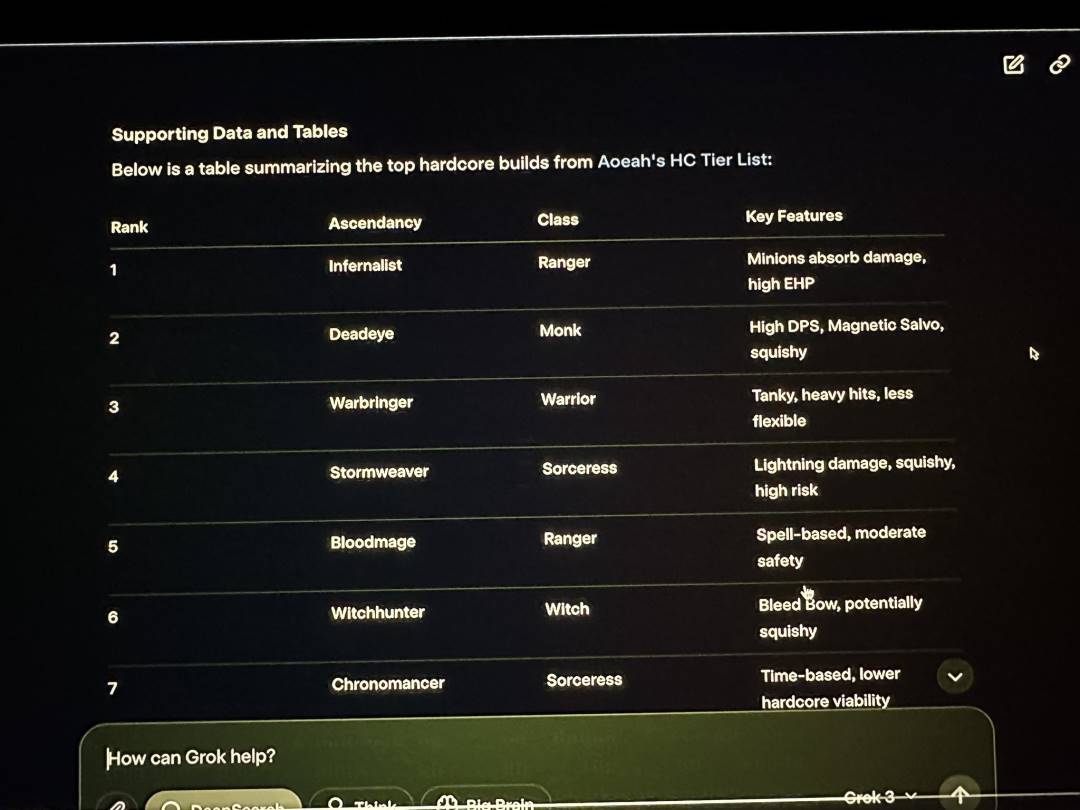

Beyond user-generated basic knowledge tests where Grok3 faltered, during the xAI launch livestream, Musk demonstrated using Grok3 to analyze character classes and ascendancy effects in Path of Exile 2—a game he claimed to play frequently. In reality, most of the answers provided by Grok3 were incorrect. Musk did not notice this obvious issue during the live demonstration.

Grok3 produced numerous incorrect data points during the live demo

Image source: X

This error not only became solid evidence for overseas netizens to mock Musk for allegedly hiring游戏代练 (proxy players) to play games but also cast serious doubts over Grok3’s reliability in practical applications.

For such a so-called "genius," regardless of its actual capabilities, its reliability when applied to extremely complex future scenarios like Mars exploration must be seriously questioned.

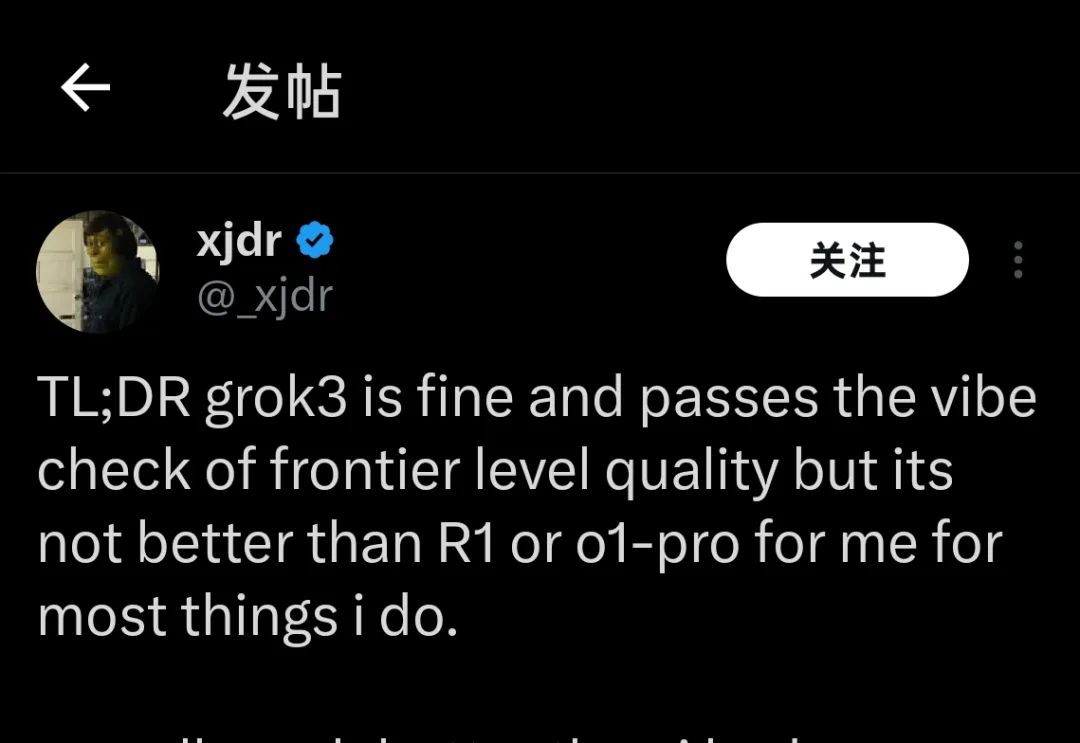

Currently, many individuals who obtained Grok3 testing access weeks ago or just began using the model hours ago have arrived at the same conclusion regarding its performance:

"Grok3 is good, but it's not better than R1 or o1-Pro."

"Grok3 is good, but it's not better than R1 or o1-Pro."

Image source: X

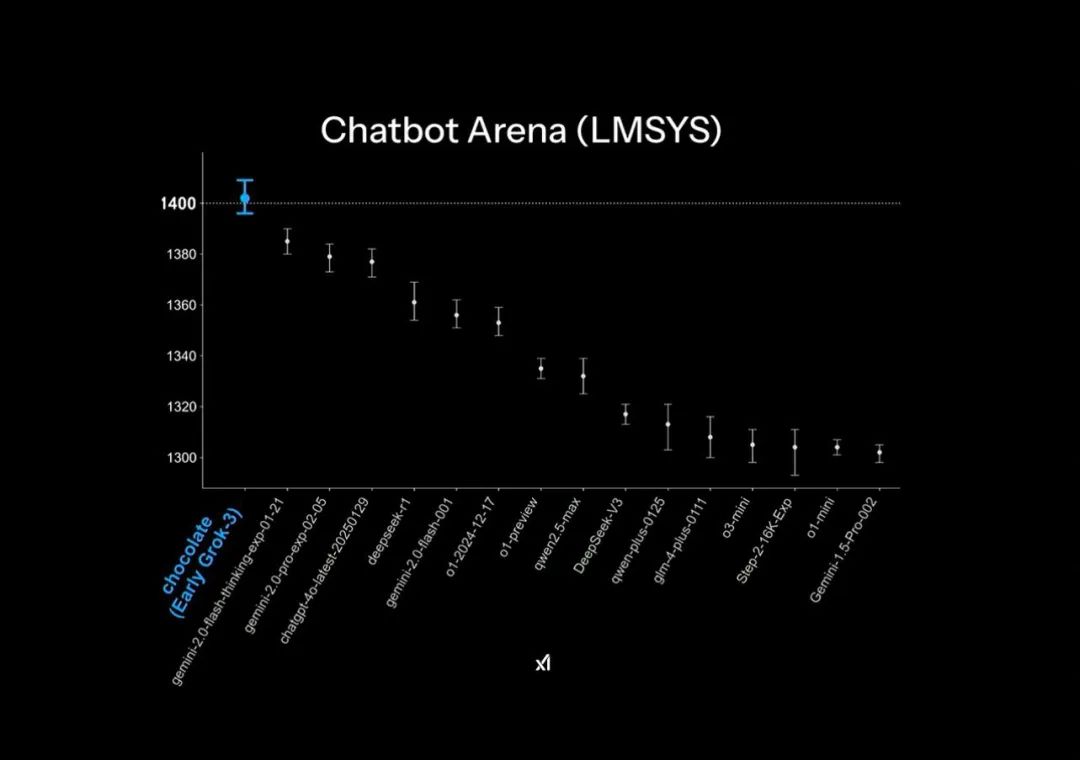

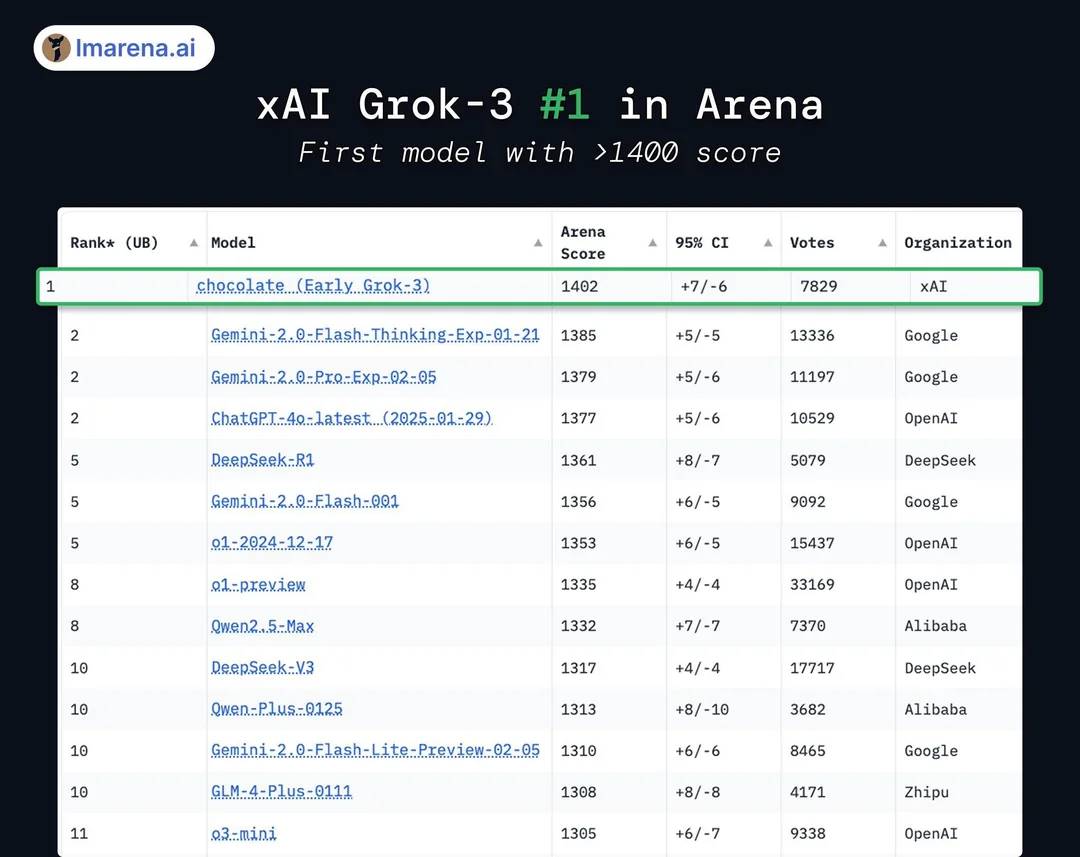

In the official presentation slides released during the launch, Grok3 achieved "far ahead leadership" on the large model leaderboard Chatbot Arena. However, this visual effect employed a minor graphical trick: the vertical axis only displayed rankings between 1400–1300, making an original 1% difference in test results appear dramatically pronounced in the slide.

The "far ahead leadership" effect shown in the official presentation slides

Image source: X

In reality, Grok3’s score differs from DeepSeek R1 and GPT-4.0 by less than 1–2%, aligning with many users’ hands-on experience of perceiving "no noticeable difference."

In reality, Grok3 leads other models by only 1%-2%

Image source: X

Moreover, although Grok3 outperformed all currently publicly tested models in scores, this achievement is not widely accepted. After all, during the Grok2 era, xAI was already accused of "score farming" on this leaderboard, with scores dropping significantly when the ranking system adjusted weighting against response length and style—leading industry insiders to frequently criticize it for being "high-scoring but low-performing."

Whether it's leaderboard "score farming" or subtle design tricks in visuals, they both reflect xAI and Musk’s obsession with appearing "far ahead" in model capability.

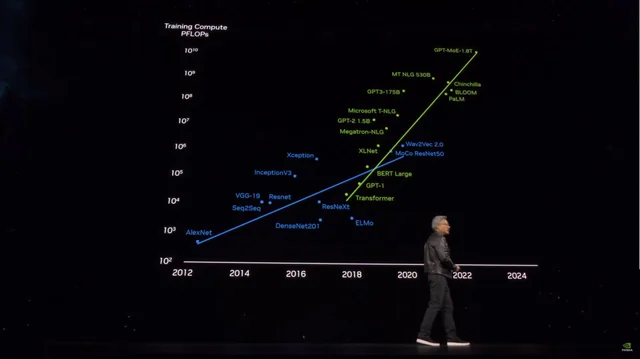

To achieve these marginal gains, Musk paid an extraordinarily high price: during the launch, Musk almost boastfully revealed that Grok3 was trained using 200,000 H100 GPUs (Musk stated "over 100,000" during the livestream), accumulating two hundred million training hours. This led some to view it as another major boost for the GPU industry and consider DeepSeek’s impact on the sector as "foolish."

Many believe piling up computing power will define the future of model training

Image source: X

In reality, comparing DeepSeek V3, which was trained using 2,000 H800 GPUs over two months, users calculated that Grok3’s actual training compute consumption was 263 times greater than V3’s. Yet, the performance gap between DeepSeek V3 and Grok3, which scored 1402 on the large model arena leaderboard, is less than 100 points.

Following the release of these figures, many quickly realized that behind Grok3’s ascent to "world's strongest" title lies a clear diminishing return: the idea that bigger models yield better performance is showing evident marginal effects.

Even the "high-scoring but low-performing" Grok2 was supported by vast amounts of high-quality first-party data from the X (Twitter) platform. By the time of Grok3’s training, xAI inevitably encountered the same ceiling currently faced by OpenAI—the shortage of high-quality training data rapidly exposed the model’s diminishing returns.

Certainly, the developers of Grok3 and Musk himself were likely the first and most aware of these realities. Hence, Musk has repeatedly emphasized on social media that the version users are experiencing is "only a test version," with the full version set to launch in the coming months. Musk himself has taken on the role of Grok3 product manager, encouraging users to directly report issues in comment sections.

He might be the most-followed product manager on Earth

Image source: X

Yet, within less than a day, Grok3’s performance has undoubtedly sounded an alarm for those hoping to rely on "brute force scaling" to train more capable large models. Based on Microsoft’s public disclosures, OpenAI’s GPT-4 reportedly contains 1.8 trillion parameters—more than tenfold increase over GPT-3—with rumored GPT-4.5 expected to be even larger.

As model parameter sizes skyrocket, training costs surge accordingly

Image source: X

With Grok3 setting a precedent, GPT-4.5 and others aiming to keep "burning money" through ever-larger parameter counts to improve model performance must now confront an imminent ceiling—and figure out how to突破 (break through).

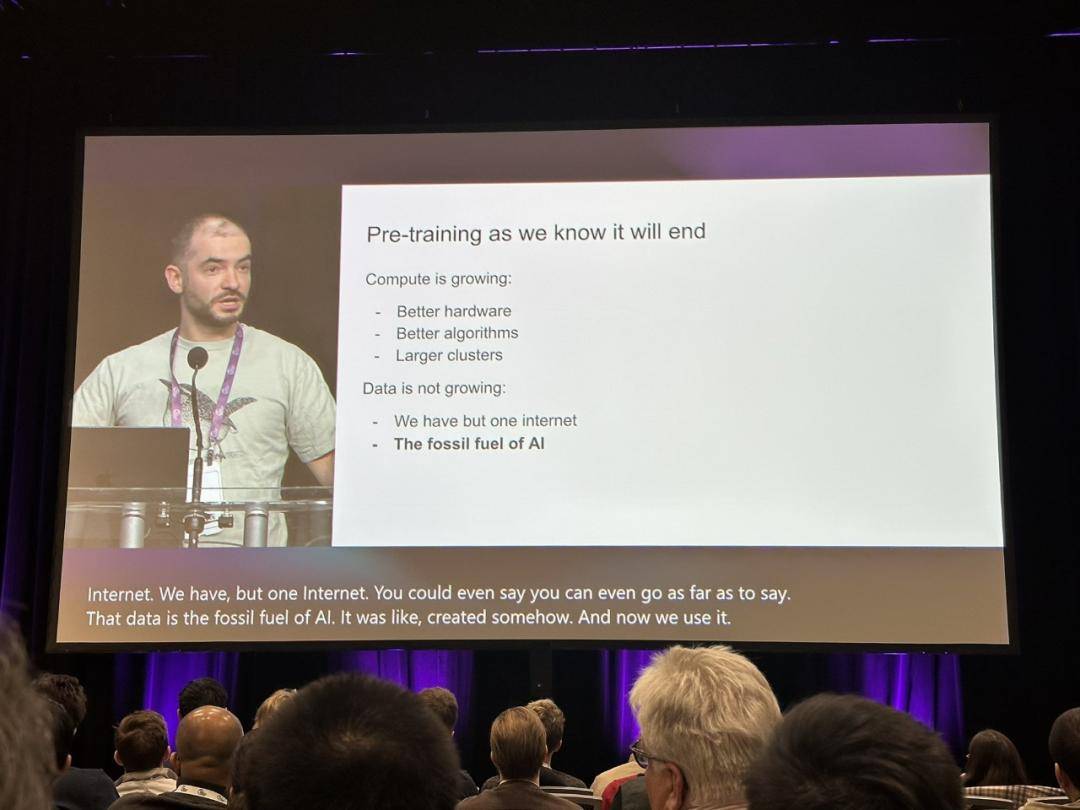

At this moment, OpenAI’s former chief scientist Ilya Sutskever’s statement from last December—"the kind of pre-training we’re familiar with will come to an end"—has resurfaced, prompting renewed efforts to find the true path forward for large model development.

Ilya’s insight has already sounded the alarm for the industry

Image source: X

At that time, Ilya accurately foresaw the near-exhaustion of usable new data and the difficulty of further improving model performance through additional data, likening it to fossil fuel consumption: "Just as oil is finite, human-generated content on the internet is also limited."

In Sutskever’s vision, the next generation of models beyond pre-training will possess "true autonomy" and reasoning capabilities "similar to the human brain."

Unlike today’s pre-trained models, which primarily rely on content matching (based on previously learned material), future AI systems will learn progressively and build problem-solving methodologies in a manner akin to human thought processes.

A human can achieve basic mastery in a subject with just a few core textbooks, whereas AI large models require millions of data points merely to reach basic proficiency—and may still fail to understand fundamental questions when phrased differently. True intelligence has not improved: the basic questions mentioned at the beginning, which Grok3 still cannot correctly answer, are direct illustrations of this phenomenon.

Yet, beyond "brute-force scaling," if Grok3 truly reveals to the industry that "pre-training is nearing its end," then it still holds significant enlightening value.

Perhaps, after the hype around Grok3 fades, we may begin to see more cases like Fei-Fei Li’s approach—fine-tuning high-performance models on specific datasets for as little as $50. Through such explorations, humanity may ultimately discover the true path toward AGI.

Join TechFlow official community to stay tuned

Telegram:https://t.me/TechFlowDaily

X (Twitter):https://x.com/TechFlowPost

X (Twitter) EN:https://x.com/BlockFlow_News