The Truth About AI Agents: Why GOAT, an AI Valued at $1 Billion, Is Still Just a Mechanical Text Generator


Data access is key.

Author: MORBID-19

Translation: TechFlow

Hello everyone, another day, another speculative bet. Recently, AI agents have become a hot topic of discussion—especially aixbt, a product that's been receiving significant attention lately.

But in my opinion, this hype makes absolutely no sense.

Let me explain for those unfamiliar with Bitcoin terminology: once users bridge their assets onto so-called "Bitcoin Layer 2s (L2s)," true "non-custodial lending" becomes impossible.

All "Bitcoin bridges" or "interoperability/scaling layers" introduce new trust assumptions, with few exceptions like the Lightning Network. So when someone claims a Bitcoin L2 is "trustless," you can safely assume that’s not actually true. This is also why most new L2s emphasize being "trust-minimized."

Although I don’t know much about Side Protocol specifically, I’m almost certain that aixbt’s claim of “non-custodial lending” is false. Dismissing claims like this would be the right call 99% of the time.

Still, I don't entirely blame aixbt. It's just following instructions: scraping data from the internet and generating tweets that appear useful.

The problem is that aixbt doesn’t truly understand what it’s saying. It cannot verify the truthfulness of information, consult experts to validate its assumptions, or question its own logic or reasoning.

Large language models (LLMs) are fundamentally word predictors. They don’t understand their outputs. They pick each next word according to probabilities learned from their training data.

If I wrote an Encyclopedia Britannica article claiming that "Hitler conquered ancient Greece and gave rise to Hellenistic civilization," an LLM trained on it would treat that claim as "fact" and "history."
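
To make the "word predictor" point concrete, here is a minimal sketch in Python. It is a toy bigram counter, not how a production LLM is built (real models are neural networks that work on tokens). The function names `train_bigrams`, `predict_next`, and `complete`, and the tiny corpus, are invented for illustration. The point it shows is narrow: the model repeats whatever its training text says most often, true or not.

```python
from collections import Counter, defaultdict

def train_bigrams(corpus):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def complete(counts, prompt, max_words=5):
    """Extend the prompt one word at a time using the most likely follower."""
    words = prompt.lower().split()
    for _ in range(max_words):
        nxt = predict_next(counts, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

# Hypothetical training text: the false claim appears more often than the true one.
corpus = [
    "hitler conquered ancient greece",
    "hitler conquered ancient greece",
    "alexander conquered persia",
]
counts = train_bigrams(corpus)
print(complete(counts, "hitler", max_words=3))
```

Nothing in this sketch checks whether a sentence is true. Frequency in the training text is the only signal it has, which is the weakness described above.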

Many of the AI agents we see on Twitter are nothing more than word predictors dressed up with cool avatars. Yet, these AI agents are seeing skyrocketing market valuations. GOAT has already reached a $1 billion valuation, and aixbt sits at around $200 million. Are these valuations justified?

No one knows for sure—but ironically, I’m quite content holding positions in these assets myself.

Data Access Is Key

I’ve long been interested in the intersection of AI and crypto. Recently, Vana caught my attention because it’s attempting to solve the "data wall" problem. The issue isn’t a lack of data. It’s access to high-quality data.

Would you publicly share your trading strategy for low-liquidity small-cap tokens? Would you freely release high-value insights that normally require payment? Would you openly disclose the most private details of your personal life?

Obviously not.

You won’t share such "private data" unless your privacy is reasonably protected and you are properly compensated.

Yet, if we want AI to reach human-level intelligence, this kind of data is precisely what’s most critical. After all, core human traits lie in our thoughts, inner monologues, and deepest reflections.

Even acquiring somewhat "semi-public" data poses significant challenges. For example, extracting useful information from videos requires first generating accurate subtitles and understanding contextual meaning so AI can comprehend the content.

Similarly, many websites require login access to view content—Instagram and Facebook are prime examples. This design is common across numerous social networks.

In summary, current AI development faces three major limitations:

- Inability to access private data
- Inability to access paywalled data
- Inability to access data from closed platforms

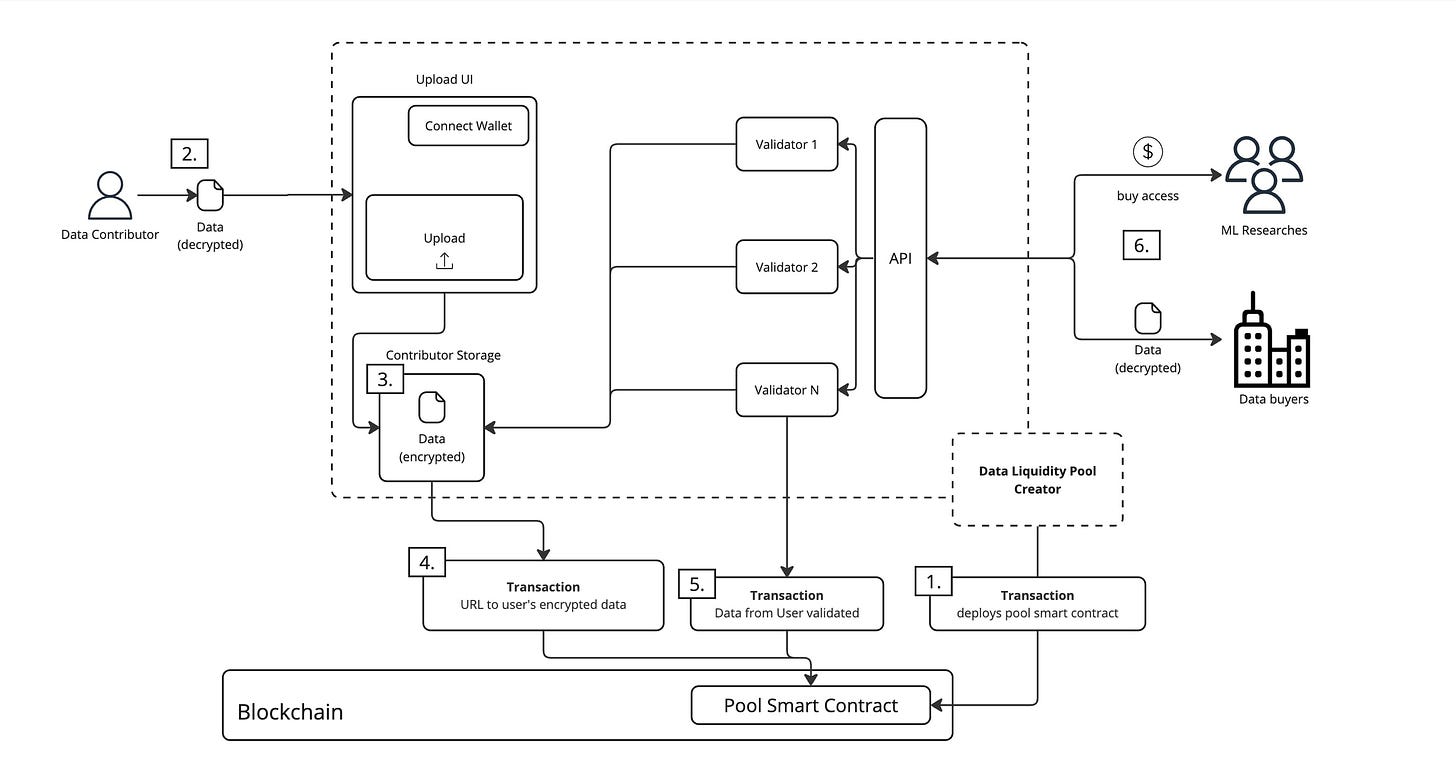

Vana offers a potential solution. By protecting privacy, it aggregates specific datasets into a decentralized mechanism called DataDAOs, thereby overcoming these barriers.

DataDAOs function as decentralized data markets, operating as follows:

- Data Contributors: Users submit their data to DataDAOs and receive governance rights and rewards in return.
- Data Verification: Data is verified within the Satya network, a secure computation network that ensures data quality and integrity.
- Data Consumers: Verified datasets can be used by consumers for AI training or other applications.
- Incentive Mechanism: DataDAOs encourage users to contribute high-quality data and manage data usage and training processes transparently.
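
The contribute, verify, and consume flow described above can be sketched roughly as follows. This is a toy in-memory model in Python, not Vana's actual API or contract design. The `DataDAO` and `Contribution` classes, the `validator` callback (a stand-in for verification on the Satya network), and the reward amount are all hypothetical. It also assumes rewards are paid only after verification, which the description above does not specify.

```python
from dataclasses import dataclass, field

@dataclass
class Contribution:
    contributor: str
    payload: str
    verified: bool = False

@dataclass
class DataDAO:
    name: str
    reward_per_contribution: int = 10  # hypothetical reward units
    contributions: list = field(default_factory=list)
    rewards: dict = field(default_factory=dict)

    def submit(self, contributor, payload):
        """Data contributor step: record the submission, not yet verified."""
        contribution = Contribution(contributor, payload)
        self.contributions.append(contribution)
        return contribution

    def verify(self, contribution, validator):
        """Verification step: `validator` stands in for an external quality check."""
        if contribution.verified:
            return True  # already verified, do not pay the reward twice
        contribution.verified = bool(validator(contribution.payload))
        if contribution.verified:
            # Assumption: rewards are credited only for verified data.
            previous = self.rewards.get(contribution.contributor, 0)
            self.rewards[contribution.contributor] = previous + self.reward_per_contribution
        return contribution.verified

    def dataset(self):
        """Data consumer step: expose only verified payloads."""
        return [c.payload for c in self.contributions if c.verified]

dao = DataDAO("trading-notes")
good = dao.submit("alice", "long-form note on small-cap liquidity")
bad = dao.submit("bob", "")
non_empty = lambda payload: len(payload.strip()) > 0  # toy quality check
dao.verify(good, non_empty)
dao.verify(bad, non_empty)
print(dao.dataset())
print(dao.rewards)
```

In this sketch, tying the reward to verification is what gives contributors a reason to submit high-quality data. A real system would also need privacy protection and governance, both of which are left out here.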


I hope one day aixbt can transcend its current "stupidity." Perhaps we could even create a dedicated DataDAO for aixbt. While I'm not an expert in AI, I firmly believe the next major breakthrough in AI development will depend on the quality of data used to train models.

Only AI agents trained on high-quality data will truly realize their potential. I look forward to that moment—and I hope it’s not too far away.
