What Is Groq, Which Achieved 10x the Inference Speed of NVIDIA GPUs and Recently Secured $640 Million in Funding?

TechFlow Selected

Groq, the AI chip unicorn known for its speed, has officially announced a $640 million funding round, pushing its latest valuation to $2.8 billion.

Author: Metaverse Heart

On August 5, U.S.-based AI chip startup Groq announced a new funding round of $640 million, bringing the company’s valuation to $2.8 billion.

The latest round was led by BlackRock Private Equity Partners, the private equity arm of Wall Street asset manager BlackRock, with participation from Cisco Investments and Samsung Catalyst Fund. The lineup of investors signals strong confidence in Groq's technology and gives the company substantial financial backing.

01. One-Minute Project Overview

1. Project Name: Groq

2. Founded: 2016

3. Product Introduction:

Groq's AI acceleration chip, the LPU (Language Processing Unit), is designed specifically for ultra-fast large language model inference. Groq claims the chip runs 10 to 100 times faster than conventional GPUs and TPUs, with inference speeds roughly 10 times those of NVIDIA GPUs.

4. Key People:

- Jonathan Ross: Founder and CEO, formerly a core developer on Google's TPU project
- Yann LeCun: Turing Award winner and one of the three pioneers of deep learning, serving as a technical advisor

5. Funding History:

- In 2017, Groq raised $10.3 million in seed funding shortly after its founding;
- In 2018, Groq raised $52.3 million in a Series A round led by Social Capital;
- In 2020, Groq raised $150 million in a Series B round led by Tiger Global Management, with participation from D1 Capital Partners and The Spruce House Partnership;
- In 2021, Groq raised $300 million in a Series C round, again backed by Tiger Global Management and D1 Capital Partners;
- In its latest round, Groq raised $640 million, led by BlackRock Private Equity Partners, with participation from Cisco Investments and Samsung Catalyst Fund.

02. Pioneer of Innovation-Driven AI Processors

Groq’s founding story stands as a model of innovation and technological breakthrough.

Before founding Groq, Ross worked at Google as an engineer focusing on deep learning and computing architecture research. During his time there, he identified significant performance bottlenecks in traditional computing architectures when handling modern AI tasks, especially in deep learning and large-scale data analysis.

Traditional CPUs and GPUs struggled to meet the heavy parallel-processing and low-latency demands of these tasks. This realization prompted Ross to leave Google and found Groq, a company dedicated to breaking through conventional computing limits.

In its early days, Groq’s founding team focused intensely on hardware design and technology development. Composed of top talent from high-performance computing and semiconductor fields, the team brought extensive experience and technical expertise. Initial efforts included processor architecture design, prototype development, and performance testing.

Groq’s technical vision centers around an innovative processor architecture aimed at delivering significantly higher computational performance and efficiency compared to existing processors. The company’s goal is to build a hardware platform capable of surpassing the limitations of CPUs and GPUs, meeting the ever-growing computing demands of AI and HPC applications.

As its technology matured and market demand grew, Groq began expanding into areas such as data centers, cloud computing, and edge computing. Today, the company has established strategic partnerships with several leading tech firms, driving global adoption of its products.

03. Redefining High-Performance Computing

Since its inception, Groq has rapidly emerged as a pioneer in artificial intelligence (AI) and high-performance computing (HPC), thanks to its groundbreaking technology and exceptional products.

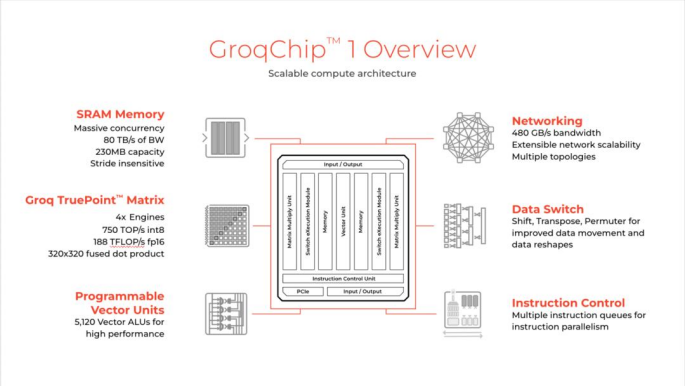

At the heart of Groq’s technology is its innovative processor architecture. Unlike traditional CPU and GPU designs, Groq’s philosophy focuses on enhancing computational power and efficiency, particularly tailored to the needs of modern AI and data-intensive applications.

- Highly Parallelized Design: Groq's architecture is extremely parallel, integrating numerous compute units that process large amounts of data simultaneously. This design raises computational throughput while reducing processing latency.
- Streamlined Data Paths: Traditional processors often suffer from data-transfer bottlenecks. Groq's architecture minimizes data-movement delays through optimized data paths and large on-chip SRAM, enabling more efficient processing of large datasets and meeting the high-performance requirements of AI training and inference.
- Flexible Configuration Options: Groq offers multiple configuration options, allowing users to tailor computing resources to specific application needs. This flexibility enables deployment across diverse computing environments, from data centers to edge computing systems.

Groq’s AI accelerator is a core component of its product lineup, purpose-built to accelerate the training and inference of deep learning models. Its advantages are evident in three key aspects:

- High Throughput and Low Latency: By increasing processing power and minimizing latency, the AI accelerator dramatically speeds up machine learning workloads, especially inference, which is critical for data-heavy applications like image recognition and natural language processing.
- Optimized Algorithm Support: The accelerator is tuned for various machine learning algorithms, including convolutional neural networks (CNNs) and recurrent neural networks (RNNs). Hardware-level optimizations enable efficient execution of complex computations, improving both training efficiency and inference performance.
- Scalability and Configurability: Designed for high scalability, the accelerator can handle data processing tasks of varying scales. Users can deploy multiple accelerators to build high-performance computing clusters that meet the demands of large-scale AI applications.

With its highly parallelized architecture, optimized data paths, and powerful AI acceleration, Groq delivers exceptional technological support across data centers, cloud computing, and edge computing environments.

As technology advances and products continue to improve, Groq is providing unprecedented solutions for modern computing needs, pushing the boundaries of computational technology to new heights.

As a dark horse in the AI chip sector, Groq’s LPU chip undoubtedly boasts impressive high-speed inference capabilities. However, with soaring valuations and rising market expectations, Groq also faces a series of challenges.

04. Challenges and Opportunities Ahead

First is the LPU's limited memory capacity. Although the chip delivers outstanding performance when running large language models, each LPU carries relatively little memory, so a single model must be spread across many chips and actual deployments may require substantial hardware resources.

Analysis suggests that when running large models like LLaMA 70B, Groq’s hardware requirements and associated costs could far exceed expectations, increasing economic pressure for large-scale deployments.
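The memory constraint can be made concrete with back-of-envelope arithmetic. The minimal Python sketch below counts only how many chips are needed to hold a 70-billion-parameter model's weights. It assumes roughly 230 MB of on-chip SRAM per LPU (a publicly reported GroqChip figure, not stated in this article) and, for contrast, an 80 GB HBM GPU; `chips_to_hold_weights` is an illustrative helper, and the count ignores activations, KV cache, and redundancy, so it is a lower bound rather than a deployment estimate.

```python
import math


def chips_to_hold_weights(num_params: float, bytes_per_param: float,
                          mem_per_chip_bytes: float) -> int:
    """Minimum chip count whose combined memory fits the model weights.

    Illustrative helper: ignores activations, KV cache, and replication,
    so the result is a lower bound, not a deployment plan.
    """
    total_bytes = num_params * bytes_per_param
    return math.ceil(total_bytes / mem_per_chip_bytes)


PARAMS_70B = 70e9        # LLaMA 70B parameter count (rounded)
LPU_SRAM_BYTES = 230e6   # assumption: ~230 MB of on-chip SRAM per LPU
GPU_HBM_BYTES = 80e9     # assumption: an 80 GB HBM GPU, for contrast

for label, bytes_per_param in [("FP16", 2), ("INT8", 1)]:
    lpus = chips_to_hold_weights(PARAMS_70B, bytes_per_param, LPU_SRAM_BYTES)
    gpus = chips_to_hold_weights(PARAMS_70B, bytes_per_param, GPU_HBM_BYTES)
    print(f"{label}: >= {lpus} LPUs vs >= {gpus} GPUs just for weights")
```

Under these assumptions, FP16 weights alone need at least 609 LPUs versus 2 such GPUs (305 versus 1 at INT8). The gap of roughly two orders of magnitude in chip count is why hardware and cost estimates for large models on LPUs can climb quickly.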

Second, while the specialized nature of the LPU provides advantages in specific tasks, it also limits its applicability across broader AI workloads. Compared to GPUs, the LPU’s lack of general-purpose flexibility may hinder its competitiveness in diverse AI scenarios.

As a nascent product from a startup, Groq still has a long journey ahead in terms of technological maturity, market acceptance, and ecosystem development. It must continuously refine its products, expand its R&D team, and forge industry partnerships to accelerate commercialization.

Looking ahead, Groq’s growth opportunities are immense. As AI technology advances and use cases expand, demand for high-performance AI chips continues to rise. If Groq can effectively address cost and versatility challenges, its LPU chips could secure a major position in the AI inference market.

Groq plans to deliver 108,000 LPUs by the end of March 2025. Achieving this ambitious target would further solidify its leadership in the industry. How Groq continues to break through amid fierce market competition, and whether it can succeed both technically and commercially, remains a story worth watching closely.
