AI Data Sparks "Oil Crisis," Content Companies Can Now Sit Back and Profit

TechFlow Selected


If large AI models are compared to cars, raw data is the crude oil.

Author: Jiangjiang

Editor: Manman Zhou

The arrival of ChatGPT and the explosive adoption of Midjourney gave AI its first large-scale application: the popularization of large models.

Large models are machine learning models with massive numbers of parameters and complex architectures, capable of processing vast amounts of data and performing a wide range of sophisticated tasks.

01 AI Data Copyright Disputes

If today's large AI models are automobiles, raw data is their crude oil. Whatever else they need, AI models first need enough "crude oil."

AI companies primarily obtain their "crude oil" from the following sources:

- Publicly available free online data sources such as Wikipedia, blogs, forums, and news outlets;
- Established news media and publishers;
- Research institutions such as universities;
- End users (C-end users) of the models.

Oil ownership in the real world is governed by mature legal frameworks, but in the still-chaotic field of AI, the rights to extract "crude oil" remain unclear, leading to countless disputes.

Recently, several major music labels sued the AI music generation companies Suno and Udio for copyright infringement. The case resembles the lawsuit The New York Times filed against OpenAI in December 2023.

Image source: Billboard

In July 2023, some authors filed a lawsuit alleging that ChatGPT generated summaries of copyrighted works.

In December of the same year, The New York Times also filed a similar copyright infringement lawsuit against Microsoft and OpenAI, accusing both companies of using its content to train AI chatbots.

Additionally, a class-action lawsuit was filed in California, accusing OpenAI of collecting private user information from the internet to train ChatGPT without user consent.

In the end, OpenAI did not pay out over these allegations. It stated that it disagreed with The New York Times' claims and could not reproduce the issues the paper described, and, more importantly, that The New York Times' content was insignificant to its model training.

Source: https://openai.com/index/openai-and-journalism/

For OpenAI, the biggest lesson may be the need to manage relationships with data providers and clarify each side's rights and responsibilities. Over the past year, OpenAI has accordingly formed partnerships with numerous data providers, including The Atlantic, Vox Media, News Corp, Reddit, the Financial Times, Le Monde, Prisa Media, Axel Springer, the American Journalism Project, and others.

Going forward, OpenAI will legitimately use these media outlets’ data, while the media companies will integrate OpenAI’s technology into their products.

02 AI Drives Monetization of Content Platforms

However, the fundamental reason OpenAI partners with data suppliers isn't fear of lawsuits, but the looming data scarcity for machine learning. Researchers from MIT and elsewhere estimated that high-quality language datasets for machine learning could be exhausted by 2026.

"High-quality data" has thus become highly coveted by model makers such as OpenAI and Google. Content companies are signing deal after deal with AI model firms, turning their archives into a source of passive income.

Stock-media platform Shutterstock has partnered in succession with the AI companies Meta, Alphabet, Amazon, Apple, OpenAI, and Reka. In 2023, licensing content to AI models brought it $104 million in annual revenue, a figure projected to reach $250 million by 2027. Reddit earns up to $60 million a year from licensing content to Google. Apple is seeking deals with mainstream news outlets, offering at least $50 million in copyright fees. Royalty payments from AI companies to content providers are growing at an annual rate of 450%.
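The Shutterstock figures imply a yearly growth rate that the text does not state. As a rough worked example, assuming $104 million (2023) and $250 million (2027) are annual AI-licensing revenue and that growth compounds evenly each year (a simplification, since no intermediate years are given), the implied compound annual growth rate (CAGR) can be computed as:

```python
# Shutterstock AI-licensing revenue as reported in the article (USD millions).
revenue_2023 = 104.0
revenue_2027_projected = 250.0
years = 2027 - 2023  # four compounding periods

# Compound annual growth rate: (end / start) ** (1 / years) - 1.
# Assumes growth compounds evenly each year.
cagr = (revenue_2027_projected / revenue_2023) ** (1 / years) - 1

print(f"Implied CAGR: {cagr:.1%}")  # Implied CAGR: 24.5%
```

Under that assumption, the projection corresponds to roughly 24.5% growth per year for Shutterstock alone.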

Image source: CX Scoop

In recent years, monetizing content beyond streaming has been a major pain point for the industry. Compared with the internet startup era, AI offers the content sector more room for imagination and stronger revenue expectations.

03 High-Quality Data Remains Scarce

Not all content meets AI's needs.

Another notable point in the OpenAI-New York Times dispute discussed above concerns data quality. Just as refining petroleum requires both high-quality crude and advanced refining techniques, training AI requires both high-quality data and the means to process it.

OpenAI specifically emphasized that The New York Times' content made no significant contribution to its model training. Unlike Shutterstock, which receives tens of millions annually from OpenAI, text-based media such as The New York Times, whose value rests on timeliness, are not favorites in the AI era. AI demands deeper, more distinctive data.

With high-quality data so scarce, AI companies are increasingly focusing on "purification technologies" and "end-to-end applications."

On June 25, OpenAI acquired Rockset, a real-time analytics database company specializing in real-time data indexing and querying. OpenAI plans to integrate Rockset’s technology into its products to enhance the real-time utility of data.

Image source: DePIN Scan

By acquiring Rockset, OpenAI aims to enable AI to better utilize and access real-time data, supporting more complex applications such as real-time recommendation systems, dynamic data-driven chatbots, and real-time monitoring and alert systems.

Rockset acts as OpenAI's in-house "petrochemical division," turning ordinary data directly into the high-quality data that applications require.

04 Is Creator Data Ownership a Pipe Dream?

Much of the data on internet media platforms (Facebook, Reddit, etc.) is user-generated content (UGC). While many platforms charge AI companies hefty data fees, they quietly add clauses to their user agreements stating that "the platform has the right to use user data to train AI models."

Although user agreements mention AI model training rights, many creators remain unaware of exactly which models use their content, whether usage is paid, or how to claim rightful benefits.

During Meta's earnings call this February, Zuckerberg explicitly stated that images from Facebook and Instagram would be used to train its generative AI tools.

Reports indicate Tumblr has secretly reached content licensing agreements with OpenAI and Midjourney, though specific terms remain undisclosed.

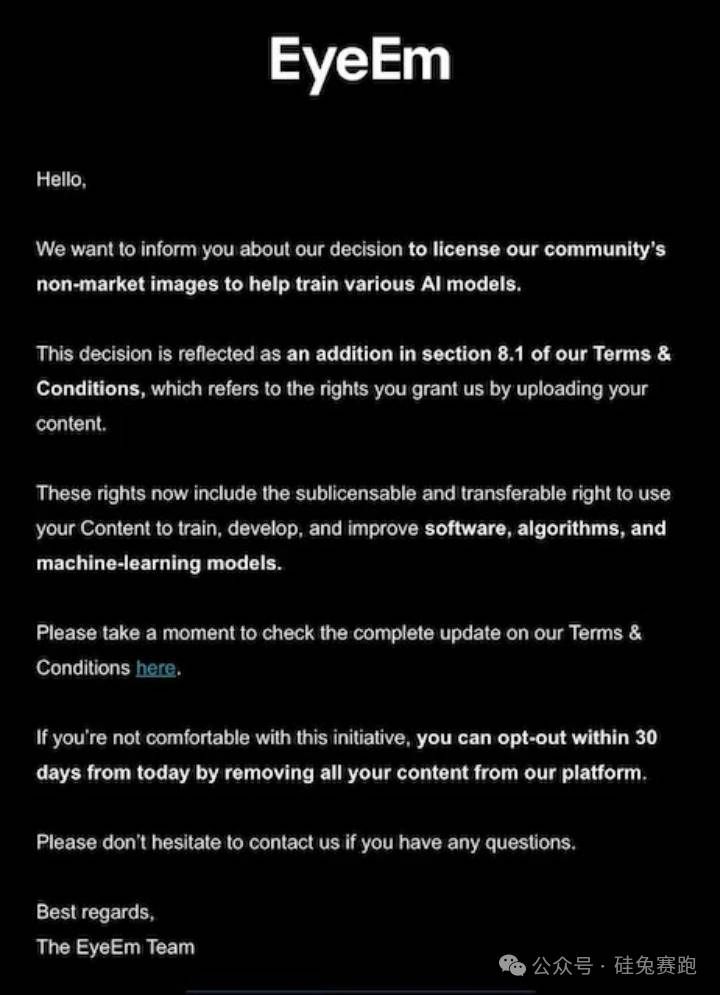

Photographers on the image platform EyeEm recently received a notice that their uploaded photos would be used for AI model training. According to the notice, users can opt out only by no longer using the product, and no compensation policy has been introduced so far. Freepik, EyeEm's parent company, told Reuters it had signed deals with two major tech firms to license most of its 200 million images at around three cents per image. CEO Joaquin Cuenca Abela said five similar deals were underway but declined to identify the buyers.
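To put the per-image price in perspective, here is a back-of-the-envelope upper bound. It assumes the full 200-million-image catalogue is licensed once at exactly three cents per image; the reported deals cover "most" images at "around" that price, so the real value of each deal is lower or uncertain.

```python
# Freepik figures as reported to Reuters.
catalogue_size = 200_000_000  # images in the catalogue
price_cents = 3               # "around three cents" per image

# Work in integer cents to avoid floating-point rounding,
# then convert to whole US dollars.
total_cents = catalogue_size * price_cents
upper_bound_usd = total_cents // 100

# Upper bound only: the deals cover "most", not all, of the images.
print(f"Upper bound for licensing the full catalogue once: ${upper_bound_usd:,}")
```

This prints $6,000,000, a ceiling that is shared across every photographer whose work sits in the catalogue.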

UGC-dominated content platforms such as Getty Images, Adobe, Photobucket, Flickr, and Reddit face similar issues: under immense pressure to monetize their data, platforms disregard users' ownership of their content and sell it in bulk to AI model companies.

The entire process occurs in secrecy, leaving creators powerless. Many creators might only suspect their work was sold to an AI firm when, one day, they see a model generating content resembling their own.

Web3 may offer a way to protect creators' data ownership and revenue rights. As AI companies soar to new highs on Wall Street, Web3 AI-related tokens have skyrocketed alongside them. Blockchain's decentralized and immutable nature gives it inherent advantages in safeguarding creators' rights.

Media content like images and videos saw widespread blockchain adoption during the 2021 bull market, and UGC from social platforms is now quietly going on-chain too. Meanwhile, many Web3 AI model platforms already incentivize ordinary users contributing to model training—both data owners and trainers are being rewarded.

The exponential development of AI models heightens the demand for data ownership. Creators should ask: Why is my work being sold to an AI company for a few cents without my consent? Why am I kept in the dark throughout the process and left with nothing?

Content platforms that exploit creators' work unsustainably cannot relieve AI companies' data anxiety. The prerequisite for a large supply of high-quality data is clear data ownership and a fair distribution of benefits among creators, platforms, and AI model companies.

Join the official TechFlow community to stay tuned:

Telegram: https://t.me/TechFlowDaily

X (Twitter): https://x.com/TechFlowPost

X (Twitter) EN: https://x.com/BlockFlow_News