Grass Founder Interview: Why You Should Participate in Decentralized AI Data Supply?

TechFlow Selected

Grass combines several distinct bullish narratives: DePIN + AI + Solana.

Author: AYLO

Translation: TechFlow

Grass is an incredibly exciting project, expected to launch its mainnet in Q1 or Q2. Grass already has over 500,000 users. When the Grass network goes live, it will become one of the largest crypto protocols on the market purely by user count, creating a new income stream for everyone with internet access.

Grass combines multiple bullish narratives: DePIN + AI + Solana. In this article, you'll hear from Grass founder 0xdrej, who shares many important insights. It's a long but highly worthwhile read, covering what Grass is, how it works, why it chose Solana, and more.

What attracted you to the crypto space?

0xdrej: I suppose I missed many opportunities when I first got into crypto, as I think many people did. I first heard about it in high school because a classmate was mining Bitcoin on his laptop. I haven't heard from him since, but I'm sure he's doing well now. I also used a Doge faucet back in 2014, when Doge was brand new, but I lost access to that account. Those were my two early encounters with crypto, but it wasn't until a few years ago, when I started getting into DeFi, that I really dove deep into R&D work.

I worked in finance for a while and became very familiar with how traditional financial systems operate. Seeing ordinary people rebuild that entire infrastructure on blockchain was extremely exciting. There are so many parallels between traditional finance and what happens on-chain, which is crazy, mainly because a blockchain is one massive immutable ledger. So a few years ago I began participating in some DeFi protocols.

What’s the elevator pitch for Grass? How do you explain it at a high level?

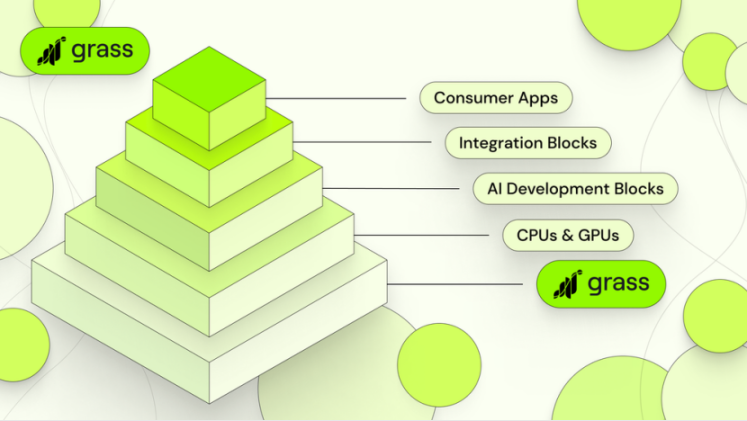

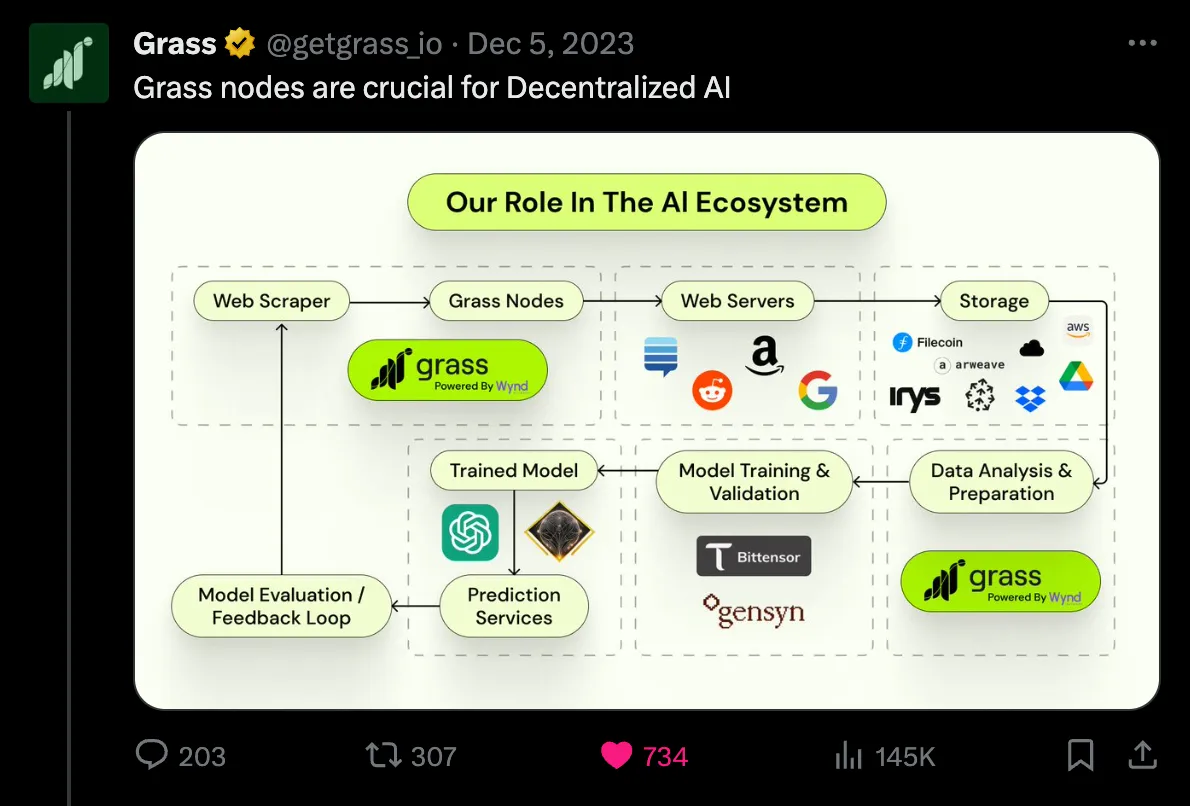

0xdrej: We like to call it the decentralized data provisioning layer for AI. That essentially means we have a network of over 500,000 browser extensions that crawl the public internet, capture website snapshots, and upload them to databases.

The idea is that because we can parallelize and distribute all this computing power, and because our nodes have residential views of the internet, we can create datasets that would be impossible to generate elsewhere. The residential view is crucial: websites usually show consumers what they want the public to see, which is not necessarily what data centers or traditional crawlers are served.

There are several comparisons—one being a decentralized oracle for AI, others being decentralized versions of regular crawling. But ultimately, it's a massive data protocol focused on public web data.

So by allowing anyone to participate in this network and integrating blockchain, you found a way to compete with existing solutions, right?

0xdrej: We experimented with several different business models. Clearly, when building such a protocol, you could just pay people a small amount for unused bandwidth—for example, a fixed rate per gigabyte—and then use that bandwidth to scrape large datasets, extract insights, and monetize those insights. You capture a bit of margin at each step: from scraping to dataset to insight.

Usually, different entities handle these steps, and the users providing the bandwidth—the ones powering everything—only see that tiny fixed rate per gigabyte, or often nothing at all, because they installed an SDK in a free app that silently cycles their bandwidth. We thought that was unfair.

We asked ourselves: how can we create a value pool mechanism to compensate users across the entire vertical? So if someone trains an AI model using data scraped by your Grass node, your node should be compensated—not just for raw data usage. Hopefully that makes sense. This is one of the major problems we aim to solve on-chain.
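The value-pool idea can be illustrated with a simple pro-rata split. This is a hypothetical sketch, not Grass's actual reward logic: it assumes a downstream payment (for example, from a model trainer licensing a dataset) arrives as an integer number of token base units, and that each dataset row records the ID of the node that scraped it. Each node then receives a share proportional to the rows it contributed.

```python
from collections import Counter


def split_value_pool(pool_units: int, row_sources: list[str]) -> dict[str, int]:
    """Split a payment across nodes in proportion to the rows each node scraped.

    pool_units:  total payment in the token's smallest unit (hypothetical).
    row_sources: ID of the node that scraped each dataset row (hypothetical).
    """
    counts = Counter(row_sources)
    total_rows = sum(counts.values())
    if total_rows == 0:
        return {}
    # Floor of each node's proportional share
    payouts = {node: pool_units * n // total_rows for node, n in counts.items()}
    # Integer division leaves fewer leftover units than there are nodes;
    # hand them out one at a time, largest contributors first.
    remainder = pool_units - sum(payouts.values())
    for node, _ in counts.most_common():
        if remainder == 0:
            break
        payouts[node] += 1
        remainder -= 1
    return payouts
```

For instance, a 1,000-unit payment over four rows, two of them from node `a`, pays `a` 500 units and nodes `b` and `c` 250 each. Working in integer base units guarantees the payouts always sum exactly to the pool.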

Another increasingly prominent issue is polluted datasets. This is a new problem in AI, though it's existed in e-commerce for years.

For instance, if you're scraping an e-commerce site like eBay and want to track daily prices for all their inventory, you might need to scrape around 30 million SKUs daily. eBay knows that if they block your IP, you'll just switch to another one. So instead, they set price traps: if they detect scraping activity aimed at undercutting them, they feed you fake prices. We experienced this early on with Grass and compared it against data center-based scraping.
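One way to catch such price traps is to cross-check what a data center crawler sees against what ordinary residential vantage points see. The following is a minimal sketch under assumed inputs (a map from SKU to the prices observed by several residential nodes, and a map from SKU to the price seen by a data center crawler); the 5% tolerance is an arbitrary illustrative threshold, not a value from the interview.

```python
from statistics import median


def flag_price_traps(
    residential: dict[str, list[float]],
    datacenter: dict[str, float],
    tolerance: float = 0.05,
) -> list[str]:
    """Return SKUs whose data-center price differs from the residential median
    by more than `tolerance` (as a fraction of the residential median)."""
    flagged = []
    for sku, dc_price in datacenter.items():
        views = residential.get(sku)
        if not views:
            continue  # no residential baseline for this SKU, so we cannot judge it
        baseline = median(views)
        if baseline > 0 and abs(dc_price - baseline) / baseline > tolerance:
            flagged.append(sku)
    return flagged
```

Using the median of several residential views keeps a single outlier node from skewing the baseline.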

These e-commerce tactics slowly migrated into ad tech, and since LLMs exploded over the past year and a half, they've also flowed into NLP (natural language processing) datasets.

So, if you’re a politician and know a particular dataset will train a model, you might contact the people managing it and ask them to insert, say, a thousand sentences favoring a specific candidate. Similarly, companies fund the insertion of fake reviews into datasets already scraped from the web.

Now, solving this is extremely difficult, right? Because as you probably know, LLM training datasets aren’t just GBs or TBs—they’re PBs, millions of GBs.

So expecting anyone training an LLM to verify whether a dataset truly came from the claimed source is unrealistic. For example, I could claim I scraped all of Medium, maybe 50 million articles, but there's no guarantee those are actually authentic Medium articles.

To address this, zk-TLS (zero-knowledge Transport Layer Security) offers a great solution. Honestly, this would only be feasible on a high-throughput blockchain.

The idea is that once we decentralize, nodes submit request proofs as they crawl the internet. They submit these proofs, and then our sequencer (currently centralized, but we plan to decentralize it) delegates a certain number of tokens to a smart contract.

That contract unlocks upon receiving approved requests. Now, you can link that request proof directly to the network response from the crawl, and then to the dataset itself. Suddenly, you have cryptographic proof showing these rows in the dataset actually came from those websites at a specific date and time.
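The linking step can be sketched with ordinary SHA-256 hashing. This is explicitly a simplified stand-in, not zk-TLS: hashes only show that a dataset row matches a recorded response and that the record matches a digest anchored elsewhere (for example, on-chain). The zk-TLS proof is what would additionally attest that the response really came from the named website over TLS. `CrawlRecord`, `proof_id`, and the other names here are hypothetical.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class CrawlRecord:
    url: str
    fetched_at: str     # ISO-8601 time of the crawl
    response_hash: str  # SHA-256 of the raw response body
    proof_id: str       # hypothetical ID of the request proof submitted on-chain


def make_record(url: str, fetched_at: str, body: bytes, proof_id: str) -> CrawlRecord:
    """Bind a crawled response body to its request metadata."""
    return CrawlRecord(url, fetched_at, sha256_hex(body), proof_id)


def record_digest(rec: CrawlRecord) -> str:
    """Canonical digest of a record (sorted-key JSON), e.g. for anchoring on-chain."""
    payload = json.dumps(asdict(rec), sort_keys=True).encode("utf-8")
    return sha256_hex(payload)


def verify_row(row_body: bytes, rec: CrawlRecord, anchored_digest: str) -> bool:
    """True if the row matches the record and the record matches the anchored digest."""
    return (
        sha256_hex(row_body) == rec.response_hash
        and record_digest(rec) == anchored_digest
    )
```

Tampering with either the row contents or any field of the record (such as the URL or timestamp) changes a hash, so `verify_row` returns `False`.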

This is powerful because such a mechanism doesn’t exist even in Web 2.0—and is only possible with blockchain.

Can you talk about what a "data war" is and how Grass participates in it?

0xdrej: As I hinted earlier, the first industry to start blocking data was actually e-commerce, because those were the most directly monetizable datasets at the time. As technology evolved and our understanding of language data advanced, this type of data became extremely valuable too; until recently, it didn't offer nearly as much value as it does now. So many websites only recently figured out how to monetize their language data, realized how powerful it was, and started locking down access to it.

For example, about six months ago, Elon Musk started rate-limiting Twitter for everyone because it was being scraped. Previously, Twitter didn’t really block web crawlers, but Elon Musk recognized the value of Twitter data and wanted to use it to train his own AI. That’s exactly what we predicted—and it’s unfolding as expected.

Another example is Reddit, which imposed various restrictions on its API. You may not know that two-thirds of the general web-crawl data used to train GPT was actually scraped from Reddit.

Reddit didn’t fully grasp how valuable their data was. It’s especially valuable due to how Reddit operates: someone asks a question, people answer, good answers rise to the top, bad ones sink. There’s a community manually curating data suitable for models.

We predicted a data war, and it is now unfolding: all these sites are trying to lock down their data. Some even give backdoor access to a few big tech firms, putting AI out of reach for open-source developers. That is a scary trend that introduces serious centralization risks.

Another good example is Medium. A few months ago, Medium’s CEO wrote a blog post about how web scrapers were feeding Medium articles into AI models. He discussed poisoning these datasets, blocking scrapers, and making content as inaccessible as possible. That’s why it’s hard to browse Medium without registering.

This prevents ordinary people from freely using the internet, as companies try to isolate their data.

Medium’s CEO also mentioned they allow Google access to their data. Regular users can’t properly browse their site, but Google can scrape it for free to train its AI models. He explained why: Google will prioritize Medium in search results in exchange for access. This shows how valuable owning a search engine is—you can effectively pay for language data through SEO prioritization. This is the next big wave of the data war.

All these companies are fighting over data, trying to lock it down, trying to get proper pricing for something humanity never priced before. Ordinary people become collateral damage, with access limited to only a few institutions—which is unfair.

What's ironic is that legacy companies now rely on SDKs embedded in free apps downloaded by millions to scrape sites like Reddit. Say you download the Roku TV screensaver or some free mobile game. The developers are paid to embed an SDK that lets big companies use your bandwidth to scrape websites from your residential IP, since their own IPs are blocked. We all agree to these terms and conditions, and they justify it with: "Hey, you get an ad-free experience." They claim that's your compensation. But we all know the ads are worth far less than the value of the data being used.

Our philosophy with Grass is that if a data war is happening, we may not stop it, but we should at least have a chance to participate. We should have the choice—to either sell weapons in the data war or create a massive open dataset for the internet that anyone can use to train their own AI models.

Is it easy for people to join Grass and benefit?

0xdrej: Yes. The network is currently in beta and is extremely simple to join. The hardware you need is already on your device. All you need is a referral code; then simply create an account or use the Saga mobile app, and you're good to go. The onboarding process is very smooth.

One issue we've recently faced is that user growth has been much faster than anticipated. So while we scale our infrastructure, users might encounter minor issues.

How big do you think this market is?

0xdrej: Right now, we're targeting two verticals—or three—each with different market sizes.

The first is the alternative data industry, which I believe is a $20 billion market. By alternative data, I mainly mean data used by hedge funds. For example, if you track prices and inventory at certain stores, you can estimate a company’s quarterly earnings. Hedge funds pay for such information.

The web scraping market itself, though still emerging, is currently worth billions and growing rapidly. The reason for this massive growth lies in the third market: artificial intelligence.

The AI data market size is now extremely hard to quantify. It may be growing exponentially every day—we find it difficult to put a value on it. But when you see people discussing selling data to AI datasets, you realize this is a huge opportunity.

So, does Grass become more valuable and competitive as user numbers grow?

0xdrej: Yes, that’s a great question. The larger the network, the more viable it becomes.

Let me give an example—Hivemapper, which I think is a cool product and concept. If you want to map the entire world but only have 10 cars driving, you’ll only get a small fraction of the map. It might be useful for some very specific niche applications, but not broadly applicable.

However, if you have millions of drivers mapping every road worldwide, you can build a much more comprehensive map. Then you can sell a superior product at a higher premium, and unit economics improve significantly for every participant.

Think about it—Grass is essentially mapping the entire internet.

Let me give another example unrelated to AI but part of a massive industry—flights, travel, and hotels. If you're a travel aggregator, you want the best price from every provider at every location. For example, flight prices from Berlin to Singapore might differ depending on whether viewed from New York or Berlin. Travel aggregators need to know prices from as many IP addresses as possible to offer the best deals. Now, if they only have IPs from Singapore, China, and the U.S., and someone wants to fly between two European cities, scraping accurate prices becomes very difficult. The network unlocks more use cases as it scales—that’s exciting.
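The travel example boils down to two questions: which vantage region shows the lowest fare, and which regions the network cannot see at all. A minimal sketch, assuming quotes are gathered as a map from the region of the fetching node's IP to the fare it was shown; the region codes and prices in the usage note are made up for illustration.

```python
def best_fare(quotes: dict[str, float]) -> tuple[str, float]:
    """Return (vantage_region, price) for the cheapest quote observed.

    quotes maps the region of the node that fetched the page
    (hypothetical region codes) to the fare that node was shown.
    """
    if not quotes:
        raise ValueError("no quotes collected")
    region = min(quotes, key=lambda r: quotes[r])
    return region, quotes[region]


def coverage_gaps(quotes: dict[str, float], wanted: list[str]) -> list[str]:
    """Regions we would like to price from but have no node in."""
    return sorted(set(wanted) - set(quotes))
```

With illustrative quotes of 820 from `US`, 760 from `DE`, and 790 from `SG`, `best_fare` returns `("DE", 760.0)`, and asking for coverage in `US`, `DE`, `FR`, and `IT` reports `FR` and `IT` as gaps. Every gap is a route where the cheapest fare may simply be invisible, which is why more nodes in more places improve the product.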

As the network grows, do you think user rewards will be diluted? Or will it find a balance as the network becomes more profitable?

0xdrej: I’ll try to avoid forward-looking statements here. The first variable is that the network is now very close to being usable, which is why during this beta phase, we chose to reward uptime. We don’t intend to reward online time indefinitely.

So right now is the only time you can earn points just for keeping your device online. In the future, nodes will only be compensated for actual bandwidth usage. Regarding balance, the travel example I mentioned earlier is perfect.

In that domain, you can never have enough nodes. For travel aggregators, the most competitive player is actually the one with the most nodes. So if you unlock that, they’ll simply push more content and throughput through the network.

What prompted your decision to build on Solana?

0xdrej: For what we're trying to do, a high-throughput chain is clearly essential. When the Grass network launches, it will be one of the largest crypto protocols by user count, which requires extremely low gas fees to incentivize users. Solana is currently the most gas-efficient and likely the fastest chain. Upcoming updates like Firedancer are very exciting, because parallel transactions are exactly what we need.

There are many DePIN protocols on Solana, and from a business development perspective, we're eager to collaborate with other DePIN projects. One particularly cool thing we noticed is that Solana has its own phone, and we believe adoption of Solana phones will only increase. This is something no other chain offers. For us, launching an app on a Solana phone is an obvious choice.

Have you drawn inspiration from other projects in the DePIN space, like Helium?

0xdrej: Absolutely. The whole idea behind DePin is really about reclaiming ownership. You’re not only overpaying for many things in life but also deprived of things that could otherwise earn you money.

The recent push toward decentralization in DePIN, and what Helium Mobile and the Saga phone have done, have opened everyone's eyes. It's like realizing: I possess so many resources, yet in many cases, they're being taken from me. Now, people see an alternative path where you have the choice to reject that reality. That's powerful, and I didn't want to miss out. So we've drawn a lot of inspiration from that.

Looking ahead, what will Grass look like in 2024? Can you share any insights into your roadmap?

0xdrej: We plan to fully launch the network at some point in 2024—I don’t think that will surprise anyone.

Beyond that, our roadmap includes implementing request proofs using zk-TLS to bind network requests to datasets, possibly in the second half of the year. We also plan to decentralize many of our sequencers. Implementation details are still TBD, but we have many exciting ideas that will make it easier for people to run Grass infrastructure.

We’re also thinking about hardware. Right now, using Grass costs nothing—we like that and intend to keep it that way forever. But suppose you don’t want your device running 24/7, or for some reason don’t want to run the node on your personal device. We want to give people the option to simply buy a box, connect it to their internet, and let it run in the background. Beyond personal preference, an exciting aspect of hardware is that we can embed AI agents inside and allow them to run autonomously. They can perform extensive web scraping and crawling tasks for you. All you need to do is sit back and let those AI agents do the work—like owning a self-driving car that maps roads.

If you want to contribute more to the network, we want a device available to enable that.

We’re developing small features like gamification upgrades for the dashboard. We also want to add special Easter egg features specifically for Saga users—we’re currently exploring ideas. Beyond that, we’re working on releases for other devices. Now, we’re not just focusing on browser extensions—we’re also making it downloadable for those who prefer not to install one. Many people dislike installing extensions, and that’s totally fine. So we plan to expand to other platforms like Android, iOS, Raspberry Pi, Linux, etc.

Overall, we want to give people more choices to easily join the Grass network.

How do you envision Grass’s governance structure? Will it be a fully community-owned decentralized network?

0xdrej: We have several stages toward decentralization. The first is the attestation mechanism, where we can reward user contributions on-chain.

The second stage involves decentralizing our sequencers and parts of the crawl approval process. Governance plays a key role here. We essentially want to become a massive data supply network where community members can say, “Hey, I’m training this AI model, I need these types of datasets, and I’d like to propose shifting crawl efforts toward gathering this data.” Then sequencers can act as validators to ensure correct data is crawled.

One of the few governance features we want to include is protecting the network. In a decentralized network, when done right, market efficiency usually emerges over time. Many apps monetize unused CPU, GPU, etc., typically paying out in fiat. They might initially offer decent rates to attract users, then gradually lower payouts until returns become negligible.

With a governance structure, you can protect the community because those contributing to the network actually own part of it. That’s the state we want to reach—where everyone running a node in the Grass network owns a piece of the network itself.

Do you theoretically have sufficient scale to launch the network now? Or do you still want to increase node count before launch?

0xdrej: In terms of total node count, we’re very close to our target. However, in certain geographic locations, we’re actually not that close. Some regions have demand for specific content types that exceeds local supply. We want to ensure we can meet all demand—that’s our goal for network launch.

As you know, we're in the testing phase, so we're doing our best to ensure scalability. Because growth has been faster than expected, users have run into some issues accessing the network and viewing dashboards. We plan to resolve these before the full network launch, which is why we're still in testing. So regarding node numbers, we're weighing many factors, but overall we're quite satisfied with where we stand.
