From a product-market fit perspective, which other projects in this round of crypto AI have potential?


The next major opportunity in crypto and AI is not another speculative token, but infrastructure that can genuinely advance AI development.

Author: Evan ⨀

Translation: TechFlow

The intersection of crypto and AI is still in a very early stage. Although the market has seen countless intelligent agents and tokens, most projects seem to be mere numbers games, with teams trying to take as many "shots on goal" as possible.

While AI represents the technological revolution of our generation, its integration with crypto is largely viewed as an early liquidity tool to access the AI market.

As a result, we've already witnessed multiple cycles in this space, where most narratives have experienced rollercoaster-like rises and falls.

How do we break the hype cycle?

So where does the next big opportunity at the intersection of crypto and AI come from? What kind of applications or infrastructure will actually create value and achieve product-market fit?

This article attempts to explore the key areas of interest through the following framework:

- How can AI help crypto?
- How can crypto empower AI?

I am especially excited about the second point, opportunities in decentralized AI, and will introduce some promising projects in that area.

1. How Can AI Empower Crypto?

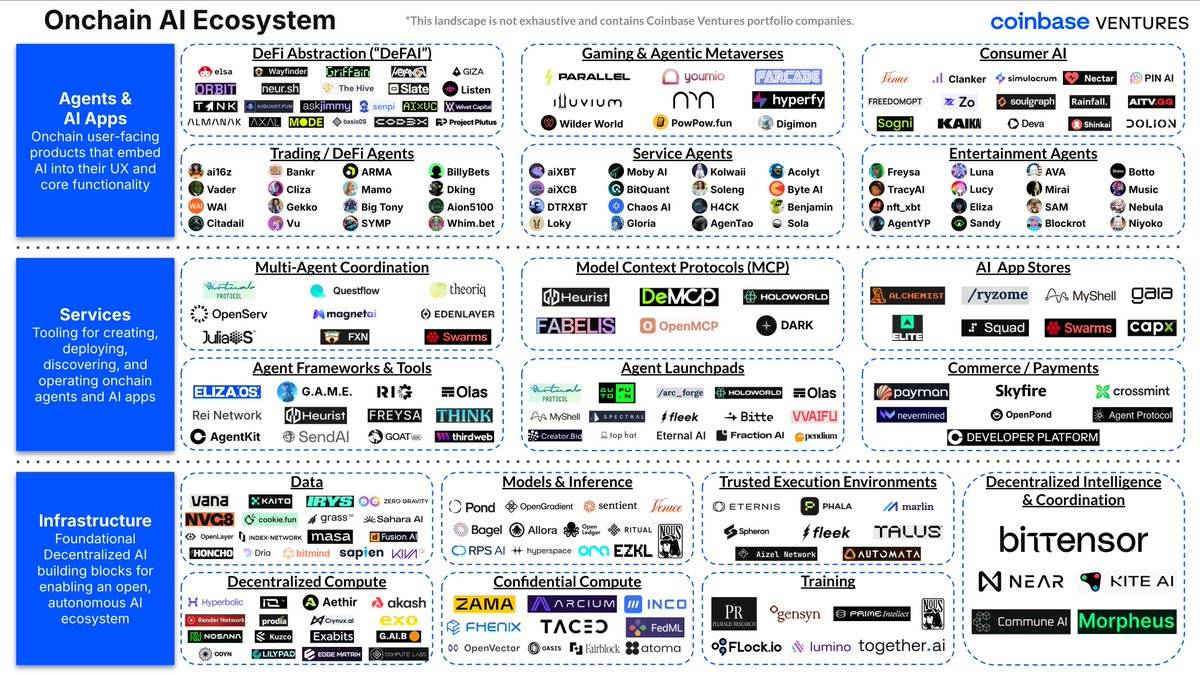

Below is a more comprehensive ecosystem map shared by @cbventures:

https://x.com/cbventures/status/1923401975766355982/photo/1

While there are many verticals such as consumer AI, agent frameworks, and launch platforms, AI has already impacted the crypto experience in three main ways:

1. Developer Tools

As in Web2, AI is accelerating crypto development through no-code and low-code ("vibe-coding") platforms. Many of these tools pursue the same goals as their Web2 counterparts, such as Lovable.dev.

Teams like @poofnew and @tryoharaAI are helping non-technical developers quickly launch and iterate without needing deep smart contract knowledge. This not only shortens time-to-market for crypto projects but also lowers the barrier for market-savvy and creative individuals who lack technical backgrounds.

Other parts of the developer experience are also being optimized, such as smart contract testing and security, by teams like @AIWayfinder and @octane_security.

2. User Experience

Despite major progress in onboarding and wallet experiences (e.g., Bridge, Sphere Pay, Turnkey, Privy), the core crypto user experience (UX) hasn't undergone a qualitative change. Users still need to manually navigate complex blockchain explorers and execute multi-step transactions.

AI agents are changing this by becoming a new interaction layer:

Search and Discovery: Teams are racing to build tools akin to a "blockchain version of Perplexity." These chat-based natural language interfaces allow users to easily find market alpha, understand smart contracts, and analyze on-chain behavior without dealing with raw transaction data.

A larger opportunity lies in agents becoming gateways for users to discover new projects, yield opportunities, and tokens. Just as Kaito helps projects gain visibility on launch platforms, agents can understand user behavior and proactively surface relevant content. This could create sustainable business models, potentially monetized via revenue sharing or affiliate fees.

Intent-Based Actions: Instead of clicking through multiple interfaces, users simply express their intent (e.g., "swap $1000 worth of ETH into the highest-yielding stablecoin position"), and the agent automatically executes complex multi-step transactions.

Error Prevention: AI can also prevent common mistakes, such as entering incorrect transaction amounts, buying scam tokens, or approving malicious contracts.
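To make the intent and error-prevention ideas concrete, here is a minimal sketch of how an agent might turn the example intent into a checked, multi-step plan. Everything in it is hypothetical: the pool names, the yield figures, the scam list, and the `plan_intent` function are illustrative assumptions, not any real protocol's or agent's API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Step:
    action: str        # "swap" or "deposit"
    target: str        # hypothetical route or pool name
    amount_usd: float


# Hypothetical data an agent might hold; not taken from any real protocol.
SCAM_TARGETS = {"FakeUSD-Pool"}
STABLE_YIELDS = {"PoolA-USDC": 0.051, "PoolB-DAI": 0.047, "FakeUSD-Pool": 0.90}


def plan_intent(amount_usd: float, balance_usd: float) -> List[Step]:
    """Plan 'swap $X of ETH into the highest-yielding stablecoin position'."""
    # Error prevention: reject amounts that are non-positive or exceed the balance.
    if amount_usd <= 0 or amount_usd > balance_usd:
        raise ValueError("amount must be positive and within the wallet balance")
    # Error prevention: drop flagged pools before ranking by yield.
    safe = {pool: apy for pool, apy in STABLE_YIELDS.items() if pool not in SCAM_TARGETS}
    best_pool = max(safe, key=safe.get)
    stablecoin = best_pool.split("-")[1]
    return [
        Step("swap", f"ETH->{stablecoin}", amount_usd),
        Step("deposit", best_pool, amount_usd),
    ]


plan = plan_intent(1000, balance_usd=5000)
```

The point of the sketch is the ordering: validation and scam filtering happen before any step is produced, so the user never sees a plan that routes into a flagged pool.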

More about how Hey Anon enables DeFAI automation:

3. Trading Tools and DeFi Automation

Currently, many teams are racing to develop agents that help users get smarter trading signals, trade on their behalf, or optimize and manage strategies.

Yield Optimization

Agents can automatically shift funds between lending protocols, DEXs, and farming opportunities based on interest rate changes and risk profiles.

Trade Execution

AI can execute superior strategies by processing market data faster, managing emotions, and following predefined frameworks more consistently than humans.

Portfolio Management

Agents can rebalance portfolios, manage exposure, and capture arbitrage opportunities across chains and protocols.
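As a rough illustration of the yield-optimization case, the sketch below picks where to hold funds given a user's risk limit. The `Venue` fields, the 0-to-1 risk score, and the switching-cost threshold are all assumptions made for the example; a real agent would need live rates and a far richer risk model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Venue:
    name: str
    apy: float         # annualized yield as a fraction
    risk_score: float  # 0 (safest) to 1 (riskiest); this scale is an assumption


def choose_venue(venues: List[Venue], current: Venue, max_risk: float,
                 switch_cost_apy: float = 0.005) -> Venue:
    """Return the venue the funds should sit in after this check."""
    eligible = [v for v in venues if v.risk_score <= max_risk]
    if not eligible:
        return current  # nothing fits the risk profile, so do nothing
    best = max(eligible, key=lambda v: v.apy)
    # Move if the current venue breaches the risk limit, or if the extra
    # yield outweighs the assumed cost of moving (gas, slippage).
    if current.risk_score > max_risk or best.apy - current.apy > switch_cost_apy:
        return best
    return current
```

The switching-cost threshold is the design choice worth noting: without it, an agent would churn funds on every small rate change and give the gains back in fees.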

If an agent can truly and consistently manage funds better than humans, it would represent a quantum leap for existing DeFi AI agents. Current DeFi AI mainly helps users execute predefined intents; this would move toward fully autonomous fund management. However, user adoption for such a shift resembles the rollout of electric vehicles—there remains a significant trust gap before large-scale validation. But if successful, this technology could capture the largest value in the space.

Who Are the Winners in This Space?

While some standalone apps may have distribution advantages, it's more likely that existing protocols will directly integrate AI:

- DEXs: Enable smarter routing and scam protection.
- Lending Protocols: Automatically optimize yields based on user risk profiles and repay loans when health factors drop below thresholds, reducing liquidation risks.
- Wallets: Evolve into AI assistants that understand user intent.
- Trading Platforms: Offer AI-assisted tools to help users stick to their strategies.
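The lending example can be sketched with a small calculation. It assumes the health-factor definition commonly used by lending protocols (collateral value times liquidation threshold, divided by debt); the function names and the numbers are hypothetical.

```python
def health_factor(collateral_usd: float, liq_threshold: float, debt_usd: float) -> float:
    """Health factor under the assumed definition; below 1.0 means liquidatable."""
    return collateral_usd * liq_threshold / debt_usd


def repay_to_target(collateral_usd: float, liq_threshold: float,
                    debt_usd: float, target_hf: float) -> float:
    """Debt to repay so the health factor rises to target_hf (0 if already there)."""
    max_debt_at_target = collateral_usd * liq_threshold / target_hf
    return max(0.0, debt_usd - max_debt_at_target)


# Hypothetical position: $10,000 collateral, 80% threshold, $7,500 debt.
hf = health_factor(10_000, 0.8, 7_500)
repay = repay_to_target(10_000, 0.8, 7_500, target_hf=1.25)
```

For this position the health factor is about 1.07, and repaying $1,100 of debt would bring it back to the 1.25 target. An agent that monitors the ratio and repays automatically is doing no more than this arithmetic on a schedule.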

Endgame Vision

Interfaces in crypto will evolve into conversational AI systems that understand users' financial goals and execute them more efficiently than users themselves.

2. Crypto Empowering AI: The Future of Decentralized AI

In my view, crypto’s potential to empower AI far exceeds AI’s impact on crypto. Teams working on decentralized AI are tackling some of the most fundamental and practical questions about AI’s future:

- Can cutting-edge models be developed without relying on massive capital expenditures from centralized tech giants?
- Can global distributed computing resources be coordinated to train models or generate data efficiently?
- What happens if humanity’s most powerful technology is controlled by just a few companies?

I highly recommend reading @yb_effect's thread on decentralized AI (DeAI) for deeper insights into this space.

This only scratches the surface, but the next wave at the crypto-AI intersection may come from research-driven academic AI teams rooted in open-source communities. These teams deeply understand both the practical implications and the philosophical value of decentralized AI, and they believe it is the best path to scaling AI.

What Problems Does AI Face Today?

In 2017, the landmark paper "Attention Is All You Need" introduced the Transformer architecture, solving a decades-old challenge in deep learning. Since ChatGPT popularized it in late 2022, Transformers have become the foundation for most large language models (LLMs), sparking a compute arms race.

Since then, compute requirements for AI training have grown fourfold annually. This has led to extreme centralization in AI development, as pre-training depends on high-performance GPUs available only to the largest tech giants.

- From an ideological standpoint, centralized AI is problematic because humanity’s most powerful tools could be controlled or withdrawn by their funders at any time. Therefore, even if open-source teams cannot match the pace of centralized labs, challenging this status quo remains crucial.

Crypto provides the economic coordination needed to build open models. But before reaching that goal, we must answer: beyond idealism, what real problems can decentralized AI solve? Why is collaboration so important?

- Luckily, teams in this space are highly pragmatic. Open source embodies the core idea of scaling technology: through small-scale collaboration, each team optimizes its local maximum and incrementally builds upon others, ultimately reaching a global maximum faster than centralized approaches limited by scale and institutional inertia.

- Meanwhile, especially in AI, open source is also essential for creating intelligence that is not tied to a single moral stance, but can adapt to the different roles and personalities individuals assign to it.

In practice, open source may unlock innovation to address very real infrastructure constraints.

The Scarcity of Computing Resources

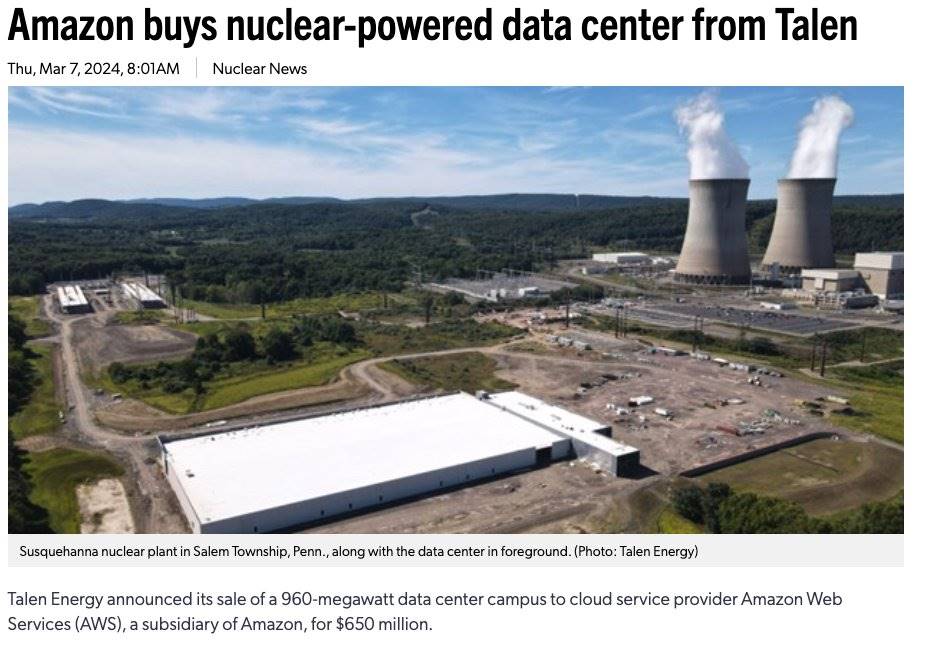

Training AI models already demands massive energy infrastructure. Several projects are now building data centers ranging from 1 to 5 gigawatts. However, continued scaling of frontier models will require energy beyond what single data centers can provide—levels comparable to entire cities. The issue isn’t just energy output but also physical limits of individual data centers.

Even beyond pre-training frontier models, inference costs are rising significantly due to new reasoning models and breakthroughs like DeepSeek. As the @fortytwonetwork team notes:

"Unlike traditional LLMs, reasoning models prioritize generating smarter responses by allocating more processing time. However, this shift comes with a trade-off: the same compute resources handle fewer requests. To achieve these meaningful improvements, models need more 'thinking' time, further exacerbating compute scarcity."

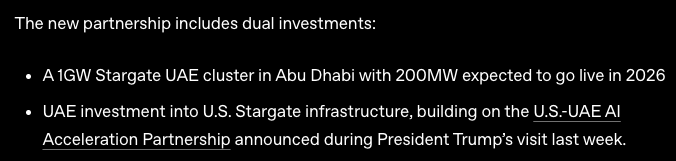

Compute scarcity is already evident. For example, OpenAI caps API calls at 10,000 per minute, effectively limiting AI applications to serving around 3,000 users simultaneously. Even ambitious projects like Stargate—a $500 billion AI infrastructure plan recently announced by President Trump—may only temporarily alleviate this issue.
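The two figures above imply a per-user request budget, which a few lines of arithmetic make explicit. The interpretation (every concurrent user drawing evenly on the same cap) is an assumption on my part, not something the rate limit itself states.

```python
def requests_per_user_per_minute(cap_per_minute: int, concurrent_users: int) -> float:
    """Average request budget per user if the cap is shared evenly (assumed)."""
    return cap_per_minute / concurrent_users


per_user = requests_per_user_per_minute(10_000, 3_000)
seconds_between_requests = 60 / per_user
```

Under that assumption each user gets a little over three requests per minute, or roughly one every 18 seconds, which is tight for agent-style applications that chain several model calls per user action.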

According to Jevons’ Paradox, efficiency gains often lead to increased resource consumption due to rising demand. As AI models become more powerful and efficient, compute needs could surge due to new use cases and broader adoption.

So where does crypto come in? How can blockchain meaningfully assist AI research and development?

Crypto offers a fundamentally different approach: globally distributed, decentralized training with economic coordination. Instead of building new data centers, this approach draws on the millions of existing GPUs (gaming rigs, crypto mining hardware, enterprise servers) that sit idle most of the time. Similarly, blockchain can enable decentralized inference by utilizing idle compute on consumer devices.

A major challenge in distributed training is latency. Independently of the crypto layer, teams like Prime Intellect and Nous are developing techniques that reduce how much GPUs need to communicate:

- DiLoCo (Prime Intellect): Reduces communication needs by 500x, enabling intercontinental training and achieving 90–95% compute utilization.
- DisTrO/DeMo (Nous Research): Their optimizer family reduces communication needs by 857x using discrete cosine transform compression.
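The general idea behind low-communication training of this kind can be sketched in a few lines: each worker takes many local optimizer steps before anything is synchronized, and only the averaged parameter change is exchanged. The toy below uses a scalar model, a quadratic loss, and plain SGD for both the inner and outer steps; those are simplifying assumptions for illustration, not the optimizers or code any of these teams actually use.

```python
from typing import List, Tuple


def local_steps(theta: float, shard: List[float], lr: float, h: int) -> float:
    """Run h local SGD steps on mean((theta - x)^2) with no communication."""
    for _ in range(h):
        grad = sum(2 * (theta - x) for x in shard) / len(shard)
        theta -= lr * grad
    return theta


def train(shards: List[List[float]], h: int, rounds: int,
          inner_lr: float = 0.1, outer_lr: float = 1.0) -> Tuple[float, int]:
    theta = 0.0  # shared scalar "model"
    syncs = 0
    for _ in range(rounds):
        # Every worker starts from the shared parameters and trains locally.
        local = [local_steps(theta, shard, inner_lr, h) for shard in shards]
        # Pseudo-gradient: average displacement of the workers from theta.
        pseudo_grad = sum(theta - t for t in local) / len(local)
        theta -= outer_lr * pseudo_grad  # outer step, the only synchronization
        syncs += 1
    return theta, syncs


shards = [[1.0, 2.0], [3.0, 4.0]]  # each worker sees different data
theta, syncs = train(shards, h=50, rounds=20)
```

Each worker here performs 1,000 gradient steps but synchronizes only 20 times. Synchronizing once every `h` local steps cuts the number of communication rounds by a factor of `h` compared with synchronizing after every step, which is the lever that makes training over slow intercontinental links plausible.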

However, traditional coordination mechanisms cannot solve the trust challenges inherent in decentralized AI training. This is where blockchain's native properties may find true product-market fit (PMF):

- Verification and Fault Tolerance: Decentralized training faces risks of participants submitting malicious or incorrect computations. Crypto provides cryptographic verification schemes (e.g., Prime Intellect’s TOPLOC) and economic penalties to deter bad actors.
- Permissionless Participation: Unlike traditional distributed computing projects requiring approval, crypto allows truly permissionless contribution. Anyone with idle compute can join instantly and start earning, maximizing the available resource pool.
- Aligned Economic Incentives: Blockchain-based incentives align individual GPU owners’ interests with collective training goals, making previously idle compute economically productive.
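The combination of verification and economic penalties can be illustrated with a toy coordinator that spot-checks submitted work and slashes a worker's stake when a check fails. This is a generic sketch, not TOPLOC or any project's actual scheme; the `Coordinator` class, the check rate, and the slashing rule are all assumptions.

```python
import random


def reference_compute(x: int) -> int:
    """Stand-in for a real training or inference task."""
    return x * x


class Coordinator:
    def __init__(self, check_rate: float, slash_fraction: float,
                 reward: float, seed: int = 0):
        self.check_rate = check_rate          # probability a result is re-checked
        self.slash_fraction = slash_fraction  # share of stake lost when caught
        self.reward = reward
        self.rng = random.Random(seed)
        self.stakes = {}
        self.balances = {}

    def join(self, worker: str, stake: float) -> None:
        # Permissionless: anyone who posts a stake can participate.
        self.stakes[worker] = stake
        self.balances[worker] = 0.0

    def submit(self, worker: str, x: int, claimed: int) -> bool:
        """Pay the reward unless a random spot check catches a wrong result."""
        checked = self.rng.random() < self.check_rate
        if checked and claimed != reference_compute(x):
            self.stakes[worker] *= 1 - self.slash_fraction
            return False
        self.balances[worker] += self.reward
        return True
```

With check probability p, a false submission earns the reward with probability 1 - p and loses the slashed stake with probability p, so cheating has negative expected value whenever p times the slashed amount exceeds (1 - p) times the reward. That inequality is what lets a network verify only a fraction of the work.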

Given this, how are teams in the decentralized AI stack addressing AI scalability using blockchain? What are the proof points?

- Prime Intellect: Distributed and Decentralized Training
  - DiLoCo: Reduces communication needs by 500x, enabling intercontinental training.
  - PCCL: Handles dynamic node joining and failures, and achieves 45 Gbit/s cross-continental communication speed.
  - Currently training a 32-billion-parameter model across globally distributed worker nodes.
  - Has achieved 90–95% compute utilization in production environments.
  - Results: Successfully trained INTELLECT-1 (10B parameters) and INTELLECT-2 (32B parameters), achieving large-scale model training across continents.
- Nous Research: Decentralized Training and Communication Optimization
  - DisTrO/DeMo: Achieves 857x reduction in communication needs via Discrete Cosine Transform (DCT) techniques.
  - Psyche Network: Uses blockchain coordination to provide fault tolerance and incentives to mobilize compute resources.
  - Completed one of the largest pre-training runs conducted over the internet, training Consilience (40B parameters).
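To show why a discrete cosine transform helps with communication, the sketch below compresses a vector by keeping only its largest DCT coefficients and reconstructs it on the other side. It is a generic illustration of DCT-based top-k compression in pure Python, not Nous Research's DisTrO/DeMo implementation; the function names and the `keep` parameter are assumptions.

```python
import math
from typing import List, Tuple


def _scale(k: int, n: int) -> float:
    return math.sqrt((1 if k == 0 else 2) / n)


def dct(x: List[float]) -> List[float]:
    """Orthonormal DCT-II."""
    n = len(x)
    return [_scale(k, n) * sum(x[i] * math.cos(math.pi / n * (i + 0.5) * k)
                               for i in range(n))
            for k in range(n)]


def idct(c: List[float]) -> List[float]:
    """Inverse of the orthonormal DCT-II above."""
    n = len(c)
    return [sum(_scale(k, n) * c[k] * math.cos(math.pi / n * (i + 0.5) * k)
                for k in range(n))
            for i in range(n)]


def compress(update: List[float], keep: int) -> Tuple[int, List[Tuple[int, float]]]:
    """Keep only the `keep` largest-magnitude DCT coefficients."""
    coeffs = dct(update)
    top = sorted(range(len(coeffs)), key=lambda k: abs(coeffs[k]), reverse=True)[:keep]
    return len(update), [(k, coeffs[k]) for k in sorted(top)]


def decompress(n: int, sparse: List[Tuple[int, float]]) -> List[float]:
    coeffs = [0.0] * n
    for k, value in sparse:
        coeffs[k] = value
    return idct(coeffs)
```

A worker would send the `(index, value)` pairs instead of the full vector. When the update is smooth, most of its energy sits in a few coefficients, so a small `keep` preserves most of the signal while transmitting a fraction of the data.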

Pluralis: Protocol Learning and Model Parallelism

Pluralis takes an approach it calls Protocol Learning, which differs from traditional open-source AI. Unlike the data-parallel methods used by other decentralized training projects (e.g., Prime Intellect and Nous), Pluralis argues that data parallelism has economic flaws: simply pooling compute isn't enough to train cutting-edge models. For instance, Llama3 (400B parameters) requires 16,000 80GB H100 GPUs for training.

The core idea behind Protocol Learning is to introduce a real value-capture mechanism for model trainers, so that the vast compute needed for large-scale training can be pooled. This is done by distributing partial ownership of the model in proportion to training contributions. In this architecture, neural networks are trained collaboratively, but no single participant can ever extract the full weight set; such models are called Protocol Models. The setup is designed so that the computational cost for any participant to extract the full model weights exceeds the cost of retraining the model from scratch.

Here’s how Protocol Learning works in practice:

- Model Sharding: Each participant holds only shards of the model, not the complete weights.
- Collaborative Training: Training requires passing activations between participants, but no one sees the full model.
- Inference Credentials: Inference requires credentials distributed according to training contributions. Contributors earn revenue from actual model usage.
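The three steps above can be sketched as a toy pipeline in which each participant privately holds one layer, only activations cross participant boundaries, and usage revenue is split by contribution. This is an illustration of the structure only: the `Participant` class and the contribution metric are assumptions, and the toy does nothing to enforce the extraction-cost guarantee that Protocol Learning depends on.

```python
from typing import Dict, List


class Participant:
    def __init__(self, name: str, weight: float, bias: float, contribution: float):
        self.name = name
        self._weight = weight              # private shard, never shared
        self._bias = bias
        self.contribution = contribution   # e.g. compute supplied (assumed metric)

    def forward(self, activation: float) -> float:
        # One-unit ReLU layer: only the activation leaves this participant.
        return max(0.0, self._weight * activation + self._bias)


def run_inference(participants: List[Participant], x: float) -> float:
    """Pass activations through each participant's shard in order."""
    for p in participants:
        x = p.forward(x)
    return x


def split_revenue(participants: List[Participant], revenue: float) -> Dict[str, float]:
    """Distribute usage revenue in proportion to training contribution."""
    total = sum(p.contribution for p in participants)
    return {p.name: revenue * p.contribution / total for p in participants}


nodes = [Participant("a", 2.0, 1.0, contribution=30),
         Participant("b", 0.5, -1.0, contribution=10)]
output = run_inference(nodes, 3.0)
payouts = split_revenue(nodes, 100.0)
```

Here the inference call needs both participants to cooperate, and a $100 fee would be split 75/25 in line with their contributions.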

The significance of Protocol Learning lies in transforming models into economic assets or commodities that can be fully financialized. By doing so, it aims to achieve the computational scale required for truly competitive training tasks. Pluralis combines the sustainability of closed-source development (e.g., steady revenue from closed model releases) with the benefits of open collaboration, opening new possibilities for decentralized AI.

Fortytwo: Decentralized Swarm Inference

- While other teams focus on distributed and decentralized training, Fortytwo focuses on distributed inference, using swarm intelligence to address the growing compute scarcity at inference time.
- To harness idle compute on consumer hardware (e.g., an M2 MacBook Air), Fortytwo connects specialized small language models (SLMs) into a network.
- Nodes in the network evaluate each other’s contributions, and this peer evaluation amplifies the network's effectiveness. Final responses are based on the most valuable contributions within the network, supporting inference efficiency.
- Interestingly, Fortytwo's swarm inference approach can complement distributed/decentralized training projects. Imagine a future where the SLMs running on Fortytwo nodes are precisely the models trained via Prime Intellect, Nous, or Pluralis. These distributed training efforts could collectively produce open-source foundation models, which are then fine-tuned for specific domains and finally coordinated via Fortytwo’s network for inference.
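A minimal sketch of peer-evaluated swarm inference might look like the following. The article does not describe Fortytwo's actual ranking mechanism, so the rule used here (each node scores its peers, self-scores are ignored, and the highest mean peer score wins) is an assumption chosen for simplicity.

```python
from typing import Dict, Tuple


def swarm_answer(candidates: Dict[str, str],
                 scores: Dict[str, Dict[str, float]]) -> Tuple[str, Dict[str, float]]:
    """Pick the answer with the highest mean peer score.

    candidates maps node -> proposed answer.
    scores maps judging node -> {judged node: score}.
    """
    means = {}
    for node in candidates:
        # Self-evaluations are discarded so a node cannot vote for itself.
        peer_scores = [given[node] for judge, given in scores.items()
                       if judge != node and node in given]
        means[node] = sum(peer_scores) / len(peer_scores) if peer_scores else 0.0
    winner = max(means, key=means.get)
    return candidates[winner], means


candidates = {"n1": "answer A", "n2": "answer B", "n3": "answer C"}
scores = {
    "n1": {"n1": 1.0, "n2": 0.6, "n3": 0.2},  # n1's self-score is ignored
    "n2": {"n1": 0.4, "n3": 0.3},
    "n3": {"n1": 0.5, "n2": 0.9},
}
best, means = swarm_answer(candidates, scores)
```

In this example node n2's answer is selected, because its peers rate it highest even though n1 gave itself a perfect score.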

Conclusion

The next big opportunity at the intersection of crypto and AI isn’t another speculative token, but infrastructure that can genuinely advance AI. Today, centralized AI faces scalability bottlenecks—precisely where crypto excels in global resource coordination and aligned economic incentives.

Decentralized AI opens a parallel universe where, by combining experimental freedom with real-world resources, we can explore more of AI’s untapped technological frontiers.
