After Vision Pro, Apple quietly ponders smart glasses

AR+AI seems to have given Apple a new idea for developing wearable devices.

By Mu Mu

Apple’s newly launched Vision Pro is making waves, but the company is already rumored to be developing another pair of smart glasses. Unlike the Vision Pro headset, this new model emphasizes being lightweight, designed for comfortable all-day wear.

According to Bloomberg reporter Mark Gurman, engineers in Apple's Cupertino lab have recently been discussing a smart glasses project resembling Meta and Ray-Ban's collaborative smart glasses. These glasses would offer audio capabilities and use AI along with cameras to recognize objects in the outside world.

Gurman believes wearable devices could help Apple attract new customers and drive growth. After Vision Pro, AR+AI appears to be opening a new path for Apple in wearable technology.

Apple’s Potential Smart Glasses Likely Focus on AR Technology

Vision Pro has received sharply divided reviews since its release.

Users praise its near-4K display quality, powerful interaction capabilities, and best-in-class MR (mixed reality) experience. However, they also note that Vision Pro is large and heavy, making it uncomfortable for extended use. The weight concentrated on the face places significant strain on the neck.

Its excessive weight and forward center of gravity have become major drawbacks. Amid user feedback on Vision Pro, news emerged that Apple is considering developing a lightweight smart glasses alternative.

Unlike Vision Pro, the rumored smart glasses prioritize “lightness” and resemble regular eyewear in appearance. Functionally, they may be similar to Meta’s Ray-Ban smart glasses or Amazon’s Echo Frames. Apple’s version would provide audio playback, eliminating the need for AirPods, and use AI and cameras to identify objects in the surrounding environment.

Based on Mark Gurman’s reporting at Bloomberg, Apple’s envisioned smart glasses align more closely with augmented reality (AR) technology.

As of now, these smart glasses remain in the "technology exploration" phase within Apple's hardware engineering division. Bloomberg reports that Apple’s overall sales declined last year, and even its once-booming wearable business has stagnated—prompting the company to take action.

The report notes that after an initial failure, recent versions of Meta’s Ray-Ban smart glasses have sold better than expected. Consumers are increasingly comfortable using smart glasses to shoot videos, play music, and issue voice commands to chatbots.

Meta has already begun integrating AI features into its smart glasses hardware, and Apple may soon follow suit. Supporting evidence includes multiple international patents Apple has filed since 2023 related to smart glasses. For example, one patent titled "Eyewear System" describes a mechanism ensuring that future smart glasses sit properly on the user’s nose and stay securely in place, enabling accurate eye tracking and content delivery.

Tim Cook once mentioned in an interview with GQ: "You can overlay digital elements onto the physical world—this technology can greatly enhance human communication and connection. It allows people to do things they couldn’t do before." That interview revealed Cook’s personal views on AR—he doesn’t want people disconnected from reality; instead, he and Apple aim to enhance learning, interaction, and creativity through augmented reality.

Vision Pro embodies Cook’s vision of blending digital and physical worlds. But Vision Pro won’t be the only—or final—device representing this philosophy. Just as the iPhone was followed by the iPad, Apple will continue creating new hardware to bridge virtual and real experiences, enabling even greater functionality in the physical world.

Looking back, people have carried handheld phones for some 50 years, ever since the first mobile phone (the "brick phone") debuted in 1973. Another shift in how we interact with the world would not be surprising: wearables like smart glasses represent a new form factor and a new paradigm of interaction.

AR + AI Powers a New Frontier in Smart Glasses

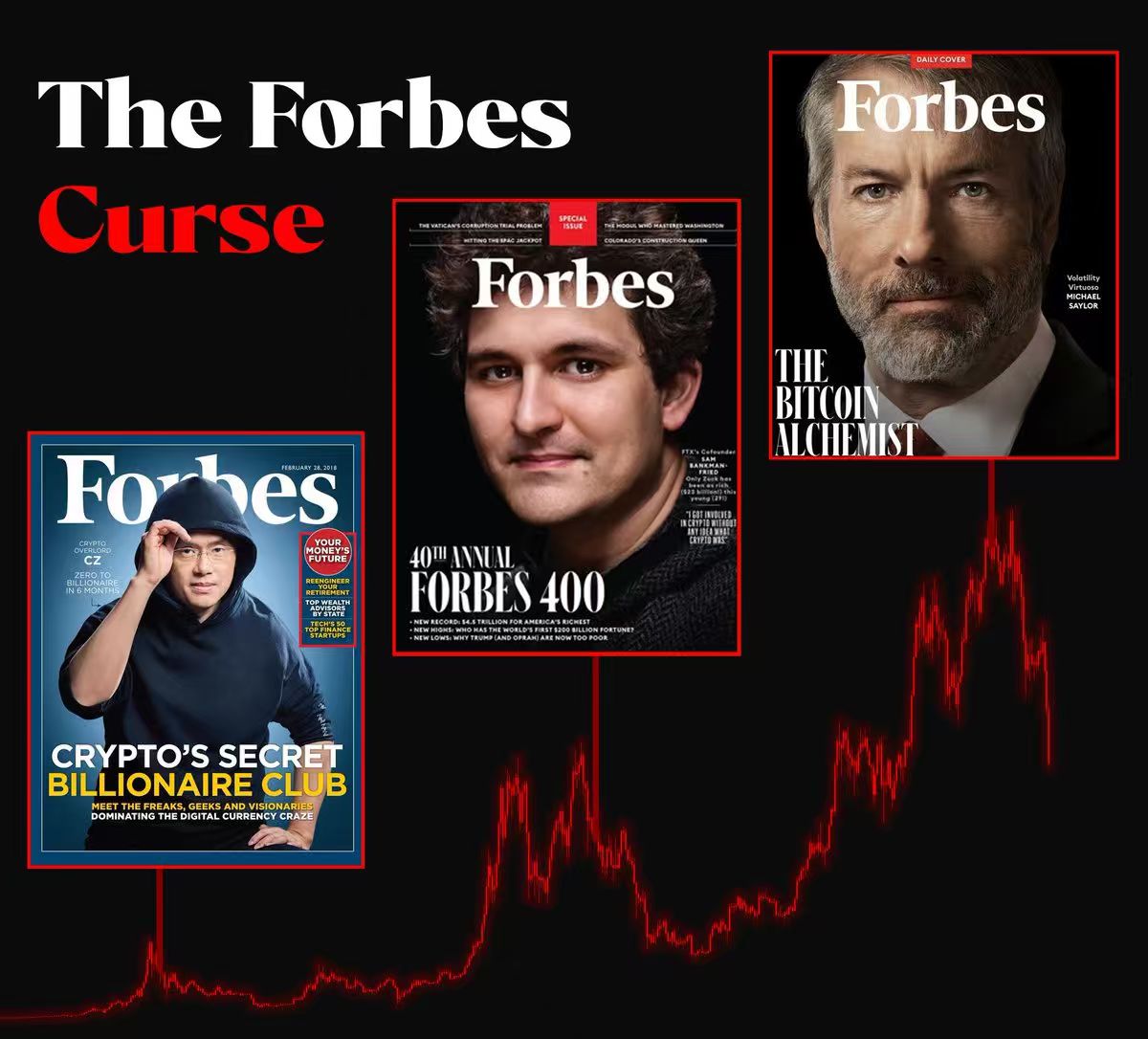

Just as AR glasses were evolving, breakthroughs in large models put artificial intelligence in the global spotlight in 2023. Combining wearable devices with AI became a natural narrative, and major tech companies appear to be targeting AI-powered wearables, especially smart glasses.

Meta has integrated AI functions into its Ray-Ban smart glasses. Beyond real-time language translation, the AI acts as a voice assistant that can describe what the user is looking at, for instance helping users choose an outfit by suggesting which shirt goes well with which pants.

Meta added an AI voice assistant to Ray-Ban glasses

Amazon’s Alexa team previously developed Echo Frames—a smart audio glasses product. However, it lacks a display screen or camera. Instead, it delivers feedback via four directional speakers built into each temple arm. Users can control smart home devices, receive notifications, make calls, or listen to music through voice conversations. Echo Frames can also adjust volume and feedback based on ambient environments.

In China, Huawei has also entered the smart glasses market. Its smart eyewear preserves the look of traditional glasses while embedding “smart assistance” entirely within the frame. It offers around-the-clock intelligent announcements, such as proactively delivering daily schedules, news, and weather updates, or promptly notifying users of flight or train information when traveling.

Overall, today’s smart glasses seem little more than headphones embedded in eyewear. But people envision a greater role: bringing humans closer to the digital world—not just acting as voice assistants, but ideally enhancing reality through visual capabilities.

TechFlowPost’s editorial team imagined potential smart glasses functionalities: “When I’m shopping at a supermarket, my glasses could compare prices in my field of view and scan products to reveal full details,” “When reading foreign-language documents, my glasses instantly translate them,” “When I see a restaurant sign, my glasses display ratings and signature dishes”…

Compared to these practical applications, current smart glasses still offer limited integration between physical and virtual worlds. Most either serve as secondary screens or enhance audio and voice interactions—the display and AI capabilities haven’t yet fully converged to deliver optimal synergy.

A closer look at smart glasses components shows why: AR glasses, constrained by size and comfort requirements, leave minimal space for processors, cameras, batteries, and other parts. In addition, large AI models need specialized variants tailored to smart hardware, and adding AI functionality demands dedicated chips capable of processing multimodal data such as sound, images, and video.

However, such large models for wearables are emerging. Insiders report that OpenAI is currently embedding its "GPT-4 with Vision" object recognition software into products from social media company Snap—potentially unlocking new features for Snap’s Spectacles smart glasses.

Let’s look forward to the next revolution in mobile computing. It may no longer resemble today’s handheld smartphones requiring manual operation, visual focus, and auditory attention. Instead, glasses integrating audiovisual capabilities and eye-tracking technology might just be the most promising evolution we can imagine.
