No wonder Buffett finally bet on Google



Google keeps the entire chain in its own hands. It doesn't rely on Nvidia, and it enjoys efficient, low-cost computing sovereignty.

Author: Ma Leilei

Source: Wu Xiaobo Channel CHANNELWU

Warren Buffett once said, "Never invest in a business you can't understand." Yet as the "Oracle of Omaha" era nears its end, Buffett has made a decision that seems to break his own rule: buying Google stock at a steep valuation of about 40 times free cash flow.

Yes, Buffett has bought an "AI-themed stock" for the first time—not OpenAI, not NVIDIA. Every investor is asking one question: Why Google?

Go back to the end of 2022. ChatGPT had emerged seemingly out of nowhere, triggering a "red alert" among Google's executive team. They held constant meetings and even urgently called back the company's two founders. But at that moment, Google looked like a slow-moving, bureaucratic dinosaur.

It hastily launched the chatbot Bard, but the bot made factual errors during its demo, sending the company's stock price plummeting and wiping out over $100 billion in market value in a single day. Google then consolidated its AI teams and released the multimodal Gemini 1.5.

Yet this so-called trump card stirred only a few hours of discussion within tech circles before being completely overshadowed by OpenAI’s subsequent video generation model Sora, quickly fading into obscurity.

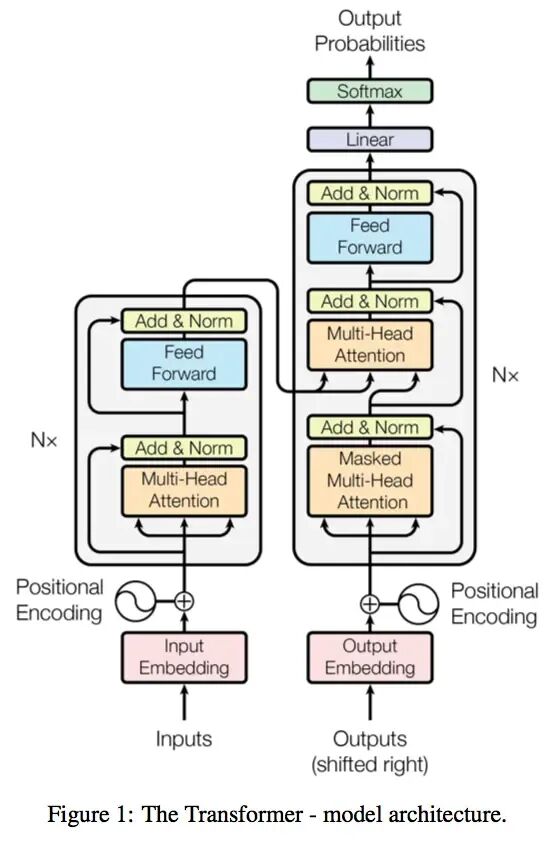

Somewhat awkwardly, it was Google researchers who published the groundbreaking academic paper in 2017 that laid the solid theoretical foundation for this wave of AI revolution.

The research paper "Attention Is All You Need," which introduced the Transformer model

Competitors mocked Google. OpenAI CEO Sam Altman dismissed Google's taste: "I can't help but think about the aesthetic difference between OpenAI and Google."

Google’s former CEO criticized the company’s complacency: "Google has always believed work-life balance... is more important than winning the competition."

This series of setbacks led many to suspect that Google had fallen behind in the AI race.

But change has finally arrived. In November, Google launched Gemini 3, which surpassed competitors—including OpenAI—on most benchmark metrics. More crucially, Gemini 3 was trained entirely on Google’s self-developed TPU chips, now positioned as low-cost alternatives to NVIDIA GPUs and officially offered for large-scale sale to external customers.

Google is showing strength on two fronts: using the Gemini 3 series to directly challenge OpenAI on the software front, and deploying TPU chips to challenge NVIDIA’s long-standing dominance in hardware.

Kicking OpenAI, punching NVIDIA.

Altman felt the pressure last month, writing internally that Google “might bring some temporary economic headwinds” to his company. This week, upon news of major companies purchasing TPU chips, NVIDIA’s stock dropped 7% during trading, forcing it to issue a statement to calm the market.

In a recent podcast, Google CEO Sundar Pichai told employees they should catch up on sleep. "From the outside, we may have seemed quiet or behind, but in reality, we were strengthening all foundational components and pushing forward with full force."

The situation has now reversed. Pichai said: "We’ve reached a turning point."

It’s exactly three years since ChatGPT’s release. These past three years have seen AI spark a grand feast of Silicon Valley capital and shifting alliances. Beneath the feast, concerns about bubbles are rising—has the industry reached a turning point?

Surpassing

On November 19, Google released its latest AI model, Gemini 3.

One test showed that across nearly all categories—including expert knowledge, logical reasoning, mathematics, and image recognition—Gemini 3 significantly outperformed other companies’ latest models, including ChatGPT. Only in one programming test did it come in second place.

The Wall Street Journal said, "Call it America’s next-generation top model." Bloomberg remarked that Google has finally woken up. Elon Musk and Sam Altman both praised it. Some netizens joked it was Altman’s ideal version of GPT-5.

The CEO of cloud content management platform Box, after early access to Gemini 3, said the performance leap was so incredible they initially suspected their evaluation methods were flawed. But repeated testing confirmed the model won by double-digit margins across all internal assessments.

Salesforce’s CEO said he’d used ChatGPT for three years, but Gemini 3 overturned his understanding in just two hours: "Holy shit… no going back. It’s a qualitative leap—reasoning, speed, image and video processing—all sharper, faster. Feels like the world turned upside down again."

Gemini 3

Why does Gemini 3 perform so exceptionally well, and what did Google do differently?

The head of the Gemini project posted that it was “simple: improved pre-training and post-training.” Analysis suggests the model still follows the Scaling Law logic—enhancing capabilities through optimized pre-training (such as larger datasets, more efficient training methods, and more parameters).

No one wants to know the secrets of Gemini 3 more than Altman.

Last month, before Gemini 3’s launch, he sent an internal note to OpenAI staff as a warning: “By any measure, Google’s recent work has been excellent,” especially in pre-training. The progress Google made could bring the company “some temporary economic headwinds,” and “the external environment will be tough for a while.”

While ChatGPT still holds a significant advantage in user numbers over Gemini, the gap is narrowing.

ChatGPT’s user base has grown rapidly over these three years. In February this year, its weekly active users reached 400 million; this month, it jumped to 800 million. Gemini reports monthly figures: in July, it had 450 million monthly active users; this month, that number surged to 650 million.

With around 90% share of the global web search market, Google naturally controls the core channel for promoting its AI models, giving it direct access to massive user bases.

OpenAI is currently valued at $500 billion, making it the highest-valued startup globally. It’s also one of the fastest-growing companies in history, with revenue soaring from nearly zero in 2022 to an estimated $13 billion this year. But it also forecasts spending over $100 billion in the coming years to achieve artificial general intelligence, plus hundreds of billions more leasing servers. In short, it still needs to raise more funds.

Google has a key advantage: deeper pockets.

Google’s latest quarterly financial report shows revenue exceeding $100 billion for the first time, reaching $102.3 billion—a 16% year-on-year increase—with $35 billion in profit, up 33%. The company’s free cash flow stands at $73 billion, and AI-related capital expenditure will reach $90 billion this year.

It doesn’t have to worry yet about AI eroding its search business—search and advertising continue to show double-digit growth. Its cloud business is thriving, even hosting OpenAI on its servers.

Beyond self-sustaining cash flow, Google holds advantages OpenAI cannot match: vast ready-made data for training and optimizing models, and in-house computing infrastructure.

On November 14, Google announced a $40 billion investment to build new data centers

OpenAI has skillfully negotiated compute agreements worth over $1 trillion. So when Google closes in fast with Gemini, investors grow more skeptical: Can OpenAI’s projected growth really fill the financial void?

Fissures

A month ago, NVIDIA’s market cap surpassed $5 trillion, as market enthusiasm for AI pushed this "AI arms dealer" to new heights. But Google’s TPU chips, used in Gemini 3, have cracked NVIDIA’s seemingly impenetrable fortress.

Citing data from investment research firm Bernstein, The Economist reported that NVIDIA’s GPUs account for over two-thirds of the total cost of a typical AI server rack, while Google’s TPU chips cost only 10% to 50% of comparable-performance NVIDIA chips. These savings add up significantly. Investment bank Jefferies estimates Google will produce about 3 million such chips next year—nearly half of NVIDIA’s output.
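To see how those savings add up, here is a rough back-of-the-envelope sketch. It rests on assumptions that are not in the Bernstein data: "over two-thirds" is rounded to exactly two-thirds, the rest of the rack is assumed to cost the same with either chip, and the function name `rack_cost_ratio` is purely illustrative.

```python
from fractions import Fraction

# Assumption: "over two-thirds" of rack cost is treated as exactly 2/3.
GPU_SHARE = Fraction(2, 3)


def rack_cost_ratio(chip_cost_ratio, gpu_share=GPU_SHARE):
    """Cost of a TPU-based rack relative to a GPU-based rack (1 = same cost).

    Assumes the non-accelerator part of the rack costs the same either way.
    """
    return (1 - gpu_share) + gpu_share * chip_cost_ratio


# The article's range: a TPU costs 10% to 50% of a comparable NVIDIA chip.
for chip_ratio in (Fraction(1, 10), Fraction(1, 2)):
    ratio = rack_cost_ratio(chip_ratio)
    print(
        f"chips at {float(chip_ratio):.0%} of GPU price -> "
        f"rack at {float(ratio):.0%} of original cost, "
        f"saving {float(1 - ratio):.0%}"
    )
```

Under these simplified assumptions, the whole rack would cost roughly 40% to 67% of its GPU-based equivalent. Real-world figures would also depend on networking, power, and software costs that this sketch ignores.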

Last month, prominent AI startup Anthropic announced plans for large-scale adoption of Google’s TPU chips, in a deal reportedly worth tens of billions of dollars. On November 25, reports said tech giant Meta was negotiating to deploy TPU chips in its data centers by 2027, in a deal valued in the tens of billions.

Google CEO Sundar Pichai introduces TPU chips

Silicon Valley’s internet giants are all betting on chips—either developing in-house or partnering with chip firms—but none have advanced as far as Google.

TPUs trace back over a decade. Back then, Google developed a proprietary accelerator chip internally to improve the efficiency of search, maps, and translation. Starting in 2018, it began selling TPUs to cloud computing customers.

Later, TPUs were also used to support Google’s internal AI development. During the development of models like Gemini, AI and chip teams interacted closely: the former provided real-world needs and feedback, the latter customized and optimized TPUs accordingly, further boosting AI R&D efficiency.

NVIDIA currently dominates over 90% of the AI chip market. Its GPUs were originally designed for realistic game rendering, relying on thousands of parallel computing cores—an architecture that gave it a clear lead in AI applications.

Google’s TPU, by contrast, is an Application-Specific Integrated Circuit (ASIC)—a "specialist"—designed specifically for certain computational tasks. It sacrifices flexibility and versatility for higher energy efficiency. NVIDIA’s GPU is more of a "generalist," flexible and programmable, but at a higher cost.

Still, at this stage, no company, Google included, can fully replace NVIDIA. Even with the TPU now in its seventh generation, Google remains a major NVIDIA customer. One clear reason: Google’s cloud services must cater to tens of thousands of clients worldwide, and offering NVIDIA’s GPUs helps keep them attractive.

Even companies buying TPUs still embrace NVIDIA. Shortly after announcing its TPU partnership with Google, Anthropic revealed another major deal with NVIDIA.

The Wall Street Journal noted, "Investors, analysts, and data center operators say Google’s TPU is one of the biggest threats to NVIDIA’s dominance in AI computing, but to truly challenge NVIDIA, Google must begin selling these chips more widely to external customers."

Google’s AI chip has become one of the few viable alternatives to NVIDIA’s offerings, directly dragging down NVIDIA’s stock price. NVIDIA stepped in to post reassurances amid market panic over TPUs. It said it was "happy about Google’s success" but emphasized it remains a generation ahead, with hardware more versatile than TPUs and other task-specific chips.

NVIDIA’s pressure also stems from market fears of a bubble: investors worry that the massive capital inflows are out of line with profitability prospects. Sentiment swings quickly between two fears, that NVIDIA will lose business to rivals and that AI chips will stop selling altogether.

Well-known U.S. "short seller" Michael Burry said he has bet over $1 billion shorting NVIDIA and other tech firms. He gained fame for shorting the U.S. housing market in 2008, a story later adapted into the acclaimed film *The Big Short*. He says today’s AI frenzy resembles the dot-com bubble of the early 2000s.

Michael Burry

NVIDIA distributed a seven-page document to analysts refuting criticisms from Burry and others. But the document failed to quell controversy.

Model

Google is enjoying a good run, with its stock rising even as bubble fears weigh on other AI names. Buffett’s firm bought shares in the third quarter, Gemini 3 received positive responses, and TPU chips have raised investor expectations, all of which have pushed Google higher.

In the past month, AI stocks like NVIDIA and Microsoft have fallen over 10%, while Google’s stock has risen about 16%. Currently ranked third globally with a $3.86 trillion market cap, it trails only NVIDIA and Apple.

Analysts describe Google’s AI approach as vertical integration.

A rare "full-stack builder" in tech, Google keeps the entire chain in-house: Google Cloud runs self-developed TPU chips, trains Google’s own large AI models, and seamlessly integrates them into core services like Search and YouTube. The benefits are obvious—no reliance on NVIDIA, with efficient, low-cost computing sovereignty.

The other common model is a looser alliance structure. Giants play different roles: NVIDIA handles GPUs, OpenAI and Anthropic develop AI models, and cloud giants like Microsoft buy GPU chips to host these AI labs’ models. In this network, there are no absolute allies or enemies—cooperate when beneficial, compete fiercely when needed.

Players form a "circular structure," with capital flowing in closed loops among a few tech giants.

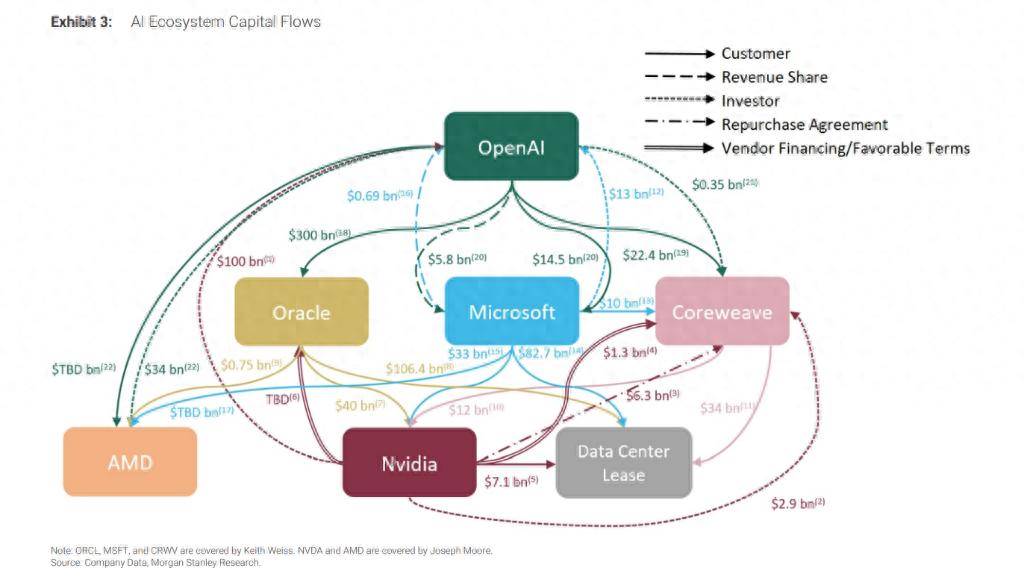

Typically, the circular funding pattern goes like this: Company A pays Company B a sum (as investment, loan, or lease), and B uses that money to buy products or services from A. Without this "starter capital," B might not afford it at all.

For example, OpenAI commits $300 billion to Oracle for computing power, Oracle turns around and spends billions buying NVIDIA chips to build data centers, and NVIDIA reinvests up to $100 billion in OpenAI—on condition it keeps using NVIDIA chips. (OpenAI pays $300B to Oracle → Oracle uses the money to buy NVIDIA chips → NVIDIA uses the earnings to reinvest in OpenAI.)
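The loop in this example can be made explicit with a small sketch that treats each commitment as an arrow from payer to payee and searches for a closed path. The amounts are kept as descriptive text because the article gives only rough figures, and the `FLOWS` list and `find_cycle` helper are illustrative names rather than anything analysts have published.

```python
# Commitments named in the article, as (payer, payee, description).
# Descriptions stay as text: the article gives only rough figures.
FLOWS = [
    ("OpenAI", "Oracle", "$300B compute commitment"),
    ("Oracle", "NVIDIA", "billions in chip purchases"),
    ("NVIDIA", "OpenAI", "up to $100B investment"),
]


def find_cycle(flows):
    """Return one closed loop of companies as a list, or None if none exists."""
    graph = {}
    for payer, payee, _ in flows:
        graph.setdefault(payer, []).append(payee)

    def dfs(node, path):
        # Revisiting a company already on the current path closes a loop.
        if node in path:
            return path[path.index(node):] + [node]
        for nxt in graph.get(node, []):
            found = dfs(nxt, path + [node])
            if found:
                return found
        return None

    for start in graph:
        cycle = dfs(start, [])
        if cycle:
            return cycle
    return None


print(" -> ".join(find_cycle(FLOWS)))
```

With these three flows the search returns to its starting point, which is the "circular structure" in miniature. A fuller map with dozens of companies would contain many overlapping loops of this kind.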

Such cases have created labyrinthine capital maps. On October 8, Morgan Stanley analysts published a diagram illustrating capital flows in Silicon Valley’s AI ecosystem. They warned that due to lack of transparency, investors struggle to assess real risks and returns.

The Wall Street Journal commented on the diagram: "The connecting arrows look like a plate of spaghetti—utterly tangled."

With capital’s push, the shape of a colossal entity is forming—its true form unknown. Some panic, some rejoice.
