Give Nokia $1 billion, Jensen Huang wants to earn $200 billion

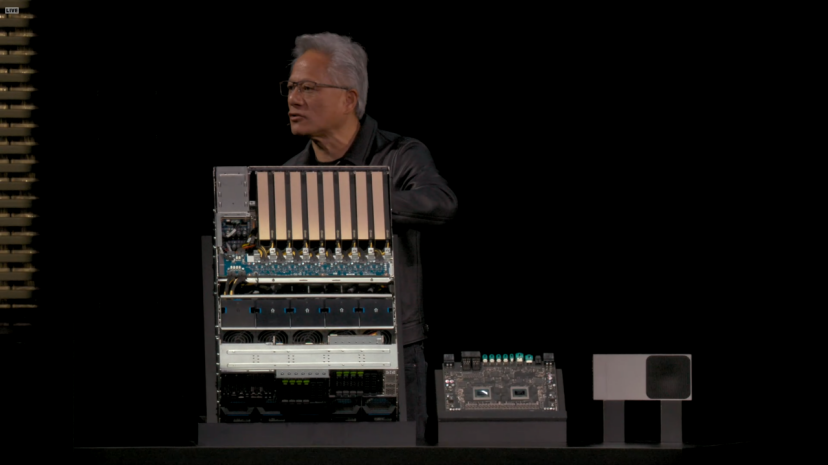

Jensen Huang unveiled a major move at GTC 2025.

At GTC 2025, Jensen Huang dropped a bombshell: NVIDIA will invest $1 billion in Nokia. Yes, that Nokia—the Symbian phone giant popular across China two decades ago.

Huang said during his keynote that telecom networks are undergoing a major shift from traditional architectures to AI-native systems, and NVIDIA's investment will accelerate this transformation. Through this investment, NVIDIA and Nokia will jointly create an AI platform for 6G networks, bringing AI capabilities into traditional RAN (Radio Access Network) infrastructure.

The investment involves NVIDIA subscribing to approximately 166 million new shares of Nokia at $6.01 per share, giving NVIDIA about a 2.9% stake in the company.
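As a quick sanity check on these figures, the arithmetic can be sketched in a few lines of Python. The share count, price, and stake are the rounded values quoted above; the implied total share count is only a back-of-the-envelope estimate derived from them, not a reported number.

```python
# Rounded figures quoted in the article.
NEW_SHARES = 166_000_000   # approx. new Nokia shares subscribed by NVIDIA
PRICE_PER_SHARE = 6.01     # USD per share
STAKE = 0.029              # approx. 2.9% stake after the deal


def deal_value(shares: int, price: float) -> float:
    """Total subscription cost in USD."""
    return shares * price


def implied_total_shares(shares: int, stake: float) -> float:
    """Rough total share count implied by the stake (an estimate only)."""
    return shares / stake


if __name__ == "__main__":
    value = deal_value(NEW_SHARES, PRICE_PER_SHARE)
    total = implied_total_shares(NEW_SHARES, STAKE)
    print(f"Deal value: ${value / 1e9:.2f} billion")
    print(f"Implied total shares: {total / 1e9:.1f} billion")
```

The product comes to just under $1 billion, which matches the headline figure.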

Immediately after the partnership was announced, Nokia’s stock surged by 21%, its largest increase since 2013.

01

What is AI-RAN?

RAN stands for Radio Access Network. AI-RAN is a new network architecture that embeds AI computing directly into wireless base stations. Traditional RAN systems primarily handle data transmission between base stations and mobile devices, while AI-RAN adds edge computing and intelligent processing on top.

This enables base stations to use AI algorithms to optimize spectrum utilization and energy efficiency, improving overall network performance. It also allows operators to host edge AI services using idle RAN assets, creating new revenue streams.

Operators can run AI applications directly at the base station, rather than sending all data back to centralized data centers, significantly reducing network load.

Huang gave an example: nearly 50% of ChatGPT users access it via mobile devices, and monthly mobile downloads of ChatGPT exceed 40 million. In this era of explosive growth in AI applications, traditional RAN systems cannot handle generative AI or agent-driven mobile traffic.

AI-RAN addresses this by providing distributed AI inference at the network edge, enabling faster responses for future AI agents and chatbots. Additionally, AI-RAN prepares the ground for integrated sensing and communication applications expected in 6G.

Huang cited a forecast from analyst firm Omdia predicting that the RAN market will exceed a cumulative $200 billion by 2030, with AI-RAN the fastest-growing segment.

Justin Hotard, President and CEO of Nokia, stated in a joint announcement that this partnership will put AI data centers into everyone’s pocket, fundamentally redefining networks from 5G to 6G.

He specifically mentioned Nokia’s collaboration with three different types of companies: NVIDIA, Dell, and T-Mobile. T-Mobile, as an initial partner, will begin field testing AI-RAN technology in 2026, focusing on validating performance and efficiency gains. Hotard said these tests will generate valuable data for 6G innovation, helping operators build intelligent networks tailored to AI demands.

Built on AI-RAN, NVIDIA launched a new product called Aerial RAN Computer Pro (ARC-Pro), an accelerated computing platform designed for 6G. Its core hardware integrates two NVIDIA processors: the Grace CPU and the Blackwell GPU.

The platform runs on NVIDIA CUDA, allowing RAN software to be directly embedded into the CUDA stack. As a result, it can handle not only traditional RAN functions but also mainstream AI applications simultaneously—this is NVIDIA’s key approach to realizing the "AI" in AI-RAN.

Given CUDA’s long history, the platform’s biggest advantage is programmability. Moreover, Huang announced that the Aerial software framework will be open-sourced, expected to be released on GitHub under the Apache 2.0 license starting December 2025.

The main difference between ARC-Pro and its predecessor ARC lies in deployment location and use case. The earlier ARC was mainly used for centralized cloud RAN implementations, whereas ARC-Pro can be deployed directly at base stations, making edge computing truly viable.

Ronnie Vasishta, head of NVIDIA’s telecommunications business, said that previously RAN and AI required two separate hardware setups, but ARC-Pro can dynamically allocate computing resources based on network needs—prioritizing wireless functions when needed, or running AI inference during idle periods.
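The idea of sharing one machine between radio workloads and AI inference can be illustrated with a minimal sketch. This is not NVIDIA's scheduler: the `allocate` function, the `headroom` margin, and the load figures are all hypothetical, chosen only to show a "RAN first, AI with whatever is left" policy.

```python
from dataclasses import dataclass


@dataclass
class Allocation:
    ran_share: float  # fraction of compute reserved for RAN functions
    ai_share: float   # fraction left over for AI inference


def allocate(ran_demand: float, headroom: float = 0.1) -> Allocation:
    """Reserve RAN demand plus a safety margin, give the rest to AI.

    ran_demand: current RAN load as a fraction of total compute (0.0 to 1.0).
    headroom:   hypothetical safety margin kept on top of RAN demand.
    """
    if not 0.0 <= ran_demand <= 1.0:
        raise ValueError("ran_demand must be between 0 and 1")
    ran_share = min(1.0, ran_demand + headroom)
    return Allocation(ran_share=ran_share, ai_share=1.0 - ran_share)


if __name__ == "__main__":
    # Made-up load levels: a quiet night, a normal day, a peak hour.
    for load in (0.15, 0.50, 0.95):
        a = allocate(load)
        print(f"RAN load {load:.0%}: RAN {a.ran_share:.0%}, AI {a.ai_share:.0%}")
```

In this toy policy the wireless side always wins: at peak load the AI share drops to zero, and during quiet periods most of the machine is free for inference.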

ARC-Pro also integrates NVIDIA’s AI Aerial platform—a complete software stack including CUDA-accelerated RAN software, Aerial Omniverse digital twin tools, and the new Aerial Framework. The Aerial Framework converts Python code into high-performance CUDA code to run on the ARC-Pro platform. Additionally, the platform supports AI-driven neural network models for advanced channel estimation.

Huang said telecommunications is the digital nervous system of the economy and security. Collaboration with Nokia and the telecom ecosystem will ignite this revolution, helping operators build smart, adaptive networks that define next-generation global connectivity.

02

Looking at 2025, NVIDIA has indeed made several major investments.

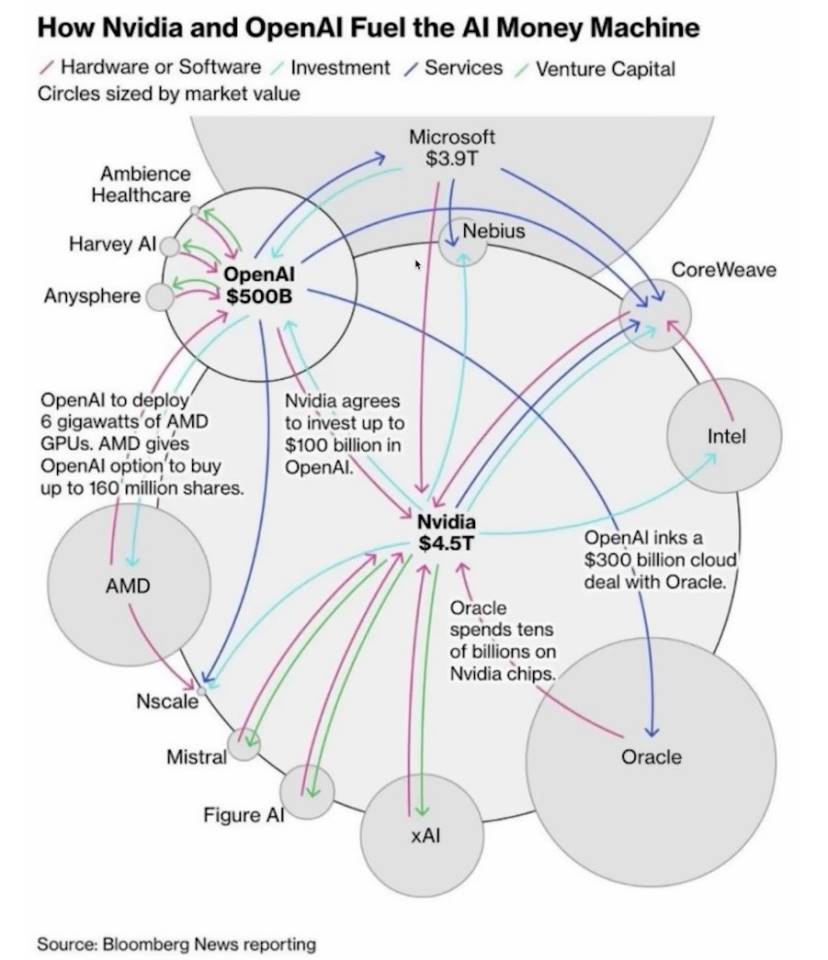

On September 22, NVIDIA and OpenAI announced a partnership where NVIDIA plans to gradually invest $100 billion in OpenAI to accelerate its infrastructure development.

Huang said OpenAI had sought NVIDIA’s investment long ago, but at the time, NVIDIA had limited funds. He joked that NVIDIA was too poor back then and, in hindsight, should have given OpenAI all its money.

Huang believes AI inference growth won’t be 100x or 1000x, but 1 billion times. This collaboration extends beyond hardware to include software optimization, ensuring OpenAI efficiently utilizes NVIDIA’s systems.

This move may stem from concern over OpenAI’s cooperation with AMD and the fear that OpenAI might abandon CUDA. If the maker of the world’s largest AI foundation models stopped using CUDA, other large-model makers would inevitably follow suit.

On the BG2 podcast, Huang predicted OpenAI could become the next trillion-dollar company, setting industry growth records. He dismissed claims of an AI bubble, noting that global annual capital expenditure on AI infrastructure could reach $5 trillion.

As a result of this investment, OpenAI announced on October 29 the completion of corporate recapitalization. The company has been split into two parts: a non-profit foundation and a for-profit entity.

The non-profit foundation will legally control the for-profit arm while balancing public interest. However, it remains free to raise funds or acquire companies. The foundation will own 26% of the for-profit company and hold a warrant to gain additional shares if the company continues to grow.

Besides OpenAI, NVIDIA also invested in Musk’s xAI in 2025. The current funding round for xAI has increased to $20 billion, with about $7.5 billion raised through equity and up to $12.5 billion through debt via a special purpose vehicle (SPV).

This SPV operates by using raised funds to purchase high-performance processors from NVIDIA, which are then leased to xAI.

These processors will support xAI’s Colossus 2 project. The original Colossus is xAI’s supercomputing data center located in Memphis, Tennessee, already deploying 100,000 NVIDIA H100 GPUs, making it one of the world’s largest AI training clusters. Colossus 2, currently under construction, aims to scale GPU count to hundreds of thousands or more.

On September 18, NVIDIA also announced a $5 billion investment in Intel and a deep strategic partnership. NVIDIA will subscribe to newly issued common shares of Intel at $23.28 per share, totaling $5 billion. Upon completion, NVIDIA will hold approximately 4% of Intel’s shares, becoming a key strategic investor.

03

Of course, Huang covered much more at this GTC.

For instance, NVIDIA unveiled multiple open-source AI model families, including Nemotron for digital AI, Cosmos for physical AI, Isaac GR00T for robotics, and Clara for biomedical AI.

Meanwhile, Huang introduced DRIVE AGX Hyperion 10, an autonomous driving development platform targeting Level 4 autonomy. It integrates NVIDIA computing chips with a full sensor suite, including lidar, cameras, and radar.

NVIDIA also launched the Halos certification program—the industry’s first system for evaluating and certifying the safety of physical AI, specifically for autonomous vehicles and robotics.

The core of the Halos program is the Halos AI Systems Inspection Lab, the first lab of its kind to be accredited by the ANSI National Accreditation Board (ANAB). ANSI, the American National Standards Institute, grants highly authoritative and credible certifications.

The lab’s role is to use NVIDIA’s physical AI to verify whether autonomous systems meet standards. Companies like AUMOVIO, Bosch, Nuro, and Wayve are among the first members of the Halos AI Systems Inspection Lab.

To advance Level 4 autonomy, NVIDIA released a multimodal autonomous driving dataset collected from 25 countries, containing 1,700 hours of camera, radar, and lidar data.

Huang emphasized the dataset’s value lies in its diversity and scale, covering various road conditions, traffic rules, and driving cultures, forming a foundation for training more generalizable autonomous systems.

But Huang’s vision goes far beyond this.

At GTC, he announced a series of collaborations with U.S. government labs and leading enterprises aimed at building America’s AI infrastructure. Huang said we are at the dawn of an AI industrial revolution that will define the future of every industry and nation.

The centerpiece of this effort is the collaboration with the U.S. Department of Energy. NVIDIA is helping build two supercomputing centers—one at Argonne National Laboratory and another at Los Alamos National Laboratory.

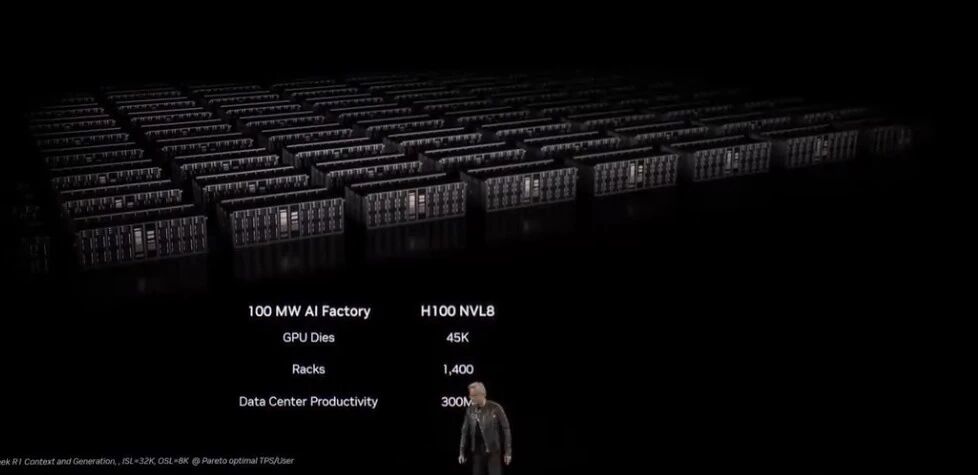

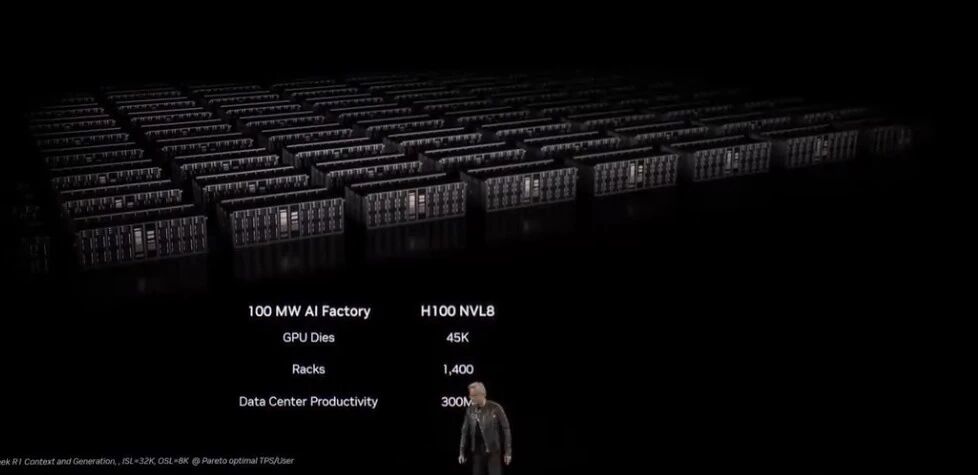

Argonne will receive a supercomputer named Solstice, equipped with 100,000 NVIDIA Blackwell GPUs. What does 100,000 GPUs mean? This will be the largest AI supercomputer ever built for the Department of Energy. Another system, Equinox, with 10,000 Blackwell GPUs, is expected to go online in 2026. Together, these systems will deliver 2,200 exaflops of AI computing power.
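A rough back-of-the-envelope check shows what these numbers imply per GPU. It assumes the 2,200 exaflops figure is spread evenly across the GPUs of both systems; the article does not say which numeric precision the figure refers to, so the result is only indicative.

```python
# Figures quoted in the article.
SOLSTICE_GPUS = 100_000
EQUINOX_GPUS = 10_000
TOTAL_AI_EXAFLOPS = 2_200


def per_gpu_petaflops(total_exaflops: float, gpu_count: int) -> float:
    """Average compute per GPU in petaflops (1 exaflop = 1,000 petaflops)."""
    return total_exaflops * 1_000 / gpu_count


if __name__ == "__main__":
    gpus = SOLSTICE_GPUS + EQUINOX_GPUS
    print(f"{gpus:,} GPUs, about "
          f"{per_gpu_petaflops(TOTAL_AI_EXAFLOPS, gpus):.0f} petaflops each")
```

Under that even-split assumption, the combined 110,000 GPUs work out to about 20 petaflops apiece.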

Paul Kearns, director of Argonne Lab, said these systems will redefine performance, scalability, and scientific potential. What will they be used for? From materials science to climate modeling, quantum computing to nuclear weapons simulation—all require this level of computational power.

Beyond government labs, NVIDIA is establishing an AI factory research center in Virginia. This center is unique—it’s not just a data center but an experimental testbed. Here, NVIDIA will test something called Omniverse DSX, a blueprint for building gigawatt-scale AI factories.

A typical data center might require tens of megawatts of power, while a gigawatt equals the output of a medium-sized nuclear power plant.

The core idea behind the Omniverse DSX blueprint is turning AI factories into self-learning systems. AI agents will continuously monitor power, cooling, and workloads, automatically adjusting parameters to improve efficiency. For example, when grid demand is high, the system can reduce power consumption or switch to battery storage.

Such intelligent management is critical for gigawatt-scale facilities, where electricity and cooling costs would otherwise be astronomical.
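The grid-aware behaviour described above can be sketched as a simple rule-based controller. This is a deliberately simplified stand-in for the AI agents in the blueprint, not Omniverse DSX itself: the `FacilityState` fields, the thresholds, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FacilityState:
    grid_stress: float     # 0.0 (relaxed) to 1.0 (peak demand), hypothetical signal
    battery_charge: float  # battery state of charge, 0.0 to 1.0


def choose_action(state: FacilityState,
                  stress_threshold: float = 0.8,
                  min_battery: float = 0.3) -> str:
    """Pick a power strategy for the current control interval."""
    if state.grid_stress < stress_threshold:
        return "normal"        # grid is fine, run at full power
    if state.battery_charge >= min_battery:
        return "use_battery"   # grid is stressed, draw from storage
    return "reduce_power"      # grid is stressed and storage is low


if __name__ == "__main__":
    for state in (FacilityState(0.4, 0.9),
                  FacilityState(0.9, 0.9),
                  FacilityState(0.9, 0.1)):
        print(state, "->", choose_action(state))
```

A learning system would replace these fixed thresholds with policies tuned from monitoring data, but the decision structure (watch the grid, then choose between storage and throttling) stays the same.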

This vision is grand. Huang said it will take three years to realize. AI-RAN testing won’t start until 2026, autonomous vehicles based on DRIVE AGX Hyperion 10 won’t hit roads until 2027, and the Department of Energy’s supercomputers will come online in 2027.

NVIDIA holds CUDA, its killer advantage, and remains the de facto standard in AI computing. From training to inference, from data centers to edge devices, from autonomous driving to biomedicine, NVIDIA GPUs are everywhere. The investments and partnerships announced at this GTC further solidify that dominance.
