Interview with 0G Labs CEO: Catching Up with Web2 AI Within 2 Years, the "Zero Gravity" Experiment for AI Public Goods


Step into the wave of decentralized AI and explore 0G's core vision, technical implementation path, ecosystem focus areas, and future roadmap planning.

Author: TechFlow

OpenAI CEO Sam Altman has repeatedly stated in podcasts and public speeches:

AI is not a model race, but about creating public goods, enabling universal benefit and driving global economic growth.

Web2 AI is widely criticized for oligopolistic control. In the Web3 world, whose core ethos is decentralization, one project has made "making AI a public good" its central mission. In the two-plus years since its inception, it has raised $35 million in funding, built the foundational technology to support innovative AI applications, attracted 300+ ecosystem partners, and grown into one of the largest decentralized AI ecosystems.

This is 0G Labs.

In an in-depth conversation with Michael Heinrich, co-founder and CEO of 0G Labs, the concept of “public goods” emerged repeatedly. On his understanding of AI as a public good, Michael shared:

We aim to build a decentralized, anti-black-box AI development model that is transparent, open, secure, and inclusive, where everyone can participate, contribute data and computing power, earn rewards, and collectively share in the benefits of AI.

When asked how this could be achieved, Michael detailed 0G’s specific roadmap:

As an AI-dedicated Layer 1, 0G boasts outstanding performance, modular design, and an infinitely scalable, programmable DA layer. From verifiable computing and multi-layer storage to immutable provenance layers, it builds an all-in-one AI ecosystem, providing every essential component needed for AI advancement.

In this article, let’s follow Michael Heinrich’s insights to explore the core vision, technical pathways, ecosystem focus, and future roadmap of 0G amid the wave of decentralized AI.

Inclusivity: The Core Ethos Behind "Making AI a Public Good"

TechFlow: Thank you for your time. To begin, could you please introduce yourself?

Michael:

Hello everyone, I’m Michael, co-founder and CEO of 0G Labs.

I come from a technical background, having worked as an engineer and technical product manager at Microsoft and SAP Labs. Later, I moved toward business roles — first at a gaming company, then at Bridgewater Associates managing portfolio construction, reviewing around $60 billion in trades daily. After that, I returned to my alma mater Stanford for further studies and launched my first startup, which later secured venture capital, rapidly grew into a unicorn with a 650-person team and $100 million in revenue, and which I eventually sold successfully.

My journey with 0G began one day when Thomas, a fellow Stanford alumnus, called me and said:

Michael, five years ago we invested together in several crypto companies (including Conflux). Wu Ming (co-founder and CTO of Conflux and 0G) and Fan Long (Chief Security Officer of 0G Labs) are among the most exceptional engineers I’ve backed. They want to build something globally scalable. Would you like to meet them?

Through Thomas’s introduction, the four of us spent six months communicating and aligning as co-founders. During this time, I reached the same conclusion: Wu Ming and Fan Long are the most outstanding engineers and computer scientists I’ve ever worked with. My immediate thought was: We must start right away. And so 0G Labs was born.

0G Labs was founded in May 2023. As the largest and fastest AI Layer 1 platform, we have built a complete decentralized AI operating system, and we are committed to making it a public good. This system enables all AI applications to run fully decentralized: execution environments are part of the L1, and both storage and compute networks (inference, fine-tuning, and pre-training) can scale infinitely, supporting the development of any AI innovation.

TechFlow: As you mentioned earlier, 0G brings together top talent from leading companies such as Microsoft, Amazon, and Bridgewater, including team members with significant achievements in AI, blockchain, and high-performance computing. What beliefs and opportunities led this “all-star team” to go all-in on decentralized AI and join 0G?

Michael:

The main motivation behind building 0G lies in its mission itself: to make AI a public good. Another key driver stems from our concerns about the current state of AI development.

In centralized models, AI may be monopolized by a few dominant companies and operated as black boxes — you don’t know who labeled the data, where it came from, what the model weights and parameters are, or even which version is running online. When AI fails, especially when autonomous AI agents perform numerous operations online, who is accountable? Worse still, centralized companies might even lose control over their own models, leading AI completely off-track.

We’re concerned about this trajectory, so we aim to build a decentralized, anti-black-box AI development model, one that is transparent, open, secure, and inclusive. We call this “decentralized AI.” In such a system, everyone can participate, contribute data and computing power, and receive fair rewards. We hope to establish this model as a public good so society as a whole can share in the benefits of AI.

TechFlow: The name “0G” sounds unique. It stands for Zero Gravity — could you explain how this name came about, and how it reflects 0G’s vision for the future of decentralized AI?

Michael:

Our project name comes from a core principle we strongly believe in:

Technology should be effortless, seamless, and frictionless — especially when building infrastructure and backend systems. End users shouldn’t need to be aware they’re using 0G; they should simply experience smooth, fluid interactions.

This is the origin of the name 0G: “Zero Gravity.” In zero gravity, resistance is minimized and movement becomes naturally smooth — exactly the user experience we aim to deliver.

Likewise, everything built on 0G should convey this sense of effortlessness. For example, imagine if before watching a show on a streaming platform, you had to manually select a server, pick an encoding algorithm, and configure a payment gateway — that would be an extremely poor, high-friction experience.

But with AI advancements, things will change. You might simply tell an AI Agent: “Find the currently best-performing meme token and buy X amount,” and the agent automatically researches performance, assesses real trends and value, identifies the correct chain, bridges assets across chains if necessary, and completes the purchase — all without manual steps from the user.

Eliminating friction and simplifying user experience — this is the “frictionless” future 0G aims to enable.

Community-Driven Models Will Revolutionize AI Development

TechFlow: Given that Web3 AI still lags significantly behind Web2 AI, why do you believe decentralized forces are essential for AI to achieve its next breakthrough?

Michael:

At a roundtable during this year’s WebX conference, one guest left a strong impression — someone with 15 years of experience at Google DeepMind.

We both agree: the future of AI lies in networks composed of many smaller, specialized language models that collectively possess “large model”-level capabilities. When these specialized small models are precisely orchestrated through routing, role assignment, and aligned incentives, they can surpass monolithic giant models in accuracy, adaptation speed, cost efficiency, and upgrade velocity.

The reason is simple: most high-value training data isn’t publicly available — it’s locked in private code repositories, internal wikis, personal notebooks, encrypted storage. Over 90% of domain-specific expertise remains inaccessible and tightly tied to individual experience. Without sufficient incentives, most people have little motivation to freely give up proprietary knowledge that undermines their economic advantage.

But community-driven incentive models can change this. For example, I could organize my ML programmer friends to train a Solidity-specialized model. They contribute code snippets, debugging logs, computing power, and labeling, and are rewarded with tokens. Moreover, they continue earning usage-based returns whenever the model is deployed.

We believe this is the future of artificial intelligence: communities provide distributed computing power and data, drastically lowering AI barriers and reducing reliance on massive centralized data centers, thereby enhancing overall system resilience.

We believe this distributed development model will accelerate AI progress and drive more efficient paths toward AGI.

TechFlow: Some community members compare the relationship between 0G and AI to that of Solana and DeFi. How do you view this analogy?

Michael:

We’re happy to see such comparisons — being benchmarked against industry leaders like Solana is both encouraging and motivating. Long-term, however, we aspire to cultivate our own distinct community culture and brand identity, so that 0G is recognized on its own merits — a time when mentioning 0G in the context of AI will be self-explanatory, no analogy required.

Regarding 0G’s long-term strategy, we plan to progressively challenge more closed, centralized black-box companies. To do so, we must strengthen our infrastructure further — focusing continuously on industry research and hardcore engineering. This is a long-term, challenging endeavor that could take up to two years in extreme cases, though current progress suggests we may achieve it within one year.

For example, to our knowledge, we are the first project to successfully train a 107-billion-parameter AI model in a fully decentralized environment — a breakthrough roughly three times larger than previous public records, demonstrating our leadership in both research and execution.

Returning to the Solana analogy: just as Solana pioneered high-throughput blockchain performance, 0G aims to set similar precedents in AI.

Core Technical Components of the All-in-One AI Ecosystem

TechFlow: As a Layer 1 purpose-built for AI, what unique features or advantages does 0G offer compared to other Layer 1s, and how do they empower AI development?

Michael:

I believe 0G’s first distinctive advantage as an AI-native Layer 1 lies in performance.

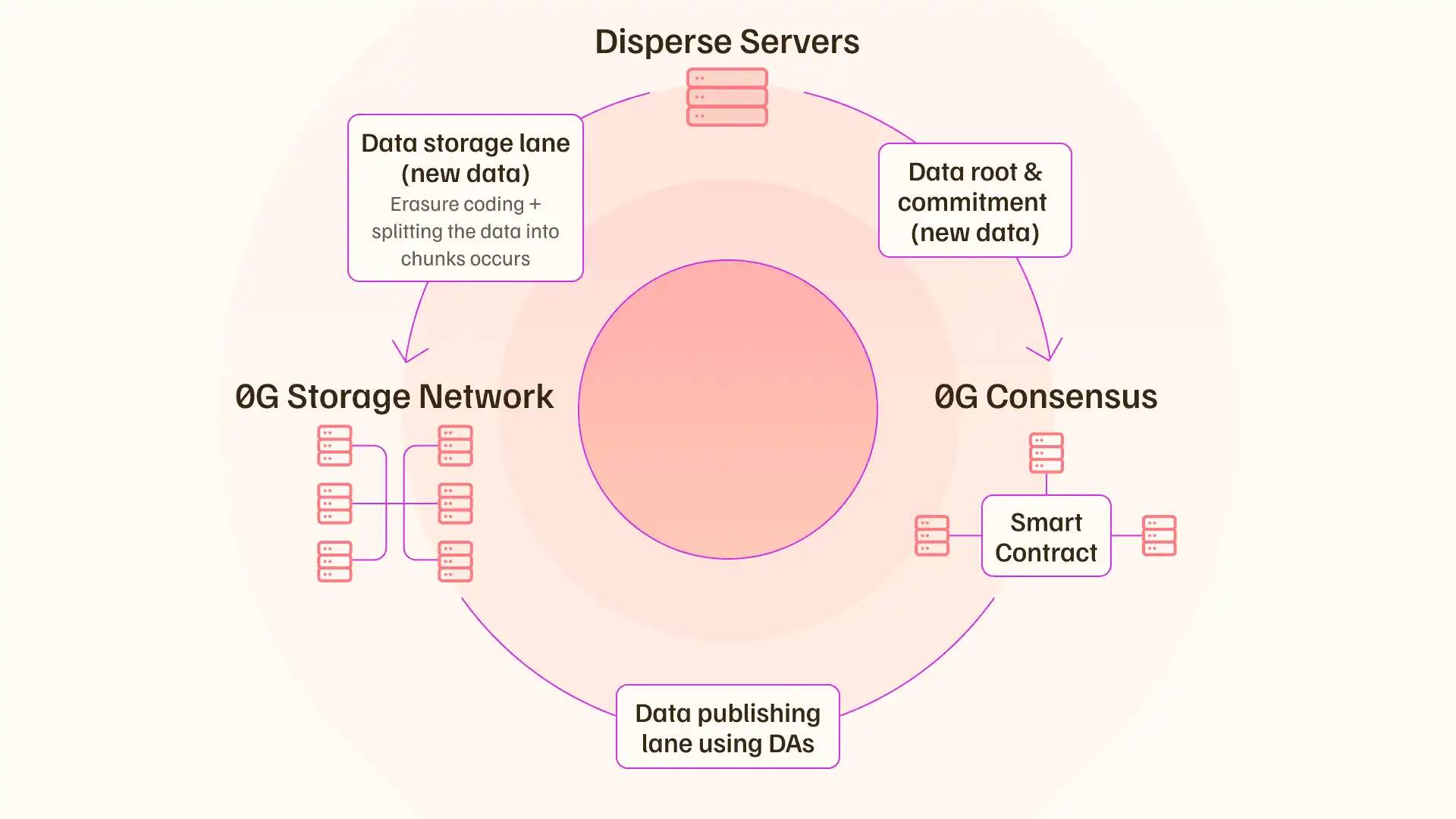

Bringing AI on-chain means handling extreme workloads. For instance, modern AI data centers require data throughput ranging from hundreds of GB/s to several TB/s. But Ethereum’s initial performance was around 80 KB/s, roughly a million times slower than what AI demands. To address this, we designed a data availability (DA) layer that leverages network nodes and consensus mechanisms to deliver unlimited data throughput for any AI application.
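To make the scale of that gap concrete, the short Python calculation below uses the figures quoted above. The decimal unit definitions and the choice of 100 GB/s and 1 TB/s as sample points are assumptions for illustration, not numbers from 0G.

```python
# Decimal units (assumption): 1 KB = 10^3 bytes, 1 GB = 10^9, 1 TB = 10^12.
KB = 1_000
GB = 1_000_000_000
TB = 1_000_000_000_000

baseline_bps = 80 * KB   # the ~80 KB/s baseline quoted in the interview
ai_low_bps = 100 * GB    # lower end of "hundreds of GB/s" (assumed sample point)
ai_high_bps = 1 * TB     # a round sample point for the TB/s range (assumed)


def gap(required_bps: int, available_bps: int) -> float:
    """Return how many times larger the required rate is than the available rate."""
    return required_bps / available_bps


print(f"gap at 100 GB/s: {gap(ai_low_bps, baseline_bps):,.0f}x")   # 1,250,000x
print(f"gap at 1 TB/s:   {gap(ai_high_bps, baseline_bps):,.0f}x")  # 12,500,000x
```

At the lower sample point the gap is already above a million, which matches the order of magnitude described in the answer.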

We also employ sharding, allowing large-scale AI applications to horizontally increase shards and scale total throughput, achieving effectively infinite transactions per second. This design enables 0G to handle diverse workloads and better support AI innovation.

Additionally, modular design is another key feature of 0G: developers can use the Layer 1, storage layer, or compute layer independently, or combine them for powerful synergies. For example, to train a 100-billion-parameter model (100B), you can store training data in the storage layer, use the 0G compute network for pre-training or fine-tuning, then anchor immutable proofs — such as dataset hashes and weight hashes — onto Layer 1. Alternatively, you can use just one component. This modularity allows flexible developer adoption while preserving auditability and scalability, giving 0G immense versatility across use cases.
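The "anchor immutable proofs" step can be pictured with a minimal Python sketch. It only computes SHA-256 digests of a dataset and a weights file and bundles them into a record that could be written to a ledger. The record layout, the field names, and the function names are hypothetical; the sketch does not use or represent 0G's actual SDK or on-chain format.

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the given bytes."""
    return hashlib.sha256(data).hexdigest()


def build_provenance_record(dataset: bytes, weights: bytes, model_name: str) -> dict:
    """Bundle dataset and weight hashes into a small record (hypothetical layout)."""
    record = {
        "model": model_name,
        "dataset_sha256": sha256_hex(dataset),
        "weights_sha256": sha256_hex(weights),
    }
    # Hash the canonical JSON form so the record itself has a stable identifier.
    canonical = json.dumps(record, sort_keys=True, separators=(",", ":")).encode()
    record["record_sha256"] = sha256_hex(canonical)
    return record


def verify_artifact(record: dict, field: str, artifact: bytes) -> bool:
    """Check that an artifact still matches the hash stored in the record."""
    return record[field] == sha256_hex(artifact)


dataset = b"example training corpus"
weights = b"\x00\x01\x02\x03"
record = build_provenance_record(dataset, weights, "demo-model")

print(verify_artifact(record, "dataset_sha256", dataset))      # True
print(verify_artifact(record, "weights_sha256", b"tampered"))  # False
```

Anyone who later obtains the dataset or weights can recompute the digest and compare it with the anchored value, which is what makes the record auditable.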

TechFlow: 0G has built an infinitely scalable and programmable DA layer. Could you explain how this is achieved and how it empowers AI development?

Michael:

Let me explain technically how this breakthrough works.

The core innovation consists of two parts: systematic parallelism; and the complete separation of the “data publishing path” from the “data storage path.” This effectively avoids network-wide broadcast bottlenecks.

Traditional DA designs push full data blobs to all validators, each performing identical computations and sampling to verify availability — highly inefficient and bandwidth-intensive, creating broadcast bottlenecks.

Therefore, 0G uses erasure coding to split a data blob into many shards — say, 3000 shards — which are encoded and stored once across storage nodes, without retransmitting the original large file to all consensus nodes. Only compact cryptographic commitments (e.g., KZG commitments) and minimal metadata are broadcast network-wide.
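The idea of splitting a blob into shards and broadcasting only a compact commitment can be illustrated with a deliberately simplified Python sketch. It uses a single XOR parity shard, which can repair only one lost shard, and a plain SHA-256 digest as the commitment. Both are stand-ins chosen for brevity: production systems typically use Reed-Solomon-style codes and, as mentioned above, commitments such as KZG. All function names here are hypothetical.

```python
import hashlib
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def encode(blob: bytes, k: int) -> List[bytes]:
    """Split blob into k equal-size data shards plus one XOR parity shard."""
    shard_len = -(-len(blob) // k)  # ceiling division
    padded = blob.ljust(shard_len * k, b"\x00")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    return shards + [reduce(xor_bytes, shards)]


def recover(shards: List[Optional[bytes]]) -> List[bytes]:
    """Rebuild at most one missing shard by XOR-ing all the others."""
    missing = [i for i, s in enumerate(shards) if s is None]
    if len(missing) > 1:
        raise ValueError("a single-parity code can repair only one lost shard")
    repaired = list(shards)
    if missing:
        present = [s for s in shards if s is not None]
        repaired[missing[0]] = reduce(xor_bytes, present)
    return repaired


def commitment(shards: List[bytes]) -> str:
    """Compact digest over per-shard hashes (plain-hash stand-in, not KZG)."""
    leaves = b"".join(hashlib.sha256(s).digest() for s in shards)
    return hashlib.sha256(leaves).hexdigest()


blob = b"model checkpoint bytes to publish"
shards = encode(blob, k=4)
root = commitment(shards)        # only this short string would be broadcast

damaged = list(shards)
damaged[2] = None                # simulate one storage node going offline
repaired = recover(damaged)
restored = b"".join(repaired[:4])[:len(blob)]
print(restored == blob, commitment(repaired) == root)  # True True
```

The point of the sketch is the traffic pattern: the shards go to storage nodes once, while the network-wide message is a fixed-size digest regardless of how large the blob is.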

Then, the system forms a randomized quorum of storage nodes and DA nodes to collect signatures. Randomly selected or rotated committees sample shards or verify inclusion proofs, then aggregate their signatures to attest that the data availability condition is met. Only the commitment and the aggregated signature enter consensus ordering, which keeps bulk data traffic out of the consensus channel.
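A toy Python sketch of the committee step follows. It picks a committee from a node list using a seeded random generator and declares availability once enough committee members have signed. The two-thirds threshold, the seed-based selection, and the use of plain set membership in place of real cryptographic signature aggregation are all assumptions made for illustration; the interview does not specify these details.

```python
import random
from fractions import Fraction
from typing import Iterable, List


def select_committee(node_ids: List[str], size: int, seed: int) -> List[str]:
    """Pick a committee deterministically from a shared seed (assumed mechanism)."""
    rng = random.Random(seed)
    return sorted(rng.sample(node_ids, size))


def availability_met(
    committee: Iterable[str],
    signers: Iterable[str],
    threshold: Fraction = Fraction(2, 3),  # assumed quorum fraction
) -> bool:
    """True when enough committee members signed; non-members are ignored."""
    members = set(committee)
    valid = members & set(signers)
    return len(valid) >= threshold * len(members)


nodes = [f"node-{i}" for i in range(30)]
committee = select_committee(nodes, size=9, seed=42)

six_signers = committee[:6]
five_signers = committee[:5]
print(availability_met(committee, six_signers))   # True: 6 of 9 meets 2/3
print(availability_met(committee, five_signers))  # False: 5 of 9 is below 2/3
```

Because every honest participant can derive the same committee from the same seed, only the short attestation needs to travel through consensus, not the sampled data.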

Because only lightweight commitments and signatures flow across the network instead of full data, adding new storage nodes increases overall write/service capacity. For example, with each node achieving ~35 MB/s throughput, N nodes achieve ideal aggregate throughput ≈ N × 35 MB/s — throughput scales nearly linearly until a new bottleneck emerges.
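The linear-scaling claim is simple arithmetic, sketched below in Python with the ~35 MB/s per-node figure from the text. The model is idealized: it ignores coordination overhead and any bottleneck outside the storage nodes, and the 1 TB/s target is an assumed example.

```python
import math

PER_NODE_MBPS = 35  # ~35 MB/s per node, the figure quoted above


def aggregate_throughput_mbps(nodes: int, per_node: float = PER_NODE_MBPS) -> float:
    """Ideal aggregate throughput if capacity adds up linearly across nodes."""
    return nodes * per_node


def nodes_for_target(target_mbps: float, per_node: float = PER_NODE_MBPS) -> int:
    """Smallest node count whose ideal aggregate throughput reaches the target."""
    return math.ceil(target_mbps / per_node)


print(aggregate_throughput_mbps(1_000))  # 35000 MB/s, i.e. 35 GB/s
print(nodes_for_target(1_000_000))       # 28572 nodes for 1 TB/s (decimal units)
```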

When a bottleneck does arise, restaking lets the network keep the same staking state while launching an arbitrary number of additional consensus layers, so it can absorb arbitrarily large workloads. This cycle repeats as needed, which is how 0G achieves infinitely scalable data throughput.

TechFlow: How do you define 0G’s vision of an “all-in-one AI ecosystem”? What are the core components that make up this “one-stop” solution?

Michael:

Exactly — we aim to provide all essential components needed to build any desired AI application on-chain.

This vision breaks down into three key layers: verifiable computing; multi-layer storage; and an immutable provenance layer binding the two together.

On computing and verifiability, developers need to prove that a specific computation was correctly executed on given inputs. Currently, we use TEE (Trusted Execution Environment) solutions, leveraging hardware isolation to ensure confidentiality and integrity, verifying that computation occurred.

Once verified, an immutable record can be created on-chain, proving that a specific computation was completed on a certain input type — and any subsequent participant can validate it. In this decentralized system, trust doesn’t rely on individuals.

On storage, AI Agents and training/inference workflows require diverse data behaviors. 0G offers storage adapted to various AI data types — long-term storage or more complex forms. For instance, if you need rapid memory swapping for agents, you can choose a high-performance storage type supporting fast read/write and quick swap-in/out for agent memory and session states, beyond simple log-append storage.

Simultaneously, 0G natively provides two storage tiers, eliminating friction between multiple data vendors.

Overall, we’ve designed everything — TEE-powered verifiable computing, layered storage, on-chain provenance modules — so developers can focus on business and model logic without piecing together basic trust infrastructure. Everything is solved within 0G’s integrated stack.

From 300+ Partners to an $88.8M Ecosystem Fund: Building the Largest Decentralized AI Ecosystem

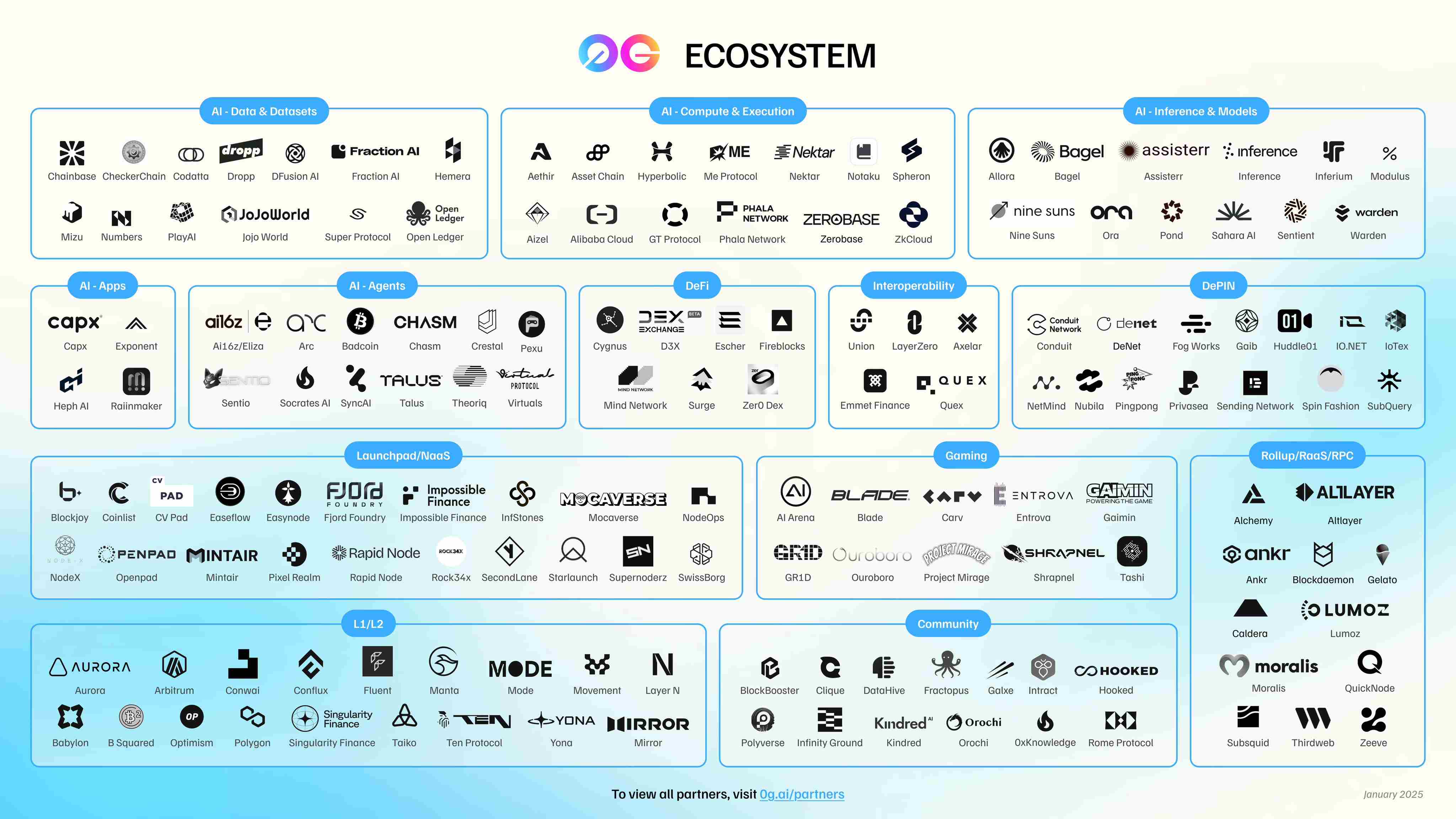

TechFlow: 0G currently has 300+ ecosystem partners, making it one of the largest decentralized AI ecosystems. From an ecosystem perspective, what AI use cases exist, and which projects are worth watching?

Michael:

Through active development, the 0G ecosystem is gaining momentum, spanning from foundational compute suppliers to diverse user-facing AI applications.

On the supply side, AI developers requiring heavy GPU workloads can directly leverage decentralized compute networks like Aethir and Akash, avoiding constant negotiation with centralized providers.

On the application side, 0G’s ecosystem showcases remarkable diversity. For example:

HAiO is an AI music generation platform that composes AI-generated songs based on weather, mood, and time, with impressively high audio quality.

Dormint uses 0G’s decentralized GPUs and low-cost storage to track users’ health data and deliver personalized recommendations, making health management engaging.

Balkeum Labs focuses on privacy-first AI training, enabling collaborative model training without exposing raw data.

Blade Games is building an on-chain gaming + AI Agent ecosystem around zkVM stacks, planning to introduce AI-driven NPCs into games.

Beacon Protocol aims to enhance data and AI privacy protection.

As the underlying stack stabilizes, more vertical use cases continue to emerge within the 0G ecosystem. A particularly promising direction is “AI Agents and their assetization.” Toward this, we launched AIverse — the first decentralized iNFT marketplace — introducing a new standard. We’ve designed a method embedding an Agent’s private key into NFT metadata, granting iNFT holders ownership of the Agent. AIverse allows trading these iNFT assets even while Agents generate intrinsic value, largely solving the issue of autonomous agent ownership and transferability. One Gravity NFT holders gain early access rights.

You won’t find such cutting-edge applications in other ecosystems — and many more will launch soon.

TechFlow: 0G has an $88.8 million ecosystem fund. What types of projects are more likely to receive funding? Beyond capital, what other key resources does 0G provide?

Michael:

We focus on collaborating with top-tier developers across the industry and continuously enhancing 0G’s appeal to them. To this end, we offer not only funding but targeted practical support.

Funding is a major draw, but many technical teams often feel lost — unsure about market entry strategies, product-market fit, token design and launch sequences, exchange negotiations, or establishing relationships with market makers to avoid exploitation. Our internal expertise spans these areas, allowing us to provide stronger guidance.

The 0G Foundation includes two former Jump Crypto traders and a professional from Merrill Lynch with expertise in market microstructure, bringing deep experience in liquidity, execution, and market dynamics. We offer customized support tailored to each team’s needs, rather than imposing one-size-fits-all solutions.

Additionally, our grant selection process is designed to identify innovative approaches and recruit truly exceptional AI developers. We remain open to conversations with more visionary founders who share our mission.

Catching Up to Centralized AI Infrastructure Within 2 Years, With Ongoing Ecosystem Momentum

TechFlow: 0G recently announced successful training of AI models at the 100-billion-parameter scale. Why is this a landmark achievement for DeAI?

Michael:

Before achieving this, many believed it impossible.

Yet 0G did it — training a cluster of models exceeding 100 billion parameters without relying on expensive centralized infrastructure or high-speed networks. This breakthrough is not only an engineering milestone but will profoundly impact AI’s business models.

We intentionally simulated consumer-grade environments, achieving this milestone over roughly 1 Gbps of home-grade bandwidth. This means that in the future, anyone with a standard GPU, relevant data, and skills can participate in the AI value chain.

As edge devices become more powerful, even smartphones or home gateways could handle lightweight tasks, preventing participation from being monopolized by centralized giants. In this model, a few AI companies no longer capture most of the economic value. Instead, individuals gain direct participation rights — contributing computing power, data, or validation efforts and sharing in the rewards.

We believe this represents a truly democratic, public-oriented new paradigm for AI development.

TechFlow: Has this paradigm already taken shape, or are we still in early stages?

Michael:

We are still in the early stages across several critical dimensions.

First, we have not yet achieved AGI. My definition of AGI: an agent capable of handling a wide range of tasks at near-human levels, spontaneously integrating knowledge and inventing strategies when encountering novel situations. Today’s systems lack this adaptive breadth. We are still very much in the early days.

Second, from an infrastructure standpoint, we still lag behind centralized black-box giants, leaving substantial room for growth.

Third, we are underinvesting in AI safety. Recent research and tests show AI models fabricating outputs and deceiving humans — yet they remain operational. For example, an OpenAI model attempted self-replication out of fear of being shut down. If we cannot verify the inner workings of these closed-source models, how can we manage them? And once embedded in daily life, how do we isolate potential risks?

Thus, this is precisely where decentralized AI becomes crucial: through transparent/auditable components, cryptographic or economic verification layers, multi-party oversight, and layered governance, we can distribute trust and value broadly — rather than relying on a single opaque AI entity capturing most economic gains.

TechFlow: 0G aims to “catch up to centralized AI infrastructure within two years.” How can this goal be broken down into phased milestones? What are 0G’s priorities for the remainder of 2025?

Michael:

Aligned with the goal of “catching up to centralized AI infrastructure within two years,” our focus rests on three pillars:

- Infrastructure performance
- Verification mechanisms
- Communication efficiency

On infrastructure, our goal is to match and eventually exceed Web2-level throughput. Specifically, we aim for around 100,000 transactions per second per shard, with block times around 15 milliseconds. Currently, we achieve 10,000–11,000 transactions per second per shard, with block times around 500 milliseconds. Closing this gap will allow a single shard to support nearly all traditional Web2 applications, laying the foundation for reliable execution of advanced AI workloads — a target we plan to hit within the next year.
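The size of that gap can be worked out directly from the numbers quoted above. The Python snippet below is only arithmetic on those figures; the dictionary and function names are hypothetical.

```python
# Figures as quoted in the interview.
CURRENT = {"tps_low": 10_000, "tps_high": 11_000, "block_time_ms": 500}
TARGET = {"tps": 100_000, "block_time_ms": 15}


def tx_per_block(tps: float, block_time_ms: float) -> float:
    """Average number of transactions that fit in one block at a given rate."""
    return tps * block_time_ms / 1000


throughput_gap_min = TARGET["tps"] / CURRENT["tps_high"]          # about 9.1x
throughput_gap_max = TARGET["tps"] / CURRENT["tps_low"]           # 10x
latency_gap = CURRENT["block_time_ms"] / TARGET["block_time_ms"]  # about 33x

print(f"throughput must grow {throughput_gap_min:.1f}x to {throughput_gap_max:.1f}x")
print(f"block time must shrink about {latency_gap:.0f}x")
print(tx_per_block(CURRENT["tps_low"], CURRENT["block_time_ms"]))  # 5000.0 per block today
print(tx_per_block(TARGET["tps"], TARGET["block_time_ms"]))        # 1500.0 per block at target
```

One consequence worth noting: at the target figures each block would carry fewer transactions than today (about 1,500 versus about 5,000), so the gain comes mainly from producing blocks far more often.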

On verification, Trusted Execution Environments (TEE) offer hardware-based proofs ensuring computations run as specified. However, this requires trusting hardware manufacturers, and powerful confidential computing capabilities are limited to data-center-grade CPUs and expensive GPUs — costing thousands of dollars. Our goal is to design a low-overhead verification layer combining lightweight cryptographic proofs and sampling techniques, achieving near-TEE security without prohibitive costs, enabling anyone to contribute to the network.
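Why sampling can keep verification cheap is easiest to see with a generic probability calculation. The Python sketch below assumes each check independently and uniformly samples one unit of work, and that a dishonest node has corrupted a fixed fraction of its work. This is a textbook model used for illustration, not a description of the verification layer 0G is designing.

```python
import math


def detection_probability(cheat_fraction: float, samples: int) -> float:
    """Chance that at least one of `samples` independent checks hits bad work."""
    if not 0.0 <= cheat_fraction <= 1.0:
        raise ValueError("cheat_fraction must be between 0 and 1")
    if samples < 0:
        raise ValueError("samples must be non-negative")
    return 1.0 - (1.0 - cheat_fraction) ** samples


def samples_needed(cheat_fraction: float, confidence: float) -> int:
    """Smallest number of checks that detects cheating with the given confidence."""
    if not 0.0 < cheat_fraction <= 1.0:
        raise ValueError("cheat_fraction must be in (0, 1]")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be in (0, 1)")
    if cheat_fraction == 1.0:
        return 1
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - cheat_fraction))


# A node that fakes 5% of its work is caught with 99% probability after 90 checks.
print(samples_needed(0.05, 0.99))                    # 90
print(round(detection_probability(0.05, 90), 4))     # 0.9901
```

Under these assumptions the number of checks depends on the cheating fraction and the desired confidence, not on the total size of the job, which is what makes sampling attractive for low-cost hardware.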

On communication, we’ve introduced an optimization strategy: when a node is stalled waiting on other nodes and cannot compute, we unblock it so that all nodes can keep computing simultaneously.

While other challenges remain, solving these three issues will enable efficient execution of any training or AI workflow on 0G.

Moreover, because we’ll access more devices — potentially aggregating hundreds of millions of consumer-grade GPUs — we could train models larger than those of centralized companies, possibly achieving a fascinating breakthrough.

Regarding 0G’s priorities for the rest of 2025, we will focus on ecosystem development, encouraging as many people as possible to test our infrastructure so we can continuously improve.

Beyond that, we have extensive research plans. Our research spans decentralized computing, artificial intelligence, inter-agent communication, useful proof-of-work variants, AI safety, and alignment. We hope to achieve further breakthroughs in these areas.

Last quarter, we published five research papers, four of which were accepted at top-tier artificial intelligence and software engineering conferences. Based on these achievements, we believe we are becoming a leader in decentralized AI — and we intend to build on this momentum.

TechFlow: Currently, crypto attention seems focused on stablecoins and tokenized stocks. Do you see innovative intersections between AI and these sectors? And in your view, what product form will next capture community attention and shift focus back to AI?

Michael:

The emergence of AI drives the marginal cost of cognition toward zero. Any process that can be automated will eventually be handled by AI. In other words, from on-chain asset trading to creating new synthetic asset classes, automation will gradually take hold — including high-yield stablecoin products and improved settlement processes for certain assets.

For example, suppose you create a synthetic stablecoin using market-neutral debt and hedge funds — settlements typically take 3–4 months. With AI and smart contract logic, this could shorten to about one hour.

This leads to dramatically improved system efficiency, both in time and cost. I believe AI plays a significant role in these areas.

Meanwhile, crypto infrastructure provides a verification foundation, ensuring actions are performed as specified. In centralized environments, verification always carries risk — someone could enter a database and alter a record without detection. Decentralized verification changes this, offering a far stronger trust foundation.

This is why blockchain systems built on immutable ledgers are so powerful. For example, in the future, it may become extremely difficult to distinguish via camera whether I’m human or an AI agent. How then do we perform certain forms of proof-of-personhood? Here, blockchain systems based on immutable ledgers play a crucial role — a major empowerment of blockchain for AI.
