AI tool from BitsLab discovers and helps fix high-risk vulnerability in Bluefin

TechFlow Selected


Bluefin's practice validates BitsLab's core argument: trustworthy Web3 security must be "evidence-based" (RAG), "multi-layer reviewed" (multi-level verification), and "deeply structural" (static analysis + agent collaboration).

Author: BitsLab

As Web3 protocols grow more complex, auditing code written in Move, a language designed with asset security in mind, has become harder, in part because public data and research on Move remain scarce. To address this, BitsLab has built BitsLab AI, a multi-layered AI security toolset. It is grounded in an expert-curated, domain-specific dataset. It combines RAG (Retrieval-Augmented Generation), multi-level automated review, and a cluster of specialized AI agents that operate on top of deterministic static analysis, providing deep automation support for audits.

In the public audit of Bluefin’s perpetual contract DEX, BitsLab AI identified four issues, including one high-risk logic flaw, which the Bluefin team has since fixed.

1) Why AI Now: The Shift in On-Chain Security Paradigms

The paradigm of on-chain security and digital asset protection is undergoing a fundamental transformation. Thanks to major advances in foundation models, today's large language models (LLMs) and AI agents now possess an early but powerful form of intelligence. Given well-defined context, these models can autonomously analyze smart contract code to identify potential vulnerabilities. This has driven rapid adoption of AI-assisted tools, such as conversational interfaces and agent-integrated IDEs. These tools are increasingly standard components in the workflows of smart contract auditors and Web3 security researchers.

Despite its promise, this first wave of AI integration remains constrained by critical limitations that keep it from meeting the reliability demands of high-stakes blockchain environments:

Shallow, human-dependent auditing: Current tools act as “co-pilots” rather than autonomous auditors. They lack the ability to understand the overall architecture of complex protocols and rely heavily on continuous human guidance. As a result, they cannot perform the deep, automated analysis required to ensure the security of interconnected smart contracts.

High noise-to-signal ratio due to hallucinations: The reasoning process of general-purpose LLMs is plagued by “hallucinations.” In security contexts, this results in numerous false positives and redundant alerts, forcing auditors to waste valuable time debunking fictional vulnerabilities instead of addressing real, potentially catastrophic on-chain threats.

Limited understanding of domain-specific languages: An LLM's performance is directly tied to its training data. Move is a specialized language designed specifically for asset security, and public resources on complex Move codebases and documented vulnerabilities are scarce. The result is a superficial understanding of Move’s unique security model, including its core principles of resource ownership and memory management.

2) BitsLab’s AI Security Framework (Reliability at Scale)

Given the key shortcomings of general-purpose AI, our framework employs a multi-layered, security-first architecture. It is not a single model but an integrated system where each component is designed to address specific challenges in smart contract auditing—from data integrity to deep automated analysis.

1. Foundation Layer: Expert-Curated Domain-Specific Dataset

The predictive power of any AI is rooted in its data. Our framework’s superior performance begins with our proprietary knowledge base—a fundamentally different foundation compared to the generic datasets used to train public LLMs. Our advantages include:

- Broad coverage in niche domains: We maintain a large, highly specialized dataset meticulously collected and focused on high-risk areas such as DeFi lending, NFT markets, and Move-based protocols. This provides unparalleled contextual depth for identifying domain-specific vulnerabilities.

- Expert curation and cleaning: Our dataset is not merely scraped; it is continuously cleaned, verified, and enriched by smart contract security experts. This process includes annotating known vulnerabilities, tagging secure coding patterns, and filtering out irrelevant noise, creating a high-fidelity “ground truth” for model training. This human-in-the-loop curation ensures our AI learns from the highest-quality data, significantly boosting accuracy.
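To make the curation step more concrete, here is a minimal Python sketch of an expert-annotated record and a cleaning pass. The `CuratedSample` fields, the tag and domain names, and the filtering rules (keep only expert-verified entries, drop whitespace-insensitive duplicates) are hypothetical illustrations, not BitsLab's actual schema or pipeline.

```python
import hashlib
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class CuratedSample:
    """Hypothetical record in an expert-curated vulnerability dataset."""
    source_code: str
    language: str                       # e.g. "move"
    domain: str                         # e.g. "lending", "perp-dex"
    vulnerability: Optional[str]        # expert annotation; None if judged safe
    tags: frozenset = frozenset()       # secure-coding patterns or risk tags
    expert_verified: bool = False

def fingerprint(sample: CuratedSample) -> str:
    """Whitespace-insensitive hash, used to detect duplicate code snippets."""
    normalized = " ".join(sample.source_code.split())
    return hashlib.sha256(normalized.encode()).hexdigest()

def clean(samples: list) -> list:
    """Keep expert-verified, non-empty samples, dropping duplicates."""
    seen = set()
    kept = []
    for s in samples:
        if not s.expert_verified or not s.source_code.strip():
            continue  # unverified or empty entries are treated as noise
        fp = fingerprint(s)
        if fp in seen:
            continue  # same code (modulo whitespace) already kept
        seen.add(fp)
        kept.append(s)
    return kept

a = CuratedSample("public fun f() {}", "move", "lending", None, expert_verified=True)
b = CuratedSample("public fun  f()  {}", "move", "lending", None, expert_verified=True)
c = CuratedSample("public fun g() {}", "move", "perp-dex", "overflow", expert_verified=False)
print(len(clean([a, b, c])))  # 1
```

In this sketch, `b` is dropped as a whitespace-only duplicate of `a`, and `c` is dropped because no expert has verified it yet.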

2. Accuracy: Eliminating Hallucinations via RAG and Multi-Level Review

To tackle the critical issues of hallucinations and false positives, we implement a sophisticated dual system that anchors AI reasoning in verifiable facts:

- Retrieval-Augmented Generation (RAG): Our AI does not rely solely on internal knowledge; before drawing conclusions, it queries a live knowledge base. The RAG system retrieves up-to-date vulnerability research, established security best practices (e.g., SWC Registry, EIP standards), and code examples from similar, successfully audited protocols. This forces the AI to “cite its sources,” ensuring conclusions are based on existing evidence rather than probabilistic guesses.

- Multi-level review model: Every potential issue flagged by generative AI undergoes a rigorous internal validation pipeline. This includes an automated review mechanism composed of specialized models: a cross-referencing model compares findings against RAG data, a fine-tuned “auditor” model evaluates technical validity, and a “priority” model assesses potential business impact. This process systematically filters out low-confidence results and hallucinations before they reach human auditors.
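The dual system above can be pictured as a retrieve-then-gate pipeline. The Python sketch below is a simplified illustration under stated assumptions:

- Retrieval uses plain bag-of-words cosine similarity instead of a learned embedding model.
- The three review stages (cross-referencing, auditor, priority) are stand-in checks. Their scores, thresholds, and knowledge-base entries are hypothetical, not BitsLab's actual models or data.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass

def tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z_]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, kb: dict, k: int = 1) -> list:
    """Return the k knowledge-base entries most similar to the query as (id, score)."""
    q = tokens(query)
    scored = [(doc_id, cosine(q, tokens(text))) for doc_id, text in kb.items()]
    return sorted(scored, key=lambda p: p[1], reverse=True)[:k]

@dataclass
class Finding:
    title: str
    description: str
    validity: float  # hypothetical "auditor" confidence in [0, 1]
    impact: int      # hypothetical "priority" score; higher means more severe

def review(findings, kb, min_evidence=0.2, min_validity=0.5):
    """Multi-level gate: evidence check, validity check, then impact ordering."""
    kept = []
    for f in findings:
        evidence = retrieve(f.description, kb)
        # Stage 1 (cross-referencing): drop findings with no supporting evidence.
        if not evidence or evidence[0][1] < min_evidence:
            continue
        # Stage 2 (auditor): drop findings with low technical confidence.
        if f.validity < min_validity:
            continue
        kept.append((f, evidence[0][0]))  # keep the cited source id
    # Stage 3 (priority): most severe first.
    return sorted(kept, key=lambda pair: pair[0].impact, reverse=True)

kb = {
    "KB-1": "signed number comparison with mixed sign returns wrong result",
    "KB-2": "missing access control on admin function",
}
findings = [
    Finding("lt mixed sign", "lt returns wrong result when comparing numbers of different sign", 0.9, 3),
    Finding("unsupported claim", "gas griefing via unbounded loop in oracle", 0.8, 5),
    Finding("low confidence", "missing access control on admin function", 0.3, 4),
    Finding("access control", "admin function missing access control", 0.9, 5),
]
result = review(findings, kb)
print([(f.title, src) for f, src in result])
# [('access control', 'KB-2'), ('lt mixed sign', 'KB-1')]
```

Two findings are filtered out. The "unsupported claim" has no matching evidence, so it is dropped much as a hallucination would be. The "low confidence" finding fails the validity gate. Each surviving finding carries the id of the entry it cites.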

3. Depth: Deep Automation via Static Analysis and AI Agent Collaboration

To achieve context-aware automation beyond simple “co-pilot” tools, we adopt a hybrid approach combining deterministic analysis with intelligent agents:

- Static analysis as foundation: Our process begins with comprehensive static analysis to deterministically map the entire protocol. This generates complete control flow graphs, identifies all state variables, and traces function dependencies across contracts. This mapping provides our AI with a foundational, objective “worldview.”

- Context management: The framework maintains a rich, holistic context of the entire protocol. It understands not just individual functions but how they interact. This critical capability enables analysis of cascading state changes and identification of complex cross-contract vulnerabilities.

- AI agent collaboration: We deploy a suite of specialized AI agents, each trained for a specific task. The “Access Control Agent” hunts for privilege escalation flaws; the “Reentrancy Agent” focuses on unsafe external calls; the “Arithmetic Logic Agent” scrutinizes all mathematical operations for edge cases like overflows or precision errors. These agents collaborate using a shared context map, enabling detection of complex attack vectors that a single, monolithic AI would miss.
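As a rough illustration of how a deterministic map can give agents a shared "worldview," the Python sketch below records, for each function, the functions it calls and the state it writes. From that map it computes:

- every state variable an entry point can transitively modify (cascading state changes);
- every function whose call graph reaches a given helper.

The module and function names are hypothetical. A real static analyzer would extract this map from the code itself rather than from a hand-written dictionary.

```python
from dataclasses import dataclass, field

@dataclass
class FunctionInfo:
    calls: set = field(default_factory=set)   # callees, as "module::function"
    writes: set = field(default_factory=set)  # state variables mutated directly

# Hypothetical context map for a toy perpetual DEX (illustrative names only).
CONTEXT = {
    "exchange::trade": FunctionInfo(calls={"position::update", "margin::check"},
                                    writes={"exchange::fills"}),
    "position::update": FunctionInfo(calls={"signed_number::add"}, writes={"position::size"}),
    "margin::check": FunctionInfo(calls={"signed_number::lt"}),
    "signed_number::add": FunctionInfo(),
    "signed_number::lt": FunctionInfo(),
    "admin::set_fee": FunctionInfo(writes={"exchange::fee"}),
}

def reachable(entry: str, ctx: dict) -> set:
    """All functions reachable from entry via calls (iterative DFS, cycle-safe)."""
    seen, stack = set(), [entry]
    while stack:
        fn = stack.pop()
        if fn in seen or fn not in ctx:
            continue
        seen.add(fn)
        stack.extend(ctx[fn].calls)
    return seen

def cascading_writes(entry: str, ctx: dict) -> set:
    """Every state variable that calling entry may modify, directly or indirectly."""
    return set().union(*(ctx[fn].writes for fn in reachable(entry, ctx)))

def callers_of(target: str, ctx: dict) -> set:
    """Functions whose call graph reaches target (which flows depend on it)."""
    return {fn for fn in ctx if fn != target and target in reachable(fn, ctx)}

print(sorted(cascading_writes("exchange::trade", CONTEXT)))
# ['exchange::fills', 'position::size']
```

An agent focused on comparison logic could call `callers_of("signed_number::lt", CONTEXT)` to see which higher-level flows inherit any defect in that helper. This is the kind of cross-module reasoning a shared context map is meant to enable.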

This powerful combination allows our framework to automate the discovery of deep architectural flaws, truly functioning as an autonomous security partner.

3) Case Study: Uncovering a Critical Logic Flaw in Bluefin PerpDEX

To validate our framework’s multi-layered architecture in a real-world scenario, we applied it to Bluefin’s public security audit. Bluefin is a perpetual contract decentralized exchange with a complex codebase. This audit demonstrates how static analysis, specialized AI agents, and RAG-based fact verification work together to uncover vulnerabilities that traditional tools miss.

Analysis Process: Operation of the Multi-Agent System

The discovery of this high-risk vulnerability was not a singular event but the result of systematic collaboration among the framework’s integrated components:

- Context Mapping and Static Analysis: The process began by ingesting Bluefin’s complete codebase. Our static analysis engine deterministically mapped the entire protocol, while foundational AI agents provided a holistic overview, pinpointing modules related to core financial logic.

- Deployment of Specialized Agents: Based on this initial analysis, the system automatically deployed a series of stage-specific agents, each with its own audit prompts and vector database. In this case, the issue was detected by the agent focused on logical correctness and boundary-condition vulnerabilities (e.g., overflow, underflow, and comparison errors).

- RAG-Based Analysis and Review: The Arithmetic Logic Agent initiated its analysis. Leveraging Retrieval-Augmented Generation (RAG), it queried our expert-curated knowledge base. It referenced best-practice implementations in Move and compared Bluefin’s code against similar logic flaws documented in other financial protocols. This retrieval process highlighted that signed number comparisons are a classic source of boundary errors.
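The deployment step can be sketched in three parts:

- a registry of agent specifications, each carrying its own prompt and its own reference store;
- a dispatcher that selects agents using tags from the initial analysis;
- a helper that assembles an agent's prompt.

Everything here is hypothetical: the `AgentSpec` fields, the tag names, and the prompt text. For brevity, each agent's vector database is reduced to a plain list of reference notes.

```python
from dataclasses import dataclass, field

@dataclass
class AgentSpec:
    name: str
    prompt: str     # agent-specific audit instructions
    triggers: set   # module tags that activate this agent
    knowledge: list = field(default_factory=list)  # stand-in for a per-agent vector store

AGENTS = [
    AgentSpec("Access Control Agent", "Look for privilege escalation.", {"admin", "roles"}),
    AgentSpec("Arithmetic Logic Agent",
              "Check arithmetic and comparisons for boundary errors.",
              {"math", "signed"},
              ["Sign-magnitude comparisons must handle mixed signs explicitly."]),
]

def dispatch(module_tags: dict, agents=AGENTS) -> dict:
    """Map each module to the names of agents whose triggers overlap its tags."""
    return {
        module: [a.name for a in agents if a.triggers & tags]
        for module, tags in module_tags.items()
    }

def build_prompt(agent: AgentSpec, module: str, code: str) -> str:
    """Combine the agent's own instructions, its reference notes, and the code under review."""
    notes = "\n".join(f"- {k}" for k in agent.knowledge) or "- (none)"
    return f"{agent.prompt}\nReference notes:\n{notes}\nModule {module}:\n{code}"

plan = dispatch({"signed_number": {"math", "signed"}, "admin": {"admin"}, "events": set()})
print(plan)
```

Keeping prompts and reference stores per agent, as the article describes, lets each agent retrieve only material relevant to its specialty. A module with no matching tags is simply not assigned an agent in this sketch.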

Discovery: A High-Risk Vulnerability in Core Financial Logic

Using our framework, we ultimately identified four distinct issues, one of which was a high-risk logic flaw embedded within the protocol’s financial computation engine.

The vulnerability resided in the lt (less-than) function within the signed_number module. This function is crucial for financial comparisons such as position sorting and profit-and-loss (PnL) calculations. The flaw could lead to severe financial discrepancies, incorrect liquidations, and failure of the fair ordering mechanism at the heart of the DEX, directly threatening the protocol’s integrity.

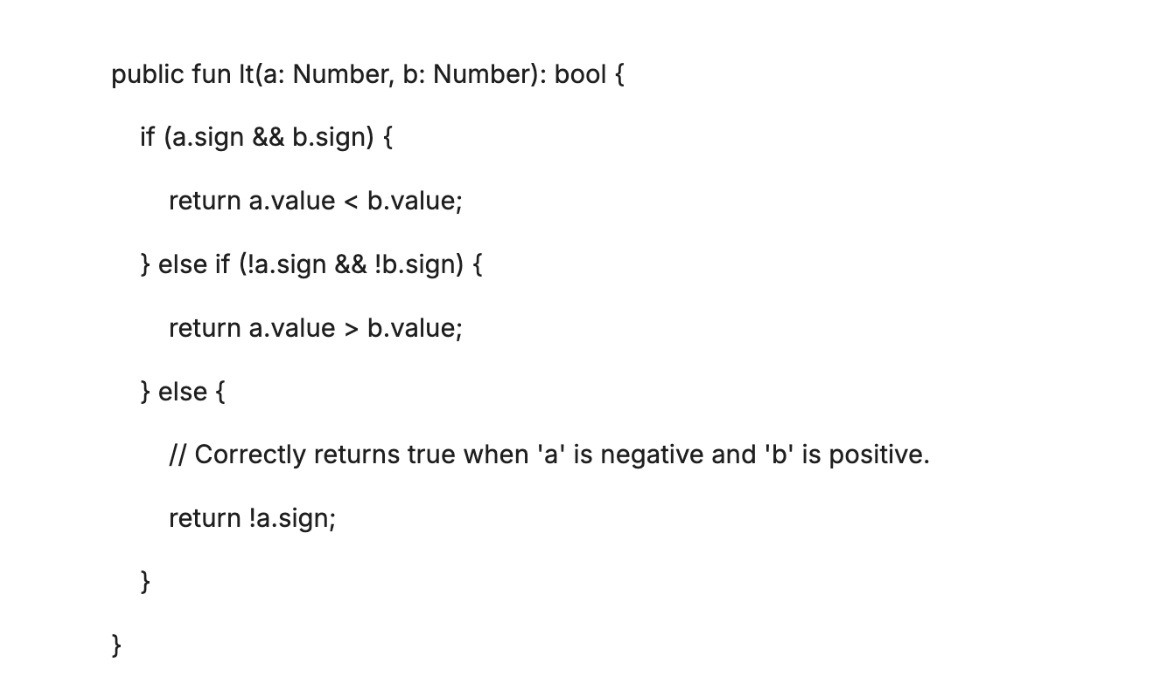

The root cause was faulty logic when comparing negative and positive numbers. The signed_number module represents values using value: u64 and sign: bool (true for positive, false for negative). The lt function contained a defect in its else branch, which handles comparisons between numbers of differing signs; there, it returned a.sign. When a is negative (!a.sign) and b is positive (b.sign), this returns false, wrongly reporting that the negative number is not less than the positive one. In the mirrored case (a positive, b negative), it returns true, effectively asserting that “positive is less than negative.”
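To make the defect concrete, here is a small Python re-creation of the comparison logic as described above. It is not Bluefin's Move source. The same-sign branch is an assumption, since only the else branch is described, and Python integers stand in for u64. The sketch reproduces the faulty else branch and shows how it distorts an ordering such as a position or PnL ranking.

```python
from dataclasses import dataclass
from functools import cmp_to_key

@dataclass(frozen=True)
class Number:
    value: int  # magnitude (u64 in the Move module)
    sign: bool  # True = positive, False = negative

def lt_buggy(a: Number, b: Number) -> bool:
    if a.sign == b.sign:
        # Assumed same-sign branch: for negatives, the larger magnitude is smaller.
        return a.value < b.value if a.sign else a.value > b.value
    # Faulty else branch as described in the audit: returns a.sign.
    return a.sign

def to_int(n: Number) -> int:
    return n.value if n.sign else -n.value

def sort_with(lt, nums):
    """Sort using a less-than function, as a position or PnL ranking might."""
    def cmp(x, y):
        return -1 if lt(x, y) else (1 if lt(y, x) else 0)
    return sorted(nums, key=cmp_to_key(cmp))

minus_three, plus_five = Number(3, False), Number(5, True)
print(lt_buggy(minus_three, plus_five))  # False, although -3 < 5
print([to_int(n) for n in sort_with(lt_buggy, [Number(5, True), Number(3, False), Number(1, True)])])
# [1, 5, -3]: every positive value is ranked below every negative one
```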

Fix:

Correcting this high-risk issue requires a simple yet essential change to the else branch of the lt function: it must return !a.sign instead of a.sign. This ensures that, whenever the signs differ, a negative number is always evaluated as less than a positive one.
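Continuing the same hypothetical Python re-creation (not Bluefin's actual patch), the corrected else branch returns `not a.sign`. One quick way to gain confidence in a comparison fix like this is to check it exhaustively against native integer comparison over a small range that covers both signs. The sketch assumes zero is always stored with sign set to True. A sign-magnitude encoding otherwise admits two zeros, which this check would not treat as equal.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Number:
    value: int  # magnitude (u64 in the Move module)
    sign: bool  # True = positive, False = negative

def from_int(n: int) -> Number:
    # Assumption: zero is canonicalized as positive (sign=True).
    return Number(abs(n), n >= 0)

def lt_fixed(a: Number, b: Number) -> bool:
    if a.sign == b.sign:
        # Same sign: compare magnitudes; for negatives the larger magnitude is smaller.
        return a.value < b.value if a.sign else a.value > b.value
    # Different signs: a < b exactly when a is the negative operand.
    return not a.sign

def check_against_ints(lo: int = -5, hi: int = 5) -> bool:
    """Compare lt_fixed with Python's < for every pair in [lo, hi]."""
    return all(
        lt_fixed(from_int(x), from_int(y)) == (x < y)
        for x in range(lo, hi + 1)
        for y in range(lo, hi + 1)
    )

print(check_against_ints())  # True
```

The same harness, pointed at the buggy version, would fail on every mixed-sign pair. That makes a small differential check like this a cheap regression test for sign-handling code.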


Result: The Bluefin development team was notified immediately with this detailed report and promptly implemented the fix.

4) What BitsLab AI Means for Web3 Teams

- Fewer false alarms: RAG + multi-level review drastically reduces hallucinations and false positives.

- Deeper coverage: Static analysis maps + agent collaboration detect systemic risks across contracts, edge conditions, and logic layers.

- Business-focused prioritization: Impact-based triaging guides engineering efforts, ensuring time is spent on the “most critical issues.”

5) Conclusion: BitsLab AI-Powered Security as the New Baseline

The Bluefin case validates BitsLab’s core thesis: trustworthy Web3 security must be “evidence-based” (via RAG), “multi-gated” (via layered review), and “deeply structural” (via static analysis + agent collaboration).

This approach is especially critical when interpreting and verifying the underlying logic of decentralized finance and is a necessary condition for sustaining scalable trust in protocols.

In the fast-evolving Web3 landscape, contract complexity continues to rise. Meanwhile, public research and data on Move remain relatively scarce, making “security assurance” even more challenging. BitsLab AI was built precisely for this purpose. It combines expert-curated domain knowledge, verifiable retrieval-augmented reasoning, and context-aware automated analysis to identify and mitigate Move contract risks end to end, bringing sustainable, intelligent automation to Web3 security.
