YC AI Startup School Day 2: Nadella, Ng, and Cursor's CEO Take the Stage

The best use of AI is to increase iteration speed, not to chase the "magic" of one-click generation.

Compiled by: Founder Park

On the second day of YC AI Startup School, seven heavyweight guests took the stage: Satya Nadella (CEO of Microsoft), Andrew Ng (Founder of DeepLearning.AI), Chelsea Finn (Co-founder of Physical Intelligence), Michael Truell (CEO & Co-founder of Cursor), Dylan Field (CEO & Co-founder of Figma), Andrej Karpathy (Former Director of AI at Tesla), and Sriram Krishnan (Senior AI Policy Advisor at the White House).

They shared a wealth of insights on AI technology and entrepreneurship, including:

- Don’t anthropomorphize AI. AI is not human; it’s a tool. The next frontier lies in giving it memory, tools, and the ability to act, but this remains fundamentally different from human reasoning.

- In the future, agents will become the new computers. This future depends not just on technical precision, but also on user trust and seamless interaction.

- Products with feedback loops, such as agentic AI, outperform one-off tools. Continuous interaction improves outcomes, and iteration enables compound performance gains.

- Prototyping is now 10x faster, and production software development is 30–50% more efficient. Leverage this advantage by using real-time user feedback to reduce market risk.

- Code is no longer a scarce core asset. With rapid prototyping tools and AI, code can be generated easily. What truly matters is the value that the code delivers.

- Real-world data is irreplaceable. Synthetic and simulated data help, but real data remains critical, especially for complex visual and physical tasks.

- The best use of AI is accelerating iteration, not chasing “magic” one-click generation. Designers and product managers must now actively contribute to AI evaluation.

We covered Andrej Karpathy’s talk in a previous article (“Day One of YC AI Startup School Lights Up with Andrej Karpathy’s Talk”). Setting his talk and Sriram Krishnan’s aside, here are the key takeaways from the other five speakers.

Microsoft CEO: Satya Nadella

1. Platform compounding effect: AI didn't emerge from nothing—it builds on decades of cloud infrastructure capable of supporting large-scale model training. Each platform generation lays the foundation for the next.

2. Models are infrastructure, products are ecosystems: Foundation models are like next-gen SQL databases—a form of infrastructure. Real products aren't the models themselves, but the entire ecosystem built around them: feedback loops, tool integrations, and user interactions.

3. Economic impact as the benchmark: Satya’s North Star metric for AI’s value is: “Does it create economic surplus?” If a technology doesn’t drive GDP growth, it’s not transformative.

4. The boundary between compute and intelligence: Intelligence grows logarithmically with compute investment. But major breakthroughs ahead won’t come just from scale—they’ll require paradigm shifts, akin to the next “scaling law moment.”

5. Energy and social consent: Scaling AI demands more energy and societal permission. To earn that permission, we must demonstrate tangible, positive societal benefits that justify its costs.

6. AI’s real bottleneck is change management: In traditional industries, the obstacle isn’t technology, but entrenched workflows. True transformation requires rethinking how work gets done—not just adding AI into existing processes.

7. Convergence of job roles: On platforms like LinkedIn, traditional roles such as design, frontend, and product are merging, creating demand for “full-stack” talent. AI is accelerating this trend by enabling more people to develop cross-disciplinary skills.

8. Don’t underestimate repetitive work: Knowledge work contains massive amounts of repetitive manual labor. AI’s best application is eliminating these “invisible friction costs” and freeing human creativity.

9. Stay open about the future: Even Satya didn’t anticipate how fast “test-time compute” and reinforcement learning would advance. Don’t assume we’ve seen the final form of AI—many more breakthroughs likely lie ahead.

10. Don’t anthropomorphize AI: AI is not human. It’s a tool. The next frontier is equipping it with memory, tools, and action-taking capabilities—but this differs fundamentally from human reasoning.

11. The future of development: AI won’t replace developers; it will become their co-pilot. VS Code is a canvas for collaboration with AI. Software engineering will shift from writing code to system design and quality assurance.

12. Responsibility and trust are non-negotiable: AI doesn’t absolve human accountability. Companies remain legally responsible for their products’ behavior. That’s why privacy, security, and sovereignty must stay central.

13. Trust comes from practical value: Trust stems from utility, not rhetoric. Satya cited a chatbot deployed for Indian farmers—visible, tangible help is the foundation of trust.

14. From voice to agents: Microsoft’s AI journey began with speech technology in 1995. Today, the strategic focus has shifted to fully functional “agents” integrating speech, vision, and ubiquitous ambient computing devices.

15. Agents are the future computer: Satya’s long-term vision: “Agents will become the next generation of computers.” This future hinges not only on technical accuracy but also on user trust and seamless interaction.

16. Leadership insight: Start at the bottom, but aim high. Learn how to build a team, not just a product.

17. The kind of people Satya values: Those who simplify complexity, bring clarity, energize teams, and thrive under tight constraints to solve hard problems.

18. Favorite interview question: “Tell me about a problem you didn’t know how to solve—and how you figured it out.” He looks for curiosity, adaptability, and perseverance.

19. Potential of quantum computing: The next disruptive leap may come from quantum. Microsoft is focused on developing “error-corrected qubits,” which could enable unprecedented precision in simulating the natural world.

20. Advice to young people: Don’t wait for permission. Build tools that empower others. He often asks himself: “What can we create that helps others create?”

21. Favorite products: VS Code and Excel—because they give people superpowers.
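Point 4 above says intelligence grows logarithmically with compute. As a rough illustration only, that claim could be written as follows. The symbols $I$ (capability), $C$ (compute), $a$, and $b$ are assumptions introduced here for clarity, not something Satya stated:

$$
I(C) = a + b \log_{10} C, \qquad b > 0
$$

$$
I(10C) - I(C) = b \log_{10}(10C) - b \log_{10} C = b
$$

Under this assumed form, every tenfold increase in compute buys the same fixed increment $b$. That is one way to read why gains from scale alone become progressively more expensive, and why the next big step may need a paradigm shift.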

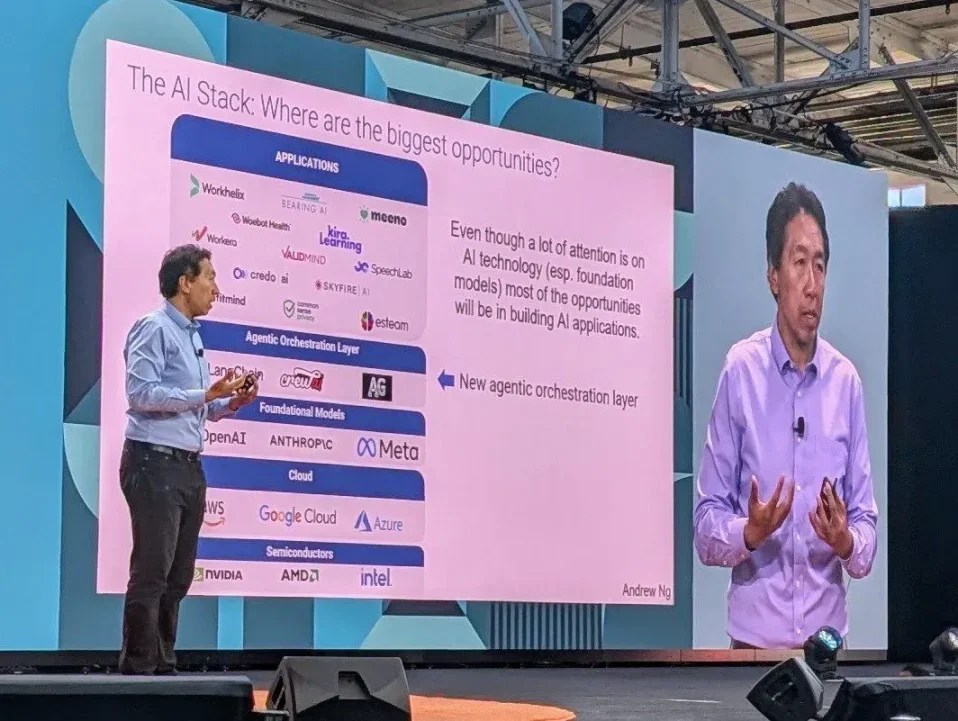

DeepLearning.AI Founder: Andrew Ng

1. Execution speed determines success: The best predictor of startup success is the speed of building, testing, and iterating. Speed creates compounding learning—and AI accelerates this exponentially.

2. Most opportunities are at the application layer: The biggest returns today aren’t from building new models, but from applying existing models to valuable, user-facing scenarios—the sweet spot founders should target.

3. Agentic AI beats one-off tools: Products with feedback loops—like Agentic AI—outperform tools that perform single tasks. Continuous interaction improves results, and iteration drives compound gains.

4. The “orchestration layer” is emerging: Between foundation models and applications, a new middle layer is forming—agent orchestration—that enables complex, multi-step workflows across tools and data sources.

5. The more specific, the faster the execution: The fastest way to move is to start with a concrete idea—one so detailed that engineers can begin coding immediately. Great specifics often come from domain experts with intuitive clarity.

6. Beware the trap of “grand narratives”: Abstract goals like “AI for healthcare” sound ambitious but often slow execution. Real efficiency gains come from micro-tools like “automating MRI appointments.”

7. Be willing to pivot—if you start with clarity: If early data shows your idea isn’t working, a well-defined starting point makes pivoting easier. Knowing exactly what you’re testing allows rapid redirection after failure.

8. Use feedback loops to de-risk: Prototyping is now 10x faster, and production development is 30–50% more efficient. Use this edge—get real-time user feedback to minimize market risk.

9. Try many things, don’t chase perfection: Don’t polish your first version. Build 20 rough prototypes and see which ones stick. Speed of learning trumps refinement.

10. Move fast and take responsibility: Andrew redefines Silicon Valley’s old motto: Not “move fast and break things,” but “move fast and take responsibility.” Accountability is the bedrock of trust.

11. Code is losing its scarcity value: Code is no longer a rare, core asset. With rapid prototyping and AI, code is easy to generate. What matters is the value it creates.

12. Technical architecture is reversible: In the past, choosing an architecture was a one-way door. Now it’s two-way—switching costs are far lower. This flexibility encourages bolder experiments and faster learning.

13. Everyone should learn programming: The claim that “you don’t need to learn to code” is misleading. When we moved from assembly to high-level languages, similar fears arose. AI lowers the barrier—more people should gain coding skills.

14. Domain knowledge enhances AI: Deep understanding of a field makes you better at using AI. Art historians write better image prompts. Doctors shape better health AI. Founders should combine domain expertise with AI fluency.

15. Product managers are now the bottleneck: The new constraint isn’t engineering—it’s product management. One of Andrew’s teams even proposed a 2:1 ratio of PMs to engineers to accelerate feedback and decision-making.

16. Engineers need product sense: Engineers with product intuition move faster and build better products. Technical skill alone isn’t enough—developers must deeply understand user needs.

17. Get feedback as quickly as possible: Andrew’s preferred feedback hierarchy (fastest to slowest): internal dogfooding → friends → strangers → small release to 1,000 users → global A/B testing. Founders should climb this ladder rapidly.

18. Deep AI knowledge remains a moat: AI literacy isn’t widespread yet. Those who truly understand AI’s technical foundations still have a huge advantage—they innovate smarter, faster, and more independently.

19. Hype ≠ truth: Beware narratives that sound impressive but serve mainly for fundraising or status. Terms like AGI, extinction, and infinite intelligence are often hype signals—not impact indicators.

20. Safety lies in usage, not the tech: “AI safety” is often misunderstood. Like electricity or fire, AI isn’t inherently good or bad—it depends on how it’s used. Safety is about application, not the tool itself.

21. The only thing that matters: Do users love it? Don’t obsess over model cost or performance benchmarks. The only question that counts: Are you building something users truly love and keep using?

22. AI in education is still experimental: Companies like Kira Learning are running many trials, but the ultimate form of AI in education remains unclear. We’re still in the early phase of transformation.

23. Watch out for doomerism and regulatory capture: Excessive fear of AI is being used to justify regulations that protect incumbent businesses. Be skeptical of “AI safety” narratives that primarily benefit established players.

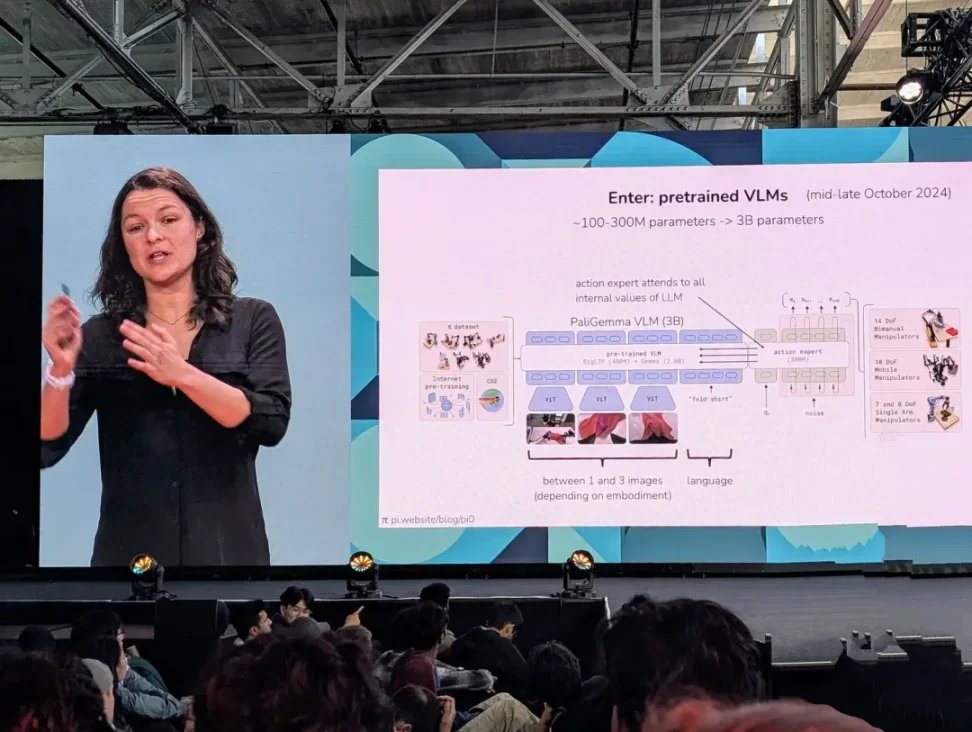

Physical Intelligence Co-founder: Chelsea Finn

1. Robotics requires full-stack thinking: You can’t just bolt robotics onto an existing company. You need to build the entire stack from scratch—data, models, deployment.

2. Data quality beats quantity: Massive datasets from industry, YouTube, or simulation often lack diversity and authenticity. Correct, high-quality data matters more than scale.

3. Best practice: Pre-train + fine-tune: Pre-train on broad datasets, then fine-tune with ~1,000 high-quality, scenario-consistent samples. This significantly boosts robot performance.

4. General-purpose robots beat specialized ones: General models that span tasks and hardware platforms (e.g., third-party robots) are proving more successful than systems built for narrow purposes.

5. Real-world data is irreplaceable: Despite the usefulness of synthetic and simulated data, real data remains essential—especially for complex visual and physical tasks.

6. Too many resources can backfire: Over-funding or over-complicating slows progress. Clarity of problem and focused execution matter most.

Cursor CEO & Co-founder: Michael Truell

1. Start early and keep building: Even when a co-founder left, Michael kept coding. Early viral traction (a Flappy Bird clone) built his confidence and skills.

2. Rapid validation, even in unfamiliar domains: Without prior experience, their team built a programming assistant for mechanical engineering. Their mantra: “Learn by doing.”

3. Differentiate boldly, don’t fear giants: They hesitated to compete with GitHub Copilot, but realized few companies aimed for full-cycle development automation. That niche opened the market for them.

4. Fast from code to launch: From first line of code to public release, they took only 3 months. Rapid iteration helped them quickly refine their product direction.

5. Focus beats complexity: They dropped plans to build both an IDE and AI tools. By focusing solely on AI functionality, they accelerated development.

6. Distribution can start with one tweet: Cursor’s early growth came from a single social media post by a co-founder. Before formal marketing, word-of-mouth was already driving momentum.

7. Compounding effect of execution: In 2024, Cursor grew annual recurring revenue from $1M to $100M, which works out to roughly 10% compound growth per week, driven by product improvements and user demand.

8. Best advice: Follow your curiosity: Forget resume-padding. Michael’s top advice: Work with smart people on things you care about.
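The growth figures in point 7 are easy to sanity-check. The sketch below is our own illustration, assuming a constant weekly growth rate over 52 weeks; the function names are hypothetical and not from the talk. It computes the weekly rate implied by a 100x year, and the multiple that exactly 10% per week would produce.

```python
def weekly_rate_for_multiple(multiple: float, weeks: int = 52) -> float:
    """Constant weekly growth rate that compounds to `multiple` over `weeks`."""
    return multiple ** (1 / weeks) - 1


def multiple_from_weekly_rate(rate: float, weeks: int = 52) -> float:
    """Total growth multiple after compounding `rate` once per week for `weeks`."""
    return (1 + rate) ** weeks


if __name__ == "__main__":
    # $1M -> $100M ARR is a 100x multiple (figures from the article).
    implied = weekly_rate_for_multiple(100)
    print(f"Implied weekly growth for 100x in a year: {implied:.2%}")

    # What exactly 10% per week would compound to over the same period.
    print(f"10% weekly for 52 weeks: {multiple_from_weekly_rate(0.10):.0f}x")
```

Under that assumption, a 100x year corresponds to about 9.3% weekly growth, while a full 10% per week would compound to roughly 142x. The "10% weekly" figure is therefore a fair rounding of the reported revenue numbers.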

Figma CEO & Co-founder: Dylan Field

1. Find a co-founder who inspires you: Dylan’s motivation comes from working with co-founder Evan Wallace—“Every week feels like building the future.”

2. Start early, learn by doing: Dylan launched his first startup at 19 while still in college. Early failures—like a meme generator—helped refine the vision that led to Figma.

3. Launch fast, get feedback faster: They emailed early users for rapid iteration and started charging from day one. Feedback continuously drives product evolution.

4. Break long roadmaps into short sprints: Dividing big visions into smaller parts is key to maintaining speed and execution.

5. Product-market fit may take years: Figma waited five years for a defining signal: Microsoft told them, “If you don’t charge, we’ll have to stop working with you.”

6. Design is the new differentiator: He believes design is growing in importance due to AI. Figma is embracing this with new products like Draw, Buzz, Sites, and Make.

7. Use AI to accelerate prototyping: The best use of AI is boosting iteration speed—not chasing magical one-click generation. Designers and PMs must now lead AI evaluation.

8. Embrace rejection, don’t avoid it: Childhood acting taught Dylan to accept criticism and feedback. He sees rejection as part of the path to success.

9. Human connection remains central: Dylan warns against replacing human relationships with AI. Asked about the meaning of life, he replied: “Explore consciousness, keep learning, share love.”
