Yang Zhilin and Liang Wenfeng's papers collide

When two founders put their own names on the papers.

One

On the same day Musk released Grok 3, trained on 200,000 GPUs, two papers appeared in the technical community that take the "opposite" approach to Musk's brute-force miracle.

In the author lists of these two papers, two familiar names appeared:

Liang Wenfeng, Yang Zhilin.

On February 18, DeepSeek and Moonshot AI released their latest research papers almost simultaneously, and the two "collided" head-on in theme: both challenge the core attention mechanism of the Transformer architecture so that it can process longer contexts more efficiently. More interestingly, the star technical founders of both companies appear as authors on their respective papers and technical reports.

DeepSeek’s paper is titled: "Native Sparse Attention: Hardware-Aligned and Natively Trainable Sparse Attention".

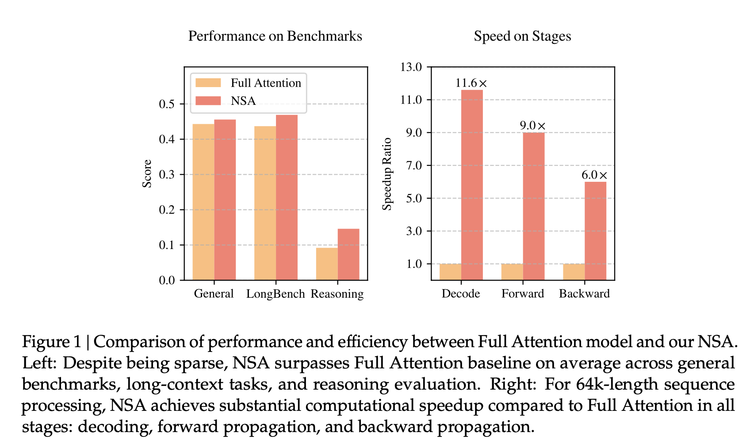

According to the paper, the proposed architecture, NSA (Native Sparse Attention), matches or exceeds full attention in accuracy on benchmark tests; is up to 11.6x faster when processing 64k-token sequences; trains more efficiently with lower computational requirements; and performs exceptionally well on ultra-long-context tasks such as book summarization, code generation, and reasoning.

Compared with the algorithmic innovations that earlier drew widespread interest, DeepSeek has this time reached into the very core: reworking the attention mechanism itself.

The Transformer underpins today's boom in large models, but its core algorithm, the attention mechanism, still has an inherent flaw. To use a reading analogy, traditional "full attention" reads every word in a text and compares it with every other word in order to understand and generate output. The cost therefore grows quadratically as texts get longer, leading to technical bottlenecks or even system crashes.
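As a rough illustration of why length hurts, the sketch below counts the query-key scores that full attention computes for a sequence. The helper name `full_attention_scores` is hypothetical and comes from neither paper; it ignores constant factors and causal masking and only shows the quadratic trend.

```python
def full_attention_scores(n_tokens: int) -> int:
    """Count query-key scores when every token is compared with every token."""
    return n_tokens * n_tokens


if __name__ == "__main__":
    # Doubling the sequence length quadruples the number of scores.
    for n in (1_000, 2_000, 64_000):
        print(f"{n:>6} tokens -> {full_attention_scores(n):,} scores")
```

At 64,000 tokens this count is already above four billion scores per attention head and layer, which is the pressure that sparse attention tries to relieve.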

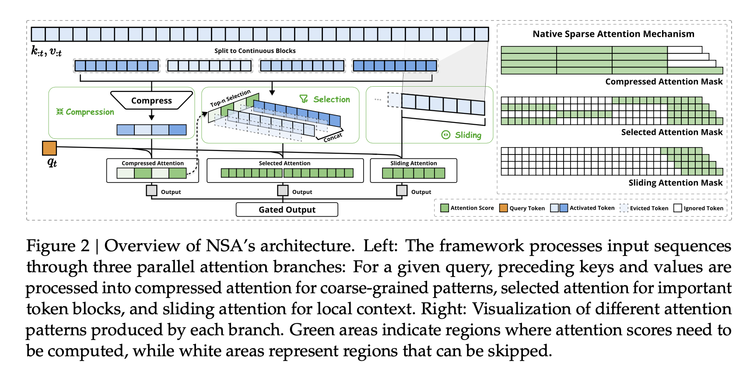

The academic community has long offered various solutions. NSA assembles, through real-world engineering optimization and experimentation, a three-component architectural solution applicable during training:

1) Semantic compression: rather than processing each word individually, the model groups words into "blocks". This shortens the sequence to 1/k of its original length while preserving global semantics, with positional encoding introduced to minimize information loss, and lowers computational complexity from O(n²) to O(n²/k).

2) Dynamic selection—the model uses a scoring mechanism to identify the most important words in the text and performs fine-grained computation only on them. This importance sampling strategy retains 98% of fine-grained information while reducing computational load by 75%.

3) Sliding window—if the first two are like summarizing and highlighting key points, the sliding window maintains coherence by examining recent context; hardware-level memory reuse technology reduces memory access frequency by 40%.
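The three components above can be sketched as a simple count of how many keys a single query touches under each branch. This is a back-of-the-envelope model, not DeepSeek's implementation: the function name and the parameter values (`block_size`, `top_blocks`, `window`) are illustrative assumptions, and overlap between branches and causal masking are ignored.

```python
def keys_touched_per_query(n_tokens: int, block_size: int,
                           top_blocks: int, window: int) -> dict:
    """Estimate keys visited by one query under a three-branch sparse scheme."""
    # 1) Compression: one summary key per block, so n / k keys instead of n.
    compressed = n_tokens // block_size
    # 2) Selection: fine-grained attention inside the top-scoring blocks only.
    selected = min(top_blocks * block_size, n_tokens)
    # 3) Sliding window: the most recent tokens, for local coherence.
    local = min(window, n_tokens)
    return {
        "compression": compressed,
        "selection": selected,
        "window": local,
        "total": compressed + selected + local,
    }


if __name__ == "__main__":
    n = 65_536
    # Hypothetical settings chosen for illustration only.
    counts = keys_touched_per_query(n, block_size=32, top_blocks=16, window=512)
    print(counts)
    print(f"full attention touches {n} keys per query, "
          f"about {n / counts['total']:.1f}x more")
```

The compression branch is where the O(n²/k) figure comes from: each of the n queries compares against n/k block summaries rather than n individual keys.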

DeepSeek did not invent any of these ideas, but the effort can be thought of as ASML-like work. The technical elements already existed, scattered across different domains, yet no one had engineered them together into a scalable solution. Now someone has used strong engineering capability to build a "lithography machine" with which others can train models in real industrial environments using this new algorithmic architecture.

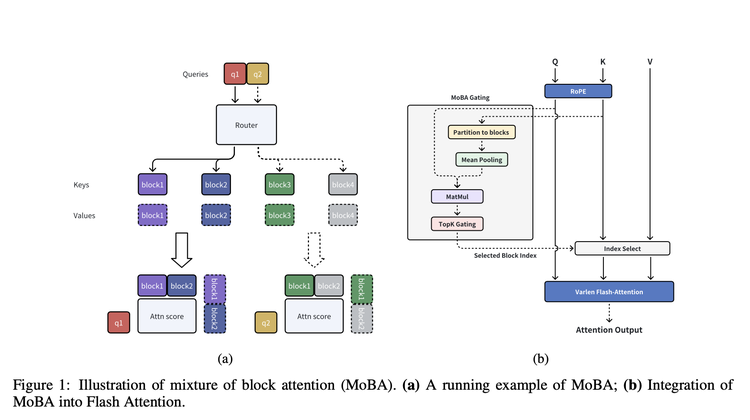

Moonshot AI’s paper, published the same day, proposes an architecture with a strikingly similar core idea: MoBA ("MoBA: Mixture of Block Attention for Long-Context LLMs").

As the name suggests, it also turns "words" into blocks. After chunking, MoBA uses a "gate network" that acts like an "intelligent filter": for each query, it selects the top-K most relevant blocks and computes attention only on those. In implementation, MoBA also combines FlashAttention (for more efficient attention computation) with ideas from MoE (Mixture of Experts).
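The gating idea described above can be sketched for a single query in plain Python. This is a minimal illustration, assuming the gate scores each block by the dot product between the query and the mean of that block's keys; the function names are hypothetical, and the sketch omits causal masking, batching, and the FlashAttention kernels a real implementation would use.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def block_sparse_attention(query, keys, values, block_size, top_k):
    """Attend only to the top_k blocks whose mean key best matches the query."""
    n, dim = len(keys), len(query)
    blocks = [range(s, min(s + block_size, n)) for s in range(0, n, block_size)]

    # Gate: one relevance score per block (query . mean of the block's keys).
    gate_scores = []
    for blk in blocks:
        mean_key = [sum(keys[i][d] for i in blk) / len(blk) for d in range(dim)]
        gate_scores.append(dot(query, mean_key))

    # Keep the top_k highest-scoring blocks, restored to positional order.
    ranked = sorted(range(len(blocks)), key=lambda b: gate_scores[b], reverse=True)
    chosen = sorted(ranked[:top_k])
    idx = [i for b in chosen for i in blocks[b]]

    # Ordinary softmax attention, restricted to tokens in the chosen blocks.
    scale = 1.0 / math.sqrt(dim)
    logits = [dot(query, keys[i]) * scale for i in idx]
    peak = max(logits)
    weights = [math.exp(l - peak) for l in logits]
    total = sum(weights)
    out = [sum(w * values[i][d] for w, i in zip(weights, idx)) / total
           for d in range(len(values[0]))]
    return chosen, out


if __name__ == "__main__":
    keys = [[0, 1], [0, 1], [1, 0], [1, 0], [-1, 0], [-1, 0], [0.5, 0], [0.5, 0]]
    values = [[float(i)] for i in range(len(keys))]
    chosen, out = block_sparse_attention([1.0, 0.0], keys, values,
                                         block_size=2, top_k=2)
    print("selected blocks:", chosen)
    print("output:", out)
```

Setting `top_k` to the total number of blocks makes the function attend to every token, which is one way to picture how a block-sparse layer can fall back to full attention.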

Compared to NSA, MoBA emphasizes flexibility—it doesn’t fully abandon the dominant full attention mechanism but designs a switchable mode allowing models to toggle freely between full and sparse attention, offering better compatibility with existing full-attention models.

According to the paper, MoBA shows clear advantages as context length increases. In a 1M token test, MoBA was 6.5x faster than full attention; at 10M tokens, it achieved a 16x speedup. Moreover, it has already been deployed in Kimi’s product to handle users’ daily demands for processing ultra-long contexts.

A key reason Yang Zhilin attracted attention when founding Moonshot AI was his influential publications and citation impact, though his last research paper prior to K1.5 dated back to January 2024. Liang Wenfeng appears as an author in DeepSeek’s most critical model technical reports, but those reports list nearly all DeepSeek employees, making authorship less meaningful. In contrast, the NSA paper has only a few named authors. This highlights the significance of these works to the founders of both companies and their importance in understanding each company’s technical trajectory.

Another telling detail underscoring this importance: netizens noticed that the arXiv submission record for the NSA paper shows it was submitted on February 16 by none other than Liang Wenfeng himself.

Two

This isn’t the first time Moonshot AI and DeepSeek have "collided". When R1 was launched, Kimi unusually released the K1.5 technical report—a rare move since the company previously prioritized neither public disclosure nor sharing technical insights. At that time, both papers targeted reinforcement learning-driven reasoning models. Indeed, a close reading reveals that in the K1.5 paper, Moonshot AI provided more detailed insights into training reasoning models, surpassing the R1 paper in both informational density and technical depth. Yet subsequent excitement around DeepSeek overshadowed much of the discussion on Kimi’s paper.

A confirming example: OpenAI recently published a rare paper explaining the reasoning capabilities of its o-series models, explicitly naming both DeepSeek R1 and Kimi K1.5: "DeepSeek-R1 and Kimi k1.5 independently demonstrate through research that Chain-of-Thought (CoT) learning methods can significantly enhance model performance in mathematical problem-solving and programming challenges." In other words, these are the two reasoning models OpenAI itself chose for comparison.

"The most magical thing about this large model architecture, I feel, is that it seems to point the way forward by itself, leading different people from different angles toward similar directions."

So wrote Zhang Mingxing, a Tsinghua University professor who took part in MoBA’s core research, on Zhihu.

He also offered an insightful comparison:

"DeepSeek R1 and Kimi K1.5 both point toward ORM-based RL, though R1 starts from zero, being more 'pure' or 'less structured', launched earlier, and open-sourced the model simultaneously.

Kimi MoBA and DeepSeek NSA again both point toward end-to-end trainable learned sparse attention. This time, MoBA is even less structured, launched earlier, and open-sourced the code simultaneously."

Their repeated "collisions" help people better understand, through comparison, the evolution of reinforcement learning technologies and the advancement of more efficient, longer-text attention mechanisms.

"Just as studying R1 and K1.5 together helps us learn how to train reasoning models, examining MoBA and NSA together gives us a fuller picture from different angles of our belief—that sparsity in attention should exist and can be learned through end-to-end training," Zhang wrote.

Three

After MoBA’s release, Xu Xinran from Moonshot AI noted on social media that this was a year-and-a-half-long effort, now ready for developers to use out-of-the-box.

However, choosing to open-source now inevitably places the discussion under DeepSeek’s "shadow". Interestingly, in an era where many companies are actively integrating DeepSeek and open-sourcing their own models, the public immediately wonders whether Kimi will follow suit and whether Moonshot will open-source its models. As a result, Moonshot and Doubao appear to be the last remaining "outliers".

Looking now, DeepSeek’s impact on Moonshot is more sustained than on other players, posing comprehensive challenges from technical direction to user acquisition: First, it proves that even in product competition, foundational model capability remains paramount. Second, another increasingly clear ripple effect is that Tencent’s combination of WeChat Search and Yuanbao is leveraging the momentum of DeepSeek R1 to catch up on a marketing campaign it previously missed—all ultimately aimed at Kimi and Doubao.

Moonshot’s response is therefore closely watched. Open-sourcing is clearly a necessary step, and Moonshot appears to want to genuinely match DeepSeek’s open-source philosophy. Most open-source releases since DeepSeek look like reactive moves that still follow the Llama-era mindset of open source as a way to defend or disrupt. DeepSeek’s approach is fundamentally different: it is no longer, as it was for Llama, just a defensive tactic against closed-source competitors, but a competitive strategy that generates clear benefits.

Rumors suggest Moonshot has recently set internal goals targeting SOTA (state-of-the-art) results. This appears to align closely with the new open-source approach: release the strongest models and architectural methods, and thereby gain the application-side influence the company has long sought.

Based on both companies’ papers, MoBA has already been integrated into Moonshot’s models and products, as has NSA, giving outsiders clearer expectations for DeepSeek’s next-generation models. The next big question is whether Moonshot and DeepSeek will collide once again with their next-gen models trained via MoBA and NSA—and whether they’ll do so via open-sourcing. That may well be the moment Moonshot is waiting for.
