The impact of DeepSeek on upstream and downstream protocols of Web3 AI

TechFlow Selected

DeepSeek bursts the final bubble in the Agent sector, while DeFAI may foster new growth, ushering in a transformation in industry financing models.

Author: Kevin, Researcher at BlockBooster

TL;DR

- The emergence of DeepSeek shatters the compute moat, and open-source-led optimization of compute efficiency becomes the new direction;
- DeepSeek benefits the model and application layers of the industry value chain, while negatively impacting compute protocols in the infrastructure layer;
- DeepSeek inadvertently punctures the final bubble in the Agent sector, where DeFAI is most likely to give rise to innovation;
- The zero-sum game of project fundraising may come to an end, with community launches combined with minimal VC funding potentially becoming the norm.

The shockwaves from DeepSeek will profoundly impact the AI industry’s upstream and downstream sectors this year. DeepSeek has successfully enabled consumer-grade GPUs to perform large model training tasks that previously required vast arrays of high-end GPUs. The first line of defense in AI development—computational power—is beginning to crumble. When algorithmic efficiency advances at an annual rate of 68%, outpacing hardware performance improvements bound by Moore's Law, the valuation models deeply entrenched over the past three years are no longer valid. The next chapter of AI will be ushered in by open-source models.
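To make the gap between the two growth rates concrete, here is a rough compounding sketch. The 68% figure comes from the text above; the two-year doubling period used for Moore's Law is my assumption (the law is usually quoted as a doubling every 18 to 24 months), and the function names are illustrative only.

```python
ALGO_ANNUAL_GAIN = 0.68       # from the text: algorithmic efficiency gain per year
MOORE_DOUBLING_YEARS = 2.0    # assumption: hardware performance doubles every ~2 years


def annual_rate_from_doubling(doubling_years: float) -> float:
    """Convert a doubling period into the equivalent annual growth rate."""
    return 2 ** (1 / doubling_years) - 1


def cumulative_gain(annual_rate: float, years: int) -> float:
    """Total multiplier after compounding `annual_rate` for `years` years."""
    return (1 + annual_rate) ** years


moore_annual = annual_rate_from_doubling(MOORE_DOUBLING_YEARS)

for years in (1, 3, 5):
    algo = cumulative_gain(ALGO_ANNUAL_GAIN, years)
    hardware = cumulative_gain(moore_annual, years)
    print(f"{years} yr: algorithms x{algo:.2f}, hardware x{hardware:.2f}")
```

Under these assumptions, three years of compounding give roughly a 4.7x gain from algorithms against roughly 2.8x from hardware, which is the gap that puts compute-based valuation models under pressure.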

Although Web3 AI protocols differ fundamentally from their Web2 counterparts, they are inevitably affected by DeepSeek. This impact will catalyze entirely new use cases across the Web3 AI ecosystem: infrastructure layer, middleware layer, model layer, and application layer.

Mapping the Collaborative Relationships Across the Value Chain

Through analysis of technical architecture, functional positioning, and practical use cases, I categorize the entire ecosystem into four layers—infrastructure, middleware, model, and application—and outline their interdependencies:

Infrastructure Layer

The infrastructure layer provides decentralized foundational resources (compute, storage, L1). Compute protocols include Render, Akash, io.net; storage protocols include Arweave, Filecoin, Storj; L1s include NEAR, Olas, Fetch.ai.

Compute layer protocols support model training, inference, and framework operations; storage protocols preserve training data, model parameters, and on-chain interaction records; L1s optimize data transmission efficiency and reduce latency through specialized nodes.

Middleware Layer

The middleware layer bridges infrastructure and upper-layer applications, providing framework development tools, data services, and privacy protection. Data annotation protocols include Grass, Masa, Vana; development frameworks include Eliza, ARC, Swarms; privacy computing protocols include Phala.

The data services layer fuels model training; development frameworks rely on compute and storage from the infrastructure layer; privacy computing ensures data security during training/inference.

Model Layer

The model layer focuses on model development, training, and distribution, with open-source model training platforms such as Bittensor.

The model layer depends on compute from the infrastructure layer and data from the middleware layer; models are deployed on-chain via development frameworks; model markets deliver trained models to the application layer.

Application Layer

The application layer consists of AI products aimed at end users, including Agents such as GOAT and AIXBT and DeFAI protocols such as Griffain and Buzz.

The application layer invokes pre-trained models from the model layer; relies on privacy computing from the middleware layer; complex applications require real-time compute from the infrastructure layer.
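The interdependencies described above can be written down as a small dependency graph. This is only a sketch of my four-layer categorization, not a formal model: the dictionary and function names are hypothetical, and the protocol lists simply repeat the examples named in this section.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Each layer maps to the set of layers it depends on, as described in the text.
LAYER_DEPENDENCIES = {
    "infrastructure": set(),
    "middleware": {"infrastructure"},
    "model": {"infrastructure", "middleware"},
    "application": {"infrastructure", "middleware", "model"},
}

# Example protocols named in the article, grouped by layer.
LAYER_PROTOCOLS = {
    "infrastructure": ["Render", "Akash", "io.net", "Arweave", "Filecoin",
                       "Storj", "NEAR", "Olas", "Fetch.ai"],
    "middleware": ["Grass", "Masa", "Vana", "Eliza", "ARC", "Swarms", "Phala"],
    "model": ["Bittensor"],
    "application": ["GOAT", "AIXBT", "Griffain", "Buzz"],
}


def build_order(deps):
    """Return the layers ordered so that every layer follows its dependencies."""
    return list(TopologicalSorter(deps).static_order())


def downstream_of(layer, deps):
    """Return every layer that depends on `layer`, directly or transitively."""
    affected = set()
    changed = True
    while changed:
        changed = False
        for name, needs in deps.items():
            if name not in affected and (layer in needs or needs & affected):
                affected.add(name)
                changed = True
    return affected


print(build_order(LAYER_DEPENDENCIES))
print(sorted(downstream_of("infrastructure", LAYER_DEPENDENCIES)))
```

Reading the graph this way shows why a shock to the infrastructure layer propagates everywhere, while a change in the model layer reaches only the application layer directly.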

DeepSeek May Negatively Impact Decentralized Compute

According to a sampling survey, approximately 70% of Web3 AI projects actually call upon OpenAI or centralized cloud platforms, only 15% utilize decentralized GPUs (e.g., Bittensor subnet models), and the remaining 15% adopt hybrid architectures (sensitive data processed locally, general tasks offloaded to the cloud).

The actual usage rate of decentralized compute protocols remains far below expectations and is mismatched with their market valuations. Three reasons contribute to low adoption: Web2 developers migrating to Web3 continue using existing toolchains; decentralized GPU platforms have yet to achieve price advantages; some projects use "decentralization" as a label to evade data compliance scrutiny while still relying on centralized cloud compute.

AWS and GCP hold over 90% of the AI compute market, whereas Akash’s equivalent compute capacity amounts to merely 0.2% of AWS’s. Centralized cloud platforms hold strong moats in cluster management, RDMA high-speed networking, and elastic scaling. Decentralized platforms offer Web3-enhanced versions of these technologies, but they suffer from two critical shortcomings. The first is latency: communication latency between distributed nodes is six times higher than in centralized clouds. The second is fragmented toolchains: PyTorch and TensorFlow do not natively support decentralized scheduling.
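A back-of-the-envelope sketch shows why the latency gap matters so much. It assumes that only the communication share of a training or inference step slows down, and that it slows down in proportion to latency; both are simplifications. The multiplier of 6 is my reading of the figure above, and the communication shares are hypothetical inputs, not measurements.

```python
LATENCY_MULTIPLIER = 6.0  # assumption based on the text: roughly 6x centralized latency


def step_slowdown(comm_fraction: float,
                  latency_multiplier: float = LATENCY_MULTIPLIER) -> float:
    """Relative step time when only the communication share is slowed down.

    comm_fraction is the share of one step spent on node-to-node
    communication on a centralized cluster (hypothetical, between 0 and 1).
    """
    if not 0.0 <= comm_fraction <= 1.0:
        raise ValueError("comm_fraction must be between 0 and 1")
    compute_share = 1.0 - comm_fraction
    return compute_share + comm_fraction * latency_multiplier


for fraction in (0.05, 0.2, 0.5):
    print(f"communication share {fraction:.0%}: "
          f"step takes x{step_slowdown(fraction):.2f}")
```

Even a workload that spends only a fifth of its time on communication would take about twice as long per step under these assumptions, which is why latency-insensitive jobs are the natural fit for decentralized GPU networks.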

DeepSeek reduces compute consumption by 50% through sparse training and enables training of billion-parameter models on consumer-grade GPUs via dynamic model pruning. Market expectations for short-term demand for high-end GPUs have been significantly revised downward, prompting a revaluation of edge computing’s market potential. As shown in the figure above, prior to DeepSeek, the vast majority of protocols and applications in the industry relied on platforms like AWS, with only rare use cases deployed on decentralized GPU networks—cases that valued cost advantages on consumer-grade compute and were indifferent to latency.

This situation may deteriorate further with the arrival of DeepSeek. DeepSeek lifts constraints on long-tail developers, and low-cost, efficient inference models will proliferate at an unprecedented pace. In fact, centralized platforms and several countries have already begun deploying DeepSeek. The dramatic reduction in inference costs will spawn numerous front-end applications, creating massive demand for consumer-grade GPUs. Facing this imminent market expansion, centralized cloud providers will enter a new round of user acquisition battles, not just against top-tier platforms but also against countless small-scale centralized providers. The most direct competitive tactic will be price cuts. It is foreseeable that the price of 4090 GPUs on centralized platforms will decline, which would be catastrophic for Web3 compute platforms. Price is the latter’s only moat; once it erodes and decentralized platforms are forced to cut prices as well, the consequences could be unbearable for io.net, Render, Akash, and others. Price wars could destroy their remaining valuation ceilings, triggering a death spiral of declining revenues and user loss, potentially forcing decentralized compute protocols to pivot toward new directions.

Specific Implications of DeepSeek on Upstream and Downstream Protocols

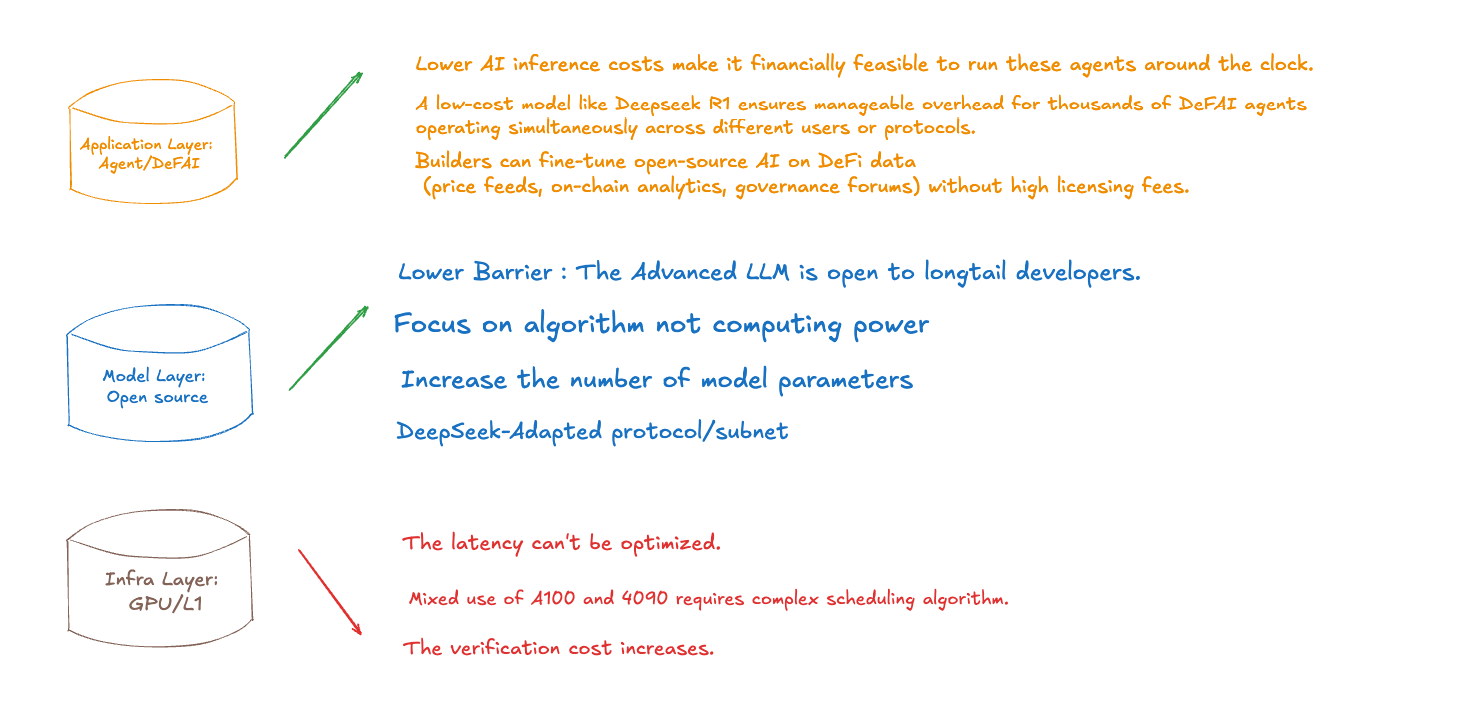

As illustrated, I believe DeepSeek will have varying impacts on the infrastructure, model, and application layers. On the positive side:

- The application layer benefits from drastically reduced inference costs, so more applications can keep Agents online at low cost and execute tasks in real time;
- Low-cost models like DeepSeek allow DeFAI protocols to form more complex Swarms, deploying thousands of Agents on a single use case with highly granular, clearly defined roles. This significantly improves user experience and helps prevent misinterpretation or incorrect execution of user inputs;
- Application developers can fine-tune models for DeFi-related AI applications on price data, on-chain analytics, and protocol governance data, without paying expensive licensing fees;
- The existence of the open-source model layer is validated by DeepSeek’s emergence, as high-end models become accessible to long-tail developers, stimulating widespread development activity;
- The compute walls built around high-end GPUs over the past three years have been broken down, giving developers more options and establishing a clear direction for open-source models. Competition among AI models will no longer be about raw compute power but about algorithmic superiority. This shift in mindset will become the foundation of confidence for open-source model developers;
- Specialized subnets built around DeepSeek will keep emerging, increasing model parameters under equivalent compute and attracting more developers to open-source communities.

On the negative side:

- The inherent communication latency in infrastructure compute protocols cannot be optimized;
- Coordinating hybrid networks composed of A100 and 4090 GPUs demands higher algorithmic sophistication, which is not a strength of decentralized platforms.

DeepSeek Punctures the Final Bubble in the Agent Sector, DeFAI May Give Birth to Innovation, and Industry Fundraising Models Are Transforming

Agents were the industry’s last hope, and DeepSeek’s arrival, by lifting compute constraints, paints a promising future of exploding applications. That should have been a major boost for the Agent sector. Instead, because the industry is strongly correlated with U.S. equities and Federal Reserve policy, the last remaining bubble burst, driving sector valuations to rock bottom.

Within the wave of AI integration into the industry, technological breakthroughs and market dynamics have always coexisted. The ripple effect triggered by Nvidia’s market volatility acts like a mirror, revealing the deep-seated challenges in the industry’s AI narrative: beneath seemingly complete ecosystem maps, from on-chain Agents to DeFAI engines, lie fragile technical foundations, hollow value logic, and capital-driven realities. Beneath the surface prosperity of on-chain ecosystems lurk hidden ailments: numerous high-FDV tokens competing for limited liquidity, legacy assets surviving on FOMO sentiment, and developers trapped in PVP cycles that drain innovative energy. As incremental capital and user growth hit ceilings, the entire industry faces the “innovator’s dilemma”: eager for breakthrough narratives yet unable to escape path dependency. This fractured state presents a historic opportunity for AI Agents: they represent not just an upgrade in technical toolkits, but a restructuring of value creation paradigms.

Over the past year, an increasing number of teams have realized that traditional fundraising models are failing—the playbook of allocating small shares to VCs, maintaining tight control, and waiting for exchange listings to pump prices is no longer sustainable. With VCs tightening budgets, retail investors refusing to buy, and major exchanges raising listing barriers, a new strategy suited for bear markets is emerging: partnering with top KOLs and a few VCs, conducting large-scale community launches, and cold-starting with low market caps.

Innovators like Soon and Pump Fun are pioneering “community launches”—partnering with leading KOLs to distribute 40%-60% of tokens directly to the community, launching projects at FDVs as low as $10 million and securing millions in funding. This model leverages KOL influence to generate consensus-driven FOMO, allowing teams to lock in early profits while trading short-term control for high liquidity and market depth. Though sacrificing immediate control, teams can use compliant market-making mechanisms to repurchase tokens at low prices during bear markets. At its core, this represents a paradigm shift in power structures: moving from VC-dominated Ponzi-like games (institutional takeovers → exchange listings → retail absorption) toward transparent, community-consensus-based pricing. A new symbiotic relationship forms between project teams and communities through liquidity premiums. As the industry enters an era of transparency, projects clinging to traditional control logic may become relics swept away by the tide of power transition.
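The scale of such a launch is easy to sanity-check. The sketch below computes the notional value of the community allocation at the launch FDV, using the $10 million FDV and the 40%-60% range from the text. It assumes tokens are valued at the FDV-implied price, which is my simplification; the function name is hypothetical, and the result is a notional value, not a statement of funds actually raised.

```python
LAUNCH_FDV_USD = 10_000_000  # from the text: FDVs as low as $10 million


def community_allocation_value(fdv_usd: float, community_share: float) -> float:
    """Notional value of the community's token allocation at the launch FDV."""
    if not 0.0 <= community_share <= 1.0:
        raise ValueError("community_share must be between 0 and 1")
    return fdv_usd * community_share


for share in (0.40, 0.60):
    value = community_allocation_value(LAUNCH_FDV_USD, share)
    print(f"{share:.0%} of supply at ${LAUNCH_FDV_USD:,} FDV -> ${value:,.0f}")
```

At a $10 million FDV the community allocation is worth $4 million to $6 million on paper, the same order of magnitude as the "millions in funding" mentioned above.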

Short-term market pain only confirms the irreversibility of the long-term technological wave. When AI Agents reduce on-chain interaction costs by two orders of magnitude, and adaptive models continuously enhance the capital efficiency of DeFi protocols, the industry may finally see its long-awaited mass adoption. This transformation does not rely on hype or artificially inflated capital, but on genuine technological penetration rooted in real demand. Just as the electrical revolution did not stall when lightbulb companies went bankrupt, Agents will ultimately emerge as the true golden track after the bubbles burst. And DeFAI may well be the fertile ground for rebirth. When low-cost inference becomes commonplace, we may soon witness use cases combining hundreds of Agents into a single Swarm. Under equivalent compute, substantially increased model parameters will ensure that Agents in the open-source era can be thoroughly fine-tuned and can break down even complex user instructions into executable task pipelines for individual Agents. Each Agent optimizing on-chain operations could boost overall DeFi protocol activity and liquidity. More sophisticated DeFi products led by DeFAI will emerge, and this is precisely where new opportunities arise in the aftermath of the last bubble’s collapse.
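As a toy illustration of what "breaking an instruction into a task pipeline for individual Agents" could look like, consider the sketch below. Everything in it is hypothetical: the role names, the keyword-based planner, and the stub Agents are stand-ins for what would be model calls and on-chain actions in a real DeFAI product.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Task:
    role: str     # which specialized Agent should handle this step
    payload: str  # what that Agent is asked to do


# Hypothetical role-specific Agents; a real system would call a model here.
AGENTS: Dict[str, Callable[[str], str]] = {
    "quote": lambda payload: f"[quote] fetched price for {payload}",
    "swap": lambda payload: f"[swap] prepared swap of {payload}",
    "stake": lambda payload: f"[stake] prepared staking of {payload}",
}


def plan(instruction: str) -> List[Task]:
    """Toy planner: split on ' then ' and route each step by its first keyword."""
    tasks = []
    for step in instruction.split(" then "):
        step = step.strip()
        role = next((r for r in AGENTS if step.lower().startswith(r)), None)
        if role is None:
            raise ValueError(f"no Agent role for step: {step!r}")
        tasks.append(Task(role=role, payload=step[len(role):].strip()))
    return tasks


def run_pipeline(instruction: str) -> List[str]:
    """Run each planned task through the Agent responsible for its role."""
    return [AGENTS[task.role](task.payload) for task in plan(instruction)]


for line in run_pipeline("quote ETH then swap 1 ETH to USDC then stake USDC"):
    print(line)
```

The point of the sketch is the shape rather than the logic: one planner, many narrow Agents, and a pipeline that fails loudly when no Agent owns a step. Cheaper inference is what makes it affordable to run many such narrow Agents per request.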
