China and the U.S. are racing toward the 2027 AI tipping point—why Monad?

TechFlow Selected

Monad is the go-to choice for building blockchain AI projects.

Author: Harvey C

Since last year, global enthusiasm for artificial intelligence has been rising steadily. Overseas tech giants and Chinese research institutions alike are aggressively increasing their investment in AI models and accelerating their release schedules.

Today, we'll look at the current state of the U.S.-China AI race, why 2027 may be a milestone year, and why AI projects should build on Monad, along with the immense potential and opportunities AI holds ahead.

1. The U.S.-China AI Race: Rapid Progress Despite Compute Constraints

In recent years, U.S. government restrictions on exports of AI compute chips to China have drawn widespread attention. In practice, however, this so-called "hardware bottleneck" hasn't slowed mainland China's AI research as much as expected. In fact, the gap between the two countries in how quickly they iterate on large models has recently narrowed to just a few months, or even less.

-

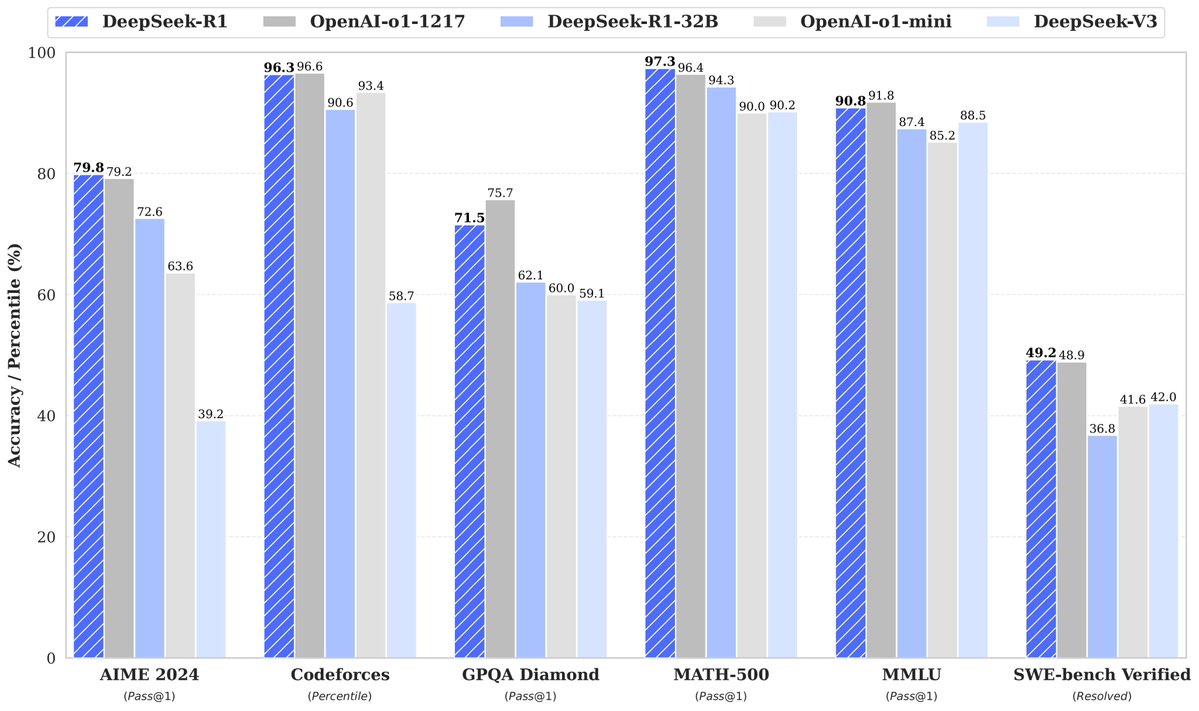

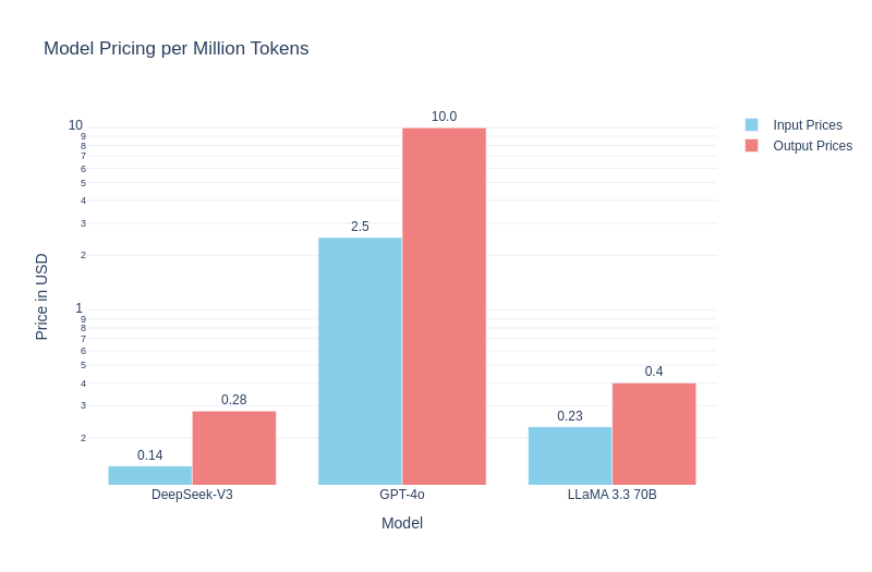

The strength of the chasers: OpenAI released its "o1-preview" model only four months ago, with the official version launching just one month ago. Around the same time, mainland China developed reasoning models with comparable performance metrics. DeepSeek, the large-model company under quant giant Fantuan (Huanfang), open-sourced the DeepSeek-R1 inference model, which rivals o1 across multiple benchmarks and even performs better in certain customized scenarios. Earlier, DeepSeek V3’s launch already put significant pressure on Llama 4to feel the heat from Eastern competition. Additionally, Kimi launched its new reinforcement learning model k1.5, the first multimodal o1-class model after OpenAI capable of joint text and image reasoning.

- Breakthroughs in speech and multimodality: Doubao's real-time voice model, comparable to GPT-4o's Advanced Voice Mode and Gemini 2.0, also debuted quickly in China. Such technologies were once considered exclusive to high-compute environments, yet mainland companies have used various workarounds and algorithmic optimizations to iterate quickly despite limited compute resources.

These developments show that even with existing gaps in computing power, mainland AI researchers are closely following global leaders. With pioneers paving the way through trial and error, followers can avoid costly mistakes and accelerate progress.

2. Overseas Leaders’ Experience Offers “Copying Homework” Opportunities for Followers

During the rapid growth of deep learning, the industry's understanding of AI paradigms has continuously evolved. While large language models (LLMs) dominate current interest, another path—reinforcement learning (RL)—is regaining significant attention.

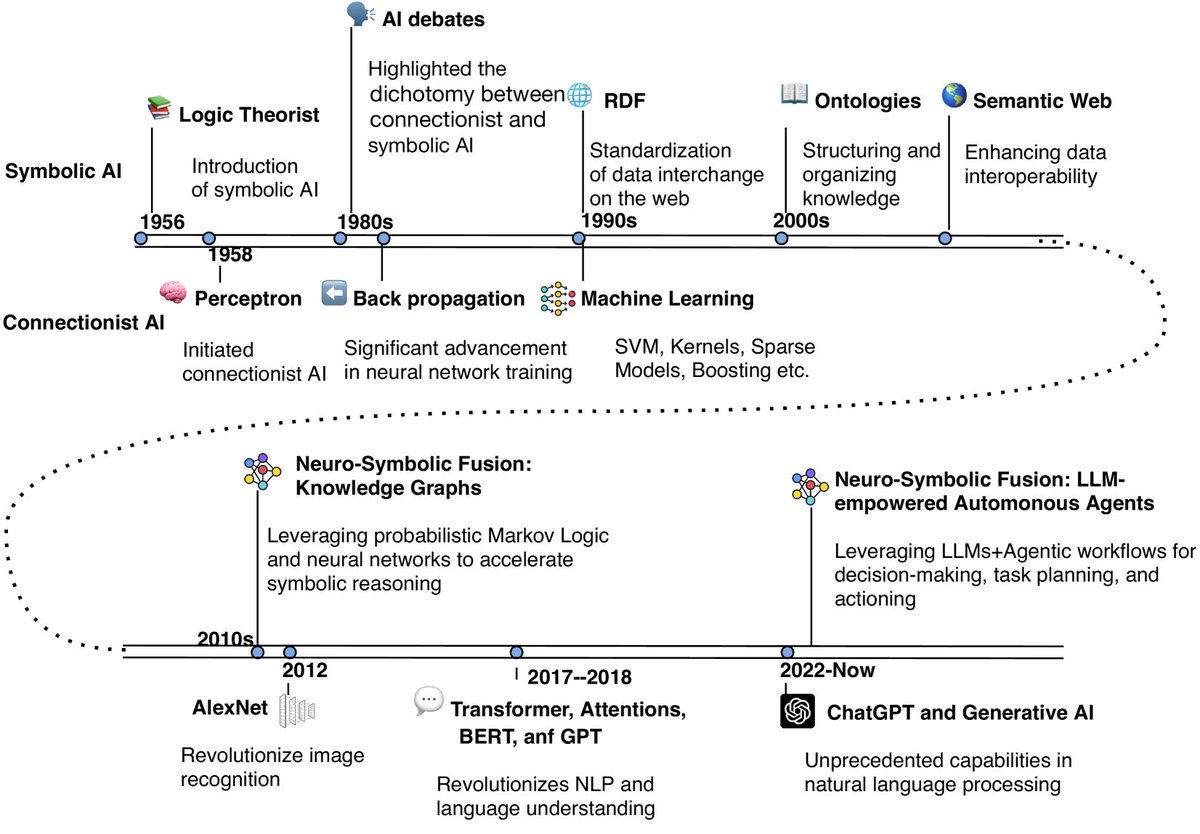

Evolution of AI Paradigms

- Shifting AI paradigms over time: Artificial intelligence development includes several major branches. Symbolism (logic-based AI) relies on rule-based reasoning and formal logic, excelling at deterministic tasks; connectionism (neural networks) mimics the brain’s computational approach, identifying patterns through layered structures trained on data. Bayesian AI (probabilistic AI) emphasizes modeling uncertainty using probability; reinforcement learning (RL) optimizes behavior through trial and error in dynamic environments; evolutionary AI uses principles of natural selection to evolve solutions; hybrid AI combines strengths from multiple paradigms to create more powerful and flexible systems. These paradigms have each taken turns leading the field over time.

- Rapid replication and iteration: When Western teams move first, mainland teams can follow at lower cost once an approach has been validated, as with multimodal and o1-style reasoning models. The familiar internet-era pattern of Western R&D followed by Chinese commercialization is repeating itself: with Western players having already hit the pitfalls, mainland teams reach results faster through reproduction and refinement.

China's DeepSeek puts strong competitive pressure on its U.S. counterparts by training capable models at lower cost

On one hand, leading-edge labs abroad lay the groundwork for frontier exploration. On the other, mainland teams aren’t merely passive followers—they actively integrate knowledge and innovate workarounds under constrained compute resources, developing more agile implementations in reinforcement learning and reasoning.

3. Reinforcement Learning May Be the Next Breakthrough in AI

DeepSeek's recently released R1 model relies mainly on reinforcement learning rather than supervised fine-tuning (SFT), reducing dependence on labeled domain-specific data; its R1-Zero variant skips the SFT stage entirely. This opens possibilities for vertical generalization: given a clear reward function, the model can keep improving its reasoning ability through self-iteration. As described in DeepSeek's open-source release, the model explores and self-optimizes within an RL environment, gaining a degree of autonomous improvement.

This means that with sufficient compute, models can evolve continuously via RL without requiring massive datasets. Despite U.S. hardware and technological restrictions, such alternative approaches reduce reliance on traditional large-scale training resources, showing mainland researchers a viable path forward—and offering a blueprint for other organizations aiming to develop their own large models.
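To make the "reward function plus self-iteration" idea concrete, here is a minimal Python sketch of the group-relative advantage at the core of GRPO, the RL algorithm DeepSeek describes in its DeepSeekMath and R1 papers. Several answers are sampled for one prompt, each is scored by a verifiable rule-based reward, and each score is normalized against the group's mean and standard deviation, so no labeled reasoning traces or separate value model are needed. The reward function, sample answers, and function names are hypothetical, and a real trainer would feed these advantages into a clipped policy-gradient update on the model's token probabilities, which is omitted here.

```python
import statistics


def rule_based_reward(answer: str, reference: str) -> float:
    """Hypothetical verifiable reward: 1.0 for an exact final-answer match, else 0.0."""
    return 1.0 if answer.strip() == reference.strip() else 0.0


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """Normalize each reward against its group's mean and standard deviation.

    Above-average samples get a positive advantage (to be reinforced),
    below-average samples a negative one (to be discouraged).
    """
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; implementations vary here
    return [(r - mean) / (std + eps) for r in rewards]


# One prompt, a group of four sampled answers, and a known reference answer.
sampled_answers = ["42", "41", "42 ", "7"]
rewards = [rule_based_reward(a, "42") for a in sampled_answers]
advantages = group_relative_advantages(rewards)
print(rewards)                            # [1.0, 0.0, 1.0, 0.0]
print([round(a, 3) for a in advantages])  # [1.0, -1.0, 1.0, -1.0]
```

If every sample in a group receives the same reward, all advantages come out as zero and that prompt contributes no learning signal, which is one reason the design of the reward function matters so much.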

4. 2027 Could Be the AI Inflection Point—Many Jobs Will Be Replaced or Redefined

The rapid advancement of AI raises questions about how fast progress will be and where the limits lie. Recently, Anthropic CEO Dario Amodei stated: “We’ll see models outperform humans in most fields by 2027.” Key drivers behind this prediction include:

- Continuous iteration via reinforcement learning: Instead of the traditional strict separation of training, testing, and inference, the next wave of AI breakthroughs may rely on hybrid methods, especially reinforcement learning, for continuous self-improvement, with models reflecting and updating in real time to rapidly boost their cognitive capabilities.

-

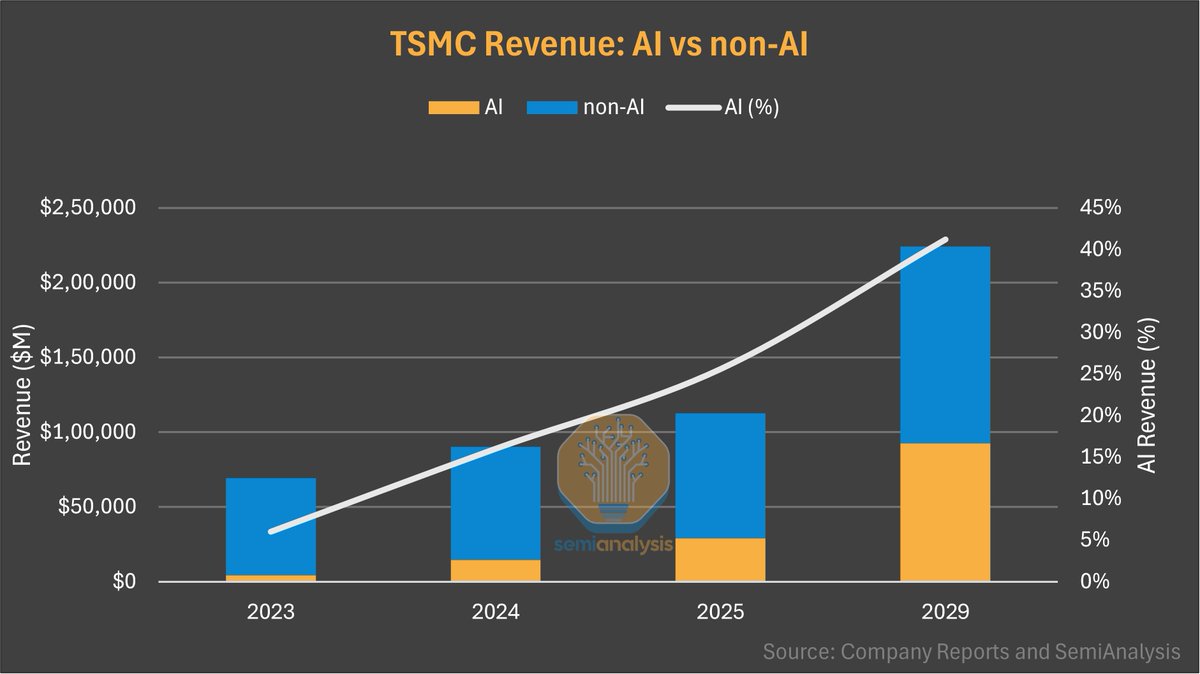

Strong industrial capital backing: TSMC, the chip manufacturing leader, recently projected during its earnings call that its AI business will grow at a ~45% CAGR from 2024 to 2029, reaching nearly 20 times its 2024 size by 2029. This demand is primarily driven by compute investments from giants like OpenAI, signaling that AI will permeate nearly every imaginable application area.

AI emerges as TSMC’s fastest-growing future business segment
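As a quick check on growth figures like this, the multiple implied by a compound annual growth rate is (1 + CAGR) raised to the number of years. The short Python sketch below assumes exactly 45% growth per year over the five years from 2024 to 2029; the function names are illustrative.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Size at the end of the period relative to the start, given a constant CAGR."""
    return (1 + cagr) ** years


def implied_cagr(multiple: float, years: int) -> float:
    """Constant annual growth rate needed to reach `multiple` in `years` years."""
    return multiple ** (1 / years) - 1


print(round(growth_multiple(0.45, 5), 2))  # 6.41
print(round(implied_cagr(20, 5), 3))       # 0.821
```

So a ~45% CAGR over five years corresponds to roughly a 6.4x increase, while a 20x increase over the same span would require about 82% growth every year.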

2027 is likely to become a pivotal moment for rapid AI capability growth. Around this time, many tasks previously requiring human judgment and creativity will gradually be replaced or redefined by models, triggering profound changes in societal structures and economic models.

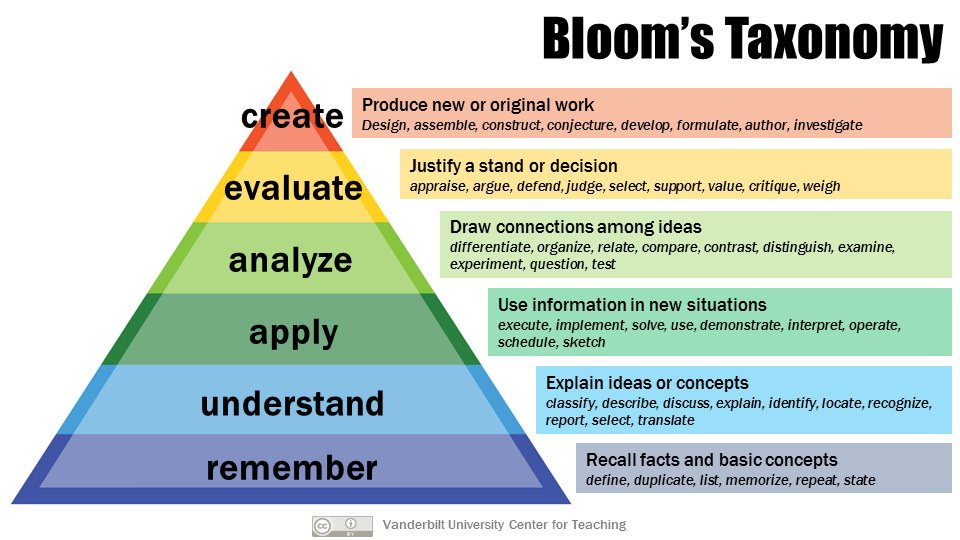

Take Bloom's Taxonomy, a framework I personally appreciate. Originally proposed by Benjamin Bloom and later revised, it divides human cognition into six levels. With AI advancing this quickly, by 2027 large models might handle every level except perhaps the top two, evaluating and creating, and could possibly surpass us even in creative tasks. Time is running short for humanity. What will humans do then? That's a topic worth exploring separately.

Blade Runner (1982)

5. Why AI Projects Should Build on the Monad Ecosystem

Back to the main point: many still view blockchain-AI integration as mere “hype.” However, I believe combining AI projects with Monad’s technical features offers unique practical value, making Monad the best choice for building blockchain-based AI applications. Here’s why:

- Rich data from the EVM ecosystem: Access to diverse, high-quality data is crucial for training accurate and capable models. Over the years, the EVM ecosystem has accumulated vast amounts of smart contract data, transaction records, and user behavior, providing rich context for AI training. Compared with newer blockchain environments, the mature EVM ecosystem is a “data goldmine” for AI training and inference.

- MonadDB enables real-time data access: Timely data is vital for AI, especially in DeFi. MonadDB, Monad's custom database for storing blockchain state, is built for fast data retrieval, and together with low gas fees it lets models access the latest on-chain information almost instantly.

- High speed and low cost: Monad is designed for 10,000 transactions per second with 1-second block times, giving AI agents a friendly environment for economic activity through on-chain operations such as trading, staking, and payments. An efficient, low-cost EVM environment gives agents practical tools for acting in the real economy, and low fees make high-frequency micro-transactions feasible, widening the range of interactive AI agent use cases.

-

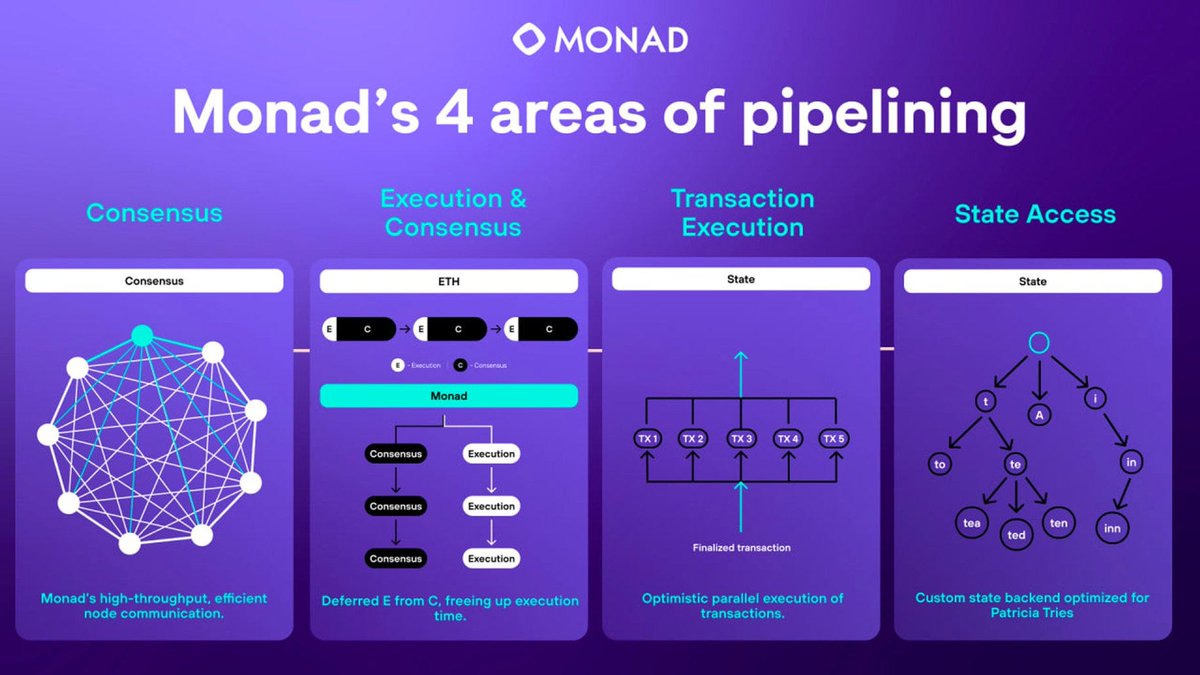

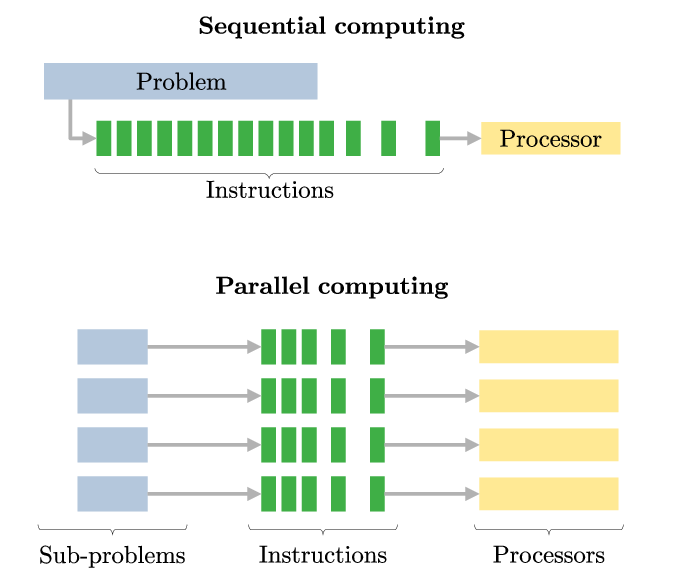

Parallel execution: Existing research shows that hybrid AI collaboration can tackle more complex and challenging tasks. Monad’s parallel execution environment allows Agentic Swarms—multiple AI agents—to run simultaneously on-chain, enabling collaborative multi-model operations.
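Monad describes its parallel execution as optimistic: transactions in a block run concurrently against the same starting state, their results are then committed in the original order, and any transaction whose inputs were changed by an earlier one is re-executed, so the final state matches serial execution. The Python sketch below is a simplified, single-threaded illustration of that idea under stated assumptions (a key-value state, transactions written as functions, read sets tracked at run time). It is not Monad's implementation, and `Tx`, `transfer`, and the agent accounts are hypothetical.

```python
from collections.abc import Callable
from dataclasses import dataclass

State = dict[str, int]
Reader = Callable[[str], int]


@dataclass
class Tx:
    name: str
    run: Callable[[Reader], dict[str, int]]  # reads via `read`, returns its writes


def execute_tracked(tx: Tx, state: State) -> tuple[set[str], dict[str, int]]:
    """Run a transaction against `state`, recording which keys it read."""
    reads: set[str] = set()

    def read(key: str) -> int:
        reads.add(key)
        return state.get(key, 0)

    return reads, tx.run(read)


def optimistic_parallel_execute(txs: list[Tx], state: State) -> tuple[State, list[str]]:
    # Phase 1: speculatively execute every tx against the same pre-block snapshot.
    # These runs are independent, so a real engine could do them concurrently.
    snapshot = dict(state)
    speculative = [execute_tracked(tx, snapshot) for tx in txs]

    # Phase 2: commit results in the original transaction order.
    committed = dict(state)
    written: set[str] = set()
    reexecuted: list[str] = []
    for tx, (reads, writes) in zip(txs, speculative):
        if reads & written:  # an earlier tx wrote a key this tx read: result is stale
            reads, writes = execute_tracked(tx, committed)
            reexecuted.append(tx.name)
        committed.update(writes)
        written.update(writes)
    return committed, reexecuted


def transfer(src: str, dst: str, amount: int) -> Callable[[Reader], dict[str, int]]:
    """Hypothetical agent payment: move `amount` from src to dst if funds allow."""
    def run(read: Reader) -> dict[str, int]:
        balance = read(src)
        if balance < amount:
            return {}
        return {src: balance - amount, dst: read(dst) + amount}
    return run


state = {"agent_a": 100, "agent_b": 50, "agent_c": 0, "agent_d": 0}
txs = [
    Tx("a->c", transfer("agent_a", "agent_c", 30)),
    Tx("b->d", transfer("agent_b", "agent_d", 20)),
    Tx("a->d", transfer("agent_a", "agent_d", 50)),  # conflicts with both earlier txs
]
final, redone = optimistic_parallel_execute(txs, state)
print(final)   # {'agent_a': 20, 'agent_b': 30, 'agent_c': 30, 'agent_d': 70}
print(redone)  # ['a->d']
```

Only the third payment had to be redone; the first two touch disjoint accounts, so their speculative results were kept as they were. A production engine would also need to track reads at the level of individual storage slots and keep re-execution cheap, which this sketch ignores.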

In summary, the goal is to let developers build current and future AI agents seamlessly on Monad, using the mature EVM environment and existing DeFi protocols and infrastructure as the foundation for next-generation AI experiences.
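As an illustration of how EVM transaction records can become model inputs, here is a minimal Python sketch that flattens a block returned by the standard Ethereum JSON-RPC method `eth_getBlockByNumber` (called with full transaction objects) into feature rows. It assumes Monad's RPC returns the same EVM-standard block format; the sample block is made-up data, the feature choice is hypothetical, and the network call itself is left out so the snippet runs offline. The second sample transaction carries the 4-byte selector of the ERC-20 `transfer` function, `0xa9059cbb`.

```python
import json

WEI_PER_ETHER = 10**18  # 18-decimal native token, as on Ethereum


def build_request(block_number: int, request_id: int = 1) -> str:
    """JSON-RPC payload for eth_getBlockByNumber with full transaction objects."""
    return json.dumps({
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "eth_getBlockByNumber",
        "params": [hex(block_number), True],
    })


def block_to_rows(block: dict) -> list[dict]:
    """Flatten a block's transactions into simple, model-ready feature rows."""
    number = int(block["number"], 16)
    timestamp = int(block["timestamp"], 16)
    rows = []
    for tx in block["transactions"]:
        data = tx.get("input", "0x")
        rows.append({
            "block": number,
            "timestamp": timestamp,
            "from": tx["from"],
            "to": tx["to"],  # None for contract creation
            "value_native": int(tx["value"], 16) / WEI_PER_ETHER,
            "has_calldata": data != "0x",
            "selector": data[:10] if len(data) >= 10 else None,  # 4-byte function id
        })
    return rows


# Made-up block in the JSON-RPC response shape (hex-encoded quantities).
sample_block = {
    "number": hex(123),
    "timestamp": hex(1_700_000_000),
    "transactions": [
        {"from": "0x" + "11" * 20, "to": "0x" + "22" * 20,
         "value": hex(2 * WEI_PER_ETHER), "input": "0x"},
        {"from": "0x" + "33" * 20, "to": "0x" + "44" * 20,
         "value": "0x0", "input": "0xa9059cbb" + "00" * 64},
    ],
}
rows = block_to_rows(sample_block)
print(build_request(123))
print(rows[0]["value_native"], rows[1]["selector"])  # 2.0 0xa9059cbb
```

In practice the payload from `build_request` would be POSTed to an RPC endpoint and the `result` field of the response passed to `block_to_rows`.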

6. An Invitation to Chinese-Speaking AI Developers

As my colleagues Jing and Evan have often highlighted in their posts on X, including various AI-related articles and case studies, Monad has always welcomed and supported AI projects building in its ecosystem. For Chinese-speaking developers in particular, I'd like to add a few more reasons:

- Amid the broader U.S.-China AI competition, Chinese-speaking developers may face limits on cross-border resources or collaboration.

- The blockchain world, however, values globalization and decentralization. On Monad, you can serve users worldwide without worrying about being “labeled,” and use decentralization to work around geopolitical and technological barriers.

Monad sincerely invites all AI practitioners and developers to join the Monad ecosystem and co-create innovative, user-owned, sovereign products. Ambitious founders are welcome to build on Monad. We’re committed to supporting your success through various programs, including the ongoing evm/accathon, Mach Accelerator, Jumpstart Program, The Studio, The Foundry, and Monad Madness. Whether you're building AI-driven financial trading models on blockchain or decentralized adaptive-learning robots, Monad offers faster speeds, lower costs, and greater openness to support your innovation.
