With the rise of AI narratives, how does Heurist contribute to meeting computational demands for Agents?

TechFlow Selected

Heurist addresses the centralization risks, high costs, and low resource utilization of traditional centralized cloud services, while offering AI developers and users a novel approach to computing resource utilization.

By Pzai, Foresight News

In the cyber society of the future, AI will be a key part of human interaction, which makes infrastructure a critical area for breakthroughs. Demand for the computing and inference services that AI models consume is expanding rapidly. Heurist, a decentralized AI cloud computing protocol positioned as Web3 AI-as-a-Service infrastructure, recently announced a $2 million funding round backed by institutions including Amber Group, Contango Digital, Manifold Trading, and Selini Capital. What kind of infrastructure will it deliver to crypto users and AI developers?

Architecture

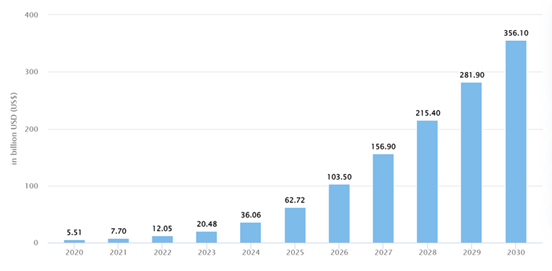

With the rapid growth of generative AI, the global generative AI market is projected to reach $356.1 billion by 2030. This surge brings increasing demand for computing resources and inference services.

Currently, many AI models run on traditional centralized cloud services, which face several challenges:

- Centralization risks: In centralized cloud environments, all data and computation are concentrated in a single data center, making them vulnerable to single points of failure. Storing all data in one location also increases the risk of data breaches and misuse.

- High costs: Running AI models requires significant computational power, and large model training in particular consumes substantial GPU and CPU resources. These resources are expensive, leading to high long-term operating costs, especially for enterprises that must run and update models continuously.

- Low resource utilization: Traditional centralized cloud services often suffer from uneven workloads during AI model training and inference, leaving insufficient capacity at peak times and idle resources the rest of the time. Resource contention among services further reduces overall utilization.

As an AI-as-a-Service infrastructure, Heurist leverages a DePIN protocol architecture that allows GPU owners to provide computing resources permissionlessly, while offering APIs enabling users and developers to access and run AI models on these distributed resources.

Compute Layer

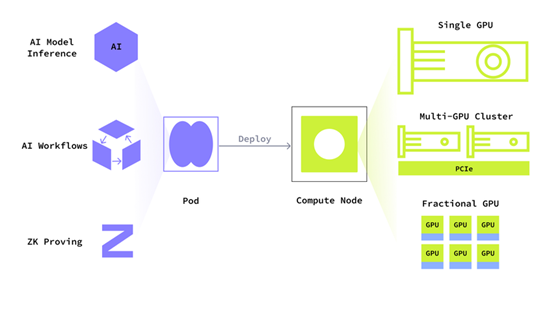

The compute layer consists of compute nodes, Pods, and a validation system. Compute nodes serve as the basic unit, allowing compute providers (miners) to join without permission and without fixed-term commitments.

Typical Pod pattern

A Pod is an independent, deployable workload unit that runs on GPUs. Through Pods, users specify their computational requirements so that the workload can be matched to suitable nodes. Pods support automatic scaling and can be used for AI model inference, fine-tuning, and ZK proof generation. Heurist also employs crypto-economic verification to ensure the integrity and reliability of computational results.
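The article does not publish Heurist's scheduler, but the idea of a Pod declaring its requirements and being matched to a suitable node can be sketched as follows. This is a minimal sketch under stated assumptions: `Node`, `PodSpec`, and `match_node` are hypothetical names, and the best-fit policy (pick the smallest idle GPU that satisfies the request) is one common scheduling choice, not necessarily the one Heurist uses.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Hypothetical compute node contributed by a miner."""
    node_id: str
    gpu_model: str
    vram_gb: int
    busy: bool = False


@dataclass
class PodSpec:
    """Hypothetical Pod requirements declared by the user."""
    min_vram_gb: int
    allowed_gpus: Optional[set[str]] = None  # None means any GPU model is acceptable


def match_node(spec: PodSpec, nodes: list[Node]) -> Optional[Node]:
    """Return the idle node with the least VRAM that still satisfies the spec, or None."""
    candidates = [
        n for n in nodes
        if not n.busy
        and n.vram_gb >= spec.min_vram_gb
        and (spec.allowed_gpus is None or n.gpu_model in spec.allowed_gpus)
    ]
    if not candidates:
        return None
    # Best fit: keep larger GPUs free for Pods that actually need them.
    return min(candidates, key=lambda n: n.vram_gb)
```

A production scheduler would likely also weigh price, latency, and node reputation; best fit is shown only because it is easy to read.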

Orchestration Layer

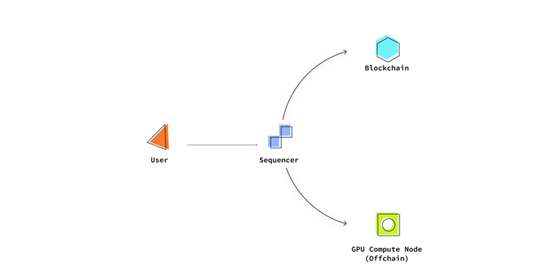

Heurist uses ZK Stack to build a high-throughput, low-cost execution environment that gives users interoperable, sovereign isolation and keeps operations and resource management stable. For on-chain sequencing, transactions are first executed off-chain and committed to the chain only after they complete successfully, so users do not pay on-chain costs for transactions that fail.
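The execute-then-commit model can be illustrated with a toy ledger. In this sketch, all names are hypothetical and the in-memory `Ledger` merely stands in for chain state: each transaction runs off-chain first, and only successful results are appended, so a failing transaction never incurs an on-chain cost.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Any, Callable


@dataclass
class Ledger:
    """Hypothetical stand-in for on-chain state: holds committed results only."""
    committed: list[tuple[int, Any]] = field(default_factory=list)


def execute_then_commit(ledger: Ledger, txs: list[Callable[[], Any]]) -> list[int]:
    """Execute each transaction off-chain and commit only the successful ones.

    Returns the indices of transactions that failed and were never committed.
    """
    failed: list[int] = []
    for i, tx in enumerate(txs):
        try:
            result = tx()  # off-chain execution: nothing is written to the ledger yet
        except Exception:
            failed.append(i)  # the failure is dropped before it reaches the chain
            continue
        ledger.committed.append((i, result))  # commit only after successful execution
    return failed


# Usage: the second transaction raises, so only the first and third are committed.
ledger = Ledger()
failed = execute_then_commit(ledger, [lambda: 1 + 1, lambda: 1 / 0, lambda: "ok"])
# failed == [1]; ledger.committed == [(0, 2), (2, "ok")]
```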

Application Layer

Anyone can build frontends at the application layer. Heurist offers two payment gateway models: developers may prepay for resources via compute credits or distribute costs directly to end-users based on usage. The latter also enables autonomous payment options for AI Agents.
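The two payment gateway models differ mainly in who holds the balance. The sketch below contrasts them under stated assumptions: `PrepaidAccount`, `UsageMeter`, the integer credit units, and the per-unit price are hypothetical and do not reflect Heurist's actual API or pricing.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class PrepaidAccount:
    """Model 1 (hypothetical): the developer prepays compute credits; requests draw them down."""
    credits: int

    def charge(self, cost: int) -> bool:
        """Deduct `cost` credits; reject the request if the balance is too low."""
        if cost > self.credits:
            return False
        self.credits -= cost
        return True


@dataclass
class UsageMeter:
    """Model 2 (hypothetical): cost is passed through to whoever makes the request,
    which can be an end user or an autonomous AI Agent."""
    unit_price: int  # hypothetical price per compute unit, in integer token base units
    owed: dict[str, int] = field(default_factory=dict)

    def record(self, payer: str, units: int) -> int:
        """Bill `payer` for `units` of compute and return the amount charged."""
        amount = units * self.unit_price
        self.owed[payer] = self.owed.get(payer, 0) + amount
        return amount
```

Keying the usage meter by payer rather than by developer is what would let an Agent with its own wallet pay for its inference directly.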

In summary, Heurist’s decentralized architecture improves fault tolerance and data security, lowers operating costs by tapping idle computing resources, raises resource utilization through dynamic allocation, adds flexibility and scalability through load balancing, and safeguards the integrity and reliability of computation through crypto-economic verification built on the ZK Stack. Together, these let the system adapt efficiently and securely to diverse workloads while delivering a stable experience for users and developers.
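The article does not detail how Heurist's verification works. One common crypto-economic pattern in decentralized compute networks is to have a validator recompute a sampled task, compare result hashes, and slash the miner's stake on a mismatch; the sketch below illustrates only that general pattern. `Miner`, `verify_and_settle`, the 50% slash fraction, and the exact-hash comparison are assumptions. Real AI inference can be nondeterministic, so a production scheme would need fixed seeds or a tolerance-based comparison.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass


@dataclass
class Miner:
    """Hypothetical miner record."""
    miner_id: str
    stake: float  # tokens the miner has locked as collateral


def result_hash(output: bytes) -> str:
    """Fingerprint of a task result so results can be compared cheaply."""
    return hashlib.sha256(output).hexdigest()


def verify_and_settle(miner: Miner, claimed_hash: str, recomputed_output: bytes,
                      reward: float, slash_fraction: float = 0.5) -> bool:
    """Compare the miner's claimed hash with a validator's recomputation.

    On a match, the reward is credited to the miner's stake; on a mismatch,
    a fraction of the stake is slashed. Returns True if the result was accepted.
    """
    if claimed_hash == result_hash(recomputed_output):
        miner.stake += reward
        return True
    miner.stake -= miner.stake * slash_fraction
    return False


def cheating_is_unprofitable(check_probability: float, stake: float,
                             slash_fraction: float, gain_from_cheating: float) -> bool:
    """Spot checks deter cheating when the expected penalty exceeds the gain."""
    return check_probability * stake * slash_fraction > gain_from_cheating
```

The last function shows why only a fraction of tasks needs rechecking: with a 10% check rate, 100 staked tokens, and a 50% slash, the expected penalty is 5 tokens, which outweighs any gain smaller than that.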

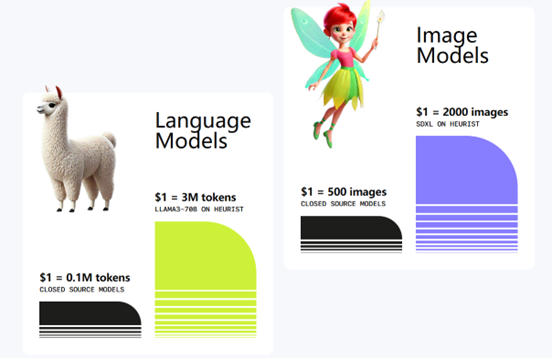

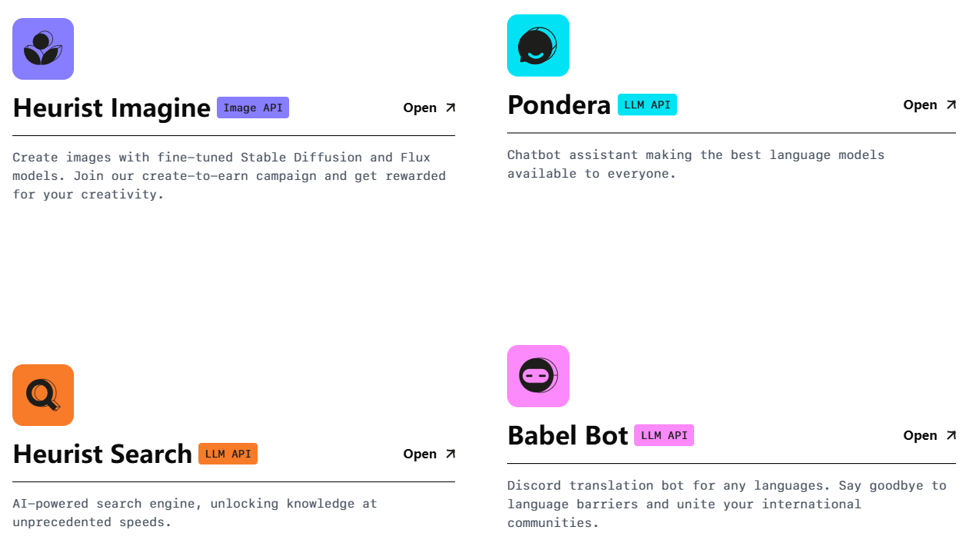

Products and Applications

Current mainstream applications built on Heurist include Heurist Imagine (AI NFT art creation), Pondera (AI conversation), Heurist Search (AI search), and Babel Bot (a Discord translation bot), covering the major AI use cases. Beyond common image-generation models, Heurist offers fine-tuned models focused on Web3 Agents and IPs, such as the Theia language model developed by Chainbase, the Zeek model native to the ZKsync ecosystem, and the Magus Devon model created by the Forgotten Runes community. By powering blockchain-based AI Agents with these highly customized, composable, and censorship-resistant Web3 AI models, and by running them on decentralized GPU computing, Heurist delivers cost efficiency several times higher than closed-source alternatives, positioning itself as both the model layer and the compute layer of the AI Agent ecosystem.

Ecosystem Development

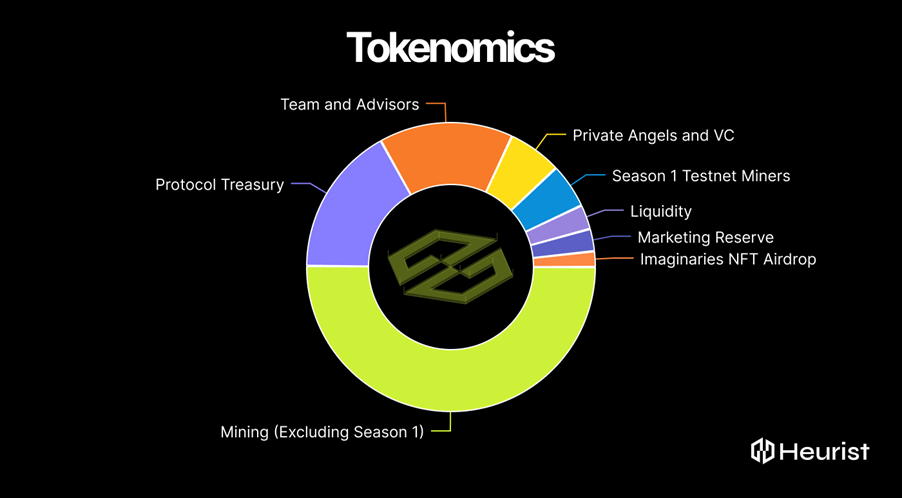

In terms of economic design, Heurist’s native token HEU will be used by developers to pay for computing resources, serve as gas for user transactions, and support staking and governance functions. Token distribution allocates 50% to computing power providers and 7% to early community members (including early miners and NFT holders). Heurist implements an elastic token release mechanism that dynamically adjusts annual inflation between 1.25% and 5%, enabling flexible scaling of computing resources to meet evolving computational demands.
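The article gives only the bounds of the elastic release mechanism (1.25% to 5% annual inflation), not the adjustment rule. The sketch below assumes, purely for illustration, that inflation rises linearly with network compute utilization; the function names and the utilization input are hypothetical.

```python
MIN_INFLATION = 0.0125  # 1.25% annual floor (from the article)
MAX_INFLATION = 0.05    # 5% annual ceiling (from the article)


def annual_inflation(utilization: float) -> float:
    """Hypothetical rule: scale inflation linearly with network compute utilization.

    `utilization` is the share of network compute capacity in use (0.0 to 1.0);
    out-of-range values are clamped, so the result always stays within the bounds.
    """
    u = min(max(utilization, 0.0), 1.0)
    return MIN_INFLATION + u * (MAX_INFLATION - MIN_INFLATION)


def tokens_minted(current_supply: float, utilization: float) -> float:
    """New tokens issued over one year at the rate implied by `utilization`."""
    return current_supply * annual_inflation(utilization)
```

Whatever rule Heurist actually uses, the clamp is the property the article describes: issuance can expand when demand for compute grows, but never leaves the 1.25% to 5% band.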

During its early development phase, Heurist launched the Heurist Imaginaries NFT collection, consisting of 500 AI-generated artworks. Holders of this series are eligible for a total airdrop of 1.75% of the HEU token supply.
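Assuming the 1.75% airdrop is split equally across all 500 NFTs (the article does not state the split rule), each NFT's share of the total HEU supply works out as:

```latex
\text{share per NFT} = \frac{1.75\%}{500} = 0.0035\% = 3.5 \times 10^{-5} \text{ of total supply}
```

Because the article does not give the total supply, the absolute number of tokens per NFT cannot be derived here.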

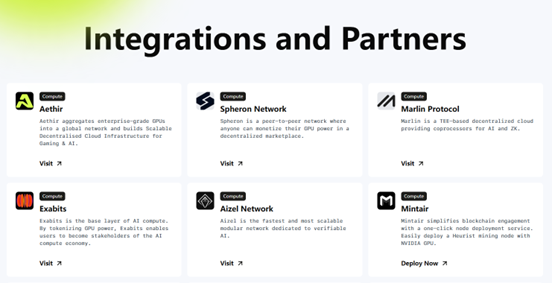

Heurist has established partnerships across multiple domains. On the GPU computing front, it collaborates with Aethir, Exabits, Spheron, and Mintair, providing robust computational infrastructure essential for AI model training and inference networks. In AI and data infrastructure, Heurist partners with GenLayer, Chasm Network, Aizel, and Gateway Network to ensure efficient and secure data flow.

For consumer applications, Heurist works with CreatorBid, Arbus AI, DecentAI, and Eden Art to bring AI technology into broader consumer markets. In AI Agent applications, Heurist provides CreatorBid with customized image-generation models, including specialized services for the WallStreetBets brand. Through these collaborations, Heurist continues to expand its influence and drive AI adoption across industries.

On the roadmap, the project plans to launch its token in Q4 2024, distribute the first season of mining-related token airdrops, and roll out developer API payment functionality. In 2025, it plans to introduce staking, followed by further mining phases and the ZK chain rollout, along with user payment features and validator systems. Users can participate by contributing GPUs (with 12GB+ VRAM, such as the RTX 3090 or 4090), joining Intract point campaigns, and taking part in the Create-to-earn program within Heurist Imagine to mint AI NFTs and earn ZK tokens.
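Before contributing a GPU, a prospective miner can check the 12GB+ VRAM requirement locally. The sketch below parses the CSV output of `nvidia-smi --query-gpu=name,memory.total --format=csv,noheader,nounits`, which reports memory in MiB. Interpreting "12GB" as 12 GiB (12,288 MiB) is an assumption, the threshold is left configurable, and Heurist's own miner software may apply a different check.

```python
from __future__ import annotations

import subprocess

MIN_VRAM_MIB = 12 * 1024  # "12GB+" from the article, interpreted here as 12 GiB


def parse_gpus(csv_text: str) -> list[tuple[str, int]]:
    """Parse `name, memory.total` lines (memory in MiB) into (name, mib) pairs."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem = line.rsplit(",", 1)  # split on the last comma in case a name contains one
        gpus.append((name.strip(), int(mem.strip())))
    return gpus


def eligible_gpus(csv_text: str, min_mib: int = MIN_VRAM_MIB) -> list[str]:
    """Names of GPUs whose total memory meets the threshold."""
    return [name for name, mib in parse_gpus(csv_text) if mib >= min_mib]


def query_local_gpus() -> str:
    """Run nvidia-smi and return its raw CSV output (requires NVIDIA drivers)."""
    return subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
```

On a machine with NVIDIA drivers installed, `eligible_gpus(query_local_gpus())` lists the cards that clear the threshold; keeping parsing separate from the subprocess call makes the check easy to test without a GPU.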

Conclusion

The future cyber society will increasingly rely on AI-human interactions. Heurist addresses the centralization risks, high costs, and low resource utilization inherent in traditional cloud services, offering a novel approach to computing resource utilization for AI developers and users. It has the potential to become a bridge connecting AI technology with the future, driving societal progress toward greater intelligence and laying solid technological foundations for a smarter, more connected, and secure cyber society. For more information, visit the project homepage, Discord, Twitter, and Medium.
