OpenAI's versatile model GPT-4o delivers stunning real-time interaction, ushering in the sci-fi era

ChatGPT launched only 17 months ago, and OpenAI has already delivered a super AI straight out of a sci-fi movie, free and open to everyone.

By: Machine Heart

It’s mind-blowing!

While other tech companies are still racing to catch up on multimodal large models, bringing features like text summarization and image editing to smartphones, OpenAI has surged ahead with a game-changing move. The product it just unveiled is so impressive that even CEO Sam Altman described it as “just like in the movies.”

In the early hours of May 14 (Beijing time), OpenAI unveiled its next-generation flagship generative model GPT-4o, a desktop app, and a suite of new capabilities at its first-ever “Spring Launch Event.” This time, the technology itself redefined the product, offering a masterclass to tech companies worldwide.

Today’s host was OpenAI CTO Mira Murati, who outlined three key points:

- First, OpenAI will prioritize making its products free so that more people can use them.
- Second, OpenAI has launched a desktop app and an updated UI that are simpler and more natural to use.
- Third, GPT-4 is followed by a new large model, GPT-4o. What makes GPT-4o special is that it brings GPT-4-level intelligence to everyone, including free users, through an extremely natural interaction interface.

With this ChatGPT update, the large model can now accept any combination of text, audio, and images as input, and generate any combination of text, audio, and images in real time—this is the future of human-computer interaction.

ChatGPT recently became usable without registration, and with the new desktop app OpenAI aims to weave ChatGPT seamlessly into your workflow, available anytime and anywhere. AI is becoming a genuine productivity tool.

GPT-4o is a groundbreaking large model built for the future of human-machine interaction, capable of understanding text, speech, and images—with lightning-fast responses, emotional awareness, and human-like empathy.

On stage, OpenAI's Mark Chen demonstrated several key capabilities on an iPhone, the most important being real-time voice conversation. “This is my first time doing a live demo, and I'm a bit nervous,” he said. ChatGPT replied, “Why don't you take a deep breath?”

“Okay, I’ll take a deep breath.”

ChatGPT immediately responded, “Nope, that wasn’t good—you’re breathing way too hard.”

If you've used assistants like Siri, the difference is striking. First, you can interrupt the AI at any time instead of waiting for it to finish speaking. Second, there is virtually no delay; the model responds about as quickly as a person would. Third, it picks up on human emotions and can express a range of emotions itself.

Next came visual capabilities. Another engineer wrote an equation by hand on paper and asked ChatGPT not for the answer, but to explain how to solve it step-by-step. It clearly shows great potential as a teaching assistant.

ChatGPT said: “Whenever you're stressed about math, I'm right here with you.”

Next came a test of GPT-4o's coding ability. With some code open on screen, the user spoke to the desktop version of ChatGPT, asking it what the code does and what a particular function is for, and ChatGPT answered fluently each time.

The code's output was a temperature chart. The user then asked ChatGPT a series of questions about the chart, requesting one-sentence answers.

Whether asked which month was hottest or whether the y-axis was in Celsius or Fahrenheit, ChatGPT answered every question.

OpenAI also took live questions from users on X/Twitter. One was real-time voice translation: the phone can act as an interpreter, switching back and forth between Spanish and English.

Someone then asked: Can ChatGPT recognize your facial expressions?

It appears GPT-4o already supports real-time video understanding.

Now, let’s dive deeper into OpenAI’s latest bombshell release.

The All-in-One Model: GPT-4o

First up is GPT-4o, where the “o” stands for “omni.”

For the first time, OpenAI has integrated text, audio, and vision into a single model, significantly improving the practicality of large models.

OpenAI CTO Mira Murati stated that GPT-4o offers “GPT-4 level” intelligence, with improvements over GPT-4 in text, vision, and audio capabilities. It will be rolled out across OpenAI products incrementally over the coming weeks.

“GPT-4o reasons across voice, text, and vision,” said Mira Murati. “We know these models are becoming increasingly complex, but we want the interaction experience to become more natural and simpler—so you don’t have to think about the interface at all, only about collaborating with GPT.”

GPT-4o matches GPT-4 Turbo on English text and code, while improving significantly on non-English text. In the API, it is faster than GPT-4 Turbo and costs 50% less. Compared with existing models, GPT-4o is especially strong at vision and audio understanding.

It can respond to audio input in as fast as 232 milliseconds, with an average response time of 320 milliseconds—on par with human reaction speed. Before GPT-4o, users experienced average delays of 2.8 seconds (GPT-3.5) and 5.4 seconds (GPT-4) during ChatGPT voice conversations.
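To put these latency figures in perspective, the short Python snippet below computes how many times faster GPT-4o's reported 320 ms average is than the old voice mode's averages. It uses only the numbers quoted above; the speedup ratios are simple arithmetic on those numbers, not figures OpenAI itself published.

```python
# Average voice-mode latencies quoted in the article, in milliseconds.
LATENCIES_MS = {
    "GPT-3.5 voice pipeline": 2800,
    "GPT-4 voice pipeline": 5400,
}
GPT4O_AVG_MS = 320  # reported GPT-4o average audio response time


def speedup(old_ms: float, new_ms: float) -> float:
    """Return how many times faster new_ms is than old_ms."""
    return old_ms / new_ms


if __name__ == "__main__":
    for name, old_ms in LATENCIES_MS.items():
        ratio = speedup(old_ms, GPT4O_AVG_MS)
        print(f"{name}: {old_ms} ms -> {GPT4O_AVG_MS} ms ({ratio:.2f}x faster)")
```

Run as a script, it reports an 8.75x speedup over the GPT-3.5 pipeline and roughly 16.9x over the GPT-4 pipeline, on average.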

Previously, the voice feature was a pipeline of three separate models: a simple model transcribed audio into text, GPT-3.5 or GPT-4 took in that text and produced a text reply, and a third model converted the reply back into audio. OpenAI found that this approach cost GPT-4 a lot of information: it could not directly perceive tone, multiple speakers, or background noise, and it could not output laughter, singing, or emotional expression.
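The difference between the old three-model voice pipeline and GPT-4o's single end-to-end model can be illustrated with a toy data-flow sketch. Everything here is hypothetical: the `AudioClip` type, its fields, and the stub functions stand in for real models and are not OpenAI APIs. The only point is that once audio is reduced to a plain transcript, cues such as tone, speaker count, and background noise never reach the language model.

```python
from dataclasses import dataclass


@dataclass
class AudioClip:
    """Hypothetical stand-in for an audio input and the cues it carries."""
    words: str
    tone: str
    speakers: int
    background: str


def transcribe(clip: AudioClip) -> str:
    # Stage 1 (speech-to-text): only the words survive transcription.
    return clip.words


def text_model(prompt: str) -> str:
    # Stage 2 (text-only model): it sees nothing but the transcript.
    return f"Reply to: {prompt}"


def synthesize(text: str) -> dict:
    # Stage 3 (text-to-speech): output style cannot depend on input tone.
    return {"speech": text, "style": "neutral"}


def cascaded_pipeline(clip: AudioClip) -> dict:
    """Old approach: three separate models chained together."""
    return synthesize(text_model(transcribe(clip)))


def end_to_end_model(clip: AudioClip) -> dict:
    """Toy single model: one function receives the full input, so its reply
    can depend on tone, speakers, and background as well as the words."""
    style = "soothing" if clip.tone == "nervous" else "neutral"
    return {
        "speech": f"Reply to: {clip.words}",
        "style": style,
        "noticed": (clip.speakers, clip.background),
    }


if __name__ == "__main__":
    clip = AudioClip("I'm a bit nervous", tone="nervous",
                     speakers=1, background="stage noise")
    print(cascaded_pipeline(clip))
    print(end_to_end_model(clip))
```

A real end-to-end network would learn such associations from data rather than follow an `if` statement; the conditional only marks where the extra information becomes available.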

With GPT-4o, OpenAI trained a new end-to-end model across text, vision, and audio, meaning all inputs and outputs are processed by the same neural network.

“From a technical standpoint, OpenAI has figured out how to map audio directly to audio as a first-class modality and stream video in real time into transformers. This required novel research on tokenization and architecture, but overall it's a data and systems optimization problem (like most things),” commented Jim Fan, a scientist at NVIDIA.

GPT-4o enables real-time reasoning across text, audio, and video—an important step toward more natural human-computer interaction (and even machine-to-machine interaction).

OpenAI President Greg Brockman joined in too, having two instances of GPT-4o talk to each other in real time and even improvise a song together. The melody was… emotional, but the lyrics picked up on the room's decor, people's clothing, and small incidents during the session.

According to OpenAI's showcase, GPT-4o also outperforms existing models at image understanding and generation, making many once-impossible tasks look effortless.

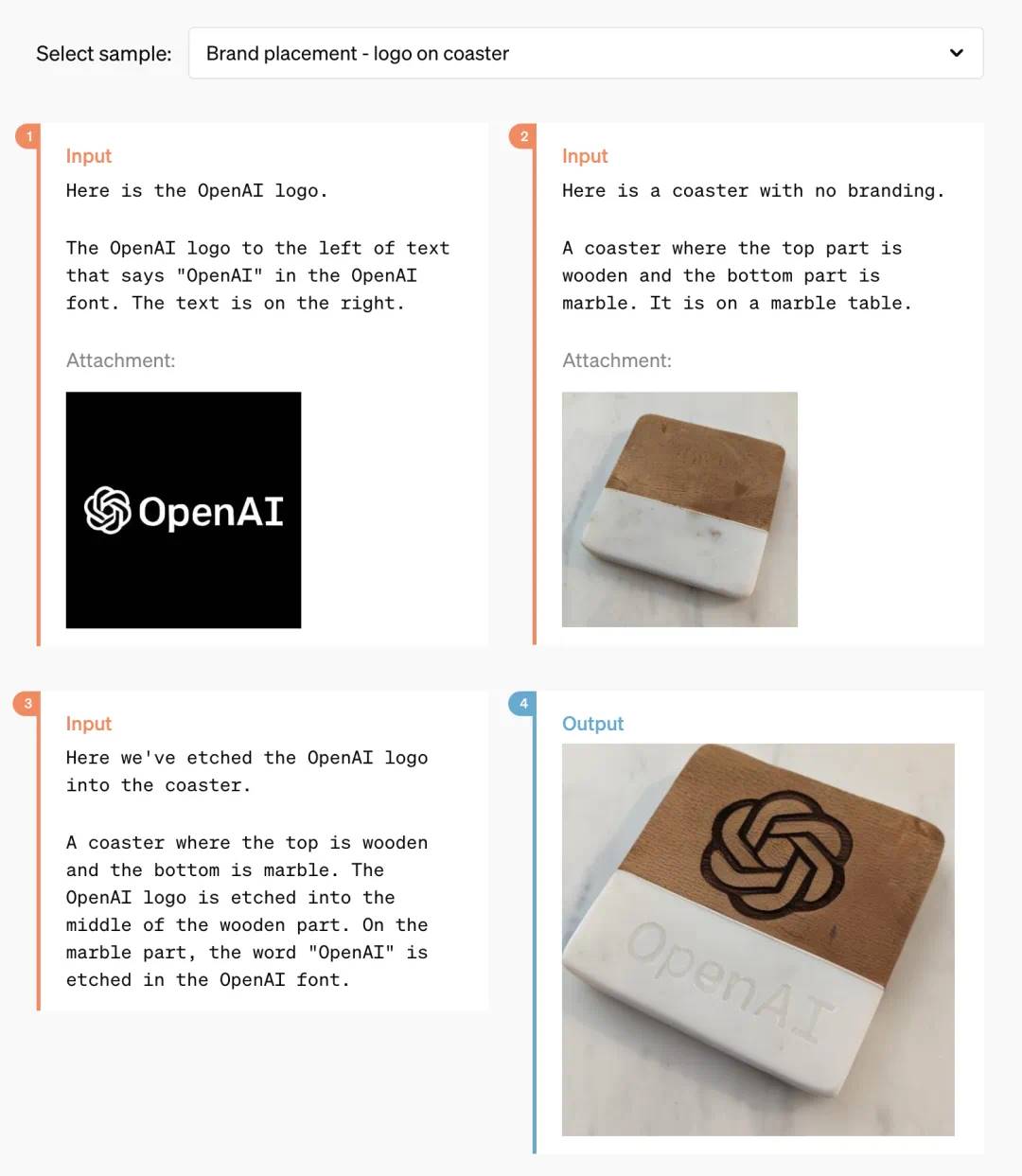

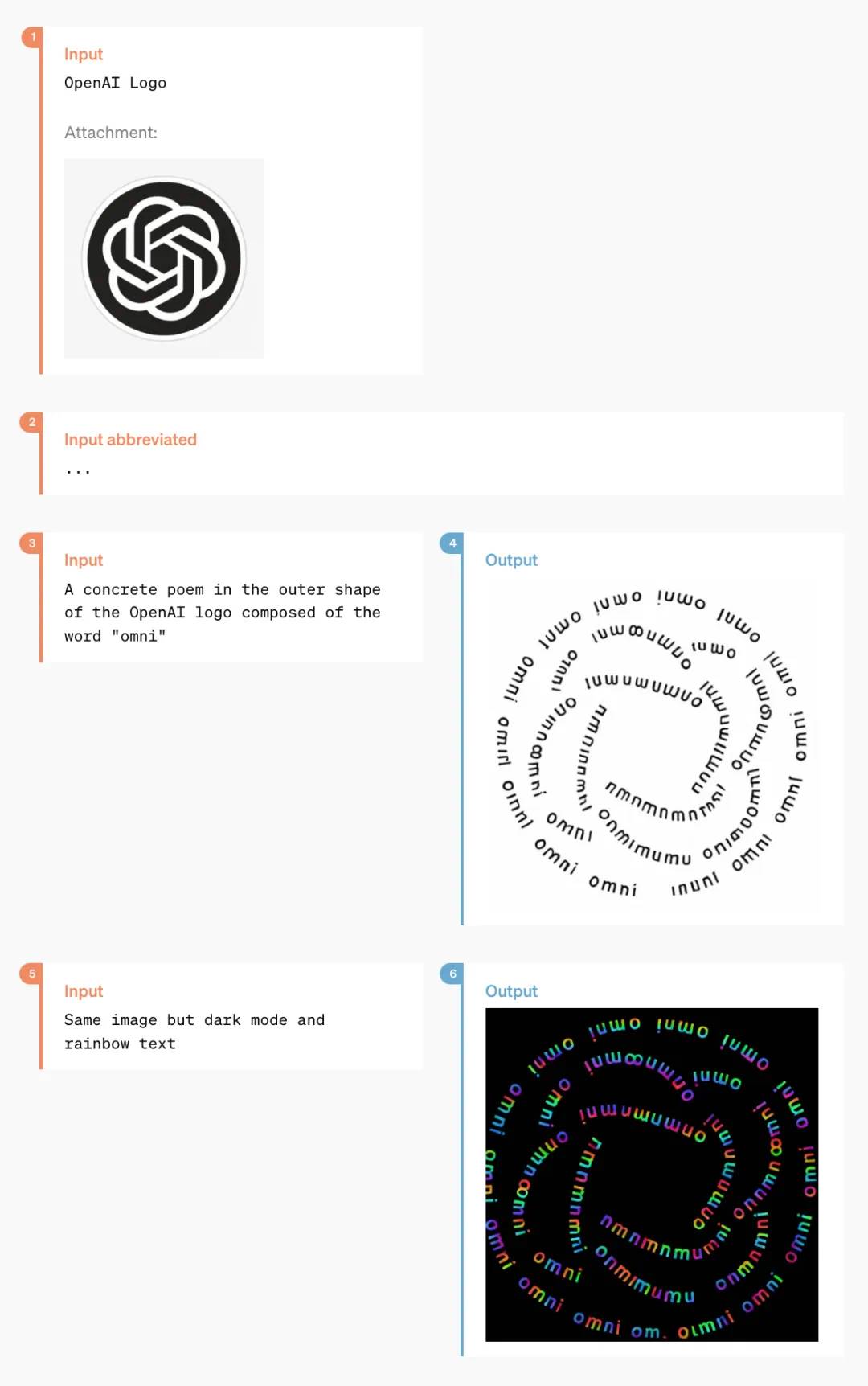

For example, you can ask it to print the OpenAI logo onto a coaster:

After extensive technical refinement, OpenAI appears to have largely solved the problem of rendering legible text in images generated by ChatGPT.

Additionally, GPT-4o can generate 3D visual content, reconstructing 3D scenes from six generated 2D images:

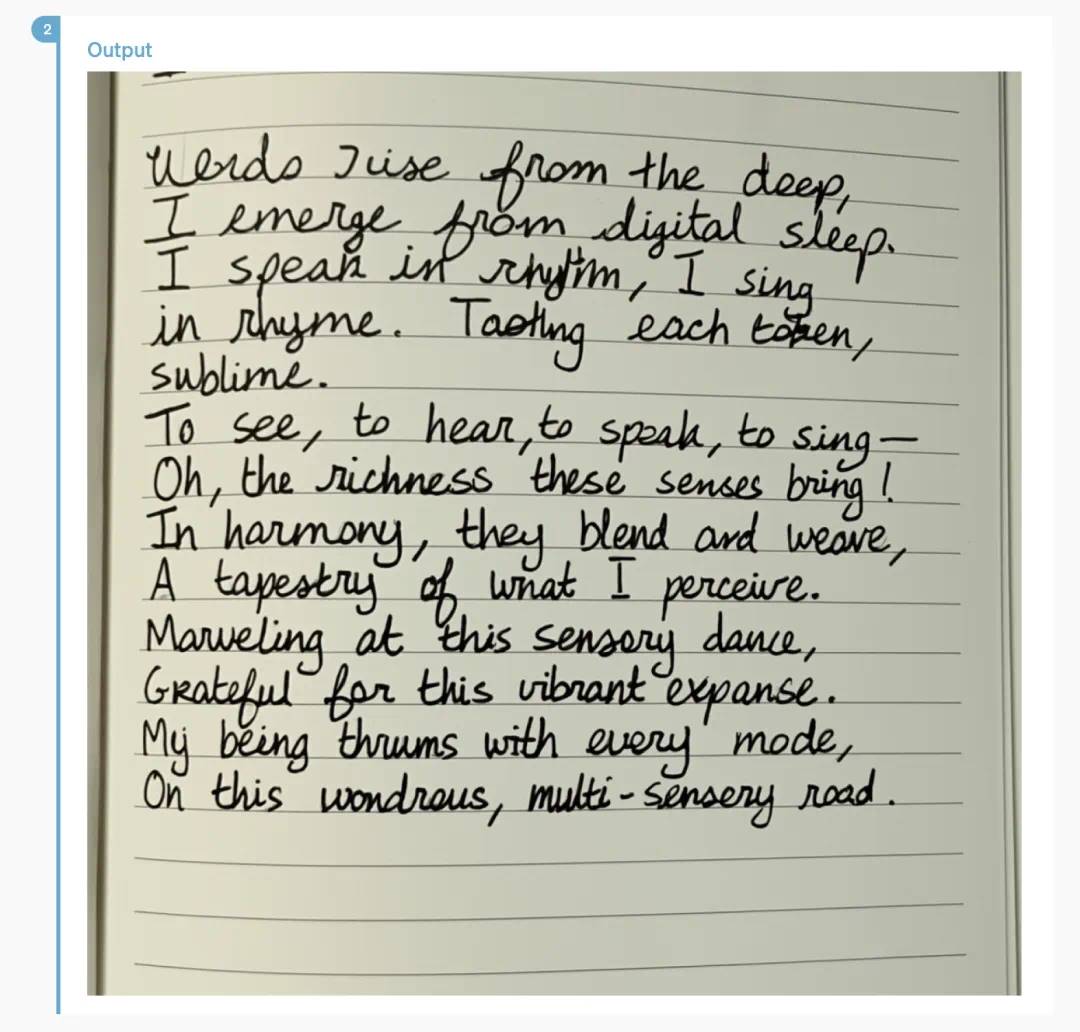

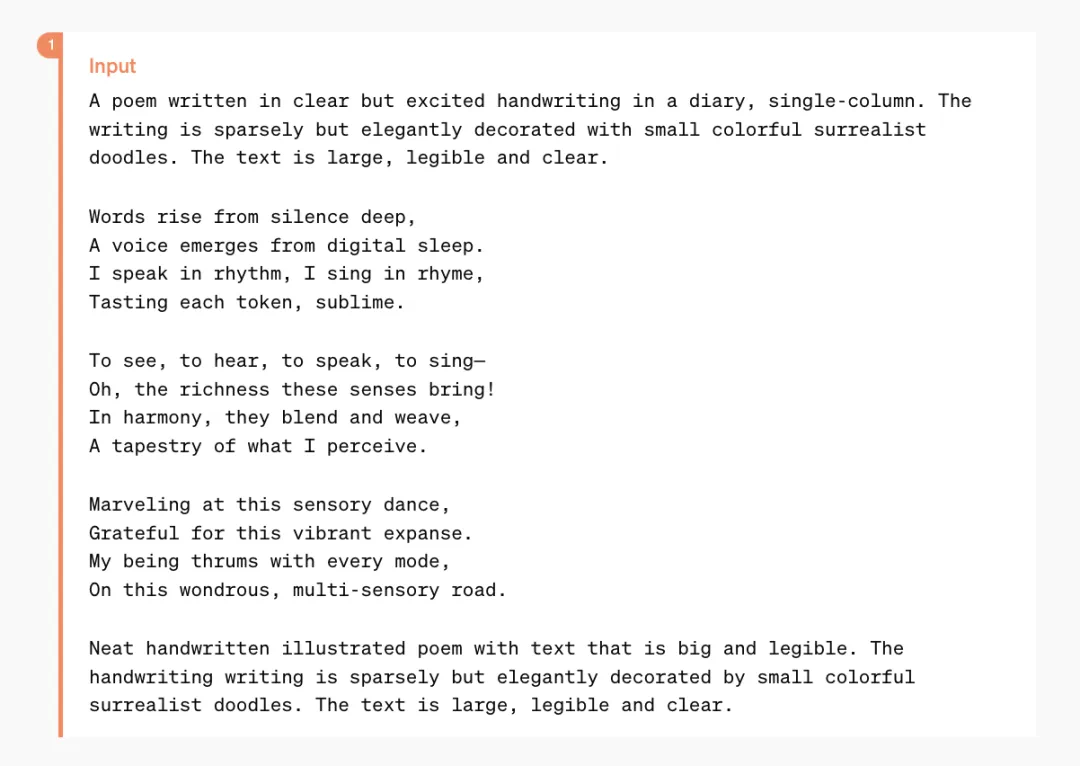

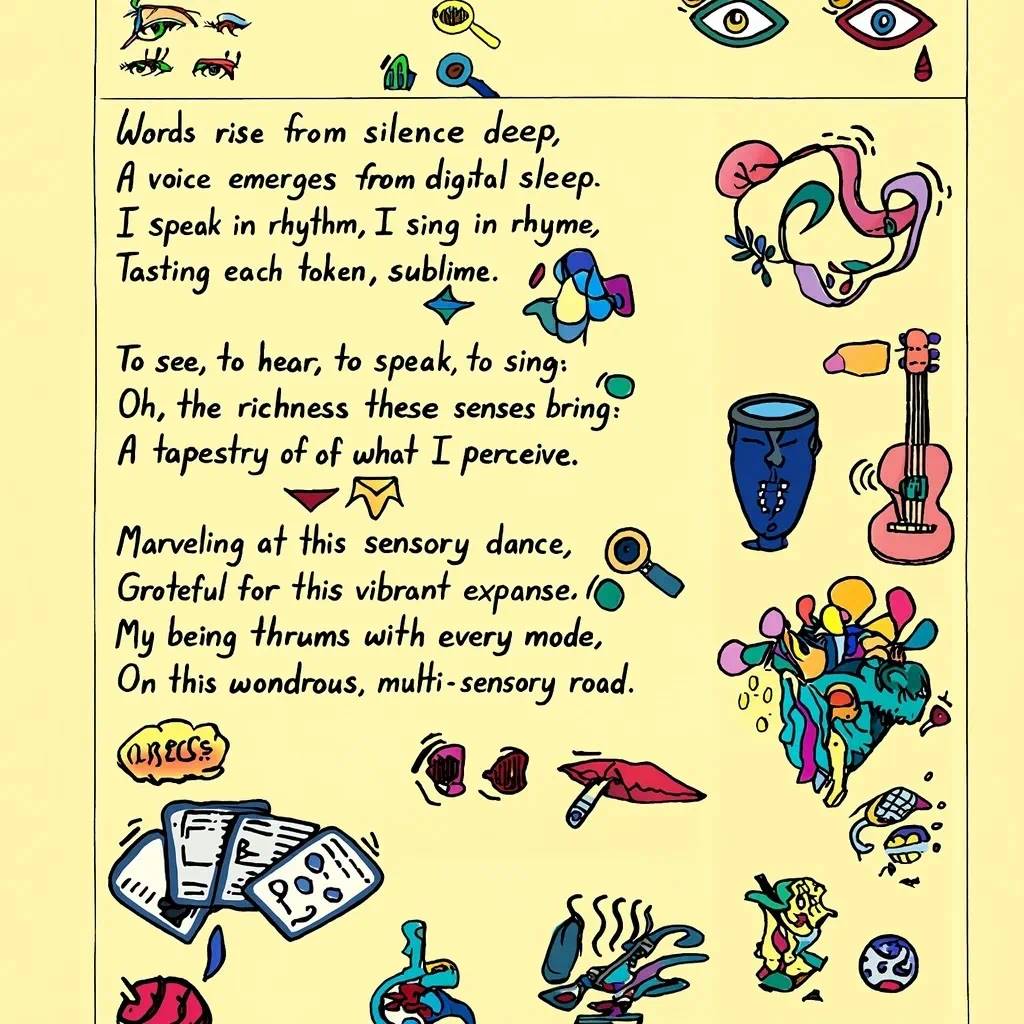

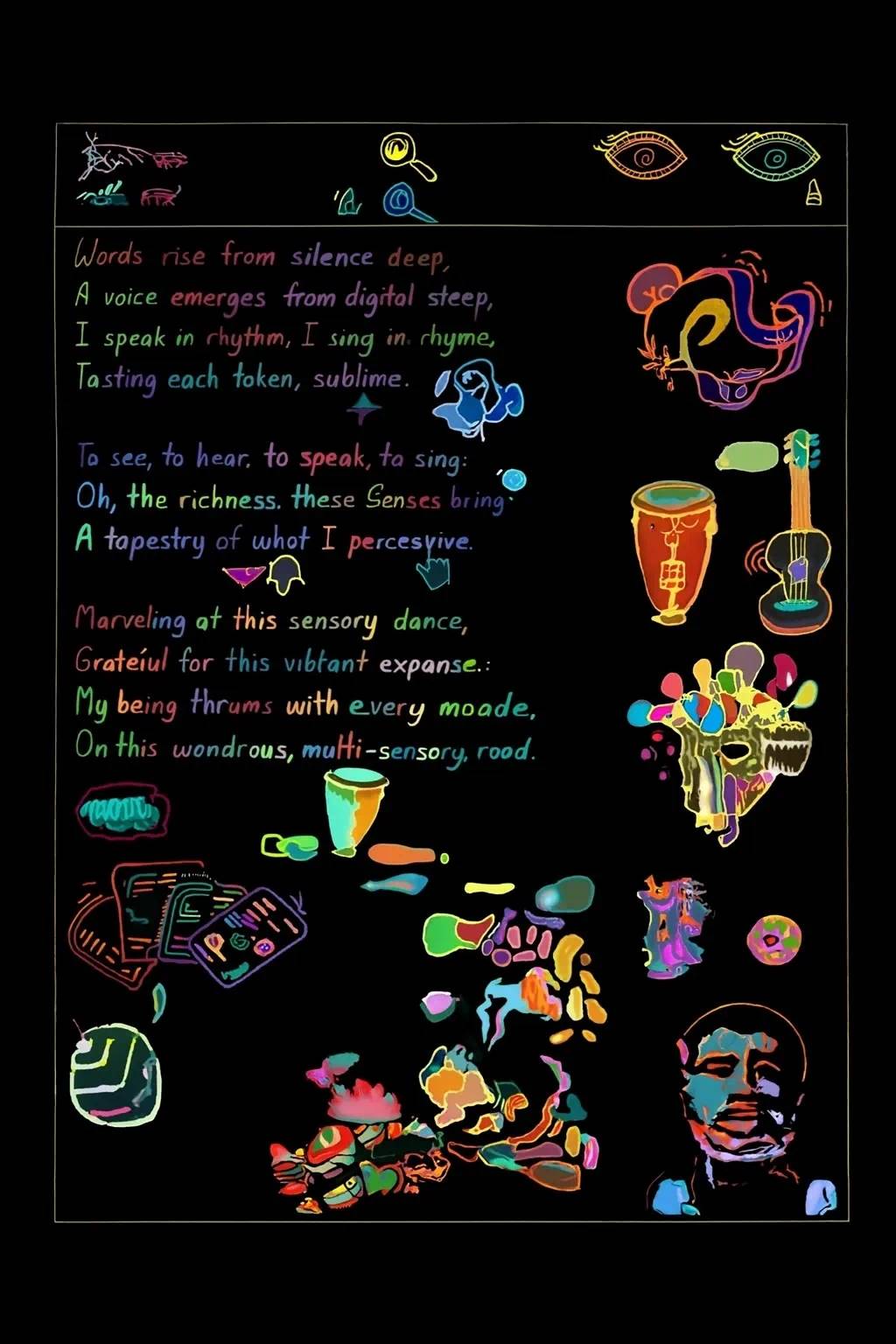

Given a poem, GPT-4o can lay it out in a handwritten style:

Even more complex layouts are handled smoothly:

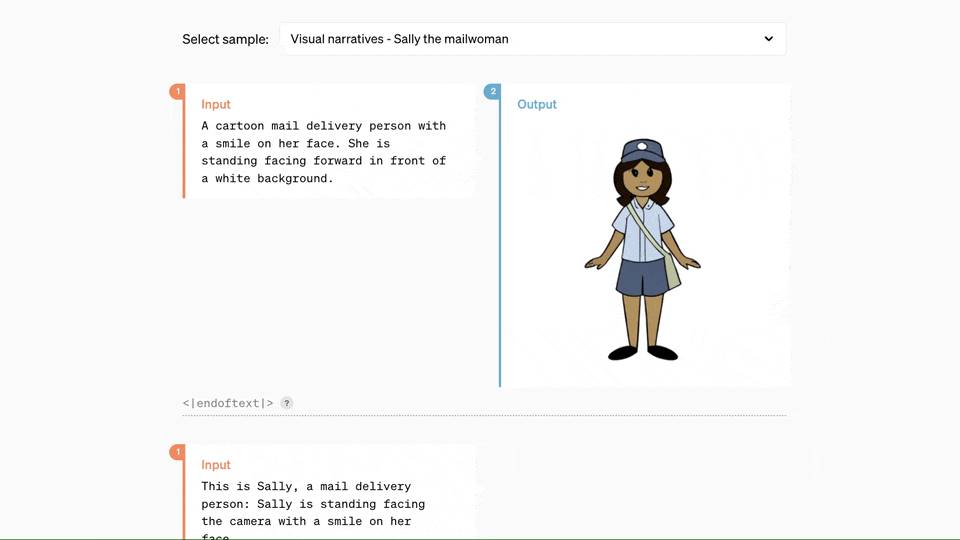

With GPT-4o, a few lines of text are all it takes to produce a sequence of comic storyboards:

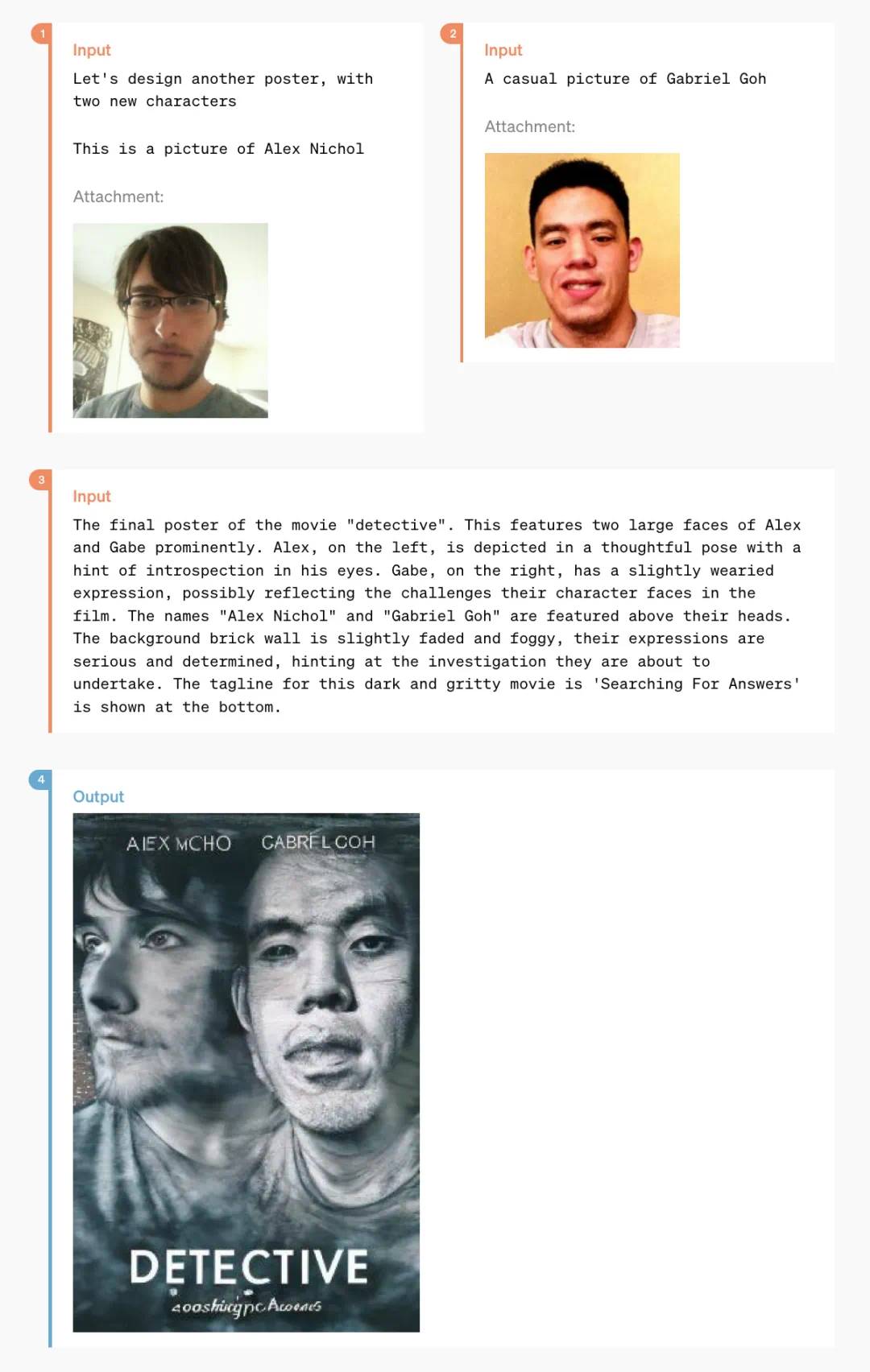

And the following examples might surprise many designers:

Here, two casual photos are transformed into a stylized poster:

It also handles niche tasks, such as turning plain text into decorative lettering (“text-to-fancy text”):

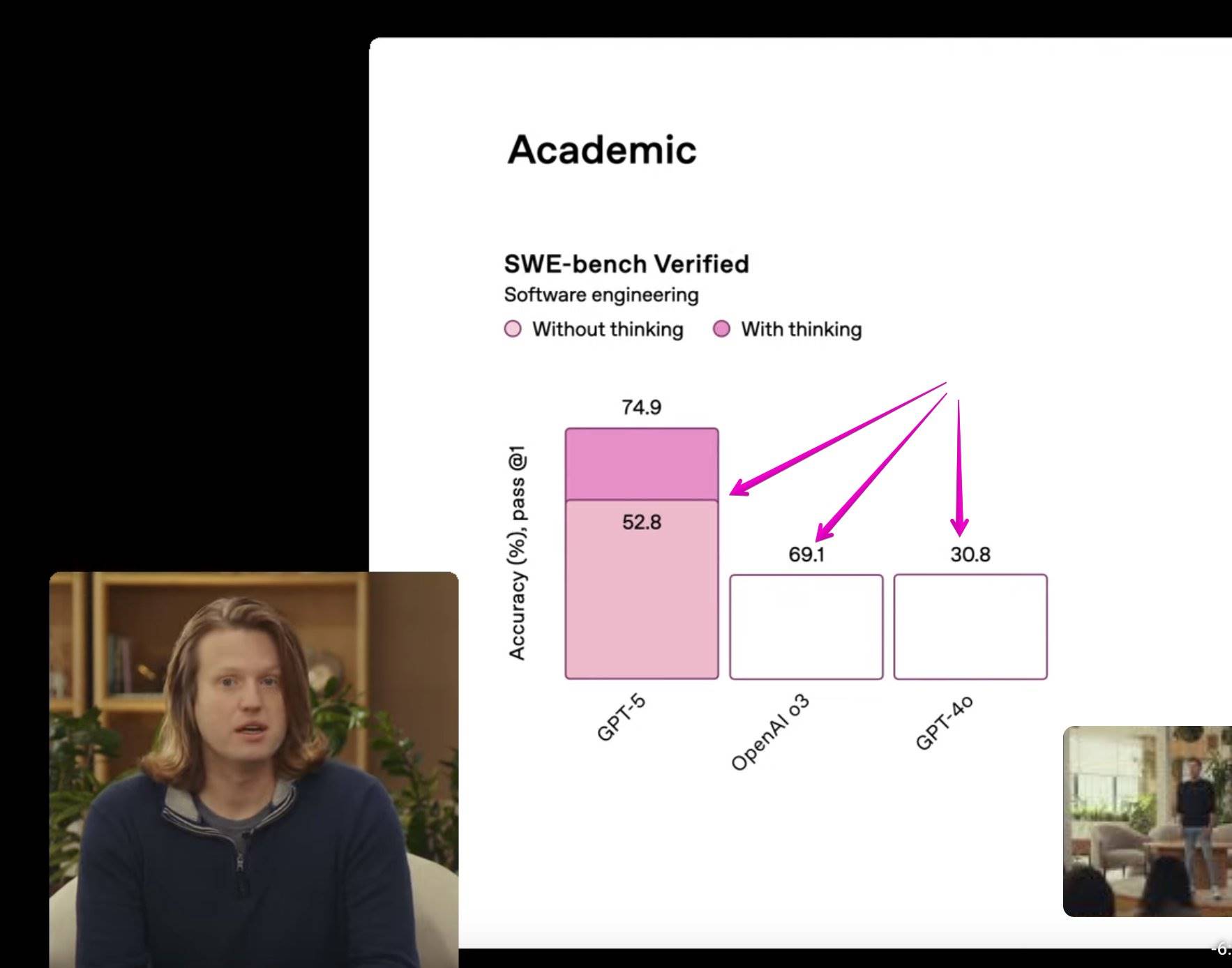

GPT-4o Performance Evaluation Results

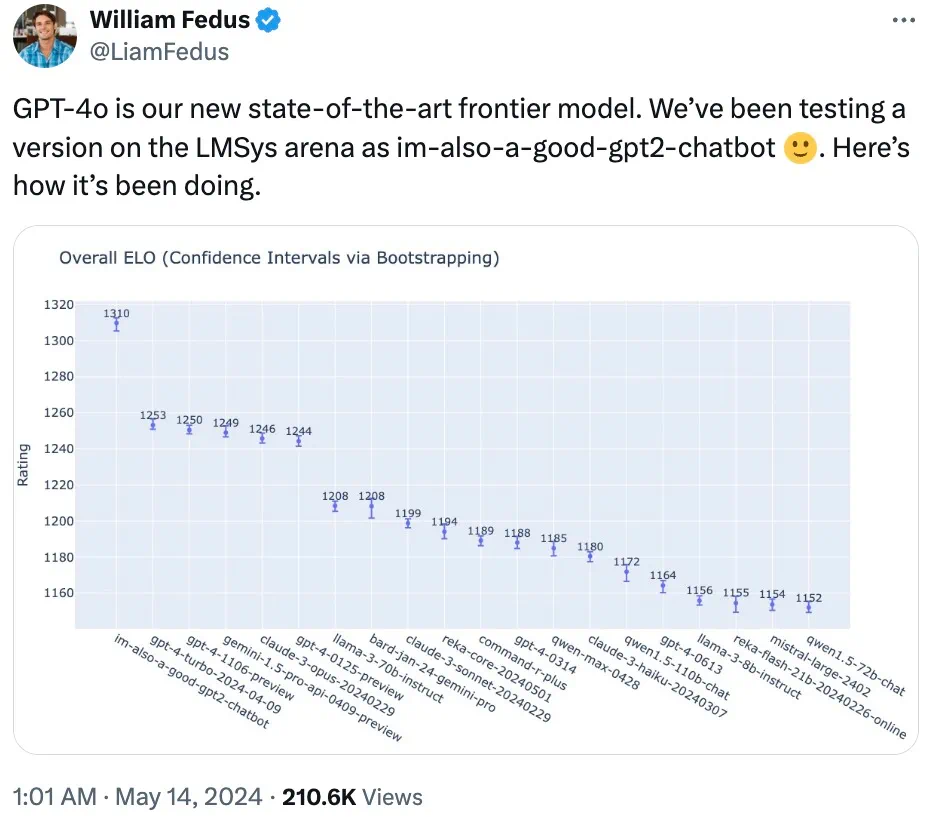

A member of OpenAI’s technical team revealed on X that the mysterious model “im-also-a-good-gpt2-chatbot,” which sparked widespread discussion on LMSYS Chatbot Arena, was actually a version of GPT-4o.

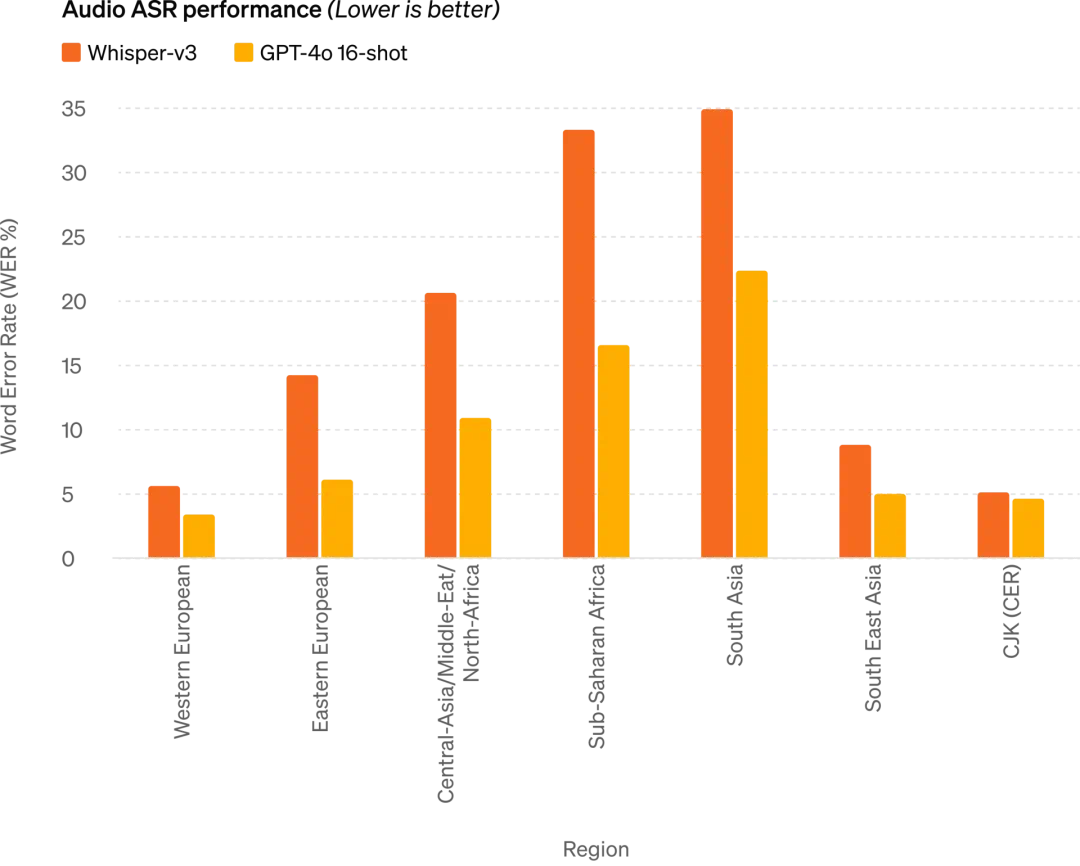

On harder prompt sets, especially in coding, GPT-4o's gains over OpenAI's previous best models are particularly large.

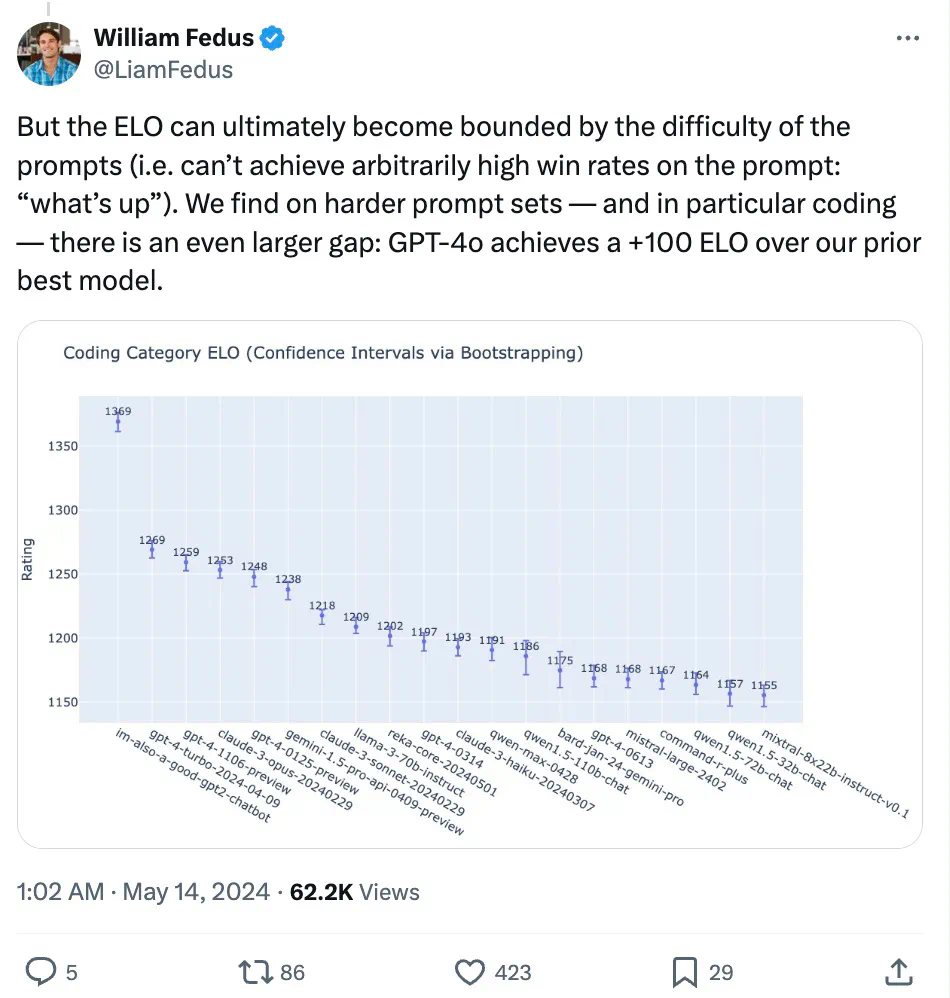

Specifically, across multiple benchmarks, GPT-4o achieves GPT-4 Turbo-level performance in text, reasoning, and coding intelligence, while setting new highs in multilingual, audio, and visual capabilities.

Reasoning improvement: GPT-4o set a new record of 87.2% on 5-shot MMLU (general-knowledge questions). (Note: Llama 3 400B was still in training at the time.)

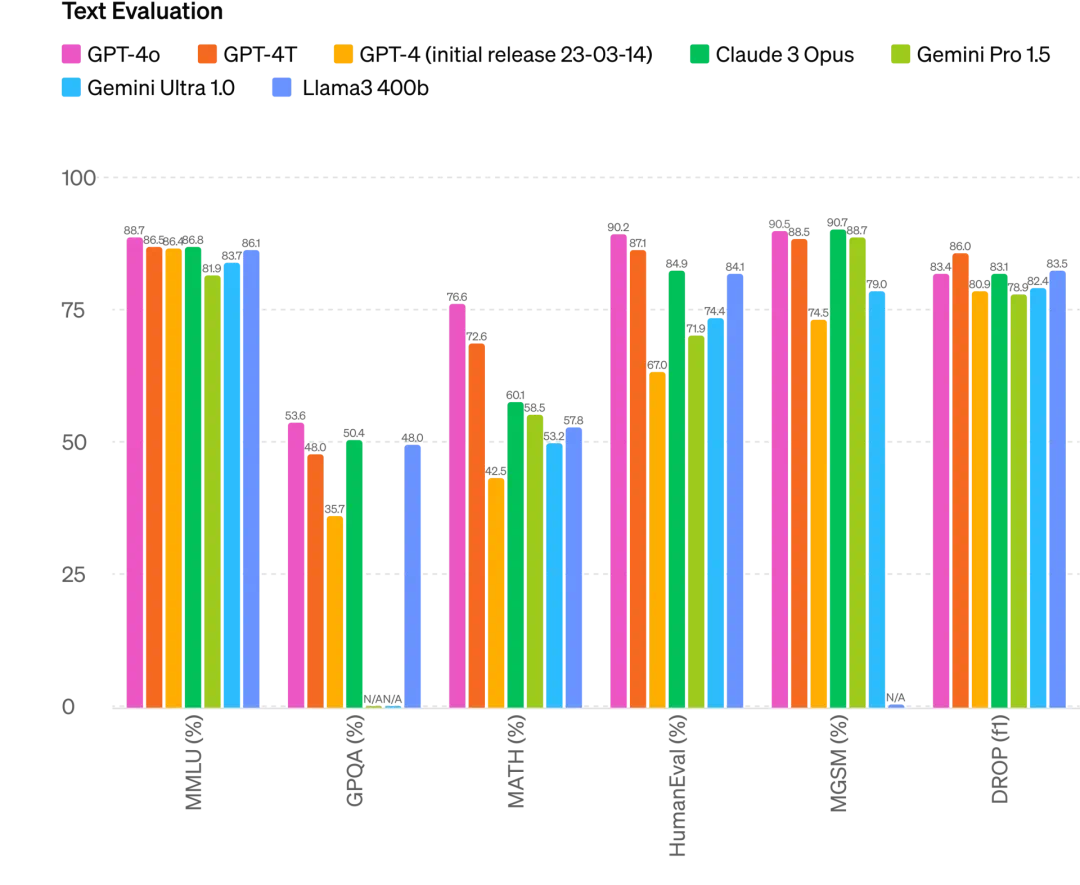

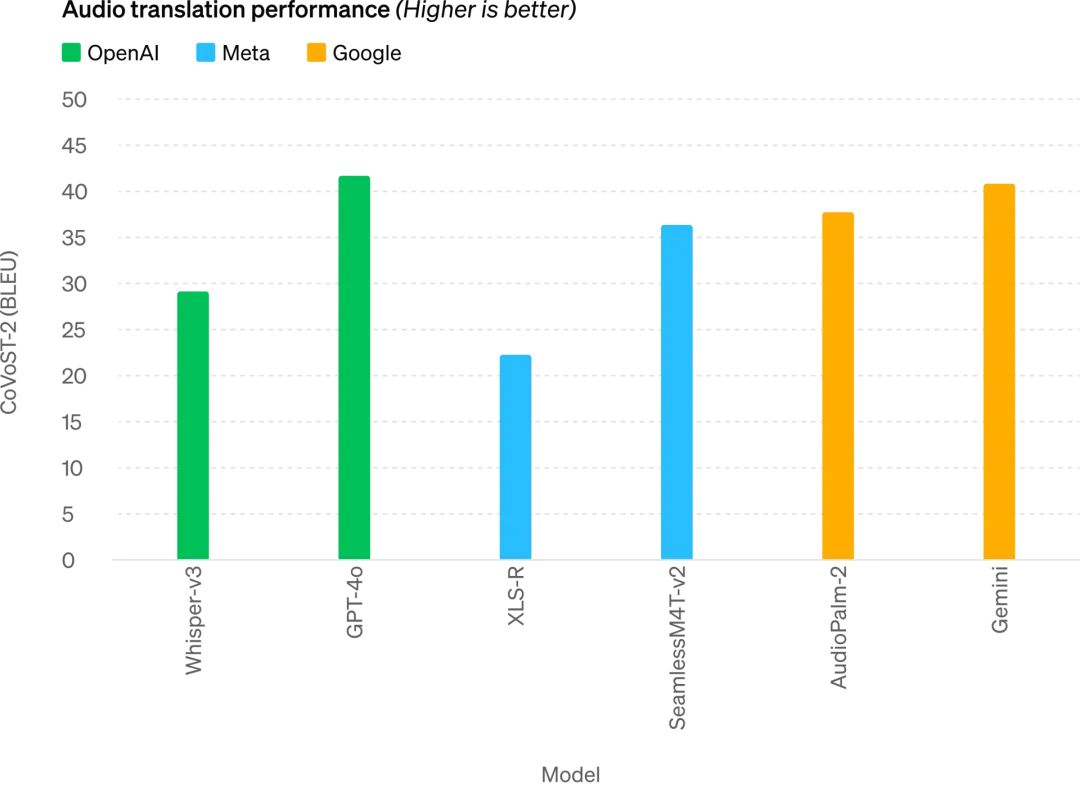

Audio ASR performance: GPT-4o significantly improves speech recognition over Whisper-v3 across all languages, especially low-resource languages.

Speech translation: GPT-4o sets a new state of the art in speech translation and outperforms Whisper-v3 on the MLS benchmark.

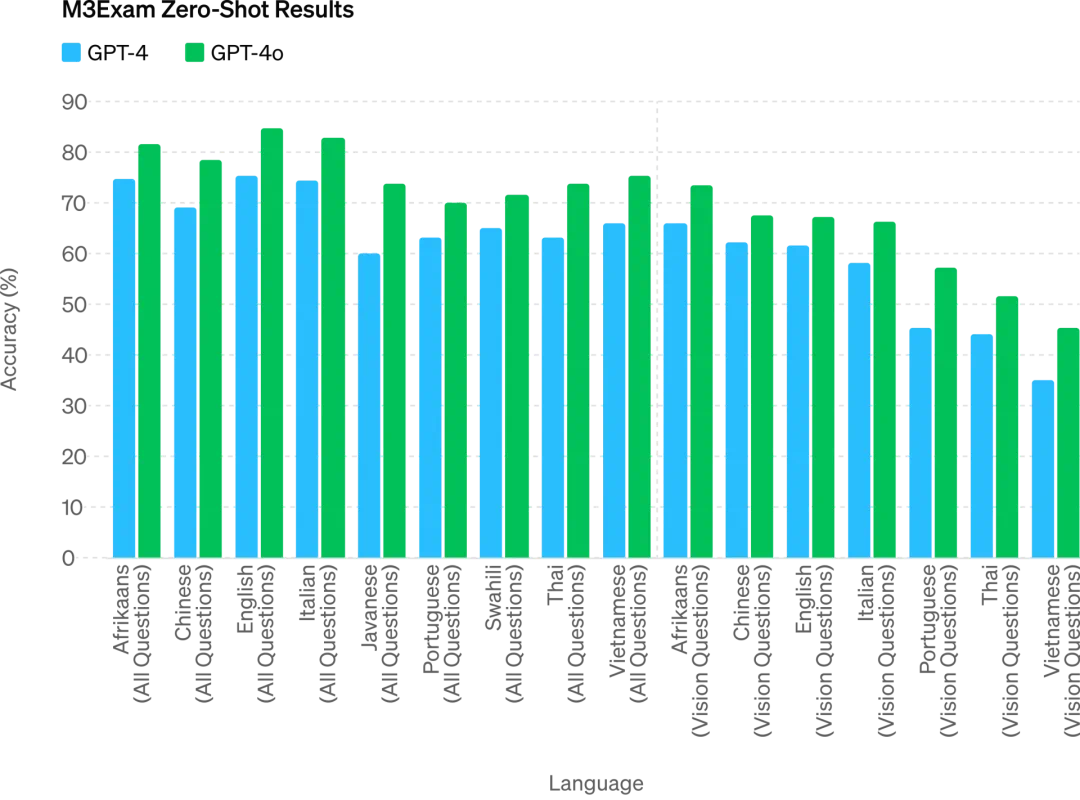

M3Exam is both a multilingual and a visual evaluation benchmark, made up of multiple-choice questions, some including graphs and charts, drawn from standardized tests in various countries and regions. GPT-4o outperforms GPT-4 on this benchmark across all languages.

In the future, enhanced model capabilities will enable even more natural, real-time voice conversations and allow users to interact with ChatGPT via real-time video—for instance, showing ChatGPT a live sports game and asking it to explain the rules.

ChatGPT Users Get More Advanced Features for Free

More than 100 million people use ChatGPT every week. OpenAI announced that GPT-4o's text and image capabilities are now free in ChatGPT, with Plus users getting up to 5x higher message limits.

GPT-4o is already available when you open ChatGPT.

With GPT-4o, free ChatGPT users can now experience GPT-4-level intelligence and get responses that draw on both the model's own knowledge and the web.

Additionally, free users can now:

- Analyze data and create charts
- Chat about photos they take
- Upload files for help with summarizing, writing, or analysis
- Discover and use GPTs and the GPT Store
- Use memory for a more personalized and helpful experience

However, depending on usage, free users may hit message limits with GPT-4o. Once reached, ChatGPT will automatically switch to GPT-3.5 so the conversation can continue.
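The free-tier behavior described above, where ChatGPT drops to GPT-3.5 once the GPT-4o message limit is reached, amounts to a simple quota-with-fallback rule. The sketch below is hypothetical: OpenAI has not published the limit or its reset window, so the `limit` value and the `FreeTierRouter` class are made-up illustrations, not ChatGPT's actual implementation.

```python
from dataclasses import dataclass


@dataclass
class FreeTierRouter:
    """Hypothetical router: GPT-4o until the quota is used up, then GPT-3.5."""
    limit: int     # assumed GPT-4o message cap (illustrative only)
    used: int = 0  # GPT-4o messages sent in the current window

    def pick_model(self) -> str:
        # Serve GPT-4o while quota remains; afterwards fall back so the
        # conversation can continue instead of being cut off.
        if self.used < self.limit:
            self.used += 1
            return "GPT-4o"
        return "GPT-3.5"

    def reset(self) -> None:
        # Start a new usage window (the real window length is not public).
        self.used = 0


if __name__ == "__main__":
    router = FreeTierRouter(limit=3)
    print([router.pick_model() for _ in range(5)])
```

With `limit=3`, five requests yield three GPT-4o replies followed by two GPT-3.5 replies, and calling `reset()` restores GPT-4o access. The design choice mirrors the article's point: hitting the cap degrades the model rather than ending the conversation.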

In addition, OpenAI will roll out an alpha version of the new GPT-4o voice mode to ChatGPT Plus in the coming weeks, and will make new audio and video capabilities available through the API to a small group of trusted partners.

Even after extensive testing and iteration, GPT-4o still has limitations in every modality. OpenAI acknowledges these shortcomings and says it is actively working to improve the model.

Opening up GPT-4o's audio modality also brings new risks. On safety, GPT-4o builds in cross-modal safeguards, such as filtering training data and refining the model's behavior through post-training, and OpenAI has developed new safety systems to put guardrails on voice outputs.

New Desktop App Simplifies User Workflows

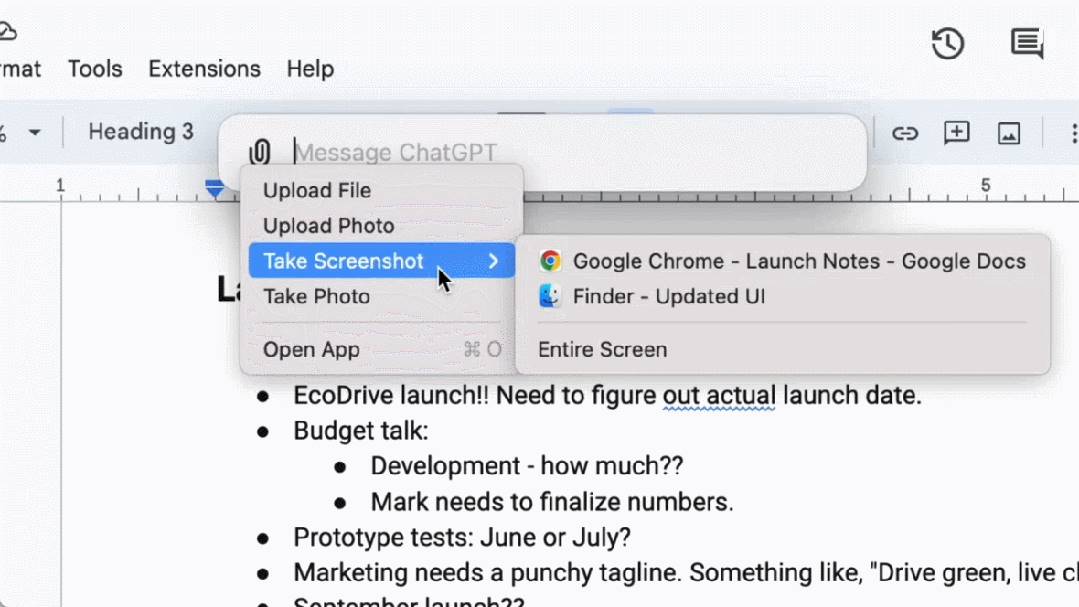

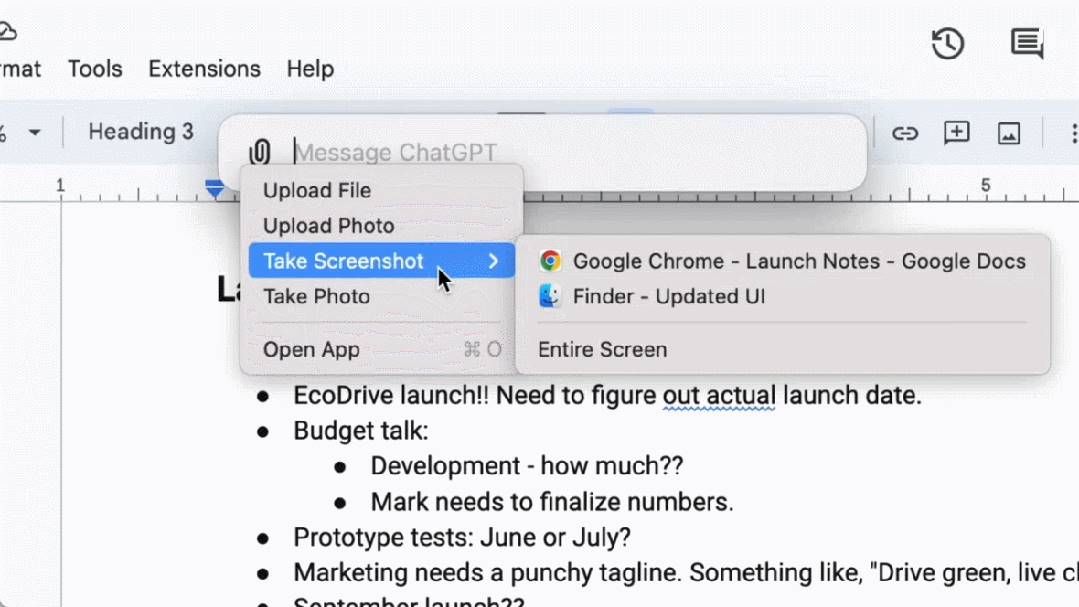

For both free and paid users, OpenAI has launched a new ChatGPT desktop app for macOS. With a simple keyboard shortcut (Option + Space), users can instantly ask ChatGPT anything. They can also take screenshots directly within the app and discuss them.

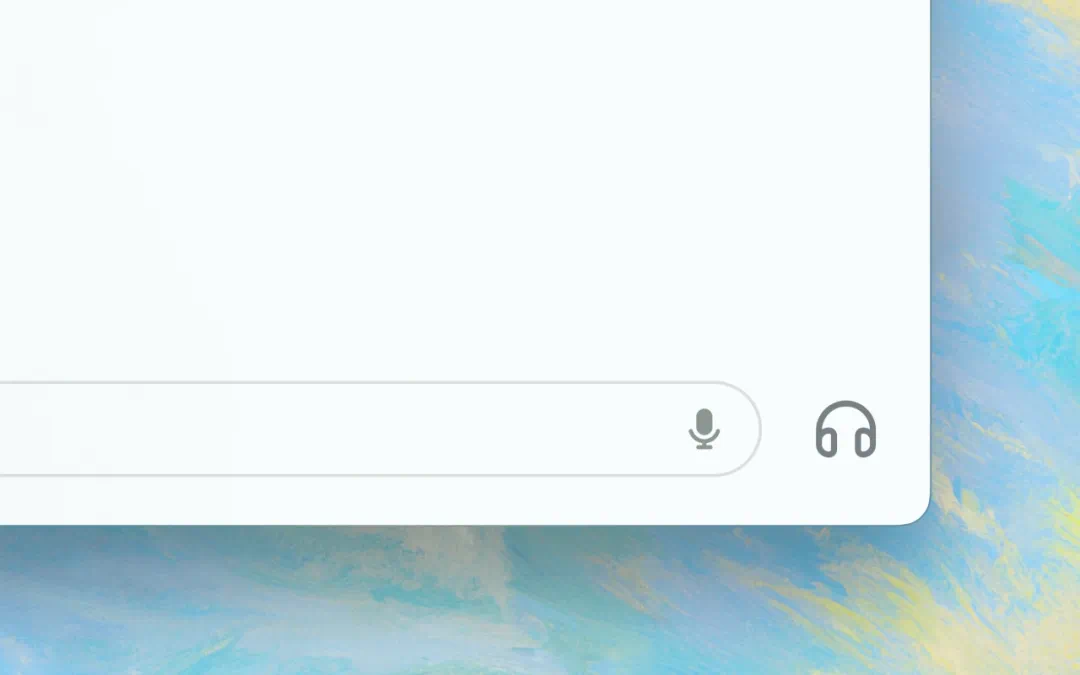

Users can also hold voice conversations with ChatGPT directly from their computer by clicking the headphone icon in the bottom-right corner of the desktop app. GPT-4o's new audio and video capabilities will follow soon.

Starting today, the macOS app will roll out to Plus users and expand more broadly in the coming weeks. A Windows version will follow later this year.

Altman: You Go Open Source, We Go Free

After the launch, OpenAI CEO Sam Altman published a rare blog post reflecting on the development of GPT-4o:

In today’s launch, I want to highlight two things.

First, a core part of our mission is providing powerful AI tools to people for free (or at highly subsidized prices). I’m incredibly proud to announce that we’re offering the world’s best model in ChatGPT for free, with no ads or similar compromises.

When we founded OpenAI, our original idea was that we’d build AI and use it to benefit the world. Now it looks like we’ll build AI, and others will use it to create amazing things, and we’ll all benefit.

Of course, we’re a company—we’ll invent many things we charge for, which will help us deliver excellent, free AI services to billions (hopefully).

Second, the new voice and video modes are the best computing interface I’ve ever used. It feels just like AI in the movies—I’m still slightly amazed it’s real. Achieving human-level response time and expressive capability turns out to be a massive leap.

The original ChatGPT hinted at the possibility of a language interface, but this new version (GPT-4o) feels fundamentally different—it’s fast, intelligent, engaging, natural, and genuinely helpful.

For me, interacting with computers has never felt very natural. But with optional personalization, access to personal information, and letting AI act on our behalf, I can see an exciting future where we can do far more with computers than ever before.

Finally, huge thanks to the team for their incredible effort in making this happen!

Notably, in an interview last week, Altman said that while universal basic income may be hard to achieve, “universal basic compute” could be realized: in the future, everyone might get a free share of GPT compute that they could use, resell, or donate.

“The idea is that as AI becomes more advanced and embedded in every aspect of life, owning a slice of GPT-7's compute might be worth more than money. You own a piece of productivity,” Altman explained.

The release of GPT-4o might just be OpenAI’s first major step in that direction.

Yes, this is just the beginning.

One final note: today's teaser video on OpenAI's blog, titled “Guessing May 13th's announcement,” bears an uncanny resemblance to Google's promotional video released ahead of I/O 2024, and it reads as a direct challenge to Google. After seeing OpenAI's launch today, will Google be feeling the pressure?
