Core unresolved issues regarding models, infra, and applications

Is there a "capital ceiling," meaning only large companies can afford the high costs of cutting-edge models?

By Elad

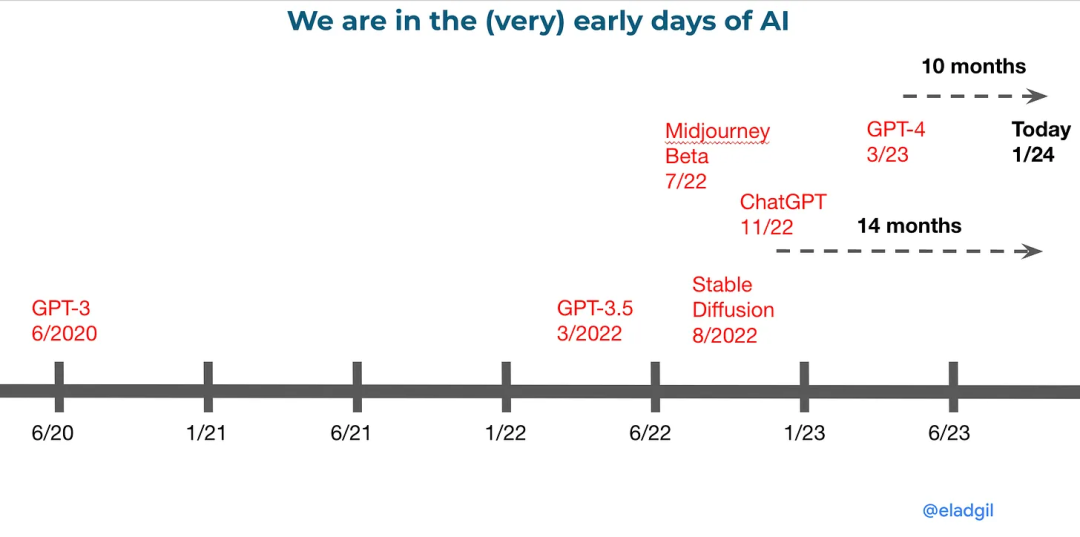

In most fields, time brings greater clarity. But in generative AI, the opposite may be true. We believe this domain is still extremely early, with new variables, modalities, and systemic changes making things highly complex. In these early exploratory stages, filtering through vast amounts of information, navigating ambiguity, experimenting, identifying potential paths, and deepening our understanding are ongoing tasks.

Elad is a serial entrepreneur and an early investor in well-known companies such as Airbnb, Coinbase, Figma, Notion, and Pinterest. This article presents his recent thinking on unresolved questions about LLMs, the infrastructure layer, and the AI application layer, all of which remain open to discussion and feedback.

Large tech companies have shaped the LLM market landscape through massive investments, raising a critical question: in such an environment, how can small startups and independent developers find their footing? Is there a "capital ceiling," beyond which only large corporations can afford the soaring costs of cutting-edge models?

Open-source models like Llama and Mistral also play significant roles in the LLM space. They serve as counterbalances to proprietary models, promoting broader access and innovation. However, licensing restrictions for commercial use and long-term sustainability remain key challenges for open-source large models.

01. LLM Questions

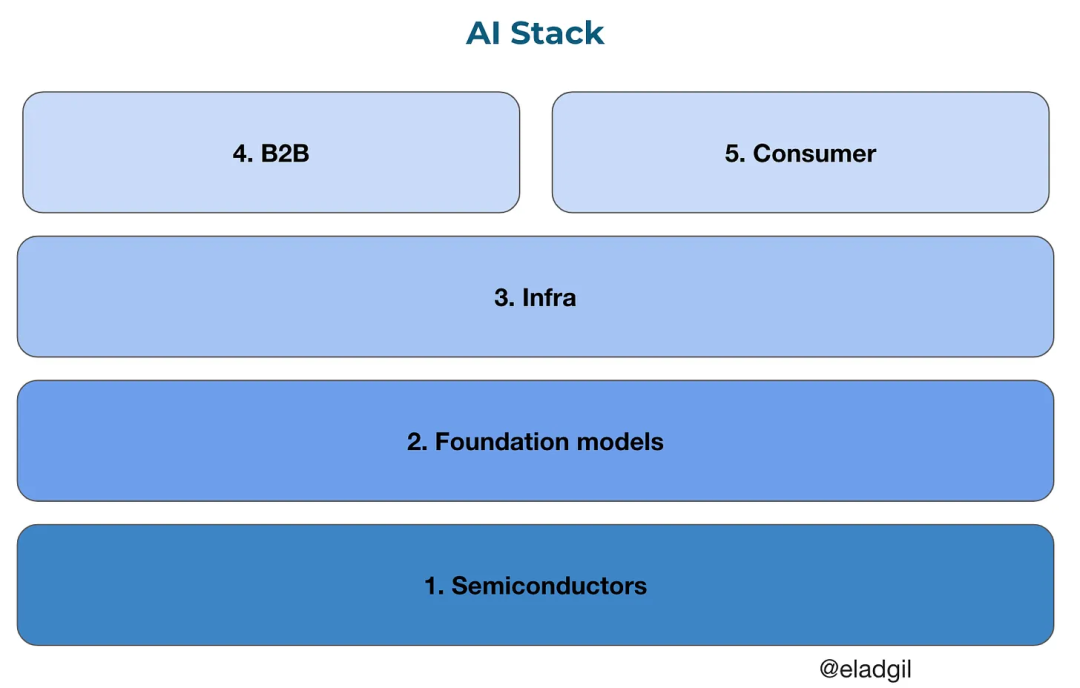

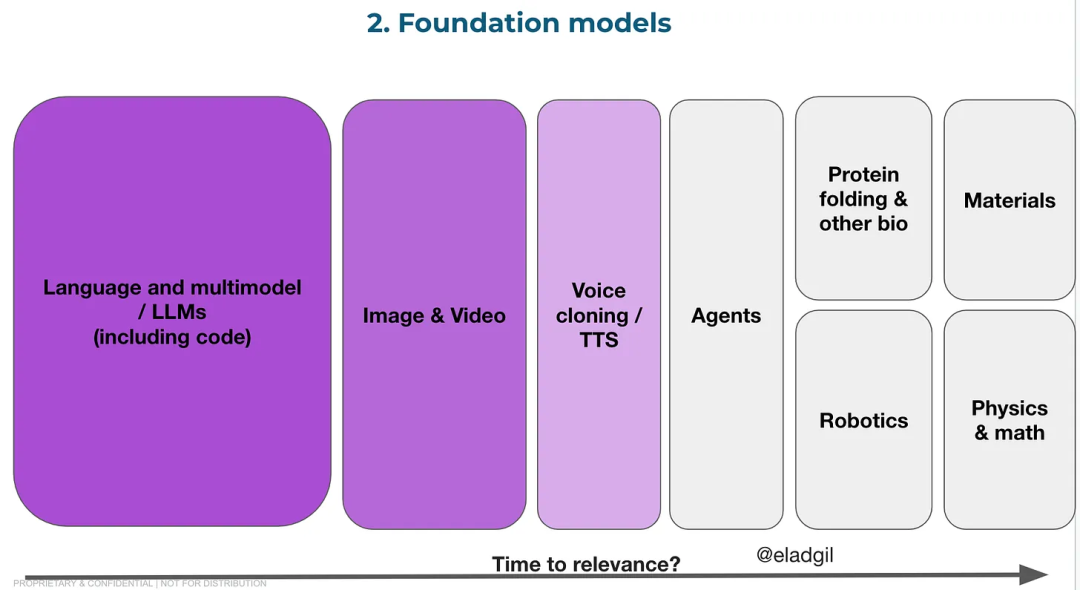

In a sense, there are two types of LLMs: state-of-the-art general-purpose frontier models (e.g., GPT-4) and everything else. Back in 2021, Elad predicted that, because of the enormous capital requirements, the frontier model market would evolve into an oligopoly dominated by a few players. Meanwhile, non-frontier large models would compete increasingly on price, which would strengthen the influence of open-source alternatives.

Current trends appear to align with this prediction. The frontier LLM space is likely heading toward an oligopoly. Key contenders include closed models from OpenAI, Google, and Anthropic, alongside open models such as Llama (Meta) and Mistral. The list may shift slightly over the next year or two, but the direction is clear: training costs for frontier models continue to rise, whereas costs for other general-purpose models fall every year even as performance improves. For instance, training a model equivalent to GPT-3.5 today may cost only one-fifth of what it did two years ago.
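As a rough illustration of the one-fifth figure: if the drop over two years is assumed to have been a steady geometric decline (an assumption; the article gives only the two endpoints), the implied yearly cost factor can be sketched as follows. The function name is hypothetical.

```python
def annual_cost_factor(total_ratio: float, years: float) -> float:
    """Yearly multiplier that compounds to `total_ratio` over `years`.

    Assumes a constant geometric decline, which the article does not state.
    """
    return total_ratio ** (1.0 / years)


# Article's figure: cost today is about 1/5 of the cost two years ago.
factor = annual_cost_factor(1 / 5, 2)
print(f"yearly cost factor: {factor:.3f}")       # 0.447
print(f"yearly decline: {(1 - factor) * 100:.1f}%")  # 55.3%
```

Under that assumption, training cost for a fixed capability level would fall by a little more than half each year.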

As models grow larger, funding increasingly comes from cloud providers and major tech firms. Microsoft has invested over $10 billion in OpenAI; Anthropic has received $7 billion from Amazon and Google. NVIDIA is also investing heavily in this space. Compared to such figures, venture capital funding appears negligible. With training costs skyrocketing, new capital is coming mainly from big tech companies or from national governments, such as the UAE, that back domestic AI champions. This trend is shaping the market and potentially pre-determining future winners.

These investments are just the tip of the iceberg relative to the cloud revenue involved. For example, Microsoft’s Azure generates about $25 billion in revenue per quarter, so the ~$10 billion invested in OpenAI equals roughly six weeks of Azure revenue. Yet AI has significantly boosted Azure’s growth: AI recently contributed about 6 percentage points to Azure’s revenue growth, which translates to $5–6 billion annually. Although this isn’t pure profit, it is substantial enough to motivate large cloud providers to fund more frontier models. Meta’s commitment to Llama should not be overlooked either: the company recently announced a $20 billion budget dedicated to large-scale model training.
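The back-of-the-envelope arithmetic behind these Azure figures can be reproduced with a short sketch. It uses only the numbers quoted in the article; the 13-week quarter, the simple annualization (quarterly revenue times four), and the function names are assumptions made for illustration.

```python
WEEKS_PER_QUARTER = 13  # assumption: 52 weeks / 4 quarters


def weeks_of_revenue(investment_bn: float, quarterly_revenue_bn: float) -> float:
    """How many weeks of revenue an investment corresponds to."""
    weekly_revenue_bn = quarterly_revenue_bn / WEEKS_PER_QUARTER
    return investment_bn / weekly_revenue_bn


def annual_uplift_bn(quarterly_revenue_bn: float, uplift_points: float) -> float:
    """Annualized revenue attributable to a given percentage-point uplift.

    Simplification: applies the uplift to current annualized revenue.
    """
    annual_revenue_bn = quarterly_revenue_bn * 4
    return annual_revenue_bn * uplift_points / 100


print(f"{weeks_of_revenue(10, 25):.1f} weeks")   # 5.2 weeks
print(f"${annual_uplift_bn(25, 6):.1f}B per year")  # $6.0B per year
```

With these inputs the investment comes to a little over five weeks of revenue, in the same range as the article's "roughly six weeks", and the 6-point uplift lands at the top of the quoted $5–6 billion range.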

Key Questions About LLMs

Are cloud providers using massive compute resources and capital to create a select group of frontier players, thereby locking in an oligopolistic market structure? What happens when cloud providers stop funding new LLM startups and instead focus exclusively on existing ones? Cloud providers are now the primary backers of foundational models—not VCs. Given regulatory constraints on M&A and the fact that cloud usage drives recurring revenue, this strategy makes business sense—but could distort market dynamics. How will this affect the long-term economics and structure of the LLM market? Does this imply we’ll soon see new LLM startups continuously fail due to insufficient capital and talent?

Can OSS (open-source software) models shift AI’s economic model away from foundation models and toward cloud computing? Will Meta continue funding open models? If so, could a future Llama-N catch up with the most advanced proprietary models? A high-performing open model at the AI frontier could reshape parts of the AI infrastructure stack, shifting value from LLM vendors to cloud and inference service providers, reducing revenues for other foundational model companies.

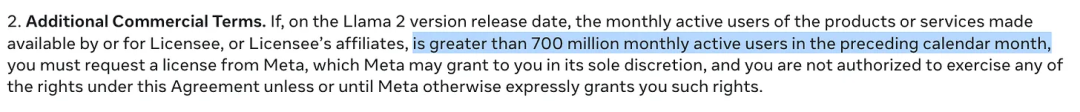

Llama 2’s license permits commercial use only when the licensee's products have no more than 700 million monthly active users. This effectively blocks some large competitors from adopting the model freely. A major cloud provider would need to obtain a separate license from Meta, and presumably pay for it, to use Llama, which gives Meta a mechanism for long-term control and monetization.

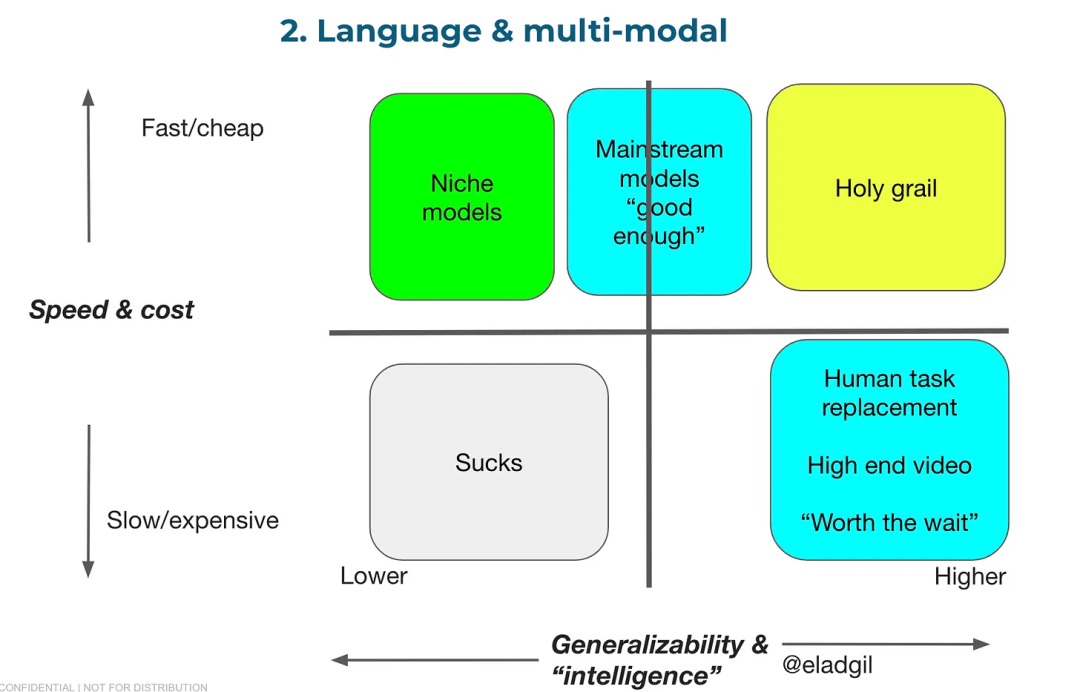

How should we think about the trade-off between model cost and performance? A slower but highly capable model might still offer great value when measured against how long a human takes to complete the same task. The latest Gemini model appears to follow this path, offering a context window of more than 1 million tokens. Longer context and deeper understanding can fundamentally change how users perceive AI applications. At the opposite end, Mistral demonstrates the value of small, fast, low-cost, high-performance inference models. The table below breaks down this spectrum.

How will foundational model architectures evolve? Could agent-based models with different architectures partially replace or surpass the future potential of current LLMs? When will additional forms of memory and reasoning be integrated?

Will governments support or steer procurement toward regional AI leaders? Do they want models reflecting local values, languages, and cultural needs? Beyond cloud providers and global tech giants, another potential source of capital lies in nation-states. Today, Europe, Japan, India, the UAE, China, and others host promising local large model companies. Government funding alone could enable several multi-billion-dollar localized AI foundation model firms.

02. Infrastructure Questions

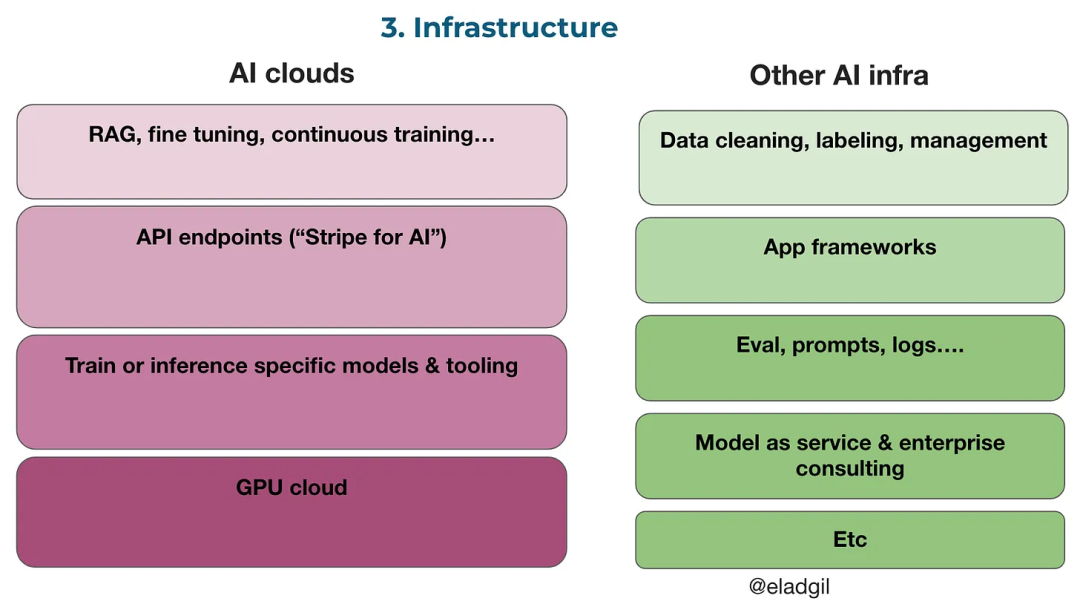

There are various types of infrastructure companies serving different purposes. For example, Braintrust offers evaluation, prompting, logging, and agent tooling, helping organizations move from “gut-feel” AI analysis to data-driven decision-making. Scale.ai and others play crucial roles in data labeling, fine-tuning, and related areas.

The biggest uncertainty in AI infrastructure revolves around the evolution of the AI cloud stack. Startups and enterprises have very different needs for AI cloud services. For startups, new cloud providers and tools (e.g., Anyscale, Baseten, Modal, Replicate, Together) are gaining traction, driving customer acquisition and revenue growth.

For large enterprises with specialized requirements, important questions remain unanswered. For instance: do current AI cloud companies need to build on-premises / BYOC / VPN versions for enterprise clients? Enterprises may optimize for:

(a) Using existing cloud credits already included in budgets;

(b) Avoiding full round trips out of their primary network or data host (e.g., AWS, Azure, GCP) because of latency and performance concerns;

(c) Meeting strict security and compliance standards (e.g., FedRAMP, HIPAA).

Short-term startup demand for AI cloud services may differ significantly from long-term enterprise needs.

To what extent is adoption of AI cloud services driven by GPU scarcity—or GPU arbitrage? Amid shortages of GPUs on major cloud platforms, companies are scrambling to secure sufficient hardware, accelerating adoption of newer GPU cloud startups. One possible strategy for NVIDIA could be prioritizing GPU allocation to these emerging providers—to reduce bargaining power of hyperscalers, fragment the market, and accelerate industry development via startups. When will the GPU bottleneck end, and how will this impact new AI cloud providers? It seems the end of GPU shortages on major platforms could negatively affect companies whose sole offering is GPU cloud access, whereas those providing broader tools and services should transition more smoothly.

Which services are being consolidated within the AI cloud ecosystem? Are providers cross-selling embedding models and RAG (retrieval-augmented generation)? Do they offer continuous updates, fine-tuning, or other value-added services? What implications does this have for data labelers or companies offering overlapping services? Which capabilities get built directly into model providers' offerings, and which are delivered through cloud platforms?

What business models will different players pursue in the AI cloud market?

The AI cloud market clearly has two segments: startups and mid-to-large enterprises. The "GPU-only" model defaults to the startup segment, which tends to require fewer managed cloud services. For large enterprises, availability of GPU cloud capacity on primary platforms may be more constraining. So, will companies offering developer tools, API endpoints, specialized hardware, etc., evolve into one of two archetypes—(a) an AI-focused “Snowflake/Databricks” model, or (b) an AI-focused “Cloudflare” model? If so, which companies are pursuing which path?

How large could the emerging AI cloud market become? Can it reach the scale of Heroku, DigitalOcean, Snowflake, or even AWS?

How is the AI tech stack evolving in light of models with extremely long context windows? How should we understand the interplay between context length, prompt engineering, fine-tuning, RAG, and inference costs?

How do regulatory policies restricting M&A affect this market? At least a dozen companies are building AI cloud-related products and services. With governments actively opposing acquisitions by Big Tech, how should founders think about exit strategies? Should AI cloud providers merge with one another to expand market size and optimize offerings?

03. AI Application Questions

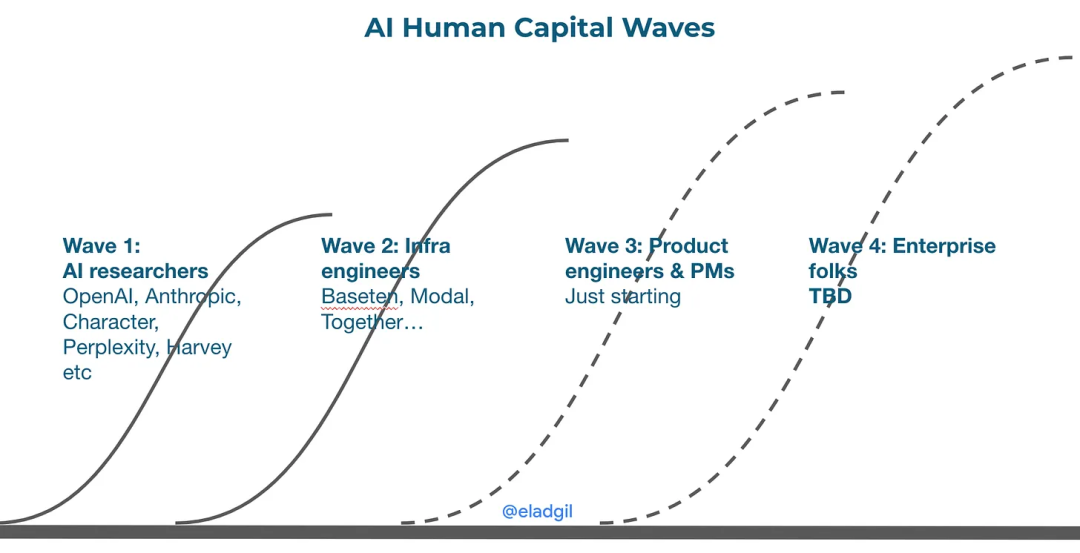

ChatGPT was the starting point for many AI founders. Before ChatGPT—and earlier breakthroughs like Midjourney and Stable Diffusion—most people weren’t closely following the Transformer/Diffusion model revolution now unfolding. Those closest to the technology—AI researchers and infrastructure engineers—were the first to launch startups based on it. Engineers, designers, and product managers further removed from the core models are only now beginning to grasp AI’s transformative potential.

ChatGPT launched about 15 months ago. If deciding to quit a job takes 9–12 months, brainstorming initial ideas with co-founders takes a few months, and implementation takes another few months, then a wave of AI-native applications should soon reach the market.

B2B Applications: In this emerging wave of B2B AI apps, which companies and markets will matter most? Where will incumbents capture value, and where will startups succeed?

Consumers: The earliest AI products were "prosumer" tools—useful for both personal and professional use. Examples include ChatGPT, Midjourney, Perplexity, and Pika. Why are there so few AI products built specifically for mainstream consumers? The social app generation of 2007–2012 doesn't seem to be the target user base today. Building successful consumer AI requires fresh perspectives and smarter, more innovative users to help shape the next great wave of consumer AI.

Agents: AI agents can do many things. Which domains are ripe for focused product development, and where are startups still searching for viable use cases?

This concludes the full list of pressing, thought-provoking questions in the AI field. The development of generative AI is an exciting technological revolution unfolding before our eyes. We will undoubtedly witness more groundbreaking innovations ahead. Reflecting deeply on these questions can help entrepreneurs better meet market needs and advance the field.
