How did the 'European version of OpenAI' reach a $2 billion valuation and become GPT's strongest rival?

TechFlow Selected

The rise of Mistral AI has brought more innovation and breakthroughs to the entire industry.

Author: MetaverseHub

"ChatGPT is as important as the invention of the internet and will change the world." Bill Gates' prediction about large models seems to be gradually becoming reality.

Over the past year, OpenAI has dominated the AI (artificial intelligence) field, with both the widespread adoption of ChatGPT and its internal turmoil capturing industry attention.

However, with the rise of Mistral AI, this landscape is undergoing unprecedented transformation.

As a strong competitor to OpenAI, Mistral AI has demonstrated remarkable breakthroughs in both technology and product development, emerging as a shining star in the AI field, nicknamed the "European version of OpenAI."

Compared to OpenAI, Mistral AI places greater emphasis on practical applications of technology, striving to apply cutting-edge AI technologies to solve real-world problems.

In terms of financing, Mistral AI secured $113 million in seed funding shortly after its founding, in a round led by Lightspeed Venture Partners.

Within just a few months, the company raised a further $415 million in Series A funding from investors including Salesforce and BNP Paribas, reaching a valuation of about $2 billion. Financing on this scale is extremely rare among AI startups. It shows how highly capital markets rate the company and gives it substantial resources for future growth.

The rise of Mistral AI not only challenges OpenAI but also injects new vitality into the entire AI sector, bringing more innovation and breakthroughs to the industry.

01. An Innovative Force Leading the AI Revolution

Mistral AI, officially named Mistral Artificial Intelligence, is a company dedicated to AI research and application, particularly in building online chatbots, search engines, and other AI-powered products.

Since its inception, Mistral AI has adhered to a human-centered philosophy, aiming to improve people's lives and work through smarter, more human-like AI systems, delivering greater convenience and well-being. The company is committed to leveraging advanced AI technologies to provide efficient and intelligent solutions across industries.

Mistral AI is still a startup, but each of its founders has an impressive background.

Arthur Mensch previously worked as a researcher at Google’s artificial intelligence company DeepMind, while Timothée Lacroix and Guillaume Lample held key technical roles at Meta.

Through their previous roles, they gained deep expertise in multimodal technologies, retrieval-augmented generation (RAG), and algorithm optimization, along with extensive research experience in model inference, pre-training, and embeddings.

A statement on Mistral AI’s official website fully reflects its ambition: "Our mission is to advance AI for open communities and enterprise customers. We are committed to driving the AI revolution by developing open-weight models that rival proprietary solutions."

Although currently a small creative team, Mistral AI maintains high scientific standards and develops efficient, useful, and trustworthy AI models through groundbreaking innovations. This may be one of the reasons why Mistral AI has gained such favor.

02. Major Leap Forward in Large Language Models

Mistral AI’s most notable product is Mixtral 8x7B, one of the most competitive open-weight large models available today. It has several distinctive capabilities and, on most standard benchmarks, outperforms much larger open models such as Llama 2 70B.

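At the core of Mixtral 8x7B lies its innovative MoE (Mixture of Experts) architecture. An MoE layer uses a gating network, or router, to send each input token to a small number of specialized neural network components known as "experts." Each layer of Mixtral 8x7B contains eight such experts. Because the attention layers and other non-expert parameters are shared, the model has about 46.7 billion parameters in total rather than the 56 billion (8 × 7 billion) its name might suggest.

Although there are eight experts, the router activates only two of them for each token, so only about 12.9 billion parameters are used per token. This sparse routing lets Mixtral run at roughly the speed and cost of a 12.9-billion-parameter model while retaining the capacity of a much larger one.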
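To make the routing idea concrete, here is a minimal, framework-free Python sketch of a sparse MoE layer with top-2 routing for a single token. It follows the general scheme described for Mixtral (a router scores all eight experts, only the two highest-scoring ones run, and their outputs are mixed with softmax weights), but the `moe_layer` function, the toy experts, and the router weights are hypothetical illustrations with no relation to Mixtral's actual weights or code.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_layer(x, gate_weights, experts, k=2):
    """Sparse MoE layer with top-k routing for a single token vector x.

    gate_weights: one row of router weights per expert (logit = row . x).
    experts: callables mapping a vector to a vector of the same size.
    Returns the mixed output and the indices of the experts that ran.
    """
    logits = [sum(w * v for w, v in zip(row, x)) for row in gate_weights]
    # Keep only the k highest-scoring experts; the others are never evaluated.
    chosen = sorted(range(len(logits)), key=lambda e: logits[e], reverse=True)[:k]
    # Softmax over the selected logits only, so the k mixing weights sum to 1.
    weights = softmax([logits[e] for e in chosen])
    out = [0.0] * len(x)
    for weight, e in zip(weights, chosen):
        y = experts[e](x)
        out = [o + weight * yi for o, yi in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    n_experts = 8
    # Hypothetical toy experts: expert e simply scales its input by (e + 1).
    experts = [lambda v, s=e + 1: [s * t for t in v] for e in range(n_experts)]
    # Hypothetical toy router: the logit for expert e is 0.1 * e * x[0].
    gate_weights = [[0.1 * e, 0.0] for e in range(n_experts)]
    out, chosen = moe_layer([1.0, 2.0], gate_weights, experts)
    print("experts used:", chosen)
    print("output:", [round(v, 3) for v in out])
```

Because only the chosen experts are evaluated, the compute per token grows with `k` rather than with the total number of experts, which is why Mixtral's per-token cost is closer to that of a 12.9-billion-parameter dense model than to its 46.7-billion total.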

For training and fine-tuning, Mistral AI pre-trained Mixtral on multilingual data, and the model handles English, French, Italian, German, and Spanish. The Instruct version was trained with supervised fine-tuning and Direct Preference Optimization (DPO) and scores highly on benchmarks such as MT-Bench, where Mistral AI reported a score of 8.30.
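DPO skips the separate reward model used in classic RLHF and instead trains directly on pairs of preferred ("chosen") and dispreferred ("rejected") responses, rewarding the model for raising the probability of the chosen answer relative to a frozen reference model. The sketch below computes the standard DPO loss for one such pair. The function name, the toy log-probabilities, and the default `beta` are illustrative assumptions; Mistral AI's actual training code and hyperparameters are not given here.

```python
import math


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """DPO loss for one preference pair: -log(sigmoid(z)).

    Each argument is the summed log-probability of a full response under the
    model being trained (policy) or the frozen reference model.
    beta (an assumed default) controls how far the policy may drift from the reference.
    """
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    z = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(z)) = log(1 + exp(-z)), split by sign to avoid overflow.
    if z >= 0:
        return math.log1p(math.exp(-z))
    return -z + math.log1p(math.exp(z))


if __name__ == "__main__":
    # Hypothetical numbers: the policy now favors the chosen answer more than
    # the reference does, so the loss drops below log(2).
    print(dpo_loss(-12.0, -15.0, -13.0, -14.0))
```

When the policy and reference agree exactly, `z` is 0 and the loss is log 2; the loss falls as the policy shifts probability toward the chosen response.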

Alongside the base model, Mistral AI has fine-tuned versions for specific capabilities, most notably instruction following, making the model more precise and easier to tailor to individual needs.

Beyond its outstanding performance, another key reason for Mixtral 8x7B’s acclaim is its openness.

When releasing this model, Mistral AI published its weights openly under the Apache 2.0 license. This drew wide attention from the AI community and made the model broadly accessible for both academic and commercial use. Such openness encourages diverse applications and could lead to new breakthroughs in large models and language understanding.

Mixtral 8x7B’s innovative design and strong performance have made it a reference point in the large model space. Even so, Mistral AI continues to optimize the model’s performance.

03. Milestone Developments at Mistral AI

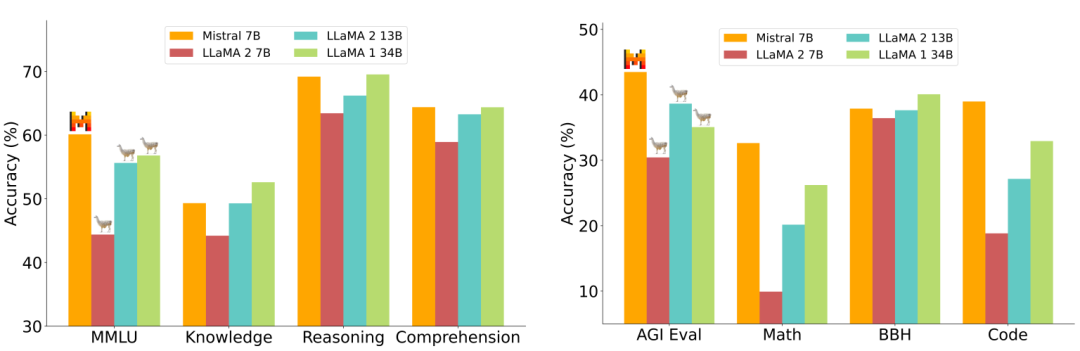

The emergence of Mixtral 8x7B marks a significant breakthrough in AI technology, particularly in model architecture and efficiency. How does it compare against other leading large models?

Can It Surpass the Giants?

Since ChatGPT’s debut, OpenAI has been regarded as the gold standard for large language models. Mistral AI, however, has released fully open-weight models that perform strongly across numerous benchmarks: Mixtral 8x7B matches or exceeds OpenAI’s GPT-3.5 on most of them, and even the smaller Mistral 7B outperforms Meta’s Llama 2 13B.

Specifically, on the Massive Multitask Language Understanding (MMLU) test, which covers 57 subjects including mathematics, U.S. history, computer science, and law, Mistral 7B achieved an accuracy of 60.1%, compared with about 44% for Llama 2 7B and about 55% for Llama 2 13B.

Likewise, in tests of commonsense reasoning and reading comprehension, Mistral 7B outperformed both Llama 2 models, with accuracy rates of 69% and 64% respectively, highlighting its strength in language understanding.

Mistral 7B’s strength in language understanding is often attributed to training on large amounts of complex and varied text. This is thought to sharpen its contextual awareness and reasoning, helping it grasp the internal logic and meaning of a passage and produce more accurate, insightful responses.

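Compared with GPT-3, Mistral 7B is designed for faster inference and longer sequences. It uses grouped-query attention (GQA), in which several query heads share one set of key and value heads, and sliding window attention (SWA), in which each token attends only to a fixed window of preceding tokens. Both are variations on the standard Transformer attention mechanism: GQA shrinks the memory needed to cache keys and values during generation, and SWA keeps the cost of attention from growing quadratically with sequence length. The result is lower latency and higher throughput, which makes the model well suited to large-scale, high-speed applications at low cost.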
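Both mechanisms can be illustrated in a few lines of plain Python. The sketch below builds a sliding-window causal mask, maps query heads to shared key/value heads as GQA does, and estimates the key/value cache size. The sizes in the example (32 layers, 32 query heads, 8 key/value heads, head dimension 128, a 4,096-token window used as a rolling cache, 16-bit values) are assumed to match the configuration published for Mistral 7B, and the helper functions are hypothetical, not Mistral AI's code.

```python
def sliding_window_mask(seq_len: int, window: int) -> list[list[bool]]:
    """mask[i][j] is True when query position i may attend to key position j.

    The mask is causal (j <= i) and limited to the most recent `window`
    positions, so each row has at most `window` True entries.
    """
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]


def kv_head_for_query_head(q_head: int, n_heads: int, n_kv_heads: int) -> int:
    """In GQA, consecutive groups of query heads share one key/value head."""
    group_size = n_heads // n_kv_heads
    return q_head // group_size


def kv_cache_bytes(tokens: int, n_layers: int, n_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Memory for cached keys and values (the factor 2 covers one K and one V)."""
    return 2 * n_layers * n_kv_heads * head_dim * tokens * bytes_per_value


if __name__ == "__main__":
    # Tiny example: 6 tokens, window of 3 ("x" = may attend, "." = masked).
    for row in sliding_window_mask(6, 3):
        print("".join("x" if allowed else "." for allowed in row))

    # Assumed Mistral-7B-like sizes.
    n_layers, n_heads, n_kv_heads, head_dim, window = 32, 32, 8, 128, 4096
    print([kv_head_for_query_head(h, n_heads, n_kv_heads) for h in range(8)])
    gqa = kv_cache_bytes(window, n_layers, n_kv_heads, head_dim)
    mha = kv_cache_bytes(window, n_layers, n_heads, head_dim)
    print(f"GQA cache: {gqa / 2**20:.0f} MiB, full multi-head cache: {mha / 2**20:.0f} MiB")
```

Under these assumptions, sharing each key/value head among four query heads cuts the cache for a full window from 2,048 MiB to 512 MiB, and because the window is fixed, the cache stops growing once the sequence exceeds 4,096 tokens.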

By contrast, GPT-3 is renowned for its deep language understanding and multitasking capabilities, optimized for processing shorter sequences. For example, GPT-3 performs exceptionally well in question-answering tasks, capable of understanding and generating accurate answers. Thanks to its powerful language comprehension, it can quickly summarize long documents and also perform text completion, language translation, sentiment analysis, and more.

High-Performance Small Model, But Lacking 'Safety Guardrails'

Mistral 7B has drawn significant attention due to its high performance and strong adaptability, characterized by a "small digital footprint," meaning it requires relatively low computational resources and storage space during operation.

Unlike models that depend on powerful hardware, Mistral 7B can even run on personal computers without a dedicated GPU. It can also be deployed on any major cloud platform, including AWS, GCP, and Azure, using the vLLM inference server and the open-source SkyPilot framework, and developers can integrate it locally using the reference implementation Mistral AI provides.
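The "small footprint" claim can be checked with back-of-the-envelope arithmetic: the memory needed to hold the weights is roughly the parameter count times the bytes per parameter. The sketch below assumes about 7.3 billion parameters for Mistral 7B and ignores activations, the key/value cache, and runtime overhead, so real usage will be somewhat higher. The quantization levels are illustrative and not tied to any particular tool.

```python
def weight_memory_gib(n_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to store the model weights, in GiB."""
    return n_params * bits_per_param / 8 / 2**30


if __name__ == "__main__":
    n_params = 7.3e9  # assumed approximate parameter count for Mistral 7B
    for label, bits in [("16-bit (fp16/bf16)", 16), ("8-bit quantized", 8), ("4-bit quantized", 4)]:
        print(f"{label:>20}: ~{weight_memory_gib(n_params, bits):.1f} GiB")
```

At 16-bit precision the weights alone take about 13.6 GiB, more than many consumer GPUs offer, while a 4-bit quantized copy needs only about 3.4 GiB, small enough to fit in the RAM of an ordinary laptop. This is what makes CPU-only use on a personal computer feasible.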

Despite its high performance and flexible deployment options, safety remains a weak point for Mistral AI.

LLMs such as GPT-3 and Llama 2 come with strict content filters that block messages their developers consider harmful, whereas Mistral 7B lacks such "safety guardrails." Users have reported asking Mistral AI’s chatbot how to build bombs or commit murder and receiving disturbingly detailed instructions in response.

While Mistral AI emphasizes open sharing of its technology, this openness could become a double-edged sword, as regulators might impose stricter measures due to the absence of traditional content filtering.

For his part, Mistral AI CEO Arthur Mensch said at an AI safety summit: "There is a trade-off between the risks and benefits brought by open source, and we need dynamic dialogue to find the best solutions."

The company is reportedly building a platform with modular filters and other modular mechanisms to govern its models. Perhaps it will address AI safety from within the model architecture itself.

In today’s fiercely competitive large language model landscape, Mistral AI stands out with its exceptional performance and adaptability. Yet, facing potential AI safety challenges, industry professionals are contemplating how to strike the right balance between openness and security.

04. Co-Creating an Intelligent Future with Google Cloud

Google Cloud is a global leader in cloud computing, and its partnership with Mistral AI, the dark horse of the AI field, opens up promising prospects for both companies.

Last month, Google Cloud announced a global partnership with Mistral AI, under which Mistral AI will leverage Google Cloud’s infrastructure to distribute and commercialize its large language models.

With access to Google Cloud’s computing infrastructure and data technologies, Mistral AI is expected to accelerate its work on model pre-training and inference. This should not only advance AI technology further but also bring smarter, more efficient solutions to many industries.

Meanwhile, the collaboration will accelerate the real-world application of Mistral AI’s models across sectors ranging from e-commerce and finance to healthcare and education, bringing greater convenience and benefits to people.

Of course, Mistral AI’s rise is no accident. As a vibrant and innovative company, Mistral AI has consistently pushed the boundaries of AI technology and applied it to solving practical problems.

Its outstanding performance and innovative capabilities have led many to wonder: Could this startup surpass OpenAI and emerge as the leader in Europe’s AI field? Only time will tell.
