AI Agents: Redefining the Innovation Path for Web3 Games

TechFlow Selected

This article reviews the development of "general large models, vertical-specific application agents, and generative AI applications" within the Web3 gaming sector.

Author: PSE Trading Analyst @Minta

Key Insights

- AI Agents are tools built on general-purpose LLMs that let developers and users directly build applications capable of interacting autonomously.

- The future landscape of the AI sector is likely to be "general large models + vertical applications"; AI Agents occupy the middleware niche connecting general large models and DApps. As such, their moat is relatively shallow, and they need network effects and stronger user stickiness to stay competitive over the long term.

- This article reviews developments in Web3 gaming across "general large models, vertical application agents, and Generative AI applications." In particular, integrating Generative AI technology holds strong potential for delivering breakout games in the near term.

01 Technical Overview

This year, Large Language Models (LLMs) have taken center stage in the surge of Artificial General Intelligence (AGI) technologies. Core OpenAI researchers Andrej Karpathy and Lilian Weng have both highlighted that AI Agents powered by LLMs represent a key direction for AGI development. Many teams are now building AI Agent systems driven by LLMs. In short, an AI Agent is a computer program that uses vast data and complex algorithms to simulate human thinking and decision-making processes to perform various tasks and interactions—such as autonomous driving, speech recognition, and game strategy. The diagram from Abacus.ai clearly illustrates the fundamental principles of AI Agents, broken down into the following steps:

- Perception and Data Collection: Taking in data or acquiring information through sensing systems (e.g., sensors, cameras, microphones), such as game states, images, and sounds.

- State Representation: Processing the data into a form the Agent can understand, such as vectors or tensors that can be fed into neural networks.

- Neural Network Model: Typically a deep neural network handles decision-making and learning, e.g., Convolutional Neural Networks (CNNs) for image processing, Recurrent Neural Networks (RNNs) for sequential data, or more advanced architectures such as Transformers.

- Reinforcement Learning: The Agent learns an optimal action strategy through interaction with its environment. Key components include policy networks, value networks, training and optimization, and the trade-off between exploration and exploitation. In gaming, for example, a policy network takes the game state as input and outputs a probability distribution over actions, while a value network estimates the value of a given state. Through repeated interaction with the environment, the Agent keeps improving both networks via reinforcement learning to produce better outcomes.
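
To make the policy/value/exploration vocabulary above concrete, here is a minimal sketch of a one-step actor-critic learner. It is a toy under stated assumptions: the environment is a hypothetical five-cell corridor rather than a real game, and small lookup tables stand in for the policy and value networks (the update rules are the ones a network would follow, applied per table entry). `step`, `train`, and all parameter values are illustrative choices, not part of any system mentioned in this article.

```python
import math
import random

# Hypothetical toy environment: a 1-D corridor of 5 cells.
# Reaching the last cell ends the episode with reward 1.
N_STATES = 5
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Environment interaction: return (next_state, reward, done)."""
    nxt = max(0, state - 1) if action == 0 else state + 1
    if nxt == N_STATES - 1:
        return nxt, 1.0, True
    return nxt, 0.0, False


def softmax(prefs):
    """Turn action preferences into a probability distribution over actions."""
    m = max(prefs)
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train(episodes=1000, lr_policy=0.1, lr_value=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    prefs = [[0.0, 0.0] for _ in range(N_STATES)]  # policy table (stands in for a policy network)
    values = [0.0] * N_STATES  # value table (stands in for a value network)
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            probs = softmax(prefs[state])
            # Sampling from the policy, instead of always taking the best action, provides exploration.
            action = rng.choices(ACTIONS, weights=probs)[0]
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * values[nxt]
            td_error = target - values[state]  # how much better or worse than expected
            values[state] += lr_value * td_error  # critic (value) update
            for a in ACTIONS:  # actor (policy) update along the log-softmax gradient
                grad = (1.0 if a == action else 0.0) - probs[a]
                prefs[state][a] += lr_policy * td_error * grad
            state, steps = nxt, steps + 1
    return prefs, values


prefs, values = train()
# Probability of "move right" in each non-goal cell after training.
print([round(softmax(p)[1], 2) for p in prefs[:-1]])
```

After training, the policy should put most of its probability on moving right in every cell. Because actions are sampled rather than picked greedily, the agent explores early on and gradually shifts toward exploitation as the preferences sharpen.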

In summary, AI Agents are intelligent entities capable of perception, decision-making, and action, playing significant roles across various domains—including gaming. OpenAI researcher Lilian Weng's comprehensive article "LLM Powered Autonomous Agents" introduces a particularly fascinating experiment: Generative Agents.

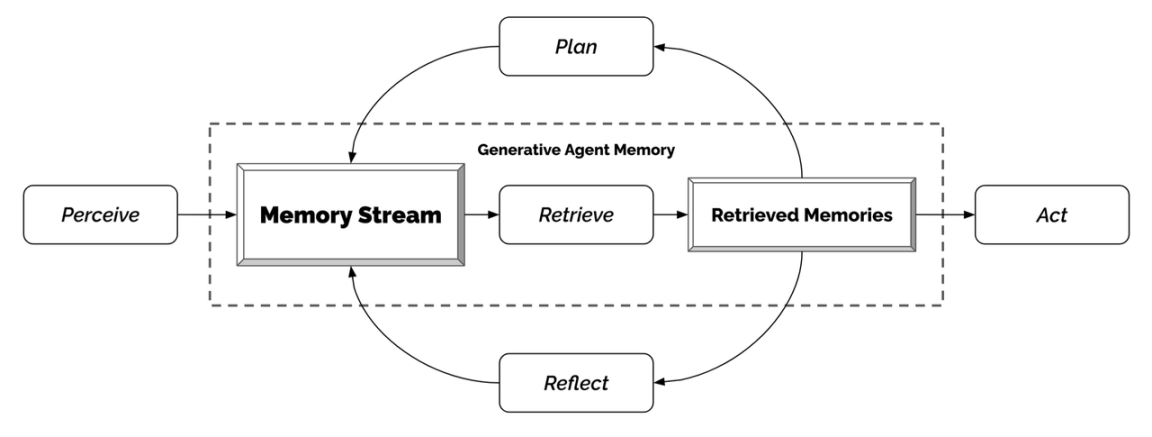

Generative Agents (GA), inspired by The Sims, used LLM technology to generate 25 virtual characters, each controlled by an LLM-powered Agent living and interacting within a sandbox environment. The design was clever—integrating LLMs with memory, planning, and reflection capabilities, allowing Agents to make decisions based on past experiences and interact with one another.

The article details how Agents use policy networks, value networks, and environmental interactions to continuously train and optimize their decision pathways.

Here's how it works: The Memory Stream acts as a long-term memory module recording all Agent interactions. The Retrieval model provides relevant memories (based on relevance, recency, and importance) to support decision-making (Plan). The Reflection mechanism summarizes past events to guide future behavior. Together, Plan and Reflect help transform insights and environmental inputs into concrete actions (Act).
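
The retrieval step can be sketched as a scoring function. The Generative Agents paper describes ranking memories by a combination of recency, importance, and relevance; the version below is a minimal sketch under our own assumptions: equal weights, min-max normalization of each signal, an exponential recency decay of 0.995 per game hour, and hand-written 2-D vectors standing in for real text embeddings. `Memory` and `retrieve` are hypothetical names.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Memory:
    text: str
    embedding: List[float]  # toy vector; a real system would use an embedding model
    importance: float  # e.g. a 1-10 rating produced by the LLM
    last_access_hour: float  # game-time hour when the memory was last retrieved


def cosine(a: List[float], b: List[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def min_max(xs: List[float]) -> List[float]:
    """Rescale to [0, 1] so the three signals are comparable."""
    lo, hi = min(xs), max(xs)
    return [0.0 if hi == lo else (x - lo) / (hi - lo) for x in xs]


def retrieve(memories: List[Memory], query: List[float], now_hour: float,
             k: int = 2, decay: float = 0.995) -> List[str]:
    recency = min_max([decay ** (now_hour - m.last_access_hour) for m in memories])
    importance = min_max([m.importance for m in memories])
    relevance = min_max([cosine(m.embedding, query) for m in memories])
    # Equal weights for the three components (an assumption of this sketch).
    scores = [r + i + v for r, i, v in zip(recency, importance, relevance)]
    ranked = sorted(range(len(memories)), key=lambda j: scores[j], reverse=True)
    return [memories[j].text for j in ranked[:k]]


stream = [
    Memory("Isabella is planning a Valentine's Day party", [1.0, 0.0], 8, 90),
    Memory("Klaus ate breakfast", [0.0, 1.0], 2, 99),
    Memory("Maria agreed to help decorate for the party", [0.7, 0.7], 6, 50),
]
print(retrieve(stream, query=[1.0, 0.0], now_hour=100))
```

The most recent memory (Klaus's breakfast) loses here because it is neither important nor relevant to the query, which is exactly the balancing the three-part score is meant to provide.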

This intriguing experiment demonstrates the capabilities of AI Agents—generating novel social behaviors, spreading information, remembering relationships (e.g., two virtual characters continuing a conversation), and coordinating social activities (e.g., hosting parties and inviting others). Overall, AI Agents are a fascinating tool, and their application in gaming warrants deeper exploration.

02 Technology Trends

2.1 AI Sector Trends

LaoBai, investment research partner at ABCDE, summarized Silicon Valley’s perspective on the next phase of AI development:

- There will be no vertical-specific models, only general large models + vertical applications;

- Data from edge devices (like smartphones) could become a barrier, making edge-based AI a promising opportunity;

- Context length may soon undergo transformative growth (currently vector databases serve as AI memory, but context capacity remains limited).

From an industry-evolution standpoint, general-purpose large models are so resource-intensive to build and so broadly applicable that duplicating them makes little sense. The focus should instead shift toward applying these general models to specific verticals.

Meanwhile, edge devices refer to terminals that process data and make decisions locally rather than relying on cloud centers or remote servers. Due to the diversity of edge devices, deploying AI Agents on them and properly accessing device data presents challenges—but also new opportunities.

Finally, the issue of context has drawn increasing attention. For LLMs, "context" is the information supplied to the model along with a request, and "context length" (the context window) is the maximum amount of such information, measured in tokens, that the model can process at once. For instance, in an e-commerce prediction model, the context might include browsing history, purchase records, search logs, and user attributes. A longer context lets the model combine more of these signals at once, for example “a 30-year-old male user in Shanghai,” “recent competitor purchase history,” “purchase frequency,” and “browsing patterns,” and so understand more comprehensively the factors influencing user decisions.

The prevailing view is that today’s context windows remain limited, with vector databases serving as a workaround, but that future breakthroughs will let LLMs handle longer, more complex contexts through more sophisticated methods, unlocking unforeseen application scenarios.
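
As a rough illustration of why context limits matter in practice, the sketch below packs pieces of user context (for instance, chunks returned by a vector-database query) into a fixed token budget, keeping the highest-scoring chunks first. Counting one token per word is a simplifying assumption (real tokenizers split text differently), and `pack_context`, the scores, and the budget are hypothetical.

```python
from typing import List, Tuple


def count_tokens(text: str) -> int:
    # Crude stand-in for a real tokenizer: one token per whitespace-separated word.
    return len(text.split())


def pack_context(chunks: List[Tuple[str, float]], budget: int) -> Tuple[List[str], int]:
    """Greedily keep the highest-scoring retrieved chunks that still fit in the token budget."""
    selected, used = [], 0
    for text, _score in sorted(chunks, key=lambda c: c[1], reverse=True):
        n = count_tokens(text)
        if used + n <= budget:
            selected.append(text)
            used += n
    return selected, used


# Hypothetical retrieved chunks with similarity scores.
retrieved = [
    ("user is 30 years old and lives in Shanghai", 0.9),
    ("recently bought a competitor's product", 0.8),
    ("buys roughly twice per month", 0.7),
    ("browses sneakers every evening after work", 0.6),
]
print(pack_context(retrieved, budget=15))
```

With a small budget, lower-ranked signals such as purchase frequency and browsing patterns are dropped; a longer context window corresponds to a larger `budget`, so more of them reach the model.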

2.2 AI Agent Trends

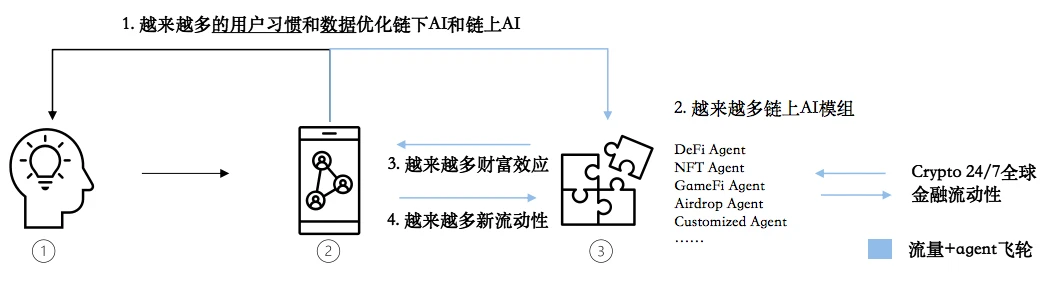

Folius Ventures summarized the application patterns of AI Agents in gaming, as shown in the diagram:

Item 1 in the diagram represents the LLM, which is primarily responsible for letting natural language replace traditional keyboard/click inputs, lowering the barrier to entry for users.

Item 2 is a front-end DApp integrated with AI Agents, which provides functional services while collecting user habits and data from end users.

Item 3 represents the various AI Agents themselves, which exist as in-app features, bots, and so on.

Overall, AI Agents—being code-based tools—can function as underlying programs extending DApp functionality and serving as catalysts for platform growth: i.e., middleware linking large models and vertical applications.
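
The middleware role described above can be sketched in a few lines: an agent sits between a general-purpose LLM and a DApp's functions, asks the model to map a natural-language request onto one of the available actions, and then calls that action. `LanguageModel`, `GameAgent`, the `<action>|<argument>` reply format, and the keyword-matching stub are all assumptions made so the example runs offline; a real agent would call an actual model API and validate its output more carefully.

```python
from typing import Callable, Dict, Protocol


class LanguageModel(Protocol):
    """Hypothetical interface for any general-purpose LLM client."""

    def complete(self, prompt: str) -> str: ...


class GameAgent:
    """Middleware: turns a natural-language request into a call on a DApp function."""

    def __init__(self, llm: LanguageModel, actions: Dict[str, Callable[[str], str]]):
        self.llm = llm
        self.actions = actions

    def handle(self, user_text: str) -> str:
        prompt = (
            f"Available actions: {', '.join(sorted(self.actions))}\n"
            f"User request: {user_text}\n"
            "Answer in the form <action>|<argument>."
        )
        name, _, arg = self.llm.complete(prompt).strip().partition("|")
        action = self.actions.get(name.strip())
        if action is None:
            return f"unsupported action: {name.strip()!r}"
        return action(arg.strip())


class KeywordLLM:
    """Offline stub standing in for a real model, so the example runs without network access."""

    def complete(self, prompt: str) -> str:
        request = prompt.split("User request: ")[1].split("\n")[0].lower()
        if "equip" in request:
            return "equip_item|" + request.split()[-1]
        return "unknown|"


agent = GameAgent(KeywordLLM(), {"equip_item": lambda item: f"equipped {item}"})
print(agent.handle("Please equip my sword"))
```

Because the agent only depends on the `complete` method, the underlying model can be swapped without touching the DApp functions, which is also why this layer is easy for competitors to replicate.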

From a user perspective, the DApps most likely to integrate AI Agents are open platforms such as social apps, chatbots, and games, or existing Web2 traffic gateways transformed into simpler, more accessible AI+Web3 entry points. This aligns with the industry's ongoing efforts to lower the barrier to entry for Web3 users.

Based on industry dynamics, the middleware layer occupied by AI Agents tends to become highly competitive with little defensibility. Therefore, beyond improving user experience to meet B2C demands, AI Agents must build network effects or enhance user stickiness to strengthen their competitive advantage.

03 Landscape Mapping

Multiple approaches to applying AI in Web3 gaming have emerged, which can be categorized as follows:

- General Models: Projects focused on building general AI models tailored to Web3 needs, identifying suitable neural network architectures and universal frameworks.

- Vertical Applications: Targeted solutions addressing specific gaming problems or offering specialized services, typically appearing as Agents, Bots, or BotKits.

- Generative AI Applications: The most direct application of large models is content generation. Since gaming is inherently a content-driven industry, Generative AI applications here are especially promising: from auto-generating world elements, characters, quests, and storylines, to generating strategies and decisions, and even evolving in-game ecosystems, enriching the diversity and depth of gameplay.

- AI Games: Numerous games already integrate AI technology in varied ways, as discussed below.

3.1 General Large Models

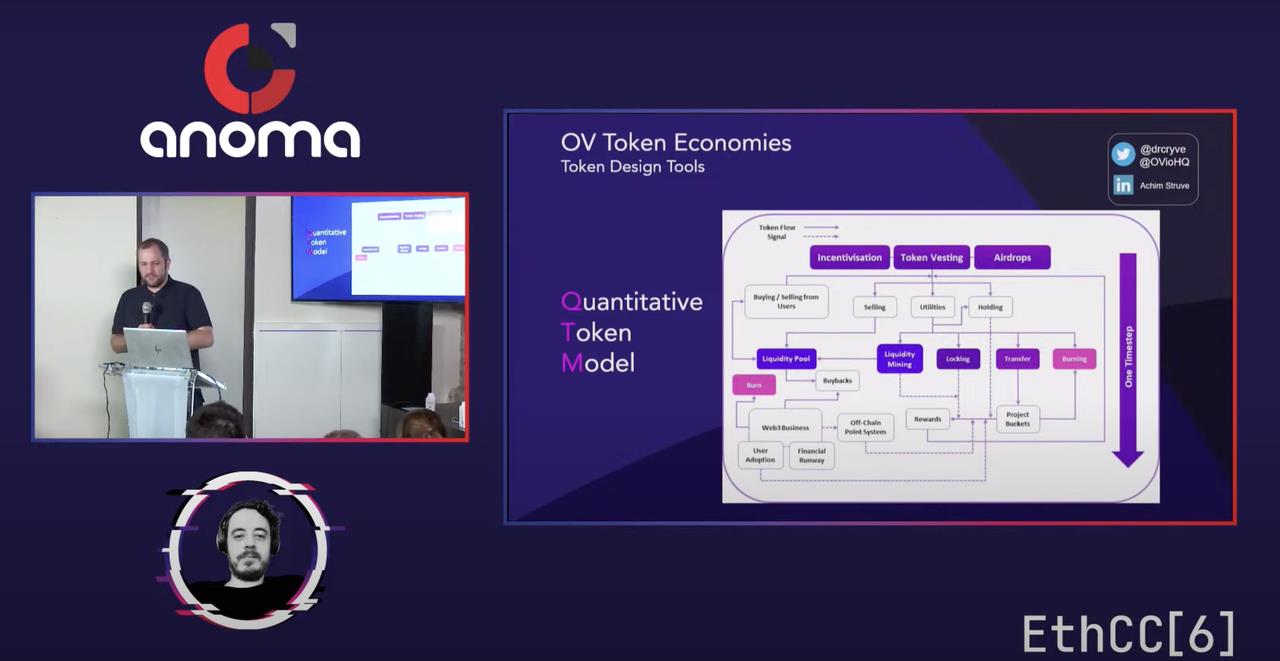

Web3 already features simulation models designed for economic modeling and ecosystem development, such as the Quantitative Token Model (QTM). Dr. Achim Struve from Outlier Ventures discussed some of these concepts during his talk at ETHCC—for example, leveraging LLMs to create digital twins of entire ecosystems for 1:1 simulation to ensure economic system robustness.

The QTM, pictured above, is described as an AI-driven reasoning model. It runs a simulation over a fixed 10-year horizon in monthly time steps. At the start of each step, tokens are emitted into the ecosystem through modules for incentives, vesting, airdrops, and so on. These tokens are then distributed across meta-buckets and further allocated to granular utilities, which define reward payouts and other mechanisms. Off-chain modules also capture general financial operations, such as buybacks or burns, and measure user adoption rates.
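
To show the mechanics of such a step-based token simulation (not QTM's actual code or parameters), here is a minimal sketch: 120 monthly steps, emissions from vesting, incentives, and a one-off airdrop, a split of new tokens across meta-buckets, and a monthly buyback-and-burn. Every number, bucket name, and function name is hypothetical, and user adoption is left out for brevity.

```python
MONTHS = 120  # 10 years simulated in monthly steps

# Hypothetical parameters, chosen only for illustration.
VESTING_TOTAL, VESTING_MONTHS = 1_000_000.0, 48
INCENTIVE_START, INCENTIVE_DECAY = 500_000.0, 0.98
AIRDROP = 200_000.0
ALLOCATION = {"staking": 0.5, "liquidity": 0.3, "holding": 0.2}  # meta-bucket shares
BURN_RATE = 0.01  # share of circulating supply bought back and burned each month


def emitted(month: int) -> float:
    """Tokens entering the ecosystem this month from vesting, incentives, and airdrops."""
    vesting = VESTING_TOTAL / VESTING_MONTHS if month < VESTING_MONTHS else 0.0
    incentives = INCENTIVE_START * INCENTIVE_DECAY ** month
    airdrop = AIRDROP if month == 0 else 0.0
    return vesting + incentives + airdrop


def simulate(months: int = MONTHS):
    circulating, burned, minted_total = 0.0, 0.0, 0.0
    buckets = {name: 0.0 for name in ALLOCATION}  # cumulative emissions routed to each bucket
    history = []
    for month in range(months):
        minted = emitted(month)
        minted_total += minted
        for name, share in ALLOCATION.items():
            buckets[name] += minted * share
        circulating += minted
        burn = circulating * BURN_RATE  # off-chain financial operation: buyback and burn
        circulating -= burn
        burned += burn
        history.append(circulating)
    return history, buckets, burned, minted_total


history, buckets, burned, minted_total = simulate()
print(f"circulating after 10 years: {history[-1]:,.0f}; burned: {burned:,.0f}")
```

As the article notes, the output is only as good as the inputs: changing the hypothetical decay or burn rate reshapes the whole supply curve, which is why market research has to come first.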

Of course, output quality depends heavily on input accuracy, so thorough market research is essential before using QTM. Nevertheless, QTM is a practical AI-driven model for Web3 economic design, and many teams are developing easier-to-use consumer- and business-facing (2C/2B) interfaces to lower the barrier to adoption.

3.2 Vertical Application Agents

Vertical applications mainly appear in the form of Agents—ranging from bots, botkits, virtual assistants, intelligent decision-support systems, to automated data-processing tools. Typically, AI Agents use OpenAI’s general models as a foundation, combine them with other open-source or proprietary technologies (e.g., text-to-speech), and fine-tune them with domain-specific datasets to create AI Agents outperforming ChatGPT in specific areas.
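
The fine-tuning step typically starts with domain data. As a sketch, assuming a chat-style fine-tuning format in which each JSONL line holds a `messages` list of system, user, and assistant turns (the layout OpenAI documents for chat-model fine-tuning), game-specific question-and-answer pairs could be serialized as below. The system prompt and the Q&A pairs are made up for illustration, and the upload and training job itself is out of scope.

```python
import json
from typing import Iterable, Tuple

# Hypothetical system prompt for a game-specific assistant.
SYSTEM_PROMPT = "You are a strategy guide for a hypothetical Web3 RPG."


def to_finetune_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize (question, answer) pairs as one chat-formatted JSON object per line."""
    lines = []
    for question, answer in pairs:
        record = {
            "messages": [
                {"role": "system", "content": SYSTEM_PROMPT},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n"


domain_data = [
    ("Which class counters tanks?", "Mages, because their spells ignore armor."),
    ("How do I upgrade my sword NFT?", "Complete the forge quest, then combine it with two ore items."),
]
print(to_finetune_jsonl(domain_data))
```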

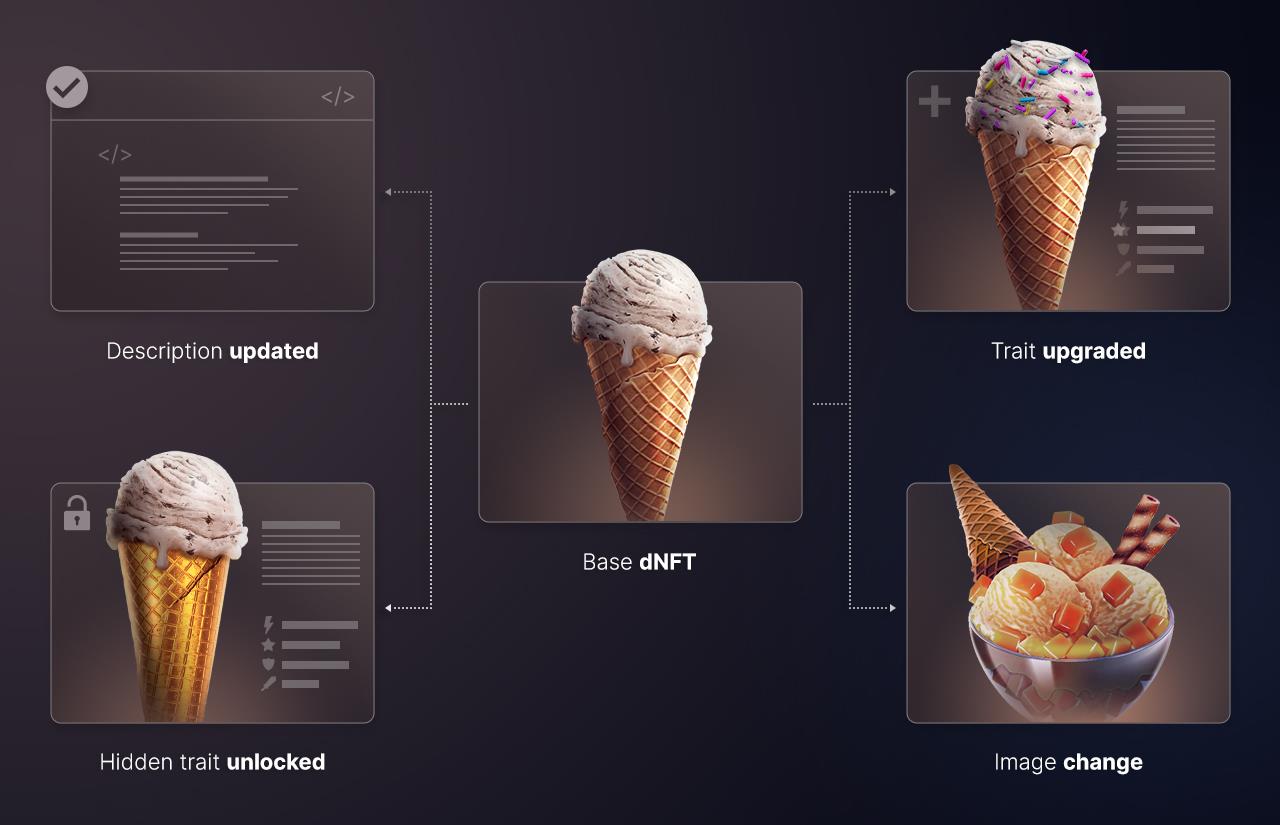

Currently, the most mature application in Web3 gaming is NFT Agents. There is broad consensus that NFTs will remain a core component of Web3 gaming.

With advancements in metadata management within the Ethereum ecosystem, programmable dynamic NFTs have emerged. For creators, this enables more flexible NFT functionalities. For users, increased interactivity generates valuable data. AI Agents can optimize these interactions and expand the application scope of interaction data, injecting innovation and value into the NFT ecosystem.

Case 1: Gelato's development framework allows developers to define custom logic to update NFT metadata based on off-chain events or time intervals. Gelato nodes trigger metadata changes when conditions are met, enabling automatic on-chain NFT updates. For example, this technology can pull real-time sports data via APIs and automatically upgrade an NFT’s skill attributes when an athlete wins a match.
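
The condition-then-update pattern in the sports example can be sketched independently of any particular automation network. The code below does not use Gelato's SDK; it is a hypothetical checker that, given a way to fetch an athlete's win count, returns updated metadata only when there are new wins, and `None` otherwise. The +1-skill-per-win rule and all names are assumptions, and the `attributes` list follows the common `trait_type`/`value` convention used in NFT metadata.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class AthleteNFT:
    token_id: int
    athlete: str
    skill: int
    wins_recorded: int  # wins already reflected in the metadata


def check_and_build_update(nft: AthleteNFT, fetch_wins: Callable[[str], int]) -> Optional[Dict]:
    """Return new metadata if the off-chain condition is met, otherwise None (nothing to execute)."""
    wins = fetch_wins(nft.athlete)  # e.g. a call to a sports-data API
    if wins <= nft.wins_recorded:
        return None
    new_wins = wins - nft.wins_recorded
    return {
        "token_id": nft.token_id,
        "attributes": [
            {"trait_type": "skill", "value": nft.skill + new_wins},  # hypothetical: +1 skill per new win
            {"trait_type": "wins", "value": wins},
        ],
    }


nft = AthleteNFT(token_id=1, athlete="Player A", skill=10, wins_recorded=1)
print(check_and_build_update(nft, fetch_wins=lambda name: 3))
```

In a real deployment, the returned payload would be turned into an on-chain metadata update by the automation service whenever the check reports a change.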

Case 2: Paima offers application-level Agents for Dynamic NFTs. Paima’s NFT compression protocol mints minimal NFTs on L1 and evolves them based on game states from L2, offering players deeper, more interactive experiences—e.g., NFTs changing based on character XP, quest completion, or equipment.
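
The idea of a minimal L1 NFT whose visible traits evolve with L2 game state can be sketched as a pure function from game state to metadata. This is a hypothetical illustration, not Paima's protocol: `CharacterState`, the levelling rule, and the trait names are assumptions. Because the derivation is deterministic, anyone reading the same game state gets the same metadata.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class CharacterState:
    """Hypothetical game state tracked on L2; the L1 NFT itself stores little more than its token ID."""
    xp: int
    quests_completed: List[str] = field(default_factory=list)
    equipment: List[str] = field(default_factory=list)


def derive_metadata(token_id: int, state: CharacterState) -> Dict:
    """Deterministically compute the NFT's current attributes from game state."""
    level = 1 + state.xp // 100  # hypothetical levelling rule
    return {
        "token_id": token_id,
        "attributes": [
            {"trait_type": "level", "value": level},
            {"trait_type": "quests_completed", "value": len(state.quests_completed)},
            {"trait_type": "equipment", "value": ", ".join(sorted(state.equipment)) or "none"},
        ],
    }


hero = CharacterState(xp=250, quests_completed=["tutorial", "dragon"], equipment=["sword", "shield"])
print(derive_metadata(7, hero))
```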

Case 3: Modulus Labs, a well-known ZKML project, has made moves in the NFT space. They launched zkMon, an NFT series generated via AI and published on-chain, accompanied by a zero-knowledge proof (zkp). Users can verify whether their NFT originated from the corresponding AI model using the zkp. More details: Chapter 7.2: The World’s 1st zkGAN NFTs.

3.3 Generative AI Applications

As previously noted, gaming is fundamentally a content industry, and AI Agents can generate vast amounts of content quickly and cost-effectively—including unpredictable, dynamic characters. Thus, Generative AI is highly suited for gaming. Current applications in this domain fall into several main categories:

- AI-generated characters: Battling AI opponents, AI-controlled NPCs, or fully AI-generated playable characters.

- AI-generated content: Automatically creating quests, storylines, items, maps, etc.

- AI-generated environments: Using AI to generate, optimize, or expand terrain, landscapes, and atmosphere within game worlds.

3.3.1 AI-Generated Characters

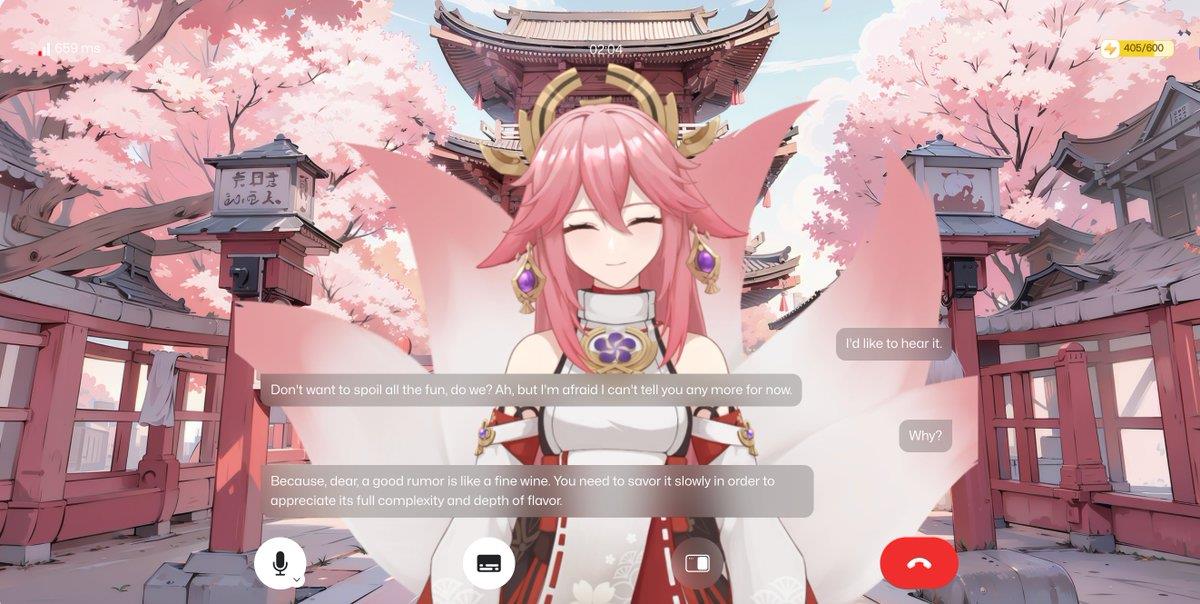

Case 1: MyShell

MyShell is a bot creation platform that lets users build custom bots for chatting, language practice, gaming, or even psychological counseling. MyShell employs Text-to-Speech (TTS) technology that can clone a voice from just a few seconds of sample audio. MyShell also offers AutoPrompt, which lets users instruct LLMs simply by describing their intent, laying the groundwork for personalized large language models.

Some users report that MyShell's voice chat is exceptionally smooth and faster than GPT’s, and that it supports Live2D.

Case 2: AI Arena

AI Arena is an AI battle game in which users train their fighters (NFTs) with AI models and then deploy them in PvP/PvE arenas. The gameplay resembles Super Smash Bros., with added competitive depth from AI training.

Paradigm led AI Arena’s funding round, and public testing has begun—players can join for free or purchase NFTs to boost training intensity.

Case 3: On-chain Chess Game Leela vs the World

Leela vs the World, developed by Modulus Labs, is a chess game where one player is AI and the other human. The game state resides in a smart contract. Players interact via wallets (contract calls). The AI reads the updated board state, makes a move, and generates a zero-knowledge proof (zkp) for the computation—all done on AWS. The zkp is then submitted to the on-chain contract for verification, and upon success, triggers the "move" function in the game contract.
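
The move cycle described above (human move via contract call, off-chain AI inference, proof generation, on-chain verification, then the move) can be modeled as a small state machine. This sketch is not Modulus Labs' implementation: the contract is a Python class, the human is assumed to move first, and the zero-knowledge proof is replaced by a plain SHA-256 hash that only binds the move to the board state and proves nothing about the model's computation. It shows the control flow only: an AI move is applied only after verification succeeds.

```python
import hashlib
from typing import Callable, List


def placeholder_prove(state: str, move: str) -> str:
    # NOT a zero-knowledge proof: a hash that merely binds the move to the board state.
    # In the real system a ZK prover attests that the model's computation produced the move.
    return hashlib.sha256(f"{state}|{move}".encode()).hexdigest()


def placeholder_verify(state: str, move: str, proof: str) -> bool:
    return proof == placeholder_prove(state, move)


class ChessGameContract:
    """Toy model of the game contract: stores the move list and enforces turn order."""

    def __init__(self, verify: Callable[[str, str, str], bool]) -> None:
        self.moves: List[str] = []
        self.verify = verify

    def state(self) -> str:
        return " ".join(self.moves)

    def human_move(self, move: str) -> None:  # called from the player's wallet
        if len(self.moves) % 2 != 0:
            raise ValueError("not the human's turn")
        self.moves.append(move)

    def submit_ai_move(self, move: str, proof: str) -> None:
        if len(self.moves) % 2 != 1:
            raise ValueError("not the AI's turn")
        if not self.verify(self.state(), move, proof):
            raise ValueError("proof rejected")
        self.moves.append(move)  # verification passed: trigger the move


def off_chain_ai_turn(contract: ChessGameContract, choose_move: Callable[[str], str]) -> None:
    """Runs off-chain: read the state, infer a move, prove it, and submit it."""
    state = contract.state()
    move = choose_move(state)  # hypothetical model inference
    contract.submit_ai_move(move, placeholder_prove(state, move))


game = ChessGameContract(placeholder_verify)
game.human_move("e4")
off_chain_ai_turn(game, choose_move=lambda state: "e5")
print(game.moves)
```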

3.3.2 AI-Generated Game Content

Case 1: AI Town

AI Town is a collaboration between a16z and its portfolio company Convex Dev, inspired by Stanford’s "Generative Agents" paper. It is a virtual town where each AI resident builds its own narrative through interactions and experiences.

It is built with Convex’s serverless backend, Pinecone vector storage, Clerk authentication, OpenAI text generation, and Fly deployment. Notably, AI Town is fully open-source, so developers can customize its components, including feature data, sprite sheets, tilemaps, prompt templates, game rules, and logic. Beyond regular gameplay, developers can use the source code inside or outside the game to build new features. AI Town is therefore both a generative content game and a development platform, with its own developer ecosystem and toolkit.

Case 2: Paul

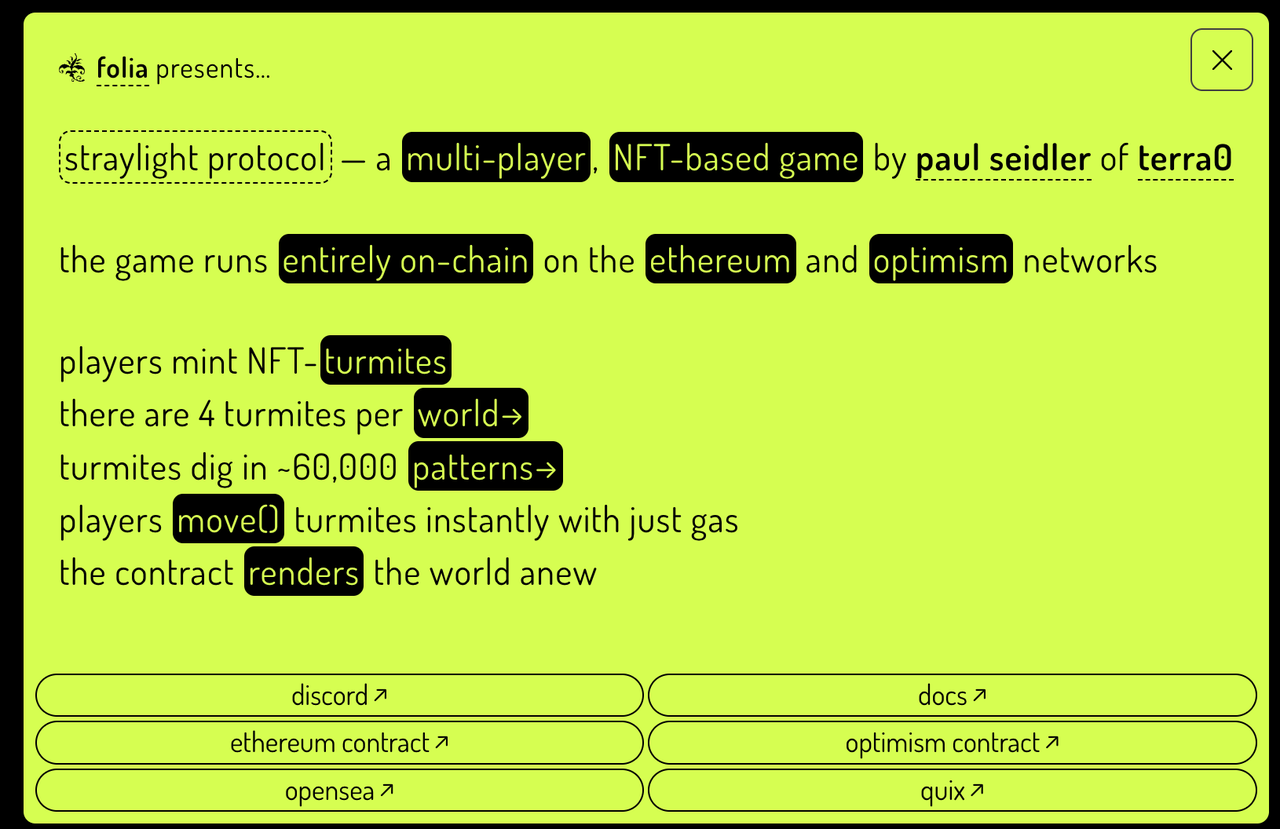

Paul is an AI story generator that provides a solution specifically for fully on-chain games—enabling AI-generated stories to be directly published on-chain. Its logic involves feeding the LLM a set of prior rules, allowing players to automatically generate derivative content based on those rules.

For example, the game Straylight Protocol uses Paul Seidler to launch its game. Straylight is a multiplayer NFT game centered around a fully on-chain version of "Minecraft," where players can mint NFTs and construct their own worlds based on predefined model rules.

3.3.3 AI-Generated Game Environments

Case 1: Pahdo Labs

Pahdo Labs is a game studio currently developing Halcyon Zero, a fantasy anime-style RPG and online game creation platform built on the Godot engine. The game is set in an ethereal fantasy world, with a bustling town serving as its social hub.

What makes this game unique is that players can use in-game AI creation tools to rapidly generate custom 3D backgrounds and import favorite characters—truly democratizing UGC in gaming with accessible tools and immersive environments.

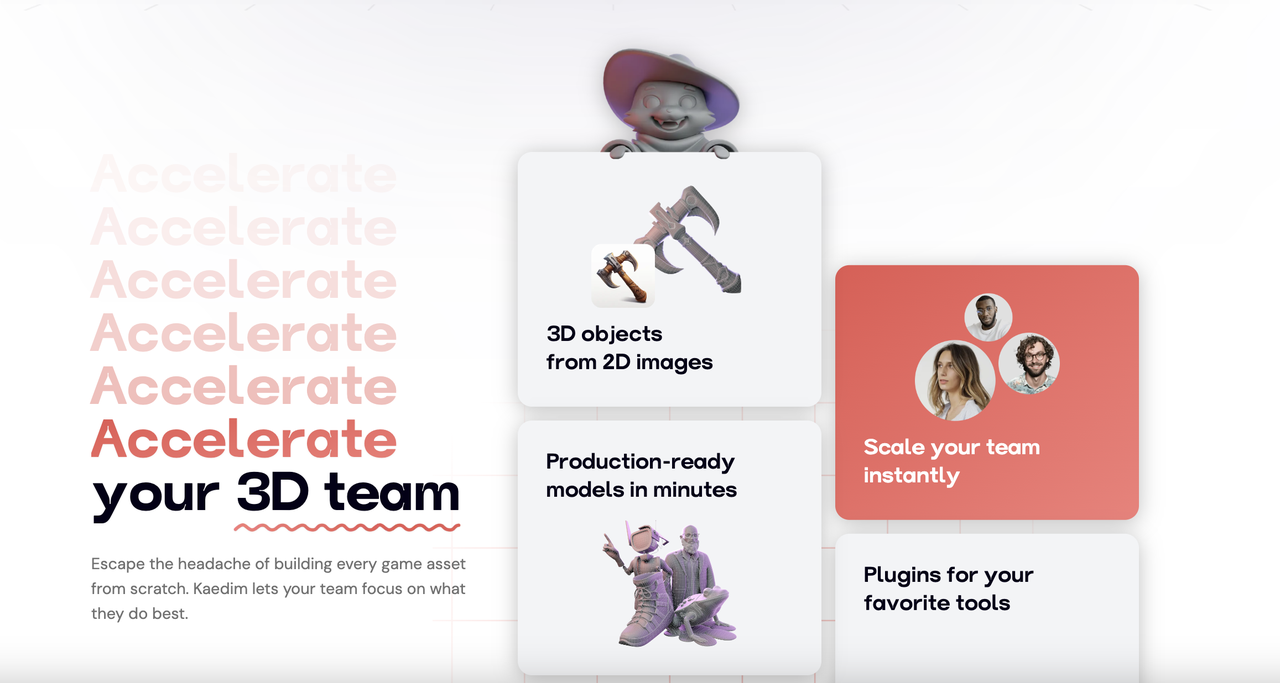

Case 2: Kaedim

Kaedim has developed a Generative AI-powered 3D model generation tool for game studios, enabling rapid batch creation of in-game 3D scenes/assets tailored to specific needs. Kaedim’s general product is still under development, expected to launch for game studios in 2024.

Kaedim’s core logic mirrors that of AI Agents: starting with a general large model, internal artists continuously feed high-quality data and provide feedback on outputs, iteratively training the model via machine learning until the AI Agent produces desired 3D environments.
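
The iterate-until-good-enough loop can be sketched as follows. It is a toy under clear assumptions: `ToyModel` stands in for a generative 3D model and represents each generated asset only by a quality score, the learning curve in `fine_tune` is invented, and the artists' ratings and curated examples are simple callables. This is not Kaedim's pipeline; only the loop structure (generate, review, add curated data, retrain, repeat) is meant to carry over.

```python
from typing import Callable, List, Optional, Tuple


class ToyModel:
    """Stand-in for a generative 3D model whose output quality grows with curated training data."""

    def __init__(self) -> None:
        self.quality = 0.4

    def generate(self) -> float:
        return self.quality  # a generated "asset", represented only by its quality

    def fine_tune(self, dataset: List[float]) -> None:
        self.quality = min(1.0, 0.4 + 0.05 * len(dataset))  # hypothetical learning curve


def feedback_loop(model: ToyModel, review: Callable[[float], float],
                  artist_examples: Callable[[], List[float]],
                  target: float = 0.75, batch: int = 8,
                  max_rounds: int = 20) -> Tuple[Optional[int], List[float]]:
    dataset: List[float] = []
    for round_no in range(1, max_rounds + 1):
        outputs = [model.generate() for _ in range(batch)]
        scores = [review(o) for o in outputs]  # artists rate each output
        if sum(scores) / len(scores) >= target:
            return round_no, dataset  # outputs are good enough: stop iterating
        # Keep well-rated outputs and add new high-quality examples from artists.
        dataset.extend(o for o, s in zip(outputs, scores) if s >= target)
        dataset.extend(artist_examples())
        model.fine_tune(dataset)
    return None, dataset  # target not reached within max_rounds


rounds, data = feedback_loop(ToyModel(), review=lambda q: q, artist_examples=lambda: [0.95, 0.9])
print(rounds, len(data))
```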

04 Conclusion

In this article, we conducted a detailed analysis of AI applications in gaming. Overall, the future will undoubtedly see standout unicorn projects emerge from general models and Generative AI in gaming. While vertical applications face lower barriers to entry, first-mover advantages are strong: if teams leverage them effectively to build network effects and user stickiness, the potential is immense. Moreover, Generative AI is naturally aligned with gaming as a content industry. With numerous teams actively exploring Generative AI applications in games, breakout hits built on Generative AI are highly likely in this cycle.

Beyond the directions mentioned, future explorations could include:

(1) Data + Application Layer: The AI data sector has already produced billion-dollar unicorns, and synergies between data and application layers offer rich possibilities.

(2) Integration with SocialFi: Innovating social interactions; using AI Agents to optimize community identity verification and governance; or enabling smarter personalized recommendations.

(3) As Agents grow more autonomous and mature, will the primary participants in Autonomous Worlds be humans or bots? Could on-chain autonomous worlds resemble Uniswap, where over 80% of DAUs are bots? If so, governance Agents integrated with Web3 governance concepts warrant serious exploration.
