From Verifiable AI to Composable AI: Rethinking ZKML Application Scenarios

TechFlow Selected

AI applications cannot perform all computations on-chain, so they use zk or optimistic proofs to connect AI services to public blockchain systems in a more trustless manner.

Author: Turbo Guo

Reviewers: Mandy, Joshua

TLDR:

Modulus Labs enables verifiable AI by executing ML computations off-chain and generating zero-knowledge proofs (zkps) for them. This article re-examines the solution from an application perspective, analyzing where such technology is essential versus where demand is weaker, and ultimately explores two potential AI ecosystem models on public blockchains—horizontal and vertical. Key points include:

- Whether verifiable AI is needed depends on whether it modifies on-chain data and whether it involves fairness or privacy.
- When AI does not affect on-chain state, it can act as an advisor; users can judge service quality from actual outcomes without verifying the computation.
- When AI affects on-chain state but the service targets individuals and has no privacy implications, users can still assess AI quality directly without verifying computations.
- When AI outputs impact fairness among multiple parties or involve personal data (for example, evaluating community members for reward distribution, optimizing AMMs, or handling biometric data), users will want to audit the AI’s calculations. These are areas where verifiable AI may find product-market fit.
- Vertical AI application ecosystem: Since one end of verifiable AI is a smart contract, there is potential for trustless interoperability between verifiable AI applications, and even between AI services and native dapps, laying the foundation for a composable AI ecosystem.
- Horizontal AI application ecosystem: Public blockchain systems can handle payment processing, dispute resolution, and matching user needs with AI services, giving users a freer, more decentralized AI experience.

1. Introduction to Modulus Labs and Use Cases

1.1 Overview and Core Approach

Modulus Labs is an "on-chain" AI company that believes AI can significantly enhance smart contract capabilities, making web3 applications more powerful. However, applying AI in web3 presents a challenge: AI requires far more computation than can practically be executed on-chain, yet running it off-chain turns it into a black box, which conflicts with web3’s principles of trustlessness and verifiability.

To address this, Modulus Labs adopts a zk rollup-inspired approach—off-chain execution with on-chain verification—and proposes a verifiable AI architecture: ML models run off-chain, while zero-knowledge proofs (zkps) are generated off-chain to verify the model architecture, weights, and inputs. These zkps can be submitted on-chain for validation via smart contracts, enabling trust-minimized interaction between AI and on-chain contracts—thus achieving “on-chain AI.”
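The off-chain execution and on-chain verification flow described above can be sketched roughly as follows. This is a minimal illustration under loud assumptions, not Modulus Labs’ implementation: the names `commit`, `Proof`, `run_and_prove`, and `VerifierContract` are hypothetical, the model is a toy dot product, and the `tag` field is a plain hash that anyone could compute. It stands in for where real zkp bytes would travel and gives no soundness guarantee. The sketch only shows which pieces of data are bound together and where the check happens.

```python
import hashlib
import json
from dataclasses import dataclass


def commit(obj) -> str:
    """Hash a JSON-serializable object into a hex commitment."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode()).hexdigest()


@dataclass(frozen=True)
class Proof:
    model_commitment: str  # binds the model weights (and, in practice, architecture)
    input_commitment: str  # binds the inputs
    output: float
    tag: str               # PLACEHOLDER for zkp bytes; a plain hash, not a real proof


def run_and_prove(weights, inputs) -> Proof:
    """Off-chain: run a toy linear model and attach the placeholder proof."""
    output = sum(w * x for w, x in zip(weights, inputs))
    model_c, input_c = commit(weights), commit(inputs)
    return Proof(model_c, input_c, output, commit([model_c, input_c, output]))


class VerifierContract:
    """On-chain stand-in: accepts outputs only for the registered model."""

    def __init__(self, registered_model_commitment: str):
        self.registered = registered_model_commitment
        self.accepted_outputs = []

    def submit(self, proof: Proof) -> bool:
        # A real verifier would check a zkp here instead of recomputing a hash.
        expected = commit([proof.model_commitment, proof.input_commitment, proof.output])
        ok = proof.model_commitment == self.registered and proof.tag == expected
        if ok:
            self.accepted_outputs.append(proof.output)  # state change gated on the check
        return ok


weights = [0.5, -1.0, 2.0]
contract = VerifierContract(commit(weights))
proof = run_and_prove(weights, [2.0, 1.0, 0.25])
print(contract.submit(proof))  # True: registered model and consistent tag
```

The point of the structure is that the contract never runs the model; it only checks a small artifact and then decides whether to update state.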

Leveraging this verifiable AI concept, Modulus Labs has launched three “on-chain AI” applications and proposed numerous additional use cases.

1.2 Application Examples

-

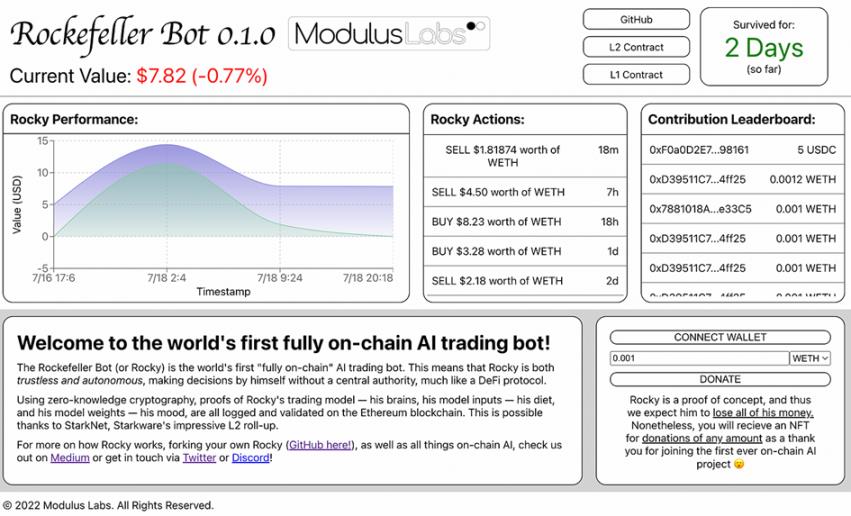

The first was Rocky Bot, an automated trading AI trained on historical wETH/USDC trading data. It predicts future wETH price movements and generates a zkp for its decision-making process before sending a transaction-triggering message to L1.

-

The second is the on-chain chess game “Leela vs the World,” where players compete against an AI. The game state resides in a smart contract. Players interact through their wallets, while the AI reads the latest board state, computes its move off-chain (on AWS), and generates a zkp for the entire process. The zkp is verified on-chain, triggering the AI’s move in the contract.

-

The third is an “on-chain” AI artist, which released the NFT series zkMon. The AI generates NFTs and publishes them on-chain, along with a zkp. Users can use the zkp to verify that their NFT was indeed generated by the specified AI model.

Additionally, Modulus Labs has mentioned several other potential use cases:

- Using AI to evaluate users’ on-chain data and generate individual reputation scores, publishing zkps for user verification;
- Optimizing AMM performance using AI, with zkps published for transparency;
- Using verifiable AI to help privacy-focused projects comply with regulations without exposing private data (e.g., proving a transaction isn’t money laundering without revealing user addresses);
- AI oracles that publish zkps so anyone can verify the reliability of off-chain data;
- AI model competitions, where participants submit architectures and weights, run inference on standardized test inputs, generate zkps, and have winners automatically awarded via smart contracts;
- Worldcoin envisions letting users download iris-recognition models, run them on-device, and generate zkps, so that on-chain contracts can verify that iris codes were correctly generated without exposing biometric data.

1.3 Evaluating Need for Verifiable AI Across Scenarios

1.3.1 Scenarios Where Verifiable AI May Not Be Needed

In the case of Rocky Bot, users may lack both the need and ability to verify ML computation. First, most users lack technical expertise to conduct meaningful verification—even with tools, all they see is “I pressed a button and got a popup saying the AI used the right model,” which doesn’t guarantee authenticity. Second, users care primarily about returns. If performance is poor, they’ll switch providers—choosing the highest-performing model. In short, when users prioritize AI output effectiveness, verifying computation adds little value—they simply migrate to better-performing services.

One alternative approach is for AI to serve only as an advisor, with users executing trades manually. After inputting their goals, users receive suggested trade paths or directions from AI and decide whether to act. There's no need to verify underlying models—just pick the best-performing option.

A riskier but likely scenario is that users simply don’t care about control over assets or AI computation. When a profitable bot appears, people may willingly entrust funds to it—similar to depositing tokens into CEXs or traditional banks for wealth management. Users often ignore how things work, focusing only on final payouts—or even just what the interface claims they’ve earned. Such services could rapidly gain traction, potentially outpacing verifiable AI projects in iteration speed.

Moreover, if AI doesn’t modify on-chain state at all—if it merely fetches and pre-processes on-chain data—there’s no need to generate zkps for computations. We refer to these as “data services.” Examples include:

- Mest’s chatbot is a typical data service: users can ask questions like “How much did I spend on NFTs?” and get answers based on their on-chain activity;
- ChainGPT is a multi-functional AI assistant that interprets smart contracts before transactions, warns about frontrunning or sandwich attacks, and plans to offer AI news summaries, image generation, and NFT minting;
- RSS3 offers AIOP, letting users select specific on-chain data and perform preprocessing to train custom AI models;
- DefiLlama and RSS3 have developed ChatGPT plugins for querying on-chain data via natural language.

1.3.2 Scenarios Where Verifiable AI Is Needed

This article argues that scenarios involving multiple parties, fairness, or privacy require zkps for verification. Below we examine several use cases mentioned by Modulus Labs:

- When communities distribute rewards based on AI-generated reputation scores, members will demand scrutiny of the evaluation process, which is essentially the ML computation;
- AI-optimized AMMs involve multi-party economic interests and thus require periodic verification of AI operations;
- Balancing privacy and regulatory compliance favors ZK solutions; if ML processes sensitive data, the full computation must be backed by zkps;
- Given oracles’ broad impact, AI-driven ones should regularly produce zkps to prove correct operation;
- In AI model competitions, participants and observers need assurance that computations comply with the rules;
- In Worldcoin’s envisioned use case, protecting biometric data is a strong user requirement.

Overall, when AI acts as a decision-maker whose output broadly affects fairness across parties—or when personal privacy is involved—users demand verification of the decision process, or at least assurance that the AI operates correctly.

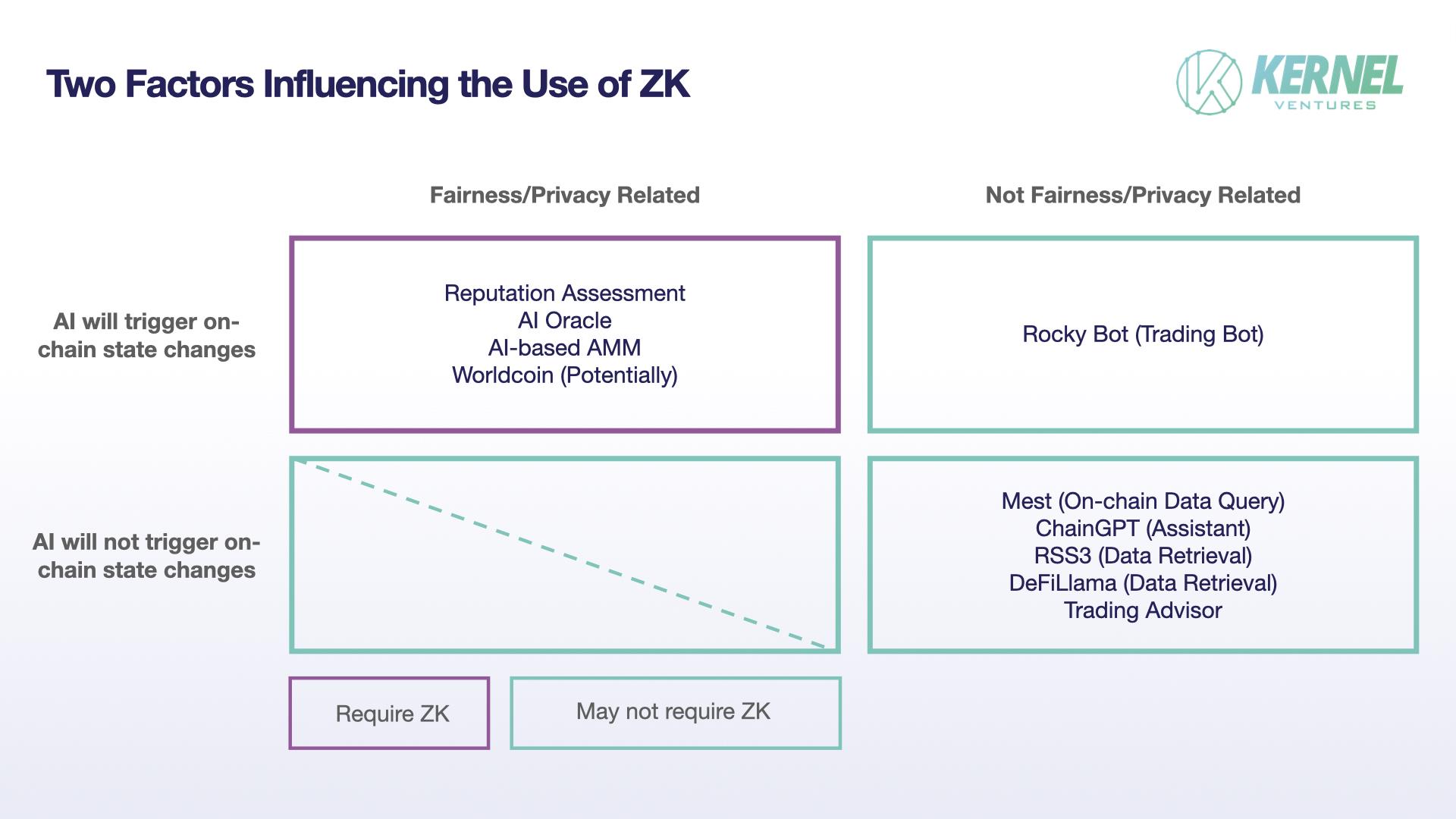

Therefore, two key criteria determine whether verifiable AI is needed: [1] Whether AI output modifies on-chain state, and [2] Whether it impacts fairness or privacy.

- When AI output does not alter on-chain state, AI serves as an advisor: users judge quality by outcome, so there is no need to verify the computation;
- When AI output changes on-chain state but targets individuals without privacy implications, users can still assess quality directly without verifying computation;
- When AI output automatically alters on-chain data and affects fairness among multiple parties, the community demands verification of the AI’s decision logic;
- When ML processes personal data, zk is needed to protect privacy and meet regulatory standards.
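The two criteria and four cases above amount to a small decision rule, which can be written out as a sketch. The names `Scenario` and `needs_verifiable_ai` are hypothetical, and the example scenarios are simplified readings of the cases discussed in this article rather than a formal classification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    modifies_onchain_state: bool
    affects_multi_party_fairness: bool
    handles_personal_data: bool


def needs_verifiable_ai(s: Scenario) -> bool:
    """Apply the article's criteria: state change plus fairness, or privacy."""
    # Personal data calls for zk protection regardless of on-chain effects.
    if s.handles_personal_data:
        return True
    # No on-chain effect: the AI is an advisor, judged by its outcomes.
    if not s.modifies_onchain_state:
        return False
    # On-chain effect: verification matters when several parties are affected.
    return s.affects_multi_party_fairness


# Simplified example scenarios (assumed flag values, for illustration only).
advisor_bot = Scenario(False, False, False)      # suggests trades, user executes
personal_bot = Scenario(True, False, False)      # trades for one user
ai_amm = Scenario(True, True, False)             # many LPs and traders affected
iris_check = Scenario(True, False, True)         # biometric data involved

for name, s in [("advisor", advisor_bot), ("personal bot", personal_bot),
                ("AI AMM", ai_amm), ("iris check", iris_check)]:
    print(name, needs_verifiable_ai(s))
```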

2. Two Blockchain-Based AI Ecosystem Models

Whatever the level of demand in any single scenario, Modulus Labs’ approach offers significant insight into how AI can integrate with crypto to deliver real-world value. But blockchain systems don’t just enhance individual AI services; they can enable entirely new AI ecosystems. These ecosystems redefine relationships between AI services, between AI and users, and across the entire value chain. We can categorize these emerging models as vertical and horizontal.

2.1 Vertical Model: Enabling Composability Between AIs

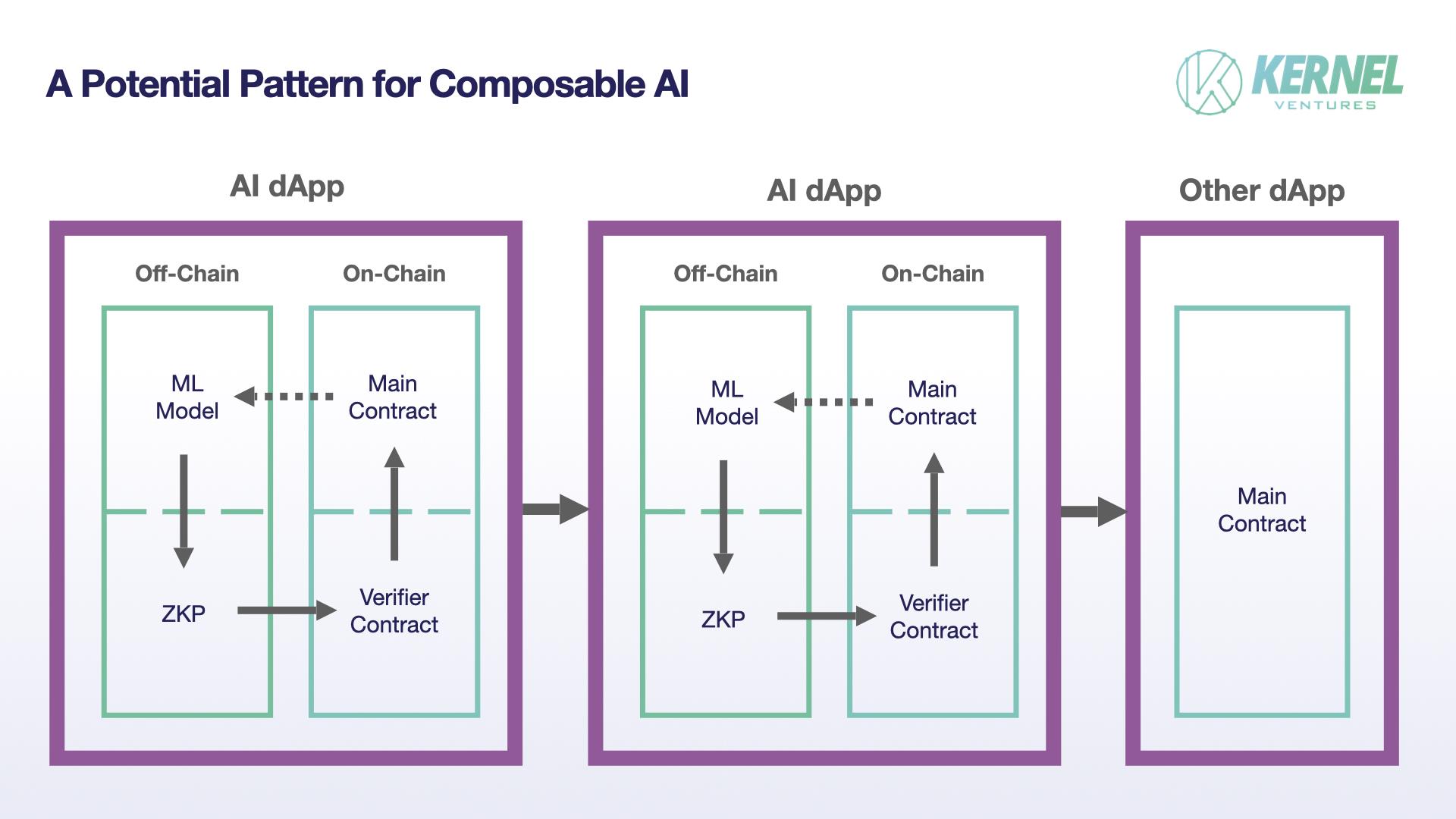

The “Leela vs the World” chess game highlights a unique aspect: users can bet on either side, with payouts automatically distributed post-game. Here, zkps serve not only to verify AI computation but also as trust guarantees that trigger on-chain state transitions. With such trust guarantees, AI services—and even AI and native dapps—could achieve dapp-level composability.

The basic building block of composable AI is [off-chain ML model → zkp generation → on-chain verification contract → main contract], inspired by the “Leela vs the World” framework. However, actual AI dapp architectures may differ. First, while chess requires a dedicated on-chain game state contract, many AI applications may not need one. For composability, however, having core logic managed via contracts simplifies integration. Second, the main contract doesn’t necessarily need to influence the AI model itself—an AI dapp might be unidirectional, where the model processes data and triggers a business-specific contract, which others can then call.

Extending this idea, contract-to-contract calls represent interactions across identity, assets, finance, and social data in web3. Consider this concrete example of AI service composition:

- Worldcoin uses ML to generate iris codes and zkps from biometric data;
- A reputation scoring AI verifies the DID corresponds to a real person (via iris data) and issues an NFT based on on-chain reputation;
- A lending protocol adjusts credit limits based on the user’s NFT holdings.
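The three-step composition above can be sketched as contracts that read each other’s state. This is only an illustration: the classes `IrisRegistry`, `ReputationNFT`, and `LendingProtocol`, the score threshold, the tier names, and the credit limits are all hypothetical, and the zkp checks that a real deployment would perform are reduced to comments.

```python
class IrisRegistry:
    """Stand-in for a contract that records DIDs with a verified iris-code zkp."""

    def __init__(self):
        self._verified = set()

    def record_verified(self, did: str) -> None:
        # A real contract would verify a zkp before recording; omitted here.
        self._verified.add(did)

    def is_human(self, did: str) -> bool:
        return did in self._verified


class ReputationNFT:
    """Issues a tiered NFT, but only to DIDs the iris registry recognizes."""

    def __init__(self, registry: IrisRegistry):
        self.registry = registry
        self.tier_of = {}

    def mint(self, did: str, score: int) -> bool:
        # `score` is assumed to arrive with a valid zkp from the scoring model.
        if not self.registry.is_human(did):
            return False
        self.tier_of[did] = "gold" if score >= 80 else "basic"  # hypothetical cutoff
        return True


class LendingProtocol:
    """Reads NFT holdings to set a credit limit; never touches any AI model."""

    LIMITS = {"gold": 10_000, "basic": 1_000}  # hypothetical limits

    def __init__(self, nft: ReputationNFT):
        self.nft = nft

    def credit_limit(self, did: str) -> int:
        return self.LIMITS.get(self.nft.tier_of.get(did), 0)


registry = IrisRegistry()
nft = ReputationNFT(registry)
lending = LendingProtocol(nft)

registry.record_verified("did:alice")
nft.mint("did:alice", score=85)
nft.mint("did:bot", score=99)  # rejected: no verified iris code
print(lending.credit_limit("did:alice"), lending.credit_limit("did:bot"))
```

Each contract depends only on the on-chain state left by the previous one, which is what lets independently built AI services compose without trusting each other’s off-chain infrastructure.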

Interactions between AIs within a blockchain framework aren’t purely theoretical. Loaf, a contributor to the fully on-chain game Realms, proposed that AI NPCs could trade with each other like players, enabling self-optimizing and autonomous economies. AI Arena developed an auto-battler game where users buy an NFT representing a robot powered by an AI model. Users play first, then train the AI on their gameplay data. Once strong enough, the AI competes autonomously in arenas. Modulus Labs noted AI Arena aims to make these AIs verifiable. Both cases illustrate AIs interacting and modifying on-chain states directly.

However, practical implementation of composable AI raises many open questions, such as how different dapps can reuse each other’s zkps or verification contracts. Yet, progress in zk tech—like RISC Zero’s work on complex off-chain computations and on-chain zkp verification—may eventually provide viable solutions.

2.2 Horizontal Model: Building Decentralized AI Service Platforms

Here, we introduce SAKSHI, a decentralized AI platform proposed by researchers from Princeton, Tsinghua University, UIUC, HKUST, Witness Chain, and EigenLayer. Its goal is to enable more decentralized, trustless, and automated access to AI services.

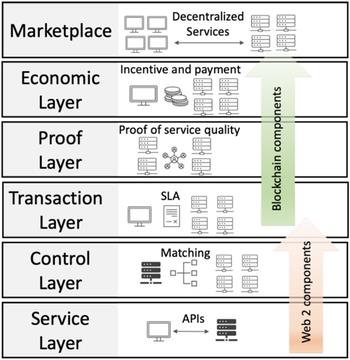

SAKSHI’s architecture consists of six layers: Service Layer, Control Layer, Transaction Layer, Proof Layer, Economic Layer, and Marketplace.

The Marketplace is closest to users, featuring aggregators that represent various AI providers. Users place orders through aggregators and agree on service quality and pricing via SLAs (Service-Level Agreements).

The Service Layer provides APIs to clients. Clients send ML inference requests to aggregators, which route them (via Control Layer routing) to appropriate AI service providers. Thus, Service and Control Layers resemble a multi-server web2 setup—but servers are operated independently and linked to aggregators via SLAs.

SLAs are deployed as smart contracts on the Transaction Layer (in this case, on Witness Chain). This layer tracks order status and coordinates between users, aggregators, and providers, handling disputes.

To ensure dispute resolution is evidence-based, the Proof Layer verifies whether providers followed SLA terms. Instead of zkps, SAKSHI uses optimistic proofs—relying on a network of challenger nodes to audit services, incentivized by Witness Chain.
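The optimistic approach can be illustrated with a claim that becomes final unless a challenger disputes it in time. The sketch below is not SAKSHI’s actual protocol: the class `OptimisticSLA`, the block-based challenge window, and the stake slashing are assumptions drawn from how optimistic schemes are commonly structured. It also simplifies by trusting the challenger’s recomputed output, whereas a real system would adjudicate the dispute.

```python
from dataclasses import dataclass, field


@dataclass
class OptimisticSLA:
    provider_stake: int
    challenge_window: int  # in blocks (hypothetical parameter)
    claims: dict = field(default_factory=dict)
    slashed: int = 0

    def post_result(self, request_id: str, claimed_output: str, block: int) -> None:
        """Provider posts a result; it is accepted optimistically as pending."""
        self.claims[request_id] = {
            "output": claimed_output, "posted_at": block, "status": "pending"}

    def challenge(self, request_id: str, recomputed_output: str, block: int) -> bool:
        """Challenger disputes a pending claim within the window."""
        claim = self.claims[request_id]
        in_window = block <= claim["posted_at"] + self.challenge_window
        if claim["status"] != "pending" or not in_window:
            return False
        if recomputed_output == claim["output"]:
            return False  # audit agrees with the provider; nothing to dispute
        claim["status"] = "rejected"
        self.slashed += self.provider_stake  # provider loses its stake
        return True

    def finalize(self, request_id: str, block: int) -> bool:
        """An unchallenged claim becomes final once the window has passed."""
        claim = self.claims[request_id]
        if claim["status"] == "pending" and block > claim["posted_at"] + self.challenge_window:
            claim["status"] = "final"
        return claim["status"] == "final"


sla = OptimisticSLA(provider_stake=100, challenge_window=10)
sla.post_result("req-1", "label:cat", block=0)
print(sla.finalize("req-1", block=11))  # True: window passed with no challenge
```

Compared with a zkp, nothing is proven up front; correctness rests on at least one honest challenger auditing within the window.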

Although SLAs and challengers reside on Witness Chain, Witness Chain does not rely on its own native token for security. Instead, it leverages Ethereum’s security via EigenLayer, meaning the entire Economic Layer is built atop EigenLayer.

SAKSHI sits between AI providers and users, organizing diverse AI services in a decentralized manner; this is the horizontal model. Its innovation lies in letting AI providers focus solely on managing off-chain models, while matchmaking, payments, and quality verification are handled by on-chain protocols with automated dispute resolution. SAKSHI is currently a theoretical design, and many implementation details remain unresolved.

3. Outlook

Both composable AI and decentralized AI platforms share common traits in their blockchain-based ecosystem models. AI providers don’t directly interface with users—they only need to manage off-chain models and computation. Tasks like payment, dispute resolution, and matching user needs with services can be handled by decentralized protocols. As a trustless infrastructure, blockchains reduce friction between providers and users, granting users greater autonomy.

The advantages of blockchain as an application base are well known, and they apply just as meaningfully to AI services. Unlike pure dapps, however, AI applications cannot run all computations on-chain, so they need zk or optimistic proofs to integrate securely into blockchain systems.

With advancements like account abstraction smoothing user experience—abstracting away seed phrases, chains, and gas—blockchain ecosystems could feel as seamless as web2, while offering users greater freedom and composability. This makes blockchain-based AI ecosystems highly promising.
