Interview with Miaoya Product Lead: On the First Day of AIGC Products, If You Don't Charge, You'll Never Get Paid

TechFlow Selected

When the effect reaches a point where everyone on the team feels it's truly great and experiences that "wow" moment, the probability of it going viral becomes very high.

Author | Wan Chen, Zheng Yue

Editor | Zheng Xuan

No one expected that the first truly breakout phenomenon-level product in China’s AIGC space would emerge from photo beautification (realistic portraits)—a field already considered highly mature.

Softly launched in mid-July, "Miao Ya" attracted countless fashion-conscious young women to download and try its service—offering artistic-quality personal portraits for just 9.9 RMB using only 20 user-uploaded photos. The resulting “Miao Ya portraits” quickly flooded social media platforms like WeChat Moments.

Miao Ya's breakout speed was astonishing, but the team behind it remained mysterious. Prior to launch and rapid popularity, there had been no previews at any of the dozens of large model product launches over the previous months. It wasn’t a venture started by industry veterans with tens or even hundreds of millions in angel funding. In fact, most people in the tech community were unsure whether Miao Ya was an independent startup or an internal project within a major tech company.

Recently, Zhang Yueguang, product lead of Miao Ya, participated in a group interview with several tech business media outlets including GeekPark.

Miao Ya is an internal incubation project under Alibaba’s UCWeb Entertainment division. After ChatGPT sparked a new wave of AI innovation, Zhang gathered a few colleagues in February this year to form an internal group called the “AIGC Breakthrough Team.” Within one month, they identified “realistic portrait generation” as their target direction, and spent the next three months refining what eventually became the Miao Ya product.

This inevitably evokes memories of Google’s famous “20% free time” policy—and iconic innovations such as Google Glass, Cardboard, and self-driving cars born from it. This Silicon Valley-style romantic beginning further heightened our curiosity about Miao Ya.

In a 90-minute session, Zhang answered a series of questions and addressed controversies surrounding Miao Ya. While details regarding underlying technology, user scale, team composition, and future monetization strategies were classified as trade secrets and thus not disclosed, the team did provide detailed explanations on why they chose realistic portraits, how the product came into being, data privacy concerns, and reasons for its viral success—offering us a glimpse behind Miao Ya’s veil of mystery.

Below is the edited transcript compiled by GeekPark.

01 From a small interest group to launching Miao Ya in three months

Q: How did your team go from zero to building Miao Ya?

A: The project began simply around January or February—without a formal business plan, more like an informal interest group. I teamed up with five or six colleagues to explore cutting-edge AI image technologies and products. On February 9th, we created a WeChat group named “AIGC Breakthrough Action Group.” From then on, we began tracking emerging tools, plugins, and technical advancements in AI imaging. By March, I felt key technical components had matured enough to justify focusing on realistic portraits.

Miao Ya team photo | Official Miao Ya Camera WeChat account

We spent over three months refining both technical and aesthetic aspects, including template design. By late June and early July, we felt the product was ready for users. We conducted a two-week internal test inviting friends and colleagues, receiving overwhelmingly positive feedback on product quality and user satisfaction. In mid-July, we officially launched. The response exceeded our expectations, growing faster than initially projected.

In terms of resources, initial demands were minimal. As the project grew, Miao Ya received increasing support. Most importantly, the company’s culture of tolerance and encouragement toward innovation allowed us to experiment freely.

Q: Why did you choose realistic portraits as the application area for AIGC?

A: I did some basic analysis on product direction. Previously, I worked on photo album products where we offered algorithm-based automatic classification. In users’ albums, over 70% of images are photos of real people. If you consider all types of visual content without filtering, realistic human portraits are the most valuable, most prevalent, and most widely viewed category.

Another factor: overseas products like Midjourney have succeeded, and some domestic players tried replicating them. But these models aim to draw anything—an artist who can paint everything—which requires extremely high technical and algorithmic capabilities. A more vertical focus is easier to execute well, which is why we chose realistic portraits.

Q: Many users say after trying Miao Ya, “For just 9.9 RMB, it beats chains like Tianzhenlan and Haimati.” What do you think?

A: We never intended to replace Tianzhenlan or Haimati; that’s just a slogan users came up with. Our initial product format may resemble traditional photography services, but from our perspective, we hope to collaborate with and empower the photography industry. Physical studios like Haimati cannot be replaced, because the experience of going to a studio and taking photos together holds deep emotional value. For example, gathering the whole family for a portrait session is meaningful in itself. No matter how advanced AI becomes, it can’t replicate that shared moment.

Miao Ya Camera homepage

We want to reach underserved cities and less-developed regions where access to professional photography services is limited. Yet everywhere, people desire beautiful, memorable photos of themselves. That need exists universally.

As mentioned in our public thank-you letter, we invited photographers and designers to help create templates—their input enhanced our AI tools in magical ways. We see ourselves as complementary, not competitive—to offer tools to the ecosystem, not eliminate rivals.

Q: Looking back, why do you think Miao Ya went viral?

A: First and foremost, the team’s determination and hard work. Our culture is simple, direct, goal-oriented, and obsessed with excellence. We spent over three months perfecting the output to deliver superior user experience. Second, the company’s encouragement, support, and tolerance of innovation—plus additional backing once early results were shown—were crucial.

If analyzing the product itself, there are a few factors.

First, we got lucky. Second, user experience is paramount. Even if a product has high intrinsic value, poor usability will cause users to abandon it. Commercial value isn’t linear—it only materializes after surpassing a certain threshold. So when designing the product, we aimed for top-tier quality—ideally scoring above 90 points. When the result delivers a genuine “Wow” moment—when everyone on the team says, “This looks amazing”—the chances of virality skyrocket.

Also critical is controllability. During design, we adhered strictly to three principles: “True,” “Likeness,” and “Beauty.” “True” means no artificial AI look; “Likeness” means resembling the user; “Beauty” means being about 30% more attractive than the original. Only when all three hit above 90-point quality benchmarks did we release the product.

Q: Why set the number of uploaded photos at 20?

A: To achieve optimal results, 20 photos represent the current sweet spot. Specifics aren’t suitable for disclosure.

Miao Ya Camera requires users to upload 20 or more photos | Screenshot from Miao Ya

Q: Some analysts argue that trendy tool apps face replication risks. People speculate your model is fine-tuned open-source tech, suggesting low technical barriers. What are your actual technical or product moats?

A: We can’t disclose implementation specifics, but yes, we do have certain barriers. Most important is continuous iteration, expansion, and refinement of output quality. Our tech team constantly optimizes performance.

Q: Some guess you used Stable Diffusion with LoRA fine-tuning. Is that accurate?

A: Again, we can’t comment. Anything related to technical implementation falls under trade secrets and cannot be disclosed.

Q: Did you train your own base model for image generation? Are you using Alibaba Cloud’s image models?

A: Miao Ya is an internal project of Alibaba’s UCWeb Entertainment team. We are not using Alibaba Cloud’s relevant technologies. As for exactly how it works—I can’t elaborate.

We have a model called “Tiziano,” which many likely saw in our official thank-you letter released on July 17—named after the father of portraiture. This name reflects our original intent: delivering realistic portrait services from day one.

Q: How are Miao Ya’s current templates developed?

A: Our team includes many young members who discuss ideas efficiently. More importantly, we observe user preferences—what styles gain traction on social media and WeChat Moments.

We collect extensive user feedback—on preferred templates—and use that to guide new releases. Upcoming templates are largely driven by user demand.

Q: How does Miao Ya balance realism and beauty in outputs?

A: Seven or eight parts likeness, two or three parts enhancement. When defining our realistic portrait direction, we emphasized “True,” “Likeness,” and “Beauty”—no AI artifacts, maximally resembling the user, yet slightly improved. Whether it’s beauty filters or past generations of photo apps, this is standard practice.

We also give control back to users. Initially, the generated portrait might look very beautiful but less like you. We have a special feature allowing users to make it look more similar—with each click, it gets closer to your real appearance.

Q: Will Miao Ya always target young women exclusively?

A: Female users dominate nearly all selfie and image-centric products. Just look at the number of templates available—we clearly cater primarily to them.

02 Privacy and Data Security Controversies

Q: With Alibaba’s rich film and entertainment resources, do you plan dynamic face-swapping features—e.g., inserting verified faces into movie scenes?

A: We don’t prioritize integration with Alibaba’s entertainment assets, though if suitable opportunities arise—such as collaborations involving period dramas, ancient styles, or Qing dynasty themes—we’d consider exploring user interests in those directions.

Face swapping itself is something we firmly avoid. From the outset, I defined this product differently from earlier face-swap apps, and we have deliberately distanced ourselves from that category.

Q: What’s your view on the development of face-swap products in general?

A: Face-swapping is a fairly mature product category—we won’t judge its use cases. However, it does pose certain security risks.

The photos we generate via AI are inherently less realistic than direct face swaps. At minimum, they won’t pass strict facial landmark detection tests—they merely appear similar. Our method ensures user data safety and relatively secure generation, enabling longer-term sustainable growth.

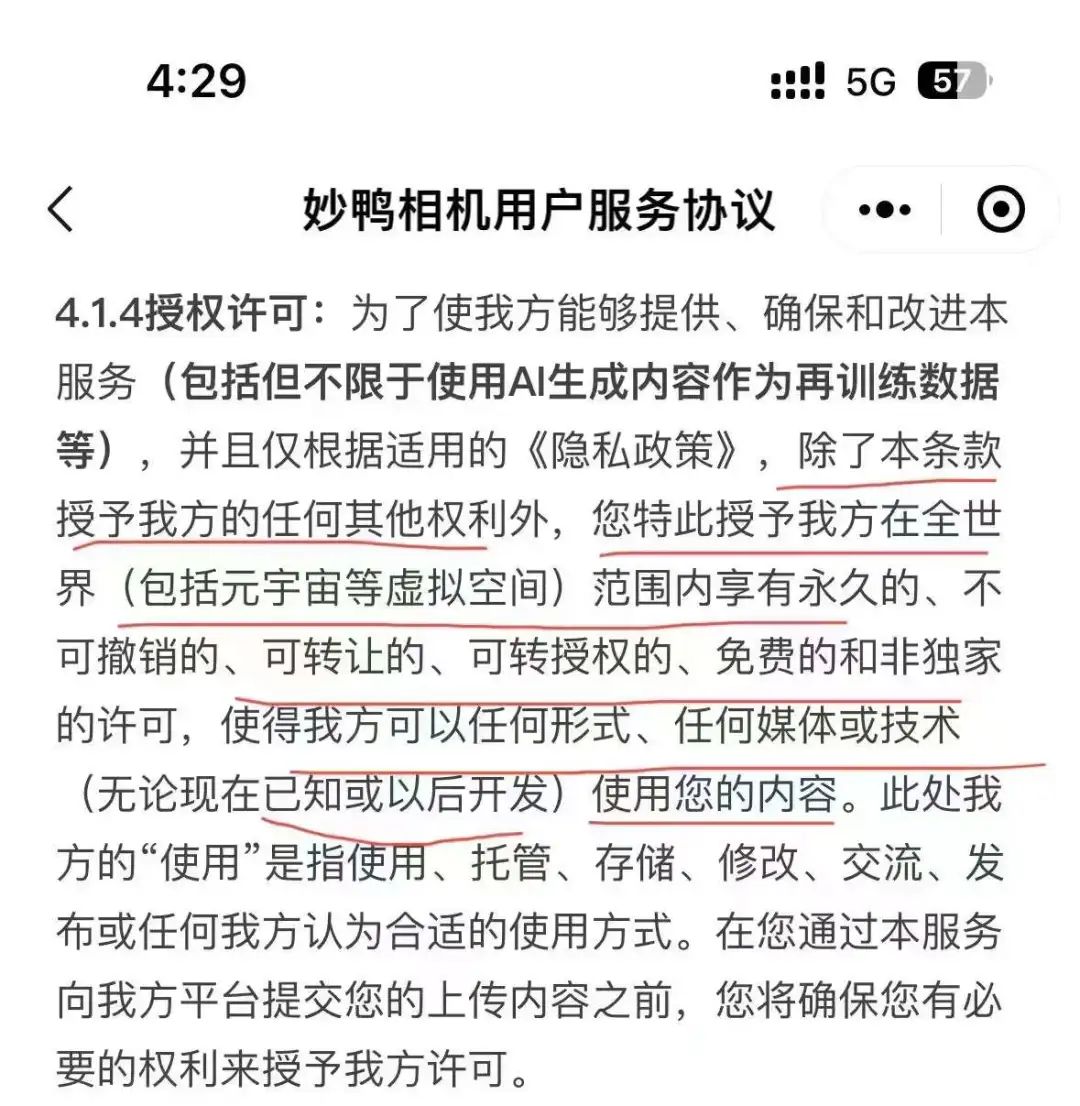

Q: How do you respond to criticism over your privacy policy?

A: Miao Ya sparked controversy due to wording in our initial user agreement. We revised it immediately and acknowledge this was our fault. In hindsight, we didn’t explain the terms clearly enough or present them in the simplest way possible.

Even under the old agreement, careful reading shows it was fundamentally similar to the new version: all generated content belongs entirely to users, and we cannot reuse it. However, to provide core services—displaying, sharing, downloading—we must obtain user authorization to operate on their content. That was the original intent, though phrasing caused confusion, so we updated it.

Our intention has always been: everything created belongs solely to the user. We retain nothing. We don’t store or use your data. If we show your work somewhere, it’s only to serve you—that’s our principle.

Q: Using Miao Ya requires uploading 20 photos. You claim post-generation deletion of digital avatars, yet earlier versions mentioned using data for training. Why?

A: First, we never used user data for training—never have, never will. The original clause frightened many people.

Original controversial Miao Ya Camera user agreement

Let me clarify again: the preceding clause stated all generated content belongs to users. Precisely because we fully transfer ownership, we needed explicit permission to display or process that content.

If you review other platforms’ agreements, many don’t state that user-created content belongs to users—they’re vague or even claim ownership. If the platform owns it, obviously no extra consent is needed.

This misstep was ours—we expressed good intentions in a way that terrified users. Let me reiterate: our team never considered collecting or abusing private user data. Once we realized the language wasn’t user-friendly and caused misunderstanding, we changed it instantly—without hesitation—because we weren’t doing anything wrong in the first place.

Q: Are facial features extracted or leaked?

A: We do not extract facial landmarks—there’s no such operation. During upload, there is a verification step mainly for safety checks—to ensure photos comply with national regulations—but we do not collect facial data points.

Second, creating a digital twin isn’t about extracting facial traits. Conversely, we cannot reverse-engineer your original 20 photos from the avatar—it’s technically impossible. Though I can’t reveal technical details, rest assured: no extraction of facial information occurs.

That’s why I said earlier: posting your real face on social media or WeChat Moments carries higher risk than using our generative tool—if you're concerned about security.

Q: If you don’t extract facial data, how do you achieve current results?

A: We use modern AI techniques, but definitely not through facial landmark extraction. Industry experts may speculate various technical paths—I won’t confirm any—but I assure you: no security risks involved. Everything in AI is probabilistic; it cannot reconstruct or deduce your original photos. So it’s very safe.

Q: What specific measures has Miao Ya taken to protect user privacy?

A: Our agreement commits to not retaining user photo data. All uploaded images—whether 20 or more—are deleted upon completion of the digital twin. Thus, we neither keep raw data nor can we reverse-calculate it from the avatar.

Second, we never share your digital twin or any data with third parties. Each user sees only their own creations and chooses whether to share externally.

Third, we’ve implemented robust cybersecurity protections. Even though we protect user privacy internally, we must guard against external hackers—this benefits greatly from Alibaba’s overall expertise in security and data protection.

Please don’t panic excessively. Fundamentally, this is a photo-editing tool. Outputs are visible only to you and lack inherent public-sharing mechanisms—making it actually safer. Some ask: “Can AI now generate my face—could it be misused?” But realistically, no matter how convincing, it only resembles you. Whereas if someone posts your real photo publicly, and someone cuts out your face, that’s far more dangerous. Our generated images are far less risky.

Overall, this product poses negligible risk to users. Data isn’t stored and cannot be reverse-calculated; original facial features aren’t captured; all outputs are private; sharing decisions belong to users; and shared images can’t pass facial verification systems because they aren’t exact replicas.

03 Day One of AIGC: If You Don’t Charge, You’ll Never Get Paid

Q: What’s the current app download volume and user retention like?

A: Specific metrics are confidential and cannot be shared. But we believe the data is solid and meets expectations. During the two-week beta, around 10,000 users joined—core users mostly from our colleague network, primarily internet professionals.

We have plans for future user scale, but can’t disclose details. Our goal is serving broader audiences to meet evolving market needs.

Q: How do you plan to maintain user engagement? There are concerns this app could fade quickly. Your thoughts?

A: Viral effects apps are common in this industry. Every few years, or even annually when internet trends peak, similar products emerge. Some capture attention briefly and then vanish; others endure. Take “Lian Meng,” which many assumed had disappeared after its viral moment. In reality, its functionality was absorbed into TikTok filters and effects, where it serves a stable, ongoing need and is still used daily by millions.

With Miao Ya, we aim beyond fleeting fame—to become a reliable, enduring service. That requires continuously improving quality and expanding offerings. Exact methods can’t be shared, but our mission is clear: low-cost, high-quality image services for everyone.

Q: Miao Ya’s B2B workstation launches today (August 4), inviting select AI-savvy designers for beta testing. Does it include community features? How will you collaborate with photographers? Which designers are targeted? What pain points does it solve?

A: The B2B workstation has no community aspect. Our current B2B offering is simple: essentially an ecosystem extension of our consumer-facing service. We hope to partner with AI-interested designers and photographers, providing tools and co-creating templates to better serve users. Specific collaboration models can’t be revealed, but essentially it comes down to joint template creation.

Q: Why charge 9.9 RMB? Why commercialize from day one?

Benefits included in 9.9 RMB | Screenshot from Miao Ya

A: Two factors drive monetization potential. First, compute costs are high—a known industry challenge. Second, AIGC’s business logic differs fundamentally from the internet era. In the AIGC era, if you don’t charge users on day one, you’ll never get paid. Good AI products must start charging immediately.

Abstractly, all internet businesses revolve around information flow and distribution channels. Platforms move info from point A to B—regardless of content type, it’s essentially a channel. Initially free, they later add commissions or secondary monetization.

But AI doesn’t change the channel—it changes the factory. AI products *are* factories, not distributors. If a factory can’t sell its goods on day one, it never will. That’s why I believe strong AI products hold great commercial promise.

We chose 9.9 RMB after extensive calculations—to establish a simple, intuitive price perception. Based on organic user sharing patterns, this price point proves easily acceptable and understandable.

Q: After going viral, wait times were long. Now reduced, but still 3–4 hours. What optimizations were made? At what cost?

A: The shorter wait times came from massively scaling up compute. But as we expand capacity, user demand keeps growing, so queues persist. The fundamental fix is more compute. Scaling costs are manageable, and our pricing took multiple factors into account. I’ve spoken with other teams who say that building a product like this involves invisible hurdles they can’t overcome. They could build something that looks similar on the surface, but they hesitate: too many uploads would mean prohibitively high compute costs, which makes the economics unworkable. Here, the company gave Miao Ya tremendous room and support. Combined with monetization, we’re in a relatively healthy position.

Q: Beyond 9.9 RMB, will B2B introduce other pricing models?

A: Stay tuned; we can’t disclose specifics. But naturally, we follow basic business logic: revenue, cost, profit. Profitability hasn’t been calculated yet, but I believe it’s a relatively healthy business model.

Q: At what point in the realistic portrait space would you feel satisfied enough to expand into other areas?

A: I think we’re just getting started. The road ahead is long.
