a16z: AI Can't Escape Ads Either, and Enormous Monetization Pressure Is the Reason


Advertising is the best way to bring internet services to as many consumers as possible.

Author: Bryan Kim

Translated by TechFlow

TechFlow Intro: The internet is a universal-access miracle that opens doors to opportunity, exploration, and connection. And advertising pays for this miracle. Bryan Kim, a partner at a16z, notes that OpenAI’s announcement last month of plans to introduce ads for free users may be the “biggest non-news of 2026 so far.”

Because if you’ve been paying attention, the signs have been everywhere. Advertising is the best way to bring internet services to as many consumers as possible.

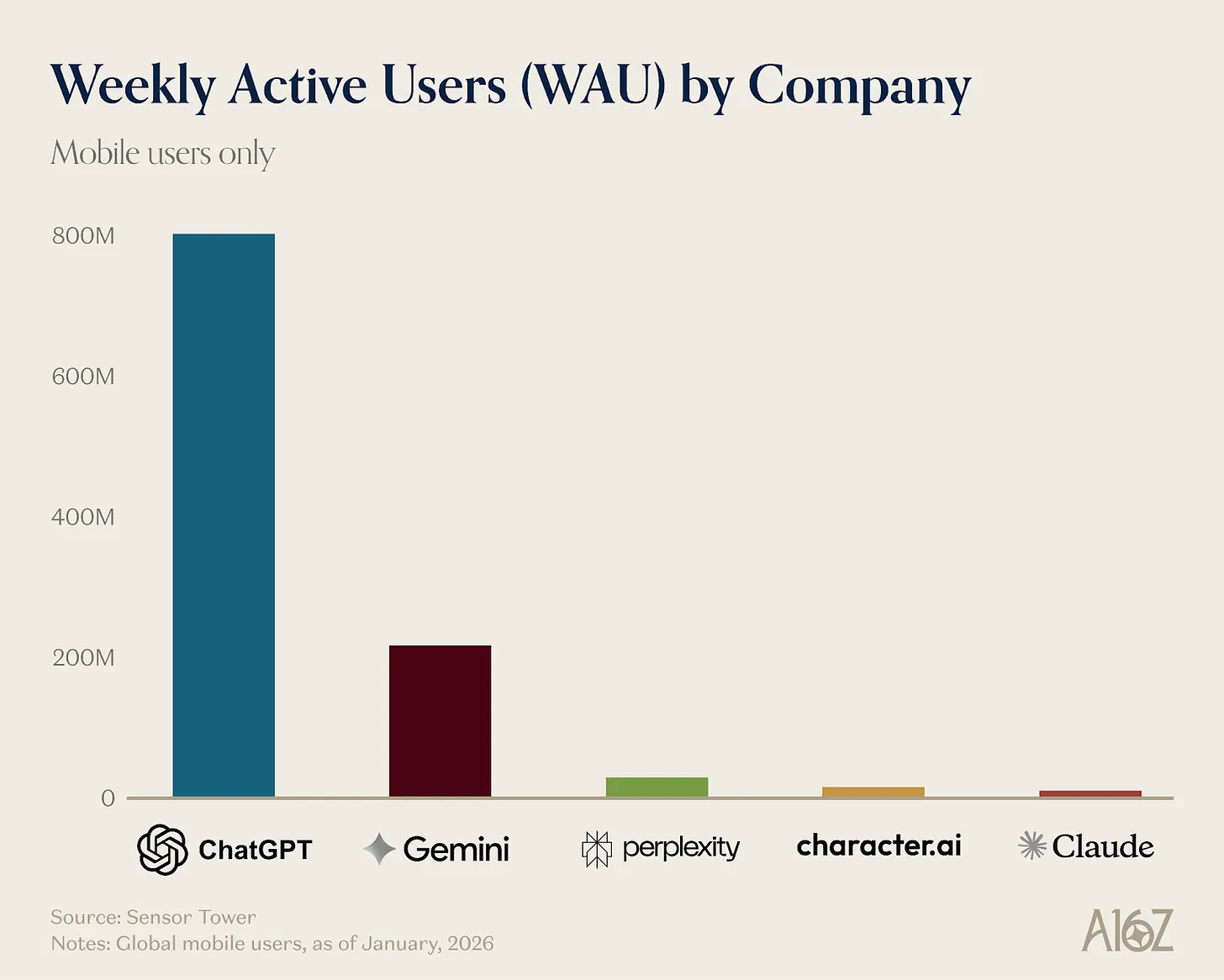

Data shows conversion rates for consumer AI subscription companies are uniformly low (5–10%). Most people use AI for personal productivity tasks such as writing emails and searching for information, not high-value pursuits like programming. Even just 5–10% of 800M weekly active users (WAU) translates to 40–80M paying users, but reaching one billion users requires advertising.

Full Text Below:

The internet is a universal-access miracle that opens doors to opportunity, exploration, and connection. And advertising pays for this miracle. As Marc has long argued, “If you take a principled stance against advertising, you’re also taking a stance against broad access.” Advertising is why we get great things.

So OpenAI’s announcement last month of plans to introduce ads for free users may be the “biggest non-news of 2026 so far.” Because of course, if you’ve been paying attention, the signs have been everywhere. Fidji Simo joined OpenAI in 2025 as CEO of Applications—a move many interpreted as “implementing ads, just as she did at Facebook and Instacart.” Sam Altman has been previewing ad rollouts on business podcasts. Tech analysts like Ben Thompson have predicted ads almost since ChatGPT’s launch.

But the main reason ads aren’t surprising is that they’re the best way to bring internet services to as many consumers as possible.

The Long Tail of LLM Users

“Luxury beliefs,” a term that gained traction a few years ago, refers to adopting positions not out of genuine principle but for optics. Tech has plenty of such examples, especially around advertising. Despite all the moral hand-wringing over buzzwords like “selling data!” or “tracking!” or “attention harvesting,” the internet has always run on advertising, and most people prefer it this way. Internet advertising created one of history’s greatest “public goods” at a trivial cost: occasionally sitting through ads for cat sleeping bags or hydroponic living rooms. Those who pretend this is bad are usually trying to prove something to you.

Any internet history buff knows advertising is central to platform monetization: Google, Facebook, Instagram, and TikTok all launched free, then monetized via targeted ads. Advertising can also supplement low-ARPU (average revenue per user) subscription tiers, as Netflix did when its newer $8/month option brought ads to the platform. Advertising has done an excellent job of training people to expect most things online to be free, or nearly so.

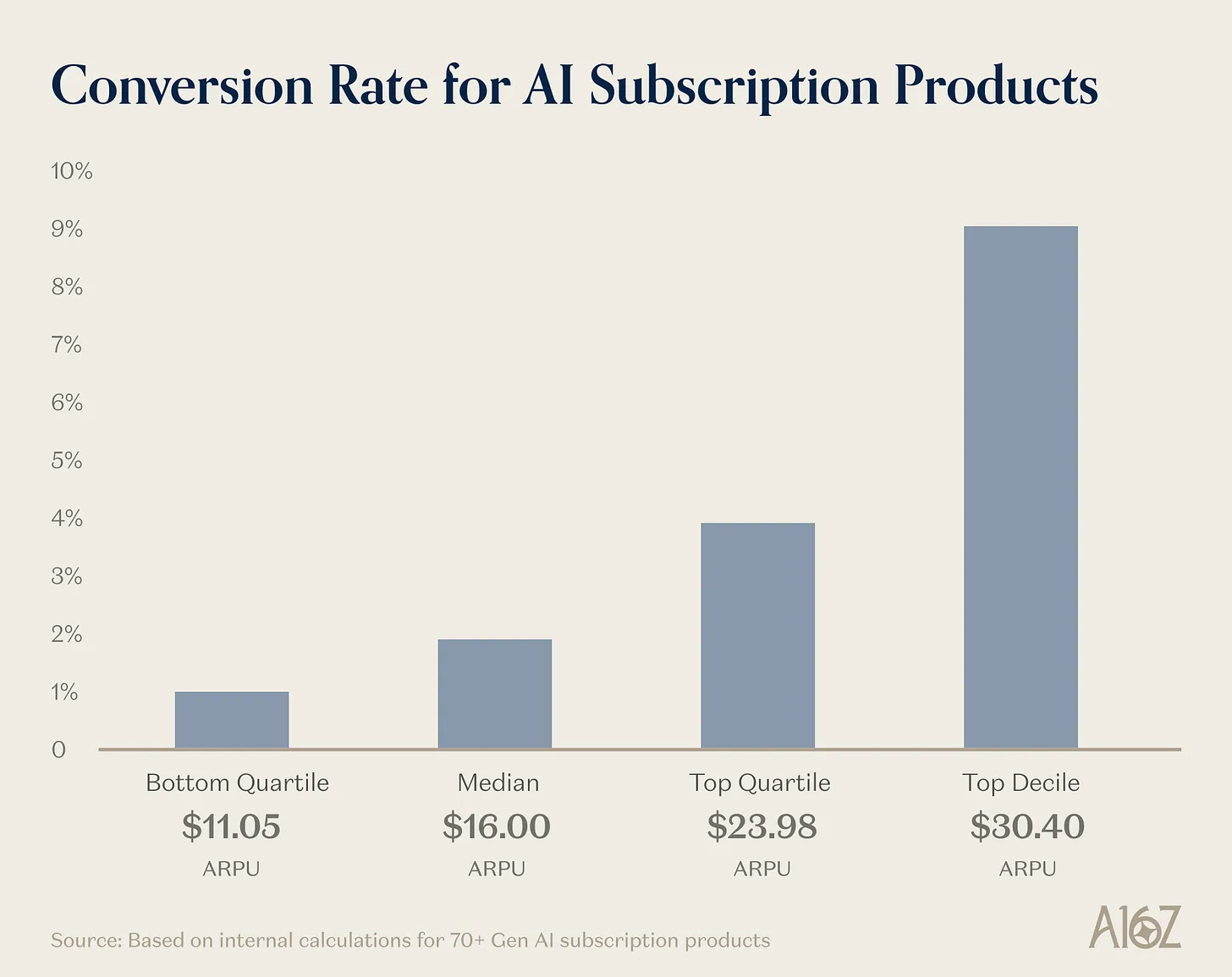

We now see this pattern emerging among frontier labs, specialized model companies, and smaller consumer AI startups. From our survey of consumer AI subscription companies, we see subscription conversion remains a real challenge across the board:

So what’s the solution? As we know from past consumer success stories, advertising is typically the best way to scale a service to billions of users.

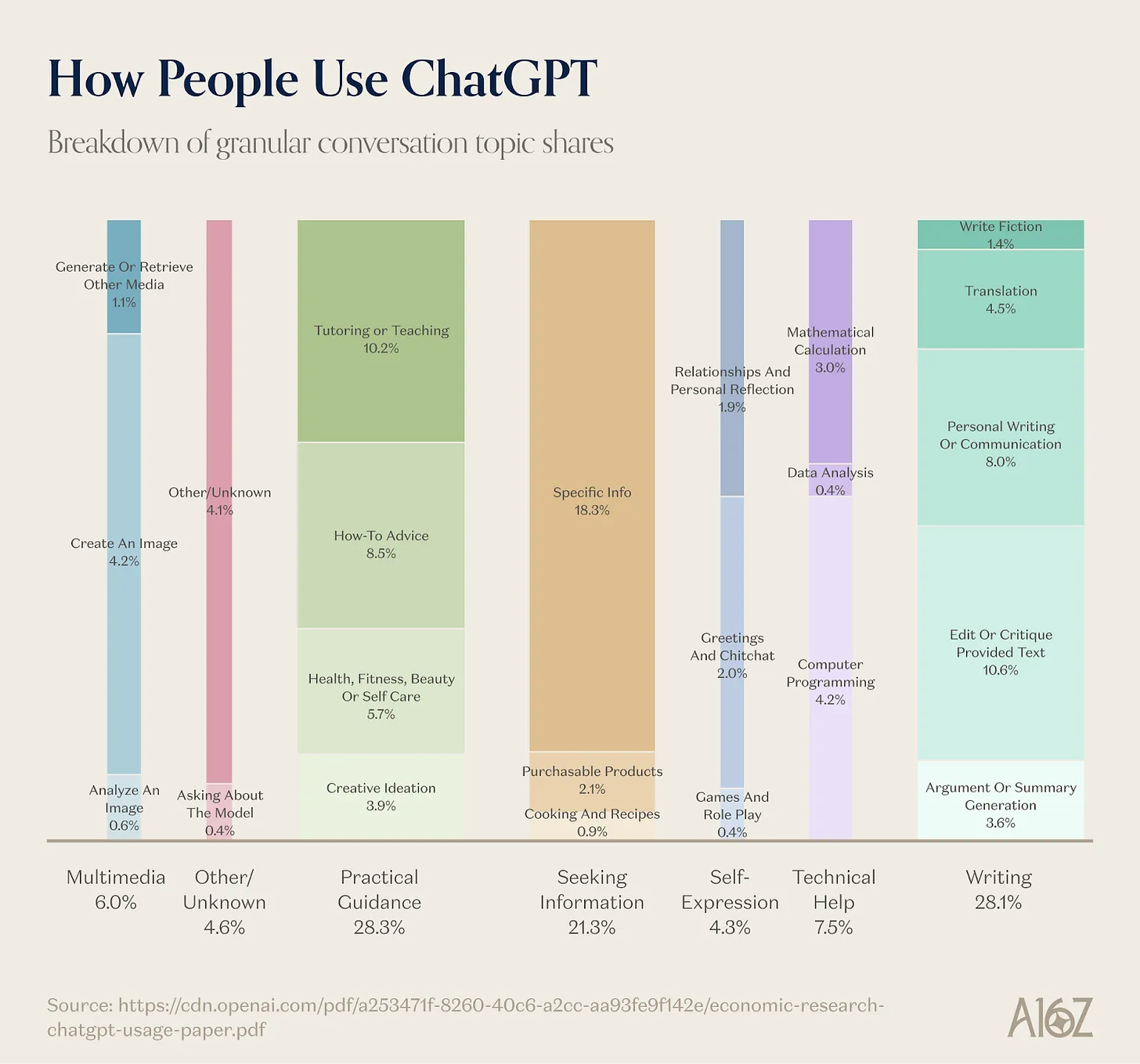

To understand why most people won’t pay for AI subscriptions, it helps to understand what people actually do with AI. Last year, OpenAI released data on this:

In short, most people use AI for personal productivity: writing emails, searching for information, tutoring, advice, etc. Meanwhile, high-value uses—like programming—account for only a tiny fraction of total queries. Anecdotally, we know programmers are among LLMs’ most loyal users; some even adjust their sleep schedules to optimize daily usage limits. For them, a $20 or $200 monthly subscription doesn’t feel excessive—the value they get (equivalent to a team of highly efficient SWE interns) may exceed the subscription fee by orders of magnitude.

But for users who turn to LLMs for general queries, advice, or even writing assistance, the price of a subscription is hard to justify. Why pay for answers to “Why is the sky blue?” or “What caused the Peloponnesian War?” when Google Search used to deliver perfectly adequate answers for free? Even writing assistance (some users do rely on it for email drafting and routine tasks) rarely handles enough of a person’s work to justify a personal subscription. Moreover, most people simply don’t need advanced models or features: you don’t need the best reasoning model to write an email or suggest recipes.

Let’s step back and acknowledge a few things. The absolute number of people paying for products like ChatGPT remains enormous: 5–10% of 800M weekly active users (WAU) is 40–80 million people! Notably, the Pro tier’s $200 price point is ten times what we consider the upper limit for consumer software subscriptions. But if you want ChatGPT to reach one billion users (and beyond) for free, you’ll need to introduce something beyond subscriptions.
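The arithmetic behind those figures is easy to check. The sketch below uses only the numbers quoted above (800M WAU, 5–10% conversion, a $200 Pro tier). The $20 ceiling is an assumption inferred from the "ten times" remark and the $20 plan mentioned earlier, not a figure the article states directly.

```python
# Figures quoted in the article: 800M weekly active users, 5-10% conversion.
WAU = 800_000_000
CONVERSION_PCT_RANGE = (5, 10)  # percent of users who pay

def paying_users(wau: int, conversion_pct: int) -> int:
    """Paying users at a given whole-number conversion percentage."""
    return wau * conversion_pct // 100

low, high = (paying_users(WAU, pct) for pct in CONVERSION_PCT_RANGE)

# Users who stay on the free tier and are not monetized by subscriptions.
free_low, free_high = WAU - high, WAU - low

# Assumption: $20/month is the consumer ceiling implied by "ten times" $200.
PRO_PRICE_USD, ASSUMED_CEILING_USD = 200, 20
price_multiple = PRO_PRICE_USD // ASSUMED_CEILING_USD

print(f"Paying users: {low:,} to {high:,}")
print(f"Free users:   {free_low:,} to {free_high:,}")
print(f"Pro price is {price_multiple}x the assumed ceiling")
```

Even at the top of the conversion range, at least nine in ten weekly users generate no subscription revenue, which is the gap advertising is meant to fill.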

The good news? People actually do like ads! Ask an average Instagram user—they’ll likely tell you the ads they see are genuinely useful: they discover products they truly want and need, and make purchases that meaningfully improve their lives. Characterizing ads as exploitative or intrusive is regressive: maybe we feel that way about TV ads, but most of the time, targeted ads are actually quite good content.

I’m using OpenAI here as an example (they’ve been one of the most candid labs about usage trends). But this logic applies to all frontier labs: if they want to scale to billions of users, they’ll ultimately need to introduce some form of advertising. Consumer monetization models remain unsolved in AI. In the next section, I’ll outline some approaches.

Possible AI Monetization Models

My general rule of thumb in consumer app development is that you need at least 10 million WAU before introducing ads. Many AI labs have already crossed that threshold.

We already know ad units are coming to ChatGPT. What might they look like—and what other ad and monetization models could work for LLMs?

1. Higher-Value Search and Intent-Based Ads: OpenAI has confirmed that these ads (for example, ingredients for a recipe or hotel recommendations for a trip) will soon roll out to free and low-cost tiers. These ads will be visually distinct from ChatGPT’s answers and clearly labeled as sponsored.

Over time, ads may begin to feel more like prompts: you express intent to buy something, and an agent fulfills your request end-to-end—selecting from both sponsored and non-sponsored options. In many ways, these ads resemble the earliest ad units from the 1990s and 2000s—and the sponsored search ads Google perfected (notably, Google still derives the vast majority of its revenue from advertising, and didn’t launch subscriptions until over 15 years into its history).

2. Instagram-Style Contextual Ads: Ben Thompson argues that OpenAI should have introduced ads into ChatGPT responses earlier. First, it would have acclimated non-paying users to ads sooner (while ChatGPT still held a real lead over Gemini in capabilities). Second, it would have given OpenAI a head start on building truly outstanding ad products: ones that predict what you want, rather than opportunistically serving ads based on intent-driven queries. Instagram and TikTok deliver astonishing ad experiences, showing you products you never knew you wanted but instantly need, and many users find them helpful, not intrusive.

Given the volume of personal information and memory OpenAI holds, there’s ample opportunity to build a similar ad product for ChatGPT. Of course, user experience differs between apps: Can you translate Instagram or TikTok’s more “lean-back” ad experience into ChatGPT’s more engaged, interactive model? That’s a much harder—and far more lucrative—question.

3. Affiliate Commerce: Last year, OpenAI announced partnerships with marketplace platforms and individual retailers to launch instant checkout, letting users purchase directly within chats. You could imagine this evolving into its own dedicated shopping vertical, where agents proactively hunt for clothing, home goods, or rare items you’re tracking—earning revenue share for each sale facilitated through the model provider’s marketplace.

4. Gaming: Gaming is often overlooked—or obscured—as its own ad unit. We’re unsure how games fit into ChatGPT’s ad strategy, but they deserve mention here. App-install ads (many of which are mobile games) have long been a major driver of Facebook’s ad growth. Games are so profitable that it’s easy to imagine significant ad budgets flowing here.

5. Goal-Based Bidding: This one’s intriguing for fans of auction algorithms—or former blockchain gas-fee optimizers pivoting to LLMs. What if you could set bounties for specific queries (e.g., $10 for a Noe Valley real estate alert) and let the model allocate massive compute toward delivering precise results? You’d achieve perfect price discrimination based on the “value” you assign to a query—and gain stronger guarantees of high-quality chain-of-thought reasoning for searches that matter most to you.

Poke is one of the best existing examples: users must explicitly negotiate their subscription with the chatbot (admittedly, this doesn’t map cleanly to compute costs, but it’s still an interesting illustration of what’s possible). In some ways, a version of this already exists: both Cursor and ChatGPT use routers that select models based on inferred query complexity. But even when you pick a model from a dropdown, you can’t control the underlying compute allocated to your question. For highly active users, being able to specify, in dollar terms, how valuable a question is to them could be highly attractive.

6. Subscriptions for AI Entertainment & Companionship: Two main use cases show AI users’ willingness to pay: coding and companionship. CharacterAI boasts one of the highest WAU counts among non-lab AI companies—and charges a $9.99 subscription, offering a hybrid of companionship and entertainment. Yet even though people do pay for companion apps, we haven’t yet seen companion products cross the threshold where they can reliably monetize via ads.

7. Token-Based Usage Pricing: In AI creative tools and coding, token-based pricing is another common monetization model. It’s become an attractive pricing mechanism for companies with power users—letting them tier and charge more based on usage.
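To make the goal-based bidding idea from item 5 more concrete, here is a minimal sketch of how a user-set bounty might override a complexity-based router. Everything in it is hypothetical: the tier names, bounty thresholds, token budgets, and complexity cutoffs are illustrative assumptions, and it does not describe how ChatGPT, Cursor, or Poke actually route queries.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Tier:
    name: str
    min_bounty_usd: float         # hypothetical price to unlock this tier
    reasoning_budget_tokens: int  # hypothetical compute allowance

# Hypothetical tiers, sorted from least to most compute.
TIERS = [
    Tier("fast", 0.00, 1_000),
    Tier("standard", 0.50, 10_000),
    Tier("deep", 5.00, 200_000),
]

def route_by_complexity(complexity: float) -> Tier:
    """Router-style default: pick a tier from an inferred score in [0, 1]."""
    if complexity < 0.3:
        return TIERS[0]
    if complexity < 0.7:
        return TIERS[1]
    return TIERS[2]

def route_with_bounty(complexity: float, bounty_usd: float) -> Tier:
    """Let a bounty raise (never lower) the tier the router would pick."""
    if bounty_usd < 0:
        raise ValueError("bounty must be non-negative")
    default = route_by_complexity(complexity)
    # Highest tier the bounty pays for; "fast" costs 0, so this is never empty.
    paid = [t for t in TIERS if t.min_bounty_usd <= bounty_usd][-1]
    return max(default, paid, key=lambda t: t.reasoning_budget_tokens)

# A simple-looking query with a $10 bounty still gets the deepest tier.
print(route_with_bounty(complexity=0.1, bounty_usd=10.0).name)
```

The design choice here is that the bounty acts as a floor on compute, not a replacement for the router: a hard question with no bounty still gets whatever the router would have chosen.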

Monetization remains an unsolved problem in AI, and most users are still enjoying the free tiers of their preferred LLMs. But that’s temporary: internet history tells us ads will find a way.
