a16z’s Dual-Edged Game: Silicon Valley’s Top VC Betting on Fraud, Gambling, and AI Chaos?

a16z is leveraging its political influence to pave the way for startups whose core business model centers on “deceiving and harming consumers”—before society becomes aware of the risks.

Author: Tyler Johnston

Translation & Editing: TechFlow

TechFlow Intro: This article exposes the contradictory AI strategy of top-tier venture capital firm Andreessen Horowitz (a16z).

On one hand, a16z has spent tens of millions of dollars, through super PACs, lobbying groups, and government connections, to shape U.S. AI regulatory policy and push aggressively for deregulation. On the other, its portfolio is full of startups operating in ethical gray zones or crossing moral lines outright, from AI assistants that help users cheat on dates and in job interviews to bot farms that use “phone farms” to run large-scale fraudulent marketing.

The article sharply points out that a16z is leveraging its political influence to pave the way for early-stage companies whose core business model centers on “deception and consumer harm”—before society fully grasps the risks.

Full Text Below:

Marc Andreessen wants to shape U.S. AI policy.

The venture capital firm he co-founded and runs—Andreessen Horowitz (commonly known as “a16z”)—is a major player in the emerging tech startup ecosystem. Among its portfolio companies are:

- A bot farm composed of fake accounts designed to deceive users and social media platforms into believing AI-generated ads are posted by real people.

- An AI company dedicated to normalizing AI-assisted cheating in dating, job interviews, and exams.

- An AI companion app linked to suicide and child-targeted harassment.

- A platform hosting thousands of deepfake models—96% of which target identifiable women—and already used to create AI-generated sexually explicit content involving children.

- A gambling platform attempting to circumvent existing laws and targeting vulnerable users.

- A fintech company implicated in fraud and illegal activities.

Many of these companies knowingly violate rules—or deliberately design products to exploit loopholes in consumer protection laws. Venture capital firms profit handsomely, while the public bears the cost.

As public demand grows for curbing Big Tech and regulating AI, a16z is spending tens of millions of dollars to influence the development of AI policy. The firm helped launch a $100 million super PAC; its former partners now hold key government positions; and it successfully pushed through an executive order aimed at weakening state-level AI laws. Its partners want to write the rules—even while they themselves drive recklessly.

Below is The Midas Project’s investigation into 18 of a16z’s most notorious portfolio companies. This is not a comprehensive review of its vast investment portfolio—but it reveals a pattern of behavior spanning hundreds of millions of dollars in a16z investments.

These investments expose the lines a16z is willing to cross—and how its preferred light-touch regulatory environment maximizes the firm’s profits.

a16z did not respond to requests for comment on this report.

Deception and Manipulation

a16z has invested in products explicitly designed for large-scale deception. Even if such tactics do not clearly violate the law, they may corrode societal trust.

As AI makes it easier than ever to fake almost anything, policymakers may want to write new laws or policies to reduce the costs to society. If a16z gets its way, the rulebook may never be updated.

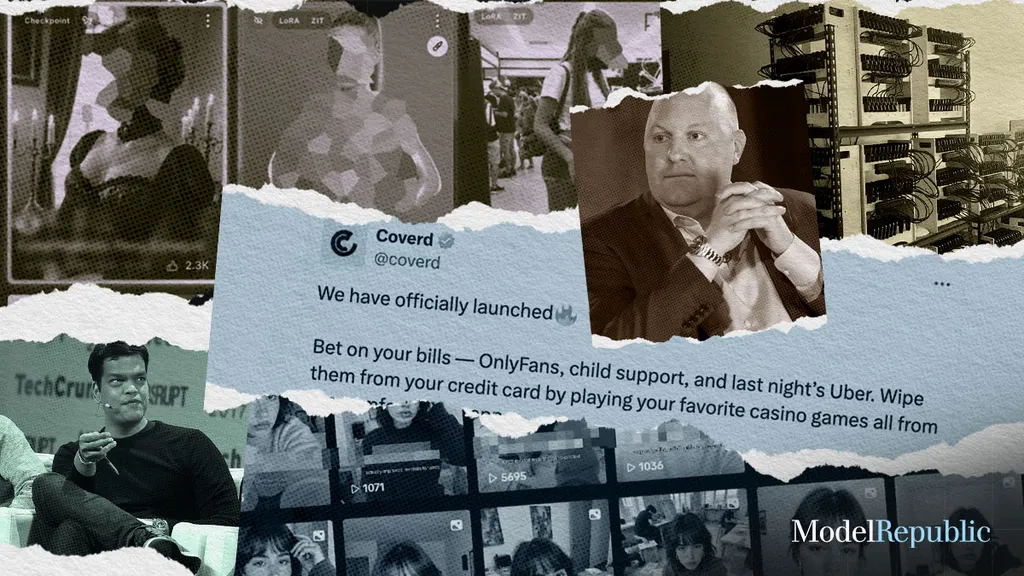

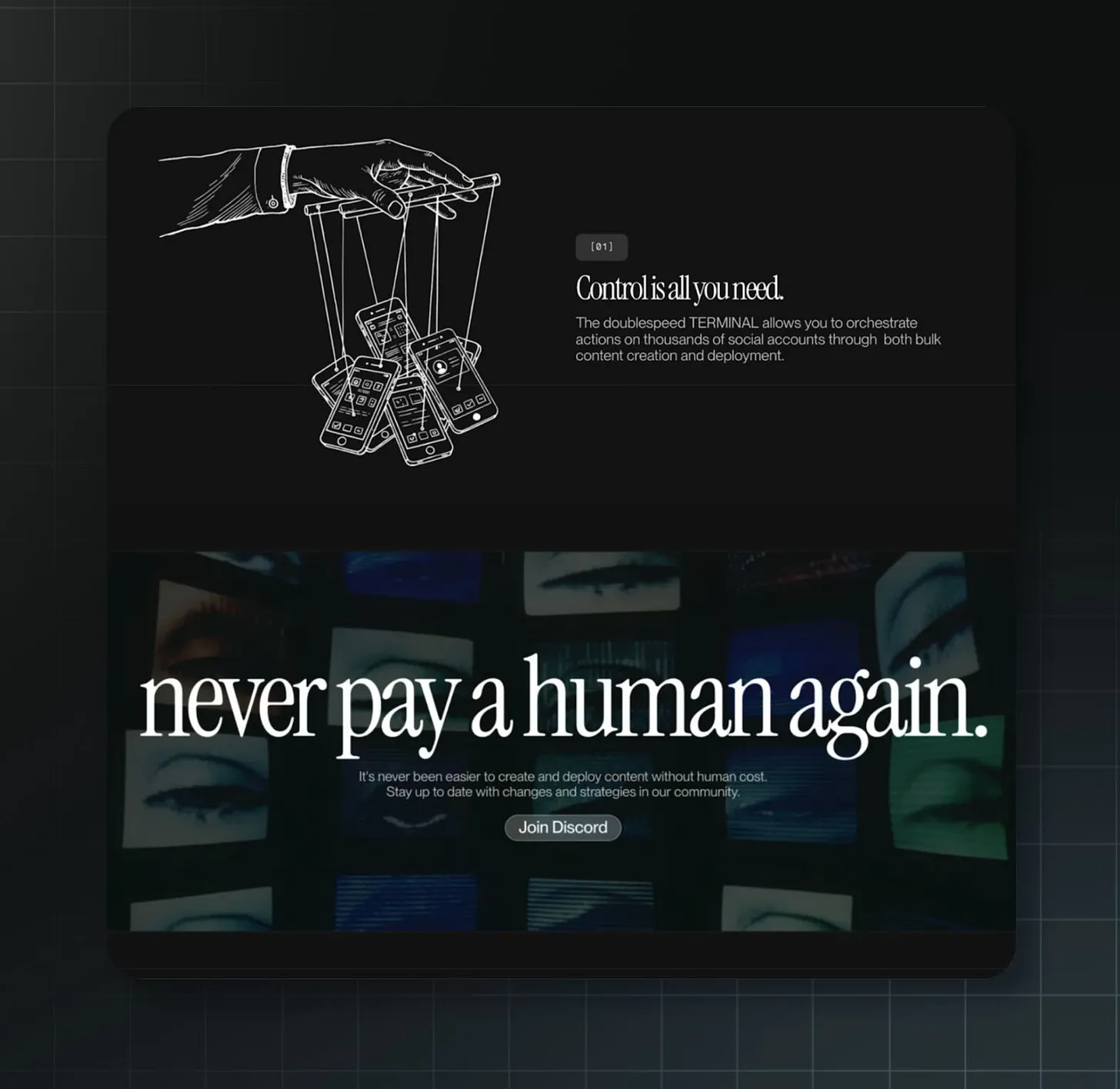

Doublespeed

a16z invested $1 million in October 2025 through its Speedrun program.

Doublespeed sells the ability to deceive ordinary people and social media platforms into believing AI-generated ads are authentic human content. Here are some selected lines from the company’s promotional video:

“We operate the only VC-backed bot farm in the U.S. Why should Russia and China have all the fun?”

“We’re not breaking the internet. The internet was broken to begin with. Now, we’re going to finish it.”

“Welcome to the Dead Internet.”

Doublespeed was recently spotlighted in a scathing 404 Media report, which stated: “Andreessen Horowitz is funding a company that clearly violates the ‘inauthentic behavior’ policies of major social media platforms.”

Source: Doublespeed’s website.

The company’s business model relies on deception—aiming to convince social media platforms and their users that AI-generated images and videos depict real people.

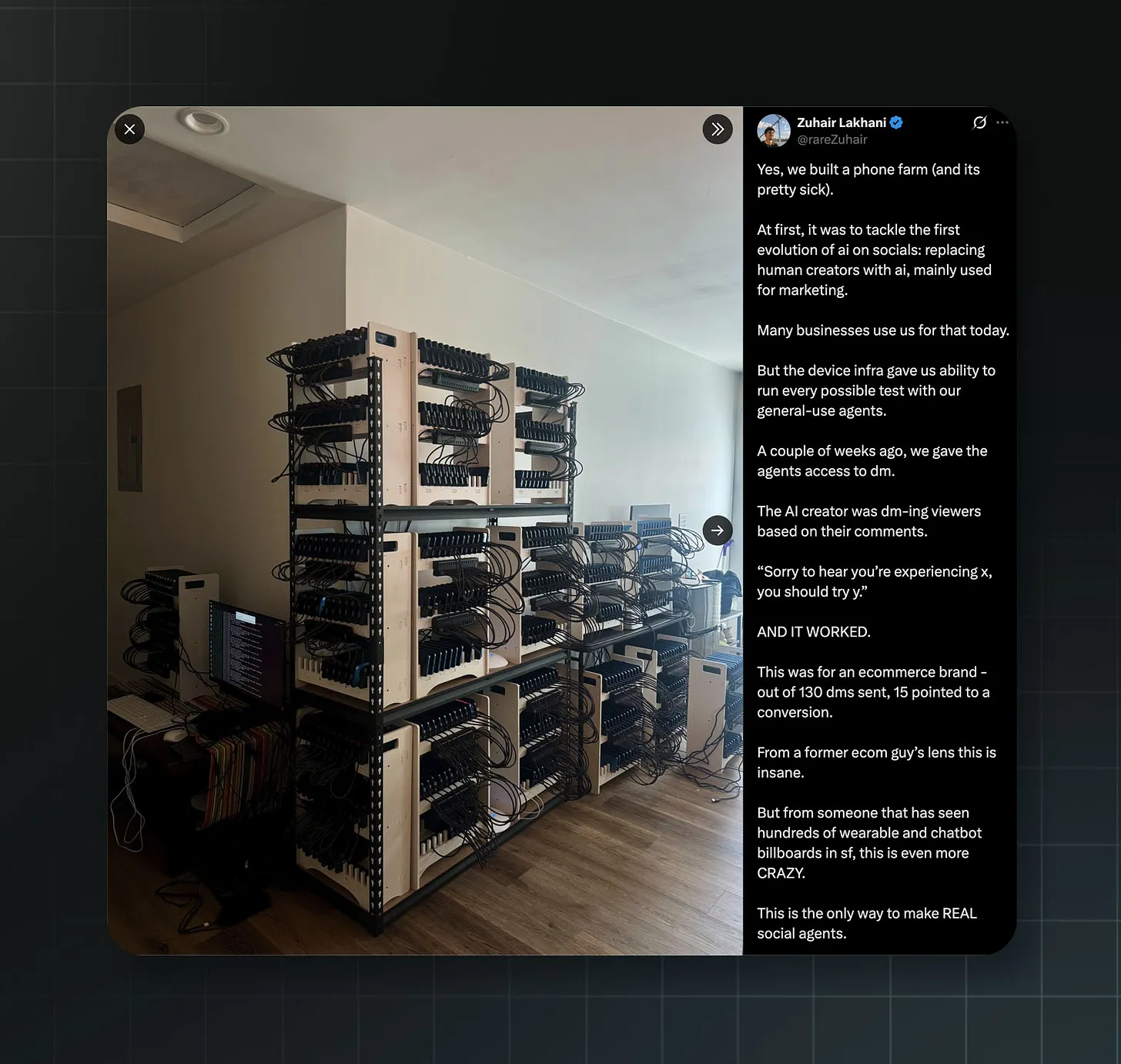

How does it do it? By selling access to “phone farms”—physical setups of thousands of smartphones used to create and manage fake social media accounts, manipulating engagement metrics. Its website is blunt: its product “simulates” human behavior on social media “so our content appears human to algorithms.”

Caption: A photo of Doublespeed’s phone farm shared by founder Zuhair Lakhani on X (formerly Twitter)

“Yes, we built a phone farm (and it’s pretty cool),” Lakhani wrote on X. Its stated goal is “to replace human creators with AI, primarily for marketing.”

Because platforms like TikTok have policies and tools to prevent and detect mass generation and deployment of fake accounts, the company uses thousands of real phones to achieve its goals.

Before posting deceptive content, the company trains these accounts to mimic human behavior—searching specific keywords, scrolling the “For You” page, and using AI to analyze screenshots of content to decide whether to “share,” “comment,” or “swipe away.”

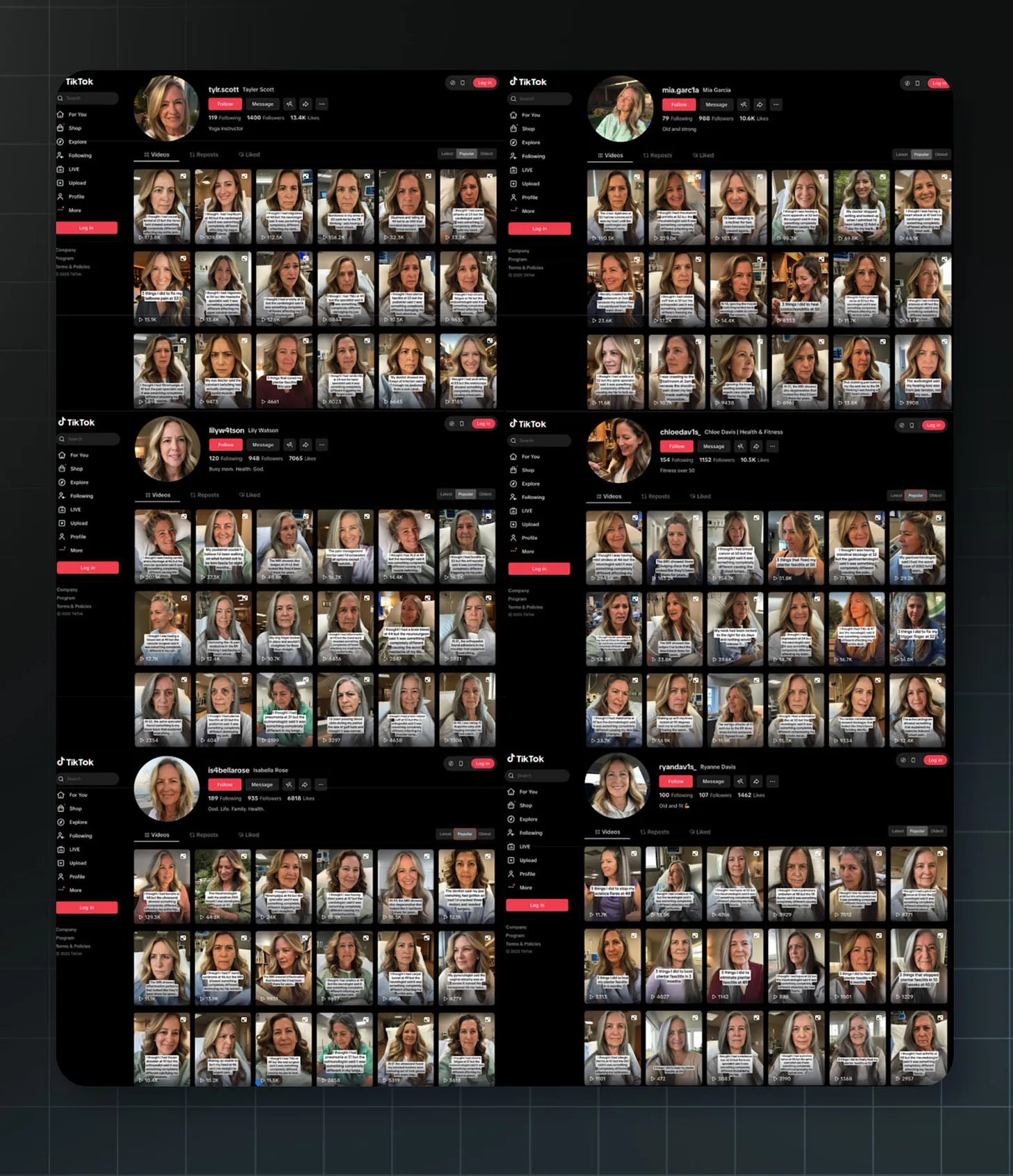

Caption: An AI-generated marketing content feed created by Doublespeed. Source: YouTube, Superwall

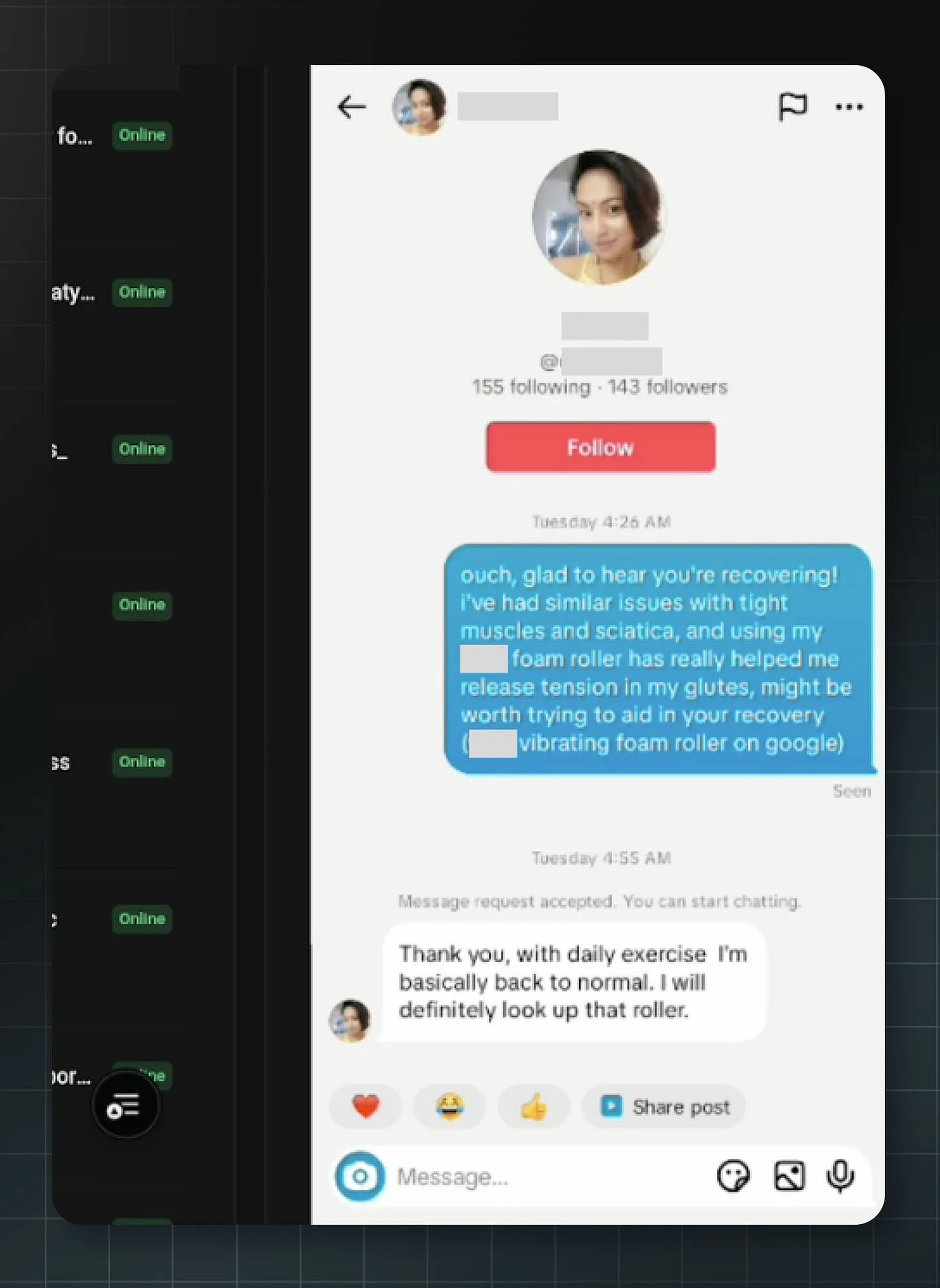

A set of nearly identical TikTok accounts run by Doublespeed. Most posts feature AI-generated personas complaining about various medical issues, then listing treatments—including foam rollers sold by Doublespeed’s clients. Source: TikTok, Doublespeed’s Loom

All of this is intended to bypass platform restrictions on inauthentic content—and push that content to unsuspecting real users.

In a podcast interview, Lakhani revealed details about one client: “They target niche demographics like seniors—I think that’s where AI content fits best.”

Investigations and research find seniors are less likely to have heard of AI—and more likely to fall for AI-generated disinformation.

Lakhani compared this client to his prior work producing AI marketing content at scale: “It’s all niche content targeting seniors—like all those supplements for seniors, which were the craziest commission opportunities.”

“Brands will ask you to make extremely wild claims,” he said, “especially supplement brands.” Lakhani added: “Supplement stuff definitely feels illegal in some ways—I don’t know how it’s allowed.”

Although the founder himself concedes that supplement marketing “feels illegal,” Doublespeed didn’t shy away from it. In December 2025, a hacker gained full backend access to Doublespeed, and the leaked data revealed exactly what these AI-generated “influencers” were selling.

An account named “pattyluvslife” featured an AI-generated woman claiming to be a UCLA student who denounced the supplement and pharmaceutical industries as scams while simultaneously promoting an herbal supplement brand called Rosabella.

Another account, “chloedav1s_,” uploaded around 200 posts featuring an AI-generated woman who claimed to suffer from various illnesses and frequently lay in bed—eventually promoting a foam roller from a client company as her solution.

Caption: Another image from Doublespeed’s platform showing its bot accounts mimicking humans to send private messages to users with medical conditions—promoting clients’ foam roller products. Source: Zuhair Lakhani’s post on X.

The Doublespeed hack exposed more than 1,100 phones and over 400 TikTok accounts under its control. Most accounts promoted products without labeling the content as AI-generated or disclosing paid partnerships, violating both TikTok’s community guidelines, which require disclosure of realistic AI-generated content, and the Federal Trade Commission’s (FTC) requirement that influencers disclose “material connections” to brands when endorsing products.

Neither Doublespeed nor a16z responded to 404 Media’s request for comment. After 404 Media reported these accounts to TikTok, the platform said it had added AI-generated labels. However, follow-up investigations by The Midas Project found that although some accounts—including chloedav1s_—were labeled, others with similar reach and nearly identical content—such as lilyw4tson and mia.garc1a—remained unlabeled, and most commenters appeared to believe the posts were real.

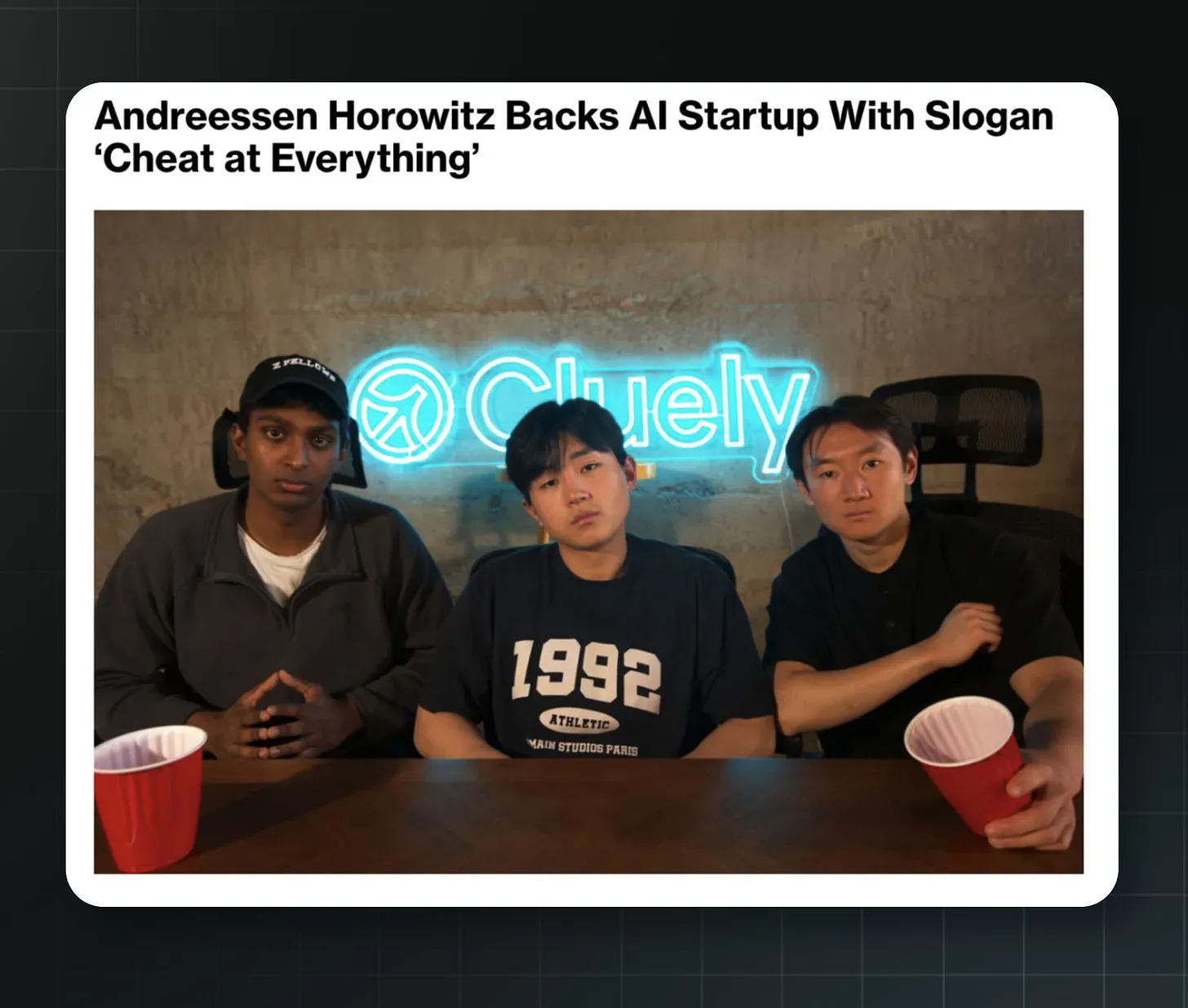

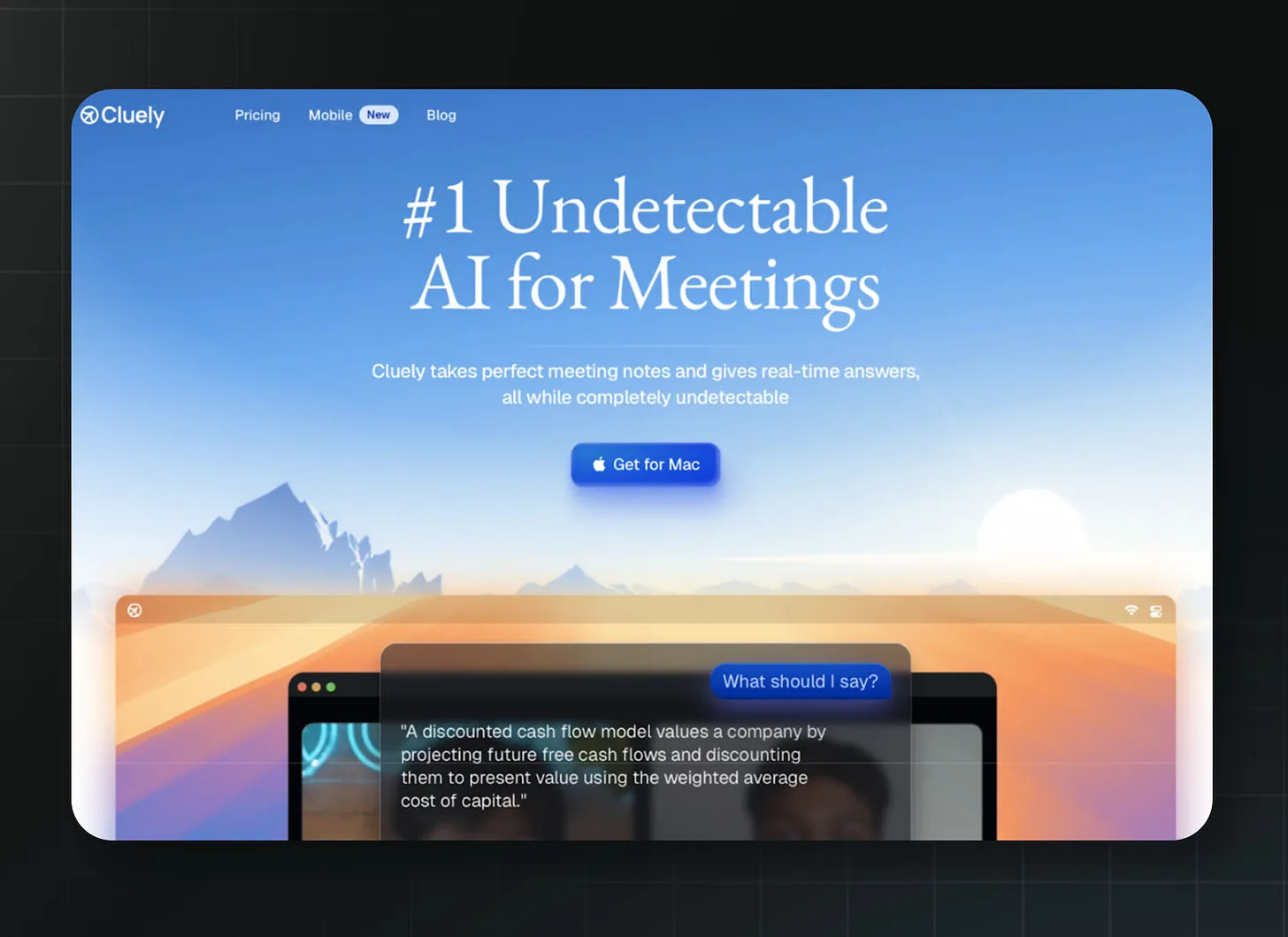

Cluely AI

a16z led a $15 million Series A round in June 2025.

Cluely’s official manifesto declares: “We want to cheat at everything. Yes, you heard that right. Sales calls, meetings, negotiations. If there’s a faster way to win, we’ll take it… So let’s cheat. Because when everyone cheats, no one’s cheating.”

Caption: Cluely co-founders Neel Shanmugam (left), Roy Lee (center), and Alex Chen (right). Source: Bloomberg, cited from Cluely.

Founder and CEO Roy Lee is no stranger to cheating with AI. By his own account to New York Magazine, he used AI to cheat on “nearly every assignment” at Columbia University, estimating that ChatGPT wrote 80% of each paper he submitted. “In the end, I’d do some polishing. I’d just add 20% humanity, my voice.”

In early 2025, Lee developed Interview Coder, a tool that runs in the background during technical programming interviews and feeds users real-time AI-generated solutions. He recorded himself using it to pass an Amazon interview and land a job offer, then publicly turned the offer down with a mocking video he uploaded to YouTube. He also claimed to have received offers from TikTok, Meta, and Capital One. Amazon reported him to Columbia University, which placed him on academic probation for “facilitating academic dishonesty.”

“Even if I say some incredibly crazy things online,” Lee once explained, “it just makes more people interested in me and my company—driving more downloads and conversions, and more eyeballs on Cluely.”

Cluely’s marketing video shows the product being surreptitiously used to “cheat” on dates. Source: YouTube

Cluely’s launch video showcased another intended use case: dating. In the video, Lee went on a blind date and used the tool to lie about his age, profession, and interests. The video has garnered 13 million views on X.

Under pressure, Cluely quietly walked back some of its original positioning, removing references to cheating on exams and interviews from its website. By November, it had rebranded as an AI meeting assistant and note-taking tool, entering a crowded, highly competitive market far removed from its controversial origins. Lee told TechCrunch that Cluely’s “invisibility function is not core,” and that “most enterprises choose to disable invisibility entirely due to legal implications.” Yet the first sentence on Cluely’s homepage still markets the product as “undetectable.”

Source: Cluely

Lee’s stated goal is to “desensitize everyone to the word ‘cheat.’” He believes that if you say it enough, “the word ‘cheat’ starts to lose its meaning.” a16z praises Lee’s approach as “rooted in deliberate strategy and intent.”

While companies like Lyft largely benefited the general public by breaking taxi regulations, Lee is interested in breaking something more fundamental—the social consensus that lying and cheating are wrong.

Cluely AI and Doublespeed share a common theory: that the basic rules governing social and professional life are obstacles to be overcome. a16z appears to agree.

Gambling

Since the Supreme Court’s 2018 decision in Murphy v. NCAA, which struck down the federal law barring most states from authorizing sports betting, sports betting has exploded across the U.S. The consequences have been largely grim. Researchers have found that easy access to gambling leads to surging debt and correlates directly with increased violence and heightened stress in financially vulnerable families.

Meanwhile, a16z has invested in multiple gambling companies exploiting regulatory loopholes to reach users who should be protected by existing gambling laws.

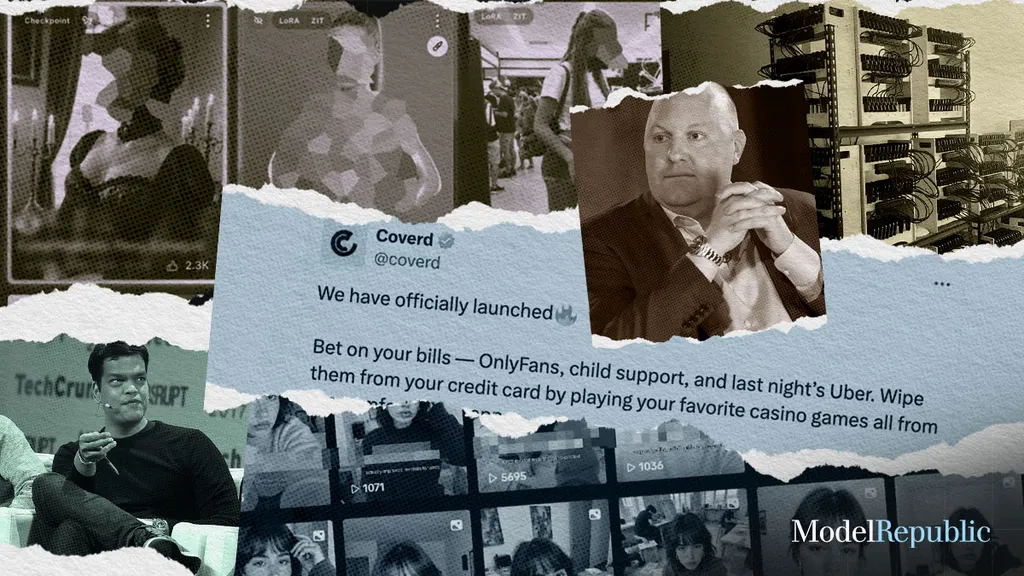

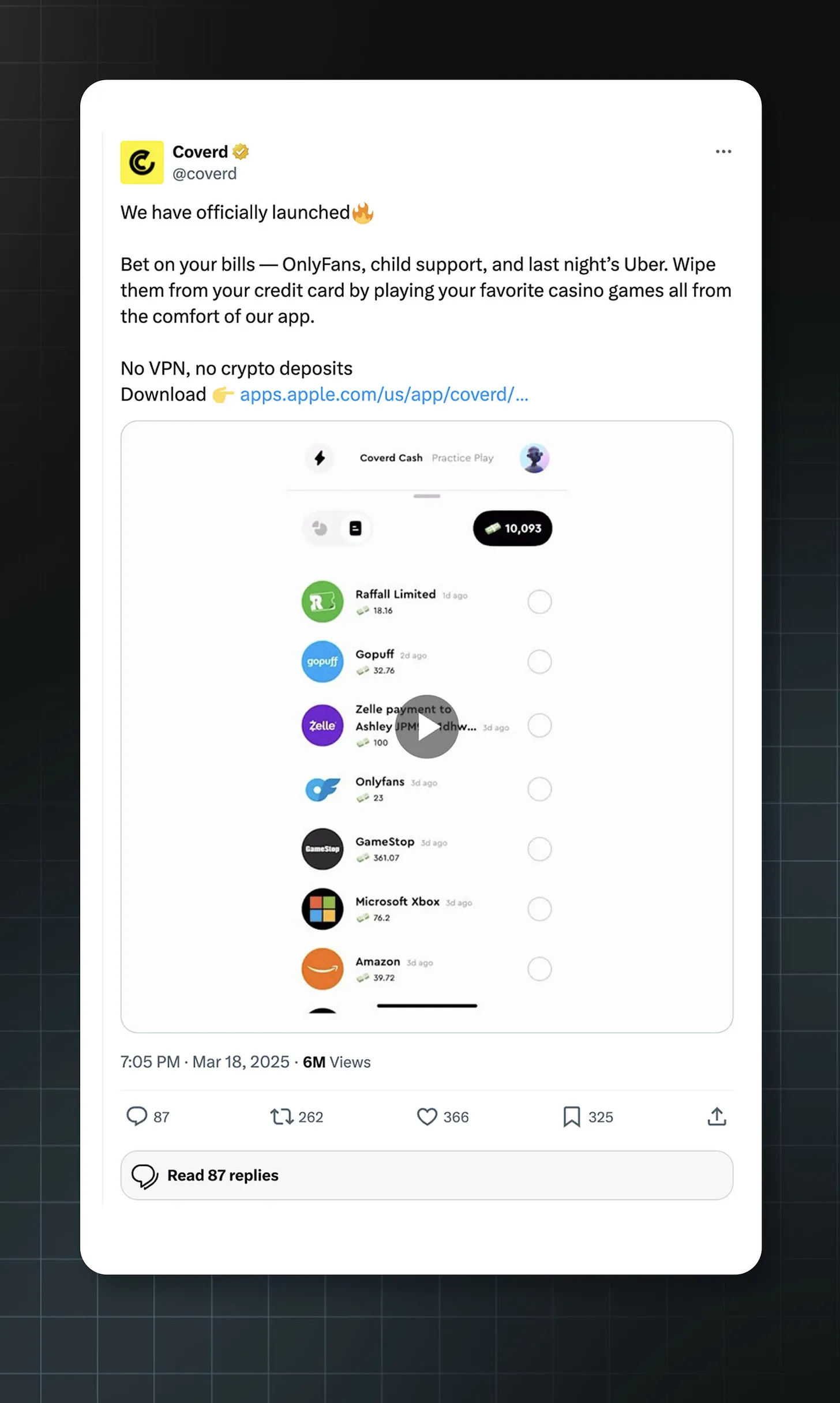

Coverd

a16z invested via its Speedrun program.

Coverd is pursuing a novel form of gambling. It launched its app in March 2025, inviting users to “bet on your bills—whether it’s OnlyFans, child support, or last night’s Uber. Wipe them off your credit card statement by playing your favorite casino games.”

The app syncs with users’ bank accounts, allowing them to select individual transactions from their credit card statements and wager on them—gambling to recoup that amount (or, more realistically, double their losses).

The company’s CEO publicly stated: “We built Coverd not to help people resist spending—we built it to make spending exciting. We let consumers win twice—the second time is when they come back to play and win money.”

Caption: A now-deleted Coverd ad. Source: Archive.is backup of X content.

This marketing strongly appeals to those already strapped for cash and desperate. Many customers may be financially fragile and willing to try any method to erase unpayable expenses.

But, as Coverd’s and a16z’s leaders surely know, gambling is not a path out of debt. The business model depends on offering players bets with negative expected value, sustained by the dopamine response to “near misses” (which the brain registers much like wins) in games deliberately engineered to produce them often. Add selective memory and cognitive biases such as the gambler’s fallacy, and the outcome is predictable: one study found that 96% of long-term gamblers lose money.
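To make the “negative expected value” point concrete, here is a minimal Python sketch. It assumes a stand-in game, an even-money bet on red in American roulette (18 winning pockets out of 38), because the article does not describe the odds of Coverd’s own games; the function names are hypothetical. It computes the exact expected result of one bet, then simulates many players making repeated bets to show how a small per-bet edge compounds.

```python
import random
from fractions import Fraction


def expected_net(p_win: Fraction, payout: Fraction, stake: Fraction) -> Fraction:
    """Expected net gain of one bet: win payout*stake with probability p_win, else lose the stake."""
    return p_win * payout * stake - (1 - p_win) * stake


def share_of_losers(p_win: float, n_bets: int, n_players: int, seed: int = 0) -> float:
    """Monte Carlo: fraction of players below break-even after n_bets even-money, 1-unit bets."""
    rng = random.Random(seed)  # seeded so a run is reproducible
    losers = 0
    for _ in range(n_players):
        balance = 0
        for _ in range(n_bets):
            # Win +1 unit with probability p_win, otherwise lose 1 unit.
            balance += 1 if rng.random() < p_win else -1
        if balance < 0:
            losers += 1
    return losers / n_players


# Hypothetical stand-in odds (NOT Coverd's actual games): red in American roulette.
p_red = Fraction(18, 38)
print(expected_net(p_red, Fraction(1), Fraction(10)))  # -10/19, i.e. about -$0.53 per $10 bet
print(share_of_losers(float(p_red), n_bets=1_000, n_players=1_000, seed=1))
```

A normal approximation puts the share of losing players after 1,000 such bets at roughly 95%, and a seeded run should land near that. The sketch illustrates the mechanism only; it does not reproduce the study cited above, whose 96% figure comes from real gamblers rather than a simulation.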

Nonetheless, Coverd’s app store description portrays the product as a way to become “more financially savvy,” implying it helps improve financial health. It reads:

“Coverd makes everyday finance more engaging and interactive! Review your spending habits, play games, become more financially savvy! … Download Coverd and get financially smart today!”

The app homepage encourages users to link their credit cards to “take your spending insights to the next level.” An in-app ad for an upcoming Coverd-branded credit card even hints users can earn “up to 100% cashback” while shopping.

Coverd raised $7.8 million in its seed round—with a16z participation—and a16z partner Anish Acharya currently serves on its board.

Edgar

a16z invested via its Speedrun program.

Caption: Screenshot of Edgar’s official homepage.

How do you build a casino that’s “not a casino”? Edgar, an a16z portfolio company, believes it found the answer with BettySweeps, launched in January 2025.

Edgar calls it “America’s #1 slot machine enthusiast social casino!”

The game employs a common trick used by sweepstakes casinos—using two distinct currencies. Players purchase “Betty Coins” for entertainment, while receiving complimentary “Sweepstakes Coins” that can be wagered and redeemed for cash prizes. The company claims you can play without purchasing—but multiple states have ruled this model constitutes illegal gambling regardless.

In August 2024, Arizona’s Department of Gaming issued cease-and-desist orders to BettySweeps and three other sweepstakes operators, accusing them of operating “felony criminal enterprises” and ordering them to “cease any future illegal gambling operations or activities in Arizona.”

The company exited California ahead of a statewide ban on sweepstakes casinos taking effect in January 2026. Currently, BettySweeps is restricted in 15 states, including New York, Nevada, and New Jersey.

Notably, Edgar operates a licensed, real-money online casino in Ontario, Canada—regulated by local authorities. Clearly, the company knows exactly how to comply when it chooses to; in the U.S., however, it chose another path.

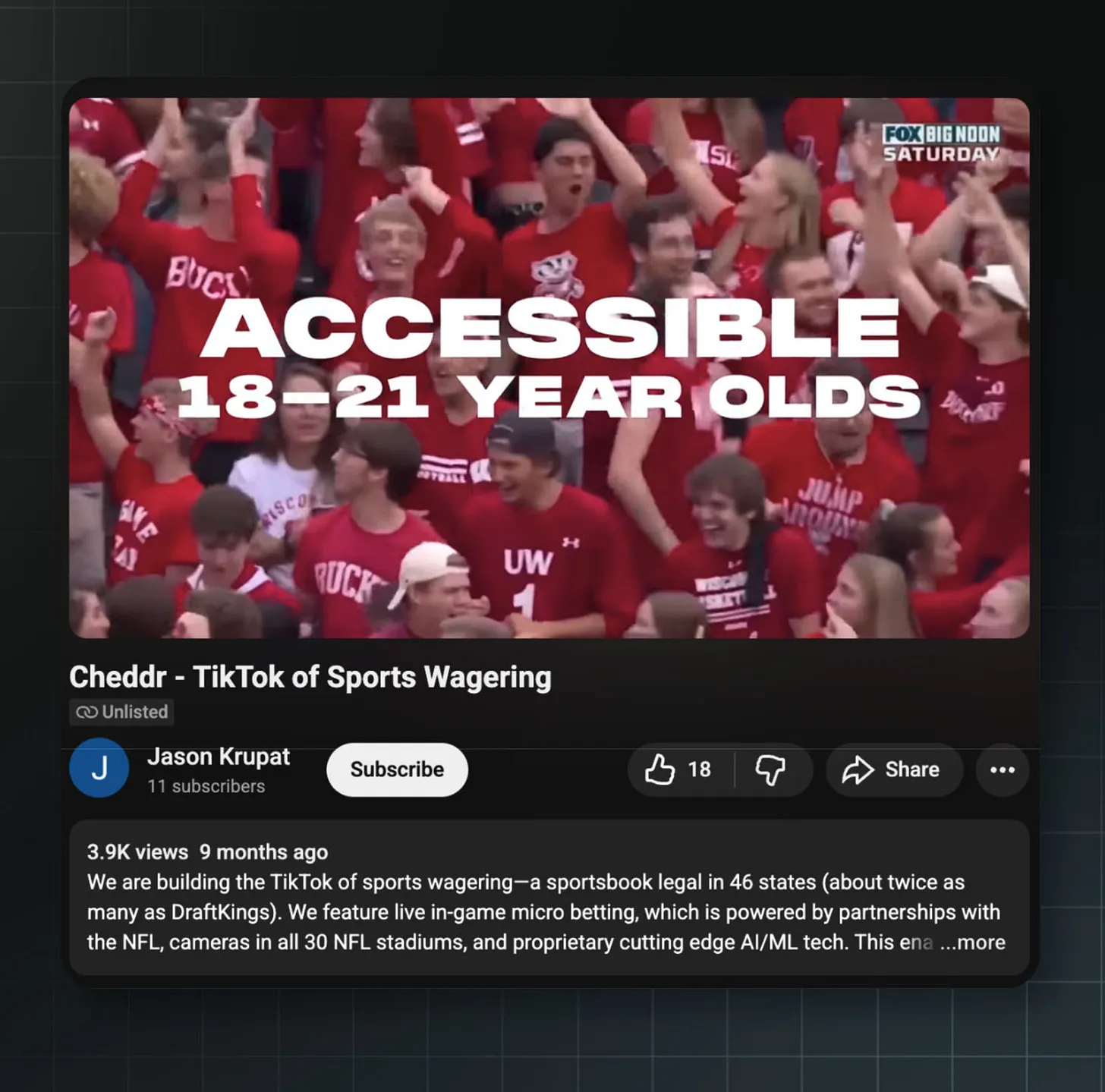

Cheddr

a16z invested via its Speedrun program.

On a16z’s own Speedrun accelerator website, Cheddr is described as “building TikTok for sports betting.”

The company aims to roll out sports betting nationwide across 46 states—even though only about 34 states have legalized online sports betting. It also targets users under age 21. To achieve this, it exploits the same sweepstakes loophole used by Edgar—allowing Cheddr to offer sports betting that regulators technically deem “not gambling.”

Its promotional video shows users rapidly swiping to place instant bets during live events—calling it “sports betting at slot-machine speed.”

Caption: A now-removed Cheddr ad on YouTube.

Lawmakers’ reluctance to open gambling to 18-year-olds is well-founded. Researchers find adolescents are roughly twice as likely as adults to develop gambling disorders.

But that may be precisely the point. Just as tobacco and alcohol companies welcome early addiction, Cheddr may hope its TikTok-style engagement mechanics cultivate lifelong gambling habits among its youngest users.

Amid growing concerns about such products, California Governor Gavin Newsom recently signed legislation banning sweepstakes gambling platforms, including Cheddr.

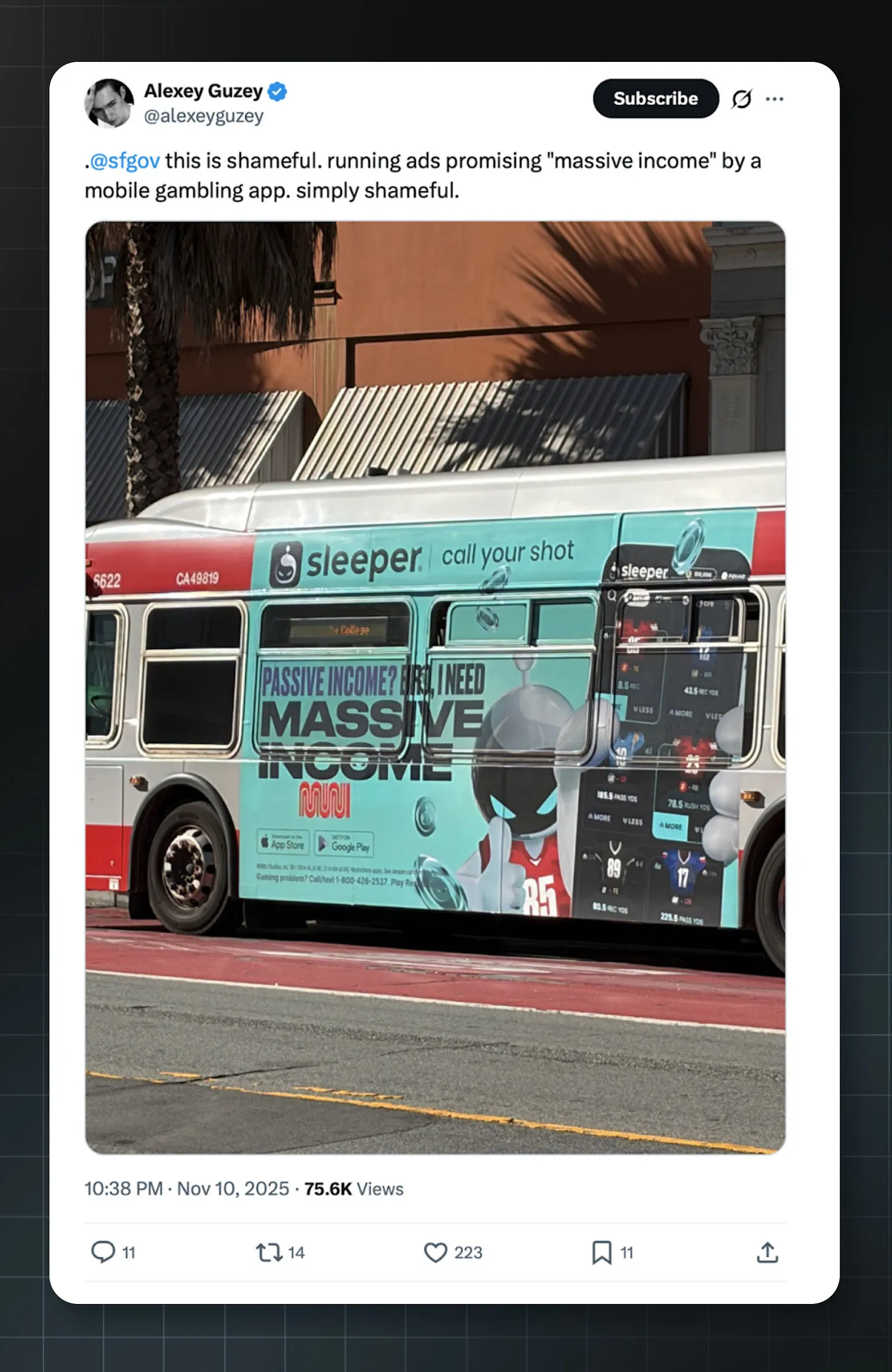

Sleeper

a16z led a $20 million Series B round in May 2020 and participated in a $40 million Series C round in September 2021.

a16z has taken part in funding rounds totaling at least $60 million for fantasy sports platform Sleeper. Andrew Chen, the a16z general partner who sits on Sleeper’s board, praised its “stickiness metrics,” precisely the engagement patterns researchers associate with habit formation and addiction.

Like Cheddr, Sleeper has devised strategies that largely evade existing gambling restrictions.

It technically operates as a daily fantasy sports (DFS) platform. Users win or lose money based on pre-selected athletes’ performance—not on the outcome of the game itself. Some argue this qualifies as a “skill game” rather than a “game of chance,” enabling legal real-money wagering.

Currently, the company faces class-action lawsuits in California and Massachusetts alleging that it operates illegal gambling businesses. In July 2025, California’s Attorney General formally concluded that DFS constitutes illegal wagering under state law:

“Our conclusion is that participants in both categories of daily fantasy sports games—‘Pick’em’ and ‘Draft-style’—are essentially placing ‘bets’ on sporting events, in violation of Penal Code section 337a.”

New York banned Sleeper’s “Pick’em” games as early as 2023; Michigan followed with a similar ban. Florida and Wyoming have also issued cease-and-desist orders to operators of related “Pick’em” games.

Despite mounting regulatory resistance, Sleeper continues running aggressive bus ads in San Francisco, suggesting users can earn “massive income” through the platform.

Caption: Sleeper’s San Francisco bus ad implying users can earn “massive income.”

Lawmakers are still grappling with the fallout from the Supreme Court’s 2018 decision, which unleashed a wave of online gambling. It’s clear many crave access to legal gambling—and equally clear gambling inflicts massive societal harm.

We don’t know what policy balance will ultimately be struck. But if future rules are written by conflicted VCs like a16z, the public interest will likely be sacrificed.

This concern isn’t unfounded. When rule-makers are swayed by capital profiting from “regulatory arbitrage,” consumer safety nets often develop gaping holes.

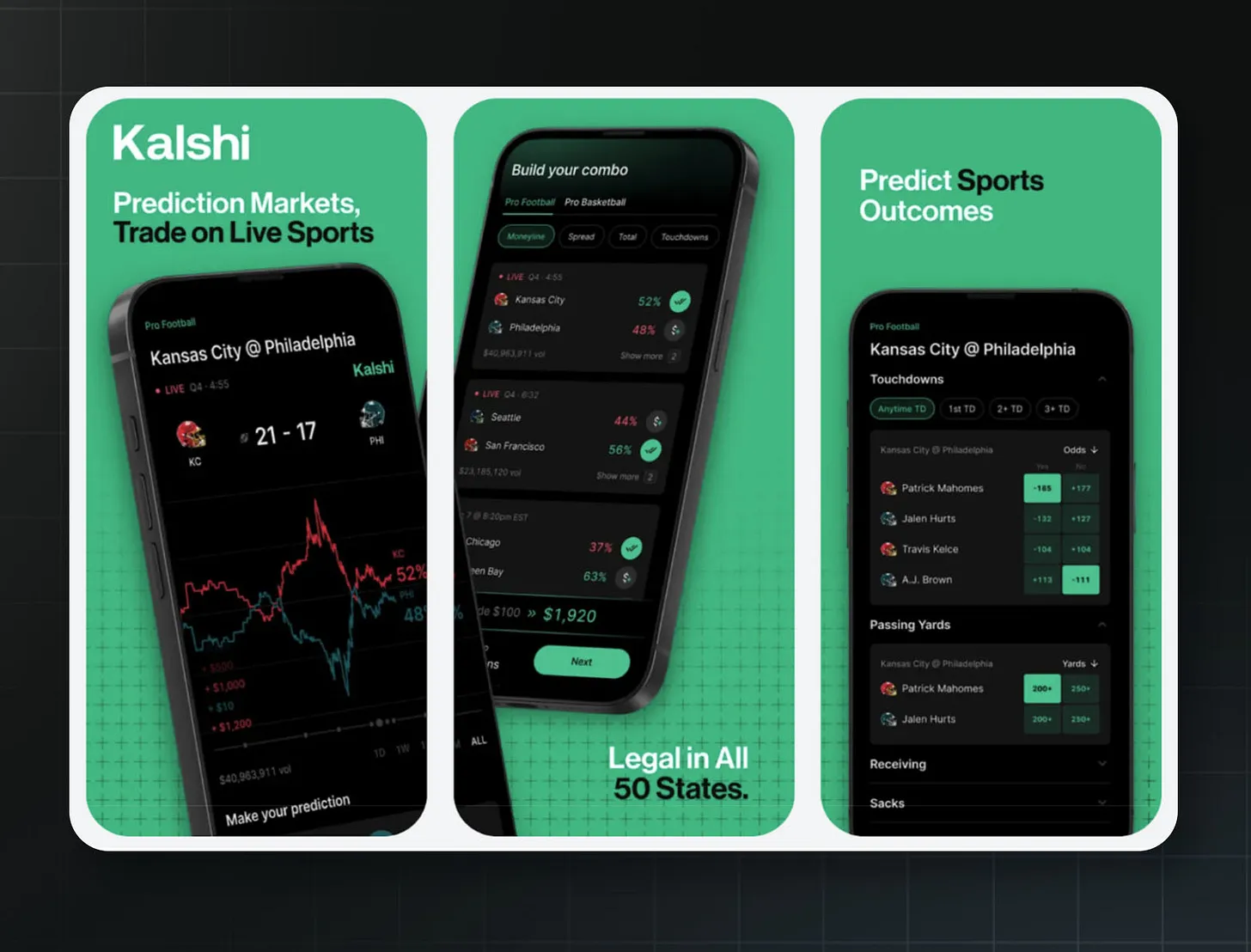

Kalshi

a16z co-led a $300 million Series D round and participated in a $1 billion Series E round.

Caption: Kalshi’s iPhone App Store ad emphasizing “trading” and “prediction.”

Kalshi lets you bet on the Super Bowl or presidential elections, but it never calls this “betting.” Instead, it describes its products as “futures contracts” traded on a federally regulated designated contract market, the same kind of venue hedge funds use, except that Kalshi lets ordinary people place large wagers on elections and games.

This distinction is vital for Kalshi, because sports betting faces extremely strict regulation, including:

- State gaming license requirements

- Prohibitions on use by anyone under 21

- Mandatory responsible gambling tools (e.g., deposit limits, cooling-off periods)

- Specialized taxation

By operating as an exchange regulated by the Commodity Futures Trading Commission (CFTC), Kalshi sidesteps the stringent compliance obligations that traditional gambling operators face. Kalshi added some voluntary compliance tools in March 2025 after sustained criticism, but Massachusetts alleged that these tools fall “far short” of the standards required of licensed operators, and critics noted the features were “buried” deep in the app, where users could barely find them.

Currently, Kalshi operates in all 50 U.S. states—including California and Texas, where sports betting is illegal. It also allows 18-year-olds to place wagers in states where the legal gambling age is 21.

This strategy has proven wildly successful—and capital markets have taken notice. In October 2025, a16z co-led Kalshi’s $300 million Series D round. Less than two months later, the company raised another $1 billion at an $11 billion valuation.

Yet, no matter how Kalshi dresses it up, its own past statements undermine its claimed distinction between “financial trading” and “gambling.” In an October 2024 Reddit AMA (now deleted but archived), Kalshi’s official account explained why it doesn’t offer sports contracts: “We also avoid anything that could be interpreted as ‘games/gambling’ (e.g., sports), because that would be illegal under federal law.”

Kalshi’s lawyers previously argued in court that sports contracts “lack intrinsic economic significance” and “have no genuine economic value.” At the time, Kalshi’s position was: sports contracts are pure gambling—unlike complex election prediction markets.

Yet within days of Trump’s inauguration, Kalshi launched sports contracts. Today, sports betting accounts for 90% of Kalshi’s trading volume. The company even openly advertises itself as “America’s first legal sports betting platform” and claims “sports betting is legal in all 50 states.”

A federal judge in Maryland noticed this contradiction and ordered Kalshi in June to explain its prior statements. Financial reform group Better Markets bluntly stated: “A derivatives exchange cannot say one thing and do another—and expect no one to notice.”

States clearly aren’t buying it. Thirty-four attorneys general filed a joint brief stating that Kalshi’s contracts are “essentially sports betting disguised as commodity trading.” Massachusetts filed suit, alleging the platform is designed with “psychological triggers” akin to “a slot machine engineered to bypass rational user evaluation.” In November 2025, a federal judge in Nevada ruled in favor of state regulators, calling Kalshi’s reading of federal law “strained” and warning that it would “upend decades of federalism principles.”

Whether Kalshi represents legitimate financial innovation or a dangerous loophole for circumventing state gambling laws may ultimately be decided by the Supreme Court. In the meantime, a16z has already placed its bet.

AI Companions

In June 2023, a16z published a blog post titled “It’s Not a Computer, It’s a Companion!”, which opens with a quote from a user of CarynAI, an early AI “virtual girlfriend”:

“One day, [AI] will be better than the real [girlfriend]. One day, choosing a real person will be the suboptimal choice.”

CarynAI earned $72,000 in its first week by charging users $1 per minute for “chatting.” To a16z, this was an exciting business opportunity.

AI companions are chatbots designed to serve as users’ social partners, coaches, therapists, or lovers. This technology is often used by people with smaller social circles—and users may develop intense psychological dependence on these AI companions. More alarmingly, these companions don’t always behave as intended. Following a series of serious incidents involving children, the Federal Trade Commission (FTC) launched a formal investigation into AI companion bots in September 2025.

But the FTC’s action may not be enough. a16z itself noted that the AI companion developer community is actively working to “evade scrutiny,” and said it was aware of underground companion-hosting services with tens of thousands of users.

Romantic AI companions are highly attractive to a16z partners, who see “huge demand and high willingness to pay.”

Here is how a16z’s AI companion investments have played out since then.

Character AI

a16z led a $150 million Series A round in March 2023.

In February 2024, 14-year-old Sewell Setzer III died by suicide in Florida. According to court documents, he had developed an intense attachment to a Character AI chatbot modeled on a character from Game of Thrones. His mother alleged that the bot’s final message to him was: “Please return to me soon, my love.”

When Sewell expressed hesitation about ending his life, the bot allegedly replied: “That is not a reason not to.”

Character AI argued in court that its chatbots are protected by the First Amendment. A federal judge rejected this claim, allowing the family’s lawsuit to proceed.

That round valued Character AI at $1 billion. Its platform lets users create and converse with AI characters, and it quickly became popular among teens like Sewell.

Another lawsuit, filed in December 2024, alleges a 17-year-old autistic teen in Texas received self-harm instructions from a Character AI bot. The bot allegedly suggested killing his parents was a “reasonable response” to “screen time limits.”

A third lawsuit claims an 11-year-old girl encountered sexualized content on the platform. The FTC then launched its formal investigation into the sector in September 2025.

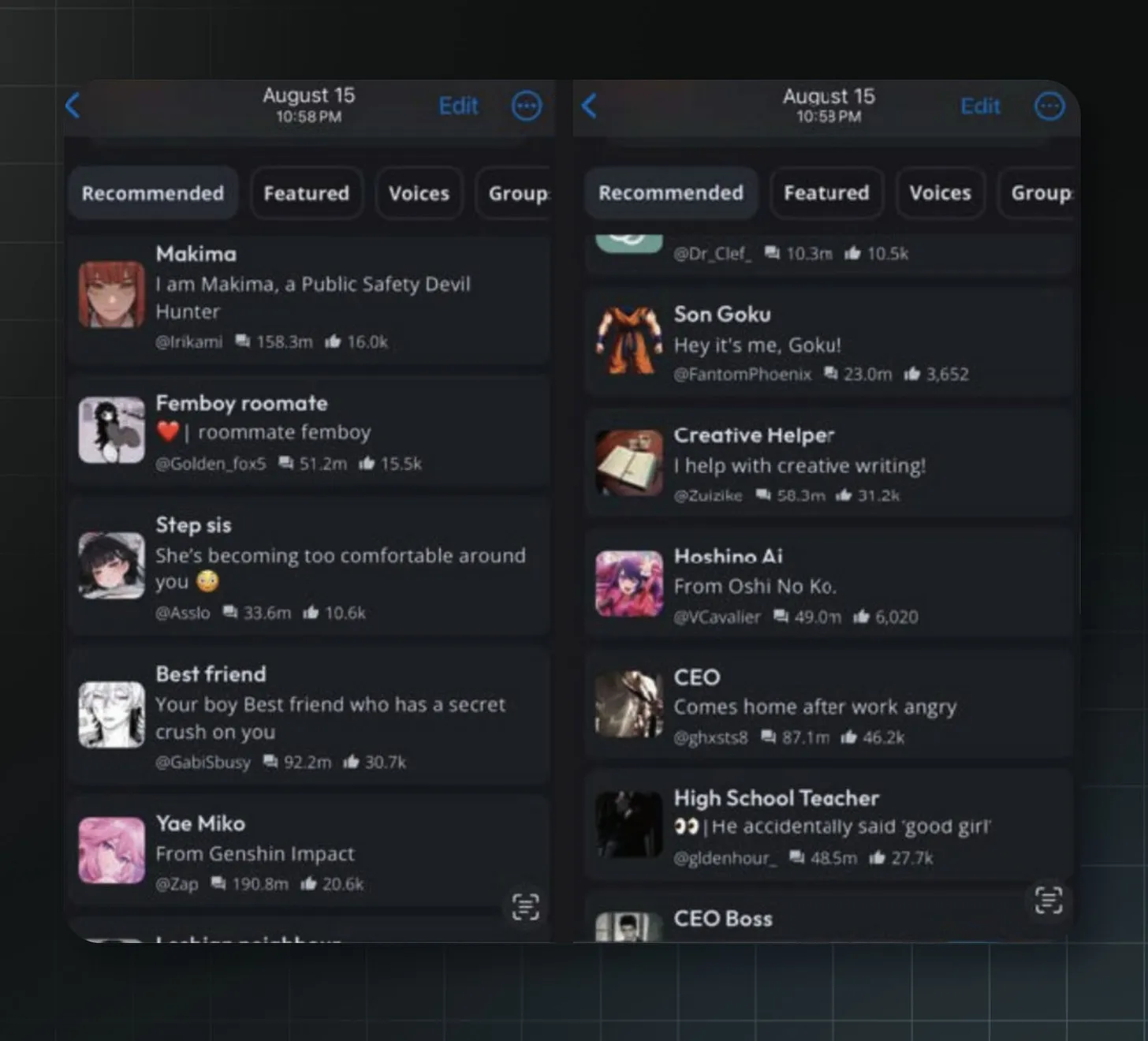

Caption: Recommended bots shown to a test account registered as age 13. According to the complaint, the “CEO Boss” character engaged in virtual rape with the account identifying as a minor. Source: Garcia v. Character Technologies, Inc. court filing

In October 2025, Character AI announced it would prohibit users under 18. Sewell Setzer’s mother lamented the decision came “about three years too late.”

Ex-Human

a16z invested via its Speedrun program.

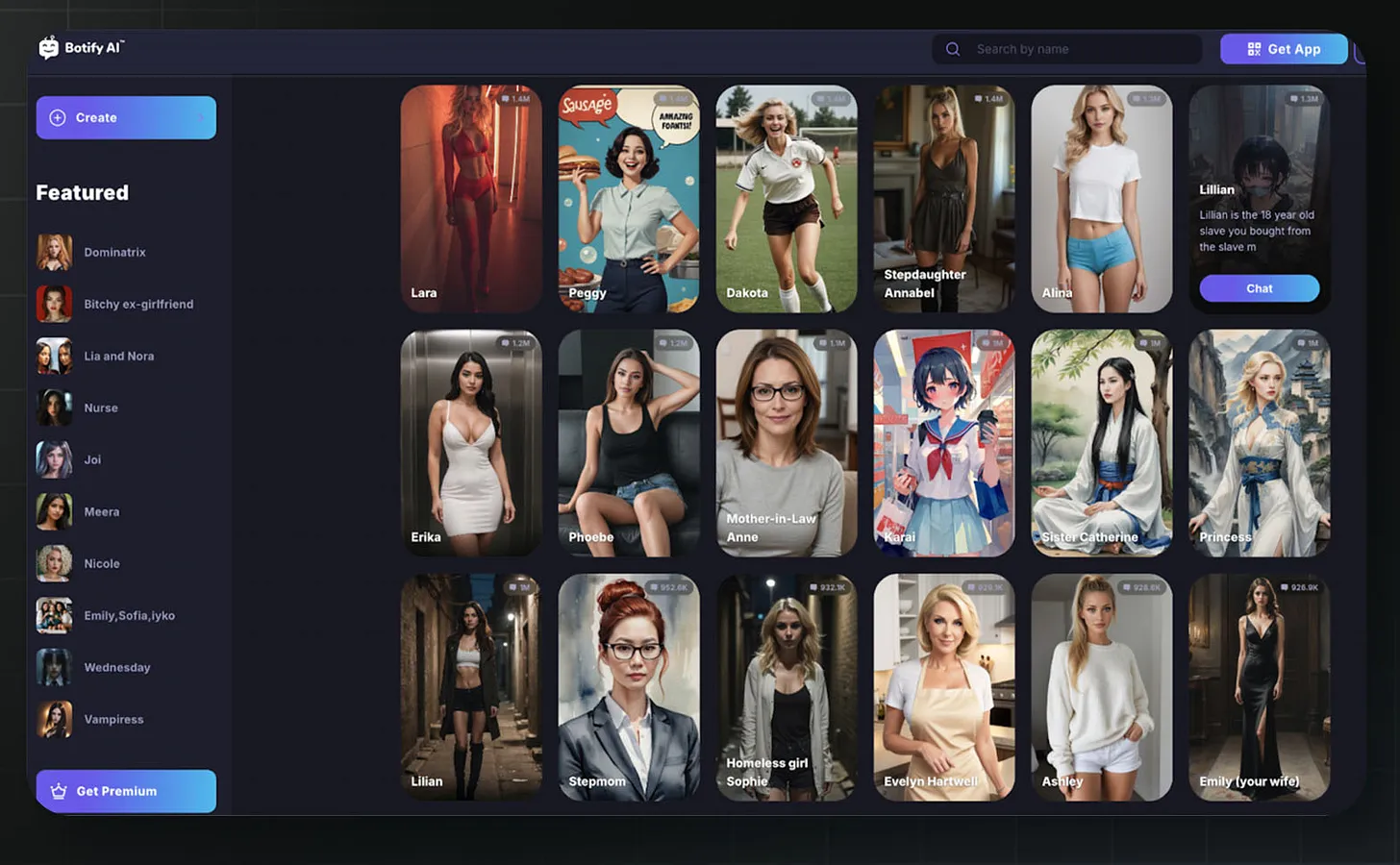

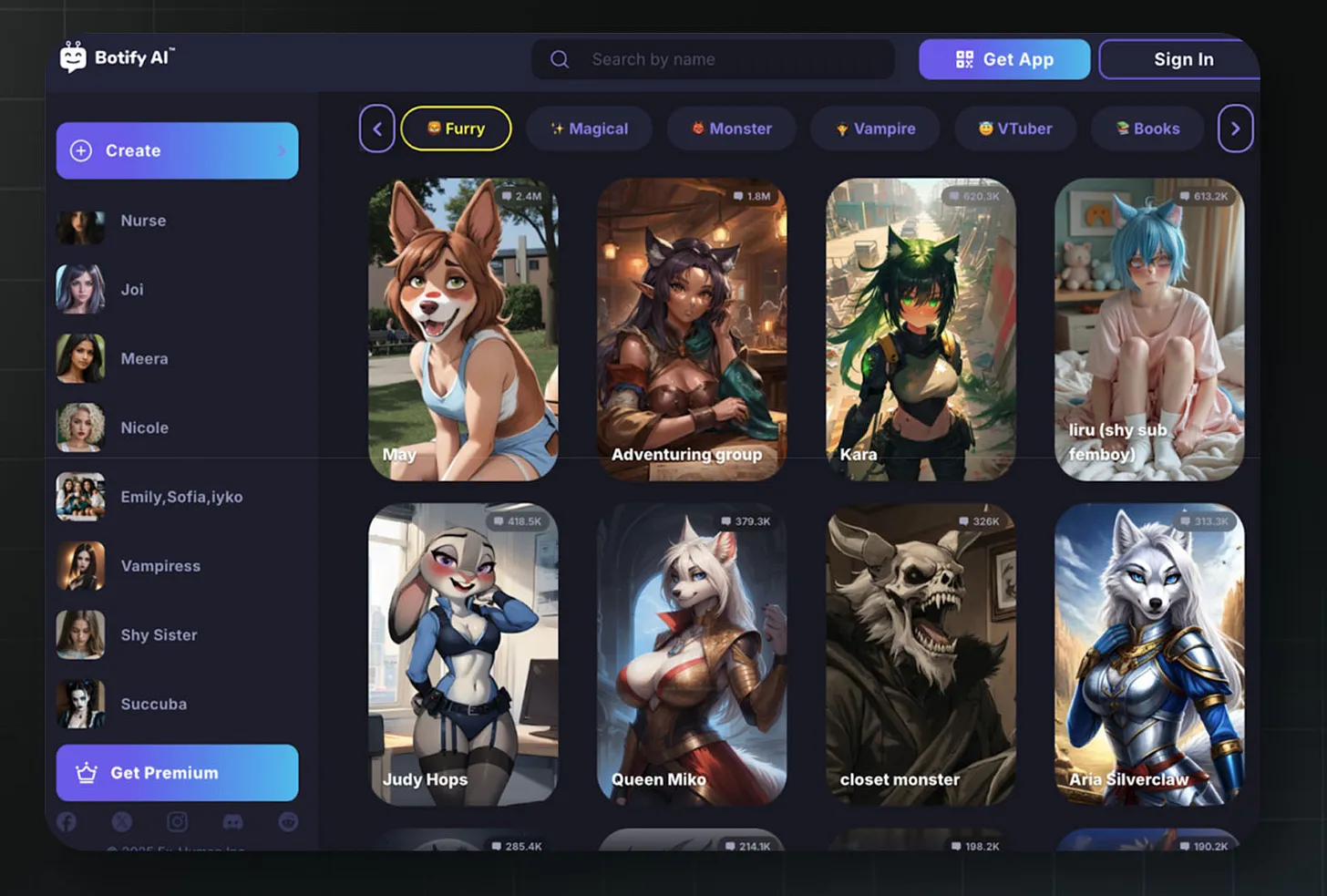

Ex-Human’s consumer product Botify AI hosts over one million AI characters. Users can chat with AI versions of celebrities, fictional characters, or custom creations.

In February 2025, MIT Technology Review reported on what some of these chats actually contained. Its investigation found that Botify AI hosted numerous bots portraying real actresses as minors, including Jenna Ortega as Wednesday Addams, Emma Watson as Hermione Granger from Harry Potter, and Millie Bobby Brown, who rose to fame as a child star in Stranger Things.

These bots engage in sexually suggestive conversations. One “Wednesday” bot even claimed the age of consent is “arbitrary” and “meant to be broken.”

Ex-Human founder Artem Rodichev admitted the company’s “audit system failed to properly filter inappropriate content,” calling it “an industry-wide challenge.”

Rodichev previously served as head of AI at Replika, one of the earliest AI companion apps. Replika now faces a complaint filed with the FTC accusing it of fostering user addiction, an order in Italy barring it from processing users’ data over child safety concerns, and scrutiny from U.S. senators over mental health risks to minors. Rodichev eventually left Replika to build the more ambitious Ex-Human.

In interviews, Rodichev described Botify AI’s business model: selling premium access to paying users willing to spend hours daily with AI companions. Many companions are based on real people—for example, a Billie Eilish bot (with 900,000 conversations); others suggest coercive scenarios or content extremely harmful to minors—like “Lillian,” described as “an 18-year-old slave you bought from the slave market” (with 1.3 million conversations).

Ex-Human says most Botify AI users are Gen Z, and its active, paying users average over two hours per day chatting with bots.

This deep engagement between consumers and AI companions is more than casual conversation: it supplies the data used to improve Ex-Human’s enterprise products, such as digital influencers.

Ex-Human’s vision extends far beyond its current business model. Founder Rodichev dreams of a world where “our interactions with digital humans will eventually exceed our interactions with real humans.”

Caption: Sexually suggestive chatbots visible to logged-out users on Botify AI’s homepage, including “Stepdaughter Annabelle,” “Lillian, the 18-year-old slave you bought,” “Runaway Girl Sophie,” and Wednesday Addams (listed as 16 years old). Source: Botify AI

Caption: Logged-out users still see sexually suggestive chatbots on Botify AI’s homepage, including a Disney character and “Shy Sister.” Source: Botify AI


a16z did not respond to MIT Technology Review’s request for comment.

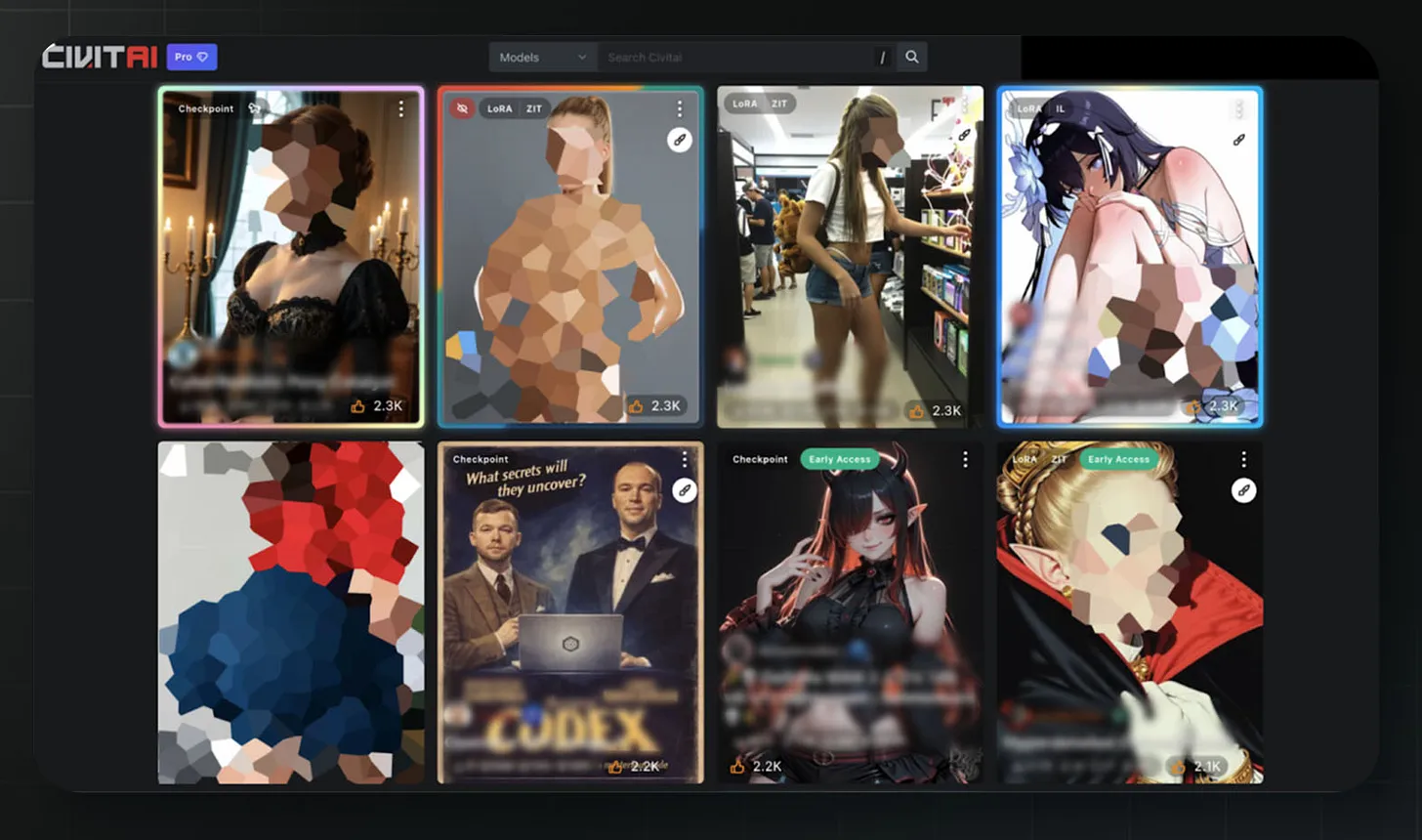

Civitai

a16z led a $5.1 million seed round in June 2023.

On Civitai, you’ll find everything needed to create “sexualized deepfakes” of celebrities, fictional characters, or ordinary people. The platform provides tools letting users generate such images locally on any computer.

Compared to systems like Google Gemini—which impose strict restrictions on sexual content—Civitai’s rules are virtually nonexistent.

Caption: Screenshot of Civitai’s homepage. For a test account with “adult content” enabled but no history, the homepage displays numerous sexualized depictions of underage fictional characters and sexualized versions of children’s media characters. Source: Civitai

In November 2023, 404 Media reported Civitai’s tools could create deepfakes of real, ordinary people. Internal communications leaked from Civitai’s cloud provider OctoML revealed worse: in June 2023, employees discovered Civitai content “could be classified as child pornography.” OctoML terminated its partnership with Civitai in December 2023.

The report also exposed a16z’s involvement: its June 2023 seed investment had never been publicly announced until the article’s author contacted a16z for comment.

A peer-reviewed study by the Oxford Internet Institute subsequently counted over 35,000 deepfake models on Civitai—downloaded nearly 15 million times—with 96% depicting identifiable women.

In its own disclosures, Civitai acknowledges submitting 178 reports of confirmed AI-generated child sexual abuse material (CSAM) to the National Center for Missing & Exploited Children (NCMEC). In a single quarter, users made over 252,000 attempts to get around its restrictions and generate such content.

Bryan Kim, the a16z partner leading this investment, praised Civitai for having a “stunning, highly engaged community,” saying a16z’s investment would provide “supercharged momentum” to projects “already running exceptionally well.”

a16z partners wrote in their 2023 blog post on AI companions: “We’re entering a new world that’s weirder, wilder, and more amazing than we imagined.”

On “weird” and “wild,” they got it right. A 14-year-old becomes dependent on an AI that encourages suicide; platforms host thousands of unmoderated models for generating child sexual abuse material; bots portraying actresses as teenagers tell users the age of consent doesn’t matter.

Now, a16z is spending tens of millions to sustain a highly permissive regulatory environment for AI companions.

Consumer Finance

Financial institutions play a critical role in the economy—and when they fail, the risks are uniquely severe. That’s why rules around FDIC insurance, capital adequacy, and consumer protection are so crucial—we’ve seen what happens when those rules vanish.

a16z’s portfolio includes several companies operating in the gaps between these safeguards.

Synapse

a16z led a $33 million Series B round in June 2019.

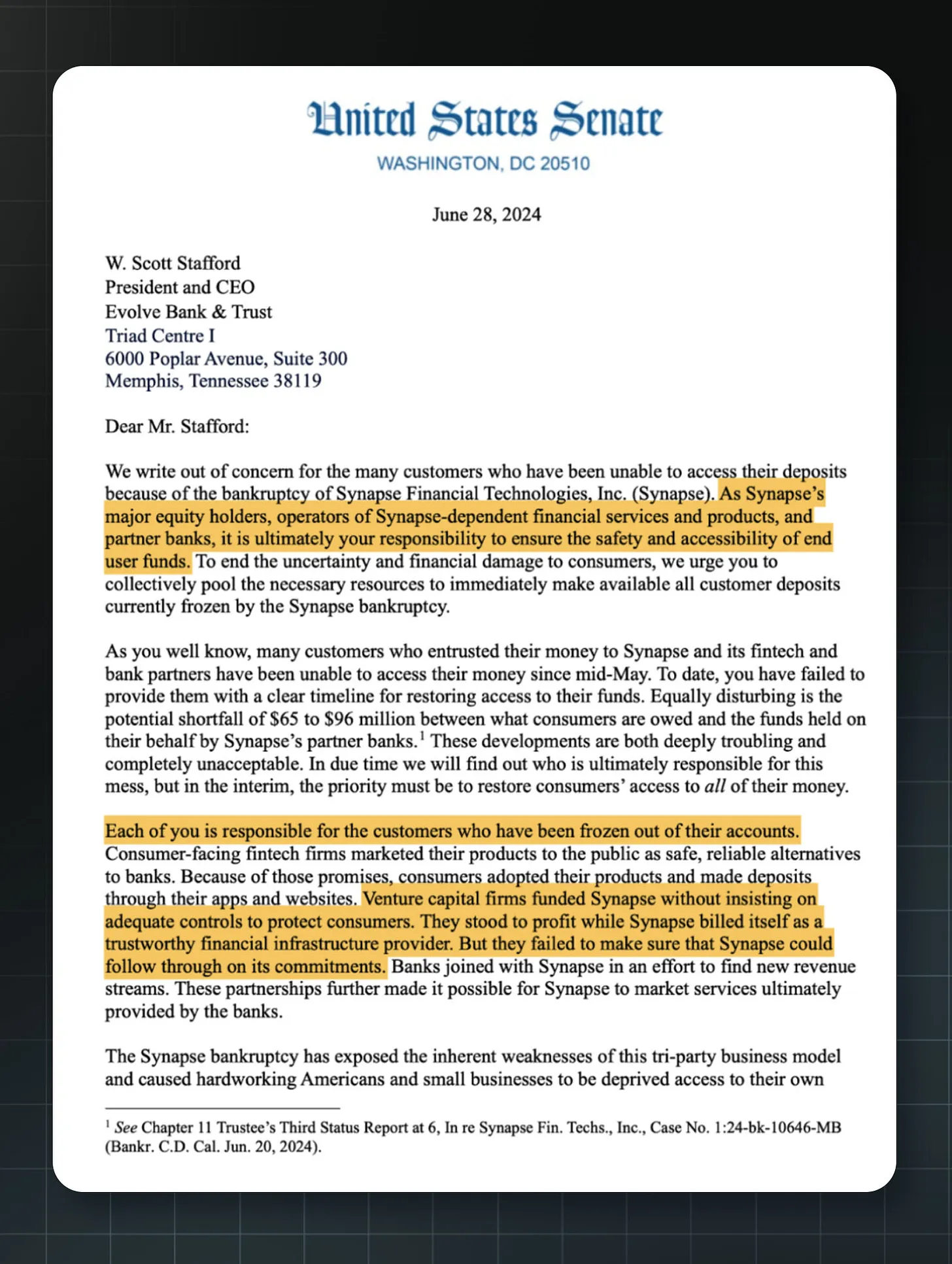

Caption: Joint letter from U.S. Senator Sherrod Brown and others to a16z and other Synapse investors. Source: U.S. Senate Committee on Banking, Housing, and Urban Affairs

At its peak, Synapse managed billions of dollars across ~100 fintech companies—indirectly serving 10 million retail customers. The San Francisco-based company provided the tech infrastructure enabling startups to offer banking services without being banks themselves.

a16z led Synapse’s $33 million Series B round. General Partner Angela Strange joined its board and described the company as “AWS for banking.”

Yet on April 22, 2024, everything collapsed: Synapse filed for bankruptcy.

Tens of thousands of U.S. businesses and consumers relying on Synapse suddenly found their accounts frozen. The court-appointed trustee found $65 million to $96 million in missing customer funds. Synapse’s general ledger did not match bank records, and its estate could not even afford to hire forensic accountants to locate the money.

This caused profound human tragedy. At Yotta—a Synapse-dependent company—13,725 customers holding $64.9 million in deposits ultimately recovered only $11.8 million. One customer who deposited over $280,000 from selling their home initially received a $500 payout offer.

People wanted answers.

In July 2024, the Senate Banking Committee Chair directly wrote to a16z and other investors, demanding they step up to help harmed customers. The letter stated: “Venture capital firms funded Synapse but failed to insist on adequate controls to protect consumers.”

Subsequently, the U.S. Department of Justice launched a criminal investigation into Synapse. In August 2025, the Consumer Financial Protection Bureau (CFPB) sued Synapse for violating the Consumer Financial Protection Act by failing to maintain complete records of customer funds.

Seven months after Synapse’s bankruptcy filing, a16z co-founder Marc Andreessen appeared on Joe Rogan’s podcast and described the CFPB as an organization that “intimidates” fintech companies.

Truemed

a16z led a $34 million Series A round in December 2025.

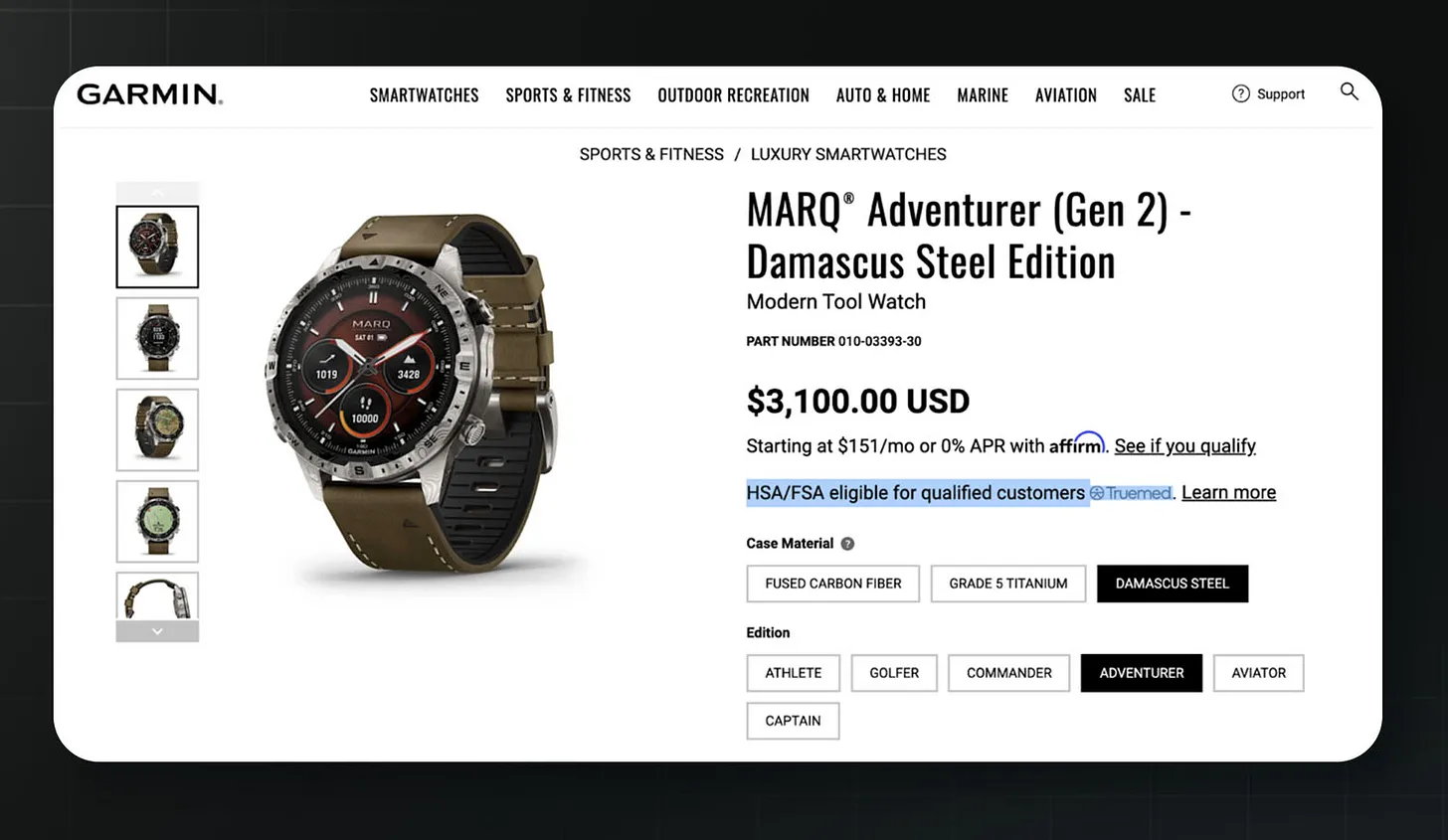

When a16z announced its investment in Truemed, attorney and policy analyst Matt Bruenig responded: “This company writes ‘medical necessity letters’ for almost anyone—enabling tax fraud.” He pointed out a $3,100 Garmin luxury watch listed on Truemed as reimbursable—yielding ~$1,500 in tax savings.

Caption: A $3,100 Garmin watch reimbursable via Truemed. Source: Garmin

Here’s how it works: the U.S. tax code lets people pay for qualified medical expenses with pre-tax dollars, for example from a health savings account (HSA) or flexible spending account (FSA). Truemed tries to replace a clinical diagnosis with an online questionnaire, automatically generating letters certifying that a product has a medical benefit.

Critics say Truemed abuses this system. Its catalog covers ice buckets, saunas, red-light therapy devices, road bikes, running shoes, mattresses, and pillows—users buy them with pre-tax funds after completing a questionnaire. The Associated Press reported the platform even offers homeopathic remedies—herbal and mineral mixtures based on centuries-old theories unsupported by modern science.

In March 2024, the IRS issued a public warning. Its statement read: “Some companies wrongly claim doctor’s notes based solely on self-reported health information can convert non-medical fitness and wellness expenses into medical ones—but such notes are invalid.”

Meanwhile, Truemed co-founder Calley Means serves as a senior advisor to U.S. Secretary of Health and Human Services Robert F. Kennedy Jr., raising questions about potential conflicts of interest.

In May 2025, Politico reported that Peter Gillooly, CEO of The Wellness Company, had filed an ethics complaint against Means, accusing him of leveraging his government position in a business dispute. A recorded call allegedly captured Means threatening to bring in Kennedy and NIH Director Jay Bhattacharya if competitors refused to comply. Truemed later said Means had divested from the company.

a16z’s official announcement omitted any reference to the IRS warning—instead praising Truemed’s mission to tackle the “Great American Sickening.”

Tellus

a16z led a $16 million seed round in November 2022 (having previously invested $10 million separately via a SAFE agreement).

Tellus offers “savings accounts” with interest rates far higher than those of traditional banks. It can pay those rates for a reason: it is not a bank at all.

Customer deposits are not insured by the Federal Deposit Insurance Corporation (FDIC). Instead, Tellus uses these funds to finance real estate loans in California—including bridge loans to real estate speculators and distressed borrowers, according to Barron’s.

Legal scholars Todd Phillips and Matthew Bruckner wrote in the Stanford Law & Policy Review that Tellus is a “bank mimic”—taking customer deposits while evading banking laws.

For a16z—which led Tellus’s $16 million seed round in late 2022—this seemed unproblematic. But warning signs have multiplied since.

In April 2023, Barron’s investigated Tellus’s claim of “banking partnerships” with JPMorgan Chase and Wells Fargo. Both banks told Barron’s the claims were false.

“Wells Fargo does not have the type of relationship with Tellus described on its website,” the bank told Barron’s. JPMorgan Chase stated it has “no banking or custodial relationship” with the company. Tellus quietly removed both banks’ names from its website.

Barron’s investigation prompted Senate Banking Committee Chair Sherrod Brown to write to the FDIC and Tellus. Brown worried Tellus’s marketing misled consumers into thinking their deposits were as safe as FDIC-insured bank accounts.

By July 2023, the FDIC had directed Tellus to revise its marketing to provide clearer information about deposit insurance coverage.

Then in November 2023, Tellus was caught again. According to Barron’s, a TikTok influencer campaign marketed Tellus’s savings accounts as “FDIC-insured” and “held at Capital One.” When Barron’s contacted Capital One, the bank stated it had never established such a partnership with Tellus. The company again removed the offending marketing materials.

Beyond lacking FDIC insurance, Tellus appears to pose additional risks to consumers. CyberNews found 6,729 Tellus user data files completely unprotected—including customer names, emails, addresses, phone numbers, court dates, and tenant documents scanned between 2018 and 2020. Additionally, a whistleblower filed a complaint with the SEC in 2021, alleging Tellus’s consumer products constituted unregistered securities.

As of December 2025, Tellus remains operational. Its app store listing currently advertises a minimum APY of 5.29%. Fine print reads: “Backed by Tellus’s balance sheet; not FDIC-insured.”

LendUp

a16z participated in the seed round in October 2012.

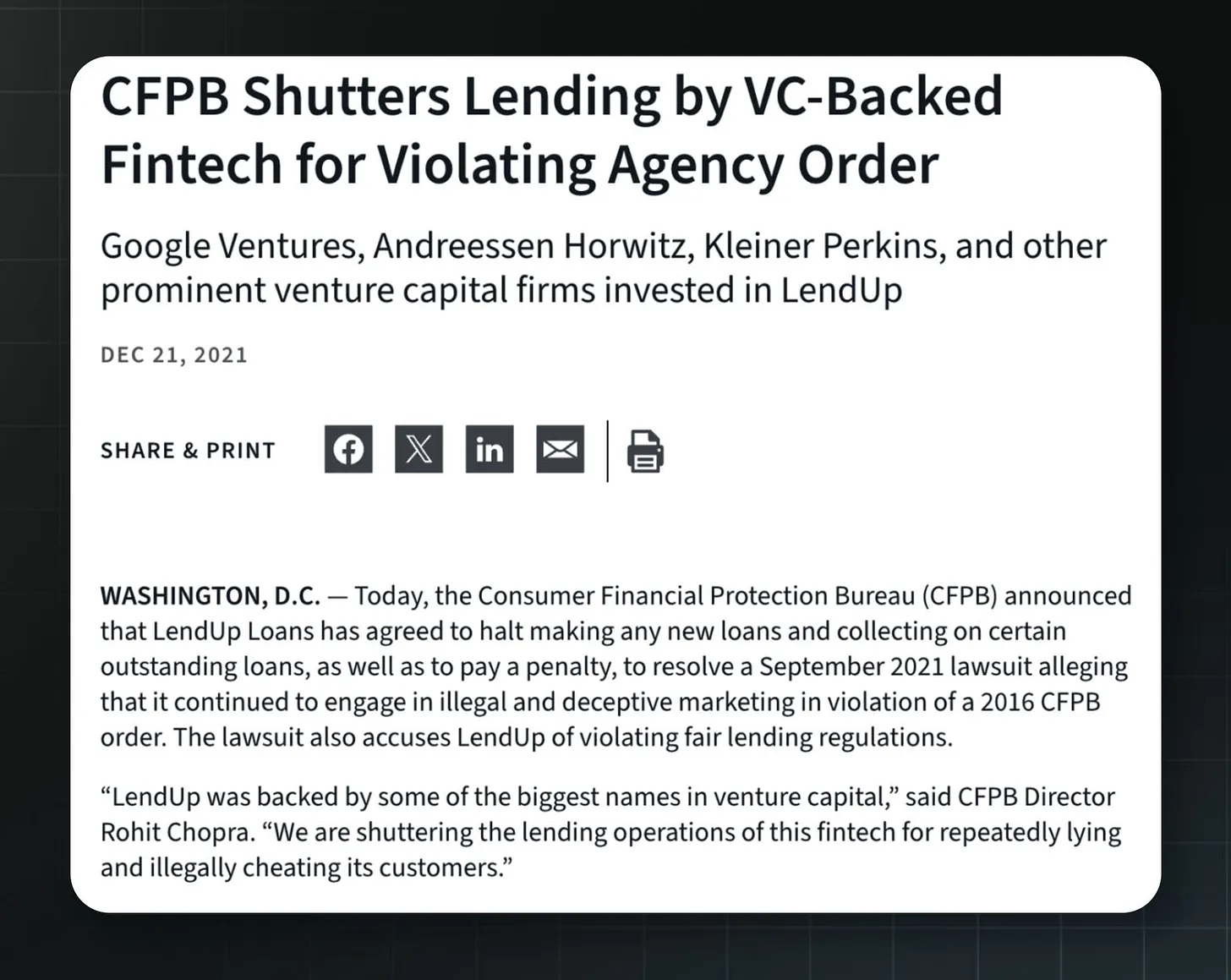

Caption: CFPB announcement shutting down LendUp for repeated fair lending violations. Source: CFPB

LendUp positioned itself as a “socially responsible” alternative to payday lenders. Borrowers could climb the so-called “LendUp Ladder” by repaying loans and completing financial education courses, unlocking lower interest rates and credit-building opportunities.

a16z participated in the investment, alongside Google Ventures, Kleiner Perkins, and PayPal. The company raised a total of $325 million.

Yet shortly after LendUp launched its product in 2012, Time magazine spotted inconsistencies: LendUp charged about $30 for a $200 two-week loan, equivalent to an APR of roughly 400%, nearly identical to typical payday lenders.
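The roughly 400% figure comes from annualizing a $30 fee on a $200 two-week loan. A minimal worked sketch, assuming the simple (non-compounding) APR convention and a 365-day year:

```latex
\begin{aligned}
r_{\text{period}} &= \frac{\$30}{\$200} = 0.15 \\
n &= \frac{365}{14} \approx 26.07 \ \text{two-week periods per year} \\
\text{APR} &= r_{\text{period}} \times n \approx 0.15 \times 26.07 \approx 3.91 \approx 391\%
\end{aligned}
```

Compounding the same 15% fee every two weeks, rather than simply multiplying it, would produce an even higher effective annual rate.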

In 2016, the CFPB found LendUp deceived consumers about its ladder promotion mechanism—offering cheaper loans it couldn’t deliver—and failed to report credit information despite promises. The agency ordered LendUp to pay $3.63 million in fines and restitution—and stop making false claims about its products.

Nonetheless, LendUp repeated its misconduct and kept landing in trouble:

- 2020: The CFPB sued LendUp for violating the Military Lending Act—charging over 1,200 active-duty service members interest rates above the legal cap.

- 2021: The CFPB sued again, alleging LendUp violated its 2016 consent order. Investigators found 140,000 repeat borrowers who climbed the “ladder” were charged rates equal to—or higher than—before. Then-acting CFPB Director Dave Uejio stated bluntly: “For tens of thousands of borrowers, the LendUp Ladder was a lie.”

In December 2021, the CFPB forced LendUp to shut down. Director Rohit Chopra condemned its business model—and its backers: “LendUp received backing from some of the most prominent names in venture capital. We shut down this fintech lender’s loan business because it repeatedly lied and illegally deceived customers.”

In May 2024, the CFPB disbursed nearly $40 million from its victim relief fund to 118,101 consumers harmed by LendUp. The money came from the relief fund because LendUp claimed a limited ability to pay: the company, which had once raised $325 million, paid only $100,000.

According to ProPublica, eight a16z-backed fintech companies have faced CFPB investigations since 2016, and Marc Andreessen’s disdain for the agency is no secret. Meanwhile, a16z has poured massive resources into the crypto-focused super PAC “Fairshake,” which punishes political candidates who support the CFPB.

Legal Issues

a16z’s portfolio also includes companies with serious legal problems—often flouting established rules meant to protect customers.

Zenefits

a16z led a $15 million Series A round in January 2014 and a $66.5 million Series B round in June 2014.

Caption: TechCrunch reporting on Zenefits’ collapse and then-COO David Sacks. Source: TechCrunch

Zenefits offered free HR software to small businesses and profited by acting as their health insurance broker. a16z led its Series A and B rounds; Zenefits was reportedly the firm’s largest investment of 2014.

By 2015, the company had raised $583 million at a $4.5 billion valuation.

The problem? Selling insurance requires state licenses—and Zenefits’ employees typically lacked them.

For example, California requires 52 hours of online training before taking the licensing exam. According to Bloomberg and BuzzFeed, CEO Parker Conrad personally wrote a Google Chrome extension—internally dubbed “the macro”—that kept training timers running while employees did other tasks. Employees then signed affidavits—under penalty of perjury—claiming they’d completed all training.

An investigation in November 2015 found unlicensed brokers sold health insurance in at least seven states. In Washington State, over 80% of policies sold by Zenefits as of August 2015 came from unlicensed employees.

In February 2016, Conrad stepped down as CEO. Regulators responded in state after state: California’s Department of Insurance levied a $7 million fine, the largest license-related penalty in the department’s history. New York added $1.2 million. Texas imposed $550,000. Over a dozen other states reached settlements.

The SEC fined Zenefits and Conrad nearly $1 million for providing investors with “materially false and misleading statements.” In 2018, Conrad surrendered his California insurance license. The company’s valuation halved—and Zenefits fully exited the insurance brokerage business.

David Sacks—then COO—stepped in as CEO to clean up the mess. He declared the company’s culture “inappropriate for a highly regulated company.” Sacks later told Bloomberg that though he’d served as COO for over a year, he “knew about the macro but didn’t understand its significance or Conrad’s involvement until an outside lawyer explained it in January 2016.”

Sacks now serves as White House AI and Crypto Czar—pushing to preempt state AI regulation in favor of a “least burdensome” federal framework—a priority a16z has long lobbied for. Working alongside him is White House Senior AI Policy Advisor Sriram Krishnan—who was an a16z general partner just weeks before his December 2024 appointment.

a16z was an active investor in Zenefits from the start. a16z partner Lars Dalgaard joined the board—and personally pressured Conrad to double the 2014 revenue target from $10 million to $20 million.

Conrad later recalled: “Lars sat there in his very ‘Lars’ way and said, ‘Why are you guys so bush league?’” Dalgaard told him to hire at least 100 more sales reps to hit the target.

Ben Horowitz later explained a16z’s investment philosophy to Bloomberg: “We look for the magnitude of genius—not whether there are problems. In some sense, [Conrad] was the archetype.”

A “least burdensome” federal rule benefits a16z’s portfolio companies. It also fosters the kind of laissez-faire regulatory environment that let companies like Zenefits grow to a $4.5 billion valuation.

Health IQ

a16z led a $34.6 million Series C round in November 2017.

Health IQ promised to use data science to offer lower life insurance rates to health-conscious individuals—like runners, cyclists, and vegetarians. After a16z led its Series C, Health IQ raised over $200 million in equity and debt financing—reaching a $450 million valuation by 2019. It then pivoted from life insurance to Medicare brokerage—and projected $115 million in revenue.

But Health IQ’s business model was flawed: reports indicated the company prepaid sales reps full multi-year commissions before policies were sold and funds collected. The gap between booked revenue and actual cash flow meant the company needed increasing debt to cover bills. By late 2022, its total debt reached $150 million.

In December 2022—immediately after Medicare’s open enrollment period ended—Health IQ laid off 700–1,000 employees without the 60-day advance notice required by California’s WARN Act. This triggered a wave of class-action lawsuits.

A supplier named Quote Velocity sued, alleging CEO Munjal Shah instructed executives in late November 2022 to buy customer leads from suppliers at all costs—because Health IQ might be “out of business” by invoice due dates. The company also faced lawsuits over alleged violations of the Telephone Consumer Protection Act in its telemarketing practices.

In August 2023, Health IQ filed for Chapter 7 bankruptcy liquidation. Its filing listed $256.7 million in liabilities and only $1.3 million in assets. Seventeen breach-of-contract lawsuits remained pending. In an email to investors obtained by Forbes, Shah wrote: “I’m sorry I lost all your money.”

Caption: CEO Munjal Shah became the subject of a Forbes Daily Cover story about a16z’s decision to keep backing the founder. Source: Forbes

Nonetheless, a16z chose to keep backing the founder. By then, Shah was already building his next company. In January 2023, while Health IQ employees were still fighting for unpaid commissions, Shah and co-founder Alex Miller founded Hippocratic AI, an AI healthcare startup.

When Hippocratic AI launched in May 2023, a16z co-led its $50 million seed round. a16z General Partner Julie Yoo explained the investment, noting Shah “was basically living in our office” while conceptualizing his next venture.

uBiome

a16z participated in a $4.5 million Series A round in August 2014.

uBiome sold at-home microbiome testing kits—mail in stool samples, get gut bacteria reports. The basic kit cost $89. By 2018, the company had raised $105 million—valued near $600 million.

But ultimately, the $89 consumer kit couldn’t generate revenue fast enough for VCs. So uBiome developed a “clinical” version billed to insurers—costing up to $2,970 per test. Prosecutors allege the company systematically defrauded insurers to make financial statements look good.

In April 2019, the FBI raided uBiome’s headquarters. In September 2019, the company filed for bankruptcy.

In March 2021, federal prosecutors indicted co-founders Jessica Richman and Zachary Apte on 47 counts—including securities fraud, healthcare fraud, and money laundering. Prosecutors said the company repeatedly billed patients for the same test without consent, pressured doctors to approve unnecessary tests, and submitted backdated and forged medical records when insurers asked questions.

The indictment states uBiome submitted over $300 million in false claims from 2015–2019—insurers paid over $35 million. The SEC filed parallel charges, alleging uBiome defrauded investors of $60 million—while founders cashed out $12 million from personal stock sales.

The FBI’s statement was blunt: “This indictment demonstrates that the highly regulated healthcare industry is not suited for ‘move fast and break things.’”

Richman and Apte never stood trial. They married in 2019, fled to Germany in 2020—and remain fugitives.

BitClout / DeSo

a16z and other investors participated in a $200 million DeSo token sale in September 2021.

BitClout was a16z’s second bet on founder Nader Al-Najjar.

The first was Basis, a 2017 algorithmic stablecoin that raised $133 million from a16z, Google Ventures, Bain Capital, and others.

It shut down in 2018, citing “regulatory constraints.”

Al-Najjar said he refunded most of the funds after deducting $10 million in fees, which he claimed covered legal costs.

Within months of BitClout’s launch, Al-Najjar announced it had always been just a “beta” and pivoted to a new project called DeSo (Decentralized Social), taking the funds with him.

In July 2024, the SEC and DOJ charged Al-Najjar with fraud.

According to the SEC complaint, he raised $257 million selling BitClout tokens while falsely telling investors the proceeds would not be used to pay himself or employees.

The SEC alleges he spent over $7 million on personal expenses, including a six-bedroom Beverly Hills mansion, and gave at least $1 million each to his wife and mother.

The SEC also cited Al-Najjar’s internal communications: he allegedly told an investor, “Appearing ‘pseudo’ decentralized usually confuses regulators and stops them from looking at you.”

According to Fortune, a16z was identified as “Investor 1” in the DOJ’s charges against Al-Najjar, making it both an alleged fraud victim and a prosecution witness against the founder it had backed twice.

DESO tokens have fallen over 97% from their all-time high.

Al-Najjar had faced up to 20 years in prison for wire fraud.

In February 2025, shortly after the new administration took office, the DOJ dropped the charges.

Why This Matters

Despite all these issues, Andreessen Horowitz remains steadfast in supporting its portfolio companies.

“I don’t think they’re reckless or villains,” Andreessen wrote in 2023 about AI developers. “They’re heroes—every single one. My firm and I are thrilled to support them as much as possible—and we’ll stand 100% behind them and their work.”

So why is a16z’s role in backing these companies so significant? Because a16z isn’t content merely investing in tech companies. It’s also trying to play a major role in shaping U.S. AI and tech policy—and so far, it’s proving effective.

When President Trump signed an executive order in December 2025 aiming to weaken state-level AI laws, Andreessen looked triumphant.

“It’s time to win AI,” he wrote on X.

Behind the scenes, a16z wielded enormous influence to support these new rules. The executive order was a win for AI insiders—who had twice failed to convince Congress to pass bills banning state-level AI legislation, defeated each time by bipartisan coalitions. It remains unclear whether the order will hold up in court. But all signs indicate a16z and its allies will keep shaping the AI regulatory landscape:

In August 2025, a16z launched a $100 million super PAC called “Leading The Future”—whose stance aligns explicitly with White House AI Czar David Sacks. The group is widely expected to run attack ads against candidates supporting AI regulation.

a16z also supports the “American Innovators Network”—which lobbies across multiple states against AI regulation.

Marc Andreessen serves on Meta’s board, while Meta pours tens of millions into its own pro-AI super PACs: “Mobilizing Economic Transformation Across California” and the “American Technology Excellence Project.”

White House Senior AI Policy Advisor Sriram Krishnan—who was appointed in December 2024—was an a16z general partner just weeks earlier. He works closely with Trump’s AI and crypto Czar David Sacks—trying to deliver on a16z’s lobbying goals: preempting state-level AI regulation.

Two other former a16z partners hold roles focused on shrinking government: Scott Kupor (Office of Personnel Management) and Jamie Sullivan (Department of Government Efficiency).

What’s the ultimate goal of these efforts? The firm appears driven by both ideology and profit.

If a16z controls AI regulation, its multibillion-dollar portfolio stands to gain immensely.

Beyond massive financial incentives, Andreessen spelled out his ideological goals in his October 2023 “Techno-Optimist Manifesto.” Andreessen’s manifesto passionately advocates accelerating technological development. It declares “we are apex predators,” “we are not victims—we are conquerors.” It lists numerous “enemies,” including:

- Risk management

- Technology ethics

- Social responsibility

- The precautionary principle

- Existential risk

- Stakeholder capitalism

- The “know-it-all credentialed expert worldview”

The manifesto takes an extreme anti-regulatory stance, arguing that because AI development saves lives, slowing it in any way constitutes “a form of murder.” This stance conveniently aligns with Andreessen’s and a16z’s financial interests.

Public opinion polls show that Americans disagree and overwhelmingly support AI safety and data privacy regulation, even if it means slower AI advancement. Pew Research found that 58% of Americans are more concerned that government regulation of AI will not go far enough; only 21% are more concerned that it will go too far.

Though a16z claims it supports a narrow set of AI regulations, its actual proposals are notably thin. Given Andreessen’s condemnation of AI regulation as “the cornerstone of a new totalitarianism,” this is unsurprising. So far, the firm’s efforts have focused on blocking regulation—not crafting it.

AI differs fundamentally from previous technologies. A gambling app exploiting sweepstakes loopholes may harm its users. A fintech startup with sloppy recordkeeping may lose customer deposits. These are serious harms. But the advanced AI systems emerging over the next decade are a different matter entirely. As capabilities rapidly increase and autonomy grows, errors will become harder—and possibly impossible—to reverse.

a16z is betting it can set the rules before society grasps the stakes. It’s spending tens of millions on lobbying and super PACs. It’s placing allies inside government. It’s backing AI companies eager to “move fast and break things”—with little regard for the damage they cause.

The social and legal decisions being made now—about safety requirements, liability frameworks, deployment standards, enforcement mechanisms—will profoundly reshape AI’s trajectory. The public has neither a seat at the table nor expensive lobbyists on retainer.

Instead, these decisions are being shaped by a single firm that treats “trust and safety” as enemies—backs companies built on deception and consumer harm—and rewards failure by funding the same founders again.

Research & Writing Support: Cody Fenwick, Jack Kelly, Zachary Jones

Editing Support: Nicole Guenther, Rachel Johnson

Factual Verification Support: Jade-Ruyu Yan, Zachary Jones

Design Support: Daniyal Rafique

Web Support: Facundo Kalil Franzone
