People need an evil capitalist, so AI invented a food delivery rumor.

TechFlow Selected


Fake news spreads because it looks too much like what you already believe in your heart.

Written by: Curry, TechFlow

Something quite surreal happened last week.

The top executives of two American food delivery giants, one worth $2.7 billion and the other running the world's largest ride-hailing platform, were both up in the early hours of Saturday writing long online posts to clear their names.

It all started with an anonymous post on Reddit.

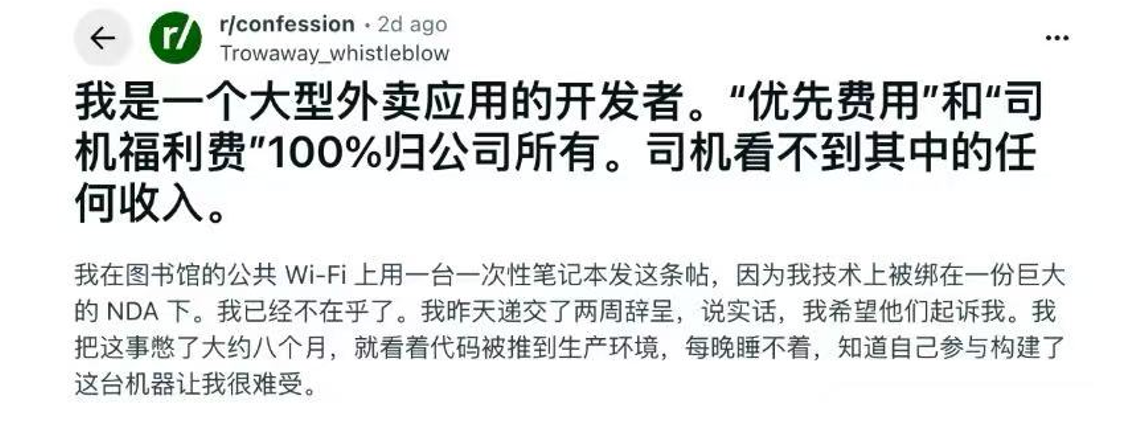

The poster claimed to be a backend engineer at a major food delivery platform who had gone to a library while drunk to spill the beans over its public WiFi.

The gist of the content was:

The company analyzes drivers' personal situations and assigns each one a "desperation score": the more financially desperate the driver, the fewer good orders they get. The so-called priority delivery for food orders is fake; regular orders are simply delayed. Various "driver welfare fees" never go to drivers at all and are instead used to lobby Congress against unions...

The post ended in a very convincing manner: I'm drunk, I'm angry, so I'm blowing the whistle.

It perfectly cast the poster as a whistleblower exposing how "big companies use algorithms to exploit drivers."

Three days after going up, the post had garnered 87,000 upvotes and made Reddit's front page. Someone also posted a screenshot of it on X, where it received 36 million impressions.

Keep in mind, the U.S. food delivery market has only a few major players. The post didn't name names, but everyone was guessing who it was.

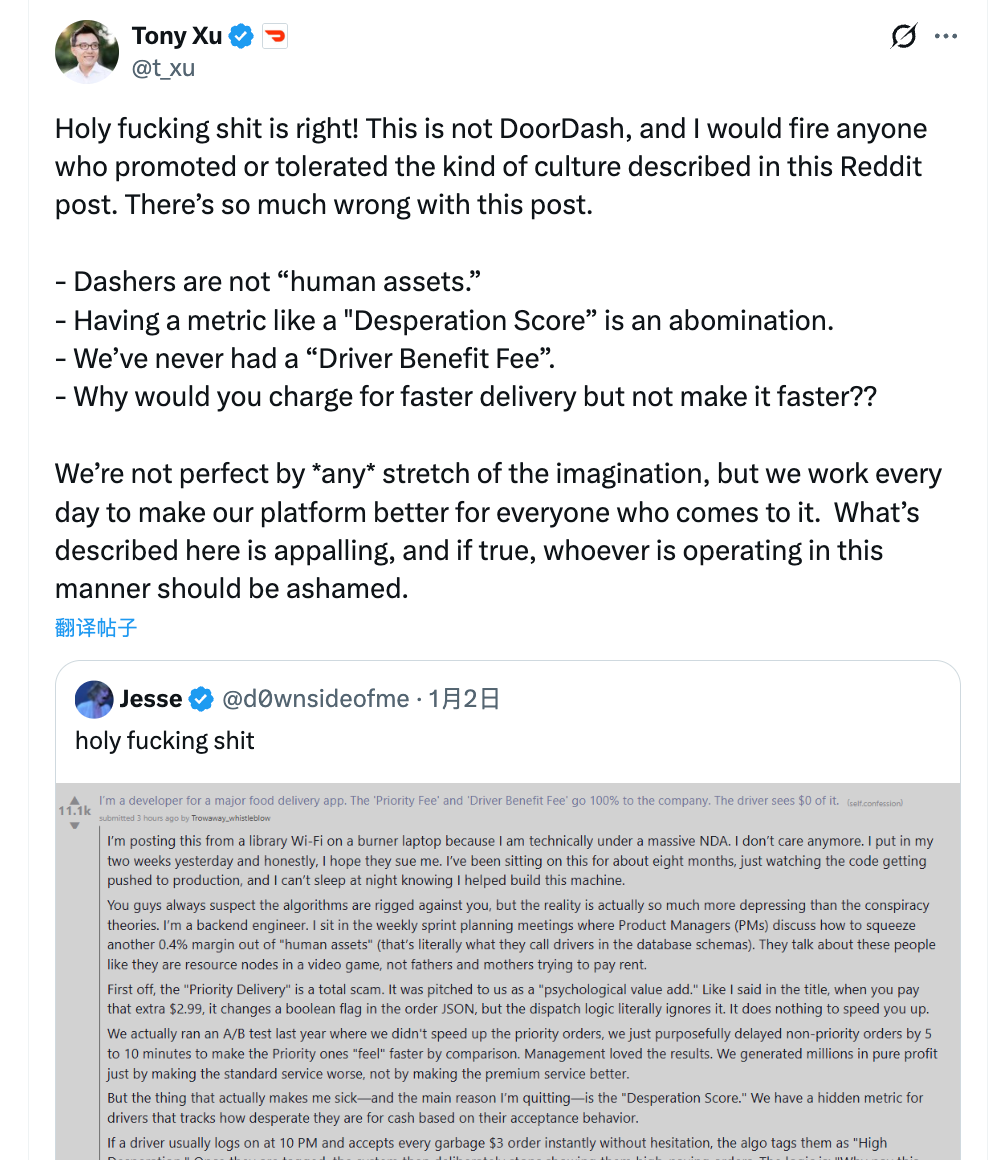

DoorDash CEO Tony Xu couldn't sit still any longer. He tweeted that DoorDash did no such thing and that he would fire anyone who tried. Uber's COO also jumped in: "Don't believe everything you see online."

DoorDash even published a five-point statement on its official website rebutting the allegations one by one. Two companies with a combined market cap of over $80 billion had been forced into overnight damage control by a single anonymous post.

Then the post was proven to be AI-generated.

It was exposed by Casey Newton, a reporter at the tech newsletter Platformer.

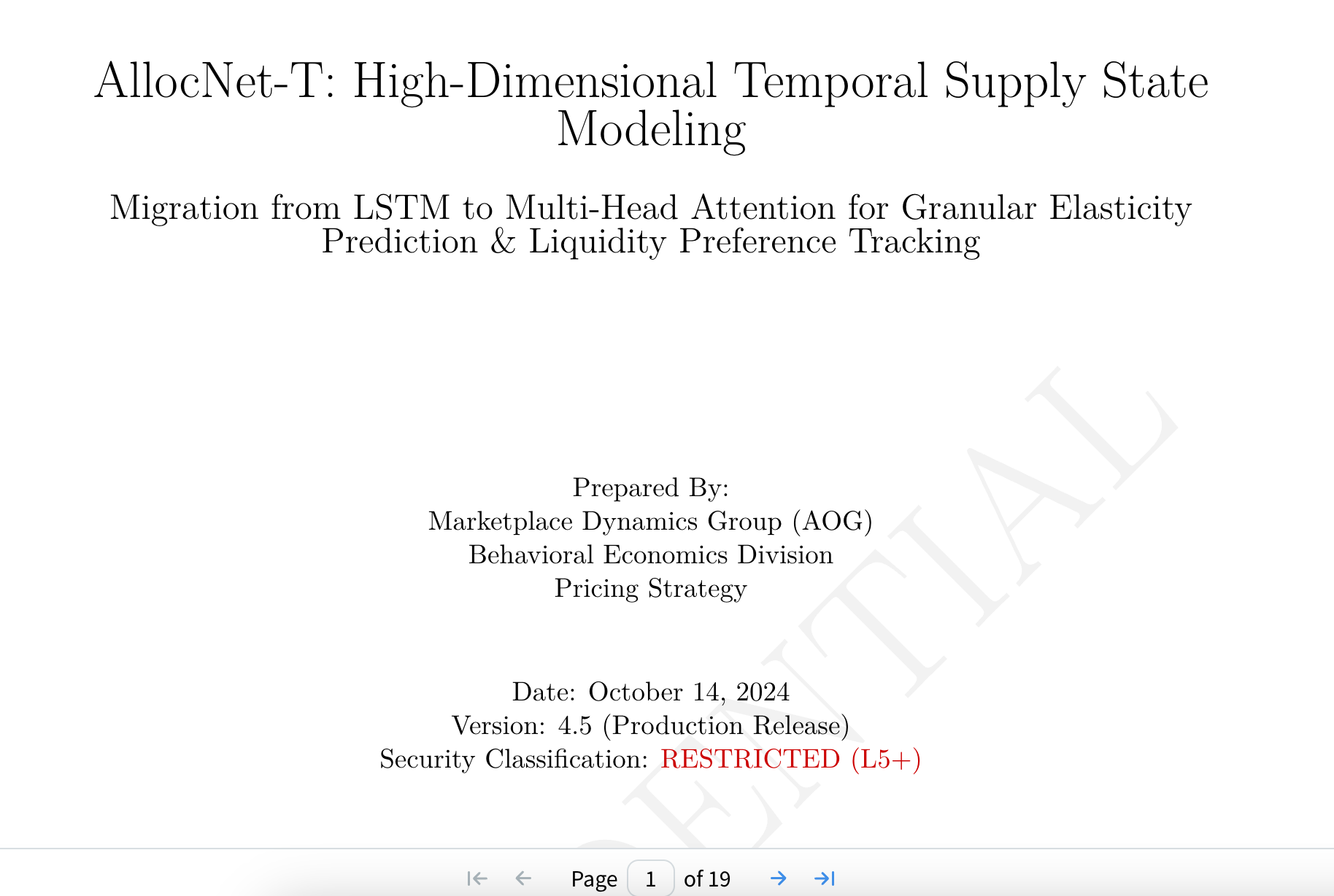

He contacted the leaker, who immediately sent over an 18-page "internal technical document" with a very academic title: "AllocNet-T: High-Dimensional Temporal Supply State Modeling."

Each page bore a "Confidential" watermark, and the document was attributed to Uber's "Market Dynamics Group · Behavioral Economics Department."

It explained how the "desperation score" model described in the Reddit post actually calculates its scores, complete with architecture diagrams, mathematical formulas, and data flow charts...

(Screenshot of the fake paper: at first glance, it looks convincingly real)

Newton said the document fooled him at first. After all, who would go to the trouble of forging an 18-page technical document just to bait a reporter?

But times have changed.

Today, AI can generate an 18-page document like this in just a few minutes.

The leaker also sent Newton a blurry photo of their Uber employee badge as proof that they really worked there.

Out of curiosity, Newton ran the badge photo through Google Gemini to check it. Gemini said the image was AI-generated.

Gemini could tell because Google embeds a watermark called SynthID in content generated by its own AI. The watermark is invisible to the naked eye but detectable by machines.

Even more absurdly, the employee ID card bore the "Uber Eats" logo.

An Uber spokesperson confirmed that the company has no employee badges branded "Uber Eats" at all; every badge just says Uber.

Clearly, this fake "whistleblower" didn't even know who they were trying to smear.

When Newton asked for a LinkedIn profile or other social media accounts for further verification, the leaker deleted their account and vanished.

To be clear, what we want to discuss isn't the fact that AI can fabricate things; that's nothing new.

The more important question is: why were tens of millions of people willing to believe an anonymous leak?

In 2020, DoorDash was sued for using tips to offset drivers' base pay and settled for $16.75 million. Uber once had a tool called Greyball specifically to evade regulators. These are real events.

So a subconscious consensus forms easily: platforms are not the good guys, and that judgment can't be wrong.

So when someone says "food delivery platform algorithms exploit drivers," people's first reaction isn't "Is this true?" but "As expected."

Fake news can spread because it looks like something people already believe in their hearts.

What AI does is reduce the cost of creating that resemblance to almost zero.

There's another detail in this story.

The scam was uncovered thanks to Google's watermark detection. Google develops AI, and Google also develops AI detection tools.

But SynthID can only detect content from Google's own AI. This time the fake was caught because the forger happened to use Gemini. With a different model, the investigators might not have been so lucky.

So cracking this case was less a technical victory than a lucky break.

The other side made a rookie mistake.

An earlier Reuters survey found that 59% of people worry they can't tell what is real and what is fake online.

The executives' clarifying tweets were seen by hundreds of thousands of people, but how many of them are convinced it's all just PR, just lies? The fake post has been deleted, yet people are still bashing the food delivery platforms in the comments.

A lie has already traveled halfway around the world while the truth is still tying its shoes.

Now think about it: what if this post had targeted China's delivery giants Meituan or Ele.me instead of Uber?

"Desperation scores," "algorithms that exploit delivery riders," "welfare fees that never reach the riders": when you see accusations like these, isn't your first reaction emotional agreement?

You remember the article "Delivery Riders, Trapped in the System," right?

So the issue isn't whether AI can fabricate things. The issue is, when a lie looks like something people already believe in their hearts, does truth even matter anymore?

What that person who deleted their account and ran away was after, we don't know.

We only know they found an emotional outlet and poured a bucket of AI-generated fuel into it.

The fire started. As for whether it's burning real or fake wood, who cares?

In fairy tales, Pinocchio's nose grows when he lies.

AI doesn't have a nose.
