Vitalik Interview: Exploring Ethereum's 2025 Vision, Innovative Integration of PoS, L2, Cryptography, and AI

This interview was conducted in Chinese, and Vitalik demonstrated very fluent Chinese language skills.

Author: DappLearning

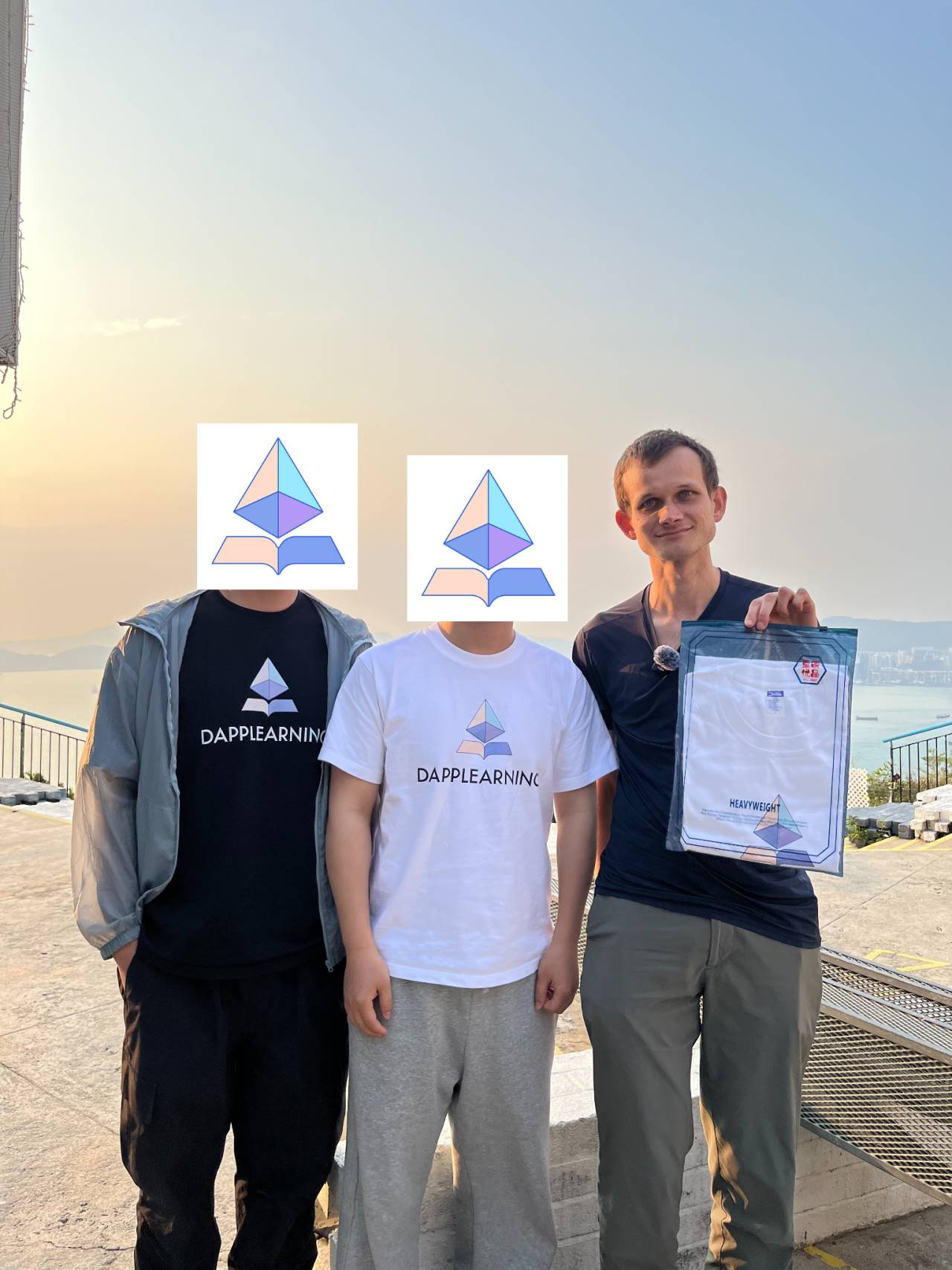

On April 7, 2025, Vitalik and Xiao Wei appeared together at the Pop-X HK Research House event, co-hosted by DappLearning, ETHDimsum, Panta Rhei, and UETH.

During a break, Yan, founder of the DappLearning community, interviewed Vitalik. The conversation covered ETH POS, Layer2, cryptography, and AI.

Below is the full transcript (edited for clarity):

01 Thoughts on the POS Upgrade

Yan:

Hello Vitalik, I'm Yan from the DappLearning community. It's an honor to interview you here.

I've been following Ethereum since 2017. Back in 2018 and 2019, there was intense debate around POW versus POS—a discussion that may continue for some time.

Now, ETH’s POS has been running stably for over four years, with hundreds of thousands of validators in the consensus network. However, ETH’s exchange rate against BTC has continued to decline. There are both positive aspects and challenges here.

Looking at this moment in time, what are your thoughts on Ethereum's POS upgrade?

Vitalik:

I think the prices of BTC and ETH have absolutely nothing to do with POW or POS.

The BTC and ETH communities have very different perspectives, and they approach things in fundamentally different ways.

Regarding ETH’s price, one issue I see is that ETH has many possible futures, and in some of them Ethereum hosts many successful applications that don’t bring proportional value to ETH.

This is a concern shared by many in the community, but it's actually quite normal. Take Google, for example—they build many products and do many interesting things, yet over 90% of their revenue still comes from Search.

Ethereum’s ecosystem is similar. Some applications pay high transaction fees and consume a lot of ETH, while others may be successful but contribute relatively little value to ETH.

This is something we need to reflect on and improve—we need to support more applications that create long-term value for ETH holders.

So I believe ETH’s future success will emerge in these areas. I don’t think it's strongly tied to improvements in consensus algorithms.

02 PBS Architecture and Centralization Concerns

Yan:

Yes, the prosperity of the ETH ecosystem is a key reason why developers like us are motivated to build on it.

What are your thoughts on ETH2.0’s PBS (Proposer-Builder Separation) architecture? It seems promising: eventually, people could use a smartphone as a light node to verify ZK proofs, and anyone could become a validator by staking just 1 ether.

But Builders might become more centralized. They’d need to handle MEV resistance and generate ZK proofs. With Based rollups, Builders would take on even more responsibilities, such as acting as Sequencers.

Wouldn't this make Builders too centralized? Validators may remain decentralized, but if one part of the chain becomes compromised, it could affect the entire system. How can we address censorship resistance in this context?

Vitalik:

Yes, this is an important philosophical question.

In the early days of Bitcoin and Ethereum, there was an implicit assumption:

Block construction and block verification are symmetric operations.

If you're building a block with 100 transactions, your node must process all 100 transactions’ worth of gas. When you broadcast that block, every other node must do the same work. So if we set the gas limit so that any laptop, MacBook, or small server can build blocks, then similarly configured nodes must also be able to verify them.

That was the old paradigm. Now we have ZK, DAS, stateless verification, and other new technologies.

Previously, block construction and verification had to be symmetric—but now they can be asymmetric. Building a block may become very computationally intensive, while verifying it can become extremely lightweight.

Take stateless clients as an example: If we adopt this technology and increase the gas limit tenfold, the computational power required to build a block becomes massive—ordinary computers may no longer suffice. You might need a high-end Mac Studio or powerful server.

But verification costs become much lower, because verification requires no storage—only bandwidth and CPU. Add ZK proofs, and even CPU costs for verification can be eliminated. With DAS, verification costs become extremely low. So while block construction becomes more expensive, verification becomes cheaper.

Is this better than the current situation?

This is complex. Here's how I think about it: Suppose Ethereum has some "super nodes"—nodes with high computational power needed for heavy lifting.

How do we prevent them from misbehaving? There are several types of attacks to consider:

First: A 51% attack.

Second: Censorship attacks. If they refuse certain user transactions, how do we mitigate this risk?

Third: MEV-related manipulation. How can we reduce these risks?

For 51% attacks, since verification is done by Attesters, these attester nodes only need to verify DAS, ZK proofs, and stateless client data. Verification is cheap, so becoming a consensus node remains accessible.

Suppose 90% of block-building is done by you, 5% by someone else, and 5% by others. If you refuse certain transactions, it’s not catastrophic—because you can’t interfere with consensus itself.

You can't launch a 51% attack, so your worst action is censoring specific users’ transactions. But users can simply wait ten or twenty blocks until another builder includes their transaction. That’s point one.
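Editor's note: the waiting-time argument above can be made concrete with a small calculation. This is a minimal sketch, assuming each block's builder is drawn independently and that the non-censoring builders together produce 10% of blocks (the 5% + 5% from the example); real builder selection is not this simple, and the function names are illustrative only.

```python
def inclusion_probability(honest_share: float, blocks: int) -> float:
    """Chance that at least one of `blocks` consecutive blocks is built by a
    non-censoring builder, assuming independent draws per block."""
    if not 0.0 <= honest_share <= 1.0:
        raise ValueError("honest_share must be between 0 and 1")
    if blocks < 0:
        raise ValueError("blocks must be non-negative")
    return 1.0 - (1.0 - honest_share) ** blocks


def expected_wait_blocks(honest_share: float) -> float:
    """Mean number of blocks until the first non-censoring builder (geometric distribution)."""
    if not 0.0 < honest_share <= 1.0:
        raise ValueError("honest_share must be in (0, 1]")
    return 1.0 / honest_share


if __name__ == "__main__":
    # Assumption: 10% of blocks come from builders who do not censor.
    for n in (1, 10, 20, 50):
        print(f"{n:>2} blocks: {inclusion_probability(0.10, n):.3f}")
    print("expected wait:", expected_wait_blocks(0.10), "blocks")
```

Under these assumptions a censored transaction has roughly a two-in-three chance of landing within ten blocks and close to nine-in-ten within twenty, which is the sense in which censorship by a dominant builder delays a transaction rather than blocking it.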

Point two: We have the concept of FOCIL (Fork-Choice enforced Inclusion Lists).

FOCIL separates the role of “transaction selection” from “execution.” This allows transaction inclusion decisions to be more decentralized. Small nodes gain an independent ability to include transactions in future blocks. Large nodes, in contrast, have limited influence [1].

This model is more complex than the old ideal where every node was a personal laptop. But look at Bitcoin—it already uses a hybrid architecture, with miners operating large data centers.

In POS, we’re doing something similar: some nodes require more computation and resources, but their powers are limited. Other nodes remain highly decentralized and distributed, ensuring network security and decentralization. This approach is more complex, which presents its own challenges.

Yan:

Very insightful. Centralization isn’t inherently bad—as long as we can constrain malicious behavior.

Vitalik:

Exactly.

03 Issues Between Layer1 and Layer2, and Future Directions

Yan:

Thanks for clearing up a long-standing confusion. Let’s move to the next topic. As someone who’s witnessed Ethereum’s journey, I see that Layer2 has become very successful. TPS is no longer a bottleneck—unlike during the ICO era when networks were constantly congested.

I personally find L2s quite usable now. However, the issue of liquidity fragmentation across L2s has prompted various proposed solutions.

What are your views on the relationship between Layer1 and Layer2? Is Ethereum mainnet currently too passive, too decentralized, offering no constraints on L2s? Should L1 establish rules or revenue-sharing models with L2s? Or adopt approaches like Based Rollup? Justin Drake recently proposed this on Bankless, and I largely agree. What’s your view—and if such solutions exist, when might they launch?

Vitalik:

I think our current Layer2 landscape faces a few issues.

First, progress on security isn’t fast enough. I’ve been pushing all L2s to upgrade to Stage 1, and hope they reach Stage 2 this year. I’m actively encouraging teams and supporting L2BEAT’s transparency efforts in this area.

Second, interoperability between L2s—cross-chain transactions and communication—needs improvement. If two L2s belong to the same ecosystem, interacting should be simpler, faster, and cheaper.

Last year we began working on this—the Open Intents Framework and Chain-specific addresses—mostly UX-focused improvements.

I actually think 80% of the L2 cross-chain problem is a UX issue.

Solving UX can be painful, but with the right direction, complex problems become manageable. That’s where we’re focused.

Some things require deeper innovation. For example, Optimistic Rollup withdrawals currently take a week. If you hold a token on Optimism or Arbitrum and want to bridge it to L1 or another L2, you must wait seven days.

You can pay a market maker to front the funds and wait out the week on your behalf. Regular users can bridge between L2s through intent-based systems such as the Open Intents Framework or Across Protocol, which is fine for small transfers. But for large amounts, market makers face liquidity limits and charge higher fees. Last week I published an article [2] supporting a 2-of-3 validation model that combines OP + ZK + TEE.

This approach satisfies three goals simultaneously:

First: Fully trustless. No Security Council needed. TEE acts only as a supplementary component—not fully trusted.

Second: Enables early adoption of ZK technology, though ZK is still immature, so we can’t rely solely on it yet.

Third: Reduces withdrawal time from one week to one hour.
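Editor's note: the 2-of-3 idea can be sketched as a simple vote over proposed state roots. This is a minimal illustration, assuming each of the three systems (optimistic, ZK, TEE) either reports a state root or reports nothing yet; the function name and the string roots are hypothetical, and the actual design in [2] has more detail about timing and fallbacks.

```python
from collections import Counter
from typing import Optional


def accepted_state_root(
    op_root: Optional[str],
    zk_root: Optional[str],
    tee_root: Optional[str],
) -> Optional[str]:
    """Return the state root backed by at least two of the three systems.

    A value of None for an input means that system has not reported yet.
    Returns None when no root has two supporters.
    """
    votes = Counter(r for r in (op_root, zk_root, tee_root) if r is not None)
    if not votes:
        return None
    root, count = votes.most_common(1)[0]
    return root if count >= 2 else None


if __name__ == "__main__":
    # ZK and TEE agree while the optimistic window is still open.
    print(accepted_state_root(None, "0xabc", "0xabc"))
    # A single faulty system cannot finalize a root on its own.
    print(accepted_state_root(None, "0xbad", None))
```

The point of the structure is that no single component, including the TEE, is trusted by itself: a bug or compromise in one system is outvoted by the other two.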

Imagine users leveraging the Open Intents Framework—market makers’ liquidity costs drop by 168x, as rebalancing wait times shrink from one week to one hour. Long-term, we aim to reduce withdrawal time from one hour down to 12 seconds (current block time), or even 4 seconds with SSF.
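Editor's note: the 168x figure is the ratio of one week to one hour. The sketch below works it out, along with the longer-term targets mentioned above. It assumes, as the passage implies, that a market maker's liquidity cost scales linearly with how long capital is tied up before rebalancing; the function name is illustrative.

```python
SECONDS_PER_HOUR = 60 * 60
SECONDS_PER_WEEK = 7 * 24 * SECONDS_PER_HOUR


def wait_reduction_factor(old_seconds: float, new_seconds: float) -> float:
    """How many times shorter the new wait is than the old one."""
    if old_seconds <= 0 or new_seconds <= 0:
        raise ValueError("durations must be positive")
    return old_seconds / new_seconds


if __name__ == "__main__":
    print("week -> hour:", wait_reduction_factor(SECONDS_PER_WEEK, SECONDS_PER_HOUR))
    print("hour -> 12 s:", wait_reduction_factor(SECONDS_PER_HOUR, 12))
    print("hour ->  4 s:", wait_reduction_factor(SECONDS_PER_HOUR, 4))
```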

We’re also exploring zk-SNARK aggregation to parallelize proof generation and reduce latency. Users using direct ZK methods won’t need intents, but intent-based paths offer extremely low cost—this is part of broader interoperability.

Regarding L1’s role: In early L2 roadmaps, many thought we could mirror Bitcoin’s path—L1 used minimally, mainly for proofs, while L2 handles everything else.

But we realized if L1 plays no role, it’s dangerous for ETH.

As discussed earlier, one major fear is: Ethereum’s applications succeed, but ETH does not.

If ETH fails to capture value, the community lacks funding to support future innovations. Without L1 involvement, user experience and architecture are controlled entirely by L2s and apps—no one represents ETH. So assigning more roles to L1 in certain applications benefits ETH.

Next, we must clarify: What should L1 do? What should L2 do?

In February, I wrote an article [3] arguing that in an L2-centric world, L1 still has critical roles: L2s post proofs to L1; if an L2 fails, users rely on L1 to migrate; Key Store Wallets operate via L1; Oracle data can be stored on L1—many mechanisms depend on L1.

High-value applications like DeFi are often better suited for L1. One reason: their time horizon is long—users commit assets for one, two, or three years.

This is especially clear in prediction markets—e.g., “What will happen in 2028?”

A problem arises if an L2’s governance fails. In theory, users can exit—to L1 or another L2. But if assets are locked in long-term smart contracts within that L2, users cannot withdraw. Thus, many theoretically secure DeFi apps are practically insecure.

For these reasons, some applications should remain on L1—prompting renewed focus on L1 scalability.

We now have a roadmap: by 2026, four to five upgrades will enhance L1 scalability.

First: Delayed Execution (separating block validation from execution)—validate blocks in one slot, execute in the next. This increases maximum execution time from 200ms to 3–6 seconds, allowing more processing time [4].

Second: Block-Level Access List. Each block declares which account states and storage it accesses, similar to stateless validation but without witnesses. This enables parallel EVM execution and I/O, simplifying parallelization.

Third: Multidimensional Gas Pricing [5]—setting per-dimension caps on block capacity, crucial for security.

Fourth: Historical Data Management (EIP-4444)—nodes no longer store all historical data permanently. Instead, each node stores ~1%, with data distributed peer-to-peer—your node stores some, mine stores others—enabling more decentralized storage.

If we combine these four upgrades, we believe L1 gas limits could increase tenfold. Applications will have greater incentive to rely on L1, benefiting both L1 and ETH.
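Editor's note: to show why declared access lists make parallel execution easier, here is a minimal scheduling sketch. It assumes each transaction comes with the set of state keys it reads and writes, and it groups transactions into batches that can run in parallel while keeping conflicting transactions in their original order. The `Tx` type, the key names, and the batching rule are illustrative assumptions, not the format of the actual proposal.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class Tx:
    name: str
    reads: FrozenSet[str]
    writes: FrozenSet[str]


def conflicts(a: Tx, b: Tx) -> bool:
    """Two transactions conflict if either one writes a key the other touches."""
    return bool(a.writes & (b.reads | b.writes)) or bool(b.writes & a.reads)


def schedule(txs: List[Tx]) -> List[List[Tx]]:
    """Assign each tx to the first batch after every earlier tx it conflicts with.

    Transactions inside one batch touch disjoint state and can run in parallel;
    batches run one after another, so block order is preserved where it matters.
    """
    batches: List[List[Tx]] = []
    for tx in txs:
        target = 0
        for i, batch in enumerate(batches):
            if any(conflicts(tx, other) for other in batch):
                target = i + 1
        if target == len(batches):
            batches.append([])
        batches[target].append(tx)
    return batches


if __name__ == "__main__":
    block = [
        Tx("t1", frozenset(), frozenset({"A"})),
        Tx("t2", frozenset(), frozenset({"B"})),
        Tx("t3", frozenset({"A"}), frozenset({"C"})),
        Tx("t4", frozenset({"B", "C"}), frozenset()),
    ]
    for i, batch in enumerate(schedule(block)):
        print(i, [tx.name for tx in batch])
```

Without declared accesses, a node only learns about these conflicts by executing the transactions; with them, the grouping can be computed up front and the needed state can be prefetched.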

Yan:

Great. Next question: Will we see the Pectra upgrade this month?

Vitalik:

We’re aiming for two upgrades: Pectra likely by end of this month, followed by Fusaka in Q3 or Q4.

Yan:

Wow, so soon?

Vitalik:

Hopefully.

Yan:

My next question relates to this. As someone who’s watched Ethereum grow, I know we maintain multiple clients (consensus and execution)—five or six—for security, requiring extensive coordination, leading to long development cycles.

It’s a trade-off: slower than other L1s, but more secure.

Are there ways to shorten upgrade intervals—avoid waiting 18 months per upgrade? I’ve seen you propose some ideas—could you elaborate?

Vitalik:

Yes. One solution: improve coordination efficiency. We now have more people moving between teams, improving inter-team communication.

If a client team identifies an issue, they can share it with researchers. Tomasz joining EF as one of our new Executive Directors helps: he comes from a client team and now works within EF, enabling better coordination. That’s point one.

Second: Be stricter with client teams. Currently, we wait for all five teams to be ready before launching a hard fork. Now we’re considering starting upgrades once four teams are ready—no need to wait for the slowest, which incentivizes faster progress.

04 Views on Cryptography and AI

Yan:

Healthy competition is good. Every upgrade is exciting—but let’s not keep people waiting too long.

Now, a more open-ended question on cryptography.

When our community launched in 2021, we brought together developers from major exchanges and venture researchers to discuss DeFi. 2021 was truly a golden age—everyone learning, designing, and participating in DeFi.

Since then, ZK topics—Groth16, Plonk, Halo2—have become increasingly difficult for developers to keep up with, as the field advances rapidly.

Also, ZKVM is advancing quickly, making ZKEVM less dominant. As ZKVM matures, developers may no longer need to understand ZK internals deeply.

What advice or thoughts do you have on this?

Vitalik:

I believe the best path for ZK ecosystems is enabling most ZK developers to work with high-level languages (HLL). They should write app logic in an HLL, while proof system researchers continue optimizing the underlying algorithms. Layering is essential: developers shouldn’t need to understand the lower layers.

Currently, Circom and Groth16 have strong ecosystems, but this imposes significant limitations. Groth16 has notable drawbacks—each app needs its own trusted setup, and efficiency is suboptimal. We should invest more in helping modern HLLs succeed.

ZK RISC-V is also promising. RISC-V can serve as an HLL platform—many applications, including EVM and others, can run on it [6].

Yan:

Great—then developers just need to learn Rust. Last year at Devcon Bangkok, I was impressed by advances in applied cryptography.

What are your thoughts on combining ZKP with MPC and FHE? Any advice for developers?

Vitalik:

Very interesting. FHE looks promising, but I have concerns—both MPC and FHE typically require a committee—say, seven or more nodes. If 51% or even 33% are compromised, the system fails. It creates something like a Security Council—actually worse. For example, a Stage 1 L2 only fails if 75% of its council is compromised [7]. That’s point one.

Second: Security Council members—if reliable—often keep keys offline in cold wallets. But in most MPC/FHE systems, committee members must stay online to keep the system running, so they’re likely hosted on VPSs or servers—easier targets for attackers.

This worries me. These approaches have benefits, but aren’t perfect.
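Editor's note: the comparison of failure thresholds above can be put in numbers. The sketch below computes the smallest number of compromised members that reaches a given share of a committee, using the seven-member committee and the 33%, 51%, and 75% figures from the answer. Treating "fails at X%" as "fails once at least X% are compromised" is an assumption, and the function name is illustrative.

```python
from fractions import Fraction
from math import ceil


def min_compromised(members: int, threshold: Fraction) -> int:
    """Smallest number of members whose share of the committee is at least `threshold`."""
    if members <= 0:
        raise ValueError("members must be positive")
    if not 0 < threshold <= 1:
        raise ValueError("threshold must be in (0, 1]")
    return ceil(members * threshold)


if __name__ == "__main__":
    committee = 7
    for label, share in (
        ("33%", Fraction(33, 100)),
        ("51%", Fraction(51, 100)),
        ("75%", Fraction(75, 100)),
    ):
        print(label, "->", min_compromised(committee, share), "of", committee)
```

For a seven-member group, that is three or four members for the MPC/FHE thresholds versus six for a 75% Security Council threshold.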

Yan:

One last lighter question—I notice you’ve been paying attention to AI lately. Let me share a few perspectives:

Elon Musk said humans might just be bootloaders for silicon-based civilization.

“The Network State” suggests authoritarian regimes may prefer AI, while democracies favor blockchain.

From our crypto experience, decentralization assumes rule-following, mutual checks, and risk awareness—yet this often leads to elitism. What are your thoughts? Just casual reflections.

Vitalik:

Good question—where to begin?

AI is complex. Five years ago, no one predicted the US would lead in closed-source AI while China leads in open-source AI. AI amplifies everyone’s capabilities, but sometimes strengthens authoritarian powers.

Yet AI can also democratize access. When I use AI, I notice that in fields where I’m already among the top thousand globally, like ZK development, AI helps me little; I still write most code myself. But in areas where I’m a beginner, it helps a great deal. Take Android app development: I had never built a native Android app. Ten years ago I made one using a JS framework that converted it into an app, but never a native one.

Earlier this year, I experimented: could GPT help me build an app? Within one hour, it was done. The gap between experts and beginners has shrunk dramatically thanks to AI, which also unlocks new opportunities.

Yan:

That’s a great perspective. I used to think AI would help experienced programmers learn faster, but disadvantage newcomers. But clearly, it boosts beginners too. It’s more leveling than divisive, right?

Vitalik:

Yes. But an important question we must consider is how technologies—blockchain, AI, cryptography, and others—interact and shape society.

Yan:

So you still hope humanity avoids elite domination—aiming instead for societal Pareto optimality. Ordinary people empowered by AI and blockchain becoming super individuals.

Vitalik:

Exactly—super individuals, super communities, super humanity.

05 Hopes for Ethereum’s Ecosystem and Advice for Developers

Yan:

Alright, final question: What hopes or messages do you have for developer communities? Any words for Ethereum developers?

Vitalik:

To all Ethereum application developers—think deeply.

There are now abundant opportunities to build applications on Ethereum—things previously impossible are now feasible.

Several reasons:

First: L1’s TPS was once severely limited—now that’s solved.

Second: Privacy was once unsolvable—now it’s addressable.

Third: AI has reduced the difficulty of building anything. Despite growing complexity in Ethereum’s ecosystem, AI helps everyone understand it better.

So many ideas that failed five or ten years ago might succeed today.

In today’s blockchain application landscape, the biggest issue is twofold:

One: Extremely open, decentralized, secure, idealistic applications—with only 42 users.

Two: Casinos.

Both extremes are unhealthy.

We need applications that:

One: People actually want to use—deliver real value.

Applications that make the world better.

Two: Have sustainable business models—economically viable without relying solely on limited grants from foundations or organizations. That’s a challenge.

But today, everyone has more resources than before. So if you find a good idea and execute well, your chance of success is very high.

Yan:

Looking back, Ethereum has been remarkably successful—leading the industry, solving real problems while maintaining decentralization.

One thing resonates deeply: our community is non-profit. Through Gitcoin Grants, OP’s retroactive rewards, and token airdrops from various projects, we’ve found strong support for building in the Ethereum ecosystem. We’re now thinking about how to sustainably operate long-term.

Building on Ethereum is truly inspiring. We look forward to seeing the "world computer" realized. Thank you for your time.

Interview conducted in Mount Davis, Hong Kong

April 7, 2025

Final photo with Vitalik 📷

References mentioned by Vitalik, compiled by the editor:

[1] https://ethresear.ch/t/fork-choice-enforced-inclusion-lists-focil-a-simple-committee-based-inclusion-list-proposal/19870

[2] https://ethereum-magicians.org/t/a-simple-l2-security-and-finalization-roadmap/23309

[3] https://vitalik.eth.limo/general/2025/02/14/l1scaling.html

[4] https://ethresear.ch/t/delayed-execution-and-skipped-transactions/21677

[5] https://vitalik.eth.limo/general/2024/05/09/multidim.html

[6] https://ethereum-magicians.org/t/long-term-l1-execution-layer-proposal-replace-the-evm-with-risc-v/23617

[7] https://specs.optimism.io/protocol/stage-1.html?highlight=75#stage-1-rollup
