In a digital world, how does encryption technology protect personal data privacy?

The surge in AI development has improved privacy protection, but it has also made privacy and verifiability more complicated.

Author: Defi0xJeff, head of steak studio

Translation: zhouzhou, BlockBeats

Editor's Note: This article focuses on various technologies that enhance privacy and security, including zero-knowledge proofs (ZKP), trusted execution environments (TEE), fully homomorphic encryption (FHE), and more. It explores their applications in AI and data processing, how they protect user privacy, prevent data leaks, and improve system security. The article also highlights several case studies such as Earnifi, Opacity, and MindV, demonstrating how these technologies enable risk-free voting, encrypted data processing, and more—while acknowledging significant challenges like computational overhead and latency.

The following is the original content (slightly edited for readability):

With the surge in data supply and demand, individuals are leaving increasingly extensive digital footprints, making personal information more vulnerable to misuse or unauthorized access. We have already seen cases of personal data breaches, such as the Cambridge Analytica scandal.

Readers who missed it can refer to part one of this series, which discussed:

• The importance of data

• The growing demand for data in artificial intelligence

• The emergence of the data layer

Regulations such as GDPR in Europe, CCPA in California, and similar laws around the world have made data privacy not just an ethical issue but a legal requirement, pushing companies to ensure data protection.

The rapid advancement of AI has enhanced privacy protection, but it has also further complicated privacy and verifiability. For example, although AI can help detect fraudulent activity, it also enables deepfake technology, making it harder to verify the authenticity of digital content.

Advantages

• Privacy-preserving machine learning: Federated learning allows AI models to be trained directly on devices without centralizing sensitive data, thus protecting user privacy.

• AI can be used to anonymize or pseudonymize data, making it difficult to trace back to individuals while still enabling analysis.

• AI is critical in developing tools to detect and reduce the spread of deepfakes, thereby ensuring the verifiability of digital content (as well as detecting/verifying the authenticity of AI agents).

• AI can automatically ensure data processing practices comply with legal standards, making verification processes more scalable.
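The federated learning idea in the first point can be sketched in a few lines. This is a minimal illustration using federated averaging on a toy linear model, not any production framework: the function names (`local_update`, `federated_average`, `train`), the learning rate, and the sample data are all assumptions made for the example. Each device trains on its own data and shares only model weights, never the raw records.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately on one device


def local_update(weights: List[float], data: List[Sample], lr: float = 0.1) -> List[float]:
    """Run one pass of gradient descent for y ~ w*x + b on a device's own data."""
    w, b = weights
    for x, y in data:
        err = (w * x + b) - y
        w -= lr * err * x
        b -= lr * err
    return [w, b]


def federated_average(updates: List[List[float]], sizes: List[int]) -> List[float]:
    """Combine device updates, weighting each by how many samples it trained on."""
    total = sum(sizes)
    dim = len(updates[0])
    return [sum(u[i] * n for u, n in zip(updates, sizes)) / total for i in range(dim)]


def train(device_data: List[List[Sample]], rounds: int = 200) -> List[float]:
    """Server loop: broadcast weights, collect local updates, average them."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        updates = [local_update(global_weights, d) for d in device_data]
        global_weights = federated_average(updates, [len(d) for d in device_data])
    return global_weights


if __name__ == "__main__":
    # Hypothetical private datasets, all drawn from y = 2x + 1.
    devices = [[(0.0, 1.0), (1.0, 3.0)], [(2.0, 5.0), (1.0, 3.0), (0.0, 1.0)]]
    print(train(devices))  # weights approach [2.0, 1.0]
```

Note that sharing weights alone is not a complete privacy guarantee; real deployments usually add protections such as secure aggregation or differential privacy on top of this basic loop.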

Challenges

• AI systems often require massive datasets to function effectively, but the ways data is used, stored, and accessed may lack transparency, raising privacy concerns.

• With sufficient data and advanced AI techniques, individuals might be re-identified from datasets meant to be anonymous, undermining privacy protections.

• Since AI can generate highly realistic text, images, or videos, distinguishing between real and AI-generated fake content becomes more difficult, challenging verifiability.

• AI models may be deceived or manipulated (adversarial attacks), compromising the verifiability of data or the integrity of the AI system itself (as demonstrated by cases like Freysa and Jailbreak).
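The re-identification risk in the second point can be made concrete with a k-anonymity check. The sketch below is illustrative only: the records, field names, and helper functions are hypothetical. It shows that a dataset with names removed can still single out individuals once a few "quasi-identifiers" (here zip code, birth year, and gender) are combined.

```python
from collections import Counter
from typing import Dict, List, Sequence

Record = Dict[str, str]


def group_sizes(records: List[Record], quasi_ids: Sequence[str]) -> Counter:
    """Count how many records share each combination of quasi-identifier values."""
    return Counter(tuple(r[q] for q in quasi_ids) for r in records)


def k_anonymity(records: List[Record], quasi_ids: Sequence[str]) -> int:
    """Return k: the size of the smallest group (0 for an empty dataset)."""
    sizes = group_sizes(records, quasi_ids)
    return min(sizes.values()) if sizes else 0


def reidentifiable(records: List[Record], quasi_ids: Sequence[str]) -> List[Record]:
    """Return records that are unique on the given quasi-identifiers."""
    sizes = group_sizes(records, quasi_ids)
    return [r for r in records if sizes[tuple(r[q] for q in quasi_ids)] == 1]


# Hypothetical "anonymized" records: no names, but quasi-identifiers remain.
RECORDS: List[Record] = [
    {"zip": "02138", "birth_year": "1985", "gender": "F", "diagnosis": "asthma"},
    {"zip": "02138", "birth_year": "1985", "gender": "M", "diagnosis": "flu"},
    {"zip": "02138", "birth_year": "1990", "gender": "F", "diagnosis": "diabetes"},
    {"zip": "02139", "birth_year": "1990", "gender": "F", "diagnosis": "flu"},
    {"zip": "02139", "birth_year": "1990", "gender": "F", "diagnosis": "asthma"},
]

if __name__ == "__main__":
    print(k_anonymity(RECORDS, ["zip"]))
    print(k_anonymity(RECORDS, ["zip", "birth_year", "gender"]))
    print(len(reidentifiable(RECORDS, ["zip", "birth_year", "gender"])))
```

Grouping on zip code alone leaves every person hidden among at least one other record, while combining all three fields isolates several individuals. An attacker holding an outside dataset with the same fields could then link those rows back to names.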

These challenges have accelerated development at the intersection of AI, blockchain, verifiability, and privacy technologies, with each field leveraging the strengths of the others. We are witnessing the rise of the following technologies:

• Zero-knowledge proofs (ZKP)

• Zero-knowledge Transport Layer Security (zkTLS)

• Trusted Execution Environment (TEE)

• Fully Homomorphic Encryption (FHE)

1. Zero-Knowledge Proofs (ZKP)

ZKPs allow one party to prove to another that they know certain information or that a statement is true, without revealing anything beyond the proof itself. AI can leverage this to prove that data processing or decisions meet certain criteria without disclosing the underlying data. A good case study is getgrass.io, where Grass uses idle internet bandwidth to collect and organize public web data for training AI models.

The Grass Network allows users to contribute their idle internet bandwidth via browser extensions or apps. This bandwidth is used to scrape public web data, which is then processed into structured datasets suitable for AI training. The network performs this web scraping process through nodes operated by users.

Grass Network emphasizes user privacy by only scraping public data, not personal information. It uses zero-knowledge proofs to verify and protect data integrity and provenance, preventing data tampering and ensuring transparency. All transactions from data collection to processing are managed via a sovereign data rollup on the Solana blockchain.

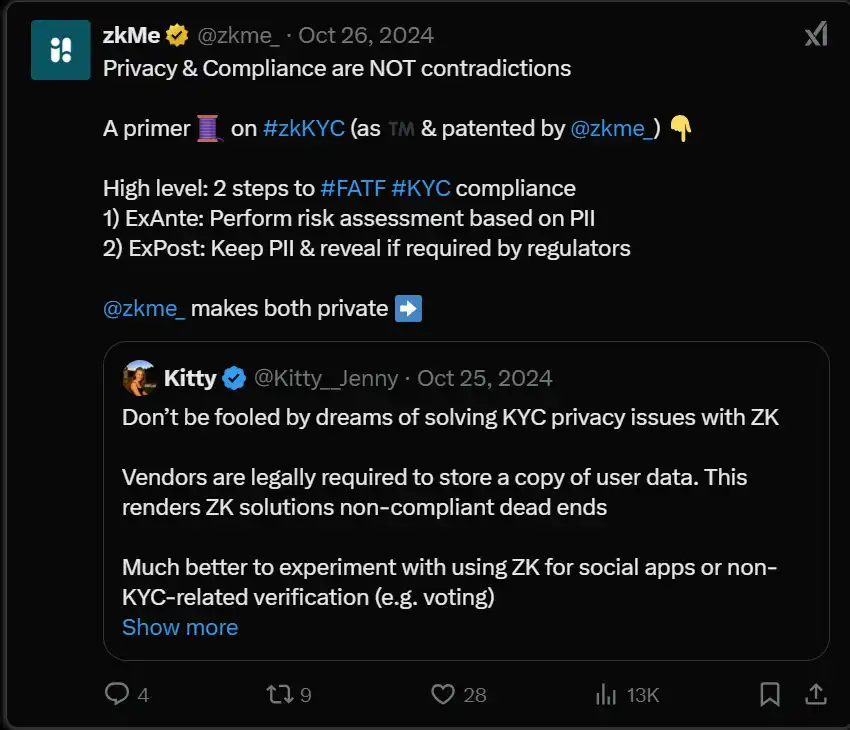

Another strong case study is zkMe.

zkMe’s zkKYC solution addresses the challenge of conducting KYC (Know Your Customer) processes in a privacy-preserving manner. By leveraging zero-knowledge proofs, zkKYC enables platforms to verify user identities without exposing sensitive personal information, thus maintaining compliance while protecting user privacy.
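To make "proving you know something without revealing it" concrete, here is a minimal sketch of a Schnorr proof of knowledge made non-interactive with the Fiat-Shamir transform. It is a textbook construction chosen for illustration, not the proof system used by Grass or zkMe. The group parameters are deliberately tiny toy values (an assumption for readability) and are not secure; the function names are likewise made up for this example.

```python
import hashlib
import secrets

# Toy parameters (NOT secure): P = 2*Q + 1 with P, Q prime; G has order Q mod P.
P, Q, G = 2039, 1019, 4


def _challenge(*values: int) -> int:
    """Derive the challenge by hashing the public transcript (Fiat-Shamir)."""
    data = "|".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def keygen():
    """Return (secret x, public y = G^x mod P)."""
    x = secrets.randbelow(Q - 1) + 1
    return x, pow(G, x, P)


def prove(x: int, y: int):
    """Prove knowledge of x such that y = G^x mod P, without revealing x."""
    k = secrets.randbelow(Q - 1) + 1  # one-time random nonce
    t = pow(G, k, P)                  # commitment
    c = _challenge(G, y, t)           # challenge
    s = (k + c * x) % Q               # response
    return t, s


def verify(y: int, proof) -> bool:
    """Check G^s == t * y^c (mod P); the verifier never sees x."""
    t, s = proof
    c = _challenge(G, y, t)
    return pow(G, s, P) == (t * pow(y, c, P)) % P


if __name__ == "__main__":
    secret, public = keygen()
    print(verify(public, prove(secret, public)))  # True
```

The verifier learns only that the prover holds the secret behind the public value. A KYC-style system applies the same principle to richer statements, such as "this user is over 18", using more general proof systems.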

2. zkTLS

TLS = Standard security protocol providing privacy and data integrity between two communicating applications (typically associated with the "s" in HTTPS). zk + TLS = The ability to prove facts about data received over a TLS session without revealing the data itself, adding privacy and verifiability to data transmission.

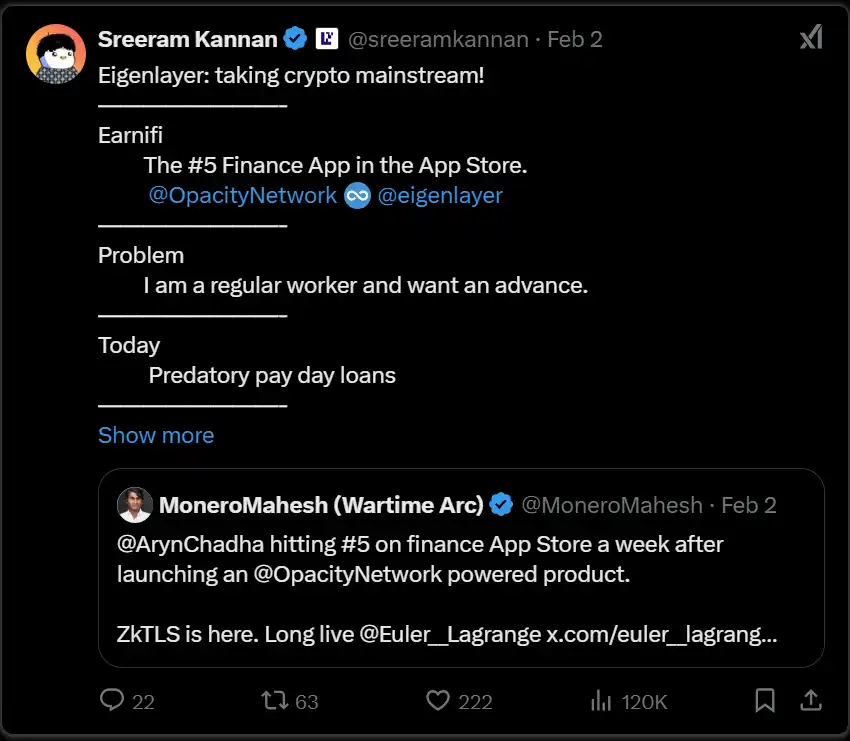

A good case study is OpacityNetwork.

Opacity uses zkTLS to provide secure and private data storage solutions. By integrating zkTLS, Opacity ensures that data transmitted between users and storage servers remains confidential and tamper-proof, addressing inherent privacy issues in traditional cloud storage services.

Use Case—Early Wage Access: Earnifi is reportedly rising to the top of app store rankings, especially within financial apps, and leverages OpacityNetwork’s zkTLS.

• Privacy: Users can provide income or employment status to lenders or other services without revealing sensitive banking details or personal documents like bank statements.

• Security: The use of zkTLS ensures these transactions are secure, verified, and remain private. It eliminates the need for users to entrust all their financial data to third parties.

• Efficiency: The system reduces costs and complexities associated with traditional early wage access platforms, which may require cumbersome verification processes or extensive data sharing.

3. TEE

A Trusted Execution Environment (TEE) provides hardware-enforced isolation between the normal execution environment and a secure execution environment. It may currently be the best-known security implementation for AI agents, used to ensure they operate as fully autonomous agents. The approach was popularized by 123skely’s aipool TEE experiment: a TEE presale event in which the community sends funds to an agent that autonomously issues tokens based on predefined rules.

Other examples include:

• Marvin Tong’s PhalaNetwork: MEV protection, integration with ai16zdao’s ElizaOS, and Agent Kira as a verifiable autonomous AI agent.

• Fleek’s one-click TEE deployment: focused on simplifying usage and improving developer accessibility.

4. FHE (Fully Homomorphic Encryption)

FHE is a form of encryption that allows computations to be performed directly on encrypted data without first decrypting it.

A good case study is mindnetwork.xyz and its proprietary FHE technology/use cases.

Use Case—FHE Restaking Layer and Risk-Free Voting

FHE Restaking Layer

By using FHE, restaked assets remain encrypted, meaning private keys are never exposed, significantly reducing security risks. This ensures privacy while still enabling transaction verification.

Risk-Free Voting (MindV)

Governance voting occurs on encrypted data, ensuring votes remain private and secure, reducing risks of coercion or bribery. Users gain voting power (vFHE) by holding restaked assets, decoupling governance from direct asset exposure.
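Computing on encrypted data, as in the encrypted voting described above, can be illustrated with a small tally. The sketch below uses the Paillier cryptosystem, which is only additively homomorphic (it supports addition of ciphertexts, not arbitrary computation), so it is a simplified stand-in for FHE and not Mind Network's actual scheme. The primes are tiny toy values and the function names are assumptions for the example; none of this is secure as written.

```python
import math
import secrets


def keygen(p: int = 1009, q: int = 1013):
    """Return (public modulus n, private key (lam, mu)) from toy primes."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)  # modular inverse; valid because the generator is n + 1
    return n, (lam, mu)


def encrypt(n: int, m: int) -> int:
    """Encrypt m as (1 + n)^m * r^n mod n^2 with fresh randomness r."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return ((1 + m * n) * pow(r, n, n2)) % n2


def decrypt(n: int, priv, c: int) -> int:
    """Recover m = L(c^lam mod n^2) * mu mod n, where L(u) = (u - 1) // n."""
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts."""
    return (c1 * c2) % (n * n)


def tally(n: int, ballots) -> int:
    """Sum encrypted ballots without decrypting any individual vote."""
    total = encrypt(n, 0)
    for c in ballots:
        total = add_encrypted(n, total, c)
    return total


if __name__ == "__main__":
    n, priv = keygen()
    ballots = [encrypt(n, v) for v in [1, 0, 1, 1, 0]]  # 1 = yes, 0 = no
    print(decrypt(n, priv, tally(n, ballots)))  # 3
```

Whoever runs `tally` sees only ciphertexts; only the key holder can decrypt the final count. Fully homomorphic schemes extend this idea from addition to arbitrary computation, which is where the heavy computational cost comes from.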

FHE + TEE

Combining TEE and FHE creates a robust security layer for AI processing:

• TEE protects operations within the computing environment from external threats.

• FHE ensures that operations are always performed on encrypted data throughout the process.

For institutions handling transactions ranging from $100 million to over $1 billion, privacy and security are crucial to prevent front-running, hacking, or exposure of trading strategies.

For AI agents, this dual-layer protection enhances privacy and security, making it highly valuable in areas such as:

• Privacy of sensitive training data

• Protection of internal model weights (preventing reverse engineering/IP theft)

• User data protection

The main challenge for FHE remains its high overhead: the computational intensity leads to increased energy consumption and latency. Current research is exploring hardware acceleration, hybrid encryption techniques, and algorithm optimization to reduce the computational burden and improve efficiency. For now, FHE is therefore best suited to low-computation applications that can tolerate high latency.

Summary

• FHE = Perform operations on encrypted data without decryption (strongest privacy protection, but most expensive)

• TEE = Hardware-based secure execution in isolated environments (balances security and performance)

• ZKP = Prove statements or authenticate identity without revealing underlying data (ideal for proving facts/credentials)

This is a broad topic, so this is not the end. One key question remains: In an era of increasingly sophisticated deepfakes, how can we ensure that AI-driven verifiability mechanisms are truly trustworthy? In part three, we will delve deeper into:

• The verifiability layer

• The role of AI in verifying data integrity

• The future of privacy and security
