Hardcore Conversation with Scroll's Zhang Ye: zkEVM, Scroll, and the Future

This article is an excellent resource for understanding Scroll, where Zhang Ye answers 15 questions about Scroll and zkEVM.

Interviewers: Nickqiao & Faust, Geeker Web3

Interviewee: YeZhang, Co-founder of Scroll

Editors: Faust, Jomosis

On June 17, with the help of Scroll’s DevRel—Vincent Jin, Geeker Web3 and BTCEden were honored to invite YeZhang, co-founder of Scroll, to answer various questions about Scroll and zkEVM.

During the session, both technical topics and fun anecdotes about Scroll were discussed, along with its grand vision of empowering real economies in Africa, Latin America, and other developing regions. This article is a transcript of the interview, over 10,000 words long, covering at least 15 key topics:

- Potential applications of ZK outside blockchain
- Differences in engineering difficulty between zkEVM and zkVM
- Technical challenges Scroll faced during zkEVM implementation
- Scroll's improvements to Zcash's halo2 proving system
- How Scroll collaborates with Ethereum's PSE team
- How Scroll ensures circuit security through code audits
- Scroll’s preliminary roadmap for next-gen zkEVM and proving systems
- Scroll’s Multi Prover design and how it builds its Prover network (zk mining pool), etc.

In addition, YeZhang shared a grand vision at the end: Scroll will root itself in underbanked regions such as Africa, Turkey, and Southeast Asia, aiming to create real-world economic use cases to move “from virtual to real.” This article may be one of the best resources for understanding Scroll more deeply. We highly recommend reading it thoroughly.

1. Faust: Professor Zhang, what are your thoughts on ZK applications beyond Rollups? Many people assume ZK is mainly useful for mixers, private transfers, ZK Rollups, or ZK bridges. But outside Web3—say, in traditional industries—there are already many ZK applications. Where do you see ZK being adopted most significantly in the future?

YeZhang: That's a great question. People working on ZK in traditional industries have been exploring various use cases for five or six years now. The applications of ZK within blockchain are actually quite narrow, which is exactly why Vitalik believes that in ten years, ZK use cases could be as large as blockchain itself.

I believe ZK has tremendous potential in any scenario that involves trust assumptions. Imagine you need to run a heavy computation. If you rent servers from AWS, run the job, and collect the results, that is equivalent to computing on your own machines: you can trust the output, but you pay for the server rental, and that cost is often high.

But if we adopt a computing outsourcing model, many people can use their idle devices or resources to share your computational load, potentially reducing your costs below renting dedicated servers. However, there's a trust issue—you don’t know whether the result returned by others is correct. Suppose you have a complex calculation and outsource it to me; I return a random result after half an hour. You have no way to verify this result because I could fabricate anything.

However, if I can prove to you that the computed result is valid, then you can trust it—and feel confident outsourcing even more tasks. ZK can turn many untrusted data sources into trusted ones. This capability is powerful: using ZK, you can efficiently leverage cheap but untrusted third-party computing resources.

I think this scenario is very meaningful and could give rise to new business models around outsourced computing. In academic literature, this concept is known as verifiable computation—making computations inherently trustworthy. Beyond that, ZK can also apply to database systems. For instance, running a local database might be too expensive, so you consider outsourcing storage to someone who has spare capacity. But you’d worry they might alter your data or return incorrect results when you query via SQL.

To address this, you could require them to generate a proof. If feasible, you could safely outsource not just compute but also data storage, while still receiving trustworthy results. These are all part of a broader category of verifiable computation applications.
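To make the idea of checking outsourced work concrete, here is a minimal sketch using Freivalds' algorithm, a classic probabilistic check for matrix multiplication. It is not a ZK proof system and is not something the interview mentions; it only illustrates the core property of verifiable computation, namely that verifying a result can be much cheaper than recomputing it. All function names are illustrative.

```python
import random


def matmul(a, b):
    """Naive O(n^3) product: the expensive job we would like to outsource."""
    inner, cols = len(b), len(b[0])
    return [[sum(row[k] * b[k][j] for k in range(inner)) for j in range(cols)]
            for row in a]


def matvec(m, v):
    """Matrix-vector product, O(n^2)."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]


def freivalds_check(a, b, c, rounds=20, rng=None):
    """Probabilistically check c == a*b using only matrix-vector products.

    Each round costs O(n^2). A wrong c survives a single round with
    probability at most 1/2, so it survives all rounds with at most 2**-rounds.
    """
    rng = rng or random.Random()
    width = len(b[0])
    for _ in range(rounds):
        r = [rng.randint(0, 1) for _ in range(width)]
        if matvec(a, matvec(b, r)) != matvec(c, r):
            return False
    return True


a = [[1, 2], [3, 4]]
b = [[5, 6], [7, 8]]
honest = matmul(a, b)                 # what an honest worker returns
forged = [row[:] for row in honest]
forged[0][0] += 1                     # what a lazy or malicious worker might return

print(freivalds_check(a, b, honest))  # True
```

A forged result such as `forged` is rejected except with negligible probability. A ZK proof system generalizes this pattern to arbitrary programs, at the proving cost discussed later in the interview.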

There are many other examples. I recall a paper discussing Verifiable ASICs, where ZK algorithms are embedded directly into chips. When programs run on these chips, outputs automatically include a cryptographic proof. With this, any device could produce verified, trustworthy results.

Another somewhat whimsical idea is Photo Proof. Today, we often can't tell whether photos have been edited. But ZK could prove that a photo hasn't been tampered with. For example, camera software could embed digital signatures automatically upon taking a picture—essentially stamping it cryptographically. If someone later uses Photoshop to modify your image, signature verification would detect the alteration.
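The stamping step can be sketched as follows. This is a simplification under loud assumptions: a real camera would use an asymmetric digital signature so that anyone can verify without holding the secret, whereas this sketch substitutes an HMAC from Python's standard library as a stand-in. The key and function names are hypothetical.

```python
import hashlib
import hmac

# Hypothetical secret held inside the camera; a stand-in for a signing key.
CAMERA_KEY = b"hypothetical-device-secret"


def stamp(image_bytes: bytes, key: bytes = CAMERA_KEY) -> bytes:
    """Compute a tag over the exact bytes of the image at capture time."""
    return hmac.new(key, image_bytes, hashlib.sha256).digest()


def is_untouched(image_bytes: bytes, tag: bytes, key: bytes = CAMERA_KEY) -> bool:
    """Return True only if the image bytes are identical to what was stamped."""
    return hmac.compare_digest(stamp(image_bytes, key), tag)


original = b"raw pixel data"
tag = stamp(original)
edited = original + b" plus an edit"

print(is_untouched(original, tag))  # True
print(is_untouched(edited, tag))    # False
```

The check is all-or-nothing: any change to the bytes, including a harmless crop or rotation, invalidates the tag.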

With ZK, even after minor edits like rotation or cropping, you could generate a ZK proof showing only superficial changes were made—preserving the core content. This proves your modified version remains faithful to the original, without malicious manipulation.

This concept can extend to video and audio: using ZK, you don’t need to disclose exactly what changes were made, yet you can prove the essence of the original content wasn't altered, only non-substantive adjustments applied. There are many such interesting applications where ZK can play a role.

Currently, the reason ZK applications haven't seen widespread adoption is primarily due to high costs. Existing ZK proof generation schemes cannot efficiently generate proofs for arbitrary computations in real time. The overhead of ZK is typically 100x to 1000x that of the original computation—and those numbers are already conservative estimates.

Imagine a computation that normally takes one hour. Generating a ZK proof for it might incur 100x overhead—that’s 100 hours. While GPUs or ASICs can accelerate this, the computational cost remains massive. If you ask me to compute something complicated and also generate a ZK proof, I might refuse—it requires 100x extra resources, making it economically impractical. Therefore, generating one-off ZK proofs in point-to-point scenarios is currently prohibitively expensive.

That said, this is precisely why ZK fits blockchain so well: blockchains perform redundant computation across many nodes—classic 1-to-many scenarios. In a blockchain network, thousands of nodes execute identical tasks. With 10,000 nodes, the same computation runs 10,000 times. But if off-chain work generates a single ZK proof, all 10,000 nodes merely verify the proof instead of re-executing everything. Effectively, you replace 10,000 redundant executions with one proof generation plus lightweight validation—a huge net saving in total resource usage.

Thus, the more decentralized a chain is, the better it aligns with ZK, since anyone can verify ZKPs at near-zero cost. By paying upfront for proof generation, we free up the majority of participants from costly recomputation. This matches perfectly with blockchain’s public verifiability and abundant 1-to-many patterns.
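The 1-to-many argument is simple arithmetic, sketched below. The cost units and the verification cost are assumptions chosen for illustration; only the 10,000-node figure and the 100x to 1000x overhead range come from the discussion above.

```python
def reexecution_cost(nodes: int, exec_cost: int) -> int:
    """Total work when every node re-runs the computation itself."""
    return nodes * exec_cost


def proof_cost(nodes: int, exec_cost: int, overhead: int, verify_cost: int) -> int:
    """Total work when one prover proves once and every node only verifies."""
    return exec_cost * overhead + nodes * verify_cost


def break_even_nodes(exec_cost: int, overhead: int, verify_cost: int) -> int:
    """Smallest node count at which proving once is strictly cheaper overall."""
    if verify_cost >= exec_cost:
        raise ValueError("verification must be cheaper than execution")
    # Solve nodes * exec_cost > exec_cost * overhead + nodes * verify_cost.
    return exec_cost * overhead // (exec_cost - verify_cost) + 1


EXEC = 1_000      # assumed cost units for one native execution
VERIFY = 1        # assumed cost units for one proof verification
OVERHEAD = 1_000  # pessimistic end of the 100x to 1000x range

print(reexecution_cost(10_000, EXEC))              # 10000000
print(proof_cost(10_000, EXEC, OVERHEAD, VERIFY))  # 1010000
print(proof_cost(1, EXEC, OVERHEAD, VERIFY))       # 1000001, the point-to-point case
print(break_even_nodes(EXEC, OVERHEAD, VERIFY))    # 1002
```

Under these assumed numbers, a single verifier pays roughly a thousand times more than just running the job, while a 10,000-node network does about a tenth of the total work.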

One additional point I did not mention earlier: the high-overhead ZK proofs used in blockchain today are non-interactive. You send a proof once and you are done, because on-chain interaction must be kept minimal. But there is a more efficient alternative: interactive proofs. For example, you send me a challenge, I respond, you send another challenge, and so on. Through multiple rounds of exchange, we may be able to drastically reduce the overall computational burden. If this becomes practical, such methods could unlock larger-scale ZK applications.

Nickqiao: What’s your view on zkML—combining ZK with machine learning? How promising is its development outlook?

YeZhang: zkML is indeed an intriguing direction: applying zero-knowledge techniques to machine learning. But I think it still lacks killer application scenarios. Most people believe that as ZK systems improve in performance, they will eventually support ML-level workloads. Today, zkML is efficient enough to prove inference for models on the scale of GPT-2, so it is technically feasible, but only for inference. Ultimately, we are still exploring what kind of applications truly require proving the correctness of ML inference. That is a tough question.

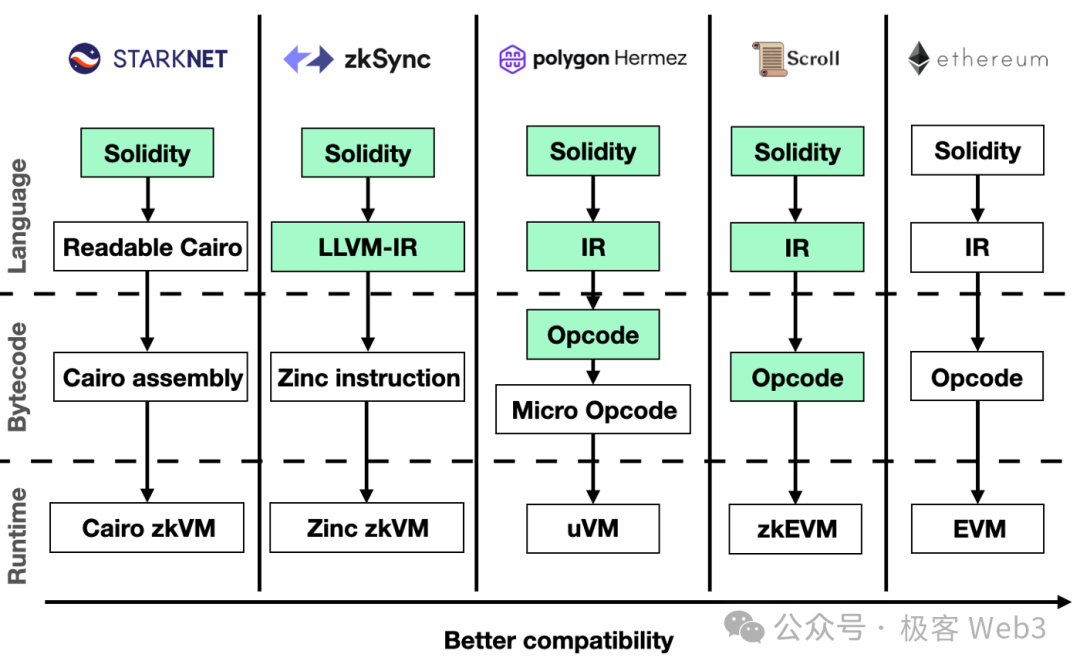

2. Nickqiao: Could you elaborate on the engineering difficulty difference between zkEVM and zkVM?

YeZhang: First, both zkEVM and zkVM involve building custom ZK circuits tailored to a VM’s opcode/instruction set. The engineering difficulty of zkEVM depends heavily on implementation approach. When we started our project, ZK efficiency wasn’t high enough. The most efficient method was writing individual circuits for each EVM opcode and combining them into a full zkEVM.

But this approach is extremely challenging—much harder than zkVM, given that EVM has over 100 opcodes, each needing a custom circuit, all integrated together. Every time an EIP adds a new opcode—like EIP-4844—the zkEVM must follow suit. Eventually, you end up writing extensive circuits and undergoing lengthy audits. So development complexity and workload are vastly greater compared to zkVM.

In contrast, zkVM defines its own instruction set, which can be designed to be simple and ZK-friendly. Once built, you rarely need to change the underlying codebase to support upgrades or precompiles. Thus, the main effort and ongoing maintenance burden shift to the compiler—the component translating smart contracts into zkVM opcodes—unlike zkEVM, where complexity lies in circuit design.

From an engineering standpoint, I believe zkVM is easier to implement than a fully customized zkEVM. However, running EVM bytecode atop zkVM generally performs worse than a native zkEVM, which is purpose-built. That said, prover efficiency has improved 3–5x, even 5–10x over the past two years. As zkVM efficiency rises, its performance gap may soon be outweighed by advantages in ease of development and maintenance. For both zkVM and zkEVM, aside from performance, the biggest bottleneck remains engineering complexity—requiring a strong technical team to maintain such a sophisticated system.

3. Nickqiao: Can you share some technical difficulties Scroll encountered during zkEVM implementation, and how you resolved them?

YeZhang: Looking back, the biggest challenge was the sheer uncertainty at the beginning. When we launched, almost no one else was building zkEVM. We were among the first teams trying to take zkEVM from impossible to possible. On the theory side, we established a viable framework within the first six months. Implementing it afterwards involved enormous engineering effort and deep technical hurdles, such as dynamically supporting different precompiles and optimizing opcode aggregation, both of which posed significant engineering challenges.

We were also the only—and earliest—team to support EC Pairing (elliptic curve pairing) as a precompile. Implementing Pairing circuits is extremely difficult, involving intricate math and cryptography. It demands strong expertise in both mathematics and engineering from the developers.

Later on, we had to consider long-term maintainability of our tech stack and decide when to upgrade to zkEVM 2.0. We have a dedicated research team actively exploring alternatives—such as supporting EVM via zkVM—and publishing related papers.

In summary, initial challenges revolved around turning zkEVM from theory into reality—mainly engineering and optimization. Now, the next major challenge is deciding when and how to transition to a more efficient ZK proving system, migrating our current codebase to next-gen zkEVM, and determining what new features it will enable. There’s still much exploration ahead.

4. Nickqiao: It sounds like Scroll is considering switching ZK proving systems. From what I understand, Scroll currently uses a PLONK + Lookup-based algorithm. Is this still optimal for zkEVM today? And what proving system does Scroll plan to adopt in the future?

YeZhang: Let me briefly address PLONK and Lookup first. Currently, this combination remains the most suitable for implementing zkEVM or zkVM. Most implementations are tightly coupled with specific choices of PLONK and Lookup. When people refer to PLONK, they usually mean using PLONK’s arithmetization—its method of expressing circuits numerically—as the foundation for zkVM circuit design.

Lookup refers to a type of constraint used when designing circuits. So when we say “PLONK + Lookup,” we mean using PLONK-style constraints in writing zkEVM/zkVM circuits—which is currently the most common practice.

On the backend, the line between PLONK and STARK has blurred—they differ mainly in polynomial commitment schemes but are otherwise similar. Even combining STARK with Lookup yields outcomes comparable to PLONK + Lookup. Differences mostly lie in prover efficiency and proof size. Still, for frontend design, PLONK + Lookup remains the best fit for zkEVM today.

Regarding the second question—what proving system Scroll plans to switch to: Scroll aims to always stay at the cutting edge of zk technology and architecture. So yes, we’ll adopt newer technologies. But safety and stability are top priorities, so we won’t aggressively overhaul our proving system. Instead, we’ll likely take gradual steps—using Multi Prover as a bridge—to smoothly evolve toward the next iteration. Above all, we want the transition to be seamless.

That said, switching to a new proving system is still early—we’re looking at timelines of 6 to 12 months down the road.

5. Nickqiao: Has Scroll introduced any unique innovations on top of the current PLONK + Lookup-based proving system?

YeZhang: The system currently running on Scroll’s mainnet is halo2. Halo2 originated from the Zcash team, who developed a flexible backend supporting Lookup and customizable circuit formats. We collaborated with Ethereum’s PSE team to modify halo2—replacing its original IPA-based polynomial commitment scheme with KZG, thereby shrinking proof sizes and enabling more efficient ZK proof verification on Ethereum.

Additionally, we’ve invested heavily in GPU hardware acceleration, achieving 5–10x faster ZKP generation compared to CPU-only setups. Overall, we replaced halo2’s original polynomial commitment with a more verification-friendly version and implemented numerous prover optimizations, pushing hard on engineering execution.

6. Nickqiao: So Scroll now jointly maintains the KZG version of halo2 with Ethereum’s PSE team. Can you describe how this collaboration works?

YeZhang: Before launching Scroll, we already knew several engineers from the PSE team. We discussed our intention to build zkEVM and estimated feasibility. Coincidentally, they were planning the same thing—so we naturally teamed up.

We met fellow zkEVM enthusiasts through the Ethereum community and Ethereum Research forums—people who shared our goal of productizing zkEVM and serving Ethereum. This led us into open-source collaboration mode. Our cooperation resembles an open-source community rather than a commercial company. For example, we hold weekly calls to sync progress and discuss issues.

We’ve maintained the codebase openly—from improving halo2 to realizing zkEVM—with mutual code reviews throughout the exploratory process. You can see from GitHub contribution stats: roughly half the code was written by PSE, half by Scroll. Later, we completed audits and shipped production-grade code live on mainnet. In short, our collaboration with Ethereum PSE follows an organic, open-source community path.

7. Nickqiao: You mentioned that writing zkEVM circuits requires deep knowledge of math and cryptography—few people truly grasp it. How does Scroll ensure correctness and minimize bugs in circuit design?

YeZhang: Since our code is open source, nearly every PR undergoes review by our team, Ethereum contributors, and community members—a rigorous audit process. Moreover, we’ve spent over $1 million on circuit audits alone, engaging top-tier firms specializing in cryptography and circuit auditing—such as Trail of Bits and Zellic. We also hired OpenZeppelin to audit our on-chain smart contracts. Essentially, every security-critical component receives premium-level audit coverage. Internally, we maintain a dedicated security team conducting continuous testing to enhance Scroll’s resilience.

Nickqiao: Besides traditional audits, do you employ formal verification or other mathematically rigorous methods?

YeZhang: We’ve looked into Formal Verification early on, and Ethereum has recently been exploring how to formally verify zkEVM. It’s a promising direction. But currently, full formal verification of zkEVM is premature—we can only begin with small modules. Formal Verification carries costs: you must first write a formal specification (spec), which isn’t easy. Maturing this process will take considerable time.

Therefore, I don’t think we’re ready for comprehensive formal verification of zkEVM yet. However, we continue collaborating closely with Ethereum and external partners to explore pathways toward zkEVM formal verification.

Still, for now, manual auditing remains the gold standard. Even with specs and formal tools, flawed specs lead to flawed conclusions. So for now, manual audits combined with open-source transparency and bug bounty programs remain the best way to ensure Scroll’s code stability.

However, in next-generation zkEVM development, figuring out how to enable formal verification—how to design a zkEVM that makes spec-writing easier and allows formal proofs of security—is Ethereum’s ultimate goal. Once a zkEVM can be formally verified, it can be confidently deployed on Ethereum mainnet.

8. Nickqiao: Regarding Scroll’s use of halo2, would supporting new proving systems like STARK incur high development costs? Could it support multiple proving systems via a plug-in architecture?

YeZhang: halo2 is a highly modular ZK proving system. You can swap out components like fields or polynomial commitments. Simply replacing KZG with FRI effectively creates a STARK-compatible version of halo2—and indeed, some have already done this. So halo2’s compatibility with STARK is entirely feasible.

In practice, however, pursuing peak efficiency often conflicts with modularity—because abstraction layers sacrifice fine-tuned optimization, incurring performance trade-offs. We’re actively evaluating whether future directions should favor modular frameworks versus highly customized ones, especially since we have a strong ZK engineering team capable of maintaining independent proving stacks to maximize zkEVM efficiency. Trade-offs exist, but halo2 supports FRI.

9. Nickqiao: What are Scroll’s primary R&D focuses regarding ZK right now? Optimizing existing algorithms? Adding new features?

YeZhang: The core focus of our engineering team remains doubling current prover performance and achieving maximum EVM compatibility. In the next upgrade, we aim to retain our position as the most EVM-compatible ZK Rollup. Right now, no other zkEVM matches our level of compatibility.

That’s one pillar of our engineering efforts: continuing to optimize provers and compatibility while lowering fees. We’ve already committed substantial manpower—about half our engineering force—to researching next-gen zkEVM, targeting minute- or even second-level ZK proof generation with dramatically higher prover efficiency.

Meanwhile, we're exploring new zkEVM execution layers. Our nodes previously used go-ethereum, but now better-performing Rust-based clients like Reth are emerging. We’re investigating how to integrate next-gen zkEVM with Reth to boost overall chain performance. We’ll evaluate optimal implementation strategies and migration paths for zkEVM built around new execution layers.

10. Nickqiao: Given Scroll’s interest in supporting diverse proving systems, is there a need to deploy multiple verifier contracts on-chain—for cross-verification?

YeZhang: These are two separate issues. First: Is it valuable to build modular proving systems and support diverse provers? Yes, absolutely. We've always been an open-source project. The more general-purpose the framework we release, the more contributors will build on it, growing our community organically and enabling external contributions in tooling and development. So creating a ZK proving framework useful not just for Scroll but for others too is highly meaningful.

Second: Doing on-chain cross-verification using multiple systems like PLONK and STARK. This is orthogonal to whether the system supports multiple provers. Few projects verify the same zkEVM output twice—once with PLONK, once with STARK—because it offers little security benefit while increasing prover costs. Such cross-verification is rare.

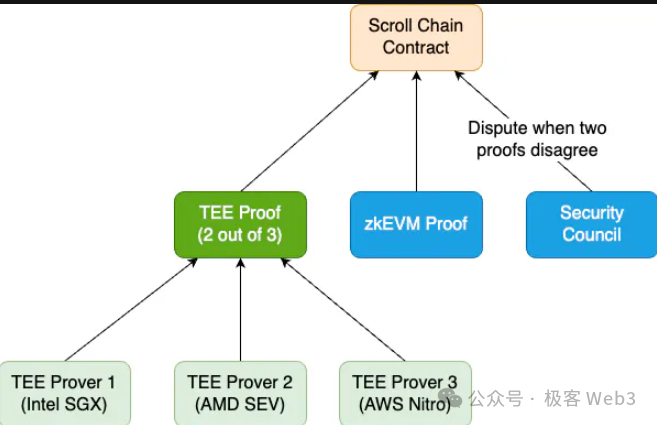

We’re actually building something called Multi Prover: two provers independently prove the same block, then aggregate their proofs off-chain before submitting a single combined proof for on-chain verification. We won’t do on-chain SNARK vs. STARK cross-verification. Our Multi Prover design ensures redundancy—if one prover has a bug, the other can keep the system running. This is about fault tolerance, not cross-verification.

11. Nickqiao: In Scroll’s Multi Prover setup, how do the individual provers differ in their proof procedures?

YeZhang: Suppose we have a standard halo2-based zkEVM with a regular prover generating ZKPs for on-chain validation. But here’s the problem: zkEVMs are complex and may contain bugs. A hacker—or even the team—could exploit a bug to forge a proof and drain funds. That’s dangerous.

The core idea behind Multi Prover was first proposed by Vitalik at an event in Bogota: if a zkEVM might have bugs, run multiple different types of provers simultaneously. For example, use a TEE-based SGX prover (which Scroll currently employs), or an optimistic prover, or a zkVM executing EVM. All these provers concurrently prove the validity of an L2 block.

Suppose three distinct provers generate three proofs. Only when all three pass verification—or at least two out of three—can the L2 state be finalized on Ethereum. Multi Prover ensures that if one prover fails, the others can compensate, greatly enhancing system reliability and ZK Rollup security. Of course, drawbacks exist—higher total operational costs. We’ve published a detailed blog explaining these trade-offs.
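The finalization rule described here reduces to a threshold check over per-prover verification results. The sketch below is illustrative only: the prover labels are placeholders taken from the examples above, and it does not represent Scroll's actual contracts or off-chain aggregation logic.

```python
from typing import Mapping


def can_finalize(verdicts: Mapping[str, bool], threshold: int) -> bool:
    """Finalize an L2 block only if at least `threshold` provers' proofs verified."""
    if not 1 <= threshold <= len(verdicts):
        raise ValueError("threshold must be between 1 and the number of provers")
    return sum(1 for ok in verdicts.values() if ok) >= threshold


# Hypothetical verification results for one block; the names are labels only.
one_prover_down = {"halo2_zkevm": True, "sgx_tee": True, "zkvm_evm": False}
# A forged proof that exploits a bug in a single prover.
forged_by_one = {"halo2_zkevm": True, "sgx_tee": False, "zkvm_evm": False}

print(can_finalize(one_prover_down, threshold=2))  # True: two of three agree
print(can_finalize(one_prover_down, threshold=3))  # False: unanimity required
print(can_finalize(forged_by_one, threshold=2))    # False: one buggy prover is not enough
```

The threshold is the design lever: requiring all three maximizes safety but halts the chain if any prover fails, while two of three tolerates one faulty prover in either direction.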

12. Nickqiao: Regarding ZK proof generation, how is Scroll building its proving network (ZK mining pool)? Is it self-built, or will you outsource computation to third parties like Cysic?

YeZhang: Our current design is straightforward: we want more GPU owners or miners to join our proving network (ZK mining pool). However, Scroll’s Prover Market is currently operated centrally by us. We collaborate with third parties possessing GPU clusters who run provers—but this is to ensure mainnet stability, as decentralizing provers introduces risks.

For example, poor incentive design might result in insufficient proof generation, degrading network performance. Early on, we chose a relatively centralized approach. But our interface and framework are designed to easily transition to decentralization. Anyone could use our tech stack to build a decentralized prover network—just add incentives.

But for now, to ensure Scroll’s stability, our prover network remains centralized. In the future, we plan to broadly decentralize the prover network—enabling individuals to run their own prover nodes. We’re also partnering with third-party platforms like Cysic and Snarkify Network to explore how teams launching their own Layer2s using our stack can plug into external Prover Markets and directly call their services.

13. Nickqiao: Has Scroll made any investments or achieved results in ZK hardware acceleration?

YeZhang: This ties directly to what I mentioned earlier—Scroll’s two founding pillars: first, making zkEVM go from impossible to possible; second, achieving that breakthrough thanks to advances in ZK hardware acceleration.

Three years before starting Scroll, I began researching ZK hardware acceleration, including work on ASICs and GPU acceleration, resulting in published papers. We possess deep expertise and credibility in ZK hardware, spanning chip design and GPU optimization, both academically and practically.

However, Scroll will focus specifically on GPU-based hardware acceleration, as we lack FPGA or dedicated hardware development resources and tape-out experience. Instead, we partner with hardware companies like Cysic to handle specialized hardware, while we concentrate on the software-adjacent domain of GPU acceleration. Our team optimizes GPU acceleration and open-sources the results, allowing external partners to develop specialized chips like ASICs. We frequently exchange insights and tackle shared challenges together.

14. Nickqiao: You mentioned Scroll may switch to other proving systems. Could you explain newer ones like Nova or others? What advantages do they offer?

YeZhang: One internal research direction is moving to smaller finite fields that remain compatible with existing proving systems. Libraries like Plonky3 enable fast operations over small fields, so transitioning from large to small fields is one possibility.

We’re also exploring GKR, a proving system with linear-time proof generation complexity—significantly lower than other provers. However, mature engineering implementations are lacking, requiring substantial investment to realize.

GKR excels at handling repeated computations. For example, verifying 1,000 signature checks becomes highly efficient under GKR. Polyhedra, a ZK bridge, uses GKR to verify signatures with remarkable efficiency. Since EVM contains many repetitive steps, GKR can significantly reduce ZK proof generation costs.

Another advantage: GKR requires far less computation than other systems. Using PLONK or STARK to prove a Keccak hash function requires committing to every intermediate variable throughout the entire computation process.

With GKR, you only commit to the input layer. Intermediate values are derived via recursive relations, eliminating the need to commit to each step—greatly reducing computational overhead. The sum-check protocol behind GKR is now adopted by newer frameworks like Jolt, Lookup arguments, and other advanced ZK designs—indicating strong potential. We’re seriously studying this direction.
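For readers unfamiliar with the sum-check protocol mentioned above, here is a toy sketch for a multilinear polynomial given by its values on the boolean hypercube. It runs prover and verifier in one loop, uses an illustrative prime modulus, and omits everything a real system needs (commitments, Fiat-Shamir, the layer-to-layer reduction that GKR adds). The `cheat` flag and all names are assumptions made for the example.

```python
import random

P = 2**61 - 1  # illustrative prime modulus, not tied to any real proving system


def fold(table, r):
    """Fix the first variable to r; the table halves in size."""
    half = len(table) // 2
    return [((1 - r) * table[i] + r * table[i + half]) % P for i in range(half)]


def mle_eval(table, point):
    """Evaluate the multilinear extension of `table` at `point`."""
    for r in point:
        table = fold(table, r)
    return table[0]


def sumcheck(table, claimed_sum, rng, cheat=False):
    """Return True iff the verifier accepts that sum(table) == claimed_sum mod P."""
    claim = claimed_sum % P
    current = list(table)  # prover's working state
    point = []             # verifier's random challenges
    while len(current) > 1:
        half = len(current) // 2
        g0 = sum(current[:half]) % P  # round polynomial evaluated at 0
        g1 = sum(current[half:]) % P  # round polynomial evaluated at 1
        if cheat:
            g0 = (claim - g1) % P     # lie so that the round check passes
        if (g0 + g1) % P != claim:    # verifier's per-round check
            return False
        r = rng.randrange(P)
        claim = ((1 - r) * g0 + r * g1) % P  # the round polynomial is linear
        current = fold(current, r)
        point.append(r)
    # Final check: the verifier evaluates the polynomial once at a random point.
    return mle_eval(table, point) == claim


table = [3, 1, 4, 1, 5, 9, 2, 6]  # sums to 31

print(sumcheck(table, 31, random.Random(1)))  # True
```

A false claim such as 32 is rejected either in the first round (honest messages) or, with overwhelming probability, at the final evaluation (when the prover sets `cheat=True`). The verifier's work is one small check per variable plus a single evaluation, rather than a sum over the whole table.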

Then there’s Nova, which gained popularity a few months ago due to its suitability for repetitive computations. Normally, proving 100 tasks incurs ~100x overhead. Nova folds these tasks incrementally—pairwise linear combinations—until a single final statement emerges. Proving this final statement validates all 100 prior ones, drastically compressing overhead.
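The folding idea can be illustrated with a deliberately simplified toy: instances of a linear system A·w = b, combined pairwise with random scalars. This is not Nova itself. Nova folds relaxed R1CS instances, which are quadratic and need an extra error term, and it works over commitments rather than raw witnesses. The matrix, modulus, and names below are assumptions for illustration.

```python
import random

P = 2**61 - 1  # illustrative prime modulus

A = [[1, 2], [3, 4]]  # hypothetical shared constraint matrix


def apply(a, w):
    """Compute A*w mod P."""
    return [sum(x * y for x, y in zip(row, w)) % P for row in a]


def satisfied(a, w, b):
    """Check the linear statement A*w == b mod P."""
    return apply(a, w) == [x % P for x in b]


def fold_pair(inst1, inst2, r):
    """Combine two (w, b) instances into one via a random linear combination."""
    (w1, b1), (w2, b2) = inst1, inst2
    w = [(x + r * y) % P for x, y in zip(w1, w2)]
    b = [(x + r * y) % P for x, y in zip(b1, b2)]
    return w, b


def fold_all(instances, rng):
    """Fold a list of instances into a single accumulated instance."""
    acc = instances[0]
    for nxt in instances[1:]:
        acc = fold_pair(acc, nxt, rng.randrange(1, P))
    return acc


rng = random.Random(7)
witnesses = [[rng.randrange(P), rng.randrange(P)] for _ in range(100)]
instances = [(w, apply(A, w)) for w in witnesses]  # 100 valid statements

w, b = fold_all(instances, rng)
print(satisfied(A, w, b))  # True: one check stands in for all 100
```

If any single instance is invalid, its error is carried into the accumulator scaled by a nonzero random factor, so the one final check fails as well.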

Subsequent work like HyperNova extends Nova beyond R1CS to support lookup and other circuit formats, enabling VMs to be proven under Nova-like systems. But currently, Nova and GKR aren’t production-ready—efficient libraries are scarce.

Moreover, Nova’s folding mechanism differs fundamentally from traditional provers. It’s still immature—an intriguing candidate, but not yet robust. For now, from a production-readiness standpoint, established proving systems remain ahead. Long-term, it’s unclear which will prevail.

15. Faust: Finally, let’s talk about values. I remember you said last year that after visiting Africa, you believed blockchain could gain mass adoption in economically disadvantaged regions. Could you expand on that?

YeZhang: I hold a strong belief: blockchain genuinely has utility in economically underdeveloped countries. Today’s blockchain space sees many scams, shaking people’s confidence. Why work in an industry perceived as valueless? Does blockchain really serve any purpose? Beyond speculation or gambling, are there real-world applications?

I believe visiting places like Africa reveals blockchain’s true potential. People in China or Western nations enjoy stable currencies and robust financial systems. Chinese users love WeChat Pay and Alipay; the RMB is relatively stable. There’s little need to use blockchain for payments.

But in regions like Africa, there’s genuine demand for blockchain and on-chain stablecoins due to severe inflation. For example, certain African countries face 20% inflation every six months. Every time you buy groceries after six months, prices jump 20%; over a year, even more. Their currency constantly depreciates—so does their wealth. Many seek refuge in USD, stablecoins, or other stable foreign currencies.

Africans struggle to open bank accounts in developed countries. Stablecoins become a necessity. Even without blockchain, they want access to USD. Clearly, holding USD via stablecoins is the best option. Upon receiving wages, many Africans immediately convert to USDT or USDC on Binance, withdrawing when needed. This creates authentic demand and real-world usage of blockchain.

After visiting Africa, you notice Binance established a strong presence there early. Many Africans rely on stablecoins—trusting exchanges more than their own national monetary systems. Locals often can’t access loans. Need $100? Banks impose endless paperwork and conditions—often denying applications. But on exchanges or DeFi platforms, borrowing is far more accessible. So yes, in Africa, blockchain meets tangible needs.

Most people aren’t aware of this—those on Twitter often ignore or never see such stories. Many underdeveloped regions—including high-Binance-user countries like Turkey, parts of Southeast Asia, and Argentina—show frequent exchange usage. Binance’s success there already proves strong real demand for blockchain.

Therefore, I believe these markets and communities are genuinely worth building for. We have a dedicated team in Turkey and a large Turkish community. We plan to gradually expand into Africa, Southeast Asia, Argentina, and similar regions. Among all Layer2s, Scroll is perhaps best positioned to succeed there—not just technologically, but culturally. Though our three founders are Chinese, our team spans at least 20–30 nationalities. With ~70 people total, nearly every region has 2–3 representatives. Our culture is highly diverse. In contrast, other major Layer2s like OP, Base, and Arbitrum are predominantly Western.

In conclusion, we aim to build real-use-case infrastructure in economically disadvantaged regions with genuine blockchain demand—akin to “surrounding the city from the countryside”—to drive mass adoption organically. My trip to Africa left a deep impression. Right now, Scroll’s usage cost is still somewhat high for average users. I hope we can reduce it tenfold or more, and find ways to bring more users onto blockchain.

Let me add a possibly controversial example: Tron. Many have negative perceptions, yet many economically disadvantaged populations use it. HTX’s past exchange strategy and marketing helped Tron achieve real network effects. I believe if an Ethereum-native chain could onboard these users into the Ethereum ecosystem, it would be a monumental achievement—and a positive contribution to the industry. I find that deeply meaningful.

Nowadays, many Ethereum L2s obsess over TVL metrics: you have $600M, we have $700M, they hit $1B. But to me, more impactful news would be: “Tether just minted another $1B of USDT on this L2” or issued X amount of stablecoins. When a chain grows organically to that scale—without relying on airdrop farming to capture user demand—that’s true success, at least in my eyes: when real user needs grow so large that people start using your chain daily.

Finally, a quick aside: Scroll’s ecosystem will host many upcoming events. Please follow our progress and participate in our DeFi ecosystem.
