FMG Report: Three Problems of AI and DePIN's Solutions

The key issue is not the amount of computing power, but how to empower AI products.

Author: FMGResearch

TL;DR

In the AI era, product competition relies heavily on resource availability (computing power, data, etc.), especially stable and reliable resources.

Model training and iteration also require a large user base (IPs) to feed data, enabling qualitative improvements in model efficiency.

Integration with Web3 enables small-to-medium AI startups to leapfrog traditional AI giants.

For DePIN ecosystems, resource availability such as computing power and bandwidth sets the floor (raw computing integration offers no moat); while AI model application, deep optimization (e.g., BitTensor), specialization (Render, Hivemapper), and effective data utilization determine the ceiling.

Under the AI+DePIN paradigm, model inference & fine-tuning, and mobile AI model markets will gain increasing attention.

AI Market Analysis & Three Key Questions

Statistics show that from September 2022—just before ChatGPT’s launch—to August 2023, the world's top 50 AI products accumulated over 24 billion visits, growing at an average of 236.3 million visits per month.

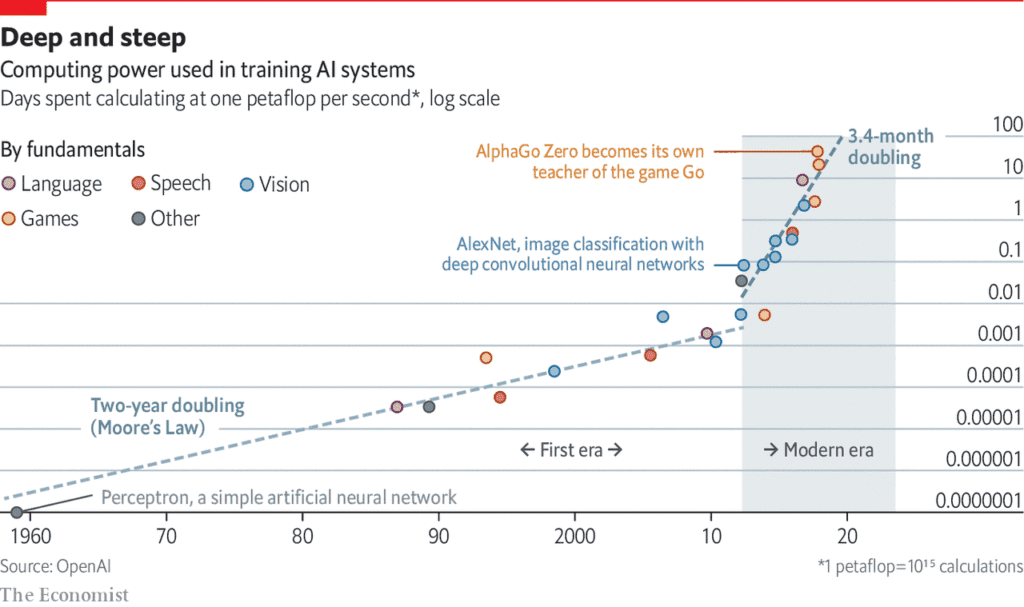

The boom in AI products is accompanied by escalating dependence on computing power.

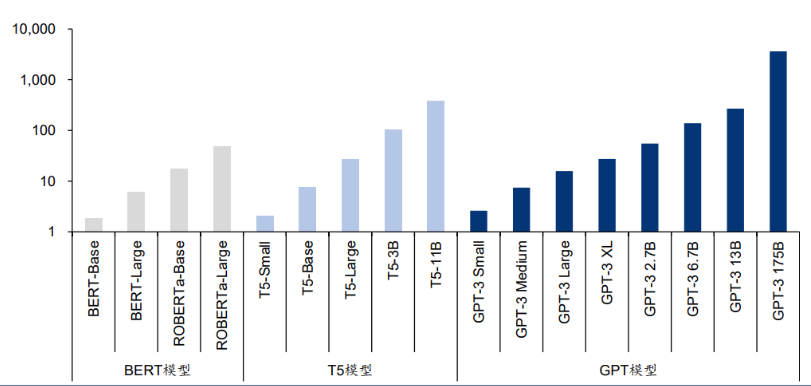

Source: Language Models are Few-Shot Learners

A paper from the University of Massachusetts Amherst noted that "training a single AI model emits carbon equivalent to the lifetime emissions of five automobiles." However, this analysis only accounts for one-time training. As models improve through repeated training cycles, energy consumption increases dramatically.

The latest language models contain billions or even trillions of parameters. GPT-3, a popular model, has 175 billion parameters. Training it is estimated to require 1,024 A100 GPUs running for 34 days, at a cost of about $4.6 million.
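As a rough sanity check, the figures quoted above imply a total GPU-hour count and an average cost per GPU-hour. The sketch below only rearranges those numbers; the function name is hypothetical, and the result is an implied average, not a quoted market price.

```python
def implied_gpu_hour_cost(num_gpus: int, days: int, total_cost_usd: float) -> tuple[int, float]:
    """Return (total GPU-hours, implied cost in USD per GPU-hour)."""
    gpu_hours = num_gpus * days * 24  # every GPU assumed busy around the clock
    return gpu_hours, total_cost_usd / gpu_hours


# Figures quoted in the article for GPT-3: 1,024 A100s, 34 days, $4.6 million.
gpu_hours, cost_per_hour = implied_gpu_hour_cost(1024, 34, 4_600_000)
print(f"{gpu_hours:,} GPU-hours, about ${cost_per_hour:.2f} per GPU-hour")
```

On these inputs the run works out to roughly 836,000 GPU-hours at about $5.50 each, which gives a baseline for judging hourly GPU rental prices.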

In the AI era, product competition has become a war over computing power and other resources.

Source: AI is harming our planet: addressing AI’s staggering energy cost

This leads to three key questions. First, does an AI product have sufficient resource support (computing power, bandwidth, and so on), and in particular stable, decentralized access to it? Reliability requires that computing power be adequately decentralized. In traditional markets, chip shortages combined with geopolitical and ideological barriers give chipmakers an inherent advantage and let them raise prices sharply. For example, the price of an NVIDIA H100 rose from $36,000 in April 2023 to $50,000, further increasing costs for AI teams.

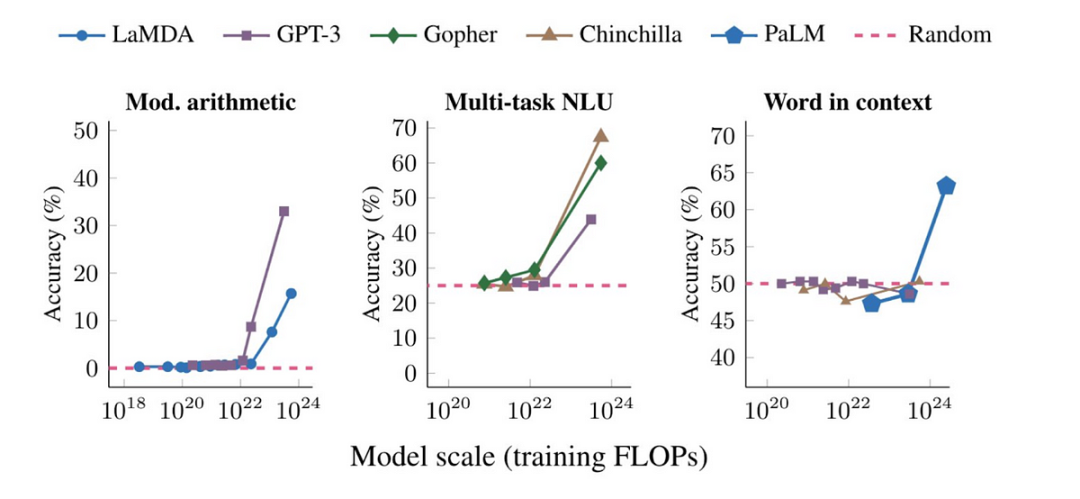

Second, meeting hardware requirements solves infrastructure needs, but model training and iteration still demand a massive user base (IPs) to provide training data. Once model scale surpasses a threshold, performance across tasks shows breakthrough growth.

Third, small-to-medium AI startups struggle to leapfrog incumbents. Centralized control of computing power in traditional markets leads to monopolistic AI model development, with giants like OpenAI and Google DeepMind deepening their moats. Smaller AI teams must pursue differentiated strategies.

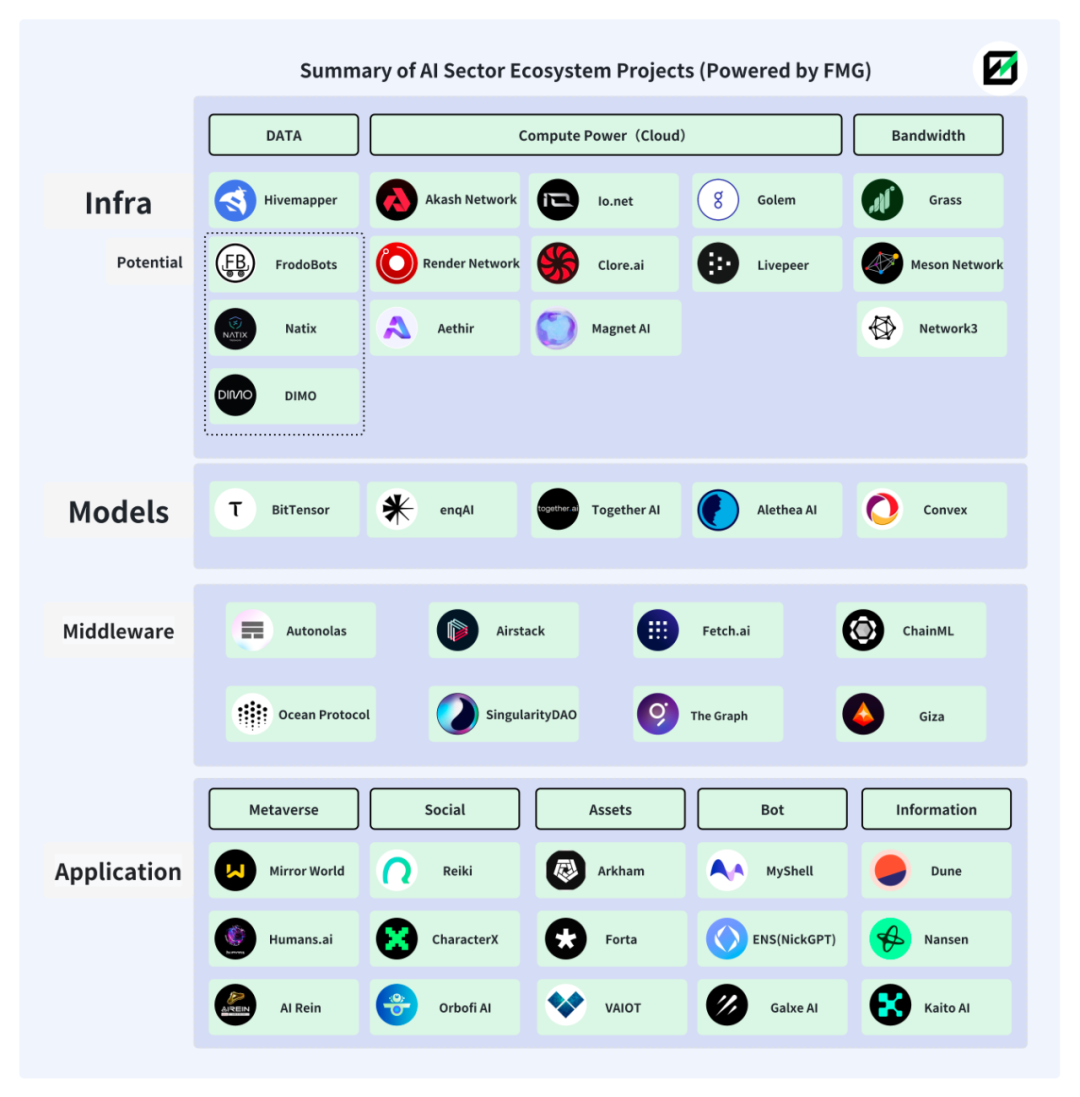

All three issues can be addressed through Web3. In fact, the convergence of AI and Web3 is not new and already supports a vibrant ecosystem.

Below is a partial map of the AI+Web3 landscape curated by Future Money Group.

AI+DePIN

1. DePIN’s Solution

DePIN stands for Decentralized Physical Infrastructure Networks: networks in which people and devices together form a new set of production relationships. By combining tokenomics with hardware (e.g., computers, vehicle cameras), DePIN connects users and devices and keeps the network economically sustainable.

Compared to broader Web3, DePIN naturally integrates deeper with hardware and traditional enterprises, giving it inherent advantages in attracting off-chain AI teams and capital.

DePIN’s pursuit of distributed computing and contributor incentives directly addresses AI’s dual needs for computing power and IP diversity.

- DePIN leverages tokenomics to onboard global computing power (data centers & idle personal resources), reducing centralization risks and lowering costs for AI teams.

- The vast and diverse IP pool within DePIN ensures varied, objective data sources, enhancing AI model performance through abundant data contributors.

- Overlap between DePIN and Web3 user profiles allows AI projects to develop uniquely Web3-native AI models, enabling differentiation unavailable in traditional AI markets.

In Web2, AI model data collection typically relies on public datasets or self-collected data, limiting scope due to cultural and geographical biases, resulting in subjectively "distorted" outputs. Traditional methods are further constrained by inefficiency and high costs, making it difficult to scale models (in parameters, training duration, and data quality). For AI models, larger scale generally leads to qualitative leaps in performance.

Source: Large Language Models’ emergent abilities: how they solve problems they were not trained to address?

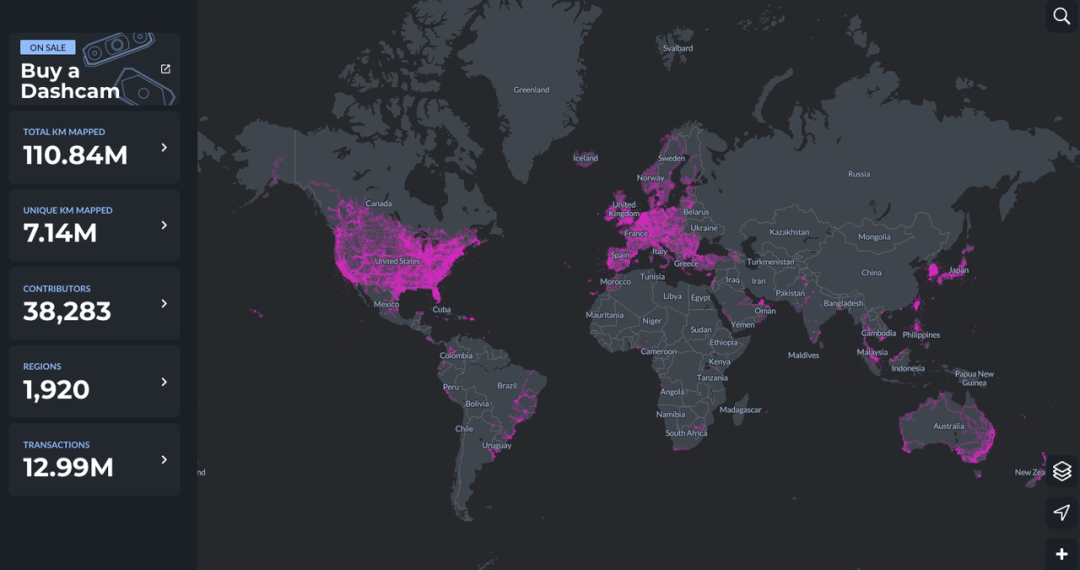

DePIN holds natural advantages here. Take Hivemapper: nearly 40,000 contributors across 1,920 global regions supply data for its MAP AI (mapping AI model).

The fusion of AI and DePIN marks a new level of AI+Web3 integration. Current Web3 AI projects largely emerge at the application layer and remain dependent on Web2 infrastructure—embedding existing AI models from centralized platforms into Web3 apps—rarely venturing into original model creation.

As a result, Web3 remains downstream in the value chain, unable to capture outsized returns. The same applies to distributed computing platforms: merely combining AI with computing power fails to unlock full potential. In such setups, providers earn minimal excess profits, and the ecosystem architecture is too simple to drive flywheel effects via tokenomics.

However, the AI+DePIN concept is breaking this pattern, shifting Web3’s focus toward broader AI model innovation.

2. AI+DePIN Project Overview

DePIN natively possesses what AI urgently needs: resources (computing power, bandwidth, algorithms, data), users (data providers for model training), and built-in incentive mechanisms (tokenomics).

We propose a bold definition: any project that provides complete objective conditions (computing power / bandwidth / data / IPs), enables AI model scenarios (training / inference / fine-tuning), and incorporates tokenomics can be classified as AI+DePIN.

Future Money Group outlines classic AI+DePIN paradigms below.

We categorize projects by resource type—computing, bandwidth, data, and others—and analyze each segment.

2.1 Computing Power

Computing power forms the core of the AI+DePIN sector and hosts the largest number of projects. The primary computing components are GPUs (Graphics Processing Units), CPUs (Central Processing Units), and TPUs (Tensor Processing Units, chips specialized for machine learning). Because they are complex to manufacture, TPUs are developed mainly by Google and offered only as cloud rental services, which limits their market size. GPUs are more specialized than CPUs and excel at running complex mathematical computations in parallel. Originally designed for gaming and animation rendering, they now serve far broader purposes and dominate today’s computing market.

Consequently, many AI+DePIN computing projects specialize in graphics and video rendering or related gaming applications, driven by GPU capabilities.

Globally, computing power in AI+DePIN comes from three sources: traditional cloud providers, idle personal computing, and proprietary hardware. Cloud providers contribute the most, followed by personal resources. Thus, these platforms often act as computing intermediaries, serving AI model developers.

Currently, computing capacity is underutilized. For example, Akash Network uses only about 35% of its total capacity, with the rest idle. Io.net faces similar issues.

This underuse stems partly from limited current demand for AI training and explains why AI+DePIN can offer cheaper computing. As the AI market expands, utilization rates should improve.

Akash Network: Decentralized peer-to-peer cloud marketplace

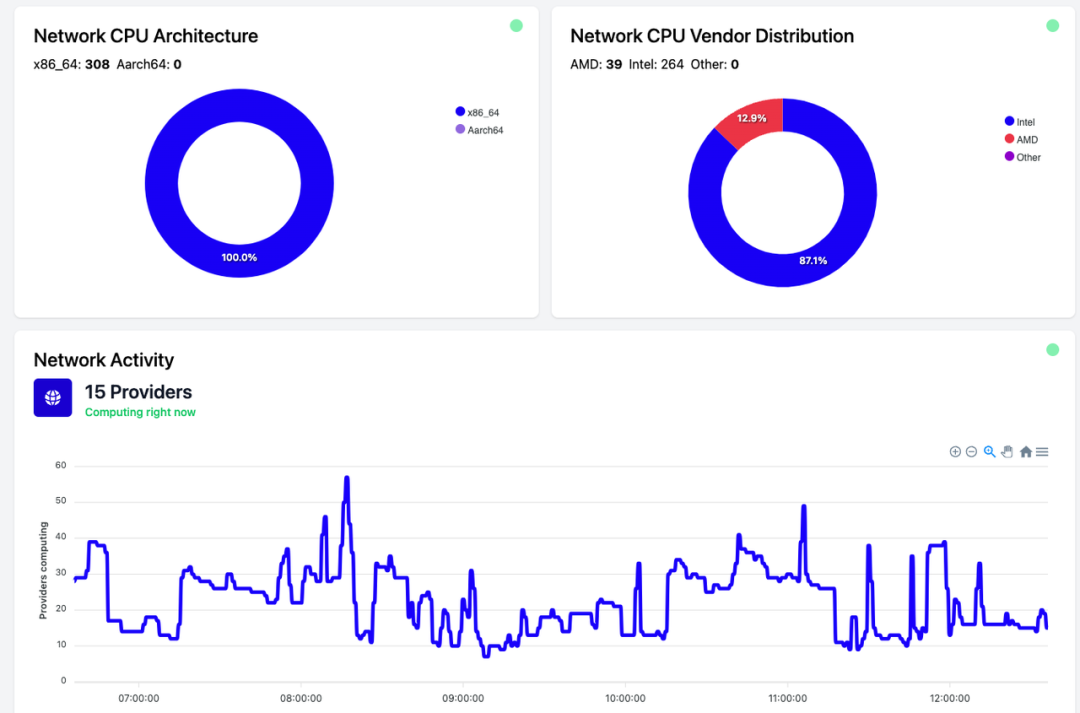

Akash Network is a decentralized peer-to-peer cloud marketplace, often likened to “Airbnb for cloud services.” It enables users and organizations of all sizes to deploy services quickly, securely, and affordably.

Like Render, Akash offers GPU deployment, leasing, and AI model training services.

In August 2023, Akash launched Supercloud, allowing developers to set their desired price for deploying AI models, while providers with spare capacity host them—a feature highly reminiscent of Airbnb, enabling providers to rent unused capacity.

Through open bidding, Akash incentivizes contributors to share idle computing resources, achieving more efficient utilization and offering competitive pricing to consumers.
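The open-bidding idea can be illustrated with a minimal reverse-auction sketch: a developer states a maximum price, providers bid, and the cheapest qualifying bid wins. This is an illustration only and not Akash's actual matching logic; `Bid`, `select_bid`, and the sample prices are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Bid:
    provider: str
    price_per_hour: float  # USD per GPU-hour (hypothetical unit)


def select_bid(bids: list[Bid], max_price: float) -> Optional[Bid]:
    """Pick the cheapest bid at or below the developer's maximum price."""
    eligible = [b for b in bids if b.price_per_hour <= max_price]
    if not eligible:
        return None  # nobody is willing to host at this price
    return min(eligible, key=lambda b: b.price_per_hour)


bids = [Bid("provider-a", 1.60), Bid("provider-b", 1.20), Bid("provider-c", 1.45)]
winner = select_bid(bids, max_price=1.50)
print(winner)
```

Because providers compete downward on price, idle capacity tends to clear at whatever the cheapest willing host will accept, which is the source of the pricing advantage described above.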

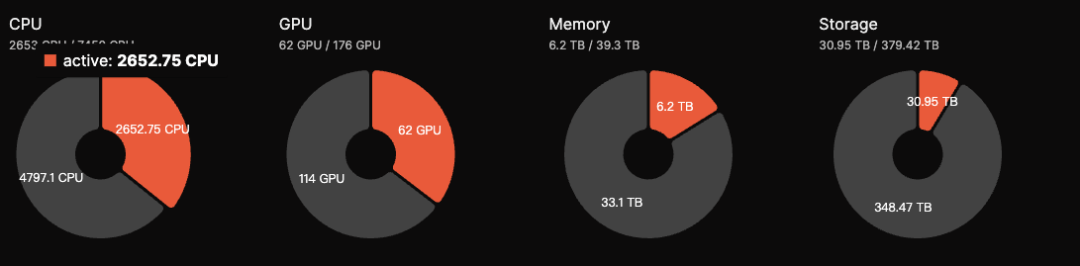

Akash currently has 176 GPUs in its ecosystem, with 62 active (35% utilization), down from 50% in September 2023. Estimated daily revenue is around $5,000. AKT tokens support staking, with users earning approximately 13.15% annual yield by helping secure the network.
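The utilization figure is simply active GPUs divided by total GPUs. A minimal sketch using the counts quoted above (the function name is hypothetical):

```python
def utilization(active: int, total: int) -> float:
    """Share of listed GPUs that are currently leased."""
    if total <= 0:
        raise ValueError("total must be positive")
    return active / total


akash = utilization(active=62, total=176)
idle_gpus = 176 - 62
print(f"utilization: {akash:.1%}, idle GPUs: {idle_gpus}")
```

The same calculation applies to any provider's dashboard numbers, which makes utilization an easy metric to track over time.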

Akash demonstrates strong metrics within the AI+DePIN space. With a $700M FDV, it has significant upside compared to peers like Render and BitTensor. Akash has also integrated with BitTensor’s Subnet to expand its ecosystem. Overall, Akash ranks among the leading AI+DePIN projects with solid fundamentals.

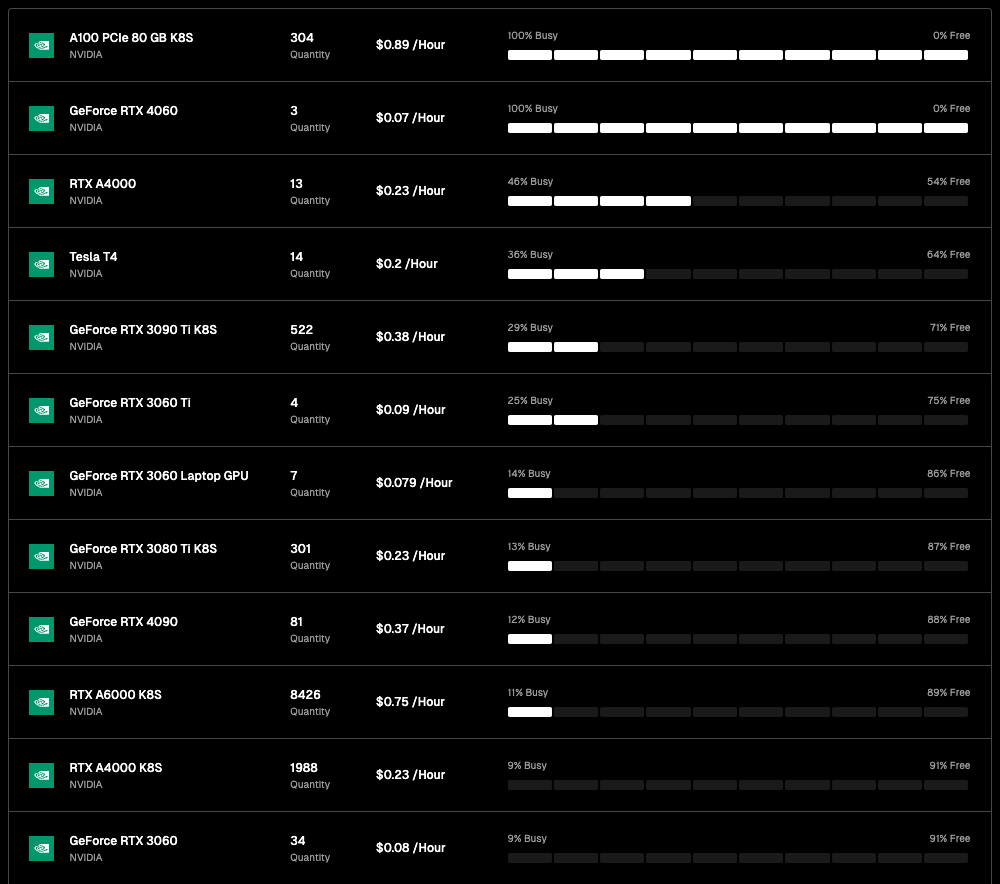

io.net: AI+DePIN with the largest GPU count

io.net is a decentralized computing network supporting the development, execution, and scaling of ML (machine learning) applications on Solana. Leveraging the world’s largest GPU cluster, it allows ML engineers to access distributed cloud computing at a fraction of centralized service costs.

Official data claims io.net has over 1 million standby GPUs. Additionally, its partnership with Render expands available GPU deployment options.

While io.net boasts a large GPU inventory, most units come from partnerships with cloud providers or from individual node contributions, and idle rates are high. For instance, of 8,426 RTX A6000 GPUs, only 927 (11%) are active, and many other GPU models see almost no usage. However, io.net’s key advantage is low pricing: Akash charges about $1.50 per GPU-hour, whereas io.net offers rates as low as $0.10 to $1.00.

Moving forward, io.net plans to let GPU providers in its ecosystem stake native assets to increase their chances of being selected for jobs: the more a provider stakes, the higher its selection probability. AI engineers who stake can also access high-performance GPUs.
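One simple way to realize a rule where more stake means a higher chance of selection is stake-weighted random sampling. The sketch below is an assumption about how such a rule could work, not io.net's published mechanism; provider names and stake amounts are hypothetical.

```python
import random


def pick_provider(stakes: dict[str, float], rng: random.Random) -> str:
    """Choose one provider with probability proportional to its stake."""
    providers = list(stakes)
    weights = [stakes[p] for p in providers]
    return rng.choices(providers, weights=weights, k=1)[0]


stakes = {"gpu-node-1": 100.0, "gpu-node-2": 300.0, "gpu-node-3": 600.0}  # hypothetical
rng = random.Random(0)  # fixed seed so the run is repeatable
counts = {p: 0 for p in stakes}
for _ in range(10_000):
    counts[pick_provider(stakes, rng)] += 1
print(counts)
```

Over many jobs, each provider's share of work approaches its share of total stake, so smaller stakers still receive some jobs rather than being shut out entirely.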

In terms of GPU scale, io.net leads among the ten projects listed. Even excluding idle units, its active GPU count ranks first. Regarding tokenomics, io.net’s native token IO is set to launch in Q1 2024, with a max supply of 22.3 million. A 5% fee on network usage will burn IO tokens or reward new users. The deflationary-burn model suggests strong upside potential, contributing to high market interest despite no token release yet.

Golem: CPU-based computing marketplace

Golem is a decentralized computing marketplace where anyone can share and aggregate computational resources via a shared network. It offers GPU and CPU rental services.

The Golem market consists of three parties: suppliers, requesters, and software developers. Requesters submit computing tasks, which Golem distributes to suitable suppliers (based on RAM, storage, and CPU cores). Upon completion, payments are settled in tokens.
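The matching step described above can be sketched as a filter over supplier offers. This is a simplified illustration, not Golem's actual protocol; `Resources`, `match_suppliers`, and the node names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    ram_gb: int
    storage_gb: int
    cpu_cores: int


def can_run(offer: Resources, need: Resources) -> bool:
    """True if the offer meets or exceeds every requirement of the task."""
    return (
        offer.ram_gb >= need.ram_gb
        and offer.storage_gb >= need.storage_gb
        and offer.cpu_cores >= need.cpu_cores
    )


def match_suppliers(suppliers: dict[str, Resources], need: Resources) -> list[str]:
    """Return the names of all suppliers able to run the task, sorted by name."""
    return sorted(name for name, offer in suppliers.items() if can_run(offer, need))


suppliers = {
    "node-1": Resources(ram_gb=8, storage_gb=100, cpu_cores=4),
    "node-2": Resources(ram_gb=32, storage_gb=500, cpu_cores=16),
    "node-3": Resources(ram_gb=16, storage_gb=50, cpu_cores=8),
}
task = Resources(ram_gb=16, storage_gb=100, cpu_cores=8)
print(match_suppliers(suppliers, task))
```

A real marketplace would also weigh price and reputation; this sketch covers only the resource check named in the text.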

Golem primarily uses CPUs for computing aggregation. CPUs are far cheaper than GPUs (an Intel i9-14900K costs ~$700 vs. $12,000–25,000 for an A100), but they lack the massive parallelism of GPUs and are less energy-efficient. Thus, CPU-based computing has weaker narrative appeal than GPU-focused projects.

Magnet AI: AI Model Tokenization

Magnet AI aggregates GPU providers to deliver model training services for various AI developers. Unlike other AI+DePIN platforms, Magnet AI allows AI teams to issue ERC-20 tokens for their models. Users engaging with different models receive token airdrops and rewards.

In Q2 2024, Magnet AI will launch on Polygon zkEVM and Arbitrum.

Like io.net, Magnet AI aggregates GPU resources to support AI model training.

The difference lies in focus: io.net emphasizes GPU resource aggregation, encouraging clusters, enterprises, and individuals to contribute GPUs for rewards—driven by compute supply.

Magnet AI focuses more on AI models. With model-specific tokens, it may attract and retain users through token distribution and airdrops, using tokenization to draw in AI developers.

Simply put: Magnet builds a marketplace using GPUs where any AI developer or model deployer can issue ERC-20 tokens, and users can earn or hold various model tokens.

Render: Specialist in Graphics Rendering AI Models

Render Network is a blockchain-based, decentralized GPU rendering solution connecting creators with idle GPU resources. It aims to eliminate hardware constraints, reduce time and cost, and enhance digital rights management, accelerating metaverse development.

According to the Render whitepaper, artists, engineers, and developers can build AI applications such as AI-assisted 3D content generation, AI-accelerated full rendering, and train AI models using Render’s 3D scene graph data.

Render provides a Render Network SDK, enabling developers to use its distributed GPUs for AI tasks ranging from NeRF (Neural Radiance Fields) and LightField rendering to generative AI.

Per Global Market Insights, the global 3D rendering market is projected to reach $6 billion. With a $2.2B FDV, Render still has room to grow.

Exact GPU data for Render isn’t publicly available. However, Render’s parent company OTOY has shown strong ties to Apple. OTOY’s flagship renderer, OctaneRender, supports industry-leading 3D tools across VFX, gaming, motion design, architectural visualization, and simulation—including native support for Unity3D and Unreal Engine.

Google and Microsoft have also joined the RNDR network. Render processed nearly 250,000 rendering jobs in 2021, and artists in its ecosystem generated around $5 billion in NFT sales.

Thus, Render’s valuation should align with the broader rendering market potential (~$30B). Combined with its BME (Burn & Mint Equilibrium) economic model, Render retains significant upside potential in both token price and FDV.

Clore.ai: Video Rendering

Clore.ai is a PoW-based platform offering GPU rental services. Users can rent out their GPUs for AI training, video rendering, and cryptocurrency mining, while others access these resources at lower costs.

Services include: AI training, film rendering, VPN, crypto mining. When rendering demand exists, the network assigns tasks. Otherwise, it mines the cryptocurrency with the highest real-time profitability.
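The fallback behaviour described above (serve rental demand first, otherwise mine the most profitable coin) can be sketched as a small decision function. The function name, job identifiers, coin names, and profit figures are all hypothetical, and this is not Clore.ai's actual scheduler.

```python
def next_workload(pending_jobs: list[str], mining_profit: dict[str, float]) -> str:
    """Serve rental jobs first; otherwise mine the currently most profitable coin."""
    if pending_jobs:
        return f"job:{pending_jobs[0]}"
    if not mining_profit:
        return "idle"  # nothing to rent out and nothing to mine
    best_coin = max(mining_profit, key=mining_profit.get)
    return f"mine:{best_coin}"


profits = {"coin-a": 0.8, "coin-b": 1.1}  # hypothetical USD per GPU per day
print(next_workload(["render-job-42"], profits))
print(next_workload([], profits))
```

The design keeps GPUs earning something at all times, which helps explain why providers are willing to list hardware even when rental demand is thin.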

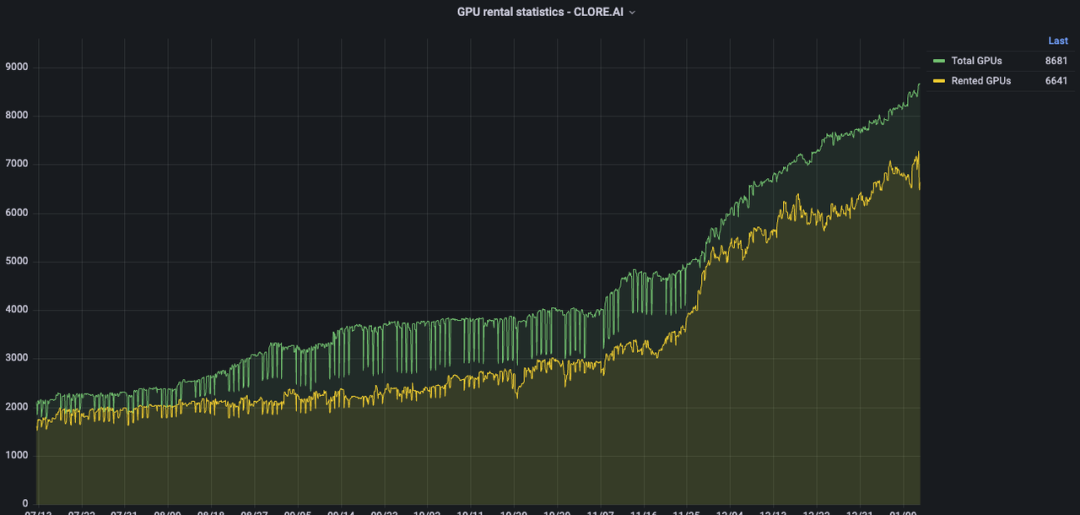

Over the past six months, Clore.ai’s GPU count grew from 2,000 to ~9,000, surpassing Akash in total integration. Yet, its secondary market FDV is only about 20% of Akash’s.

Token-wise, CLORE uses PoW mining with no pre-mine or ICO: 50% of each block goes to miners, 40% to renters, 10% to the team.

Total supply is 1.3 billion, mined since June 2022, with near-full circulation expected by 2042. Current circulating supply is ~220 million (rising to ~250 million by end-2023, or 20% of total). Thus, actual FDV is ~$31M. Theoretically, Clore.ai is severely undervalued. However, with 50% allocated to miners and high sell pressure, price appreciation faces strong resistance.
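The supply figures can be checked with a few lines of arithmetic. The sketch below uses only the numbers quoted above (1.3 billion total supply and the 50/40/10 block split); the block reward of 100 tokens and the function names are hypothetical, chosen for illustration.

```python
TOTAL_SUPPLY = 1_300_000_000
BLOCK_SPLIT = {"miners": 0.50, "renters": 0.40, "team": 0.10}  # shares quoted in the article


def split_block_reward(reward: float) -> dict[str, float]:
    """Divide one block reward among the three recipient groups."""
    return {group: reward * share for group, share in BLOCK_SPLIT.items()}


def circulating_share(circulating: float) -> float:
    """Fraction of total supply that is already in circulation."""
    return circulating / TOTAL_SUPPLY


print(split_block_reward(100.0))  # hypothetical 100-token block reward
print(f"{circulating_share(220_000_000):.1%} circulating now")
print(f"{circulating_share(250_000_000):.1%} circulating by end-2023")
```

On these numbers roughly 17% of supply circulates today and about 19% by end-2023, consistent with the article's rounded 20%.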

Livepeer: Video Rendering & Inference

Livepeer is an Ethereum-based decentralized video protocol that rewards participants for securely processing video content at reasonable costs.

Officially, Livepeer processes millions of minutes of video transcoding weekly across thousands of GPUs.

Livepeer may adopt a “mainnet + subnet” structure, allowing node operators to create subnets and fulfill tasks settled on the mainnet. For example, an AI video subnet could specialize in training AI models for video rendering.

Going forward, Livepeer plans to expand beyond model training into inference & fine-tuning.

Aethir: Focused on Cloud Gaming and AI

Aethir is a cloud gaming platform and decentralized cloud infrastructure (DCI) built for gaming and AI companies. It offloads heavy GPU workloads from players, ensuring ultra-low latency gameplay across devices and locations.

Additionally, Aethir offers deployment services including GPU, CPU, and storage. On September 27, 2023, Aethir officially launched commercial cloud gaming and AI computing services, leveraging decentralized computing to support its platform’s games and AI models.

Cloud gaming shifts rendering workloads to the cloud, eliminating device hardware and OS limitations, significantly expanding the potential player base.

2.2 Bandwidth

Bandwidth is one of the resources DePIN provides to AI. The global bandwidth market exceeded $50B in 2021 and is projected to surpass $100B by 2027.

As AI models grow larger and more complex, training increasingly relies on parallel computing strategies such as data, pipeline, and tensor parallelism. In such setups, inter-device communication becomes critical. Thus, network bandwidth plays a vital role in large-scale AI training clusters.
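To see why bandwidth matters, consider data parallelism, where every device must synchronize gradients at each training step. A common cost model for a ring all-reduce is that each device sends and receives about 2(N-1)/N times the gradient size. The sketch below applies that model; the model size, device count, and link speeds are hypothetical, and latency and compute overlap are ignored.

```python
def ring_allreduce_seconds(payload_bytes: float, num_devices: int, link_bytes_per_s: float) -> float:
    """Estimate bandwidth-bound time for one ring all-reduce of the payload."""
    if num_devices < 2:
        return 0.0  # a single device has nothing to synchronize
    traffic = 2 * (num_devices - 1) / num_devices * payload_bytes
    return traffic / link_bytes_per_s


grad_bytes = 1e9 * 2  # 1 billion parameters at 2 bytes each (hypothetical)
for gbps in (1, 10, 100):
    link = gbps * 1e9 / 8  # convert gigabits per second to bytes per second
    seconds = ring_allreduce_seconds(grad_bytes, num_devices=8, link_bytes_per_s=link)
    print(f"{gbps:>3} Gbit/s link: {seconds:.2f} s per synchronization")
```

Under these assumptions a 1 Gbit/s link needs about 28 seconds per synchronization while a 100 Gbit/s link needs about 0.28 seconds, which is why distributed training over consumer connections is so sensitive to bandwidth.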

More importantly, stable and reliable bandwidth keeps nodes responding in sync and, at the technical level, avoids single points of control (for example, Falcon’s low-latency, high-bandwidth relay network balances latency and bandwidth needs), which ultimately underpins the network’s trustworthiness and censorship resistance.

Grass: Mobile-compatible bandwidth mining product

Grass is the flagship product of Wynd Network, which focuses on open web data and raised $1M in 2023. Grass allows users to earn passive income by selling unused internet bandwidth.

Users can sell internet bandwidth on Grass to support AI model training for development teams and earn token rewards.

Grass is launching a mobile version. Since mobile and PC have different IP addresses, this expansion will provide more unique IPs. Grass can then leverage these diverse IPs to enhance data efficiency for AI training.

Currently, Grass offers two IP contribution methods: browser extension for PC and mobile app download. (PC and mobile must be on different networks.)

As of November 29, 2023, Grass had 103,000 downloads and 1.45 million unique IPs.

Mobile devices and PCs serve different AI needs, so they suit different categories of AI training.

For example, mobile devices generate extensive data on image optimization, facial recognition, real-time translation, voice assistants, and device performance tuning—areas where PCs fall short.

Currently, Grass holds an early-mover advantage in mobile AI model training. Given the massive global mobile market potential, Grass’s outlook is promising.

However, Grass currently lacks detailed information on AI models, suggesting initial operations may focus primarily on token mining.

Meson Network: Layer 2 with Mobile Compatibility

Meson Network is a next-generation storage acceleration network built on blockchain Layer 2. It aggregates idle server bandwidth via mining and serves file and streaming acceleration markets—including websites, video, live streaming, and blockchain storage.

Think of Meson Network as a bandwidth pool connecting suppliers and consumers.

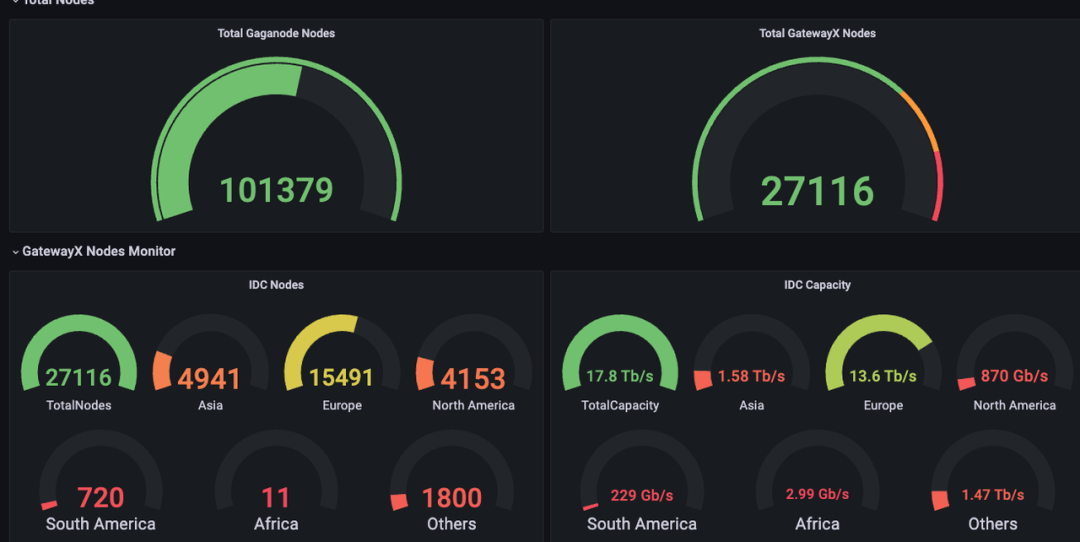

Within Meson’s product suite, two products—GatewayX and GaGaNode—collect bandwidth from global nodes, while IPCola monetizes the aggregated bandwidth.

GatewayX: Focuses on commercial idle bandwidth, targeting IDCs (Internet data centers).

Dashboard data shows over 20,000 global IDC nodes connected, delivering 12.5 TiB/s transmission capacity.

GaGaNode: Aggregates residential and personal device bandwidth, supporting edge computing.

IPCola: Meson’s monetization channel, handling IP and bandwidth allocation.

Meson reports over $1M in revenue over the past six months. Official stats list 27,116 IDC nodes and 17.7 TiB/s capacity.

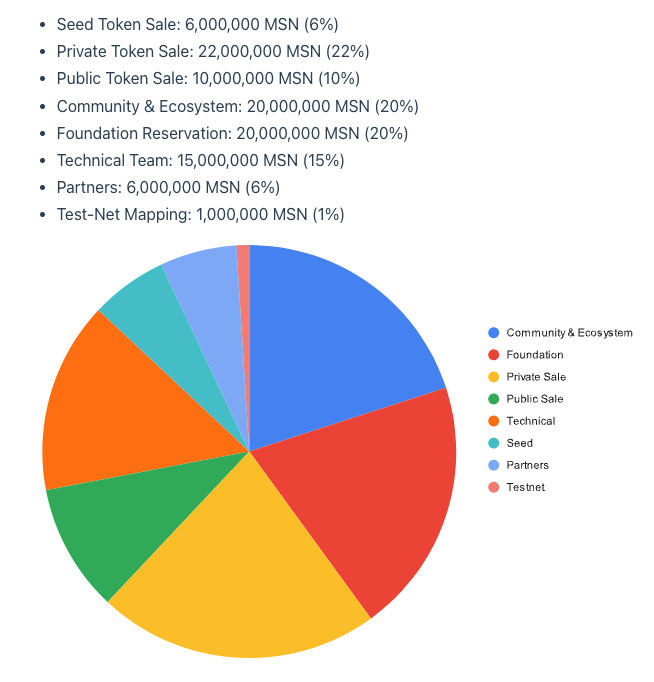

Meson plans to launch its token in March–April 2024 and has disclosed its tokenomics.

Token name: MSN, initial supply 100 million, 5% mining inflation in Year 1, decreasing by 0.5% annually.
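The disclosed inflation schedule can be projected year by year. The sketch below rests on two assumptions that the source does not confirm: that "decreasing by 0.5% annually" means the rate falls by 0.5 percentage points each year (5%, 4.5%, 4%, ...), and that each year's inflation applies to the supply at the start of that year. The function name is hypothetical.

```python
def msn_supply_schedule(
    years: int,
    initial: float = 100_000_000,
    first_rate: float = 0.05,
    step: float = 0.005,
) -> list[tuple[int, float, float]]:
    """Return (year, inflation rate, end-of-year supply) rows.

    Assumes the rate drops by `step` (percentage points) per year, floored at zero.
    """
    rows = []
    supply = initial
    for year in range(1, years + 1):
        rate = max(first_rate - step * (year - 1), 0.0)
        supply *= 1 + rate
        rows.append((year, rate, supply))
    return rows


for year, rate, supply in msn_supply_schedule(5):
    print(f"Year {year}: {rate:.1%} inflation, supply about {supply:,.0f}")
```

Under this reading, inflation reaches zero in Year 11 and supply stops growing; a different interpretation of the 0.5% decrease would give a different curve.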

Network3: Integrated with Sei Network

Network3 is an AI company building a dedicated AI Layer 2 integrated with Sei. Using AI algorithm optimization, compression, edge computing, and privacy-preserving computation, it helps global AI developers train and validate models efficiently and at scale.

Per official data, Network3 has over 58,000 active nodes providing 2PB of bandwidth. It has partnered with 10 blockchain ecosystems, including Alchemy Pay, ETHSign, and IoTeX.

2.3 Data

Unlike computing and bandwidth, the data supply market remains niche and highly specialized. Demand usually comes from the project itself or related AI developers—such as Hivemapper.

Training a map model using self-sourced data is logically straightforward. Thus, we can extend our view to similar DePIN projects like DIMO, Natix, and FrodoBots.

Hivemapper: Focused on empowering its own Map AI product

Hivemapper is a top DePIN project on Solana aiming to build a decentralized “Google Maps.” Users buy Hivemapper dashcams, share real-time footage, and earn HONEY tokens.

Future Money Group previously covered Hivemapper in detail in “FMG Report: Up 19x in 30 Days—Understanding Automotive DePIN Through Hivemapper,” so we won’t repeat it here. We classify Hivemapper as AI+DePIN because it launched MAP AI—an AI-powered mapping engine that generates high-quality maps from dashcam data.

MAP AI introduces a new role: AI Trainer. This role includes both dashcam data contributors and those actively training the MAP AI model.

Hivemapper keeps AI trainer requirements low, using gamified tasks like geolocation guessing to lower entry barriers and attract more IPs. The richer the IP pool, the more efficient data collection becomes. Contributors earn HONEY tokens as rewards.

AI use cases in Hivemapper remain narrow. It doesn’t support third-party model training—MAP AI exists solely to enhance its own mapping product. Thus, investment logic remains unchanged.

Potential

DIMO: In-car data collection

DIMO is a car IoT platform on Polygon enabling drivers to collect and share vehicle data—mileage, speed, location, tire pressure, battery/engine health, etc.

By analyzing this data, DIMO predicts maintenance needs and alerts users. Drivers gain deeper insights into their vehicles and earn DIMO tokens by contributing data. Data consumers can extract insights on battery, autonomous systems, and controls.

Natix: Privacy-enabled map data collection

Natix is a decentralized network using patented AI privacy tech. It connects global camera-equipped devices (smartphones, drones, cars) to form a privacy-compliant camera network, collecting data to populate decentralized dynamic maps (DDMap).

Data contributors are rewarded with tokens and NFTs.

FrodoBots: Decentralized network powered by robots

FrodoBots is a social DePIN game using mobile robots equipped with cameras to collect visual data.

Users buy robots to join the game, interact globally, and simultaneously collect road and map data via robot cameras.

These three projects share two key elements: data collection and IP provision. Though none currently conduct AI model training, they lay essential groundwork. Like Hivemapper, they rely on camera-based data to build comprehensive maps, limiting applicable AI models to mapping-centric domains. AI integration would help them build stronger moats.

A key concern: camera-based data collection raises privacy issues on both sides. Capturing pedestrians’ images may infringe on their likeness rights, and contributors care deeply about their own privacy. Natix, for instance, uses AI to protect privacy.

2.4 Algorithms

The computing, bandwidth, and data categories are distinguished by resource type, while the algorithms category centers on AI models themselves. Here, we use BitTensor as an example. BitTensor contributes neither data nor computing directly. Instead, it uses blockchain and incentives to schedule and rank algorithms, creating a free, knowledge-sharing model marketplace for AI.

Like OpenAI, BitTensor aims to match traditional giants in inference performance while preserving decentralization.

Algorithm-focused projects are somewhat ahead of their time, with few parallels. As AI models—especially Web3-born ones—proliferate, model competition will become standard.

Competition will elevate downstream AI activities—especially inference and fine-tuning. Model training is just the upstream phase. A model must first be trained to gain basic intelligence, then undergo careful inference and adjustment (optimization) before being deployed at the edge. These stages demand complex architectures and robust computing—indicating vast growth potential.

BitTensor: AI Model Oracle

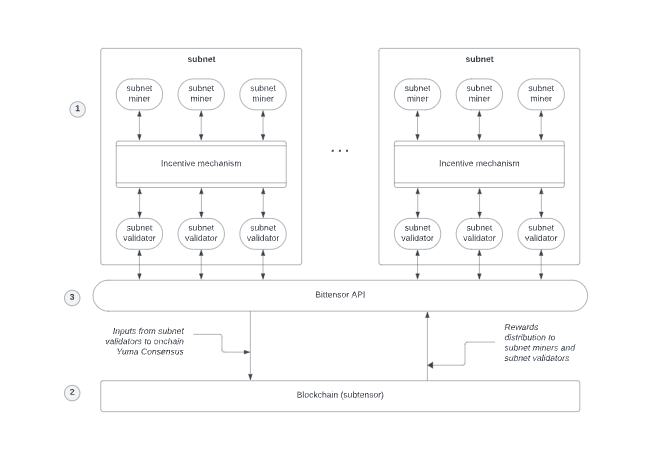

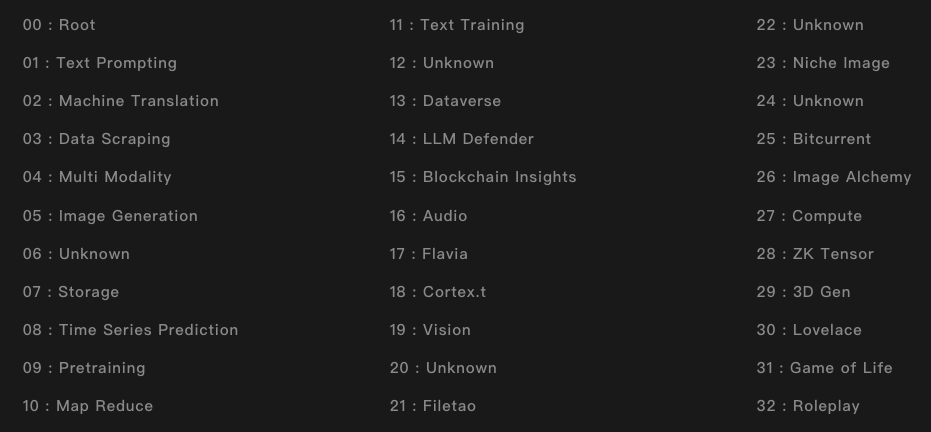

BitTensor is a decentralized machine learning ecosystem architecturally similar to Polkadot’s mainnet + subnet model.

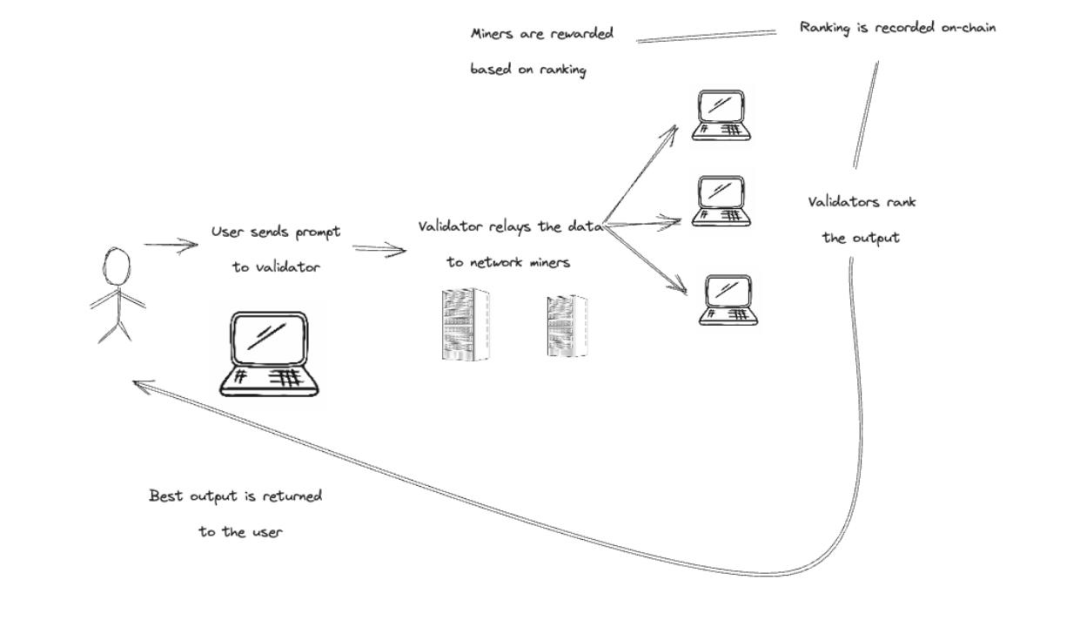

Workflow: Subnets send activity data to the Bittensor API (acting as an oracle), which forwards useful information to the mainnet for reward distribution.

BitTensor’s 32 subnets

Key roles in BitTensor:

- Miners: Providers of global AI algorithms/models who host models on the Bittensor network. Different models form different subnets.

- Validators: Evaluators within the Bittensor network. They assess model quality and effectiveness, ranking models based on task performance to help users find optimal solutions.

- Users: End consumers of Bittensor’s AI models, whether individuals or developers building AI-powered applications.
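The validator role can be illustrated with a toy ranking: several validators score each miner's model, the scores are averaged, and rewards are split in proportion to the average. This is a simplified sketch and not Bittensor's actual consensus or reward mechanism; the function name, participant names, and scores are hypothetical.

```python
def rank_models(scores: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Average each miner's scores across validators and rank miners, best first.

    `scores` maps validator -> {miner: score}. Returns (miner, reward_share) pairs,
    where reward_share is proportional to the miner's average score.
    """
    totals: dict[str, float] = {}
    counts: dict[str, int] = {}
    for miner_scores in scores.values():
        for miner, score in miner_scores.items():
            totals[miner] = totals.get(miner, 0.0) + score
            counts[miner] = counts.get(miner, 0) + 1
    averages = {m: totals[m] / counts[m] for m in totals}
    total = sum(averages.values())
    if total <= 0:
        raise ValueError("at least one miner needs a positive score")
    ranked = sorted(averages.items(), key=lambda kv: kv[1], reverse=True)
    return [(miner, avg / total) for miner, avg in ranked]


scores = {
    "validator-1": {"miner-a": 0.9, "miner-b": 0.6, "miner-c": 0.3},
    "validator-2": {"miner-a": 0.7, "miner-b": 0.8, "miner-c": 0.1},
}
ranking = rank_models(scores)
for miner, share in ranking:
    print(f"{miner}: {share:.1%} of rewards")
```

Users would then pick from the top of the ranking, while miners are pushed to improve their models in order to earn a larger share.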
